Go 异步抢占

https://mp.weixin.qq.com/s/NIYxzycAqHzeVSgU7ixhNg

代码会死循环吗?Go 异步抢占

Illustration created for “A Journey With Go”, made from the original Go Gopher, created by Renee French.

ℹ️ This article is based on Go 1.13.

ℹ️ Go implements an asynchronous preemption in Go 1.14, making some part of this article obsolete. However, those sections will be marked as it, making the article still useful to understand the need for the asynchronous preemption.

For more details about asynchronous preemption, I suggest you read “Go: Asynchronous Preemption.”

Go manages the goroutines thanks to an internal scheduler. This scheduler aims to switch goroutines between them and make sure they all can get runnable time. However, the scheduler could need to preempt the goroutines to establish a correct turnover.

Scheduler and preemption

Let’s use a simple example to show how the scheduler works:

For ease of reading, the examples will not use atomic operations.

func main() {

var total int

var wg sync.WaitGroup

for i := 0; i < 10; i++ {

wg.Add(1)

go func() {

for j := 0; j < 1000; j++ {

total += readNumber()

}

wg.Done()

}()

}

wg.Wait()

}

//go:noinline

func readNumber() int {

return rand.Intn(10)

}

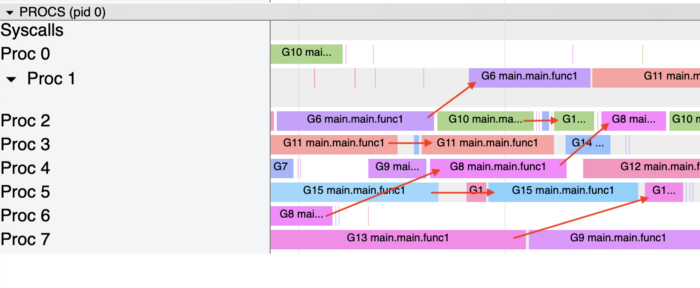

Here is the tracing:

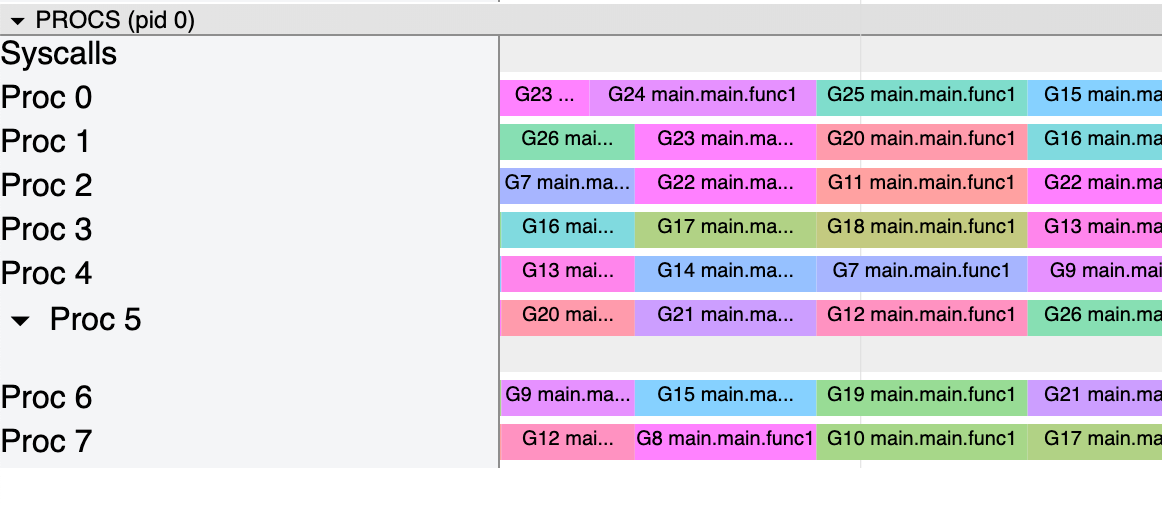

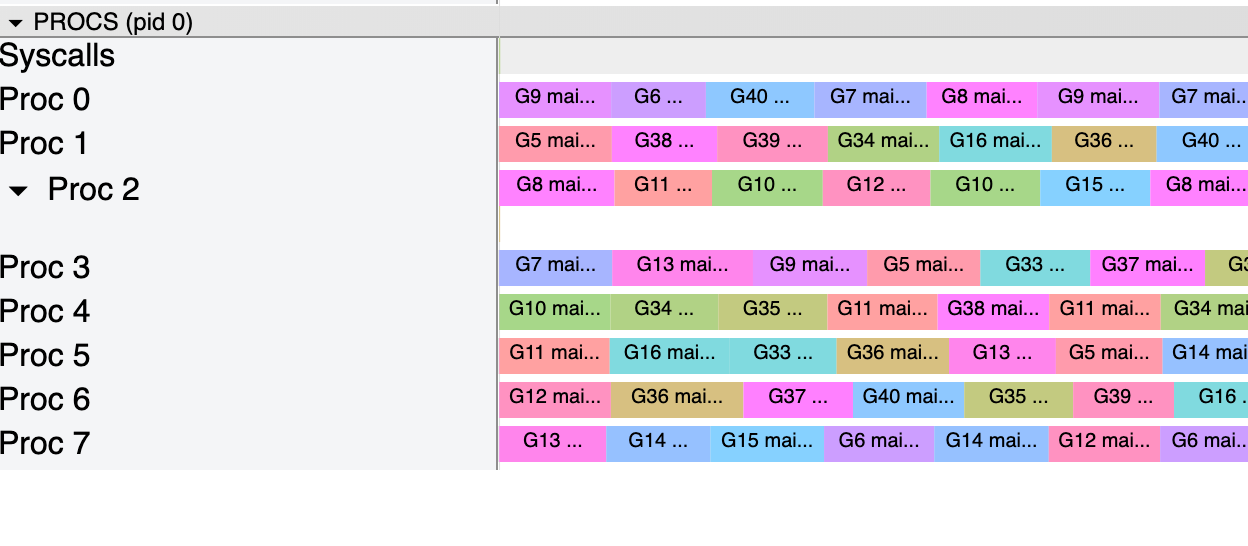

We clearly see that the scheduler rotates goroutines on the processors, giving running time to all of them. To alternate the running time, Go schedules the goroutines when they stopped due to a system call, blocking on channel, sleeping, waiting on a mutex, etc. In the previous example, the scheduler benefits from the mutex in the number generator to give running time to all of the goroutines. This can also be visualized in the tracing:

However, Go also needs a way to stop a running goroutine if it does not have any pause. This action, called preemption, allows the scheduler to switch goroutines. Any goroutine running for more than 10ms is marked as preemptible. Then, the preemption is done at the function prolog when the goroutine’s stack is increasing.

Let’s look at an example of this behavior with the previous lock, modified not to be used anymore, from the number generators:

func main() {

var total int

var wg sync.WaitGroup

for i := gen(0); i < 20; i++ {

wg.Add(1)

go func(g gen) {

for j := 0; j < 1e7; j++ {

total += g.readNumber()

}

wg.Done()

}(i)

}

wg.Wait()

}

var generators [20]*rand.Rand

func init() {

for i := int64(0); i < 20; i++ {

generators[i] = rand.New(rand.NewSource(i).(rand.Source64))

}

}

type gen int//go:noinline

func (g gen) readNumber() int {

return generators[int(g)].Intn(10)

}

Here is the tracing:

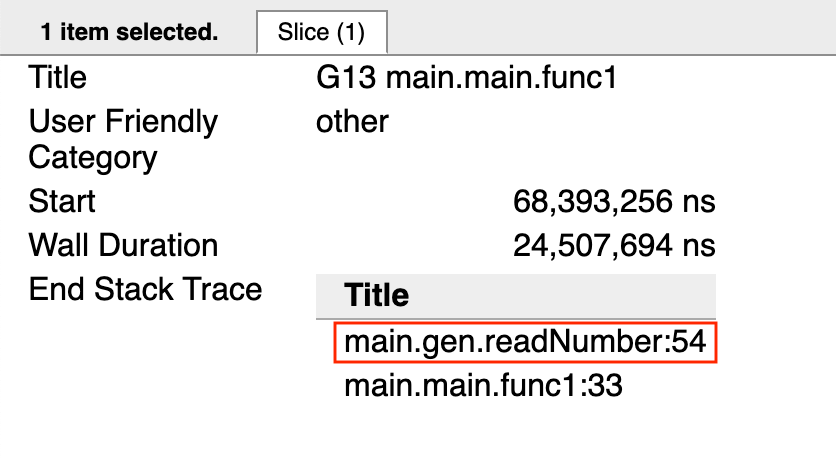

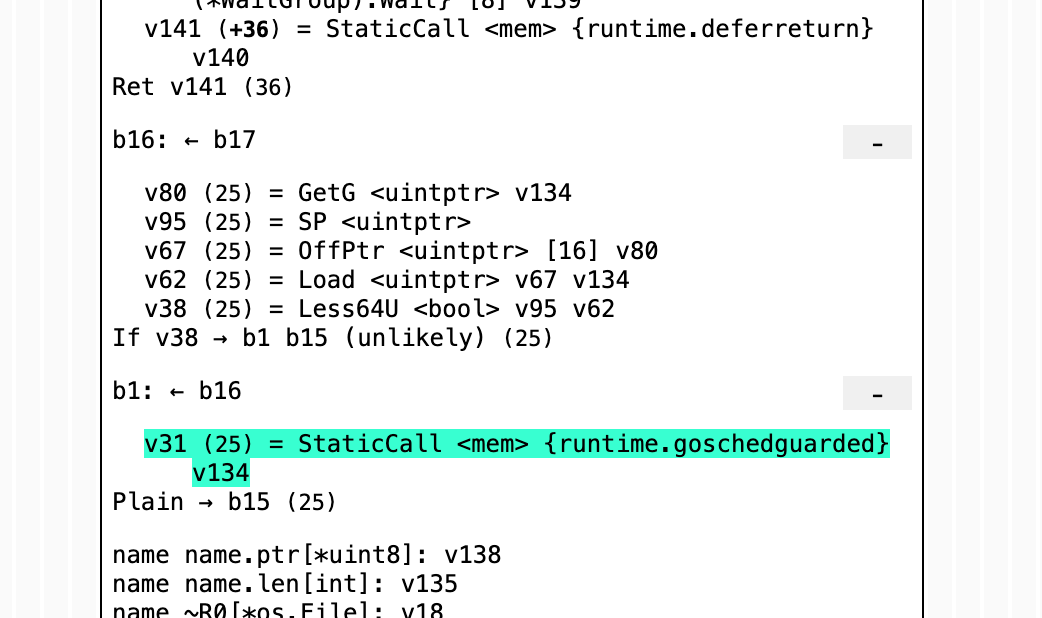

However, the goroutines are preempted at the function prolog:

This check is automatically added by the compiler; here is an example of the asm code generated by the previous example:

The runtime ensures the stack can grow by inserting instruction on each function prolog. This also allows the scheduler to run if necessary.

Most of the time, the goroutines will give the scheduler the ability to run all of them. However, a loop without function calls could block the scheduling.

Forcing preemption

Let’s start with a simple example that shows how a loop could block the scheduling:

func main() {

var total int

var wg sync.WaitGroup

for i := 0; i < 20; i++ {

wg.Add(1)

go func() {

for j := 0; j < 1e6; j++ {

total ++

}

wg.Done()

}()

}

wg.Wait()

}

Since there are no function calls and the goroutines will never block, the scheduler does not preempt them. We can see that in the tracing:

Goroutines are not preempted

However, Go provides several solutions to fix this issue:

- Forcing the scheduler to run thanks to the method

runtime.Gosched():

for j := 0; j < 1e8; j++ {

if j % 1e7 == 0 {

runtime.Gosched()

}

total ++

}

Here is the new tracing:

- Using the experimentation that allows loops to be preempted. It can be activated by rebuilding the Go toolchain with the instruction

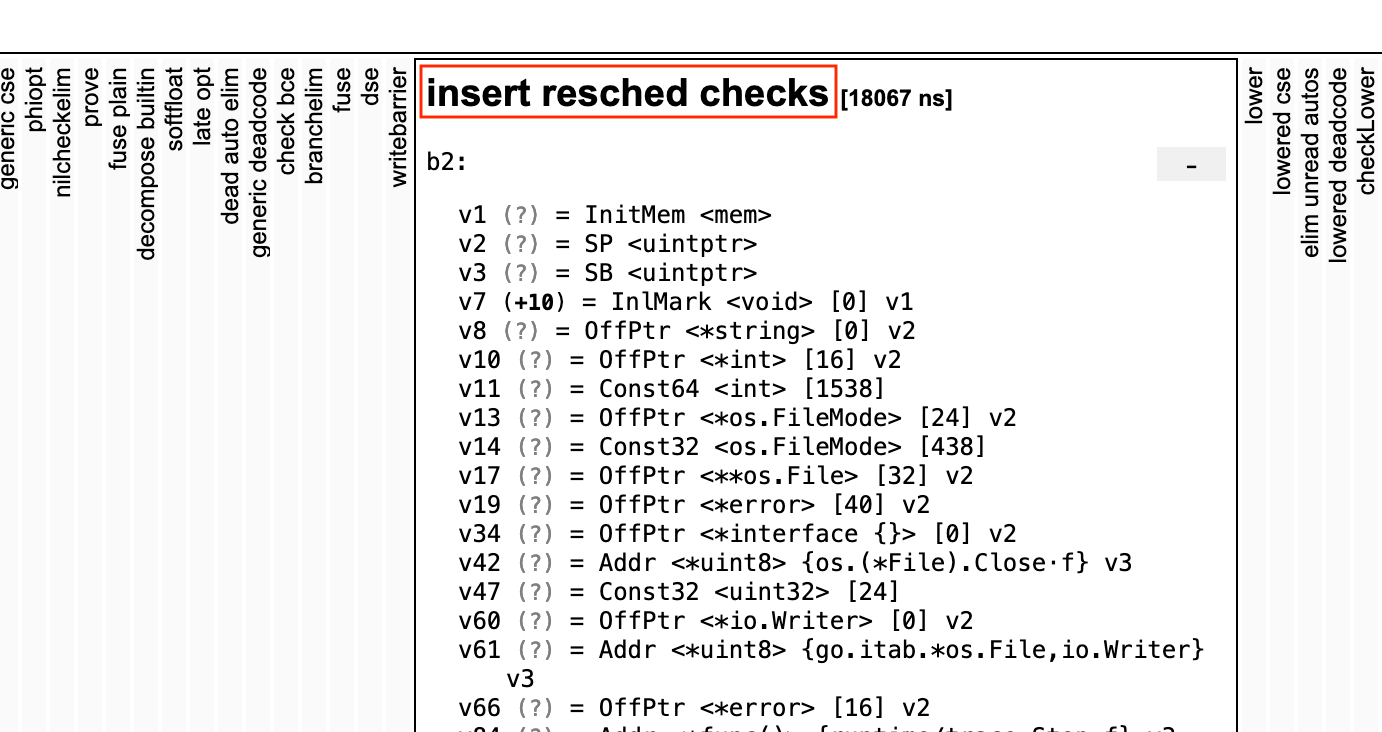

GOEXPERIMENT=preemptibleloopsor adding the flag-gcflags -d=ssa/insert_resched_checks/onwhile usinggo build. This time, the code does not need to be modified; here is the new tracing:

When preemption is activated in the loops, the compiler will add a pass when generating the SSA code:

This pass will add instructions to call the scheduler from time to time:

For information about the Go compiler, I suggest you read my article “Go: Overview of the Compiler.”

However, this approach could slow the code down a bit since it forces the scheduler to trigger probably more often than necessary. Here is a benchmark between the two versions:

name old time/op new time/op delta

Loop-8 2.18s ± 2% 2.05s ± 1% -6.23%

ℹ️ The issue raised in this section is now fixed with Go 1.14 and the asynchronous preemption. However, the two solutions explained here are still valid. runtime.Gosched() can be used to trigger the scheduler, and the preemptible loops option is still part of the standard library.

Incoming improvements

As of now, the scheduler uses cooperative preemption techniques that cover most of the cases. However, in some unusual cases, it can become a real pain point. A proposal for a new “non-cooperative preemption” has been submitted that aims to solve this problem as explained in the document:

I propose that the Go implementation switch to non-cooperative preemption, which would allow goroutines to be preempted at essentially any point without the need for explicit preemption checks. This approach will solve the problem of delayed preemption and do so with zero runtime overhead.

The document suggests several techniques with the advantages and their drawbacks and could land in the next versions of Go.

ℹ️ This article is based on Go 1.14.

The preemption is an important part of the scheduler that lets it distribute the running time among the goroutines. Indeed, without preemption, a long-running goroutine that hogs the CPU would block the other goroutines from being scheduled. The version 1.14 introduces a new technique of asynchronous preemption, giving more power and control to the scheduler.

For more details about the previous behavior and its drawback, I suggest you read my article “Go: Goroutine and Preemption.”

Workflow

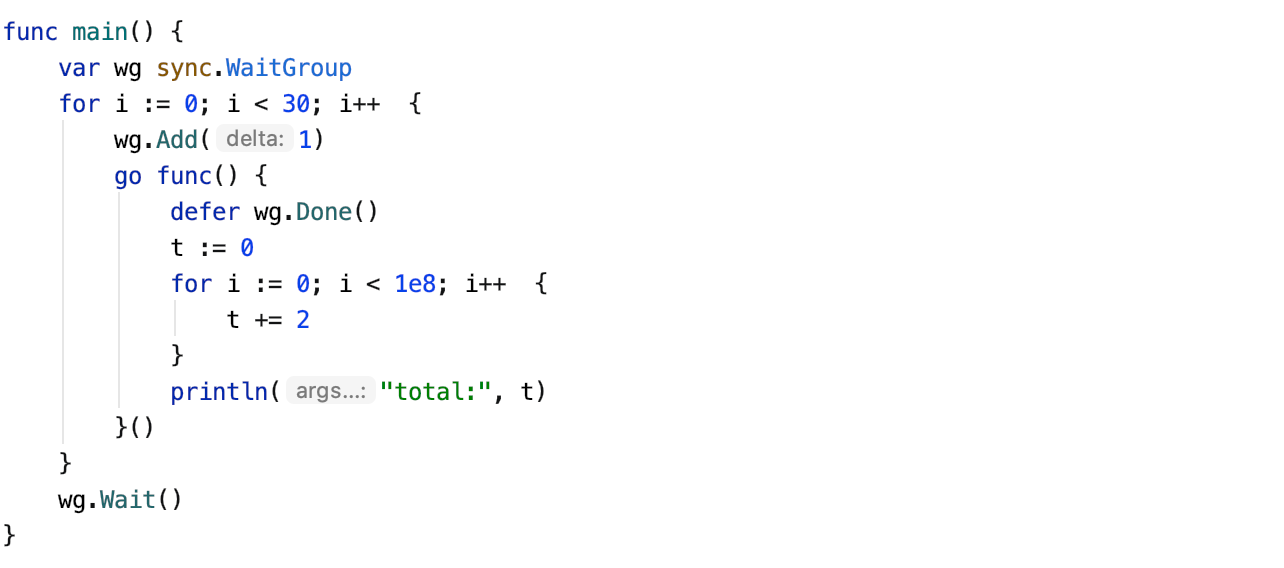

Let’s start with an example where preemption is needed. Here is a code where many goroutines loop for a while without any function call, meaning no opportunity for the scheduler to preempt them:

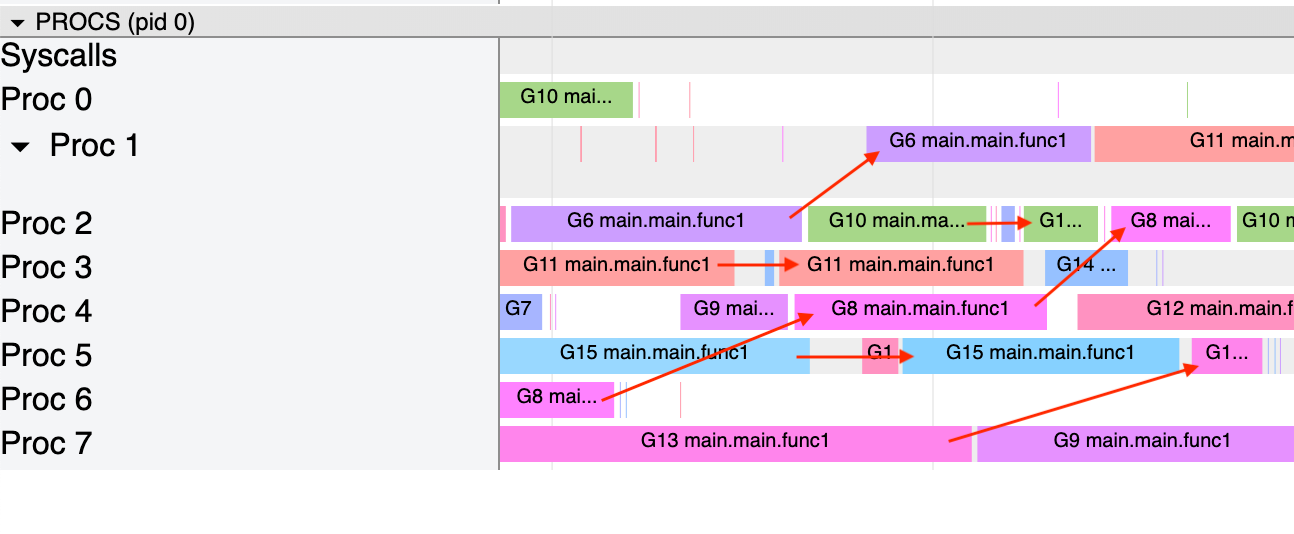

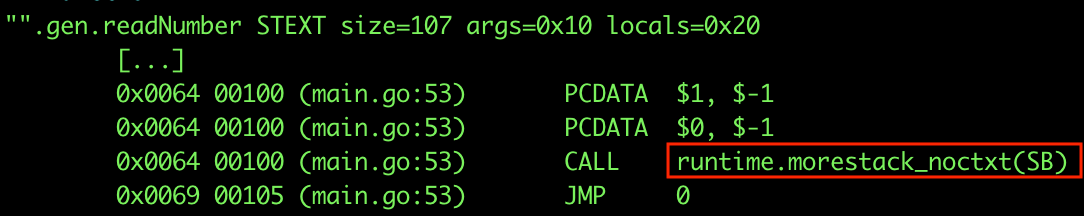

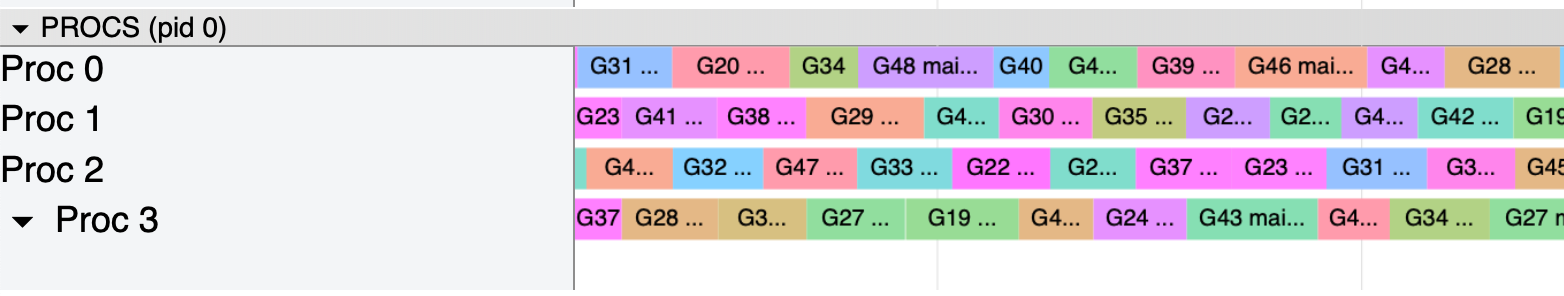

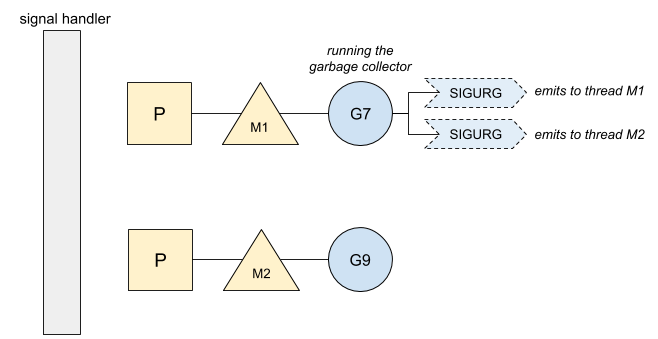

However, when visualizing the traces from this program, we clearly see the goroutines are preempted and switch among them:

We can also see that all the blocks representing the goroutines have the same length. The goroutines get almost the same running time (around 10/20ms):

The asynchronous preemption is triggered based on a time condition. When a goroutine is running for more than 10ms, Go will try to preempt it.

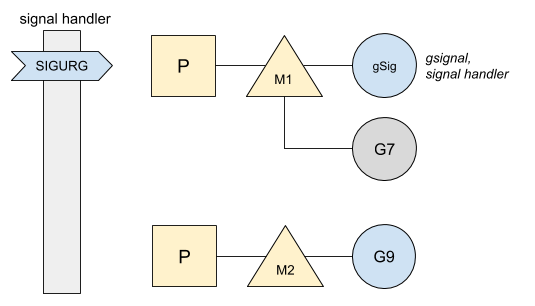

The preemption is initiated by the thread sysmon, dedicated to watching after the runtime, long-running goroutines included. Once a goroutine is detected running more than 10ms, a signal is emitted to the current thread for its preemption:

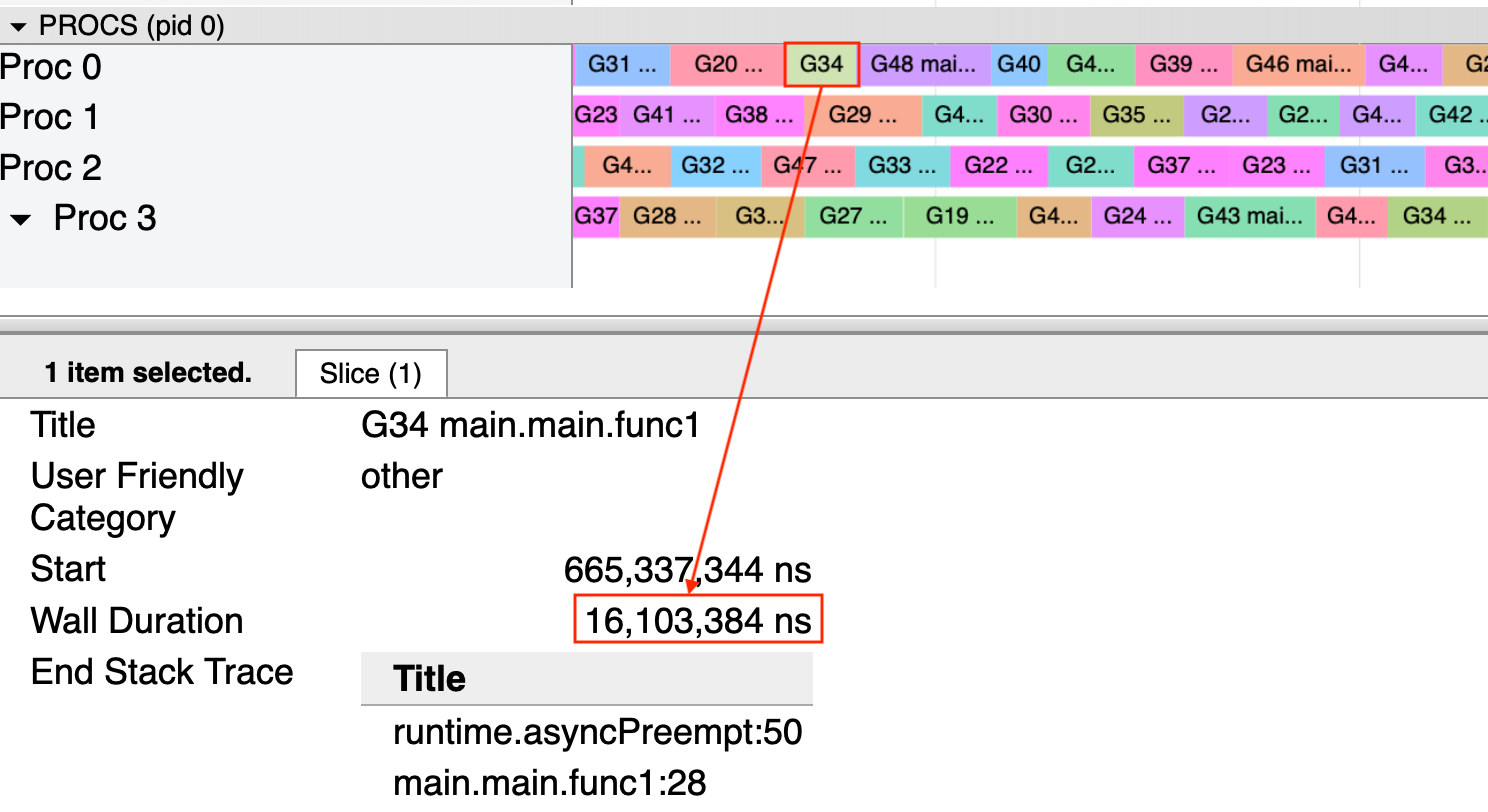

Then, once the message is received by the signal handler, the thread is interrupted to handle it, and therefore does not run the current goroutine anymore — G7 in our example. Instead, gsignal is scheduled to manage the incoming signal. Since it finds out it is a preemption instruction, it sets instruction up to stop the current goroutine when the program resumes after the signal handling. Here is a diagram of this second phase:

For more details about gsignal, I suggest you read my article “Go: gsignal, Master of Signals.”

Implementation

The first detail of the implementation we have seen is the chosen signal SIGURG. This choice is well explained in the proposal “Proposal: Non-cooperative goroutine preemption”:

- It should be a signal that’s passed-through by debuggers by default.

- It shouldn’t be used internally by libc in mixed Go/C binaries […].

- It should be a signal that can happen spuriously without consequences.

- We need to deal with platforms without real-time signals […].

Then, once the signal is injected and received, Go needs a way to stop the current goroutine when the program resumes. To achieve this, Go will push an instruction in the program counter, to make it looks like the running program called a function in the runtime. This function parks the goroutine and hands it to the scheduler that will run another one.

We should note that Go cannot stop the program anywhere; the current instruction must be a safe point. For instance, if the program is currently calling the runtime, it would not be safe to preempt the goroutine since many functions in the runtime should not be preempted.

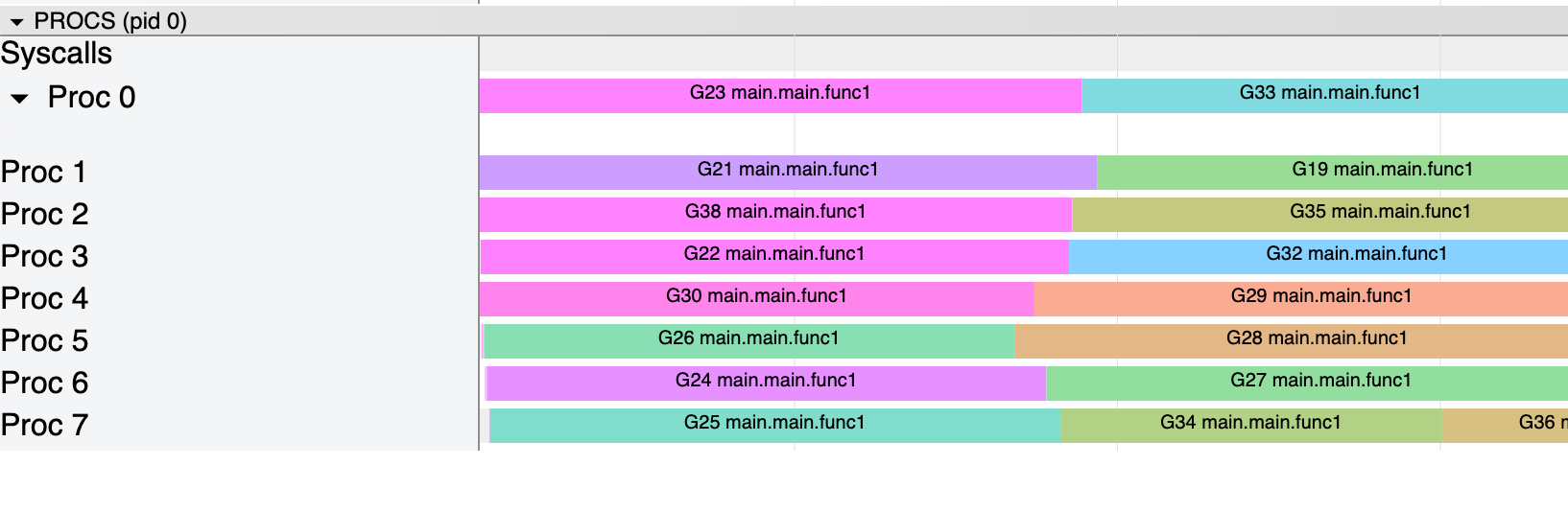

This new preemption also benefits the garbage collector that can stop all the goroutines in a more efficient way. Indeed, stopping the world is now much easier, Go just has to send a signal to every running thread. Here is an example when the garbage collector is running:

Then, each thread receives the signal and pauses the execution until the garbage collector starts the world again.

For more information about the phase “Stop the World,” I suggest you read my article “Go: How Does Go Stop the World?”

At last, this feature is shipped with a flag to deactivate the asynchronous preemption. You can run your program with GODEBUG=asyncpreemptoff=1, that will allow you to debug your program if you see anything incorrect due to the upgrade to Go 1.14, or see how your application performs with or without asynchronous preemption.

ℹ️ 本文基于 Go 1.14。

抢占是调度器的重要部分,基于抢占调度器可以在各个协程中分配运行的时间。实际上,如果没有抢占机制,一个长时间占用 CPU 的协程会阻塞其他的协程被调度。1.14 版本引入了一项新的异步抢占的技术,赋予了调度器更大的能力和控制力。

我推荐你阅读我的文章”Go:协程和抢占“[1]来了解更多之前的特性和它的弊端。

工作流

我们以一个需要抢占的例子来开始。下面一段代码开启了几个协程,在几个循环中没有其他的函数调用,意味着调度器没有机会抢占它们:

然而,当把这个程序的追踪过程可视化后,我们清晰地看到了协程间的抢占和切换:

我们还可以看到表示协程的每个块儿的长度都相等。所有的协程运行时间相同(约 10 到 20 毫秒)。

异步抢占是基于一个时间条件触发的。当一个协程运行超过 10ms 时,Go 会尝试抢占它。

抢占是由线程 sysmon 初始化的,该线程专门用于监控包括长时间运行的协程在内的运行时。当某个协程被检测到运行超过 10ms 后,sysmon 向当前的线程发出一个抢占信号。

之后,当信息被信号处理器接收到时,线程中断当前的操作来处理信号,因此不会再运行当前的协程,在我们的例子中是 G7。取而代之的是,gsignal 被调度为管理发送来的信号。当它发现它是一个抢占指令后,在程序处理信号后恢复时它准备好指令来中止当前的协程。下面是这第二个阶段的示意图:

如果你想了解更多关于 gsignal 的信息,我推荐你读一下我的文章”Go:gsignal,信号的掌控者“[2]。

实现

我们在被选中的信号 SIGURG 中第一次看到了实现的细节。这个选择在提案”提案:非合作式协程抢占“[3]中有详细的解释:

- 它应该是调试者默认传递过来的一个信号。

- 它不应该是 Go/C 混合二进制中 libc 内部使用的信号。

- 它应该是一个可以伪造而没有其他后果的信号。

- 我们需要在没有实时信号时与平台打交道。

然后,当信号被注入和接收时,Go 需要一种在程序恢复时能终止当前协程的方式。为了实现这个过程,Go 会把一条指令推进程序计数器,这样看起来运行中的程序调用了运行时的函数。该函数暂停了协程并把它交给了调度器,调度器之后还会运行其他的协程。

我们应该注意到 Go 不能做到在任何地方终止程序;当前的指令必须是一个安全点。例如,如果程序现在正在调用运行时,那么抢占协程并不安全,因为运行时很多函数不应该被抢占。

这个新的抢占机制也让垃圾回收器受益,可以用更高效的方式终止所有的协程。诚然,STW 现在非常容易,Go 仅需要向所有运行的线程发出一个信号就可以了。下面是垃圾回收器运行时的一个例子:

然后,所有的线程都接收到这个信号,在垃圾回收器重新开启全局之前会暂停执行。

如果你想了解更多关于 STW 的信息,我建议你阅读我的文章”Go:Go 怎样实现 STW?“[4]。

最后,这个特性被封装在一个参数中,你可以用这个参数关闭异步抢占。你可以用 GODEBUG=asyncpreemptoff=1 来运行你的程序,如果你因为升级到了 Go 1.14 发现了不正常的现象就可以调试你的程序,或者观察你的程序有无异步抢占时的不同表现。

via: https://medium.com/a-journey-with-go/go-asynchronous-preemption-b5194227371c

https://mp.weixin.qq.com/s/Wp15aOLeYhZYla275TzISw

go trace 剖析 go1.14 异步抢占式调度

大纲

-

抢占调度

-

go tool trace

-

View trace

-

golang 抢占调度

-

思考问题

-

总结

抢占调度

go 1.14 版本带来了一个非常重要的特性:异步抢占的调度模式。之前我们通过解释一个简单的协程调度原理(),并且实现协程调度例子都提到了一个点:协程是用户态实现的自我调度单元,每个协程都是君子才能维护和谐的调度秩序,如果出现了流氓(占着 cpu 不放的协程)你是无可奈何的。

go1.14 之前的版本所谓的抢占调度是怎么样的:

- 如果 sysmon 监控线程发现有个协程 A 执行之间太长了(或者 gc 场景,或者 stw 场景),那么会友好的在这个 A 协程的某个字段设置一个抢占标记 ;

- 协程 A 在 call 一个函数的时候,会复用到扩容栈(morestack)的部分逻辑,检查到抢占标记之后,让出 cpu,切到调度主协程里;

这样 A 就算是被抢占了。我们注意到,A 调度权被抢占有个前提:A 必须主动 call 函数,这样才能有走到 morestack 的机会(旁白:能抢君子调度权,抢占不了流氓的)。

举个栗子,然后看下 go1.13 和 go1.14 的分析对比:

特殊处理:

- 为了研究方便,我们只用一个 P(处理器),这样就确保是单处理器的场景;

- 回忆下 golang 的 GMP 模型:调度单元 G,线程 M,队列 P,由于 P 只有一个,所以每时每刻有效执行的 M 只会有一个,也就是单处理器的场景(旁白:个人理解有所不同,有些人喜欢直接把 P 理解成处理器,我这里把 P 说成队列是从实现的角度来讲的);

- 打开 golang 调试大杀器 trace 工具(可以直观的观察调度的情况);

- 搞一个纯计算且耗时的函数

calcSum(不给任何机会);

下面创建一个名为 example.go 的文件,写入以下内容:

package mainimport ("fmt""os""runtime""runtime/trace""sync")func calcSum(w *sync.WaitGroup, idx int) {defer w.Done()var sum, n int64for ; n < 1000000000; n++ {sum += n}fmt.Println(idx, sum)}func main() {runtime.GOMAXPROCS(1)f, _ := os.Create("trace.output")defer f.Close()_ = trace.Start(f)defer trace.Stop()var wg sync.WaitGroupfor i := 0; i < 10; i++ {wg.Add(1)go calcSum(&wg, i)}wg.Wait()}

我们分别看下 go1.13, go.14 对于这个程序的表现区别。

trace 这个就再简单提下,trace 是 golang 内置的一种调试手段,能够 trace 一段时间程序的运行情况。能看到:

- 协程的调度运行情况;

- 跑在每个处理器 P 上协程情况;

- 协程出发的事件链;

编译、运行的程序:

$ go build -gcflags "-N -l" ./example.go$ ./example

这样在本地就能生成一个 trace.output 文件;

分析 trace 输出:

$ go tool trace -http=":6060" ./trace.output提一点,如果你的浏览器 View trace 是空白页没有任何显示,那么原因是:浏览器的一些 js 接口被禁用了。

Trace Viewer is running with WebComponentsV0 polyfill, and some features may be broken. As a workaround, you may try running chrome with "--enable-blink-features=ShadowDOMV0,CustomElementsV0,HTMLImports" flag. See crbug.com/1036492.

解决方法有两个:

1)加上参数,打开浏览器的参数开关 2)使用 go1.14 分析渲染,因为 go1.14 解决了这个问题;

go tool trace

在后台执行命令:

$ go tool trace -http=":6060" ./trace.output这样就能分析 trace.output 这个文件了,可以用浏览器来方便查看分析的结果,如下:

名词解释:

- View trace:查看跟踪(这个是今天要使用的重点),能看到一段时间内 goroutine 的调度执行情况,包括事件触发链;

- Goroutine analysis:Goroutine 分析,能看到这段时间所有 goroutine 执行的一个情况,执行堆栈,执行时间;

- Network blocking profile:网络阻塞概况(分析网络的一些消耗)

- Synchronization blocking profile:同步阻塞概况(分析同步锁的一些情况)

- Syscall blocking profile:系统调用阻塞概况(分析系统调用的消耗)

- Scheduler latency profile:调度延迟概况(函数的延迟占比)

- User defined tasks:自定义任务

- User defined regions:自定义区域

- Minimum mutator utilization:Mutator 利用率使用情况

我们今天分析抢占调度,只需要看 View trace 这个展示就行了。

View trace

- 横坐标为时间线,表示采样的顺序时间;

- 纵坐标为采样的指标,分为两大块:STATS,PROCS

(这些采样值都要配合时间轴来看,你可以理解成是一些快照数据)

STATS

处于上半区,展示的有三个指标 Goroutines,Heap,Threads,你鼠标点击彩色的图样,就能看到这一小段时间的采样情况:

- Goroutines:展示某个时间 GCWaiting,Runnable,Running 三种状态的协程个数;

- Heap:展示某个时间的 NextGC,Allocated 的值;

- Threads:展示 InSyscall,Running 两个状态的线程数量情况;

PROCS

- 显示每个处理器当时正在处理的协程,事件,和一些具体运行时信息;

- Proc 的个数由 GOMAXPROCS 参数控制,默认和机器核心数一致;

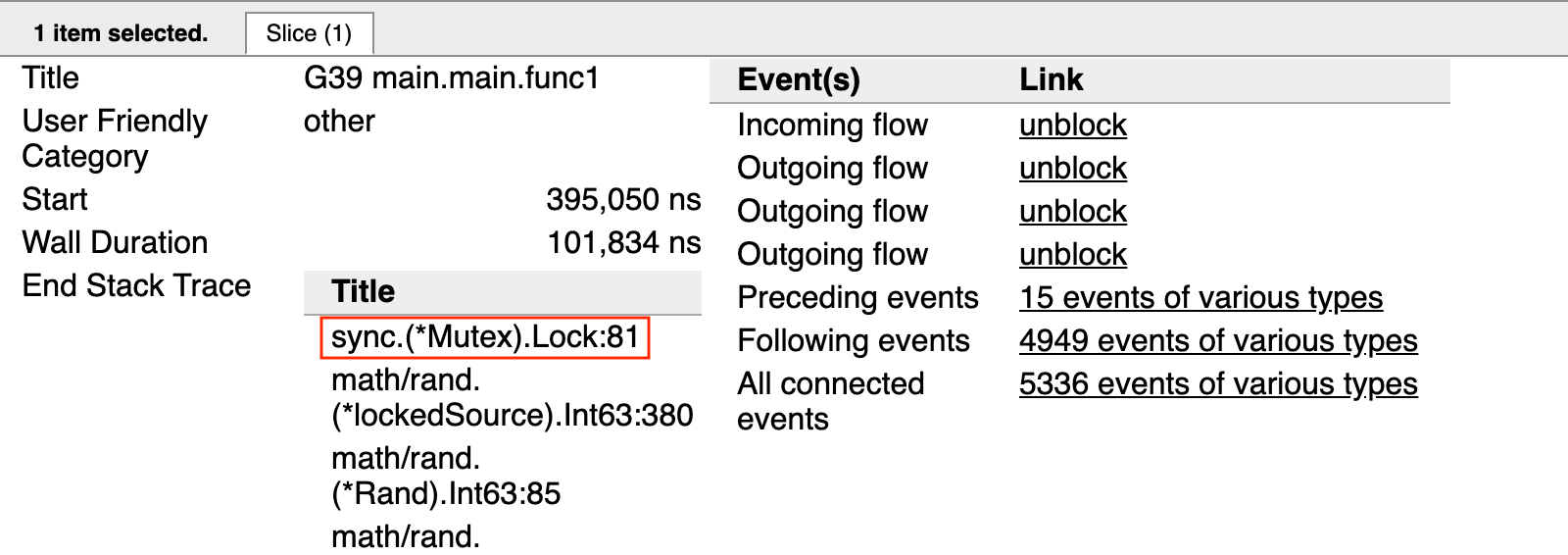

你点击一个协程区域,就会显示这个时间段的情况,有一些指标:

- Start:开始时间(就是时间轴上的刻度)

- Wall Duration:持续时间(这个 goroutine 在这个处理器上连续执行的小段时间)

- Start Stack Trace:协程调用栈(切进来执行的 goroutine 调用栈)

- End Stack Trace:切走时候时候的调用栈

- Incoming flow:触发切入的事件

- Outgoing flow:触发切走的事件

- Preceding events:这个协程相关的之前所有的事件

- Follwing events:这个协程相关的之后所有的事件

- All connected:这个协程相关的所有事件

golang 抢占调度

现在有了 View trace 的基础知识,我们用来观察 go1.13 和 go1.14 的抢占情况。编译 exmaple.go 文件,然后执行生成 trace.output 文件,go tool trace 分析这个文件,结果如下:

go1.13 trace 分析

trace 内部分析如下:

从 trace 这个图我们可以非常直观的得出一些信息:

- 只有一个处理器(Proc 0)调度协程;

- 因为我们代码里面设置

GOMAXPROCS = 1 - 一共有 10 协程执行(可以数一下);

- example.go 的 for 循环就是 10 次

- 10 个协程在 Proc 0 上是串行执行的,从图里非常明显可以看到,执行完之后 goroutine 才会执行下一个协程,无法抢占;

- 每个 goroutine 连续执行 1.6s 左右,10 个协程执行时间总的时间耗费 16 s;

- 协程切入是从

main.calcSum:11切入的,切出是从main.calcSum:17切出的(fmt.Println 这个函数);

所以从这个 trace 分析,我们明确的看到,针对 calcSum 这样的流氓函数,go1.13 是毫无办法的,一旦执行开始,只能等执行结束。每个 goroutine 耗费了 1.6s 这么长的时间,也无法抢占他的执行权。

go1.14 trace 分析

现在我们看下用 go1.14 编译出的同一份程序,运行的 trace 结果:

猛的一看,密密麻麻的,红蓝相间的细条。我下面详细的说明,我们选中一个片段,并且看一下整体信息:

- 还是只有一个处理器(Proc 0)调度协程;

- 因为我们代码里面设置

GOMAXPROCS = 1 - 程序运行的总时间还是 16s(这个小伙伴能不能理解?虽然 10 个 goroutine 是并发运行的,但是你只有一个处理器,所以时间肯定是一样的,但如果你有多个处理器的时候,就不一样了);

- 这个 goroutine 只执行了 20ms 就让出处理器了;

- 我们大概知道,example.go 里面

calcaSum函数在我的机器上大概需要 1.6s 的时间,所以执行 20ms 就切走了肯定是还没有执行完的,是被强制抢占了处理器; - 切入的栈还是

main.calcSum:11,这个跟 go1.13 一样; - 切出的栈变了,

runtime.asyncPreempt:50这就是最大的不同,从这个地方也能明确的知道,被异步抢占了;

这样密密麻麻的红蓝片段,无法知道一共多少 goroutine?其实是可以的,可以通过 STATS 区来看:

选中 Goroutines 区域,点点点,就会看到 Goroutines 的总数一直是 10 个,正在运行的 goroutine (Running)是 1 个,等待调度的(Runnable)是 9 个。

可以执行 W 快捷键,可以把图片放大:

思考问题

问题一:go1.14 确实带来了并发,我们看到在我们的 demo 里面,goroutine 的运行被强制切成了小段的时间片,所以再流氓的函数也不再害怕。但是为啥在我们 example.go 的演示里面,虽然 10 个 goroutine 全并发了,运行总时间却没有丝毫优化?

根本原因:只有一个处理器,所以,就算你做了多少并发,不同的 goroutine 反复横跳,效果还是一样的,因为只有一个处理器干活。

问题二:如果我用 2 个处理器呢?

先看结果:

go1.13

go 1.14

从 trace 图看到时间还是一样,都缩短一倍,8秒。

思考两个问题:

- 添加处理器后,时间为啥能缩短一倍(16s -> 8s)?

因为处理器变成 2 了,这个应该很容易理解,总共就 10 个 协程,之前一个处理器处理 10个,时间就耗费 16 s,现在 2 个处理器,每个处理器平均处理 5 个,处理器是并行运行的,所以自然时间就少一半了。

- 为啥 go1.13,go1.14 时间还是一样的?

根本原因在于:我们举的例子是纯计算类型的,每个协程的时间固定是 1.6 s,这个是没法减少的。16 个协程总时间是 16 s 这个是无法减少的。你只有两个处理器,最好的情况也就是 2 个处理器并行处理,时间完全减半。

问题三:那这种抢占行调度有啥用?

这种协程调度本质上是为了增加系统的吞吐能力。抢占型调度是为了让大家公平的得到调度,不会让系统因为某一个协程卡死(因为处理器资源有限),举个例子:

G1: ||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||||G2: ||||G3: ||||G4: |||

假设:

- 只有一个处理器(GOMAXPROCS = 1);

- G1,G2,G3,G4 依次在调度队列里,并且这个协程都是纯计算的逻辑;

- G1 执行需要 1 小时,G2 执行需要 0.02 秒,G3 执行需要 0.02 秒,G4 执行需要 0.01 秒;

如果是 go1.13 这样不可抢占的模式,先执行 G1,那么一个小时之后再执行 G2,G3,G4,这段时间不能执行任何逻辑,相当于系统卡死 1 小时,1小时内无作为(并且还会堆积请求),系统吞吐率极低;

如果是 go1.14,那么先执行 G1,20ms之后,让出调度权,依次执行 G2,G3,G4 瞬间就完成了,并且之后有新的请求来,系统还是可以响应的,吞吐率高。尤其是在 IO 操作的情况,其实只需要一点点 cpu 就行了,这些抢占过来的 cpu 能够用在很多更有效的场景。

总结

- go1.14 带来了真正的抢占式任务调度,让 goroutine 任务的调度执行更加公平,避免了流氓协程降低整个系统吞吐能力的情况发生;

- 本片文章从简单栗子入手,通过 trace 工具图形化展示了在 go1.13 和 go1.14 的调度执行情况,从 trace 结果来看,实锤,非常直观;

- 我们理解了抢占调度带来的好处,并且形象的观测到了,并且还发现了

runtime.asyncPreempt这个函数(预告:后面会有个代码原理层面的详细梳理,在此我们只需要知道 go1.14 确是实现了异步抢占的调度方式,是通过异步信号来实现的); - 先让你把抽象的概念具现化,让你看得到,摸得到,然后再去细化原理。这个也是笔者喜欢的一种学习方式;

- 我们顺便操作并解释了

go tool trace的使用,和参数含义,trace 工具可是个 golang 问题排查的大杀器,非常推荐;