Hadoop (2.x) Pseudo-Distributed Setup

System environment

/*
OS: CentOS 7
Hostname: centos02
IP: 192.168.122.1

Java: 1.8
Hadoop: 2.8.5
*/

Create directories

[root@centos02 opt]# pwd
/opt
[root@centos02 opt]#
[root@centos02 opt]# mkdir -m 777 bigdata
[root@centos02 opt]# mkdir -m 777 bigdata/hadoop
[root@centos02 opt]#

Extract Hadoop into the target directory

[root@centos02 opt]# tar xzf hadoop-2.8.5.tar.gz -C /opt/bigdata/hadoop

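Change the permissions of the extracted Hadoop directory (chmod changes permission bits; chown -R 777 would instead make UID 777 the owner of every file, which is what the owner column of the sbin listing further below reflects)

[root@centos02 opt]# chmod -R 777 /opt/bigdata/hadoop/hadoop-2.8.5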

Create Hadoop's working directories (tmp, name, data, yarn/logs)

[root@centos02 opt]# cd /opt/bigdata/hadoop/hadoop-2.8.5
[root@centos02 hadoop-2.8.5]# pwd
/opt/bigdata/hadoop/hadoop-2.8.5
[root@centos02 hadoop-2.8.5]#
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp/tmp
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp/name
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp/data
[root@centos02 hadoop-2.8.5]#
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp/yarn
[root@centos02 hadoop-2.8.5]# mkdir -m 777 hadoop-temp/yarn/logs
[root@centos02 hadoop-2.8.5]#
[root@centos02 hadoop-2.8.5]# cd ./hadoop-temp
[root@centos02 hadoop-temp]# pwd
/opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp
[root@centos02 hadoop-temp]#
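The mkdir calls above can be collapsed into one idempotent step. This is a minimal sketch: make_hadoop_temp is a hypothetical helper that takes the install directory as an argument (defaulting to the path used in this guide) so it can be tried elsewhere first. It calls chmod explicitly because mkdir -p -m 777 applies the mode only to directories it actually creates, and with -p only to the last path component.

```bash
#!/usr/bin/env bash
# Hypothetical helper: create Hadoop's working directories under an install dir.
# Usage: make_hadoop_temp [install_dir]
make_hadoop_temp() {
  local root="${1:-/opt/bigdata/hadoop/hadoop-2.8.5}/hadoop-temp"
  local d
  for d in "$root" "$root/tmp" "$root/name" "$root/data" "$root/yarn" "$root/yarn/logs"; do
    # -p: succeed if the directory already exists, so re-running is safe
    mkdir -p "$d" || return 1
    # Set the mode explicitly; mkdir -m would leave pre-existing directories unchanged
    chmod 777 "$d" || return 1
  done
}
```

Run as root with no argument, it produces the same layout as the transcript; running it a second time changes nothing.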

Add environment variables

[root@centos02 hadoop-2.8.5]# vim /etc/profile

# Java
export JAVA_HOME=/usr/local/java/jdk1.8
export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export PATH=$JAVA_HOME/bin:$PATH

# Hadoop
export HADOOP_HOME=/opt/bigdata/hadoop/hadoop-2.8.5
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_INSTALL=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

[root@centos02 hadoop-2.8.5]# source /etc/profile
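After sourcing the profile, it is worth confirming that the variables took effect in the current shell before going further. A minimal sketch; check_hadoop_env is a hypothetical helper that only inspects the environment and changes nothing:

```bash
#!/usr/bin/env bash
# Hypothetical sanity check: run after `source /etc/profile`.
# Prints each problem to stderr and returns non-zero if anything is off.
check_hadoop_env() {
  local rc=0
  if [ ! -x "${JAVA_HOME:-}/bin/java" ]; then
    echo "JAVA_HOME has no bin/java: '${JAVA_HOME:-}'" >&2; rc=1
  fi
  if [ ! -x "${HADOOP_HOME:-}/bin/hadoop" ]; then
    echo "HADOOP_HOME has no bin/hadoop: '${HADOOP_HOME:-}'" >&2; rc=1
  fi
  # hdfs/hadoop (bin) and start-all.sh (sbin) must be callable without a path prefix
  case ":$PATH:" in
    *":${HADOOP_HOME:-/nonexistent}/bin:"*) ;;
    *) echo "PATH lacks \$HADOOP_HOME/bin" >&2; rc=1 ;;
  esac
  case ":$PATH:" in
    *":${HADOOP_HOME:-/nonexistent}/sbin:"*) ;;
    *) echo "PATH lacks \$HADOOP_HOME/sbin" >&2; rc=1 ;;
  esac
  return $rc
}
```

Usage: check_hadoop_env && echo "environment OK". Keep in mind that source /etc/profile only affects the current shell; other shells see the new variables after their next login.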

Set JAVA_HOME in Hadoop's runtime environment scripts

hadoop-env.sh

[root@centos02 hadoop-2.8.5]# cd ./etc/hadoop
[root@centos02 hadoop]#
[root@centos02 hadoop]# vim hadoop-env.sh

# export JAVA_HOME=${JAVA_HOME}
export JAVA_HOME=/usr/local/java/jdk1.8

yarn-env.sh

[root@centos02 hadoop]# vim yarn-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8

mapred-env.sh

[root@centos02 hadoop]# vim mapred-env.sh

export JAVA_HOME=/usr/local/java/jdk1.8
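The same three edits can be made without opening vim. A sketch under one assumption: each script contains a (possibly commented-out) export JAVA_HOME= line, as the stock files appear to, and that line is rewritten in place so it keeps its position in the script; if no such line exists, one is appended. set_java_home is a hypothetical helper, the JDK path must not contain | or &, and sed -i -E assumes GNU sed (as on CentOS).

```bash
#!/usr/bin/env bash
# Hypothetical helper: point hadoop-env.sh, yarn-env.sh and mapred-env.sh at one JDK.
# Usage: set_java_home CONF_DIR JAVA_HOME_PATH
set_java_home() {
  local conf_dir="$1" java_home="$2" f
  for f in hadoop-env.sh yarn-env.sh mapred-env.sh; do
    f="$conf_dir/$f"
    [ -f "$f" ] || { echo "missing $f" >&2; return 1; }
    if grep -qE '^#? *export JAVA_HOME=' "$f"; then
      # Replace the existing (or commented) line in place, e.g. `export JAVA_HOME=${JAVA_HOME}`
      sed -i -E "s|^#? *export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$f"
    else
      # No JAVA_HOME line at all: append one
      printf 'export JAVA_HOME=%s\n' "$java_home" >> "$f"
    fi
  done
}
```

Usage: set_java_home "$HADOOP_CONF_DIR" /usr/local/java/jdk1.8. The helper is idempotent: a second run rewrites the line to the same value.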

Edit the Hadoop configuration files

core-site.xml

[root@centos02 hadoop]# vim core-site.xml

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://centos02:9000</value>
    </property>
    <property>
        <name>hadoop.home</name>
        <value>/opt/bigdata/hadoop/hadoop-2.8.5</value>
        <description>Custom property (not a built-in Hadoop key), used only for ${hadoop.home} substitution in the values below</description>
    </property>
    <property>
        <name>hadoop.tmp.dir</name>
        <value>${hadoop.home}/hadoop-temp/tmp</value>
    </property>
    <property>
        <name>hadoop.security.authorization</name>
        <value>false</value>
        <description>Whether to enable service-level authorization</description>
    </property>
    <property>
        <name>hadoop.proxyuser.root.hosts</name>
        <value>*</value>
    </property>
    <property>
        <name>hadoop.proxyuser.root.groups</name>
        <value>*</value>
    </property>
</configuration>
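fs.defaultFS (and yarn.resourcemanager.hostname further down) refer to the machine by the name centos02, so that name must resolve to this host's address (192.168.122.1 in this setup), typically through an /etc/hosts line such as "192.168.122.1 centos02". A minimal check, using a hypothetical helper built on getent:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the first address a hostname resolves to via NSS,
# i.e. the "hosts" sources in /etc/nsswitch.conf (usually /etc/hosts, then DNS).
# Returns non-zero if the name does not resolve.
resolve_host() {
  local addr
  addr=$(getent hosts "$1" | awk 'NR == 1 { print $1 }')
  [ -n "$addr" ] || { echo "cannot resolve '$1'" >&2; return 1; }
  printf '%s\n' "$addr"
}
```

Usage: [ "$(resolve_host centos02)" = 192.168.122.1 ] && echo "centos02 resolves correctly".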

hdfs-site.xml

[root@centos02 hadoop]# vim hdfs-site.xml

<configuration>
    <property>
        <name>dfs.replication</name>
        <value>1</value>
        <description>Number of block replicas</description>
    </property>
    <property>
        <name>hadoop.home</name>
        <value>/opt/bigdata/hadoop/hadoop-2.8.5</value>
    </property>
    <property>
        <name>dfs.name.dir</name>
        <value>${hadoop.home}/hadoop-temp/name</value>
    </property>
    <property>
        <name>dfs.data.dir</name>
        <value>${hadoop.home}/hadoop-temp/data</value>
    </property>
    <property>
        <name>dfs.blocksize</name>
        <value>128m</value>
    </property>
    <property>
        <name>dfs.webhdfs.enabled</name>
        <value>true</value>
        <description>Enable WebHDFS, the HTTP REST interface to HDFS</description>
    </property>
    <property>
        <name>dfs.permissions.enabled</name>
        <value>false</value>
        <description>Whether to enable permission checking in HDFS; false disables it</description>
    </property>
</configuration>
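In Hadoop 2.x, dfs.name.dir and dfs.data.dir are deprecated aliases of dfs.namenode.name.dir and dfs.datanode.data.dir, and the format log in the next section warns that the name directory "should be specified as a URI". A sketch of the equivalent properties using the current keys and file:// URIs, generated by a hypothetical shell helper so the install path is written only once:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print hdfs-site.xml <property> elements for the NameNode
# and DataNode directories, using non-deprecated keys and file:// URIs.
hdfs_dir_properties() {
  local home="${1:-/opt/bigdata/hadoop/hadoop-2.8.5}"
  cat <<EOF
    <property>
        <name>dfs.namenode.name.dir</name>
        <value>file://${home}/hadoop-temp/name</value>
    </property>
    <property>
        <name>dfs.datanode.data.dir</name>
        <value>file://${home}/hadoop-temp/data</value>
    </property>
EOF
}
```

The output goes inside <configuration> in place of the dfs.name.dir and dfs.data.dir properties; the directories themselves stay the same.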

mapred-site.xml

[root@centos02 hadoop]# mv mapred-site.xml.template mapred-site.xml
[root@centos02 hadoop]# vim mapred-site.xml

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
        <description>Run MapReduce jobs on YARN</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.address</name>
        <value>centos02:10020</value>
        <description>JobHistory server address</description>
    </property>
    <property>
        <name>mapreduce.jobhistory.webapp.address</name>
        <value>centos02:19888</value>
        <description>JobHistory server web UI address and port</description>
    </property>
</configuration>

yarn-site.xml

[root@centos02 hadoop]# vim yarn-site.xml

<configuration>
    <property>
        <name>yarn.resourcemanager.hostname</name>
        <value>centos02</value>
        <description>Hostname of the ResourceManager</description>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
        <description>Auxiliary service run by the NodeManager; it must be mapreduce_shuffle for MapReduce programs to run</description>
    </property>
    <property>
        <name>hadoop.home</name>
        <value>/opt/bigdata/hadoop/hadoop-2.8.5</value>
    </property>
    <property>
        <name>yarn.log-aggregation-enable</name>
        <value>true</value>
        <description>Enable log aggregation</description>
    </property>
    <property>
        <name>yarn.log-aggregation.retain-seconds</name>
        <value>604800</value>
        <description>How long aggregated logs are kept, 604800 = 7*24*3600 (7 days)</description>
    </property>
    <property>
        <name>yarn.nodemanager.log.retain-seconds</name>
        <value>604800</value>
        <description>How long the NodeManager keeps container logs locally, 604800 = 7*24*3600 (7 days); only used when log aggregation is disabled</description>
    </property>
    <property>
        <name>yarn.nodemanager.remote-app-log-dir</name>
        <value>${hadoop.home}/hadoop-temp/yarn/logs</value>
        <description>Where aggregated logs are stored; this path is on the default file system (HDFS here), not the local disk</description>
    </property>
    <property>
        <name>yarn.resourcemanager.address</name>
        <value>${yarn.resourcemanager.hostname}:8032</value>
    </property>
    <property>
        <name>yarn.resourcemanager.webapp.address</name>
        <value>${yarn.resourcemanager.hostname}:8088</value>
    </property>
    <property>
        <name>yarn.resourcemanager.admin.address</name>
        <value>${yarn.resourcemanager.hostname}:8033</value>
    </property>
    <property>
        <name>yarn.resourcemanager.scheduler.address</name>
        <value>${yarn.resourcemanager.hostname}:8030</value>
    </property>
    <property>
        <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
        <value>org.apache.hadoop.mapred.ShuffleHandler</value>
    </property>
    <property>
        <name>yarn.nodemanager.vmem-check-enabled</name>
        <value>false</value>
        <description>Disable the NodeManager's virtual memory check (optional)</description>
    </property>
</configuration>

Check the Hadoop version

[root@centos02 bin]# hadoop version
Hadoop 2.8.5
Subversion https://git-wip-us.apache.org/repos/asf/hadoop.git -r 0b8464d75227fcee2c6e7f2410377b3d53d3d5f8
Compiled by jdu on 2018-09-10T03:32Z
Compiled with protoc 2.5.0
From source with checksum 9942ca5c745417c14e318835f420733
This command was run using /opt/bigdata/hadoop/hadoop-2.8.5/share/hadoop/common/hadoop-common-2.8.5.jar
[root@centos02 bin]#

Format HDFS (the NameNode)

[root@centos02 hadoop]# cd $HADOOP_HOME
[root@centos02 hadoop-2.8.5]# pwd
/opt/bigdata/hadoop/hadoop-2.8.5
[root@centos02 hadoop-2.8.5]#
[root@centos02 hadoop-2.8.5]# cd ./bin
[root@centos02 bin]# pwd
/opt/bigdata/hadoop/hadoop-2.8.5/bin
[root@centos02 bin]#

[root@centos02 bin]# hdfs namenode -format
19/08/28 06:45:02 INFO namenode.NameNode: STARTUP_MSG:
/************************************************************
STARTUP_MSG: Starting NameNode
STARTUP_MSG: user = root
STARTUP_MSG: host = centos02/192.168.122.1
STARTUP_MSG: args = [-format]
STARTUP_MSG: version = 2.8.5
STARTUP_MSG: classpath = /opt/bigdata/hadoop/hadoop-2.8.5/etc/hadoop:/opt/bigdata/hadoop/hadoop-2.8.5/share/hadoop/common/lib/jersey-server-1.9.jar:...
............
STARTUP_MSG: build = https://git-wip-us.apache.org/repos/asf/hadoop.git -r 0b8464d75227fcee2c6e7f2410377b3d53d3d5f8; compiled by 'jdu' on 2018-09-10T03:32Z
STARTUP_MSG: java = 1.8.0_181
************************************************************/
19/08/28 06:45:02 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]
19/08/28 06:45:02 INFO namenode.NameNode: createNameNode [-format]
19/08/28 06:45:02 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
19/08/28 06:45:02 WARN common.Util: Path /opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name should be specified as a URI in configuration files. Please update hdfs configuration.
19/08/28 06:45:02 WARN common.Util: Path /opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name should be specified as a URI in configuration files. Please update hdfs configuration.
Formatting using clusterid: CID-9360eaf7-7648-456a-ab49-b56d0232ce68
19/08/28 06:45:02 INFO namenode.FSEditLog: Edit logging is async:true
19/08/28 06:45:02 INFO namenode.FSNamesystem: KeyProvider: null
19/08/28 06:45:02 INFO namenode.FSNamesystem: fsLock is fair: true
19/08/28 06:45:02 INFO namenode.FSNamesystem: Detailed lock hold time metrics enabled: false
19/08/28 06:45:02 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000
19/08/28 06:45:02 INFO blockmanagement.DatanodeManager: dfs.namenode.datanode.registration.ip-hostname-check=true
19/08/28 06:45:02 INFO blockmanagement.BlockManager: dfs.namenode.startup.delay.block.deletion.sec is set to 000:00:00:00.000
19/08/28 06:45:02 INFO blockmanagement.BlockManager: The block deletion will start around 2019 八月 28 06:45:02
19/08/28 06:45:02 INFO util.GSet: Computing capacity for map BlocksMap
19/08/28 06:45:02 INFO util.GSet: VM type = 64-bit
19/08/28 06:45:02 INFO util.GSet: 2.0% max memory 889 MB = 17.8 MB
19/08/28 06:45:02 INFO util.GSet: capacity = 2^21 = 2097152 entries
19/08/28 06:45:02 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false
19/08/28 06:45:02 INFO blockmanagement.BlockManager: defaultReplication = 1
19/08/28 06:45:02 INFO blockmanagement.BlockManager: maxReplication = 512
19/08/28 06:45:02 INFO blockmanagement.BlockManager: minReplication = 1
19/08/28 06:45:02 INFO blockmanagement.BlockManager: maxReplicationStreams = 2
19/08/28 06:45:02 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000
19/08/28 06:45:02 INFO blockmanagement.BlockManager: encryptDataTransfer = false
19/08/28 06:45:02 INFO blockmanagement.BlockManager: maxNumBlocksToLog = 1000
19/08/28 06:45:02 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)
19/08/28 06:45:02 INFO namenode.FSNamesystem: supergroup = supergroup
19/08/28 06:45:02 INFO namenode.FSNamesystem: isPermissionEnabled = false
19/08/28 06:45:02 INFO namenode.FSNamesystem: HA Enabled: false
19/08/28 06:45:02 INFO namenode.FSNamesystem: Append Enabled: true
19/08/28 06:45:03 INFO util.GSet: Computing capacity for map INodeMap
19/08/28 06:45:03 INFO util.GSet: VM type = 64-bit
19/08/28 06:45:03 INFO util.GSet: 1.0% max memory 889 MB = 8.9 MB
19/08/28 06:45:03 INFO util.GSet: capacity = 2^20 = 1048576 entries
19/08/28 06:45:03 INFO namenode.FSDirectory: ACLs enabled? false
19/08/28 06:45:03 INFO namenode.FSDirectory: XAttrs enabled? true
19/08/28 06:45:03 INFO namenode.NameNode: Caching file names occurring more than 10 times
19/08/28 06:45:03 INFO util.GSet: Computing capacity for map cachedBlocks
19/08/28 06:45:03 INFO util.GSet: VM type = 64-bit
19/08/28 06:45:03 INFO util.GSet: 0.25% max memory 889 MB = 2.2 MB
19/08/28 06:45:03 INFO util.GSet: capacity = 2^18 = 262144 entries
19/08/28 06:45:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033
19/08/28 06:45:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0
19/08/28 06:45:03 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000
19/08/28 06:45:03 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.window.num.buckets = 10
19/08/28 06:45:03 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.num.users = 10
19/08/28 06:45:03 INFO metrics.TopMetrics: NNTop conf: dfs.namenode.top.windows.minutes = 1,5,25
19/08/28 06:45:03 INFO namenode.FSNamesystem: Retry cache on namenode is enabled
19/08/28 06:45:03 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis
19/08/28 06:45:03 INFO util.GSet: Computing capacity for map NameNodeRetryCache
19/08/28 06:45:03 INFO util.GSet: VM type = 64-bit
19/08/28 06:45:03 INFO util.GSet: 0.029999999329447746% max memory 889 MB = 273.1 KB
19/08/28 06:45:03 INFO util.GSet: capacity = 2^15 = 32768 entries
19/08/28 06:45:03 INFO namenode.FSImage: Allocated new BlockPoolId: BP-1572421829-192.168.122.1-1566945903160
19/08/28 06:45:03 INFO common.Storage: Storage directory /opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name has been successfully formatted.
19/08/28 06:45:03 INFO namenode.FSImageFormatProtobuf: Saving image file /opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name/current/fsimage.ckpt_0000000000000000000 using no compression
19/08/28 06:45:03 INFO namenode.FSImageFormatProtobuf: Image file /opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name/current/fsimage.ckpt_0000000000000000000 of size 321 bytes saved in 0 seconds.
19/08/28 06:45:03 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0
19/08/28 06:45:03 INFO util.ExitUtil: Exiting with status 0
19/08/28 06:45:03 INFO namenode.NameNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down NameNode at centos02/192.168.122.1
************************************************************/
[root@centos02 bin]#

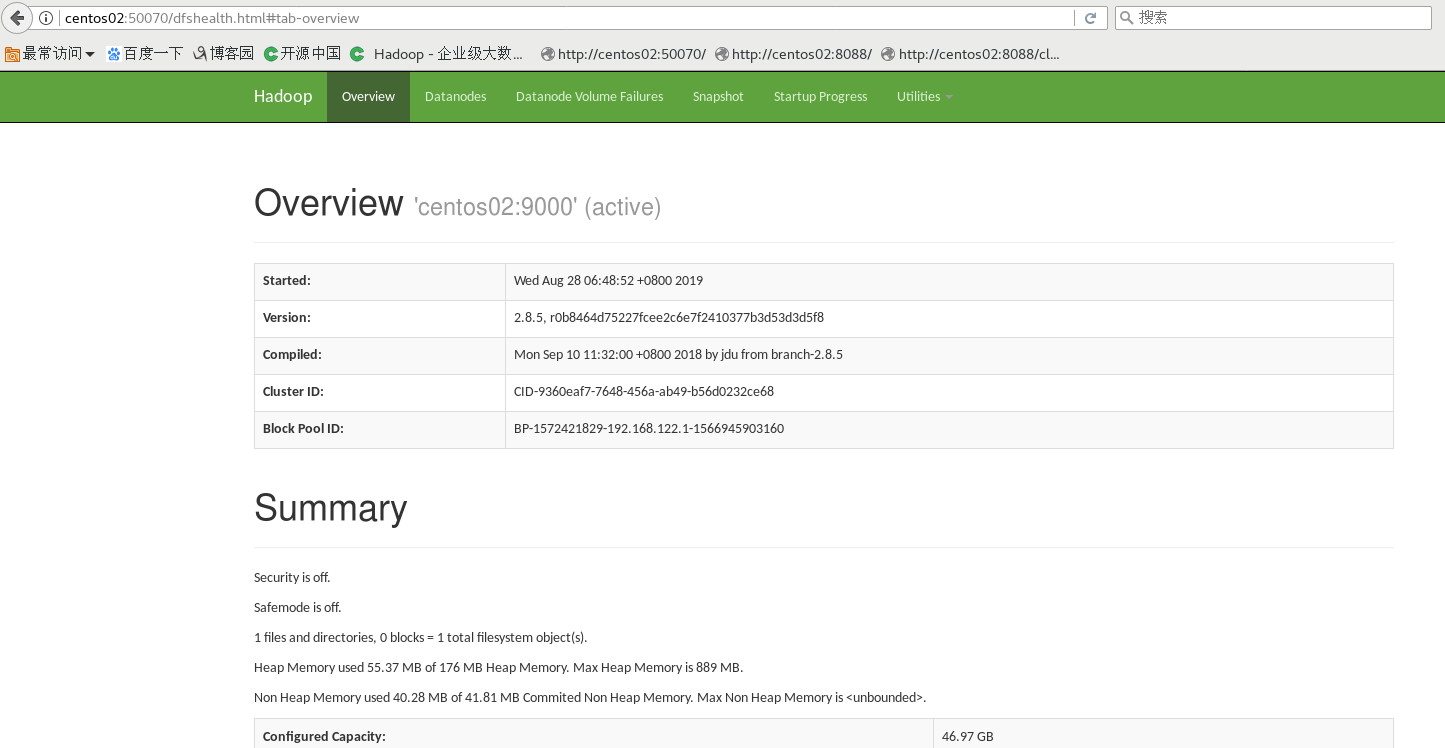

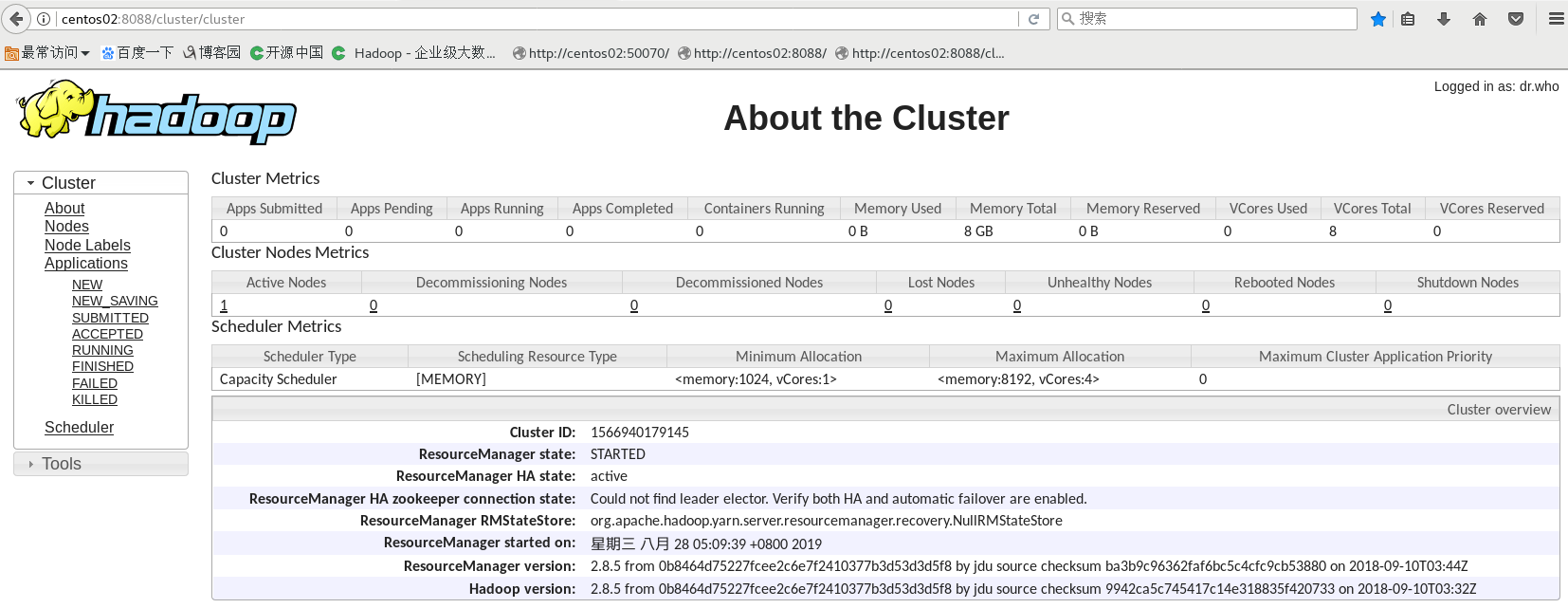
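Formatting is a one-time step. Re-running hdfs namenode -format on an existing name directory generates a new cluster ID (the "Formatting using clusterid" line in the log), after which a DataNode that still holds data from the earlier format typically refuses to register because its stored clusterID no longer matches. A hedged guard, assuming the marker of a formatted name directory is current/VERSION (which formatting writes there); needs_format is a hypothetical helper:

```bash
#!/usr/bin/env bash
# Hypothetical guard: succeed only if the NameNode directory has not been formatted.
# A formatted name directory contains current/VERSION (it records the clusterID).
needs_format() {
  local name_dir="${1:-/opt/bigdata/hadoop/hadoop-2.8.5/hadoop-temp/name}"
  if [ -f "$name_dir/current/VERSION" ]; then
    echo "$name_dir is already formatted; skipping" >&2
    return 1
  fi
  return 0
}
```

Usage: needs_format && hdfs namenode -format. The default path matches dfs.name.dir in the hdfs-site.xml above; pass another directory if yours differs.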

Start Hadoop

[root@centos02 bin]# cd ../
[root@centos02 hadoop-2.8.5]#
[root@centos02 hadoop-2.8.5]# cd ./sbin
[root@centos02 sbin]# pwd
/opt/bigdata/hadoop/hadoop-2.8.5/sbin
[root@centos02 sbin]# ll
总用量 124
-rwxr-xr-x 1 777 hadoop 2752 9月 10 2018 distribute-exclude.sh
-rwxr-xr-x 1 777 hadoop 6467 9月 10 2018 hadoop-daemon.sh
-rwxr-xr-x 1 777 hadoop 1360 9月 10 2018 hadoop-daemons.sh
-rwxr-xr-x 1 777 hadoop 1640 9月 10 2018 hdfs-config.cmd
-rwxr-xr-x 1 777 hadoop 1427 9月 10 2018 hdfs-config.sh
-rwxr-xr-x 1 777 hadoop 2339 9月 10 2018 httpfs.sh
-rwxr-xr-x 1 777 hadoop 3763 9月 10 2018 kms.sh
-rwxr-xr-x 1 777 hadoop 4134 9月 10 2018 mr-jobhistory-daemon.sh
-rwxr-xr-x 1 777 hadoop 1648 9月 10 2018 refresh-namenodes.sh
-rwxr-xr-x 1 777 hadoop 2145 9月 10 2018 slaves.sh
-rwxr-xr-x 1 777 hadoop 1779 9月 10 2018 start-all.cmd
-rwxr-xr-x 1 777 hadoop 1471 9月 10 2018 start-all.sh
-rwxr-xr-x 1 777 hadoop 1128 9月 10 2018 start-balancer.sh
-rwxr-xr-x 1 777 hadoop 1401 9月 10 2018 start-dfs.cmd
-rwxr-xr-x 1 777 hadoop 3734 9月 10 2018 start-dfs.sh
-rwxr-xr-x 1 777 hadoop 1357 9月 10 2018 start-secure-dns.sh
-rwxr-xr-x 1 777 hadoop 1571 9月 10 2018 start-yarn.cmd
-rwxr-xr-x 1 777 hadoop 1347 9月 10 2018 start-yarn.sh
-rwxr-xr-x 1 777 hadoop 1770 9月 10 2018 stop-all.cmd
-rwxr-xr-x 1 777 hadoop 1462 9月 10 2018 stop-all.sh
-rwxr-xr-x 1 777 hadoop 1179 9月 10 2018 stop-balancer.sh
-rwxr-xr-x 1 777 hadoop 1455 9月 10 2018 stop-dfs.cmd
-rwxr-xr-x 1 777 hadoop 3206 9月 10 2018 stop-dfs.sh
-rwxr-xr-x 1 777 hadoop 1340 9月 10 2018 stop-secure-dns.sh
-rwxr-xr-x 1 777 hadoop 1642 9月 10 2018 stop-yarn.cmd
-rwxr-xr-x 1 777 hadoop 1340 9月 10 2018 stop-yarn.sh
-rwxr-xr-x 1 777 hadoop 4295 9月 10 2018 yarn-daemon.sh
-rwxr-xr-x 1 777 hadoop 1353 9月 10 2018 yarn-daemons.sh
[root@centos02 sbin]#

[root@centos02 sbin]# jps
4741 Jps
[root@centos02 sbin]#

[root@centos02 sbin]# start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh
19/08/28 06:48:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
Starting namenodes on [centos02]
The authenticity of host 'centos02 (192.168.122.1)' can't be established.
ECDSA key fingerprint is SHA256:P1L8h3CQufhYRfgBafwGjB18GugzL+XCi62o2x8cM0I.
ECDSA key fingerprint is MD5:c8:13:aa:73:1e:8b:1b:8e:e2:89:88:1e:b9:e7:7d:83.
Are you sure you want to continue connecting (yes/no)? yes
centos02: Warning: Permanently added 'centos02,192.168.122.1' (ECDSA) to the list of known hosts.
centos02: starting namenode, logging to /opt/bigdata/hadoop/hadoop-2.8.5/logs/hadoop-root-namenode-centos02.out
localhost: starting datanode, logging to /opt/bigdata/hadoop/hadoop-2.8.5/logs/hadoop-root-datanode-centos02.out
Starting secondary namenodes [0.0.0.0]
The authenticity of host '0.0.0.0 (0.0.0.0)' can't be established.
ECDSA key fingerprint is SHA256:P1L8h3CQufhYRfgBafwGjB18GugzL+XCi62o2x8cM0I.
ECDSA key fingerprint is MD5:c8:13:aa:73:1e:8b:1b:8e:e2:89:88:1e:b9:e7:7d:83.
Are you sure you want to continue connecting (yes/no)? yes
0.0.0.0: Warning: Permanently added '0.0.0.0' (ECDSA) to the list of known hosts.
0.0.0.0: starting secondarynamenode, logging to /opt/bigdata/hadoop/hadoop-2.8.5/logs/hadoop-root-secondarynamenode-centos02.out
19/08/28 06:48:47 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
starting yarn daemons
starting resourcemanager, logging to /opt/bigdata/hadoop/hadoop-2.8.5/logs/yarn-root-resourcemanager-centos02.out
localhost: starting nodemanager, logging to /opt/bigdata/hadoop/hadoop-2.8.5/logs/yarn-root-nodemanager-centos02.out
[root@centos02 sbin]#

[root@centos02 sbin]# jps
5443 ResourceManager
5269 SecondaryNameNode
5062 DataNode
5752 NodeManager
6202 Jps
4907 NameNode
[root@centos02 sbin]#
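In this setup start-all.sh should leave five daemons running, matching the jps output above: NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager. A small check that reads jps output and names any that are missing; missing_daemons and EXPECTED_DAEMONS are hypothetical names:

```bash
#!/usr/bin/env bash
# Daemons expected for this pseudo-distributed HDFS + YARN setup (overridable).
EXPECTED_DAEMONS="${EXPECTED_DAEMONS:-NameNode DataNode SecondaryNameNode ResourceManager NodeManager}"

# Hypothetical helper: reads `jps` output ("<pid> <MainClass>" per line) on stdin,
# prints each expected daemon that is absent, returns 0 only if none are missing.
missing_daemons() {
  local running d rc=0
  running=$(awk '{ print $2 }')          # keep only the class-name column
  for d in $EXPECTED_DAEMONS; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$running" | grep -qxF "$d"; then
      echo "$d"
      rc=1
    fi
  done
  return $rc
}
```

Usage: jps | missing_daemons && echo "all daemons are running". start-all.sh does not start the MapReduce JobHistory server configured in mapred-site.xml; it is started separately with mr-jobhistory-daemon.sh start historyserver (listed in sbin above), after which JobHistoryServer can be added to EXPECTED_DAEMONS. As the first line of the start-all.sh output says, running start-dfs.sh and then start-yarn.sh is the non-deprecated way to start the same daemons.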

浙公网安备 33010602011771号
