Netty 模型

Demo代码

-

使用Maven的话请在pom.xml中注入netty依赖

<!-- https://mvnrepository.com/artifact/io.netty/netty-all --> <dependency> <groupId>io.netty</groupId> <artifactId>netty-all</artifactId> <version>4.1.43.Final</version> </dependency> -

NettyClient

package com.ronnie.netty.sample; import io.netty.bootstrap.Bootstrap; import io.netty.channel.ChannelFuture; import io.netty.channel.ChannelInitializer; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioSocketChannel; public class NettyClient { public static void main(String[] args) throws InterruptedException { // 客户端需要一个事件循环组 NioEventLoopGroup group = new NioEventLoopGroup(); try { // 创建客户端启动对象 // 注意: 客户端使用的不是 ServerBootstrap 而是 Bootstrap Bootstrap bootstrap = new Bootstrap(); // 设置相关参数 bootstrap.group(group) //设置线程组 .channel(NioSocketChannel.class) // 设置客户端通道实现类(反射) .handler(new ChannelInitializer<SocketChannel>() { @Override protected void initChannel(SocketChannel ch) throws Exception { ch.pipeline().addLast(new NettyClientHandler()); // 加入自己的处理器 } }); System.out.println("Client side is ready......"); // 启动客户端去连接服务器端 // channelFuture涉及到netty的异步模型 ChannelFuture channelFuture = bootstrap.connect("127.0.0.1", 6668).sync(); // 给关闭通道进行监听 channelFuture.channel().closeFuture().sync(); } catch (InterruptedException e) { e.printStackTrace(); } finally { group.shutdownGracefully(); } } } -

NettyClientHandler

package com.ronnie.netty.sample; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.ChannelHandlerContext; import io.netty.channel.ChannelInboundHandlerAdapter; import io.netty.util.CharsetUtil; public class NettyClientHandler extends ChannelInboundHandlerAdapter { // 当同道就绪, 就会触发该方法 @Override public void channelActive(ChannelHandlerContext ctx) throws Exception { System.out.println("Client " + ctx); ctx.writeAndFlush(Unpooled.copiedBuffer("Hello, server: ROFL", CharsetUtil.UTF_8)); } /** * 当通道有读取事件时会触发 * @param ctx * @param msg * @throws Exception */ @Override public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception { ByteBuf buf = (ByteBuf) msg; System.out.println("The message server responded: " + buf.toString(CharsetUtil.UTF_8)); System.out.println("Server address: " + ctx.channel().remoteAddress()); } @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { cause.printStackTrace(); ctx.close(); } } -

NettyServer

package com.ronnie.netty.sample; import io.netty.bootstrap.ServerBootstrap; import io.netty.channel.ChannelFuture; import io.netty.channel.ChannelInitializer; import io.netty.channel.ChannelOption; import io.netty.channel.EventLoopGroup; import io.netty.channel.nio.NioEventLoopGroup; import io.netty.channel.socket.SocketChannel; import io.netty.channel.socket.nio.NioServerSocketChannel; public class NettyServer { public static void main(String[] args) throws InterruptedException { // 创建 BossGroup 与 WorkerGroup /** * 1. 创建两个线程组 bossGroup 和 workerGroup * 2. bossGroup 只是处理连接请求, 真正的和客户端业务处理, 会交给workGroup完成 * 3. 两个都是无限循环 * 4. bossGroup 和 workerGroup 含有的子线程(NioEventLoop)的个数 * 为默认实际 cpu核数 * 2 */ NioEventLoopGroup bossGroup = new NioEventLoopGroup(); EventLoopGroup workerGroup = new NioEventLoopGroup(); try { // 创建服务器端的启动对象, 配置参数 ServerBootstrap bootstrap = new ServerBootstrap(); // 使用链式编程来进行设置 bootstrap.group(bossGroup, workerGroup) // 设置两个线程组 .channel(NioServerSocketChannel.class) // 使用NioSocketChannel 作为服务器的通道实现 .option(ChannelOption.SO_BACKLOG, 128) // 设置线程队列等待连接的个数 .childOption(ChannelOption.SO_KEEPALIVE, true) // 设置保持活动连接状态 .childHandler(new ChannelInitializer<SocketChannel>() { // 创建一个通道初始化对象(匿名对象) /** * 给pipeline设置处理器 * @param ch * @throws Exception */ @Override protected void initChannel(SocketChannel ch) throws Exception { ch.pipeline().addLast(new NettyServerHandler()); } }); // 给workerGroup的 EventLoop 设置处理器 System.out.println("Server is ready......"); // 绑定一个端口并且同步, 生成了一个ChannelFuture对象 // 启动服务器(并绑定端口) ChannelFuture cf = bootstrap.bind(6668).sync(); // 对关闭通道进行监听 cf.channel().closeFuture().sync(); } catch (InterruptedException e) { e.printStackTrace(); } finally { // 优雅地关闭 bossGroup.shutdownGracefully(); workerGroup.shutdownGracefully(); } } } -

NettyServerHandler

package com.ronnie.netty.sample; import io.netty.buffer.ByteBuf; import io.netty.buffer.Unpooled; import io.netty.channel.Channel; import io.netty.channel.ChannelHandlerContext; import io.netty.channel.ChannelInboundHandlerAdapter; import io.netty.channel.ChannelPipeline; import io.netty.util.CharsetUtil; /** * 1. 自定义一个Handler需要继承 netty 规定好的某个 HandlerAdapter(适配器模式) * 2. 这时我们自定义一个Handler, 才能称为一个handler */ public class NettyServerHandler extends ChannelInboundHandlerAdapter { /** * 读取数据事件(这里我们可以读取客户端发送的消息) * ChannelHandlerContext ctx: 上下文对象, 含有管道 pipeline, 通道 channel, 地址 address * Object msg: 就是客户端发送的数据 默认Object * @param ctx * @param msg * @throws Exception */ @Override public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception { System.out.println("The server is reading thread: " + Thread.currentThread().getName()); System.out.println("server ctx = " + ctx); System.out.println("Check the relationship between channel and pipeline"); Channel channel = ctx.channel(); ChannelPipeline pipeline = ctx.pipeline(); // 本质是一个双向链表, 涉及到出栈入栈问题 // 将 msg转成一个 ByteBuf(是netty提供的, 不是NIO的 ByteBuffer, 性能更高) ByteBuf buf = (ByteBuf) msg; System.out.println("The message that client send: " + buf.toString(CharsetUtil.UTF_8)); System.out.println("The address of client: " + ctx.channel().remoteAddress()); } /** * 数据读取完毕 * @param ctx * @throws Exception */ @Override public void channelReadComplete(ChannelHandlerContext ctx) throws Exception { // write + flush, 将数据写入到缓冲并刷新 // 一般来说, 我们对发送的数据进行编码 ctx.writeAndFlush(Unpooled.copiedBuffer("Hello, dear client, Kappa", CharsetUtil.UTF_8)); } /** * 处理异常, 一般需要关闭通道 * @param ctx * @param cause * @throws Exception */ @Override public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception { ctx.close(); } }

需要细究的几个点

-

bossGroup 和 workerGroup 含有的子线程(NioEventLoop)的个数为默认实际 cpu核数 * 2。

-

如果传入的为空, 默认传入0

public NioEventLoopGroup() { this(0); }public NioEventLoopGroup(int nThreads) { this(nThreads, (Executor) null); }public NioEventLoopGroup(int nThreads, Executor executor) { this(nThreads, executor, SelectorProvider.provider()); }public NioEventLoopGroup( int nThreads, Executor executor, final SelectorProvider selectorProvider) { this(nThreads, executor, selectorProvider, DefaultSelectStrategyFactory.INSTANCE); }public NioEventLoopGroup(int nThreads, Executor executor, final SelectorProvider selectorProvider, final SelectStrategyFactory selectStrategyFactory) { super(nThreads, executor, selectorProvider, selectStrategyFactory, RejectedExecutionHandlers.reject()); }/** * @see MultithreadEventExecutorGroup#MultithreadEventExecutorGroup(int, Executor, Object...) */ protected MultithreadEventLoopGroup(int nThreads, Executor executor, Object... args) { // 如果传入的线程数为0, 就让线程数为默认事件循环线程数, 否则就使用传入的线程数 super(nThreads == 0 ? DEFAULT_EVENT_LOOP_THREADS : nThreads, executor, args); } -

一路追踪源码, 最终你会追踪到:

DEFAULT_EVENT_LOOP_THREADS = Math.max(1, SystemPropertyUtil.getInt( "io.netty.eventLoopThreads", NettyRuntime.availableProcessors() * 2));- DEFAULT_EVENT_LOOP_THREADS 最小为1, 通常是可获取的cpu核数 * 2

-

我们可以写个程序测试一下

package com.ronnie.netty.sample; import io.netty.util.NettyRuntime; public class Test { public static void main(String[] args) { System.out.println(NettyRuntime.availableProcessors()); } }- 打印结果为12, 我的cpu为 i7 8700, 6核12线程, 结论成立。

-

-

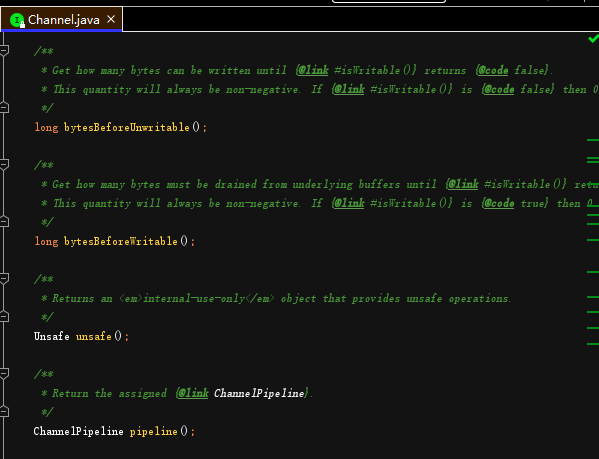

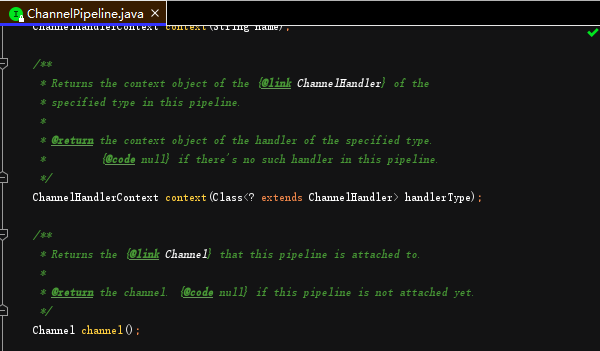

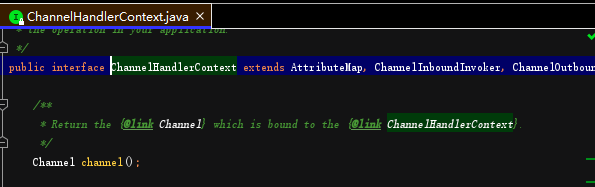

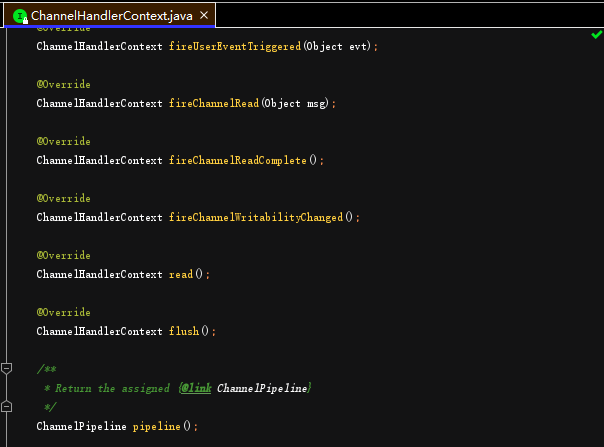

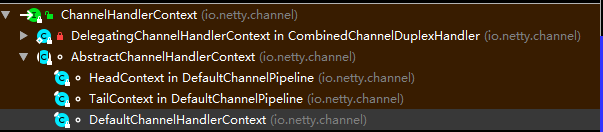

Pipeline中含有其对应的Channel的属性, Channel中也有其对应的Pipeline的属性, 两者是互相包容的, 而ChannelHandlerContext则包含了此两者, 是个很复杂的接口。