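Android 12(S) MultiMedia Learning (8): NuPlayer Renderer

NuPlayer's A/V sync is implemented by the Renderer, so this post focuses on how that A/V sync works.

Relevant code locations:

a. Constructor

The Renderer is instantiated during NuPlayer's start sequence. It is given an AudioSink and a MediaClock, and setPlaybackSettings is called afterwards to set the playback mode (rate).

AudioSink: plays the audio. It is created in MediaPlayerService::Client when setDataSource is called.

MediaClock: NuPlayer's A/V sync is based on the system time and on audio. The clock is created when NuPlayerDriver instantiates NuPlayer, and it relates media time to system time.

Once created, the Renderer is handed to both the audio and the video Decoder.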

    void NuPlayer::onStart(int64_t startPositionUs, MediaPlayerSeekMode mode) {
        // ......
        sp<AMessage> notify = new AMessage(kWhatRendererNotify, this);
        ++mRendererGeneration;
        notify->setInt32("generation", mRendererGeneration);
        mRenderer = new Renderer(mAudioSink, mMediaClock, notify, flags);
        mRendererLooper = new ALooper;
        mRendererLooper->setName("NuPlayerRenderer");
        mRendererLooper->start(false, false, ANDROID_PRIORITY_AUDIO);
        mRendererLooper->registerHandler(mRenderer);

        status_t err = mRenderer->setPlaybackSettings(mPlaybackSettings);
        if (err != OK) {
            mSource->stop();
            mSourceStarted = false;
            notifyListener(MEDIA_ERROR, MEDIA_ERROR_UNKNOWN, err);
            return;
        }

        float rate = getFrameRate();
        if (rate > 0) {
            // Pass the video frame rate (not the playback speed) to the Renderer
            mRenderer->setVideoFrameRate(rate);
        }

        if (mVideoDecoder != NULL) {
            mVideoDecoder->setRenderer(mRenderer);
        }
        if (mAudioDecoder != NULL) {
            mAudioDecoder->setRenderer(mRenderer);
        }
    }

    status_t NuPlayer::instantiateDecoder(
            bool audio, sp<DecoderBase> *decoder, bool checkAudioModeChange) {
        // ......
        *decoder = new Decoder(
                notify, mSource, mPID, mUID, mRenderer, mSurface, mCCDecoder);
        // ......
    }

b. Writing data into the Renderer

When NuPlayerDecoder receives decoded data from MediaCodec, it calls queueBuffer to put it on the Renderer's queue.

    bool NuPlayer::Decoder::handleAnOutputBuffer(
            size_t index, size_t offset, size_t size, int64_t timeUs, int32_t flags) {
        // ......
        sp<MediaCodecBuffer> buffer;
        mCodec->getOutputBuffer(index, &buffer);
        // ......
        int64_t frameIndex;
        bool frameIndexFound = buffer->meta()->findInt64("frameIndex", &frameIndex);

        buffer->setRange(offset, size);
        buffer->meta()->clear();
        buffer->meta()->setInt64("timeUs", timeUs);
        if (frameIndexFound) {
            buffer->meta()->setInt64("frameIndex", frameIndex);
        }
        // ......
        if (mRenderer != NULL) {
            // send the buffer to renderer.
            // queueBuffer hands the data over to the Renderer
            mRenderer->queueBuffer(mIsAudio, buffer, reply);
            if (eos && !isDiscontinuityPending()) {
                mRenderer->queueEOS(mIsAudio, ERROR_END_OF_STREAM);
            }
        }
    }

c. Renderer::queueBuffer

The Renderer handles audio and video differently. Audio comes first.

Audio

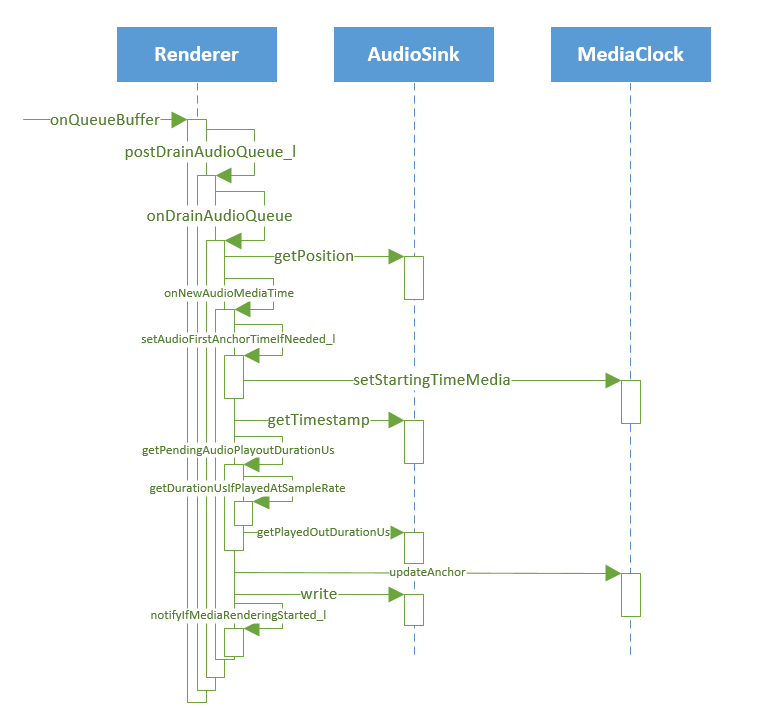

The sheer number of calls involved is daunting, so here is the code with some explanation:

    void NuPlayer::Renderer::onQueueBuffer(const sp<AMessage> &msg) {
        int32_t audio;
        CHECK(msg->findInt32("audio", &audio));
        // ......
        sp<RefBase> obj;
        CHECK(msg->findObject("buffer", &obj));
        sp<MediaCodecBuffer> buffer = static_cast<MediaCodecBuffer *>(obj.get());

        sp<AMessage> notifyConsumed;
        CHECK(msg->findMessage("notifyConsumed", &notifyConsumed));

        // Wrap the incoming buffer in a QueueEntry and append it to the queue
        QueueEntry entry;
        entry.mBuffer = buffer;
        entry.mNotifyConsumed = notifyConsumed;
        entry.mOffset = 0;
        entry.mFinalResult = OK;
        entry.mBufferOrdinal = ++mTotalBuffersQueued;

        if (audio) {
            Mutex::Autolock autoLock(mLock);
            mAudioQueue.push_back(entry);
            postDrainAudioQueue_l();
        } else {
            mVideoQueue.push_back(entry);
            postDrainVideoQueue();
        }
        // ......
    }

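Start with the first half of onQueueBuffer: it checks whether the incoming buffer is audio or video, wraps it in a QueueEntry and appends it to the matching queue, then calls postDrainAudioQueue_l for audio or postDrainVideoQueue for video.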

    case kWhatDrainAudioQueue:
    {
        mDrainAudioQueuePending = false;

        int32_t generation;
        CHECK(msg->findInt32("drainGeneration", &generation));
        if (generation != getDrainGeneration(true /* audio */)) {
            break;
        }

        // If onDrainAudioQueue returns true, another drain is scheduled
        if (onDrainAudioQueue()) {
            // ......
            postDrainAudioQueue_l(delayUs);
        }
        break;
    }

postDrainAudioQueue_l posts a message that eventually ends up in onDrainAudioQueue.

    bool NuPlayer::Renderer::onDrainAudioQueue() {
        // ......
        // Query how many frames the AudioSink has played so far
        uint32_t numFramesPlayed;
        if (mAudioSink->getPosition(&numFramesPlayed) != OK) {
            drainAudioQueueUntilLastEOS();
            ALOGW("onDrainAudioQueue(): audio sink is not ready");
            return false;
        }

        // Remember how many frames had been written before this pass
        uint32_t prevFramesWritten = mNumFramesWritten;
        // Process every QueueEntry waiting in the audio queue
        while (!mAudioQueue.empty()) {
            QueueEntry *entry = &*mAudioQueue.begin();
            // ......
            mLastAudioBufferDrained = entry->mBufferOrdinal;

            if (entry->mOffset == 0 && entry->mBuffer->size() > 0) {
                int64_t mediaTimeUs;
                // Read the timestamp carried by the buffer
                CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));
                ALOGV("onDrainAudioQueue: rendering audio at media time %.2f secs",
                        mediaTimeUs / 1E6);
                // Update the sync information through onNewAudioMediaTime
                onNewAudioMediaTime(mediaTimeUs);
            }

            // Number of bytes in this buffer that are still unwritten
            size_t copy = entry->mBuffer->size() - entry->mOffset;
            // Write the buffer to the AudioSink
            ssize_t written = mAudioSink->write(entry->mBuffer->data() + entry->mOffset,
                                                copy, false /* blocking */);
            // ......
            entry->mOffset += written;
            size_t remainder = entry->mBuffer->size() - entry->mOffset;
            if ((ssize_t)remainder < mAudioSink->frameSize()) {
                if (remainder > 0) {
                    ALOGW("Corrupted audio buffer has fractional frames, discarding %zu bytes.",
                            remainder);
                    entry->mOffset += remainder;
                    copy -= remainder;
                }
                // Tell MediaCodec that the buffer can be released
                entry->mNotifyConsumed->post();
                mAudioQueue.erase(mAudioQueue.begin());
                entry = NULL;
            }

            // Number of frames written in this pass
            size_t copiedFrames = written / mAudioSink->frameSize();
            mNumFramesWritten += copiedFrames;

            {
                Mutex::Autolock autoLock(mLock);
                int64_t maxTimeMedia;
                // Compute the new maximum media time and pass it to the MediaClock
                maxTimeMedia =
                    mAnchorTimeMediaUs +
                            (int64_t)(max((long long)mNumFramesWritten - mAnchorNumFramesWritten, 0LL)
                                    * 1000LL * mAudioSink->msecsPerFrame());
                mMediaClock->updateMaxTimeMedia(maxTimeMedia);
                notifyIfMediaRenderingStarted_l();
            }
            // ......
        }

        // calculate whether we need to reschedule another write.
        bool reschedule = !mAudioQueue.empty()
                && (!mPaused
                    || prevFramesWritten != mNumFramesWritten); // permit pause to fill buffers
        return reschedule;
    }

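onDrainAudioQueue is long; the key points are:

1. onNewAudioMediaTime: called when a new audio buffer is picked up, to update the anchor PTS and the related information used for sync.

2. mAudioSink->write: I have not studied how AudioSink works, but it seems to start rendering audio on its own once enough data has been written.

3. mMediaClock->updateMaxTimeMedia: updates the maximum media time.

The value is computed as: mAnchorTimeMediaUs + (mNumFramesWritten - mAnchorNumFramesWritten) * mAudioSink->msecsPerFrame() * 1000

= PTS of the data written this time + number of frames written * duration of one frame

mAnchorTimeMediaUs and mAnchorNumFramesWritten are both updated in onNewAudioMediaTime. mAnchorNumFramesWritten is the total number of frames written before the current buffer, whereas mNumFramesWritten is the total after it has been written.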
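The arithmetic above can be tried outside the framework. The following is only a sketch: `maxTimeMediaUs` is a hypothetical free function, and its `usPerFrame` parameter is an assumption standing in for `1000 * mAudioSink->msecsPerFrame()`.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical helper mirroring the maxTimeMedia computation in
// onDrainAudioQueue. usPerFrame is assumed to equal
// 1000 * mAudioSink->msecsPerFrame().
int64_t maxTimeMediaUs(int64_t anchorTimeMediaUs,
                       int64_t numFramesWritten,
                       int64_t anchorNumFramesWritten,
                       double usPerFrame) {
    // Frames written since the anchor was taken, clamped at zero.
    int64_t framesSinceAnchor =
            std::max<int64_t>(numFramesWritten - anchorNumFramesWritten, 0);
    // Anchor PTS plus the playout duration of those frames.
    return anchorTimeMediaUs +
            static_cast<int64_t>(framesSinceAnchor * usPerFrame);
}
```

With an anchor at 1 s, 500 frames written since the anchor and 20 us per frame (a 50 kHz stream), the maximum media time moves 10 ms past the anchor.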

Next, onNewAudioMediaTime:

    void NuPlayer::Renderer::onNewAudioMediaTime(int64_t mediaTimeUs) {
        Mutex::Autolock autoLock(mLock);
        if (mediaTimeUs == mAnchorTimeMediaUs) {
            return;
        }
        // Record the PTS of the first valid buffer
        setAudioFirstAnchorTimeIfNeeded_l(mediaTimeUs);

        // mNextAudioClockUpdateTimeUs is -1 if we're waiting for audio sink to start
        if (mNextAudioClockUpdateTimeUs == -1) {
            AudioTimestamp ts;
            // Ask the AudioSink for its render timestamp; once the position is
            // greater than 0, start updating mNextAudioClockUpdateTimeUs
            if (mAudioSink->getTimestamp(ts) == OK && ts.mPosition > 0) {
                mNextAudioClockUpdateTimeUs = 0; // start our clock updates
            }
        }

        int64_t nowUs = ALooper::GetNowUs();
        if (mNextAudioClockUpdateTimeUs >= 0) {
            if (nowUs >= mNextAudioClockUpdateTimeUs) {
                // Media time that is being played out right now
                int64_t nowMediaUs = mediaTimeUs - getPendingAudioPlayoutDurationUs(nowUs);
                // Update the anchor: (media time now, system time now, max media time)
                mMediaClock->updateAnchor(nowMediaUs, nowUs, mediaTimeUs);
                mUseVirtualAudioSink = false;
                mNextAudioClockUpdateTimeUs = nowUs + kMinimumAudioClockUpdatePeriodUs;
            }
        } else {
            int64_t unused;
            // Taken only when getMediaTime fails AND the data already written
            // exceeds kMaxAllowedAudioSinkDelayUs, i.e. the AudioSink looks stuck
            if ((mMediaClock->getMediaTime(nowUs, &unused) != OK)
                    && (getDurationUsIfPlayedAtSampleRate(mNumFramesWritten)
                            > kMaxAllowedAudioSinkDelayUs)) {
                // Enough data has been sent to AudioSink, but AudioSink has not rendered
                // any data yet. Something is wrong with AudioSink, e.g., the device is not
                // connected to audio out.
                // Switch to system clock. This essentially creates a virtual AudioSink with
                // initial latenty of getDurationUsIfPlayedAtSampleRate(mNumFramesWritten).
                // This virtual AudioSink renders audio data starting from the very first sample
                // and it's paced by system clock.
                ALOGW("AudioSink stuck. ARE YOU CONNECTED TO AUDIO OUT? Switching to system clock.");
                mMediaClock->updateAnchor(mAudioFirstAnchorTimeMediaUs, nowUs, mediaTimeUs);
                mUseVirtualAudioSink = true;
            }
        }
        // Frames that had been written before this buffer
        mAnchorNumFramesWritten = mNumFramesWritten;
        // PTS at which this buffer starts
        mAnchorTimeMediaUs = mediaTimeUs;
    }

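1. mNextAudioClockUpdateTimeUs records when the anchor should next be updated. The anchor is refreshed every kMinimumAudioClockUpdatePeriodUs so that sync error cannot snowball, and the first update is triggered once the AudioSink has started working.

2. Before the anchor is updated, the code computes nowMediaUs = mediaTimeUs - getPendingAudioPlayoutDurationUs(nowUs). What does nowMediaUs mean?

Look at getPendingAudioPlayoutDurationUs first: it returns how much already-written audio is still waiting to be played.

So nowMediaUs = the PTS just passed in (which is also the media time reached once everything already written has played out) - the duration still pending = the media time being played right now.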

    // Calculate duration of pending samples if played at normal rate (i.e., 1.0).
    int64_t NuPlayer::Renderer::getPendingAudioPlayoutDurationUs(int64_t nowUs) {
        // Total playout duration of all frames written so far
        int64_t writtenAudioDurationUs = getDurationUsIfPlayedAtSampleRate(mNumFramesWritten);
        if (mUseVirtualAudioSink) {
            int64_t nowUs = ALooper::GetNowUs();
            int64_t mediaUs;
            if (mMediaClock->getMediaTime(nowUs, &mediaUs) != OK) {
                return 0LL;
            } else {
                return writtenAudioDurationUs - (mediaUs - mAudioFirstAnchorTimeMediaUs);
            }
        }

        // Duration the AudioSink has already played out
        const int64_t audioSinkPlayedUs = mAudioSink->getPlayedOutDurationUs(nowUs);
        // "Pending" is what has been written but not yet played
        int64_t pendingUs = writtenAudioDurationUs - audioSinkPlayedUs;
        if (pendingUs < 0) {
            // This shouldn't happen unless the timestamp is stale.
            ALOGW("%s: pendingUs %lld < 0, clamping to zero, potential resume after pause "
                    "writtenAudioDurationUs: %lld, audioSinkPlayedUs: %lld",
                    __func__, (long long)pendingUs,
                    (long long)writtenAudioDurationUs, (long long)audioSinkPlayedUs);
            pendingUs = 0;
        }
        return pendingUs;
    }

    int64_t NuPlayer::Renderer::getDurationUsIfPlayedAtSampleRate(uint32_t numFrames) {
        int32_t sampleRate = offloadingAudio() ?
                mCurrentOffloadInfo.sample_rate : mCurrentPcmInfo.mSampleRate;
        if (sampleRate == 0) {
            ALOGE("sampleRate is 0 in %s mode", offloadingAudio() ? "offload" : "non-offload");
            return 0;
        }
        // Playout duration = number of frames / sample rate (seconds),
        // scaled by 1000000 so that the result is in microseconds
        return (int64_t)(numFrames * 1000000LL / sampleRate);
    }
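To make the relationship between written, played and pending audio concrete, here is a small standalone sketch. The function names are hypothetical, and `playedOutUs` is an assumption standing in for the value returned by `mAudioSink->getPlayedOutDurationUs(nowUs)`; the virtual AudioSink branch is left out.

```cpp
#include <cstdint>

// Duration of numFrames at sampleRate, in microseconds.
int64_t durationUs(uint32_t numFrames, int32_t sampleRate) {
    if (sampleRate <= 0) {
        return 0;
    }
    return static_cast<int64_t>(numFrames) * 1000000LL / sampleRate;
}

// Audio that has been written but not yet played, clamped at zero.
// playedOutUs is assumed to come from the audio sink.
int64_t pendingPlayoutUs(uint32_t framesWritten, int32_t sampleRate,
                         int64_t playedOutUs) {
    int64_t pendingUs = durationUs(framesWritten, sampleRate) - playedOutUs;
    return pendingUs < 0 ? 0 : pendingUs;
}

// Media time being heard right now: PTS of the next buffer minus what is
// still queued in front of it.
int64_t nowMediaUs(int64_t mediaTimeUs, int64_t pendingUs) {
    return mediaTimeUs - pendingUs;
}
```

For example, if 48000 frames have been written at 48 kHz (1 s) and the sink has played 0.7 s, then 0.3 s is pending; a new buffer with a PTS of 5 s therefore means the listener is currently at 4.7 s.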

3. The anchor is updated with mMediaClock->updateAnchor(nowMediaUs, nowUs, mediaTimeUs). So what exactly is an anchor?

    void MediaClock::updateAnchor(
            int64_t anchorTimeMediaUs,
            int64_t anchorTimeRealUs,
            int64_t maxTimeMediaUs) {
        if (anchorTimeMediaUs < 0 || anchorTimeRealUs < 0) {
            ALOGW("reject anchor time since it is negative.");
            return;
        }

        Mutex::Autolock autoLock(mLock);
        int64_t nowUs = ALooper::GetNowUs();
        // Re-derive the current media time to compensate for the time that has
        // elapsed since the caller sampled anchorTimeRealUs
        int64_t nowMediaUs =
            anchorTimeMediaUs + (nowUs - anchorTimeRealUs) * (double)mPlaybackRate;
        if (nowMediaUs < 0) {
            ALOGW("reject anchor time since it leads to negative media time.");
            return;
        }

        if (maxTimeMediaUs != -1) {
            mMaxTimeMediaUs = maxTimeMediaUs;
        }
        if (mAnchorTimeRealUs != -1) {
            // Compute the current media time from the existing anchor; if it is
            // within the allowed fluctuation of the value above, keep the old anchor
            int64_t oldNowMediaUs =
                mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs) * (double)mPlaybackRate;
            if (nowMediaUs < oldNowMediaUs + kAnchorFluctuationAllowedUs
                    && nowMediaUs > oldNowMediaUs - kAnchorFluctuationAllowedUs) {
                return;
            }
        }
        // Update the anchor
        updateAnchorTimesAndPlaybackRate_l(nowMediaUs, nowUs, mPlaybackRate);

        ++mGeneration;
        processTimers_l();
    }

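Inside updateAnchor the arguments go by different names:

anchorTimeMediaUs -> the current media playback time

anchorTimeRealUs -> the real system time that corresponds to that media playback time

maxTimeMediaUs -> the PTS of the buffer being written

The method does three things:

(1) It first adjusts the current media time: nowMediaUs = anchorTimeMediaUs + (nowUs - anchorTimeRealUs) * (double)mPlaybackRate

current media time = media time passed in + (current system time - system time passed in, i.e. the time spent executing in between) * playback rate

In other words, playback rate * elapsed real time gives the amount of media time that has actually gone by.

(2) It applies the same formula again to compute the current media time, except that mAnchorTimeMediaUs is the media time stored in the existing anchor and the interval runs from the anchor's system time to now:

oldNowMediaUs = mAnchorTimeMediaUs + (nowUs - mAnchorTimeRealUs) * (double)mPlaybackRate

current media time = anchor media time + (current system time - anchor system time) * playback rate

This shows what an anchor really is: a pair made of a media playback time and the real system time that corresponds to it. Given an anchor, the media time for any system time can be computed.

(3) It calls updateAnchorTimesAndPlaybackRate_l to update the anchor, which only happens when the two results differ by more than the allowed fluctuation.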

    void MediaClock::updateAnchorTimesAndPlaybackRate_l(int64_t anchorTimeMediaUs,
            int64_t anchorTimeRealUs, float playbackRate) {
        if (mAnchorTimeMediaUs != anchorTimeMediaUs
                || mAnchorTimeRealUs != anchorTimeRealUs
                || mPlaybackRate != playbackRate) {
            mAnchorTimeMediaUs = anchorTimeMediaUs;
            mAnchorTimeRealUs = anchorTimeRealUs;
            mPlaybackRate = playbackRate;
            notifyDiscontinuity_l();
        }
    }
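The anchor idea can be reduced to a few lines. This is a simplified sketch rather than the MediaClock implementation: `Anchor`, `mediaTimeAt` and `withinFluctuation` are hypothetical names, and the tolerance is passed in as a parameter because the value of kAnchorFluctuationAllowedUs is not shown in the code quoted here.

```cpp
#include <cstdint>

// An anchor pairs a media time with the system time at which it was
// (or will be) presented, plus the playback rate.
struct Anchor {
    int64_t mediaUs;
    int64_t realUs;
    double rate;
};

// Media time that corresponds to an arbitrary system time.
int64_t mediaTimeAt(const Anchor &a, int64_t realUs) {
    return a.mediaUs + static_cast<int64_t>((realUs - a.realUs) * a.rate);
}

// True when a freshly measured media time agrees with the prediction of the
// old anchor closely enough that the anchor can be left alone.
bool withinFluctuation(int64_t newNowMediaUs, int64_t oldNowMediaUs,
                       int64_t allowedUs) {
    return newNowMediaUs < oldNowMediaUs + allowedUs
            && newNowMediaUs > oldNowMediaUs - allowedUs;
}
```

With an anchor of (media 2 s, real 10 s) at rate 1.0, system time 10.5 s maps to media time 2.5 s; at rate 2.0 the same instant maps to 3.0 s. A new measurement that is 5 ms away from the prediction stays inside a 10 ms tolerance, whereas one that is 20 ms away would replace the anchor.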

That wraps up the audio side. Next, how video is handled.

Video

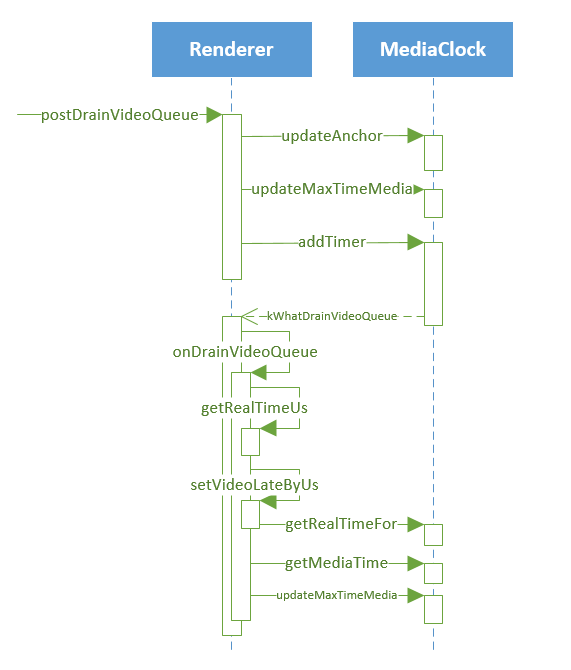

Video data enters through postDrainVideoQueue.

    void NuPlayer::Renderer::postDrainVideoQueue() {
        if (mDrainVideoQueuePending
                || getSyncQueues()
                || (mPaused && mVideoSampleReceived)) {
            return;
        }

        if (mVideoQueue.empty()) {
            return;
        }

        QueueEntry &entry = *mVideoQueue.begin();

        sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, this);
        msg->setInt32("drainGeneration", getDrainGeneration(false /* audio */));

        if (entry.mBuffer == NULL) {
            // EOS doesn't carry a timestamp.
            msg->post();
            mDrainVideoQueuePending = true;
            return;
        }

        int64_t nowUs = ALooper::GetNowUs();
        // Taken when the buffer timestamps are already real (system) times,
        // i.e. FLAG_REAL_TIME is set. I first guessed this was tied to tunnel mode,
        // but the flag appears to be set for sources that report real-time data.
        if (mFlags & FLAG_REAL_TIME) {
            int64_t realTimeUs;
            CHECK(entry.mBuffer->meta()->findInt64("timeUs", &realTimeUs));

            realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

            int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

            int64_t delayUs = realTimeUs - nowUs;

            ALOGW_IF(delayUs > 500000, "unusually high delayUs: %lld", (long long)delayUs);
            // post 2 display refreshes before rendering is due
            msg->post(delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0);

            mDrainVideoQueuePending = true;
            return;
        }

        // Read the PTS
        int64_t mediaTimeUs;
        CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

        {
            Mutex::Autolock autoLock(mLock);
            if (mAnchorTimeMediaUs < 0) {
                // If video data arrives first, the video PTS is used to set the anchor
                mMediaClock->updateAnchor(mediaTimeUs, nowUs, mediaTimeUs);
                mAnchorTimeMediaUs = mediaTimeUs;
            }
        }
        mNextVideoTimeMediaUs = mediaTimeUs;
        if (!mHasAudio) {
            // smooth out videos >= 10fps
            // Without audio, video drives the maximum media time
            mMediaClock->updateMaxTimeMedia(mediaTimeUs + kDefaultVideoFrameIntervalUs);
        }

        if (!mVideoSampleReceived || mediaTimeUs < mAudioFirstAnchorTimeMediaUs) {
            // The first video frame, or a frame earlier than the first audio
            // anchor, is posted for rendering immediately
            msg->post();
        } else {
            // Vsync is the display's vertical sync (refresh) period
            int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

            // Schedule the message two vsync periods before the frame is due
            // post 2 display refreshes before rendering is due
            mMediaClock->addTimer(msg, mediaTimeUs, -twoVsyncsUs);
        }

        mDrainVideoQueuePending = true;
    }

mMediaClock->addTimer acts as the timer: two vsync periods before the frame is due, it delivers kWhatDrainVideoQueue to the Renderer, which then continues in onDrainVideoQueue.
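The "two vsyncs early" rule is easiest to see in the FLAG_REAL_TIME branch of postDrainVideoQueue, where the delay is computed explicitly; the addTimer branch expresses the same lead as a negative adjustment. The sketch below mirrors that explicit branch only. `postDelayUs` is a hypothetical function, and `vsyncPeriodNs` is an assumption standing in for `mVideoScheduler->getVsyncPeriod()`.

```cpp
#include <cstdint>

// How long to wait before posting the drain message so that it arrives two
// display refreshes ahead of the frame's due time. Returns 0 when the frame
// is already closer than that (or overdue).
int64_t postDelayUs(int64_t dueRealUs, int64_t nowUs, int64_t vsyncPeriodNs) {
    int64_t twoVsyncsUs = 2 * (vsyncPeriodNs / 1000);
    int64_t delayUs = dueRealUs - nowUs;
    return delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0;
}
```

On a 60 Hz display (vsync period 16666667 ns) a frame due in 100 ms is posted after 66668 us, while a frame due in 20 ms is posted right away.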

    void NuPlayer::Renderer::onDrainVideoQueue() {
        if (mVideoQueue.empty()) {
            return;
        }

        QueueEntry *entry = &*mVideoQueue.begin();

        if (entry->mBuffer == NULL) {
            // EOS
            notifyEOS(false /* audio */, entry->mFinalResult);

            mVideoQueue.erase(mVideoQueue.begin());
            entry = NULL;

            setVideoLateByUs(0);
            return;
        }

        int64_t nowUs = ALooper::GetNowUs();
        int64_t realTimeUs;
        int64_t mediaTimeUs = -1;
        if (mFlags & FLAG_REAL_TIME) {
            CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));
        } else {
            CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));
            // System time at which this frame should be shown
            realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);
        }
        // Let the scheduler adjust the time once more
        realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;

        bool tooLate = false;

        if (!mPaused) {
            setVideoLateByUs(nowUs - realTimeUs);
            tooLate = (mVideoLateByUs > 40000);

            if (tooLate) {
                ALOGV("video late by %lld us (%.2f secs)",
                     (long long)mVideoLateByUs, mVideoLateByUs / 1E6);
            } else {
                int64_t mediaUs = 0;
                mMediaClock->getMediaTime(realTimeUs, &mediaUs);
                ALOGV("rendering video at media time %.2f secs",
                        (mFlags & FLAG_REAL_TIME ? realTimeUs :
                        mediaUs) / 1E6);

                if (!(mFlags & FLAG_REAL_TIME)
                        && mLastAudioMediaTimeUs != -1
                        && mediaTimeUs > mLastAudioMediaTimeUs) {
                    // If audio ends before video, video continues to drive media clock.
                    // Also smooth out videos >= 10fps.
                    mMediaClock->updateMaxTimeMedia(mediaTimeUs + kDefaultVideoFrameIntervalUs);
                }
            }
        } else {
            setVideoLateByUs(0);
            if (!mVideoSampleReceived && !mHasAudio) {
                // This will ensure that the first frame after a flush won't be used as anchor
                // when renderer is in paused state, because resume can happen any time after seek.
                clearAnchorTime();
            }
        }

        // Always render the first video frame while keeping stats on A/V sync.
        if (!mVideoSampleReceived) {
            realTimeUs = nowUs;
            tooLate = false;
        }

        // Notify NuPlayerDecoder so that the frame gets rendered (or dropped)
        entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000LL);
        entry->mNotifyConsumed->setInt32("render", !tooLate);
        entry->mNotifyConsumed->post();
        mVideoQueue.erase(mVideoQueue.begin());
        entry = NULL;

        mVideoSampleReceived = true;

        if (!mPaused) {
            if (!mVideoRenderingStarted) {
                mVideoRenderingStarted = true;
                notifyVideoRenderingStart();
            }
            Mutex::Autolock autoLock(mLock);
            notifyIfMediaRenderingStarted_l();
        }
    }

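This method first calls getRealTimeUs to obtain the system time at which the frame should be shown, decides whether the frame is too late (more than 40 ms behind), and then posts a message to NuPlayerDecoder so that MediaCodec renders (or just releases) the buffer.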
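The render-or-drop decision made in onDrainVideoQueue can be isolated as follows. This is a sketch of the non-paused path only; `RenderDecision` and `decideRender` are hypothetical names, and the 40000 us default mirrors the literal in the code above.

```cpp
#include <cstdint>

struct RenderDecision {
    bool render;       // value that would go into the "render" field
    int64_t lateByUs;  // how far behind schedule the frame is
};

// Decide whether a frame due at realTimeUs should still be rendered at nowUs.
// The first frame after start or flush is always rendered.
RenderDecision decideRender(int64_t nowUs, int64_t realTimeUs, bool firstFrame,
                            int64_t lateThresholdUs = 40000) {
    int64_t lateByUs = nowUs - realTimeUs;
    bool tooLate = lateByUs > lateThresholdUs;
    if (firstFrame) {
        tooLate = false;
    }
    return {!tooLate, lateByUs};
}
```

A frame that is 50 ms late is dropped, one that is 30 ms late is rendered, and the very first frame is rendered even if it is 50 ms late.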

    int64_t NuPlayer::Renderer::getRealTimeUs(int64_t mediaTimeUs, int64_t nowUs) {
        int64_t realUs;
        if (mMediaClock->getRealTimeFor(mediaTimeUs, &realUs) != OK) {
            // If failed to get current position, e.g. due to audio clock is
            // not ready, then just play out video immediately without delay.
            return nowUs;
        }
        return realUs;
    }

    status_t MediaClock::getRealTimeFor(
            int64_t targetMediaUs, int64_t *outRealUs) const {
        if (outRealUs == NULL) {
            return BAD_VALUE;
        }

        Mutex::Autolock autoLock(mLock);
        if (mPlaybackRate == 0.0) {
            return NO_INIT;
        }

        int64_t nowUs = ALooper::GetNowUs();
        int64_t nowMediaUs;
        status_t status =
                getMediaTime_l(nowUs, &nowMediaUs, true /* allowPastMaxTime */);
        if (status != OK) {
            return status;
        }
        *outRealUs = (targetMediaUs - nowMediaUs) / (double)mPlaybackRate + nowUs;
        return OK;
    }

    status_t MediaClock::getMediaTime_l(
            int64_t realUs, int64_t *outMediaUs, bool allowPastMaxTime) const {
        if (mAnchorTimeRealUs == -1) {
            return NO_INIT;
        }

        int64_t mediaUs = mAnchorTimeMediaUs
                + (realUs - mAnchorTimeRealUs) * (double)mPlaybackRate;
        if (mediaUs > mMaxTimeMediaUs && !allowPastMaxTime) {
            mediaUs = mMaxTimeMediaUs;
        }
        if (mediaUs < mStartingTimeMediaUs) {
            mediaUs = mStartingTimeMediaUs;
        }
        if (mediaUs < 0) {
            mediaUs = 0;
        }
        *outMediaUs = mediaUs;
        return OK;
    }

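getRealTimeUs involves two calculations:

getMediaTime_l returns the current media time: anchor media time + (current system time - anchor system time) * playback rate = current media position

getRealTimeFor returns the system time at which the target PTS should be shown: (frame PTS - current media position) / playback rate + current system time = system time at which to render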
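A standalone sketch of the PTS to system time mapping follows. `realTimeFor` is a hypothetical function that folds the getRealTimeUs fallback (render immediately when the clock cannot answer) into a single call; the clamping done by getMediaTime_l is left out.

```cpp
#include <cstdint>

// System time at which a frame with PTS targetMediaUs should be shown, given
// that the media position is nowMediaUs at system time nowRealUs.
// Falls back to nowRealUs when the playback rate is 0, mirroring how
// getRealTimeUs renders immediately when getRealTimeFor fails.
int64_t realTimeFor(int64_t targetMediaUs, int64_t nowMediaUs,
                    int64_t nowRealUs, double rate) {
    if (rate == 0.0) {
        return nowRealUs;
    }
    // Media time still to go, converted to real time by dividing by the rate.
    return static_cast<int64_t>((targetMediaUs - nowMediaUs) / rate) + nowRealUs;
}
```

If the media position is 2.5 s at system time 10.5 s, a frame with a PTS of 3.0 s is due at 11.0 s at normal speed and at 10.75 s at 2x speed.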

    void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {
        status_t err;
        int32_t render;
        size_t bufferIx;
        int32_t eos;
        size_t size;
        CHECK(msg->findSize("buffer-ix", &bufferIx));

        if (!mIsAudio) {
            int64_t timeUs;
            sp<MediaCodecBuffer> buffer = mOutputBuffers[bufferIx];
            buffer->meta()->findInt64("timeUs", &timeUs);

            if (mCCDecoder != NULL && mCCDecoder->isSelected()) {
                mCCDecoder->display(timeUs);
            }
        }

        if (mCodec == NULL) {
            err = NO_INIT;
        } else if (msg->findInt32("render", &render) && render) {
            int64_t timestampNs;
            CHECK(msg->findInt64("timestampNs", &timestampNs));
            err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);
        } else {
            if (!msg->findInt32("eos", &eos) || !eos ||
                    !msg->findSize("size", &size) || size) {
                mNumOutputFramesDropped += !mIsAudio;
            }
            err = mCodec->releaseOutputBuffer(bufferIx);
        }
        if (err != OK) {
            ALOGE("failed to release output buffer for [%s] (err=%d)",
                    mComponentName.c_str(), err);
            handleError(err);
        }
        if (msg->findInt32("eos", &eos) && eos
                && isDiscontinuityPending()) {
            finishHandleDiscontinuity(true /* flushOnTimeChange */);
        }
    }

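Calling renderOutputBufferAndRelease performs the rendering; timestampNs should be the system time at which the frame is due.

That concludes this rough walkthrough of NuPlayer's A/V sync. Corrections and discussion are welcome.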