Android 12 (S) MultiMedia Learning (7): NuPlayer GenericSource

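This section looks at GenericSource, one of NuPlayer's Source implementations. GenericSource is mainly used to play local video files. We will focus on the following five methods: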

prepare,start,pause,seek,dequeueAccessUnit

Related source code:

http://aospxref.com/android-12.0.0_r3/xref/frameworks/av/media/libdatasource/DataSourceFactory.cpp

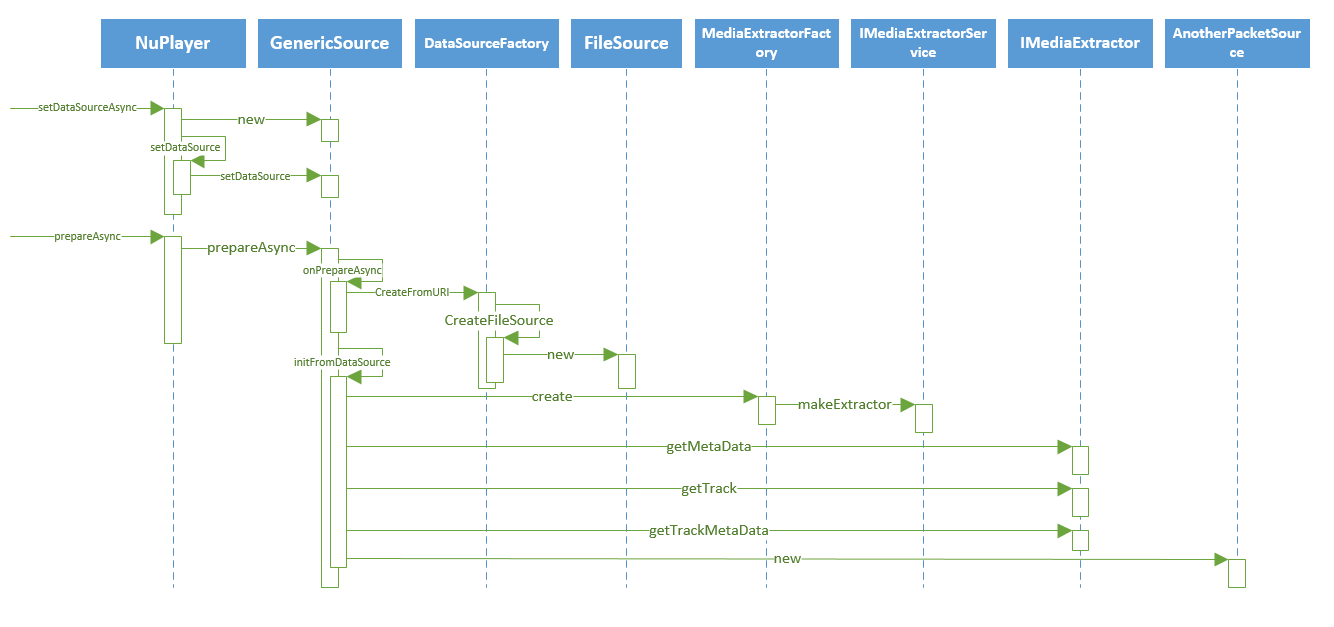

a. prepare

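prepare does the following (the code here is simple enough to follow on your own, so most of it is not pasted; only the key function, initFromDataSource, is shown after the list):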

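1. Create a DataSource from the URI passed in during setDataSource. Because GenericSource is generally used for local files, this is a FileSource, a DataSource that implements the basic file-reading interface.

2. Read the file through that DataSource and use the media.extractor service to pick and create a suitable MediaExtractor (how the media.extractor service works may be covered in a later post).

3. Use the MediaExtractor to get the file metadata and the metadata of each track (used later to create and configure the decoders). getTrack is called to obtain an IMediaSource from the MediaExtractor; the audio and video tracks each have their own IMediaSource, which implements the demuxing.

4. Create one AnotherPacketSource for audio and one for video as data containers, and wrap each together with its IMediaSource into a Track object. All later calls operate on these audio and video Tracks.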
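Steps 1 and 2 rest on one idea: a DataSource only offers random-access reads, and choosing an extractor starts by reading a few header bytes through it. The sketch below is a hypothetical toy, not AOSP code: `ToyDataSource`, `readAt` and `toySniff` are illustrative names, and the sniffer only recognizes an MP4 (ISO BMFF) file by the `ftyp` box type at bytes 4 to 7, whereas the real selection considers many container formats.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <utility>
#include <vector>

// Hypothetical stand-in for a DataSource: random-access reads over bytes.
// A FileSource plays the same role over a real file.
class ToyDataSource {
public:
    explicit ToyDataSource(std::vector<uint8_t> bytes) : mBytes(std::move(bytes)) {}

    // Copies up to `size` bytes starting at `offset`; returns the number of
    // bytes copied, 0 at or past the end of the data.
    size_t readAt(size_t offset, void *data, size_t size) const {
        if (offset >= mBytes.size()) return 0;
        size_t n = std::min(size, mBytes.size() - offset);
        std::memcpy(data, mBytes.data() + offset, n);
        return n;
    }

private:
    std::vector<uint8_t> mBytes;
};

// Hypothetical toy sniffer: an ISO BMFF file usually starts with a box whose
// type (bytes 4..7) is "ftyp". Returns a MIME string, or "" if unrecognized.
std::string toySniff(const ToyDataSource &src) {
    char header[8];
    if (src.readAt(0, header, sizeof(header)) < sizeof(header)) return "";
    if (std::memcmp(header + 4, "ftyp", 4) == 0) return "video/mp4";
    return "";
}
```

The sniffer never touches the file directly; everything goes through `readAt`, which is what lets the same extractor logic run over a file, a descriptor, or a network cache.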

```cpp
status_t NuPlayer::GenericSource::initFromDataSource() {
    sp<IMediaExtractor> extractor;
    // ......
    // Create the MediaExtractor
    extractor = MediaExtractorFactory::Create(dataSource, NULL);
    // Get the file-level metadata
    sp<MetaData> fileMeta = extractor->getMetaData();
    // Get the number of tracks
    size_t numtracks = extractor->countTracks();
    // ...... (mFileMeta is assigned from fileMeta in the elided code)
    // Get the file duration
    if (mFileMeta != NULL) {
        int64_t duration;
        if (mFileMeta->findInt64(kKeyDuration, &duration)) {
            mDurationUs = duration;
        }
    }

    for (size_t i = 0; i < numtracks; ++i) {
        // Get the MediaSource for this track
        sp<IMediaSource> track = extractor->getTrack(i);
        if (track == NULL) {
            continue;
        }

        sp<MetaData> meta = extractor->getTrackMetaData(i);
        if (meta == NULL) {
            ALOGE("no metadata for track %zu", i);
            return UNKNOWN_ERROR;
        }

        const char *mime;
        CHECK(meta->findCString(kKeyMIMEType, &mime));

        // Build the Track
        if (!strncasecmp(mime, "audio/", 6)) {
            if (mAudioTrack.mSource == NULL) {
                mAudioTrack.mIndex = i;
                mAudioTrack.mSource = track;
                // Create the AnotherPacketSource data container for this track
                mAudioTrack.mPackets =
                        new AnotherPacketSource(mAudioTrack.mSource->getFormat());

                if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_VORBIS)) {
                    mAudioIsVorbis = true;
                } else {
                    mAudioIsVorbis = false;
                }

                mMimes.add(String8(mime));
            }
        } else if (!strncasecmp(mime, "video/", 6)) {
            if (mVideoTrack.mSource == NULL) {
                mVideoTrack.mIndex = i;
                mVideoTrack.mSource = track;
                mVideoTrack.mPackets =
                        new AnotherPacketSource(mVideoTrack.mSource->getFormat());

                // video always at the beginning
                mMimes.insertAt(String8(mime), 0);
            }
        }

        mSources.push(track);
        // ...... (the loop continues with the next track; it does not return here)
    }

    // No usable track was found
    if (mSources.size() == 0) {
        return UNKNOWN_ERROR;
    }

    // Get DRM information for encrypted content
    (void)checkDrmInfo();

    // totalBitrate is accumulated in the elided code; the bitrate is ignored for now
    mBitrate = totalBitrate;

    return OK;
}
```
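The MIME-based classification in the loop above can be reduced to a small standalone function. This is a hypothetical simplification (`TrackSelection` and `selectTracks` are made-up names): it keeps the first audio and first video track, skips later ones, and, like the `mMimes.insertAt(..., 0)` call, places the video MIME at the front. It does not model `mSources`, which in the original receives every non-null track.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <strings.h>  // strncasecmp (POSIX)
#include <vector>

// Hypothetical result of track selection: indices of the chosen tracks
// (-1 when absent) and the MIME list with video first.
struct TrackSelection {
    int audioIndex = -1;
    int videoIndex = -1;
    std::vector<std::string> mimes;
};

// Hypothetical mirror of the classification in initFromDataSource.
TrackSelection selectTracks(const std::vector<std::string> &trackMimes) {
    TrackSelection sel;
    for (size_t i = 0; i < trackMimes.size(); ++i) {
        const char *mime = trackMimes[i].c_str();
        if (!strncasecmp(mime, "audio/", 6)) {
            if (sel.audioIndex < 0) {  // only the first audio track is used
                sel.audioIndex = static_cast<int>(i);
                sel.mimes.push_back(trackMimes[i]);
            }
        } else if (!strncasecmp(mime, "video/", 6)) {
            if (sel.videoIndex < 0) {  // only the first video track is used
                sel.videoIndex = static_cast<int>(i);
                // video always at the beginning
                sel.mimes.insert(sel.mimes.begin(), trackMimes[i]);
            }
        }
    }
    return sel;
}
```

With tracks `audio/mp4a-latm, video/avc, audio/opus`, the first audio and the video track are chosen and the MIME list comes out as `video/avc, audio/mp4a-latm`.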

b. start

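When NuPlayer starts, it calls the Source's start method synchronously, and this is where data reading begins.

start calls postReadBuffer once for each track that exists (audio and video), so up to two messages are posted; each message is handled on GenericSource's looper and ends up in readBuffer, which does the actual reading.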

```cpp
void NuPlayer::GenericSource::start() {
    // ......
    if (mAudioTrack.mSource != NULL) {
        postReadBuffer(MEDIA_TRACK_TYPE_AUDIO);
    }

    if (mVideoTrack.mSource != NULL) {
        postReadBuffer(MEDIA_TRACK_TYPE_VIDEO);
    }

    mStarted = true;
}
```
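The handoff from start to readBuffer goes through the message queue rather than a direct call. Below is a toy model with hypothetical names (`ToyGenericSource`, `runOnce`, `readLog`): posting only enqueues a message, and a later looper iteration runs the read. The per-track pending bit that drops duplicate posts is an assumption based on my reading of AOSP's postReadBuffer/onReadBuffer, not something shown in this article; check it against the source.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

enum TrackType { kAudio = 0, kVideo = 1 };  // hypothetical track ids

// Hypothetical model of start() -> postReadBuffer() -> looper -> readBuffer().
class ToyGenericSource {
public:
    // Assumption: a pending bit per track coalesces duplicate read requests.
    void postReadBuffer(TrackType type) {
        unsigned bit = 1u << type;
        if ((mPendingReadBufferTypes & bit) == 0) {
            mPendingReadBufferTypes |= bit;
            mQueue.push_back(type);  // stands in for posting a read message
        }
    }

    void start(bool hasAudio, bool hasVideo) {
        if (hasAudio) postReadBuffer(kAudio);
        if (hasVideo) postReadBuffer(kVideo);
        mStarted = true;
    }

    // One looper iteration: handle the oldest read message, if any.
    bool runOnce() {
        if (mQueue.empty()) return false;
        TrackType type = mQueue.front();
        mQueue.pop_front();
        mPendingReadBufferTypes &= ~(1u << type);  // a new request may be posted again
        readLog.push_back(type);                   // stands in for readBuffer(type)
        return true;
    }

    size_t pendingMessages() const { return mQueue.size(); }
    bool started() const { return mStarted; }

    std::vector<TrackType> readLog;  // order in which reads actually ran

private:
    unsigned mPendingReadBufferTypes = 0;
    std::deque<TrackType> mQueue;
    bool mStarted = false;
};
```

Because start only posts messages, it returns immediately; the reads happen later on the looper thread, one track at a time.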

readBuffer looks long, but it is not very complicated:

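1. Get the Track that corresponds to trackType.

2. Check seekTimeUs to decide whether a seek is needed (seekTimeUs >= 0); if so, record it in the ReadOptions. actualTimeUs is an output that reports the timestamp of the first buffer actually read.

3. Call IMediaSource's read or readMultiple method to read data.

4. Queue the data that was read into the AnotherPacketSource.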
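The four steps can be sketched as a loop that reads up to `maxBuffers` buffers and stops early when the source would block or reaches end of stream. The types here (`ToySource`, `Packet`, `toyReadBuffer`) are hypothetical stand-ins for IMediaSource and AnotherPacketSource; clearing the queue and marking the first post-seek buffer approximates queueDiscontinuityIfNeeded with `discard` set to true.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <vector>

enum class ReadStatus { kOk, kWouldBlock, kEndOfStream };

// Hypothetical demuxer stand-in: hands out buffer timestamps.
struct ToySource {
    std::deque<int64_t> timestamps;  // buffers available right now
    bool eos = false;                // true: nothing more after `timestamps`

    ReadStatus read(int64_t *timeUs) {
        if (!timestamps.empty()) {
            *timeUs = timestamps.front();
            timestamps.pop_front();
            return ReadStatus::kOk;
        }
        return eos ? ReadStatus::kEndOfStream : ReadStatus::kWouldBlock;
    }
};

struct Packet {
    int64_t timeUs;
    bool discontinuity;  // true for the first buffer queued after a seek
};

// Hypothetical model of readBuffer's loop. Reads at most maxBuffers buffers
// (8 for video, 64 for audio in the article); returns true on end of stream.
bool toyReadBuffer(ToySource &src, std::vector<Packet> &queue,
                   size_t maxBuffers, bool seeking) {
    for (size_t numBuffers = 0; numBuffers < maxBuffers; ) {
        int64_t timeUs = 0;
        ReadStatus st = src.read(&timeUs);
        if (st == ReadStatus::kWouldBlock) return false;  // try again later
        if (st == ReadStatus::kEndOfStream) return true;  // would signalEOS
        if (seeking) {
            queue.clear();  // drop pre-seek data, like discard=true
        }
        queue.push_back({timeUs, seeking});
        seeking = false;  // only the first buffer after a seek is marked
        ++numBuffers;
    }
    return false;
}
```

Capping each pass at `maxBuffers` keeps one readBuffer call short, so a pending seek on the same looper does not wait behind a long read; the video comment in the source ("too large of a number may influence seeks") points at the same concern.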

```cpp
void NuPlayer::GenericSource::readBuffer(
        media_track_type trackType, int64_t seekTimeUs, MediaPlayerSeekMode mode,
        int64_t *actualTimeUs, bool formatChange) {
    Track *track;
    size_t maxBuffers = 1;
    // Get the Track for this trackType
    switch (trackType) {
        case MEDIA_TRACK_TYPE_VIDEO:
            track = &mVideoTrack;
            maxBuffers = 8;  // too large of a number may influence seeks
            break;
        case MEDIA_TRACK_TYPE_AUDIO:
            track = &mAudioTrack;
            maxBuffers = 64;
            break;
        case MEDIA_TRACK_TYPE_SUBTITLE:
            track = &mSubtitleTrack;
            break;
        case MEDIA_TRACK_TYPE_TIMEDTEXT:
            track = &mTimedTextTrack;
            break;
        default:
            TRESPASS();
    }

    if (track->mSource == NULL) {
        return;
    }

    // seekTimeUs >= 0 means a seek was requested: wrap it in ReadOptions,
    // which is passed down with the read call
    if (actualTimeUs) {
        *actualTimeUs = seekTimeUs;
    }

    MediaSource::ReadOptions options;

    bool seeking = false;
    if (seekTimeUs >= 0) {
        options.setSeekTo(seekTimeUs, mode);
        seeking = true;
    }

    // Each call reads up to maxBuffers buffers (audio 64, video 8), in one of two ways:
    // IMediaSource::read returns one buffer per call, readMultiple returns several at once.
    // The loop stops early on WOULD_BLOCK, a read error, or EOS.
    const bool couldReadMultiple = (track->mSource->supportReadMultiple());

    if (couldReadMultiple) {
        options.setNonBlocking();
    }

    int32_t generation = getDataGeneration(trackType);
    for (size_t numBuffers = 0; numBuffers < maxBuffers; ) {
        Vector<MediaBufferBase *> mediaBuffers;
        status_t err = NO_ERROR;

        sp<IMediaSource> source = track->mSource;
        mLock.unlock();
        if (couldReadMultiple) {
            err = source->readMultiple(
                    &mediaBuffers, maxBuffers - numBuffers, &options);
        } else {
            MediaBufferBase *mbuf = NULL;
            err = source->read(&mbuf, &options);
            if (err == OK && mbuf != NULL) {
                mediaBuffers.push_back(mbuf);
            }
        }
        mLock.lock();

        options.clearNonPersistent();

        size_t id = 0;
        size_t count = mediaBuffers.size();

        // in case track has been changed since we don't have lock for some time.
        if (generation != getDataGeneration(trackType)) {
            for (; id < count; ++id) {
                mediaBuffers[id]->release();
            }
            break;
        }

        for (; id < count; ++id) {
            int64_t timeUs;
            MediaBufferBase *mbuf = mediaBuffers[id];
            // Record the media time of the audio/video buffer just read
            if (!mbuf->meta_data().findInt64(kKeyTime, &timeUs)) {
                mbuf->meta_data().dumpToLog();
                track->mPackets->signalEOS(ERROR_MALFORMED);
                break;
            }
            if (trackType == MEDIA_TRACK_TYPE_AUDIO) {
                mAudioTimeUs = timeUs;
            } else if (trackType == MEDIA_TRACK_TYPE_VIDEO) {
                mVideoTimeUs = timeUs;
            }

            // After a seek, clear the data in AnotherPacketSource and queue a discontinuity marker
            queueDiscontinuityIfNeeded(seeking, formatChange, trackType, track);

            sp<ABuffer> buffer = mediaBufferToABuffer(mbuf, trackType);
            if (numBuffers == 0 && actualTimeUs != nullptr) {
                *actualTimeUs = timeUs;
            }
            if (seeking && buffer != nullptr) {
                sp<AMessage> meta = buffer->meta();
                if (meta != nullptr && mode == MediaPlayerSeekMode::SEEK_CLOSEST
                        && seekTimeUs > timeUs) {
                    sp<AMessage> extra = new AMessage;
                    extra->setInt64("resume-at-mediaTimeUs", seekTimeUs);
                    meta->setMessage("extra", extra);
                }
            }

            // Queue the data into AnotherPacketSource
            track->mPackets->queueAccessUnit(buffer);
            formatChange = false;
            seeking = false;
            ++numBuffers;
        }
        if (id < count) {
            // Error, some mediaBuffer doesn't have kKeyTime.
            for (; id < count; ++id) {
                // Release the buffers that were not queued
                mediaBuffers[id]->release();
            }
            break;
        }

        if (err == WOULD_BLOCK) {
            break;
        } else if (err == INFO_FORMAT_CHANGED) {
#if 0
            track->mPackets->queueDiscontinuity(
                    ATSParser::DISCONTINUITY_FORMATCHANGE,
                    NULL,
                    false /* discard */);
#endif
        } else if (err != OK) {
            // Any other error (including ERROR_END_OF_STREAM) is signaled as EOS
            queueDiscontinuityIfNeeded(seeking, formatChange, trackType, track);
            track->mPackets->signalEOS(err);
            break;
        }
    }

    // Streaming (network) sources only: keep reading until the buffering mark is reached
    if (mIsStreaming
            && (trackType == MEDIA_TRACK_TYPE_VIDEO || trackType == MEDIA_TRACK_TYPE_AUDIO)) {
        status_t finalResult;
        int64_t durationUs = track->mPackets->getBufferedDurationUs(&finalResult);

        // TODO: maxRebufferingMarkMs could be larger than
        // mBufferingSettings.mResumePlaybackMarkMs
        int64_t markUs = (mPreparing ? mBufferingSettings.mInitialMarkMs
                : mBufferingSettings.mResumePlaybackMarkMs) * 1000LL;
        if (finalResult == ERROR_END_OF_STREAM || durationUs >= markUs) {
            if (mPreparing || mSentPauseOnBuffering) {
                Track *counterTrack =
                        (trackType == MEDIA_TRACK_TYPE_VIDEO ? &mAudioTrack : &mVideoTrack);
                if (counterTrack->mSource != NULL) {
                    durationUs = counterTrack->mPackets->getBufferedDurationUs(&finalResult);
                }
                if (finalResult == ERROR_END_OF_STREAM || durationUs >= markUs) {
                    if (mPreparing) {
                        notifyPrepared();
                        mPreparing = false;
                    } else {
                        sendCacheStats();
                        mSentPauseOnBuffering = false;
                        sp<AMessage> notify = dupNotify();
                        notify->setInt32("what", kWhatResumeOnBufferingEnd);
                        notify->post();
                    }
                }
            }
            return;
        }

        // Post another read for this track, so reading continues in a loop
        postReadBuffer(trackType);
    }
}
```
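The streaming branch at the end of readBuffer is a watermark check: during prepare the mark is mInitialMarkMs, otherwise mResumePlaybackMarkMs, and both tracks must reach it (or EOS) before prepared or resume is reported. A hypothetical condensation of that decision (`onStreamingReadDone`, `TrackBuffer` and `BufferingAction` are invented names) could look like this:

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical outcomes of the streaming branch in readBuffer.
enum class BufferingAction { kKeepReading, kNotifyPrepared, kResumePlayback, kNone };

// Hypothetical per-track buffer state (durations in microseconds).
struct TrackBuffer {
    int64_t bufferedUs;
    bool eos;
    bool present;  // false: this track does not exist
};

// Marks are in milliseconds, as in mBufferingSettings.
BufferingAction onStreamingReadDone(const TrackBuffer &self, const TrackBuffer &counter,
                                    bool preparing, bool pausedOnBuffering,
                                    int64_t initialMarkMs, int64_t resumeMarkMs) {
    const int64_t markUs = (preparing ? initialMarkMs : resumeMarkMs) * 1000LL;
    if (!(self.eos || self.bufferedUs >= markUs)) {
        return BufferingAction::kKeepReading;  // post another read for this track
    }
    if (!(preparing || pausedOnBuffering)) {
        return BufferingAction::kNone;  // enough data; stop reading for now
    }
    // The other track must also be past the mark or at EOS. A missing counter
    // track is judged by this track's own state, as in the original code.
    const TrackBuffer &other = counter.present ? counter : self;
    if (other.eos || other.bufferedUs >= markUs) {
        return preparing ? BufferingAction::kNotifyPrepared
                         : BufferingAction::kResumePlayback;
    }
    return BufferingAction::kNone;
}
```

Returning kNone corresponds to the `return` that skips postReadBuffer: reading for that track pauses until dequeueAccessUnit asks for more.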

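Next, queueDiscontinuityIfNeeded. This method is simple: for audio and video tracks, after a seek or a format change it calls AnotherPacketSource's queueDiscontinuity with discard set to true. How queueDiscontinuity works will be briefly covered in a later post.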

```cpp
void NuPlayer::GenericSource::queueDiscontinuityIfNeeded(
        bool seeking, bool formatChange, media_track_type trackType, Track *track) {
    // formatChange && seeking: track whose source is changed during selection
    // formatChange && !seeking: track whose source is not changed during selection
    // !formatChange: normal seek
    if ((seeking || formatChange)
            && (trackType == MEDIA_TRACK_TYPE_AUDIO
            || trackType == MEDIA_TRACK_TYPE_VIDEO)) {
        ATSParser::DiscontinuityType type = (formatChange && seeking)
                ? ATSParser::DISCONTINUITY_FORMATCHANGE
                : ATSParser::DISCONTINUITY_NONE;
        track->mPackets->queueDiscontinuity(type, NULL /* extra */, true /* discard */);
    }
}
```
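The branch above reduces to a small truth table. A sketch, with `Discontinuity` as a hypothetical stand-in for ATSParser::DiscontinuityType and `std::nullopt` meaning nothing is queued:

```cpp
#include <cassert>
#include <optional>

// Hypothetical stand-ins for ATSParser::DISCONTINUITY_NONE / _FORMATCHANGE.
enum class Discontinuity { kNone, kFormatChange };

// Hypothetical mirror of queueDiscontinuityIfNeeded's decision.
std::optional<Discontinuity> discontinuityFor(bool seeking, bool formatChange,
                                              bool isAudioOrVideo) {
    if (!(seeking || formatChange) || !isAudioOrVideo) {
        return std::nullopt;  // nothing queued; buffered data is kept
    }
    // When queued, the original passes discard=true, which clears buffered data.
    // kNone is still a real marker: it flushes the queue without a format change.
    return (formatChange && seeking) ? Discontinuity::kFormatChange
                                     : Discontinuity::kNone;
}
```

A normal seek therefore still flushes the queue (kNone), while only a seek combined with a source change carries the format-change flag.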

c. seek

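With that groundwork, seek is simple. When NuPlayer calls seekTo, GenericSource posts a kWhatSeek message to its own looper and waits for the reply, so that IMediaSource::read* calls stay serialized on one thread. The message handler calls doSeek, which calls readBuffer with the seek time: the video track is sought first, and unless the mode is SEEK_CLOSEST, the audio track is then sought to the time the video actually landed on (actualTimeUs).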

```cpp
status_t NuPlayer::GenericSource::seekTo(int64_t seekTimeUs, MediaPlayerSeekMode mode) {
    ALOGV("seekTo: %lld, %d", (long long)seekTimeUs, mode);
    sp<AMessage> msg = new AMessage(kWhatSeek, this);
    msg->setInt64("seekTimeUs", seekTimeUs);
    msg->setInt32("mode", mode);

    // Need to call readBuffer on |mLooper| to ensure the calls to
    // IMediaSource::read* are serialized. Note that IMediaSource::read*
    // is called without |mLock| acquired and MediaSource is not thread safe.
    sp<AMessage> response;
    status_t err = msg->postAndAwaitResponse(&response);
    if (err == OK && response != NULL) {
        CHECK(response->findInt32("err", &err));
    }

    return err;
}

status_t NuPlayer::GenericSource::doSeek(int64_t seekTimeUs, MediaPlayerSeekMode mode) {
    if (mVideoTrack.mSource != NULL) {
        ++mVideoDataGeneration;

        int64_t actualTimeUs;
        readBuffer(MEDIA_TRACK_TYPE_VIDEO, seekTimeUs, mode, &actualTimeUs);

        if (mode != MediaPlayerSeekMode::SEEK_CLOSEST) {
            seekTimeUs = std::max<int64_t>(0, actualTimeUs);
        }
        mVideoLastDequeueTimeUs = actualTimeUs;
    }

    if (mAudioTrack.mSource != NULL) {
        ++mAudioDataGeneration;
        readBuffer(MEDIA_TRACK_TYPE_AUDIO, seekTimeUs, MediaPlayerSeekMode::SEEK_CLOSEST);
        mAudioLastDequeueTimeUs = seekTimeUs;
    }

    if (mSubtitleTrack.mSource != NULL) {
        mSubtitleTrack.mPackets->clear();
        mFetchSubtitleDataGeneration++;
    }

    if (mTimedTextTrack.mSource != NULL) {
        mTimedTextTrack.mPackets->clear();
        mFetchTimedTextDataGeneration++;
    }

    ++mPollBufferingGeneration;
    schedulePollBuffering();
    return OK;
}
```
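The audio/video alignment in doSeek can be illustrated with a toy extractor that always lands on the last sync frame at or before the target. That landing rule and the names (`landOnSync`, `toyDoSeek`, `SeekMode`) are assumptions for illustration; real extractors decide per container and per seek mode.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical seek modes: a sync-frame seek vs. SEEK_CLOSEST.
enum class SeekMode { kPreviousSync, kClosest };

// Toy landing rule (assumption): the last sync frame at or before the target,
// or the first sync frame if none precedes it. syncFramesUs must be non-empty.
int64_t landOnSync(const std::vector<int64_t> &syncFramesUs, int64_t targetUs) {
    int64_t landed = syncFramesUs.front();
    for (int64_t t : syncFramesUs) {
        if (t <= targetUs) landed = t;
    }
    return landed;
}

struct SeekResult {
    int64_t videoUs;  // where video actually starts (actualTimeUs)
    int64_t audioUs;  // target used for the audio read
};

// Hypothetical mirror of doSeek: video first, then audio aligned to it.
SeekResult toyDoSeek(const std::vector<int64_t> &syncFramesUs, int64_t seekTimeUs,
                     SeekMode mode) {
    int64_t actualTimeUs = landOnSync(syncFramesUs, seekTimeUs);
    if (mode != SeekMode::kClosest) {
        seekTimeUs = std::max<int64_t>(0, actualTimeUs);  // align audio to video
    }
    return {actualTimeUs, seekTimeUs};  // audio itself is read with SEEK_CLOSEST
}
```

With sync frames at 0 s, 2 s and 4 s, a sync-mode seek to 3 s starts both tracks at 2 s. With SEEK_CLOSEST, audio goes to 3 s, and the video decoder is told to skip ahead via the "resume-at-mediaTimeUs" extra set in readBuffer.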

d. pause

When the upper layer calls pause, NuPlayer in turn calls GenericSource's pause. This method is simple: it just sets mStarted to false.

```cpp
void NuPlayer::GenericSource::pause() {
    Mutex::Autolock _l(mLock);
    mStarted = false;
}
```

e. dequeueAccessUnit

NuPlayerDecoder calls this method to fetch the data the Source has read. It is one of the more important methods:

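1. It first checks the player state: if mStarted is false and mIsDrmReleased is true (the player has gone through a stop/releaseDrm sequence), it returns -EWOULDBLOCK and sends no more data down, because the codec's crypto object is gone. A plain pause, which only clears mStarted, does not stop dequeuing here.

2. It then checks whether the data pool (AnotherPacketSource) has a buffer available. If not, and no EOS or error has been recorded, it posts a read and returns -EWOULDBLOCK; otherwise it returns that final status.

3. It dequeues one buffer from the data pool.

4. It checks again whether the pool is running low; for local playback, fewer than 2 remaining buffers triggers another read.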

```cpp
status_t NuPlayer::GenericSource::dequeueAccessUnit(
        bool audio, sp<ABuffer> *accessUnit) {
    Mutex::Autolock _l(mLock);
    // If has gone through stop/releaseDrm sequence, we no longer send down any buffer b/c
    // the codec's crypto object has gone away (b/37960096).
    // Note: This will be unnecessary when stop() changes behavior and releases codec (b/35248283).
    if (!mStarted && mIsDrmReleased) {
        return -EWOULDBLOCK;
    }

    Track *track = audio ? &mAudioTrack : &mVideoTrack;

    if (track->mSource == NULL) {
        return -EWOULDBLOCK;
    }

    status_t finalResult;
    // If AnotherPacketSource has no buffer available, call postReadBuffer to read more
    if (!track->mPackets->hasBufferAvailable(&finalResult)) {
        if (finalResult == OK) {
            postReadBuffer(
                    audio ? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
            return -EWOULDBLOCK;
        }
        return finalResult;
    }

    // Dequeue one buffer from AnotherPacketSource
    status_t result = track->mPackets->dequeueAccessUnit(accessUnit);

    // start pulling in more buffers if cache is running low
    // so that decoder has less chance of being starved
    // Check again whether the pool is running low and read more if so (local playback)
    if (!mIsStreaming) {
        if (track->mPackets->getAvailableBufferCount(&finalResult) < 2) {
            postReadBuffer(audio? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
        }
    } else {
        int64_t durationUs = track->mPackets->getBufferedDurationUs(&finalResult);
        // TODO: maxRebufferingMarkMs could be larger than
        // mBufferingSettings.mResumePlaybackMarkMs
        int64_t restartBufferingMarkUs =
                mBufferingSettings.mResumePlaybackMarkMs * 1000LL / 2;
        if (finalResult == OK) {
            if (durationUs < restartBufferingMarkUs) {
                postReadBuffer(audio? MEDIA_TRACK_TYPE_AUDIO : MEDIA_TRACK_TYPE_VIDEO);
            }
            if (track->mPackets->getAvailableBufferCount(&finalResult) < 2
                    && !mSentPauseOnBuffering && !mPreparing) {
                mCachedSource->resumeFetchingIfNecessary();
                sendCacheStats();
                mSentPauseOnBuffering = true;
                sp<AMessage> notify = dupNotify();
                notify->setInt32("what", kWhatPauseOnBufferingStart);
                notify->post();
            }
        }
    }

    if (result != OK) {
        if (mSubtitleTrack.mSource != NULL) {
            mSubtitleTrack.mPackets->clear();
            mFetchSubtitleDataGeneration++;
        }
        if (mTimedTextTrack.mSource != NULL) {
            mTimedTextTrack.mPackets->clear();
            mFetchTimedTextDataGeneration++;
        }
        return result;
    }

    int64_t timeUs;
    status_t eosResult; // ignored
    CHECK((*accessUnit)->meta()->findInt64("timeUs", &timeUs));
    if (audio) {
        mAudioLastDequeueTimeUs = timeUs;
    } else {
        mVideoLastDequeueTimeUs = timeUs;
    }

    if (mSubtitleTrack.mSource != NULL
            && !mSubtitleTrack.mPackets->hasBufferAvailable(&eosResult)) {
        sp<AMessage> msg = new AMessage(kWhatFetchSubtitleData, this);
        msg->setInt64("timeUs", timeUs);
        msg->setInt32("generation", mFetchSubtitleDataGeneration);
        msg->post();
    }

    if (mTimedTextTrack.mSource != NULL
            && !mTimedTextTrack.mPackets->hasBufferAvailable(&eosResult)) {
        sp<AMessage> msg = new AMessage(kWhatFetchTimedTextData, this);
        msg->setInt64("timeUs", timeUs);
        msg->setInt32("generation", mFetchTimedTextDataGeneration);
        msg->post();
    }

    return result;
}
```
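For local playback, the refill logic in dequeueAccessUnit amounts to a low-water mark of two buffers. Here is a hypothetical model (`ToyTrack`, `toyDequeueAccessUnit`, and `kEndOfStream` standing in for ERROR_END_OF_STREAM) that counts how often a read would be posted:

```cpp
#include <cassert>
#include <cerrno>
#include <cstdint>
#include <deque>

// Hypothetical stand-in for a Track: packet queue plus a read counter.
struct ToyTrack {
    std::deque<int64_t> packets;  // queued access units (timestamps only)
    bool eos = false;             // the reader has hit end of stream
    int readsPosted = 0;          // number of postReadBuffer() calls
};

constexpr int kOk = 0;
constexpr int kEndOfStream = -1;  // hypothetical stand-in for ERROR_END_OF_STREAM

// Hypothetical model of the local (non-streaming) path of dequeueAccessUnit.
int toyDequeueAccessUnit(ToyTrack &track, int64_t *timeUs) {
    if (track.packets.empty()) {
        if (!track.eos) {
            ++track.readsPosted;   // postReadBuffer(...)
            return -EWOULDBLOCK;   // the decoder retries later
        }
        return kEndOfStream;       // final status is passed up
    }
    *timeUs = track.packets.front();
    track.packets.pop_front();
    // Low-water mark: fewer than 2 buffers left triggers another read,
    // so the decoder is less likely to be starved.
    if (track.packets.size() < 2) {
        ++track.readsPosted;
    }
    return kOk;
}
```

Refilling after each dequeue, rather than only when the queue is empty, lets the next readBuffer run while the decoder is still consuming the remaining buffer.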

That covers the main workings of GenericSource.