Android 12 (S) MultiMedia Learning (6): NuPlayer Decoder

The following notes look at NuPlayerDecoder from four angles.

Related code paths:

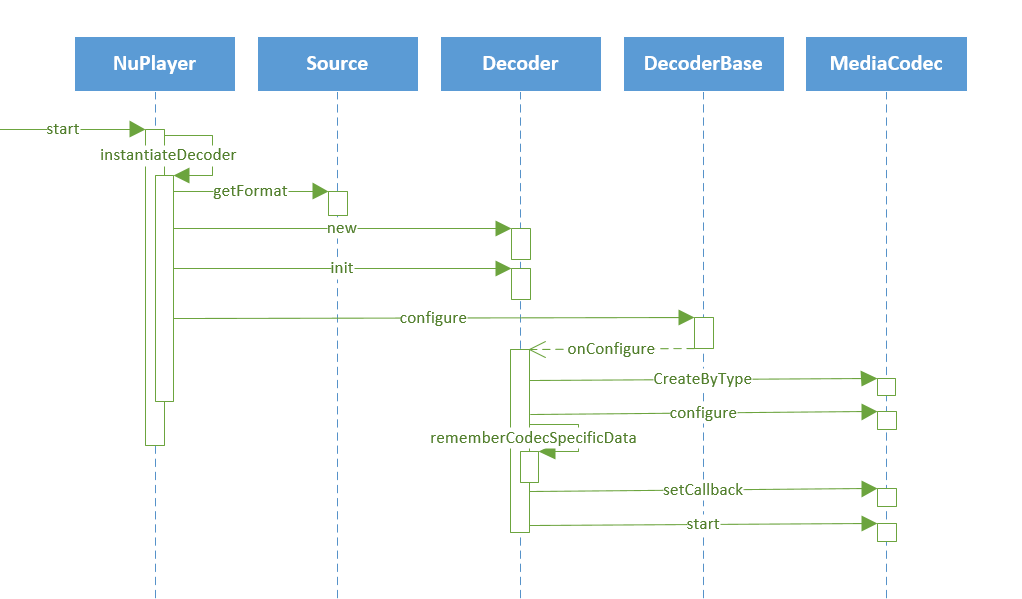

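1. NuPlayerDecoder wraps MediaCodec. How does it create, configure, and start MediaCodec?

a. setCallback builds the bridge between NuPlayerDecoder and MediaCodec: when OMX sends a message up, it reaches NuPlayerDecoder through this callback, which then takes the corresponding action.

b. rememberCodecSpecificData records the csd buffers (codec-specific data) from the file's format; when a seek happens, these buffers are sent to the decoder again.

c. The rest is the usual MediaCodec sequence: CreateByType --> configure --> start.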
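The CSD bookkeeping in (b) can be modeled roughly as follows. This is a standalone sketch, not the AOSP code: a `std::map` stands in for the `AMessage` format, byte vectors stand in for `ABuffer`, and `Format`, `Buffer`, and `CsdStore` are hypothetical names. It assumes the CSD entries sit under consecutive keys `csd-0`, `csd-1`, ... and that the scan stops at the first missing index, which is how I understand the AOSP loop to behave.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Hypothetical stand-ins: a format is a map from key to raw bytes.
using Buffer = std::vector<uint8_t>;
using Format = std::map<std::string, Buffer>;

struct CsdStore {
    std::vector<Buffer> csdsForCurrentFormat;  // models mCSDsForCurrentFormat
    std::vector<Buffer> csdsToSubmit;          // models mCSDsToSubmit

    // Collect csd-0, csd-1, ... and stop at the first missing index.
    void rememberCodecSpecificData(const Format &format) {
        csdsForCurrentFormat.clear();
        for (int i = 0;; ++i) {
            auto it = format.find("csd-" + std::to_string(i));
            if (it == format.end()) {
                break;
            }
            csdsForCurrentFormat.push_back(it->second);
        }
    }

    // On a flush (e.g. for a seek) the saved CSDs are queued again for the decoder.
    void onFlush() { csdsToSubmit = csdsForCurrentFormat; }
};
```

Because the scan stops at the first gap, a stray `csd-3` without a `csd-2` is ignored.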

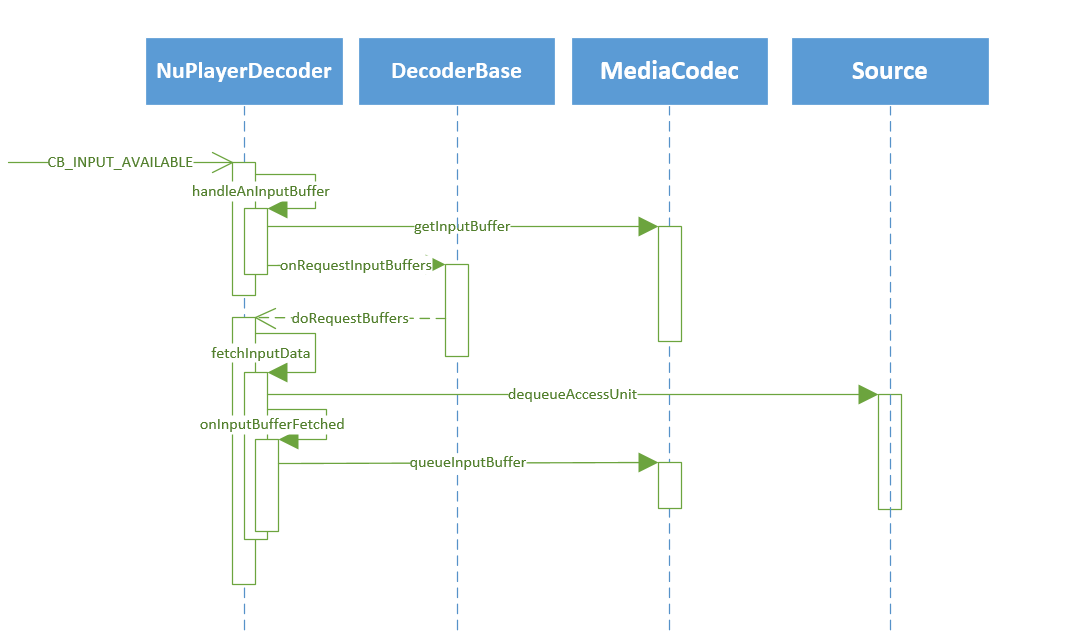

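2. Once MediaCodec is started, where does the data to be decoded come from, how is it written, and what drives these actions?

The figure above shows what NuPlayerDecoder does after MediaCodec receives the FillThisBuffer message from OMX (that is, when an input buffer becomes available).

Put simply: get a buffer, ask the Source for data, and write the data into MediaCodec. In practice this code handles many cases, which makes it look fairly complex, so here is some of it for illustration: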

```cpp
bool NuPlayer::Decoder::handleAnInputBuffer(size_t index) {
    // ......
    // Get the input buffer from MediaCodec
    sp<MediaCodecBuffer> buffer;
    mCodec->getInputBuffer(index, &buffer);
    // ......
    // A few containers worth noting:
    // mInputBuffers          : records the input buffers obtained so far
    // mMediaBuffers          : not really used here
    // mInputBufferIsDequeued : whether each buffer in mInputBuffers is dequeued;
    //                          set to true when the codec hands the buffer up,
    //                          set to false when it is queued back to the decoder
    if (index >= mInputBuffers.size()) {
        for (size_t i = mInputBuffers.size(); i <= index; ++i) {
            mInputBuffers.add();
            mMediaBuffers.add();
            mInputBufferIsDequeued.add();
            mMediaBuffers.editItemAt(i) = NULL;
            mInputBufferIsDequeued.editItemAt(i) = false;
        }
    }
    mInputBuffers.editItemAt(index) = buffer;
    // ......
    mInputBufferIsDequeued.editItemAt(index) = true;

    // After a seek mCSDsToSubmit is not empty, so this branch is taken
    if (!mCSDsToSubmit.isEmpty()) {
        // ......
    }

    // mPendingInputMessages : buffers not yet successfully written to the decoder
    while (!mPendingInputMessages.empty()) {
        sp<AMessage> msg = *mPendingInputMessages.begin();
        if (!onInputBufferFetched(msg)) {
            break;
        }
        mPendingInputMessages.erase(mPendingInputMessages.begin());
    }
    // ......
    // mDequeuedInputBuffers : indices delivered by MediaCodec, in arrival order
    mDequeuedInputBuffers.push_back(index);

    onRequestInputBuffers();
    return true;
}
```

The logic above is simple: when an input buffer comes up, NuPlayerDecoder records the buffer and its index, then calls onRequestInputBuffers to request data from the Source.
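A simplified model of that bookkeeping, under stated assumptions: strings stand in for `sp<MediaCodecBuffer>`, `std::vector`/`std::deque` replace the Android containers, `mMediaBuffers` and the pending-message handling are left out, and `InputBookkeeping`/`onQueuedToCodec` are hypothetical names.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <vector>

// Hypothetical model of the containers touched by handleAnInputBuffer().
struct InputBookkeeping {
    std::vector<std::string> inputBuffers;    // mInputBuffers
    std::vector<bool> inputBufferIsDequeued;  // mInputBufferIsDequeued
    std::deque<size_t> dequeuedInputBuffers;  // mDequeuedInputBuffers

    void handleAnInputBuffer(size_t index, const std::string &buffer) {
        // Grow the per-index containers so that `index` is a valid slot.
        if (index >= inputBuffers.size()) {
            inputBuffers.resize(index + 1);
            inputBufferIsDequeued.resize(index + 1, false);
        }
        inputBuffers[index] = buffer;
        // The codec has handed this slot to us.
        inputBufferIsDequeued[index] = true;
        // Remember arrival order so data is requested for the oldest slot first.
        dequeuedInputBuffers.push_back(index);
    }

    // Called once the slot has been queued back to the codec.
    void onQueuedToCodec(size_t index) {
        assert(index < inputBufferIsDequeued.size());
        inputBufferIsDequeued[index] = false;
    }
};
```

Indices can arrive out of order, which is why the containers are grown on demand and the arrival order is kept in a separate queue.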

```cpp
// NuPlayerDecoderBase.cpp
void NuPlayer::DecoderBase::onRequestInputBuffers() {
    if (mRequestInputBuffersPending) {
        return;
    }

    // doRequestBuffers() return true if we should request more data
    if (doRequestBuffers()) {
        mRequestInputBuffersPending = true;

        sp<AMessage> msg = new AMessage(kWhatRequestInputBuffers, this);
        msg->post(10 * 1000LL);
    }
}

void NuPlayer::DecoderBase::onMessageReceived(const sp<AMessage> &msg) {
    // ......
        case kWhatRequestInputBuffers:
        {
            mRequestInputBuffersPending = false;
            onRequestInputBuffers();
            break;
        }
    // ......
}
```

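Requesting data from the Source ends up in doRequestBuffers. When doRequestBuffers returns true (as the comment says, it returns true when more data should be requested), onRequestInputBuffers posts a kWhatRequestInputBuffers message with a 10 ms delay, and handling that message calls onRequestInputBuffers again. Next, let's look at what doRequestBuffers does and when it returns true.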
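The pending flag plus delayed re-post can be simulated with a tiny single-threaded event loop. `FakeLooper` and `RequestLoop` are hypothetical stand-ins for `ALooper`/`AMessage` and `DecoderBase`; the only behavior carried over from the excerpt is the early return on `mRequestInputBuffersPending` and the 10 ms re-post while `doRequestBuffers()` returns true.

```cpp
#include <cassert>
#include <cstdint>
#include <functional>
#include <map>
#include <utility>

// Hypothetical single-threaded event loop: messages keyed by due time (us).
struct FakeLooper {
    int64_t nowUs = 0;
    std::multimap<int64_t, std::function<void()>> queue;

    void post(std::function<void()> fn, int64_t delayUs) {
        queue.emplace(nowUs + delayUs, std::move(fn));
    }

    // Run the earliest message and advance the clock; false when idle.
    bool runOne() {
        if (queue.empty()) {
            return false;
        }
        auto it = queue.begin();
        nowUs = it->first;
        std::function<void()> fn = std::move(it->second);
        queue.erase(it);
        fn();
        return true;
    }
};

// Models DecoderBase's retry logic; doRequestBuffers is injected.
struct RequestLoop {
    FakeLooper &looper;
    std::function<bool()> doRequestBuffers;  // true means "try again later"
    bool requestPending = false;             // mRequestInputBuffersPending

    void onRequestInputBuffers() {
        if (requestPending) {
            return;  // a retry is already scheduled
        }
        if (doRequestBuffers()) {
            requestPending = true;
            // Models kWhatRequestInputBuffers posted with a 10 ms delay.
            looper.post([this] {
                requestPending = false;
                onRequestInputBuffers();
            }, 10 * 1000);
        }
    }
};
```

The flag guarantees that at most one retry message is in flight, no matter how many input buffers arrive in the meantime.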

```cpp
bool NuPlayer::Decoder::doRequestBuffers() {
    // While the queue holds buffer indices, request data for them,
    // until every queued buffer has been handled
    status_t err = OK;
    while (err == OK && !mDequeuedInputBuffers.empty()) {
        size_t bufferIx = *mDequeuedInputBuffers.begin();
        sp<AMessage> msg = new AMessage();
        msg->setSize("buffer-ix", bufferIx);
        err = fetchInputData(msg);
        if (err != OK && err != ERROR_END_OF_STREAM) {
            // if EOS, need to queue EOS buffer
            break;
        }
        mDequeuedInputBuffers.erase(mDequeuedInputBuffers.begin());

        // mPendingInputMessages : input buffers that have not been fully handled yet
        // If the pending queue is not empty, append this message to it.
        // If it is empty, call onInputBufferFetched directly; if that fails,
        // append this message to the pending queue.
        if (!mPendingInputMessages.empty()
                || !onInputBufferFetched(msg)) {
            mPendingInputMessages.push_back(msg);
        }
    }

    return err == -EWOULDBLOCK
            && mSource->feedMoreTSData() == OK;
}
```

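It consists of several steps:

a. Take an index from the queue of unprocessed indices (mDequeuedInputBuffers) and request data for the buffer it refers to by calling fetchInputData.

b. Check whether the pending-message queue (mPendingInputMessages) is empty. If it is not, append the current message to the queue to wait its turn; if it is, call onInputBufferFetched on the current message directly, and queue the message if that call fails.

c. When fetchInputData returns -EWOULDBLOCK (the Source has no data yet) and mSource->feedMoreTSData() returns OK, doRequestBuffers returns true, so the request is retried later.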
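Steps a to c can be sketched as a standalone loop. The `Status` enum, `Msg`, and the injected callbacks are hypothetical replacements for `status_t`, `AMessage`, `fetchInputData`, `onInputBufferFetched`, and `feedMoreTSData()`; the control flow follows the excerpt above.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>

// Hypothetical codes standing in for OK / -EWOULDBLOCK / ERROR_END_OF_STREAM.
enum Status { kOk, kWouldBlock, kEndOfStream };

struct Msg {
    size_t bufferIx;
};

struct RequestModel {
    std::deque<size_t> dequeuedInputBuffers;  // mDequeuedInputBuffers
    std::deque<Msg> pendingInputMessages;     // mPendingInputMessages
    std::function<Status(Msg &)> fetchInputData;
    std::function<bool(const Msg &)> onInputBufferFetched;  // false = not consumed
    std::function<bool()> feedMoreData;  // stands in for feedMoreTSData() == OK

    // Returns true when the caller should schedule another attempt.
    bool doRequestBuffers() {
        Status err = kOk;
        while (err == kOk && !dequeuedInputBuffers.empty()) {
            Msg msg{dequeuedInputBuffers.front()};
            err = fetchInputData(msg);
            if (err != kOk && err != kEndOfStream) {
                break;  // no data yet: keep this index for the next attempt
            }
            dequeuedInputBuffers.pop_front();
            // Preserve order: queue behind older messages that are still waiting.
            if (!pendingInputMessages.empty() || !onInputBufferFetched(msg)) {
                pendingInputMessages.push_back(msg);
            }
        }
        return err == kWouldBlock && feedMoreData();
    }
};
```

When the Source runs dry, the index that could not be filled stays at the front of the queue, so the next attempt resumes with the same buffer.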

Next: what does fetchInputData do, and what does onInputBufferFetched do?

```cpp
status_t NuPlayer::Decoder::fetchInputData(sp<AMessage> &reply) {
    sp<ABuffer> accessUnit;
    bool dropAccessUnit = true;
    do {
        // Request data from the Source
        status_t err = mSource->dequeueAccessUnit(mIsAudio, &accessUnit);

        if (err == -EWOULDBLOCK) {
            return err;
        } else if (err != OK) {
            // Check for discontinuities here and react accordingly:
            // on a format change or a time discontinuity, send EOS to the decoder
            if (err == INFO_DISCONTINUITY) {
                // ......
            }

            // reply should only be returned without a buffer set
            // when there is an error (including EOS)
            CHECK(err != OK);

            reply->setInt32("err", err);
            return ERROR_END_OF_STREAM;
        }

        dropAccessUnit = false;
        if (!mIsAudio && !mIsEncrypted) {
            if (mIsEncryptedObservedEarlier) {
                ALOGE("fetchInputData: mismatched mIsEncrypted/mIsEncryptedObservedEarlier (0/1)");

                return INVALID_OPERATION;
            }

            int32_t layerId = 0;
            bool haveLayerId = accessUnit->meta()->findInt32("temporal-layer-id", &layerId);
            // If the Renderer reports video more than 100 ms late, the stream is AVC,
            // and this is not a reference frame, drop it and read the next one
            if (mRenderer->getVideoLateByUs() > 100000LL
                    && mIsVideoAVC
                    && !IsAVCReferenceFrame(accessUnit)) {
                dropAccessUnit = true;
            } else if (haveLayerId && mNumVideoTemporalLayerTotal > 1) {
                // Add only one layer each time.
                if (layerId > mCurrentMaxVideoTemporalLayerId + 1
                        || layerId >= mNumVideoTemporalLayerAllowed) {
                    dropAccessUnit = true;
                    ALOGV("dropping layer(%d), speed=%g, allowed layer count=%d, max layerId=%d",
                            layerId, mPlaybackSpeed, mNumVideoTemporalLayerAllowed,
                            mCurrentMaxVideoTemporalLayerId);
                } else if (layerId > mCurrentMaxVideoTemporalLayerId) {
                    mCurrentMaxVideoTemporalLayerId = layerId;
                } else if (layerId == 0 && mNumVideoTemporalLayerTotal > 1
                        && IsIDR(accessUnit->data(), accessUnit->size())) {
                    mCurrentMaxVideoTemporalLayerId = mNumVideoTemporalLayerTotal - 1;
                }
            }
            if (dropAccessUnit) {
                if (layerId <= mCurrentMaxVideoTemporalLayerId && layerId > 0) {
                    mCurrentMaxVideoTemporalLayerId = layerId - 1;
                }
                ++mNumInputFramesDropped;
            }
        }
    } while (dropAccessUnit);

    // ......

    if (mCCDecoder != NULL) {
        mCCDecoder->decode(accessUnit);
    }

    reply->setBuffer("buffer", accessUnit);

    return OK;
}
```

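fetchInputData does three things:

a. It calls the Source's dequeueAccessUnit to get the data that has been read, and checks the returned status.

On a format change it signals EOS to the decoder. On a time discontinuity it also signals EOS, but first records the CSD so that the decoder can be reinitialized.

b. For non-encrypted video, it checks the current AV sync state: if the Renderer reports the video is more than 100 ms late and the stream is AVC, non-reference frames are dropped. Frames from temporal layers that are not yet allowed are dropped as well.

c. It wraps the data it obtained in the reply AMessage.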
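The drop decision in step b can be isolated into a small policy object. Field names mirror the member variables in the excerpt, but `FrameInfo` and `DropPolicy` are hypothetical, and the booleans `isAvcReference`/`isIdr` stand in for what `IsAVCReferenceFrame()` and `IsIDR()` compute from the bitstream.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical per-frame info (the real code reads it from the ABuffer).
struct FrameInfo {
    bool haveLayerId = false;
    int32_t layerId = 0;
    bool isAvcReference = true;
    bool isIdr = false;
};

struct DropPolicy {
    int64_t videoLateByUs = 0;      // mRenderer->getVideoLateByUs()
    bool isVideoAVC = false;        // mIsVideoAVC
    int32_t numLayersTotal = 1;     // mNumVideoTemporalLayerTotal
    int32_t numLayersAllowed = 1;   // mNumVideoTemporalLayerAllowed
    int32_t currentMaxLayerId = 0;  // mCurrentMaxVideoTemporalLayerId
    int64_t numDropped = 0;         // mNumInputFramesDropped

    // Mirrors one iteration of the do/while loop in fetchInputData().
    bool shouldDrop(const FrameInfo &f) {
        bool drop = false;
        if (videoLateByUs > 100000 && isVideoAVC && !f.isAvcReference) {
            drop = true;  // more than 100 ms late: skip non-reference AVC frames
        } else if (f.haveLayerId && numLayersTotal > 1) {
            if (f.layerId > currentMaxLayerId + 1 || f.layerId >= numLayersAllowed) {
                drop = true;  // only step up one temporal layer at a time
            } else if (f.layerId > currentMaxLayerId) {
                currentMaxLayerId = f.layerId;
            } else if (f.layerId == 0 && f.isIdr) {
                currentMaxLayerId = numLayersTotal - 1;
            }
        }
        if (drop) {
            if (f.layerId <= currentMaxLayerId && f.layerId > 0) {
                currentMaxLayerId = f.layerId - 1;
            }
            ++numDropped;
        }
        return drop;
    }
};
```

Dropping only non-reference frames keeps the remaining frames decodable, since no later frame depends on the ones skipped.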

Once the data is available, onInputBufferFetched is called to process the AMessage.

```cpp
bool NuPlayer::Decoder::onInputBufferFetched(const sp<AMessage> &msg) {
    // ......
    size_t bufferIx;
    CHECK(msg->findSize("buffer-ix", &bufferIx));
    CHECK_LT(bufferIx, mInputBuffers.size());
    sp<MediaCodecBuffer> codecBuffer = mInputBuffers[bufferIx];

    sp<ABuffer> buffer;
    bool hasBuffer = msg->findBuffer("buffer", &buffer);
    bool needsCopy = true;

    // A NULL buffer is treated as EOS
    if (buffer == NULL /* includes !hasBuffer */) {
        int32_t streamErr = ERROR_END_OF_STREAM;
        CHECK(msg->findInt32("err", &streamErr) || !hasBuffer);

        CHECK(streamErr != OK);

        // attempt to queue EOS
        status_t err = mCodec->queueInputBuffer(
                bufferIx, 0, 0, 0, MediaCodec::BUFFER_FLAG_EOS);
        if (err == OK) {
            mInputBufferIsDequeued.editItemAt(bufferIx) = false;
        } else if (streamErr == ERROR_END_OF_STREAM) {
            streamErr = err;
            // err will not be ERROR_END_OF_STREAM
        }

        if (streamErr != ERROR_END_OF_STREAM) {
            ALOGE("Stream error for [%s] (err=%d), EOS %s queued",
                    mComponentName.c_str(),
                    streamErr,
                    err == OK ? "successfully" : "unsuccessfully");
            handleError(streamErr);
        }
    } else {
        // Otherwise copy the data into the MediaCodecBuffer and send it to the decoder
        sp<AMessage> extra;
        if (buffer->meta()->findMessage("extra", &extra) && extra != NULL) {
            int64_t resumeAtMediaTimeUs;
            if (extra->findInt64(
                        "resume-at-mediaTimeUs", &resumeAtMediaTimeUs)) {
                ALOGV("[%s] suppressing rendering until %lld us",
                        mComponentName.c_str(), (long long)resumeAtMediaTimeUs);
                mSkipRenderingUntilMediaTimeUs = resumeAtMediaTimeUs;
            }
        }

        int64_t timeUs = 0;
        uint32_t flags = 0;
        CHECK(buffer->meta()->findInt64("timeUs", &timeUs));

        int32_t eos, csd, cvo;
        // we do not expect SYNCFRAME for decoder
        if (buffer->meta()->findInt32("eos", &eos) && eos) {
            flags |= MediaCodec::BUFFER_FLAG_EOS;
        } else if (buffer->meta()->findInt32("csd", &csd) && csd) {
            flags |= MediaCodec::BUFFER_FLAG_CODECCONFIG;
        }

        if (buffer->meta()->findInt32("cvo", (int32_t*)&cvo)) {
            ALOGV("[%s] cvo(%d) found at %lld us", mComponentName.c_str(), cvo, (long long)timeUs);
            switch (cvo) {
                case 0:
                    codecBuffer->meta()->setInt32("cvo", MediaCodec::CVO_DEGREE_0);
                    break;
                case 1:
                    codecBuffer->meta()->setInt32("cvo", MediaCodec::CVO_DEGREE_90);
                    break;
                case 2:
                    codecBuffer->meta()->setInt32("cvo", MediaCodec::CVO_DEGREE_180);
                    break;
                case 3:
                    codecBuffer->meta()->setInt32("cvo", MediaCodec::CVO_DEGREE_270);
                    break;
            }
        }

        // Modular DRM
        MediaBufferBase *mediaBuf = NULL;
        NuPlayerDrm::CryptoInfo *cryptInfo = NULL;

        // copy into codec buffer
        if (needsCopy) {
            if (buffer->size() > codecBuffer->capacity()) {
                handleError(ERROR_BUFFER_TOO_SMALL);
                mDequeuedInputBuffers.push_back(bufferIx);
                return false;
            }

            if (buffer->data() != NULL) {
                codecBuffer->setRange(0, buffer->size());
                memcpy(codecBuffer->data(), buffer->data(), buffer->size());
            } else { // No buffer->data()
                // Encrypted content: the data comes from mediaBufferHolder
                //Modular DRM
                sp<RefBase> holder;
                if (buffer->meta()->findObject("mediaBufferHolder", &holder)) {
                    mediaBuf = (holder != nullptr) ?
                        static_cast<MediaBufferHolder*>(holder.get())->mediaBuffer() : nullptr;
                }
                if (mediaBuf != NULL) {
                    if (mediaBuf->size() > codecBuffer->capacity()) {
                        handleError(ERROR_BUFFER_TOO_SMALL);
                        mDequeuedInputBuffers.push_back(bufferIx);
                        return false;
                    }

                    codecBuffer->setRange(0, mediaBuf->size());
                    memcpy(codecBuffer->data(), mediaBuf->data(), mediaBuf->size());

                    MetaDataBase &meta_data = mediaBuf->meta_data();
                    cryptInfo = NuPlayerDrm::getSampleCryptoInfo(meta_data);
                } else { // No mediaBuf
                    ALOGE("onInputBufferFetched: buffer->data()/mediaBuf are NULL for %p",
                            buffer.get());
                    handleError(UNKNOWN_ERROR);
                    return false;
                }
            } // buffer->data()
        } // needsCopy

        status_t err;
        AString errorDetailMsg;
        if (cryptInfo != NULL) {
            err = mCodec->queueSecureInputBuffer(
                    bufferIx,
                    codecBuffer->offset(),
                    cryptInfo->subSamples,
                    cryptInfo->numSubSamples,
                    cryptInfo->key,
                    cryptInfo->iv,
                    cryptInfo->mode,
                    cryptInfo->pattern,
                    timeUs,
                    flags,
                    &errorDetailMsg);
            // synchronous call so done with cryptInfo here
            free(cryptInfo);
        } else {
            err = mCodec->queueInputBuffer(
                    bufferIx,
                    codecBuffer->offset(),
                    codecBuffer->size(),
                    timeUs,
                    flags,
                    &errorDetailMsg);
        } // no cryptInfo

        if (err != OK) {
            ALOGE("onInputBufferFetched: queue%sInputBuffer failed for [%s] (err=%d, %s)",
                    (cryptInfo != NULL ? "Secure" : ""),
                    mComponentName.c_str(), err, errorDetailMsg.c_str());
            handleError(err);
        } else {
            mInputBufferIsDequeued.editItemAt(bufferIx) = false;
        }

    } // buffer != NULL
    return true;
}
```

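The code is long, but it mainly covers two cases:

a. If the message carries no buffer, EOS has been reached. This is not necessarily a real end of stream: as fetchInputData above shows, it may be caused by a seek or a format change.

b. If the buffer is non-null and buffer->data() is non-null, this is ordinary content and it is copied straight into the MediaCodec buffer. If buffer->data() is null, the content is encrypted: the data is taken from mediaBufferHolder, the CryptoInfo is obtained, and the buffer is submitted with queueSecureInputBuffer.

At this point, writing the input data is complete.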
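Three small pieces of case b can be checked in isolation: how `eos`/`csd` turn into buffer flags, how `cvo` maps to a rotation, and the capacity check before copying. The flag values, `AccessUnitMeta`, and the helper functions are hypothetical; the real constants are `MediaCodec::BUFFER_FLAG_EOS`, `MediaCodec::BUFFER_FLAG_CODECCONFIG`, and `MediaCodec::CVO_DEGREE_*`, whose numeric values are not reproduced here.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical flag values, not the real MediaCodec constants.
constexpr uint32_t kFlagEos = 1u << 0;
constexpr uint32_t kFlagCodecConfig = 1u << 1;

struct AccessUnitMeta {  // stands in for buffer->meta()
    bool eos = false;
    bool csd = false;
};

// eos takes precedence over csd, as in the else-if chain of onInputBufferFetched().
uint32_t inputFlags(const AccessUnitMeta &m) {
    uint32_t flags = 0;
    if (m.eos) {
        flags |= kFlagEos;
    } else if (m.csd) {
        flags |= kFlagCodecConfig;
    }
    return flags;
}

// cvo 0..3 maps to 0/90/180/270 degrees; other values set nothing (-1 here).
int cvoToDegrees(int32_t cvo) {
    return (cvo >= 0 && cvo <= 3) ? cvo * 90 : -1;
}

// Copy into a fixed-capacity codec buffer; false models ERROR_BUFFER_TOO_SMALL.
bool copyIntoCodecBuffer(const std::vector<uint8_t> &src,
                         std::vector<uint8_t> &codecBuf, size_t &rangeSize) {
    if (src.size() > codecBuf.size()) {
        return false;
    }
    std::copy(src.begin(), src.end(), codecBuf.begin());
    rangeSize = src.size();  // models codecBuffer->setRange(0, size)
    return true;
}
```

In the real code a failed capacity check also pushes the index back onto `mDequeuedInputBuffers` so the slot is not lost; the sketch only reports the failure.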

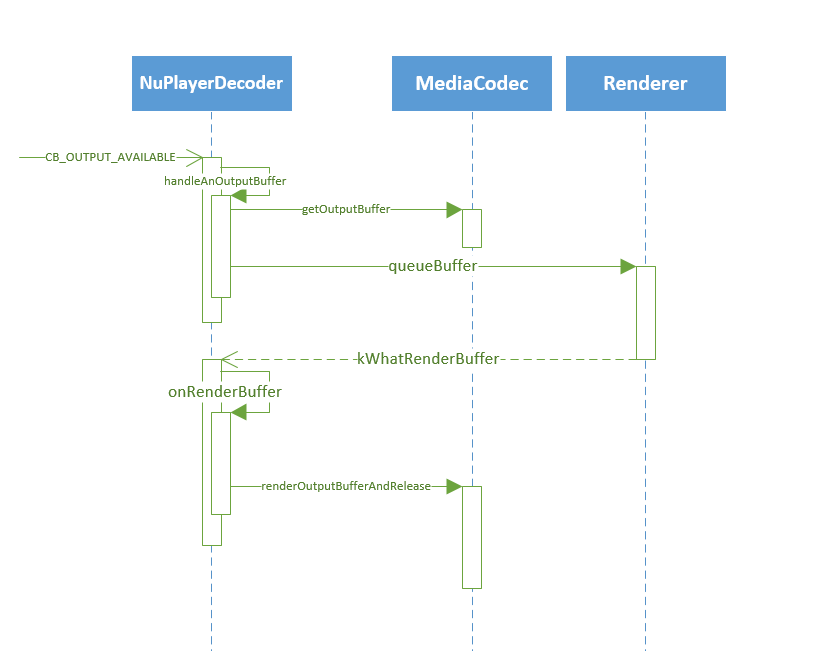

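3. How is the decoded data returned by MediaCodec received, and what happens to it afterwards?

This part is simple: NuPlayerDecoder takes the decoded data from MediaCodec and hands it to the Renderer for AV sync; the Renderer then notifies NuPlayerDecoder with a message to render it.

One detail is not marked in the figure: after a seek, when the first frame comes out (on resume), notifyResumeCompleteIfNecessary is called to tell the upper layer that the first frame has been decoded.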
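The round trip between Decoder and Renderer can be sketched with a callback standing in for the reply message. Everything here is hypothetical (`FakeRenderer`, `FakeDecoder`, and the late-frame rule in `drain`); in AOSP, as far as I can tell, the reply is a `kWhatRenderBuffer` message and the Decoder then calls either `renderOutputBufferAndRelease()` or `releaseOutputBuffer()` on MediaCodec.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical Renderer: keeps each buffer with the reply to send back later.
struct FakeRenderer {
    struct Entry {
        int64_t ptsUs;
        std::function<void(bool)> reply;
    };
    std::vector<Entry> queue;

    void queueBuffer(int64_t ptsUs, std::function<void(bool)> reply) {
        queue.push_back({ptsUs, std::move(reply)});
    }

    // Simplified AV sync: frames later than maxLateUs at nowUs are not rendered.
    void drain(int64_t nowUs, int64_t maxLateUs) {
        for (auto &e : queue) {
            e.reply(nowUs - e.ptsUs <= maxLateUs);
        }
        queue.clear();
    }
};

// Hypothetical Decoder side: hands buffers over and acts on the reply.
struct FakeDecoder {
    std::vector<size_t> rendered, released;

    void handleAnOutputBuffer(size_t index, int64_t ptsUs, FakeRenderer &r) {
        r.queueBuffer(ptsUs, [this, index](bool render) { onRenderBuffer(index, render); });
    }

    // Models "render and release" versus "just release" for one output index.
    void onRenderBuffer(size_t index, bool render) {
        (render ? rendered : released).push_back(index);
    }
};
```

Keeping the buffer index inside the reply means the Decoder never has to search for which MediaCodec buffer a Renderer decision refers to.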

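4. What does the Decoder need to do for start, stop, pause, and seek?

a. start was covered in section 1: once NuPlayer's start is called, the MediaCodec object is created and the callback is registered, and that is all.

b. NuPlayer has no stop interface, but the MediaPlayer Java API does. Here is how NuPlayerDriver handles it: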

```cpp
status_t NuPlayerDriver::stop() {
    ALOGD("stop(%p)", this);
    Mutex::Autolock autoLock(mLock);

    switch (mState) {
        case STATE_RUNNING:
            mPlayer->pause();
            FALLTHROUGH_INTENDED;

        case STATE_PAUSED:
            mState = STATE_STOPPED;
            notifyListener_l(MEDIA_STOPPED);
            break;

        // ......

        default:
            return INVALID_OPERATION;
    }

    return OK;
}
```

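So stop essentially just calls pause: in STATE_RUNNING it calls mPlayer->pause(), then sets the state to STATE_STOPPED and notifies MEDIA_STOPPED.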
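The state handling in the excerpt can be reproduced as a tiny state machine. `DriverModel` is hypothetical, `[[fallthrough]]` plays the role of `FALLTHROUGH_INTENDED`, and the cases elided by `// ......` are folded into `default` here, so the real driver accepts more states than this sketch does.

```cpp
#include <cassert>
#include <string>
#include <vector>

enum class State { kPrepared, kRunning, kPaused, kStopped };

// Hypothetical model of the NuPlayerDriver::stop() switch.
struct DriverModel {
    State state = State::kRunning;
    std::vector<std::string> events;  // records what stop() triggered

    bool stop() {
        switch (state) {
            case State::kRunning:
                events.push_back("player->pause()");
                [[fallthrough]];  // same intent as FALLTHROUGH_INTENDED
            case State::kPaused:
                state = State::kStopped;
                events.push_back("notify MEDIA_STOPPED");
                return true;
            default:
                return false;  // models INVALID_OPERATION
        }
    }
};
```

The fallthrough is what makes "stop from running" equal to "pause, then do exactly what stop from paused does".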

c. pause

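The pause code is simple: it calls pause on the Source and the Renderer, and NuPlayerDecoder itself does nothing.

MediaCodec still sends input and output buffers up at this point, but they are not processed: fetchInputData cannot read any data and keeps waiting here until data can be read again.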

```cpp
void NuPlayer::onPause() {
    updatePlaybackTimer(true /* stopping */, "onPause");

    if (mPaused) {
        return;
    }
    mPaused = true;
    if (mSource != NULL) {
        mSource->pause();
    } else {
        ALOGW("pause called when source is gone or not set");
    }
    if (mRenderer != NULL) {
        mRenderer->pause();
    } else {
        ALOGW("pause called when renderer is gone or not set");
    }
}
```
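A toy model of that behavior: pausing is idempotent, only the Source and Renderer are touched, and a paused Source returns "would block". The last point is an assumption taken from the description above, not a guarantee about every real Source implementation; `FakeSource`, `FakeRenderer`, and `PlayerModel` are hypothetical.

```cpp
#include <cassert>
#include <deque>

enum Status { kOk, kWouldBlock };

// Hypothetical Source: assumed to report "would block" while paused.
struct FakeSource {
    bool started = true;
    std::deque<int> units{1, 2, 3};

    void pause() { started = false; }
    void resume() { started = true; }

    Status dequeueAccessUnit(int &out) {
        if (!started || units.empty()) {
            return kWouldBlock;
        }
        out = units.front();
        units.pop_front();
        return kOk;
    }
};

struct FakeRenderer {
    bool paused = false;
    void pause() { paused = true; }
};

// Like NuPlayer::onPause(): idempotent, and the decoder is not touched.
struct PlayerModel {
    FakeSource &source;
    FakeRenderer &renderer;
    bool paused = false;
    int pauseCalls = 0;  // how often the components were actually paused

    void onPause() {
        if (paused) {
            return;
        }
        paused = true;
        ++pauseCalls;
        source.pause();
        renderer.pause();
    }
};
```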

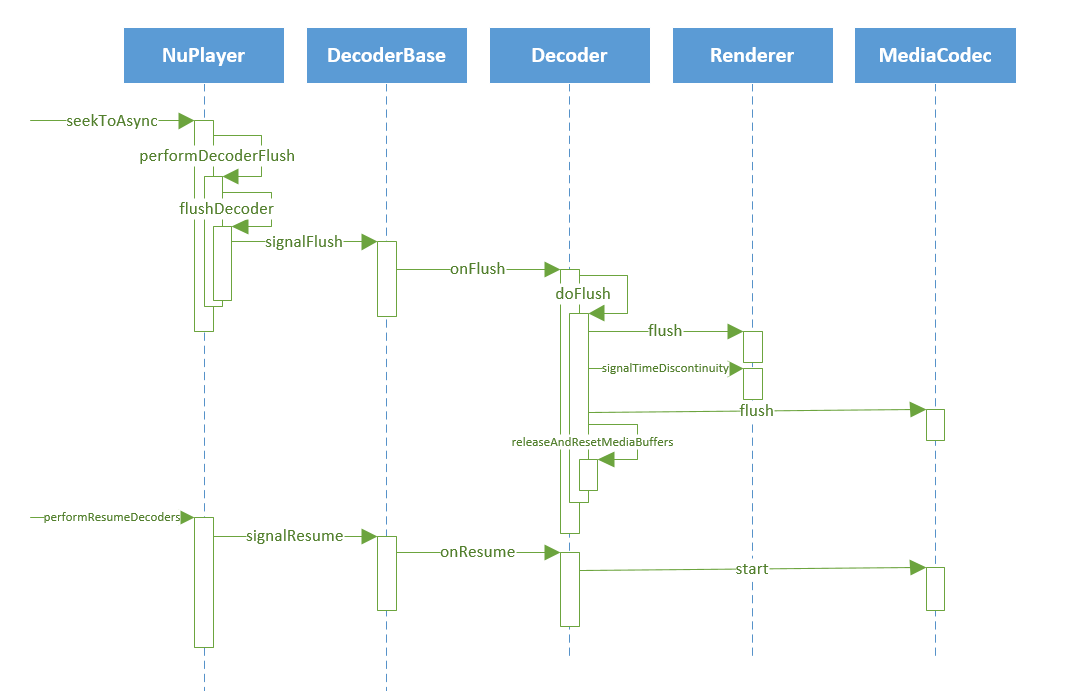

d. seek

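After seek is called, the Decoder performs four actions:

aa. Call the Renderer's flush.

bb. Call MediaCodec's flush (MediaCodec has no seek method).

cc. Release all buffers held by the Decoder and reset the related flags.

dd. Call MediaCodec's start again to resume operation.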
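The four steps can be written down as an ordered sequence. This is a hypothetical model (`SeekModel` and its fields), assuming, as in the AOSP sources I have read, that the codec call in step bb is `MediaCodec::flush()` and that the CSDs remembered earlier are queued again after the flush. Calls are recorded as strings rather than executed.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <vector>

// Hypothetical model of the Decoder side of a seek (steps aa to dd).
struct SeekModel {
    std::vector<std::string> log;
    std::vector<bool> inputBufferIsDequeued{true, false};  // mInputBufferIsDequeued
    std::deque<size_t> dequeuedInputBuffers{0};             // mDequeuedInputBuffers
    std::deque<size_t> pendingInputMessages{1};             // mPendingInputMessages
    std::vector<std::string> csdsForCurrentFormat{"csd-0", "csd-1"};
    std::vector<std::string> csdsToSubmit;
    bool paused = false;

    void onFlush() {
        log.push_back("renderer.flush");      // aa
        log.push_back("codec.flush");         // bb
        csdsToSubmit = csdsForCurrentFormat;  // CSDs are sent again after the flush
        // cc: forget everything the codec handed out before the flush
        inputBufferIsDequeued.assign(inputBufferIsDequeued.size(), false);
        dequeuedInputBuffers.clear();
        pendingInputMessages.clear();
        paused = true;
    }

    void onResume() {
        paused = false;
        log.push_back("codec.start");         // dd
    }
};
```

Restarting after the flush is needed because, in asynchronous mode, a flushed codec stops delivering buffers until start is called again.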

That more or less wraps up how NuPlayerDecoder works. Many details have not been studied here, but following this framework they should be fairly easy to read.