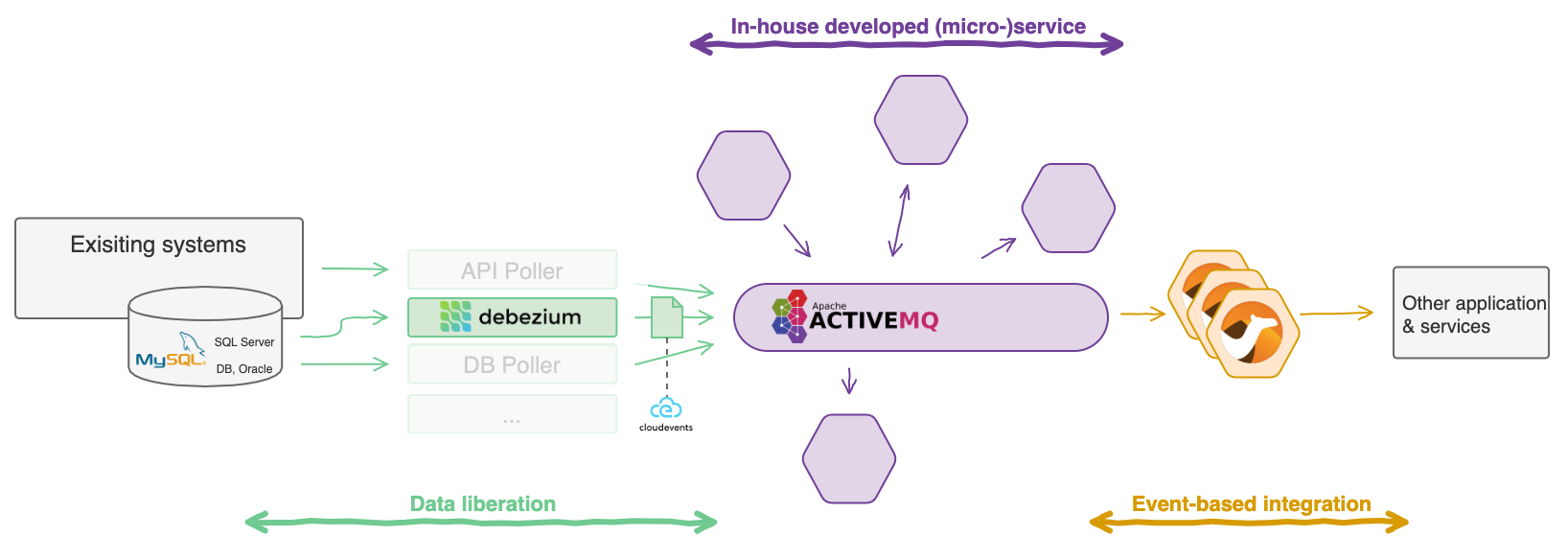

Data liberation pattern using the Debezium engine

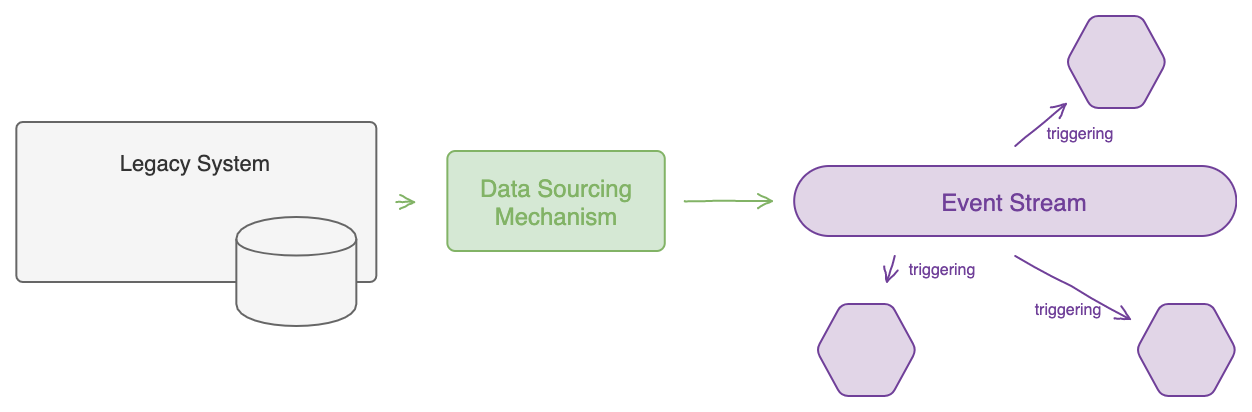

Data liberation tries to answer; “How do you get data out of your existing systems and use it in an event-driven way?”. Most enterprises have multiple applications that were not designed with event-driven architectures (EDA) in mind. Nevertheless, many of these companies are embracing an event-driven architecture to provide more real-time customer experiences and need to incorporate data from their existing business-critical systems.

There are different approaches to get this data out of the system in an event-driven way: scheduled querying the legacy databases, setting up Change Data Capture (CDC) mechanism on databases, refactoring existing systems to publish events from the application layer, etc. In all of these cases, the liberated events need to be made available on an event broker so other services can be triggered by them.

Debezium engine

Inthis post I’ll look at one way to implement this data liberation pattern: Implementing CDC using Debezium. It’s particularly interesting for systems in maintenance mode where refactoring is not desirable or simply not possible.

Debezium is an open-source CDC platform for common databases like MySQL, Postgress, SQL Server, DB2, … and is part of the Red Hat Integration suite. It converts the changes in your database to an event stream. Initially Debezium was built specifically for the Kafka platform. This by providing a Kafka connector that monitors specific databases.

The community is doing big efforts to make it a more universal tool for data liberation. For this they provide the stand-alone Debezium engine. Here you can run it in a self-managed application, and you can code the event handler yourself. This allows you to publish the CDC events to alternative broker solutions. They already provide a number of examples with brokers like AWS Kinesis, Apache Pulsar, and NATS. See the examples provided by the Debezium community.

Another important effort is the support for multiple output formats. From version 1.2.x (currently in beta), the embedded engine support additional formats besides the Kafka format: JSON, AVRO, and … drum roll … CloudEvents!!. This makes it even easier to integrate into event-driven architectures. More info on the formats.

Why is the addition of CloudEvents so exciting? Check out this talk of Doug Davis at the first online AsyncAPI Conference. However, a side note is in place here. The (Cloud)events emitted by the Debezium engine should (at least in most cases) still be transformed to hide database internal semantics and only emit the fields relevant to the outer context.

Our use case for CDC

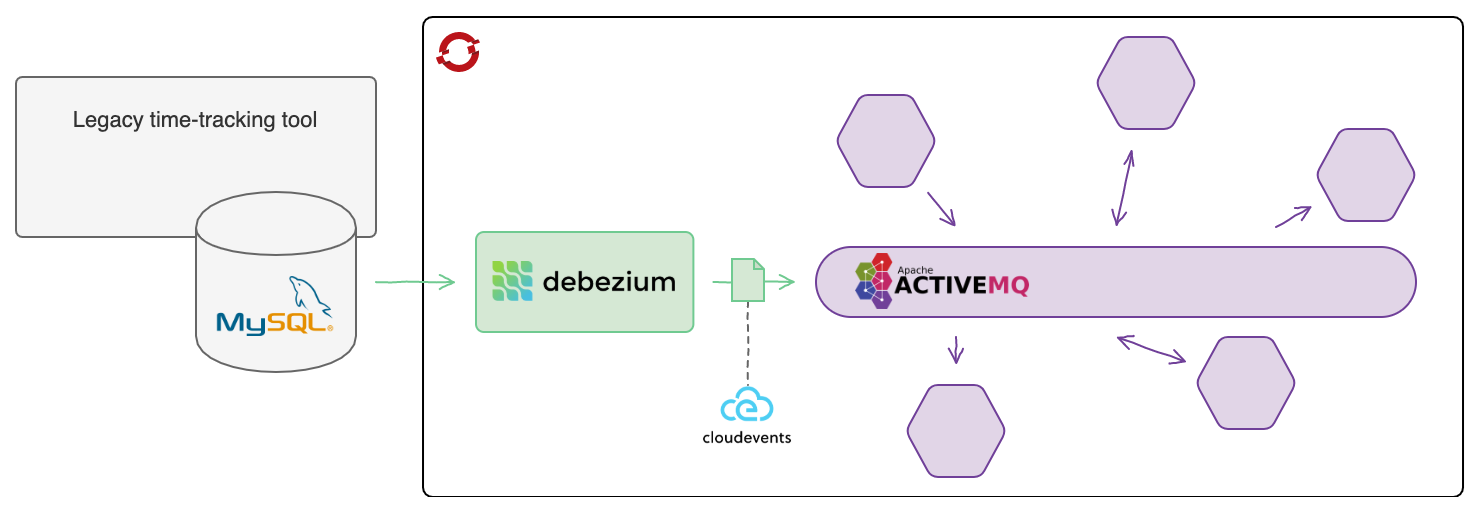

The reason I looked into it, was the need to incorporate a legacy time tracking tool into a microservices environment on OpenShift. The app only has a web UI to interact with it. No APIs, no webhooks, no messaging interfaces; and it’s a procured product with no active development. So, the automated interactions are limited to database integrations (or RPA but that is out of scope here).

The target microservices environment uses ApacheMQ Artemis as the main broker. However, a commit-log based messaging system like Kafka, Pulsar, or AWS Kinesis is in most cases a better fit for a CDC architecture (and for the data liberation pattern in general). But in this case the added value was not essential.

As a result, we decided to set up a custom Debezium Engine which connects to the MySQL database of the legacy app and publishes the change events to the ActiveMQ Artemis broker.

Some code

To illustrate the setup, I’ll use a Debezium provided image as you can’t access our environment. This image has some data and has the required configuration already applied.

You’ll find the complete PoC code and setup instructions in the following repository: https://github.com/Samuelvdc/debezium-artemis-poc.

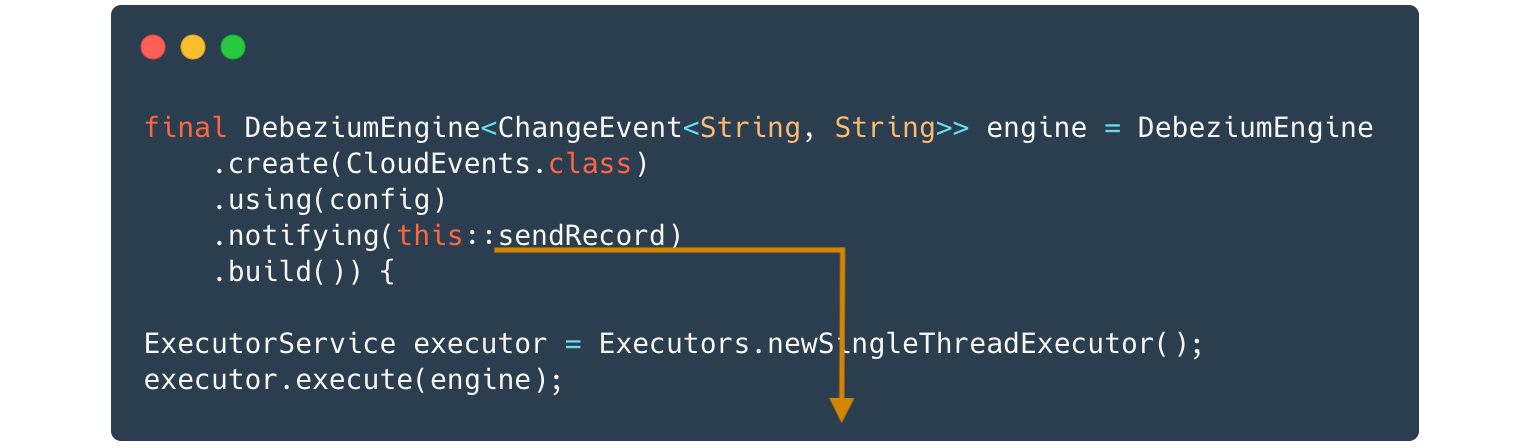

The code snippet below (from MyAMQDebeziumEngine.java) shows how to initiate a Debezium engine:

- You’ll define the message format: CloudEvents.

- You’ll provide the configuration to connect to the MySQL database. Then,

- You’ll provide a custom handler. This handler gives us the freedom to integrate with any system

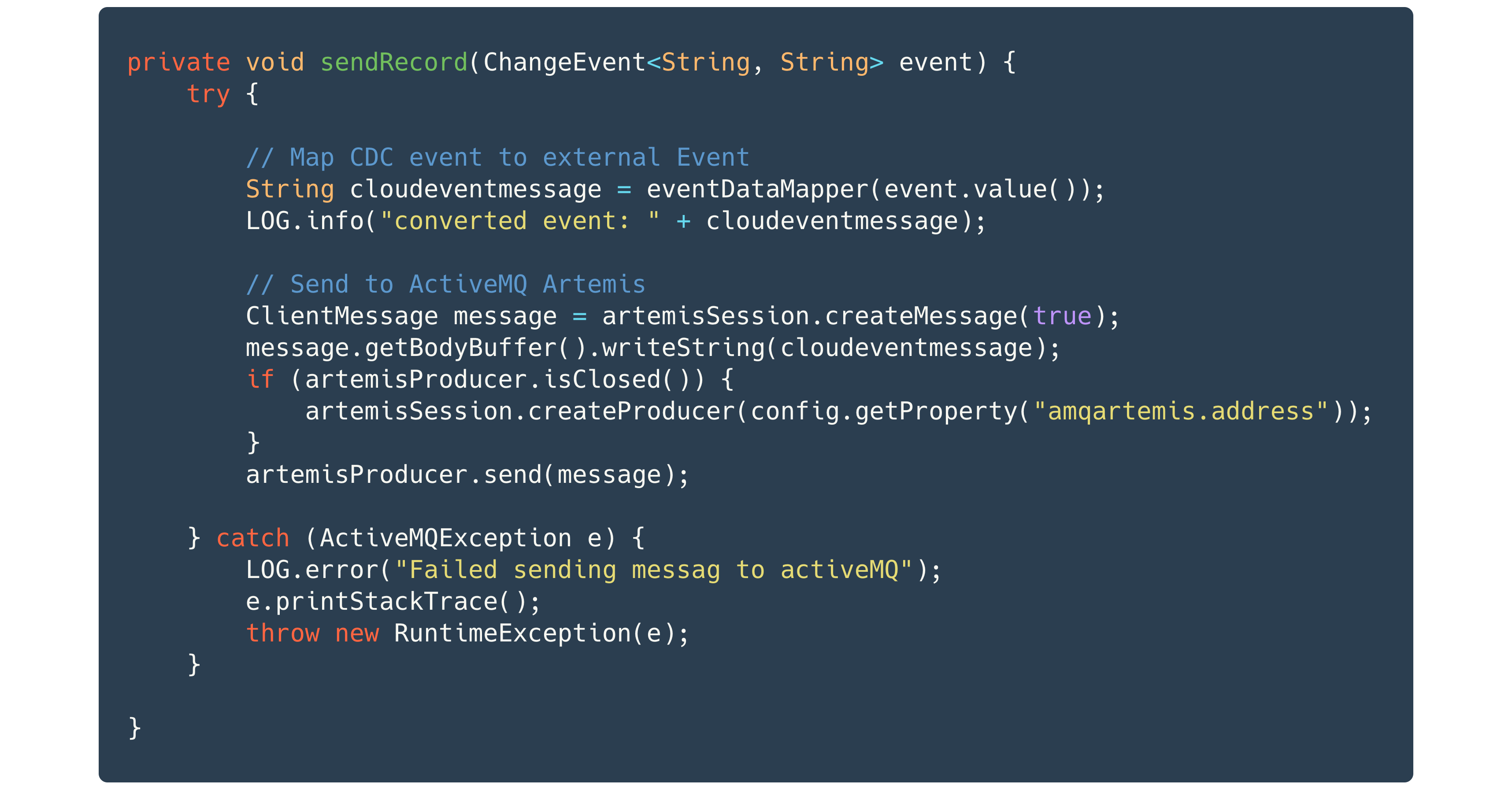

The custom handler which publishes the CDC events to ActiveMQ. Note that we do transform the CDC event before publishing.

More information on this and especially around error handling scenarios can be found at the official Debezium engine documentation.

Afterthoughts

The explained CDC implementation of a Data liberation pattern provides a relatively easy way to expose your data in an event-driven way. As it integrates through the database layer, the impact on the application(s) is very limited, which makes it a very interesting solution for legacy systems. Nonetheless, it is important to thoroughly analyze the semantics of the application layer of the existing system and align the emitted events to it.

An interesting tool for this transformation is Camel which is also part of the Red Hat Integration suite. The latest iteration of this framework provides significant improvements for the Cloud Native space. You may for instance use camel for both connecting to the database via their Debezium connector, transforming the CDC event, and routing it to ActiveMQ or other targets. All of this in a single Camel route. Some references for this setup: camel-example-debezium and debezium-examples-camel.

At this point the change events are available on our messaging broker. The in-house developed microservices can now subscribe and act on these events. But the events can also provide value for systems where we have limited control over the inbound interfaces. Here event-based integration patterns can be used to update these applications & services in an event-driven way based on the CDC event. A more in-depth study of these event-based integration patterns is for another blog.

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 记一次.NET内存居高不下排查解决与启示

· 探究高空视频全景AR技术的实现原理

· 理解Rust引用及其生命周期标识(上)

· 浏览器原生「磁吸」效果!Anchor Positioning 锚点定位神器解析

· 没有源码,如何修改代码逻辑?

· 全程不用写代码,我用AI程序员写了一个飞机大战

· DeepSeek 开源周回顾「GitHub 热点速览」

· 记一次.NET内存居高不下排查解决与启示

· MongoDB 8.0这个新功能碉堡了,比商业数据库还牛

· .NET10 - 预览版1新功能体验(一)

2019-05-29 使用terraform 生成自签名证书