OpenCvSharp Setup and Walkthrough: Using OpenCV in C#, with OpenCV's 7 Tracking Algorithms as an Example

This post breaks fairly new ground, because articles about using OpenCV from C# are hard to find.

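This article explains step by step how to set up OpenCvSharp (OpenCV for C#) and gives three demos: the CamShift and Tracker tracking algorithms implemented in .NET C#, and conversion between the OpenCV image class OpenCvSharp.Mat and the C# image class System.Drawing.Bitmap.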

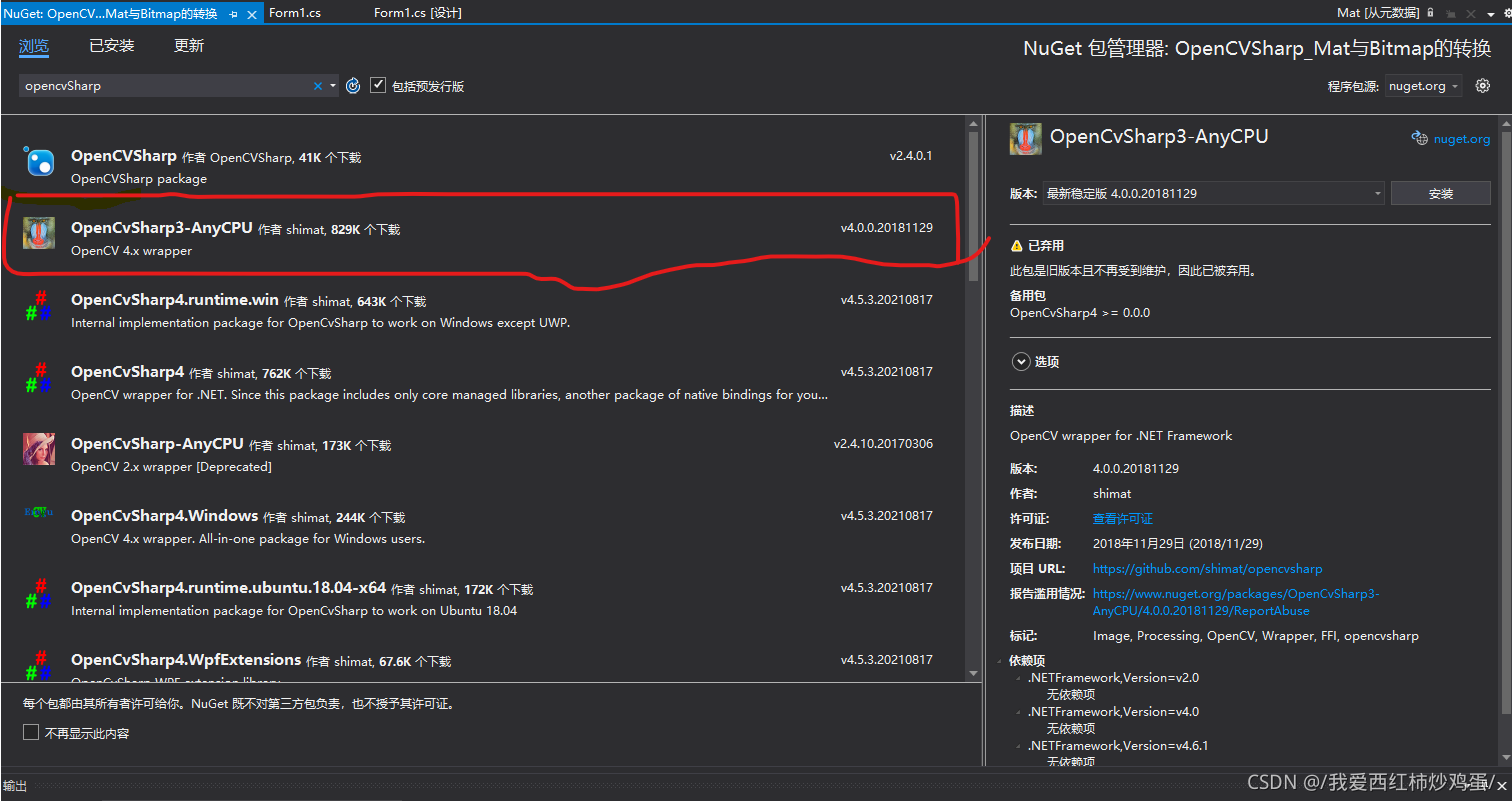

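Create any new console application, open the NuGet Package Manager, search for OpenCvSharp, and pick the package whose icon is an ape's face. I chose this one out of the many OpenCvSharp packages because I tested the others and could not get them to run.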

Note first that OpenCvSharp is a wrapper around the OpenCV C++ library that makes it usable from .NET.

The package is shown below:

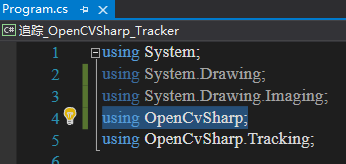

Then import the OpenCvSharp namespace:

```csharp
using OpenCvSharp;
```

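I'll go straight to the source code. I rewrote some OpenCV C++ programs in C# with OpenCvSharp; here are the two examples:

`追踪_OpenCVSharp_Tracker`: tracking with OpenCvSharp's Tracker classes (KCF);

`交互式追踪CSharp版`: interactive CamShift tracking, C# version.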

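Usage: in both programs you drag a rectangular ROI with the mouse in the OpenCV window. When the object moves, the tracked region follows it (a rectangle in the Tracker demo, an ellipse in the CamShift demo), and the Tracker demo also prints the coordinates of the box's top-left corner to the console in real time.

For the image-processing theory behind these OpenCV calls, please look it up yourself.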
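The ROI rectangle is built in the `OnMouse` callback of both programs: the drag can go in any direction, so the top-left corner is the minimum of the start point and the current point, and the result is clipped to the image with `&`. A dependency-free sketch of that arithmetic follows; `RoiSelection.RectFromDrag` is a hypothetical helper that returns a value tuple instead of an OpenCvSharp `Rect`.

```csharp
using System;

// Hypothetical helper mirroring the rectangle logic of the OnMouse callbacks:
// a drag from (originX, originY) to (x, y) becomes a normalized rectangle,
// then it is intersected with the image, like `selectedRect & new Rect(0, 0, cols, rows)`.
public static class RoiSelection
{
    public static (int X, int Y, int Width, int Height) RectFromDrag(
        int originX, int originY, int x, int y, int imageWidth, int imageHeight)
    {
        // The top-left corner is the minimum of both points, the size is the absolute difference
        int left = Math.Min(x, originX);
        int top = Math.Min(y, originY);
        int right = left + Math.Abs(x - originX);
        int bottom = top + Math.Abs(y - originY);

        // Intersect with the image area [0, imageWidth) x [0, imageHeight)
        left = Math.Max(left, 0);
        top = Math.Max(top, 0);
        right = Math.Min(right, imageWidth);
        bottom = Math.Min(bottom, imageHeight);

        // An empty intersection yields a zero-sized rectangle
        if (right <= left || bottom <= top)
            return (0, 0, 0, 0);
        return (left, top, right - left, bottom - top);
    }
}
```

For example, dragging from (100, 80) up and to the left to (40, 20) in a 640x480 frame gives the rectangle (40, 20, 60, 60).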

```csharp
// 追踪_OpenCVSharp_Tracker
using System;
using OpenCvSharp;
using OpenCvSharp.Tracking;

namespace 追踪_OpenCVSharp_Tracker
{
    class Program
    {
        private static Mat image = new Mat();
        private static OpenCvSharp.Point originPoint = new OpenCvSharp.Point();
        private static Rect2d selectedRect = new Rect2d();
        private static bool selectRegion = false;
        // 0: nothing selected, -1: new ROI selected (tracker must be initialised), 1: tracking
        private static int trackingFlag = 0;

        private static void OnMouse(MouseEvent Event, int x, int y, MouseEvent Flags, IntPtr ptr)
        {
            if (selectRegion)
            {
                // Normalise the dragged rectangle and clip it to the image
                selectedRect.X = Math.Min(x, originPoint.X);
                selectedRect.Y = Math.Min(y, originPoint.Y);
                selectedRect.Width = Math.Abs(x - originPoint.X);
                selectedRect.Height = Math.Abs(y - originPoint.Y);
                selectedRect = selectedRect & new Rect2d(0, 0, image.Cols, image.Rows);
            }
            switch (Event)
            {
                case MouseEvent.LButtonDown:
                    originPoint = new OpenCvSharp.Point(x, y);
                    selectedRect = new Rect2d(x, y, 0, 0);
                    selectRegion = true;
                    break;
                case MouseEvent.LButtonUp:
                    selectRegion = false;
                    if (selectedRect.Width > 0 && selectedRect.Height > 0) { trackingFlag = -1; }
                    break;
            }
        }

        static void Main(string[] args)
        {
            // Other trackers can be swapped in for KCF, e.g. TrackerMIL.Create(),
            // TrackerMedianFlow.Create(), TrackerMOSSE.Create(), TrackerTLD.Create()
            // (cv::TrackerCSRT in C++)
            TrackerKCF tracker = null;
            Rect2d trackedRect = new Rect2d();

            VideoCapture cap = new VideoCapture();
            cap.Open(0);
            if (!cap.IsOpened()) { return; }

            string windowName = "KCF Tracker";
            string windowName2 = "OriginFrame";
            Mat frame = new Mat();
            Mat outputMat = new Mat();
            Cv2.NamedWindow(windowName, 0);
            Cv2.NamedWindow(windowName2, 0);
            Cv2.SetMouseCallback(windowName, OnMouse, IntPtr.Zero);

            while (true)
            {
                cap.Read(frame);
                if (frame.Empty()) { break; }   // stop when no frame is delivered
                frame.CopyTo(image);

                if (trackingFlag < 0)
                {
                    // A new ROI was selected: create and initialise a tracker once
                    tracker?.Dispose();
                    tracker = TrackerKCF.Create();
                    trackedRect = selectedRect;
                    tracker.Init(frame, trackedRect);
                    trackingFlag = 1;
                }
                // Afterwards only Update is called; it returns false when the target is lost
                if (trackingFlag > 0 && tracker.Update(frame, ref trackedRect))
                {
                    frame.CopyTo(outputMat);
                    Rect rect = new Rect((int)trackedRect.X, (int)trackedRect.Y, (int)trackedRect.Width, (int)trackedRect.Height);
                    Console.WriteLine(rect.X + " " + rect.Y);   // top-left corner of the box
                    Cv2.Rectangle(outputMat, rect, new Scalar(255, 255, 0), 2);
                    Cv2.ImShow(windowName2, outputMat);
                }

                // Invert the region being dragged so the user can see the selection
                if (selectRegion && selectedRect.Width > 0 && selectedRect.Height > 0)
                {
                    using (Mat roi = new Mat(image, new Rect((int)selectedRect.X, (int)selectedRect.Y, (int)selectedRect.Width, (int)selectedRect.Height)))
                    {
                        Cv2.BitwiseNot(roi, roi);
                    }
                }
                Cv2.ImShow(windowName, image);
                if (Cv2.WaitKey(25) == 27) { break; }   // 27 = Esc
            }
        }
    }
}
```
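A tracker is meant to be initialised once per selected ROI and then only updated frame by frame. The sketch below models that protocol with a flag in the style of the demo's `trackingFlag` (0 nothing selected, -1 new ROI waiting for `Init`, 1 tracking). `IBoxTracker`, `TrackingSession` and `DummyTracker` are hypothetical stand-ins for OpenCvSharp's `Tracker` classes, `Mat` and `Rect2d`; they are not part of the library.

```csharp
using System;

// Hypothetical abstraction of a box tracker (OpenCvSharp's Tracker exposes Init/Update with Mat and Rect2d)
public interface IBoxTracker<TFrame, TBox>
{
    void Init(TFrame frame, TBox box);
    bool Update(TFrame frame, ref TBox box);
}

public sealed class TrackingSession<TFrame, TBox>
{
    private readonly Func<IBoxTracker<TFrame, TBox>> factory;
    private IBoxTracker<TFrame, TBox> tracker;
    private TBox box;

    public TrackingSession(Func<IBoxTracker<TFrame, TBox>> factory) { this.factory = factory; }

    // 0: nothing selected, -1: new ROI waiting for Init, 1: tracking
    public int TrackingFlag { get; private set; }

    // Called when the mouse button is released on a non-empty ROI
    public void OnSelectionFinished(TBox selected)
    {
        box = selected;
        TrackingFlag = -1;
    }

    // Called once per frame; returns true while the tracker reports the target as found
    public bool ProcessFrame(TFrame frame, out TBox current)
    {
        current = box;
        if (TrackingFlag == 0) return false;
        if (TrackingFlag < 0)
        {
            tracker = factory();          // a fresh tracker for every new selection
            tracker.Init(frame, box);     // Init exactly once per selection
            TrackingFlag = 1;
        }
        bool found = tracker.Update(frame, ref box);
        current = box;
        return found;
    }
}

// Dummy tracker used only to exercise the session: counts Init calls, moves the box by +1 per Update
public sealed class DummyTracker : IBoxTracker<int, int>
{
    public static int InitCalls;
    public void Init(int frame, int box) { InitCalls++; }
    public bool Update(int frame, ref int box) { box += 1; return true; }
}
```

Creating a new tracker on every new selection avoids re-initialising an object that already holds a model of the previous target.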

```csharp
// Camshift交互式追踪CSharp版
using System;
using OpenCvSharp;

namespace 交互式追踪CSharp版
{
    class Program
    {
        private static Mat image = new Mat();
        private static Point originPoint = new Point();
        private static Rect selectedRect = new Rect();
        private static bool selectRegion = false;
        // 0: nothing selected, -1: new ROI selected (histogram must be computed), 1: tracking
        private static int trackingFlag = 0;

        private static void OnMouse(MouseEvent Event, int x, int y, MouseEvent Flags, IntPtr ptr)
        {
            if (selectRegion)
            {
                // Normalise the dragged rectangle and clip it to the image
                selectedRect.X = Math.Min(x, originPoint.X);
                selectedRect.Y = Math.Min(y, originPoint.Y);
                selectedRect.Width = Math.Abs(x - originPoint.X);
                selectedRect.Height = Math.Abs(y - originPoint.Y);
                selectedRect = selectedRect & new Rect(0, 0, image.Cols, image.Rows);
            }
            switch (Event)
            {
                case MouseEvent.LButtonDown:
                    originPoint = new Point(x, y);
                    selectedRect = new Rect(x, y, 0, 0);
                    selectRegion = true;
                    break;
                case MouseEvent.LButtonUp:
                    selectRegion = false;
                    if (selectedRect.Width > 0 && selectedRect.Height > 0) { trackingFlag = -1; }
                    break;
            }
        }

        static void Main(string[] args)
        {
            VideoCapture cap = new VideoCapture();
            cap.Open(0);
            if (!cap.IsOpened()) { return; }

            Rect trackingRect = new Rect();
            // Range of values for the 'H' (hue) channel of OpenCV's 8-bit HSV
            Rangef[] histRanges = { new Rangef(0.0f, 180.0f) };
            // Min value for the 'S' (saturation) channel
            int minSaturation = 40;
            // Min and max values for the 'V' (value) channel
            int minValue = 20, maxValue = 245;
            // Number of histogram bins
            int[] histSize = { 8 };

            string windowName = "CAMShift Tracker";
            Cv2.NamedWindow(windowName, 0);
            Cv2.SetMouseCallback(windowName, OnMouse, IntPtr.Zero);

            Mat frame = new Mat(), hsvImage = new Mat(), hueImage = new Mat();
            Mat mask = new Mat(), hist = new Mat(), backproj = new Mat();
            // Image size scaling factor for the input frames from the webcam
            double scalingFactor = 1;

            // Iterate until the user presses the Esc key
            while (true)
            {
                cap.Read(frame);
                if (frame.Empty()) break;
                Cv2.Resize(frame, frame, new Size(), scalingFactor, scalingFactor, InterpolationFlags.Area);
                frame.CopyTo(image);
                Cv2.CvtColor(image, hsvImage, ColorConversionCodes.BGR2HSV);

                if (trackingFlag != 0)
                {
                    // InRange tests whether every HSV pixel lies in [lowerb, upperb] (both ends inclusive)
                    // and writes 255 into 'mask' where it does, 0 elsewhere. The result is a binary image
                    // that keeps only pixels saturated and bright enough for their hue to be reliable.
                    Cv2.InRange(hsvImage, new Scalar(0, minSaturation, minValue), new Scalar(180, 256, maxValue), mask);

                    // Keep only the hue channel. The C++ original used mixChannels with fromTo = {0, 0}:
                    // copy channel 0 of the source into channel 0 of the destination.
                    hueImage = hsvImage.ExtractChannel(0);

                    if (trackingFlag < 0)
                    {
                        // Hue histogram of the selected region, ignoring masked-out pixels, scaled to [0, 255]
                        using (Mat roi = new Mat(hueImage, selectedRect))
                        using (Mat maskroi = new Mat(mask, selectedRect))
                        {
                            Cv2.CalcHist(new[] { roi }, new[] { 0 }, maskroi, hist, 1, histSize, histRanges);
                        }
                        Cv2.Normalize(hist, hist, 0, 255, NormTypes.MinMax);
                        trackingRect = selectedRect;
                        trackingFlag = 1;
                    }

                    // Histogram back projection: each pixel gets the histogram value of its hue bin
                    Cv2.CalcBackProject(new[] { hueImage }, new[] { 0 }, hist, backproj, histRanges);
                    backproj &= mask;
                    RotatedRect rotatedTrackingRect = Cv2.CamShift(backproj, ref trackingRect,
                        new TermCriteria(CriteriaType.Eps | CriteriaType.MaxIter, 10, 1));

                    // If the window collapsed, enlarge it around its last position and clip it to the image.
                    // Rect takes (x, y, width, height), so width and height are 2 * offset.
                    if (trackingRect.Width * trackingRect.Height <= 1)
                    {
                        int cols = backproj.Cols, rows = backproj.Rows;
                        int offset = Math.Min(rows, cols) + 1;
                        trackingRect = new Rect(trackingRect.X - offset, trackingRect.Y - offset, 2 * offset, 2 * offset)
                            & new Rect(0, 0, cols, rows);
                    }
                    // Draw the ellipse on top of the image
                    Cv2.Ellipse(image, rotatedTrackingRect, new Scalar(0, 255, 0), 3, LineTypes.Link8);
                }

                // Apply the 'negative' effect on the region being selected
                if (selectRegion && selectedRect.Width > 0 && selectedRect.Height > 0)
                {
                    using (Mat roi = new Mat(image, selectedRect)) { Cv2.BitwiseNot(roi, roi); }
                }
                Cv2.ImShow(windowName, image);
                // 27 is the ASCII code of the Esc key
                if (Cv2.WaitKey(25) == 27) break;
            }
        }
    }
}
```
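To make the CamShift preprocessing concrete, here is a dependency-free sketch of what `Cv2.InRange`, `Cv2.CalcHist` followed by `Cv2.Normalize(..., NormTypes.MinMax)`, `Cv2.CalcBackProject` and `backproj &= mask` compute for a hue image, using plain arrays. `HueBackProjection` and its methods are hypothetical; they follow OpenCV's documented behaviour (inclusive bounds, uniform bins over [0, 180)) in simplified form and are not OpenCvSharp's implementation.

```csharp
using System;
using System.Linq;

// Hypothetical sketch; pixels come as parallel arrays h, s, v in OpenCV's 8-bit HSV
// (H in [0, 180), S and V in [0, 255]).
public static class HueBackProjection
{
    // InRange: 255 where every channel lies inside [lower, upper] (inclusive), else 0.
    // The hue bounds [0, 180] and the saturation upper bound 256 always hold for 8-bit data.
    public static byte[] Mask(byte[] h, byte[] s, byte[] v, int minS, int minV, int maxV)
    {
        var mask = new byte[h.Length];
        for (int i = 0; i < h.Length; i++)
        {
            bool inside = s[i] >= minS && v[i] >= minV && v[i] <= maxV;
            mask[i] = inside ? (byte)255 : (byte)0;
        }
        return mask;
    }

    // CalcHist with `bins` uniform bins over [0, 180), counting only pixels where mask != 0,
    // then min-max normalization to [0, 255] (a flat histogram becomes all zeros)
    public static double[] HueHistogram(byte[] h, byte[] mask, int bins)
    {
        var hist = new double[bins];
        for (int i = 0; i < h.Length; i++)
            if (mask[i] != 0 && h[i] < 180)
                hist[h[i] * bins / 180]++;
        double min = hist.Min(), max = hist.Max();
        double scale = max - min > 0 ? 255.0 / (max - min) : 0.0;
        for (int b = 0; b < bins; b++)
            hist[b] = (hist[b] - min) * scale;
        return hist;
    }

    // CalcBackProject followed by `&= mask`: each unmasked pixel gets the value of its hue bin
    public static byte[] BackProject(byte[] h, byte[] mask, double[] hist)
    {
        int bins = hist.Length;
        var result = new byte[h.Length];
        for (int i = 0; i < h.Length; i++)
        {
            if (mask[i] == 0 || h[i] >= 180) continue;   // masked-out pixels stay 0
            result[i] = (byte)Math.Round(hist[h[i] * bins / 180]);
        }
        return result;
    }
}
```

The back-projection is a per-pixel "how much does this hue look like the selected object" map, which is the probability image that `Cv2.CamShift` searches.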

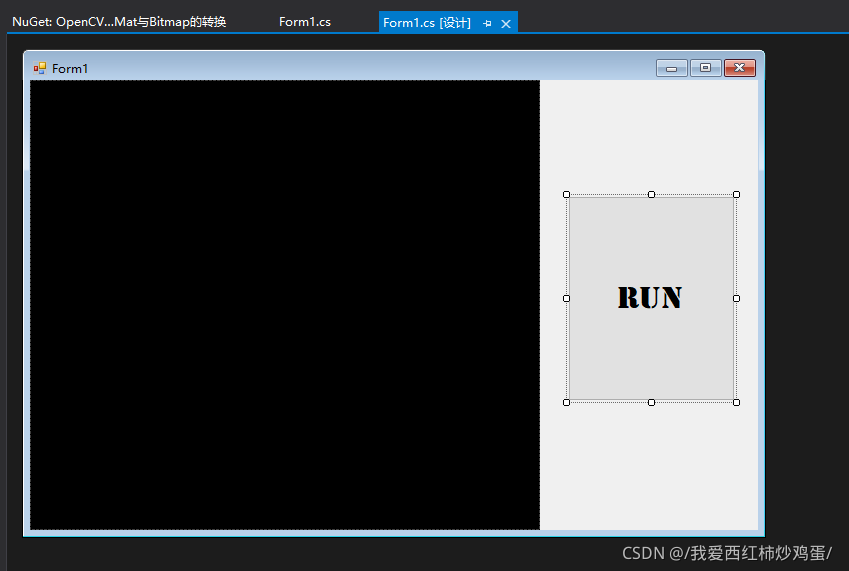
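`Cv2.CamShift` is built on mean shift: the search window is repeatedly moved to the centroid of the back-projection values inside it, until it moves by less than `eps` pixels or `maxIter` iterations have run, which is what `TermCriteria(CriteriaType.Eps | CriteriaType.MaxIter, 10, 1)` expresses. CamShift additionally adapts the window size and estimates an orientation, which is why the demo gets a `RotatedRect` back. The hypothetical `MeanShiftSketch` below covers only the mean-shift part; OpenCV's exact centring and rounding may differ.

```csharp
using System;

// Simplified mean-shift iteration on a back-projection image (row-major, width * height).
// Precondition: the initial window (X, Y, W, H) lies inside the image.
// Unlike Cv2.CamShift it keeps the window size fixed and computes no orientation.
public static class MeanShiftSketch
{
    public static (int X, int Y, int W, int H) Run(byte[] prob, int width, int height,
        (int X, int Y, int W, int H) window, int maxIter = 10, double eps = 1.0)
    {
        for (int iter = 0; iter < maxIter; iter++)
        {
            // Zeroth and first moments of the probability mass inside the window
            double m00 = 0, m10 = 0, m01 = 0;
            for (int y = window.Y; y < window.Y + window.H; y++)
                for (int x = window.X; x < window.X + window.W; x++)
                {
                    double p = prob[y * width + x];
                    m00 += p; m10 += p * x; m01 += p * y;
                }
            if (m00 == 0) break;   // no mass inside the window: nothing to follow

            // Recentre the window on the centroid, keeping it inside the image
            double cx = m10 / m00, cy = m01 / m00;
            int nx = (int)Math.Round(cx - (window.W - 1) / 2.0);
            int ny = (int)Math.Round(cy - (window.H - 1) / 2.0);
            nx = Math.Max(0, Math.Min(nx, width - window.W));
            ny = Math.Max(0, Math.Min(ny, height - window.H));

            int dx = nx - window.X, dy = ny - window.Y;
            window = (nx, ny, window.W, window.H);
            if (dx * dx + dy * dy < eps * eps) break;   // moved less than eps: converged
        }
        return window;
    }
}
```

For a 2x2 blob at pixels (6..7, 6..7) of a 10x10 image, a 4x4 window starting at (3, 3) drifts onto the blob in three iterations and settles at (5, 5).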

In addition, there is a .NET Framework WinForms demo that shows the live camera feed in a form and converts between the OpenCvSharp.Mat and System.Drawing.Bitmap classes.

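The demo's form contains a PictureBox (`pictureBox1`), a button (`btnRun`) and a Timer (`timer1`), as referenced in the code.

The demo code: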

```csharp
using System;
using System.Drawing;
using System.Drawing.Imaging;
using System.Runtime.InteropServices;
using System.Windows.Forms;
using OpenCvSharp;

namespace OpenCVSharp_Mat与Bitmap的转换
{
    public partial class Form1 : Form
    {
        VideoCapture cap;
        Mat frame = new Mat();
        Mat dstMat = new Mat();
        Bitmap bmp;

        public Form1()
        {
            InitializeComponent();
        }

        // Mat (8UC3, BGR) -> Bitmap. Rows are copied one by one because Bitmap rows are padded to a
        // multiple of 4 bytes while Mat rows are Step() bytes long; copying also keeps the Bitmap
        // valid after 'frame' is overwritten by the next Read().
        public static Bitmap MatToBitmap(Mat src)
        {
            var bmp = new Bitmap(src.Cols, src.Rows, PixelFormat.Format24bppRgb);
            BitmapData data = bmp.LockBits(new Rectangle(0, 0, bmp.Width, bmp.Height), ImageLockMode.WriteOnly, PixelFormat.Format24bppRgb);
            try
            {
                int rowBytes = src.Cols * 3;
                byte[] row = new byte[rowBytes];
                for (int y = 0; y < src.Rows; y++)
                {
                    Marshal.Copy(src.Data + (int)(y * src.Step()), row, 0, rowBytes);
                    Marshal.Copy(row, 0, data.Scan0 + y * data.Stride, rowBytes);
                }
            }
            finally { bmp.UnlockBits(data); }
            return bmp;
        }

        // Bitmap -> Mat (8UC3). Locking with Format24bppRgb makes GDI+ deliver BGR bytes whatever the
        // source pixel format; copying row by row skips the padding at the end of each Bitmap row.
        public static Mat BitmapToMat(Bitmap srcbit)
        {
            var mat = new Mat(srcbit.Height, srcbit.Width, MatType.CV_8UC3);
            BitmapData data = srcbit.LockBits(new Rectangle(0, 0, srcbit.Width, srcbit.Height), ImageLockMode.ReadOnly, PixelFormat.Format24bppRgb);
            try
            {
                int rowBytes = srcbit.Width * 3;
                byte[] row = new byte[rowBytes];
                for (int y = 0; y < srcbit.Height; y++)
                {
                    Marshal.Copy(data.Scan0 + y * data.Stride, row, 0, rowBytes);
                    Marshal.Copy(row, 0, mat.Data + (int)(y * mat.Step()), rowBytes);
                }
            }
            finally { srcbit.UnlockBits(data); }
            return mat;
        }

        private void btnRun_Click(object sender, EventArgs e)
        {
            timer1.Enabled = true;
        }

        private void timer1_Tick(object sender, EventArgs e)
        {
            if (cap == null || !cap.IsOpened()) return;
            cap.Read(frame);
            if (frame.Empty()) return;

            Bitmap old = bmp;
            bmp = MatToBitmap(frame);
            pictureBox1.Image = bmp;
            old?.Dispose();               // release the previous frame's bitmap

            dstMat.Dispose();
            dstMat = BitmapToMat(bmp);    // convert back to check the round trip
            Cv2.ImShow("dstMat", dstMat);
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            try
            {
                cap = new VideoCapture();
                cap.Open(0);
            }
            catch (Exception ex)
            {
                MessageBox.Show(ex.Message);
            }
        }

        private void Form1_FormClosed(object sender, FormClosedEventArgs e)
        {
            timer1.Enabled = false;
            if (cap != null && cap.IsOpened()) { cap.Dispose(); }
        }
    }
}
```
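Any Mat to Bitmap conversion has to respect row padding: a 24bpp GDI+ Bitmap rounds each row up to a multiple of 4 bytes (its stride), while a continuous 8UC3 Mat stores exactly width * 3 bytes per row. Copying width * height * 3 contiguous bytes is therefore only correct when the width happens to make both equal (640 pixels, for instance). The hypothetical `StrideCopy` helper below shows the stride formula and the row-by-row copy on plain byte arrays.

```csharp
using System;

// Dependency-free sketch of copying pixel rows between buffers whose row lengths (strides) differ.
public static class StrideCopy
{
    // GDI+ row size in bytes: bits per row rounded up to a 32-bit boundary
    public static int GdiStride(int width, int bitsPerPixel) => ((width * bitsPerPixel + 31) / 32) * 4;

    // Copies `rows` rows of `rowBytes` meaningful bytes; padding bytes in dst are left untouched
    public static void CopyRows(byte[] src, int srcStride, byte[] dst, int dstStride, int rowBytes, int rows)
    {
        for (int y = 0; y < rows; y++)
            Buffer.BlockCopy(src, y * srcStride, dst, y * dstStride, rowBytes);
    }
}
```

For a 5-pixel-wide 24bpp image, each row holds 15 bytes of pixels but the Bitmap stride is 16, so a flat copy would shift every following row by one byte. Depending on the package version, OpenCvSharp also ships `OpenCvSharp.Extensions.BitmapConverter` (`ToBitmap` / `ToMat`), which may be preferable to hand-written conversion.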

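The code uses a number of digital image processing functions; if some are unfamiliar, consult a textbook or look them up online. Step through the programs in a debugger and you will see why they are written this way. The theory would take far too long to cover in one evening, so please look it up yourself.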

If you have read this far, you must really like this post and think well of its author.

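I'm not that old yet, but I have already been through life's ups and downs:

At 18, I was reassigned to Biomaterials when choosing my major, and could not study Control Engineering and Automation, Computer Science, or Microelectronics.

At 23, while working, I tried to switch fields and failed the graduate entrance exam in Computer Science.

Along the way I ran into one wall and one setback after another.

But I have always believed that as long as I keep my reverence for knowledge and keep pushing myself,

even if fate gave me the worst possible opening chapter, so what?

Perhaps refusing to accept what fate has arranged, and changing our own destiny, is what people like us have always pursued.

So please follow, like, and bookmark.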

The image-class conversion part of this article is based on the following post:

https://blog.csdn.net/qq_34455723/article/details/90053593