Installing Hadoop on a Mac

Software version

Hadoop 3.2.1

I. Enable local SSH login

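# Generate a key pair in the default location
$ ssh-keygen -t rsa -C "robots_wang@163.com" -b 4096
# Print the public key
$ cat ~/.ssh/id_rsa.pub
# Append the public key to this machine's list of trusted keys
$ cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys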

Test: ssh localhost
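If `ssh localhost` still asks for a password, two common causes are permissions on `~/.ssh` that are too open and Remote Login being turned off in the macOS Sharing settings. The following is a minimal sketch for the first case, not part of the original steps; the helper name `secure_ssh_dir` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: tighten permissions on an SSH directory so that
# sshd is willing to read the authorized_keys file inside it.
secure_ssh_dir() {
    dir="${1:-$HOME/.ssh}"
    mkdir -p "$dir"
    touch "$dir/authorized_keys"
    chmod 700 "$dir"                    # directory: owner only
    chmod 600 "$dir/authorized_keys"    # key list: owner read/write only
}

# Usage (run manually):
#   secure_ssh_dir
#   # BatchMode makes ssh fail instead of prompting for a password
#   ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true && echo "passwordless SSH OK"
```

The start scripts in section III log in to localhost over SSH, so it is worth confirming this works before continuing.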

II. Install Hadoop

[1] Download location:

https://archive.apache.org/dist/hadoop/common/

Extract: tar -zxvf hadoop-3.2.1.tar.gz -C /Users/mac/SoftWare

[2] Configure environment variables

vim ~/.bash_profile

export JAVA_HOME="/Library/Java/JavaVirtualMachines/jdk1.8.0_201.jdk/Contents/Home"

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH="${JAVA_HOME}/bin:${PATH}"

export HADOOP_HOME=/Users/mac/SoftWare/hadoop-3.2.1

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_OPTS="-Djava.library.path=${HADOOP_HOME}/lib/native"

export PATH=${HADOOP_HOME}/bin:${HADOOP_HOME}/sbin:$PATH

source ~/.bash_profile
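After reloading the profile, it helps to confirm that the commands actually resolve on PATH. This is a small sketch under the assumption that the variables above were exported as shown; `check_on_path` is a hypothetical helper, not a Hadoop tool.

```shell
#!/bin/sh
# Hypothetical helper: report whether each named command resolves on PATH.
# Returns non-zero if any of them is missing.
check_on_path() {
    status=0
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "found:   $cmd -> $(command -v "$cmd")"
        else
            echo "missing: $cmd"
            status=1
        fi
    done
    return "$status"
}

# Usage after `source ~/.bash_profile`:
#   check_on_path java hadoop hdfs start-dfs.sh && hadoop version
```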

[3] Edit the configuration files (cd /Users/mac/SoftWare/hadoop-3.2.1/etc/hadoop)

hadoop-env.sh

Set JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_201.jdk/Contents/Home

core-site.xml

Add:

<configuration>

<property>

<name>hadoop.tmp.dir</name>

<value>file:/Users/mac/SoftWare/Cache/hadoop/tmp</value>

</property>

<property>

<name>fs.defaultFS</name>

<value>hdfs://localhost:9000</value>

</property>

<property>

<name>hadoop.native.lib</name>

<value>false</value>

<description>Should native hadoop libraries, if present, be used.</description>

</property>

</configuration>

hdfs-site.xml

Add:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/Users/mac/SoftWare/Cache/hadoop/tmp/dfs/name</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/Users/mac/SoftWare/Cache/hadoop/tmp/dfs/data</value>

</property>

</configuration>
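A quick way to confirm that Hadoop picks up core-site.xml and hdfs-site.xml is to ask it for the effective values with `hdfs getconf -confKey`. The sketch below wraps that call; `show_conf` is a hypothetical helper name, and it assumes `hdfs` is already on PATH.

```shell
#!/bin/sh
# Hypothetical helper: print the effective value of each configuration key
# as resolved by Hadoop from the files in etc/hadoop.
show_conf() {
    for key in "$@"; do
        printf '%s = %s\n' "$key" "$(hdfs getconf -confKey "$key")"
    done
}

# Usage:
#   show_conf fs.defaultFS dfs.replication dfs.namenode.name.dir
```

If the files are being read, fs.defaultFS should come back as hdfs://localhost:9000 and dfs.replication as 1; a different value suggests Hadoop is reading a different configuration directory.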

mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property>

<name>mapreduce.application.classpath</name>

<value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>

</property>

<!-- Job history log settings (used by Spark) -->

<property>

<name>mapreduce.jobhistory.address</name>

<value>localhost:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>localhost:19888</value>

</property>

<!-- HDFS path for the logs of jobs that are still running -->

<property>

<name>mapreduce.jobhistory.intermediate-done-dir</name>

<value>/history/done_intermediate</value>

</property>

<!-- HDFS path for the logs of completed jobs -->

<property>

<name>mapreduce.jobhistory.done-dir</name>

<value>/history/done</value>

</property>

</configuration>

yarn-site.xml

<configuration>

<!-- Site specific YARN configuration properties -->

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.env-whitelist</name>

<value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>

</property>

<!-- Log settings -->

<property>

<description>Whether to enable log aggregation</description>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

</configuration>

Special configuration for macOS, needed because of how /bin/java is set up:

Edit

$HADOOP_HOME/libexec/hadoop-config.sh

Add JAVA_HOME below the following comment:

# do all the OS-specific startup bits here

# this allows us to get a decent JAVA_HOME,

# call crle for LD_LIBRARY_PATH, etc.

export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_311.jdk/Contents/Home

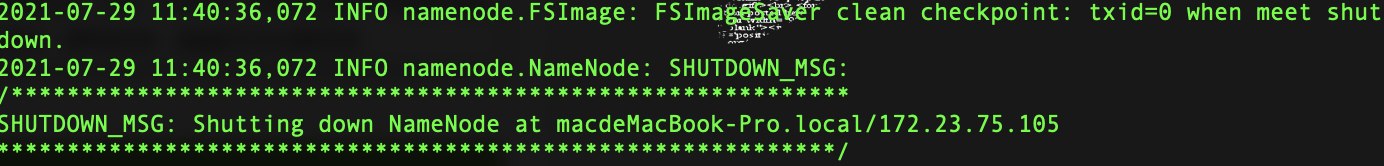

III. Start Hadoop

[1] Initialize Hadoop (first time only)

cd /Users/mac/SoftWare/hadoop-3.2.1/bin

hdfs namenode -format
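Formatting a second time wipes the NameNode metadata and leaves any existing DataNode directory with a mismatched cluster ID, so it can be useful to guard the command. A formatted NameNode directory contains `current/VERSION`; the sketch below checks for that file. The helper name `needs_format` is hypothetical, and the path in the usage comment is the `dfs.namenode.name.dir` value from hdfs-site.xml above.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if the NameNode directory has not been
# formatted yet (a formatted directory contains current/VERSION).
needs_format() {
    name_dir="$1"
    [ ! -f "$name_dir/current/VERSION" ]
}

# Usage:
#   NAME_DIR=/Users/mac/SoftWare/Cache/hadoop/tmp/dfs/name
#   if needs_format "$NAME_DIR"; then
#       hdfs namenode -format
#   else
#       echo "NameNode already formatted, skipping"
#   fi
```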

[2] Start HDFS

cd /Users/mac/SoftWare/hadoop-3.2.1/sbin

./start-dfs.sh

Web UI: http://localhost:9870

HDFS file browser: http://localhost:9870/explorer.html#

Starting namenodes on [localhost]

Starting datanodes

Starting secondary namenodes [account.jetbrains.com]

account.jetbrains.com: Warning: Permanently added 'account.jetbrains.com,0.0.0.0' (ECDSA) to the list of known hosts.

2021-07-29 11:42:41,550 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
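The startup messages alone do not show whether the daemons stayed up. The JDK's `jps` tool lists running Java processes by class name, and the sketch below compares its output against the daemons expected after start-dfs.sh. `missing_daemons` is a hypothetical helper; it reads `jps` output on stdin and prints every expected name that is absent.

```shell
#!/bin/sh
# Hypothetical helper: read `jps` output ("<pid> <name>" per line) on stdin
# and print each expected daemon name that does not appear in it.
missing_daemons() {
    running="$(cat)"
    for daemon in "$@"; do
        # Compare the second field exactly, so NameNode does not match
        # SecondaryNameNode.
        echo "$running" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }' ||
            echo "$daemon"
    done
}

# Usage:
#   jps | missing_daemons NameNode DataNode SecondaryNameNode
```

Empty output means all three are running. If one is missing, its log file under $HADOOP_HOME/logs is usually the next place to look.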

[3] Start YARN

./start-yarn.sh

ResourceManager web UI: http://localhost:8088

[4] Starting and stopping the JobHistory server

Start: run in the hadoop/sbin/ directory

./mr-jobhistory-daemon.sh start historyserver

Stop: run in the hadoop/sbin/ directory

./mr-jobhistory-daemon.sh stop historyserver
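In Hadoop 3.x, mr-jobhistory-daemon.sh is a deprecated wrapper, and the same operations are available directly as `mapred --daemon start|stop|status historyserver`. The sketch below wraps that form; the function name `historyserver` is hypothetical, and it assumes `mapred` from $HADOOP_HOME/bin is on PATH.

```shell
#!/bin/sh
# Hypothetical wrapper around the Hadoop 3 `mapred --daemon` command.
historyserver() {
    case "$1" in
        start|stop|status)
            mapred --daemon "$1" historyserver
            ;;
        *)
            echo "usage: historyserver start|stop|status" >&2
            return 2
            ;;
    esac
}

# Usage:
#   historyserver start
#   historyserver status
```

Once started, the server's web UI is at the `mapreduce.jobhistory.webapp.address` configured in mapred-site.xml, localhost:19888.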

[5] Submit a Spark job

spark-submit --master yarn --deploy-mode cluster --class org.apache.spark.examples.SparkPi --name SparkPi --num-executors 1 --executor-memory 1g --executor-cores 1 /Users/Robots2/softWare/spark-3.1.3/examples/jars/spark-examples_2.12-3.1.3.jar 100
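With `--master yarn`, spark-submit locates the cluster through HADOOP_CONF_DIR (or YARN_CONF_DIR), which the environment variables in section II do not set. The sketch below derives it from HADOOP_HOME; `hadoop_conf_dir` is a hypothetical helper, and the application ID in the usage comment is a placeholder for whatever spark-submit prints.

```shell
#!/bin/sh
# Hypothetical helper: print the configuration directory for a Hadoop
# installation, defaulting to $HADOOP_HOME.
hadoop_conf_dir() {
    home="${1:-${HADOOP_HOME:-}}"
    if [ -z "$home" ]; then
        echo "HADOOP_HOME is not set" >&2
        return 1
    fi
    echo "$home/etc/hadoop"
}

# Usage:
#   export HADOOP_CONF_DIR="$(hadoop_conf_dir)"
#   # ...run the spark-submit command, then inspect the result:
#   yarn application -list -appStates ALL
#   yarn logs -applicationId <application id>
```

`yarn logs` relies on log aggregation, which yarn-site.xml above enables through `yarn.log-aggregation-enable`.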

Reference

https://blog.csdn.net/pgs1004151212/article/details/104391391
