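Setting Up KubeSphere 3.0 on Virtual Machines (Detailed)

Preface: never-ending learning is a programmer's fate.

Compared with Rancher, I personally lean toward KubeSphere because its interface appeals to me more. Enough preamble, let's get started.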

1. Environment preparation

1. Prerequisites

Virtual machines: CentOS 7.6 to 7.8!!!

Below are the check results from the site https://kuboard.cn/install/install-k8s.html#%E6%A3%80%E6%9F%A5-centos-hostname :

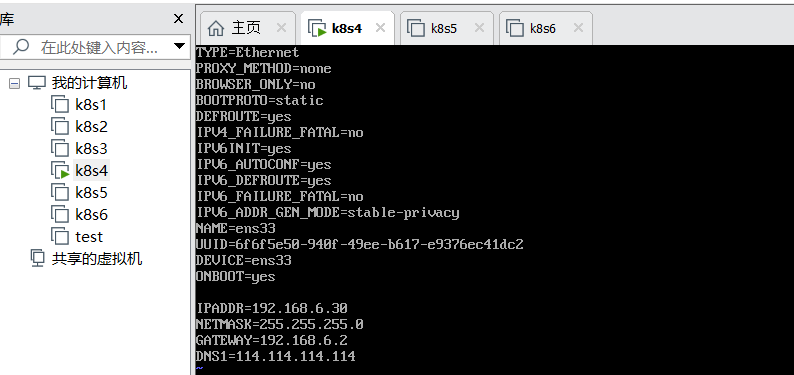

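2. Network configuration

Edit the interface configuration file under /etc/sysconfig/network-scripts (replace <interface> with your NIC name):

vi /etc/sysconfig/network-scripts/ifcfg-<interface>

Restart the network service:

/etc/init.d/network restart

or:

service network restart

3. Virtual machine environment

Notes:

1) The CentOS version is 7.6, 7.7, or 7.8, with at least 2 CPU cores and at least 4 GB of memory.

2) The hostname is not localhost and contains no underscores, dots, or uppercase letters.

3) Every node has a fixed internal IP address (all cluster machines share one internal network).

4) The IP addresses of all nodes can reach each other directly (no NAT mapping needed), with no firewall or security group isolating them.

5) No node runs containers directly with docker run or docker-compose.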
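The first two requirements above can be checked with a few lines of shell before going further. This is only a sketch: the function names are made up for illustration, and the memory threshold of 3500000 kB is an assumption chosen because a 4 GB VM usually reports slightly less than 4 GB in /proc/meminfo.

```shell
#!/bin/sh
# Pre-flight sketch for requirements 1) and 2). All function names are hypothetical.

# Requirement 2: not "localhost", no underscores, dots, or uppercase letters.
check_hostname() {
  local name="$1" lower
  [ -n "$name" ] || return 1
  [ "$name" != "localhost" ] || return 1
  case "$name" in
    *_* | *.*) return 1 ;;
  esac
  # A name with uppercase letters differs from its lowercased form.
  lower=$(printf '%s' "$name" | tr '[:upper:]' '[:lower:]')
  [ "$name" = "$lower" ]
}

# Requirement 1: at least 2 CPU cores.
check_cpu() {
  [ "$(nproc)" -ge 2 ]
}

# Requirement 1: roughly 4 GB of memory (threshold is an assumption, see above).
check_mem() {
  local kb
  kb=$(awk '/^MemTotal:/ {print $2}' /proc/meminfo)
  [ "${kb:-0}" -ge 3500000 ]
}

# Run all checks against the current machine and report what fails.
preflight() {
  check_hostname "$(hostname)" || echo "hostname $(hostname) violates requirement 2"
  check_cpu || echo "fewer than 2 CPU cores"
  check_mem || echo "less than about 4 GB of memory"
}
```

Calling `preflight` on each node prints nothing when the checks pass.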

2. Base environment installation (all 3 nodes)

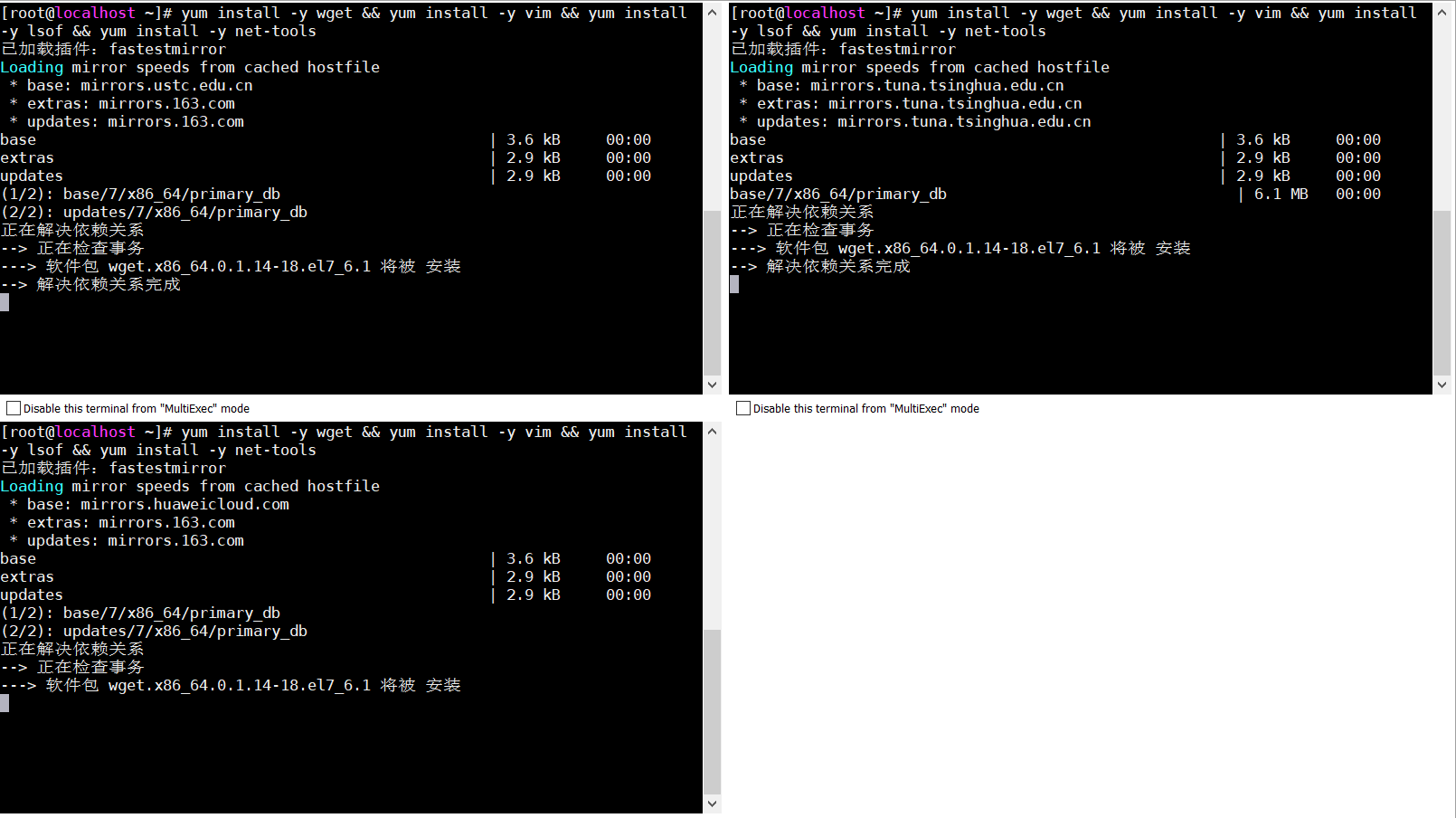

2.1 Install basic tools (all 3 nodes)

yum install -y wget vim lsof net-tools

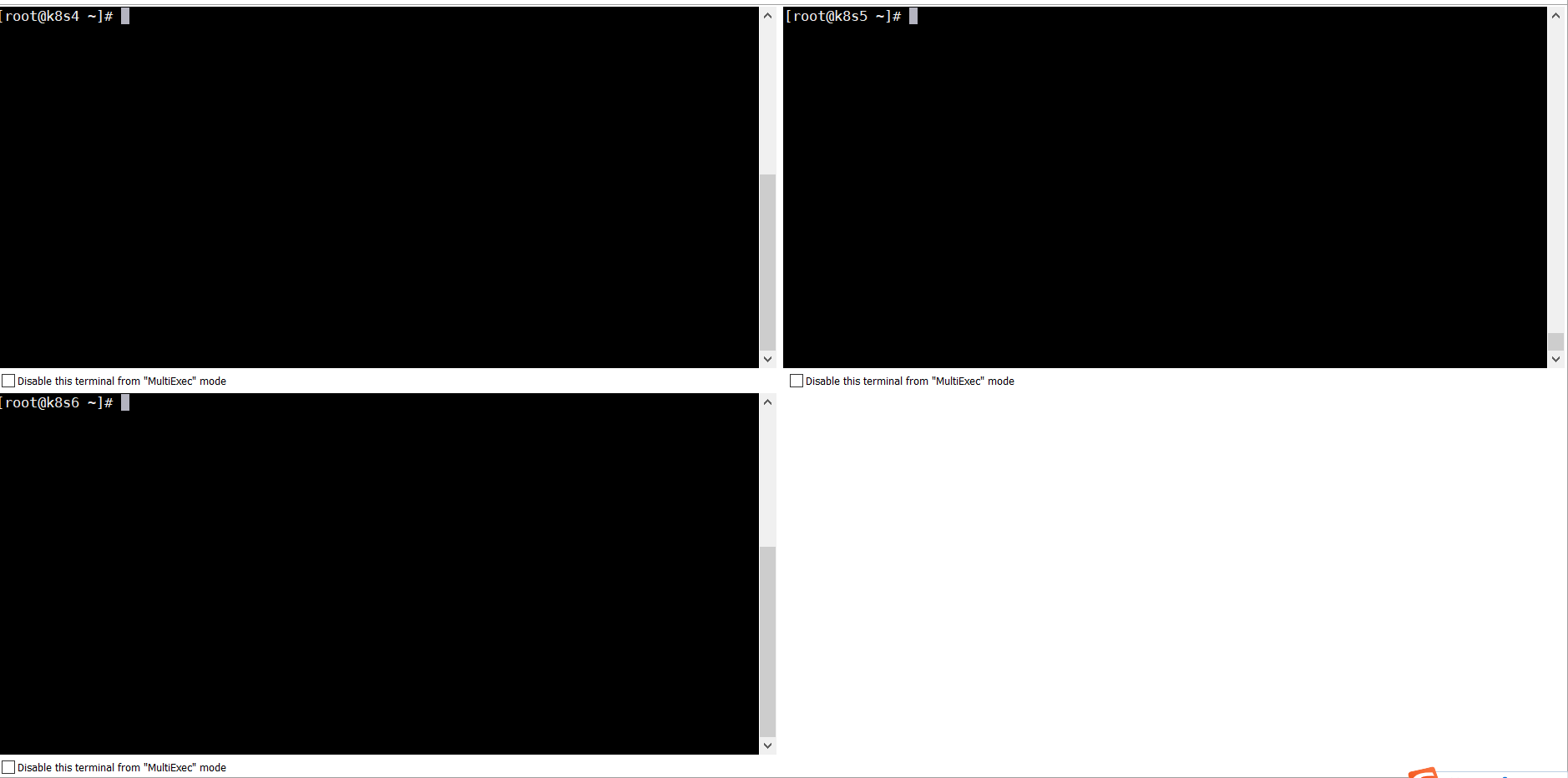

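2.2 Configure hosts

vim /etc/hosts

Add the following entries:

192.168.6.30 k8s4
192.168.6.31 k8s5
192.168.6.32 k8s6

To set a new hostname, run the following, then run su so the shell picks up the new name:

hostnamectl set-hostname <newhostname>

su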

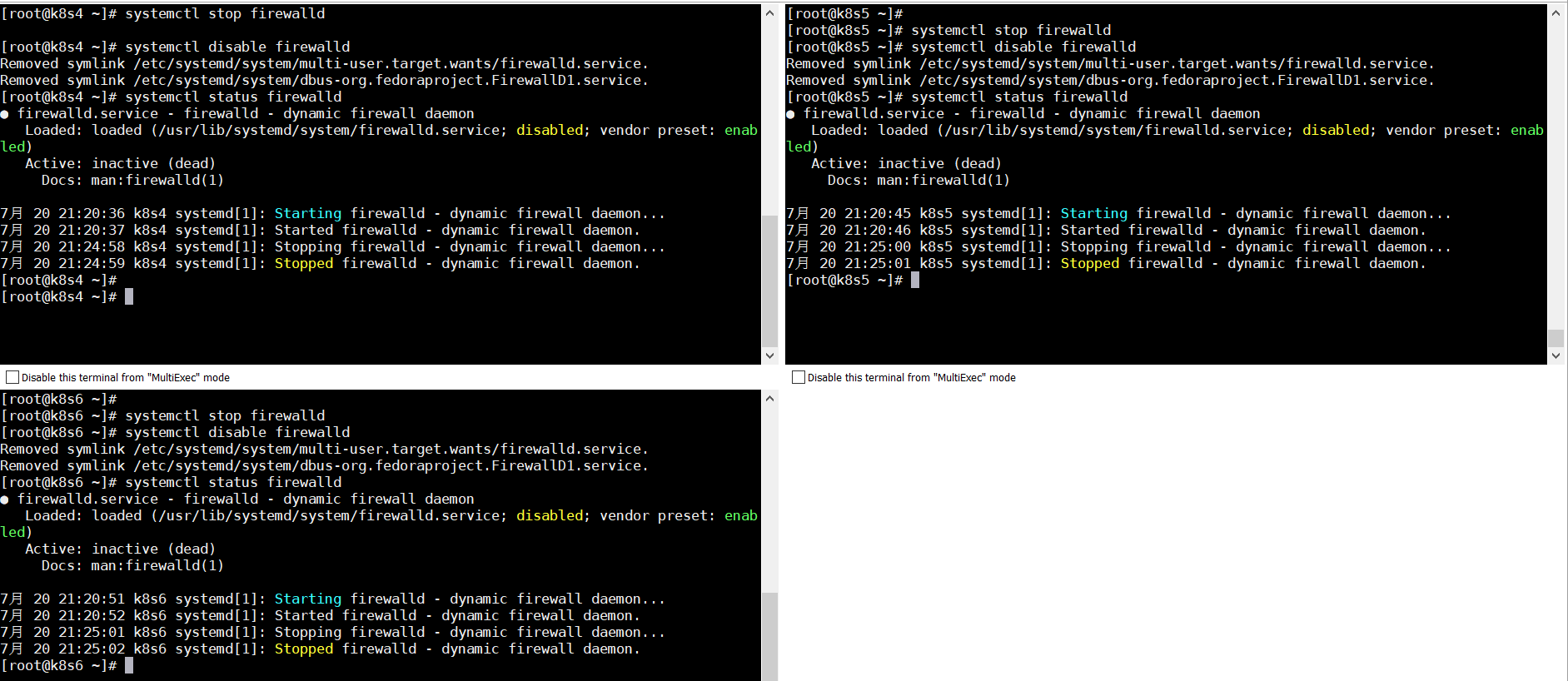
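If you would rather script the hosts entries than edit the file by hand on three machines, something like the following could work. It is a sketch only; `add_host_entry` is a hypothetical helper, and it skips a name that is already mapped so it can be run more than once.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME: append "IP NAME" unless NAME is already present.
# Hypothetical helper, not part of any tool used in this article.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  if ! grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Usage on a node (as root):
#   add_host_entry /etc/hosts 192.168.6.30 k8s4
#   add_host_entry /etc/hosts 192.168.6.31 k8s5
#   add_host_entry /etc/hosts 192.168.6.32 k8s6
```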

2.3 Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

systemctl status firewalld

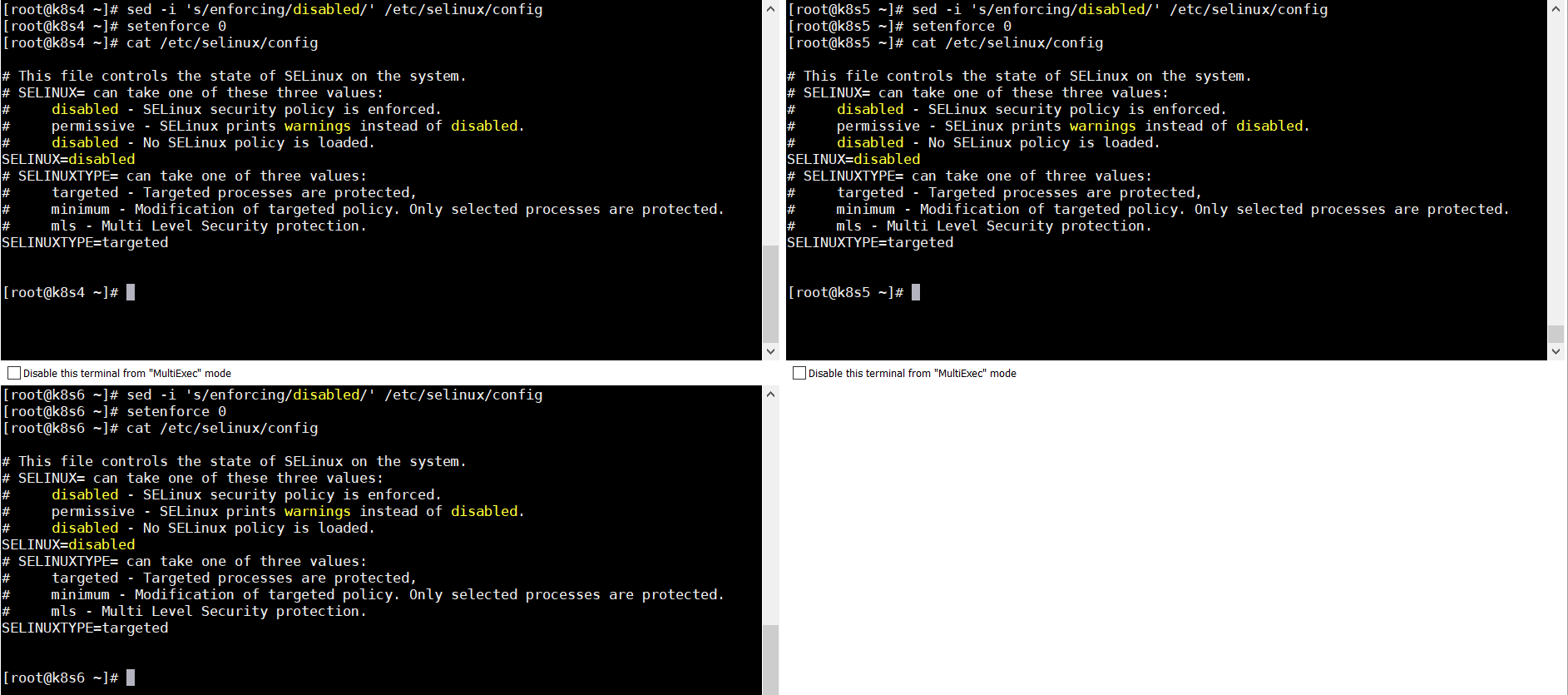

2.4 Disable SELinux

sed -i 's/enforcing/disabled/' /etc/selinux/config

setenforce 0

cat /etc/selinux/config

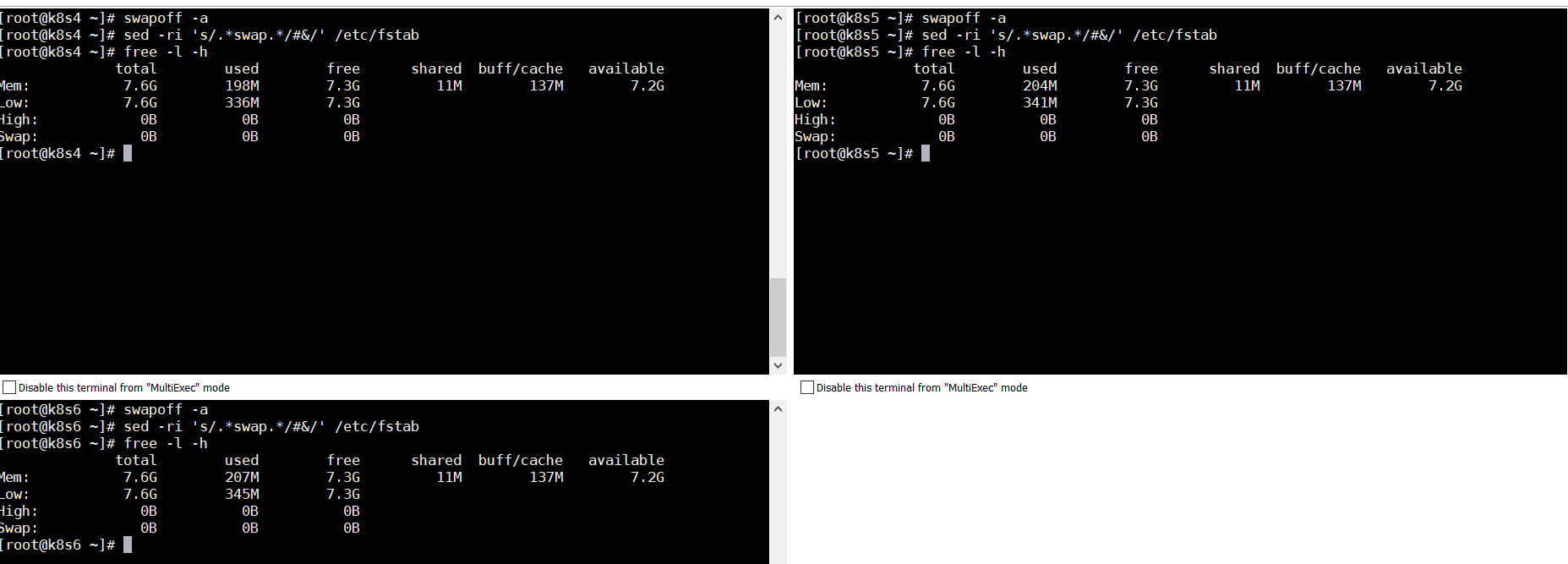

2.5 Disable swap

swapoff -a

sed -ri 's/.*swap.*/#&/' /etc/fstab

free -l -h

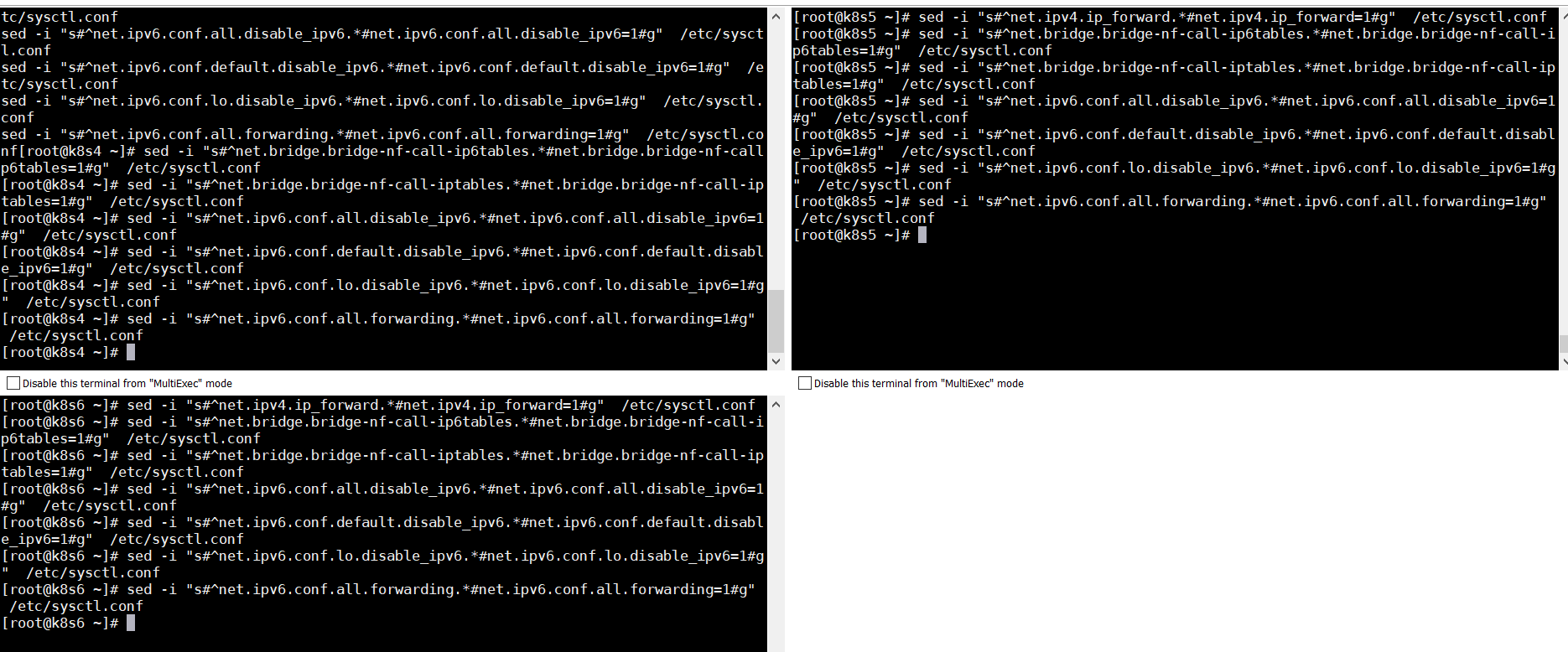
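One thing to be aware of: the sed expression above matches every line containing "swap", so running it a second time prefixes another # onto lines that are already commented out. A variant that skips commented lines might look like this; `comment_swap` is a hypothetical name and the sketch assumes GNU sed, as shipped with CentOS.

```shell
#!/bin/sh
# comment_swap FILE: comment out active swap entries only.
# Lines that already start with # are left alone, so re-running is harmless.
comment_swap() {
  sed -ri '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Usage (as root, after "swapoff -a"):
#   cp /etc/fstab /etc/fstab.bak
#   comment_swap /etc/fstab
```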

2.6 Pass bridged IPv4 traffic to the iptables chains

If /etc/sysctl.conf does not exist (or does not contain these keys yet), run the following directly:

echo "net.ipv4.ip_forward = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-ip6tables = 1" >> /etc/sysctl.conf

echo "net.bridge.bridge-nf-call-iptables = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.default.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.lo.disable_ipv6 = 1" >> /etc/sysctl.conf

echo "net.ipv6.conf.all.forwarding = 1" >> /etc/sysctl.conf

If the file already exists and contains these keys, run the following commands instead; they rewrite the existing lines in place:

sed -i "s#^net.ipv4.ip_forward.*#net.ipv4.ip_forward=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-ip6tables.*#net.bridge.bridge-nf-call-ip6tables=1#g" /etc/sysctl.conf

sed -i "s#^net.bridge.bridge-nf-call-iptables.*#net.bridge.bridge-nf-call-iptables=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.disable_ipv6.*#net.ipv6.conf.all.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.default.disable_ipv6.*#net.ipv6.conf.default.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.lo.disable_ipv6.*#net.ipv6.conf.lo.disable_ipv6=1#g" /etc/sysctl.conf

sed -i "s#^net.ipv6.conf.all.forwarding.*#net.ipv6.conf.all.forwarding=1#g" /etc/sysctl.conf
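The two cases above (append when the key is absent, rewrite when it is present) can be folded into one helper so that you do not have to inspect the file first. This is a sketch under the assumption of GNU sed; `set_sysctl` is a hypothetical name, not an existing command.

```shell
#!/bin/sh
# set_sysctl KEY VALUE [FILE]: set KEY in FILE (default /etc/sysctl.conf).
# Rewrites the line if KEY is already there, appends it otherwise.
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}" pattern
  touch "$file"
  # Escape the dots so they match literally in the regular expression.
  pattern=$(printf '%s' "$key" | sed 's/\./\\./g')
  if grep -qE "^[[:space:]]*${pattern}[[:space:]]*=" "$file"; then
    sed -ri "s#^[[:space:]]*${pattern}[[:space:]]*=.*#${key} = ${value}#" "$file"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# Usage (as root), one call per key listed above, for example:
#   set_sysctl net.ipv4.ip_forward 1
#   set_sysctl net.bridge.bridge-nf-call-iptables 1
```

Because the helper is idempotent, it is safe to run the same list of calls on all three nodes regardless of what each file contained before.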

2.7 Apply the settings with the following command

sysctl -p

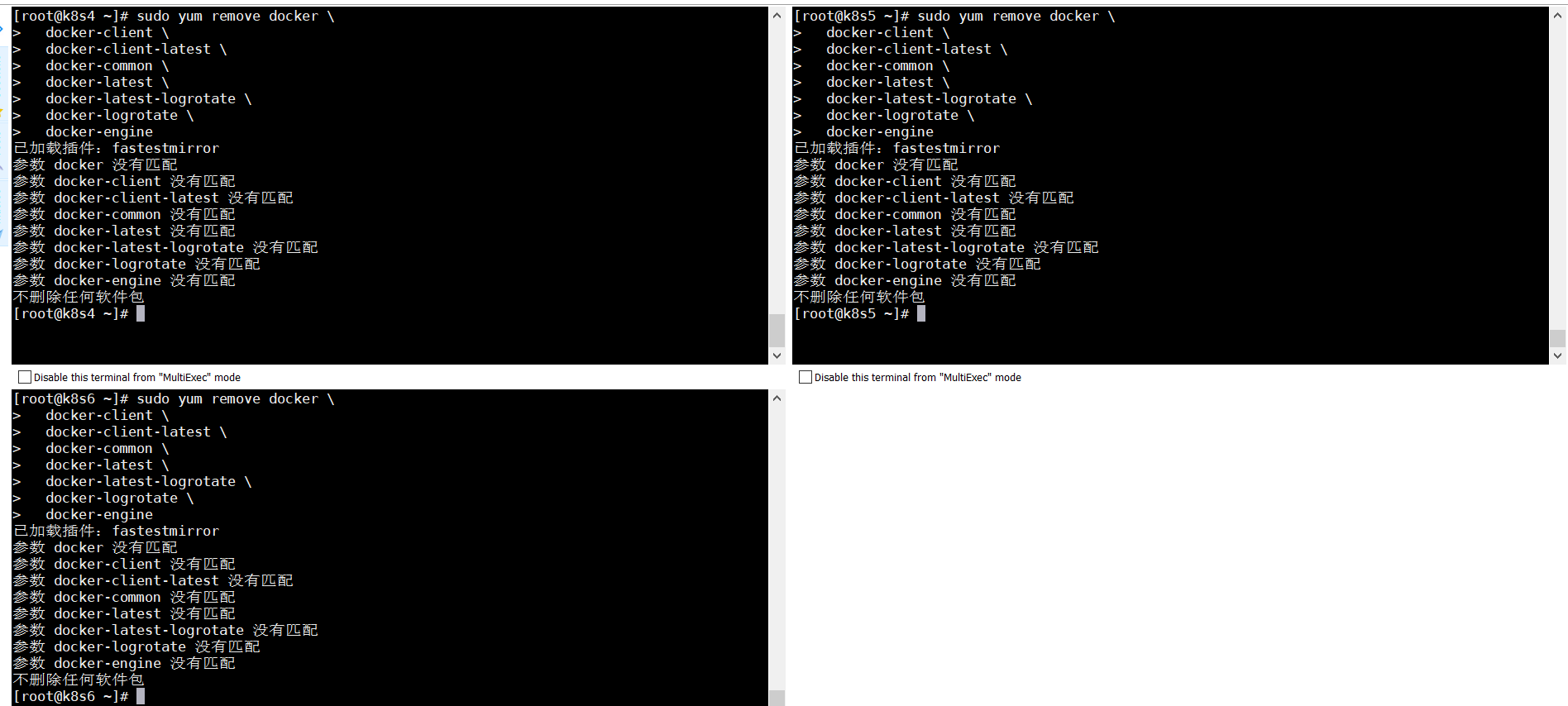

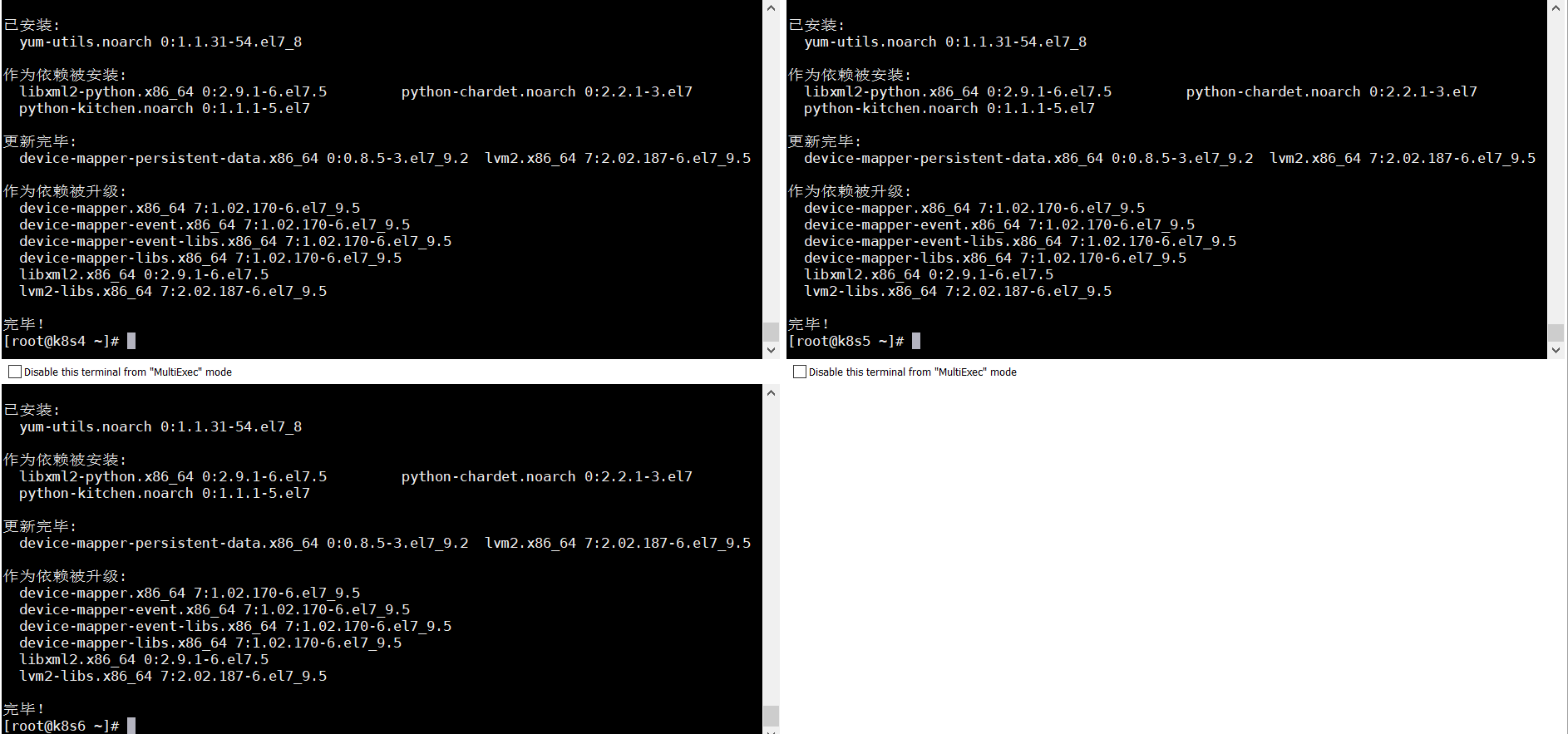

3. Install Docker (all 3 nodes)

3.1 Remove old Docker versions

sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-engine

3.2 Install base dependencies

yum install -y yum-utils \
    device-mapper-persistent-data \
    lvm2

3.3 Configure the Docker yum repository

sudo yum-config-manager \
    --add-repo \
    http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

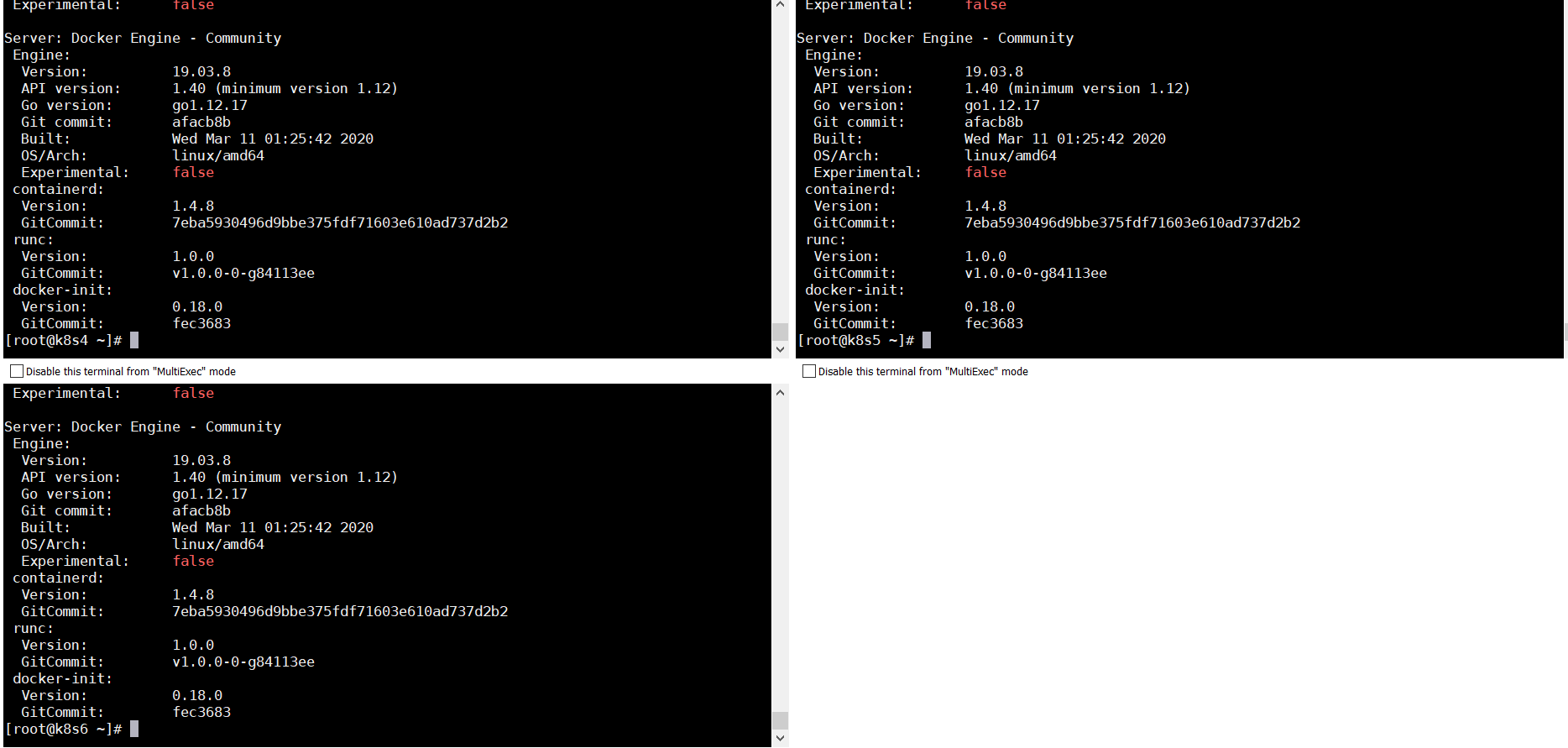

3.4 Install and start Docker

yum install -y docker-ce-19.03.8 docker-ce-cli-19.03.8 containerd.io

systemctl enable docker

systemctl start docker

docker version

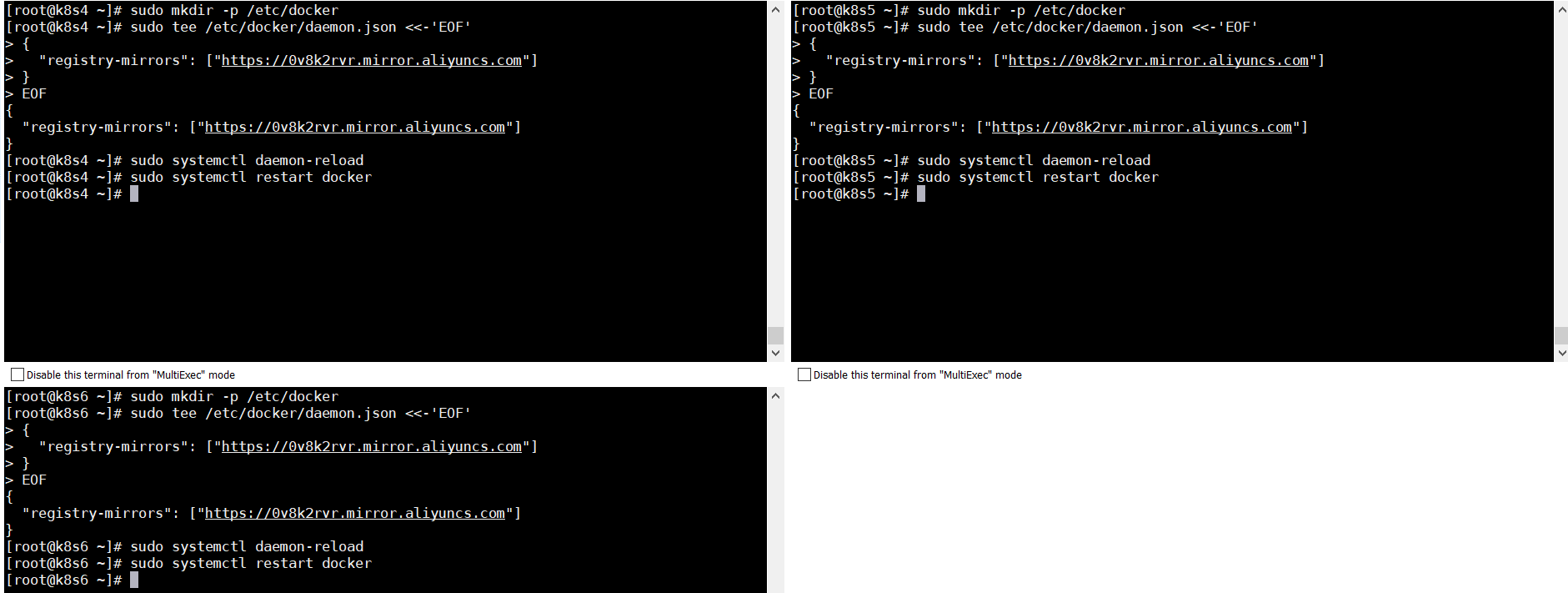

3.5 Configure a Docker registry mirror

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'
{
  "registry-mirrors": ["https://0v8k2rvr.mirror.aliyuncs.com"]
}
EOF

sudo systemctl daemon-reload

sudo systemctl restart docker

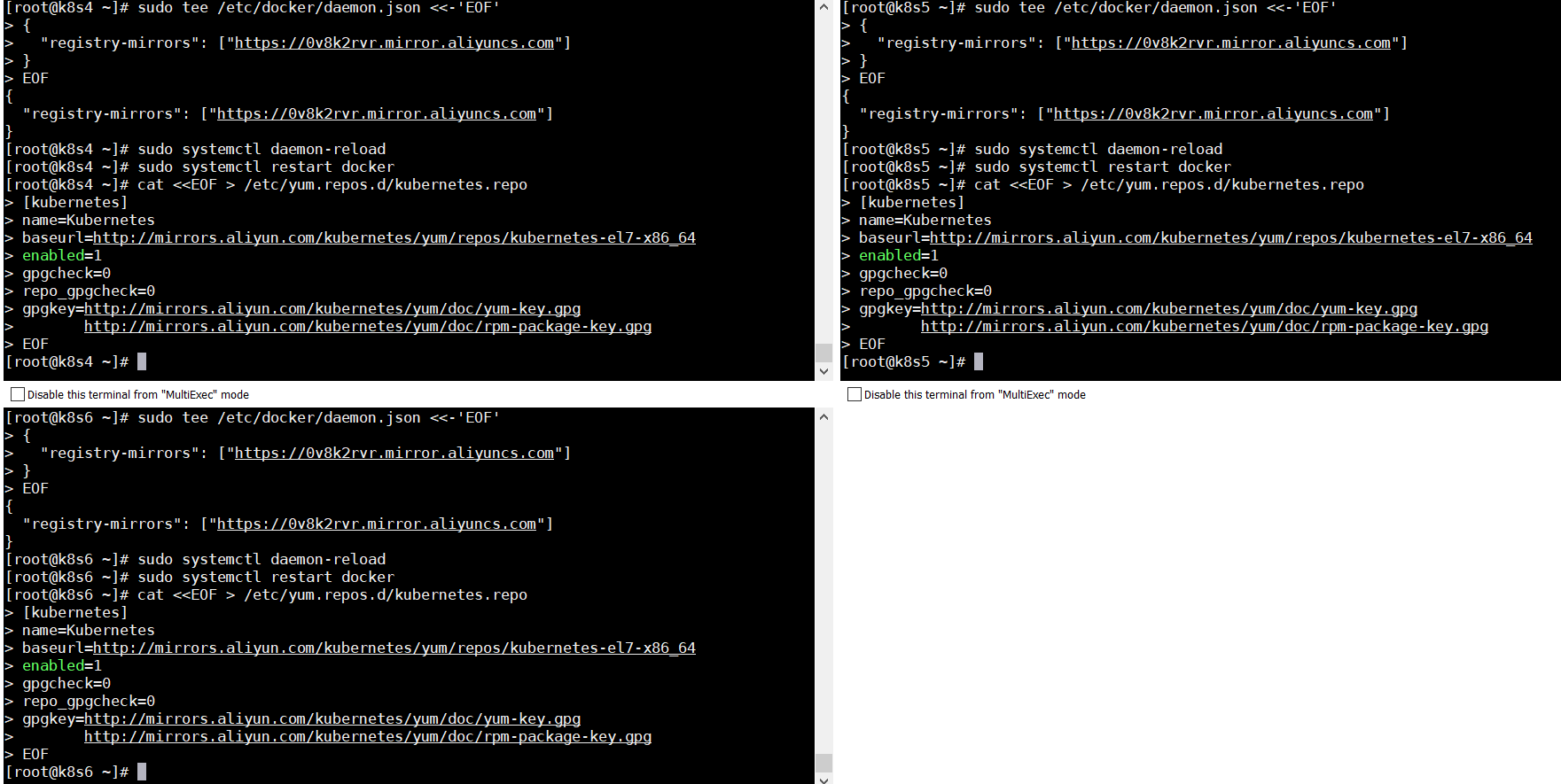

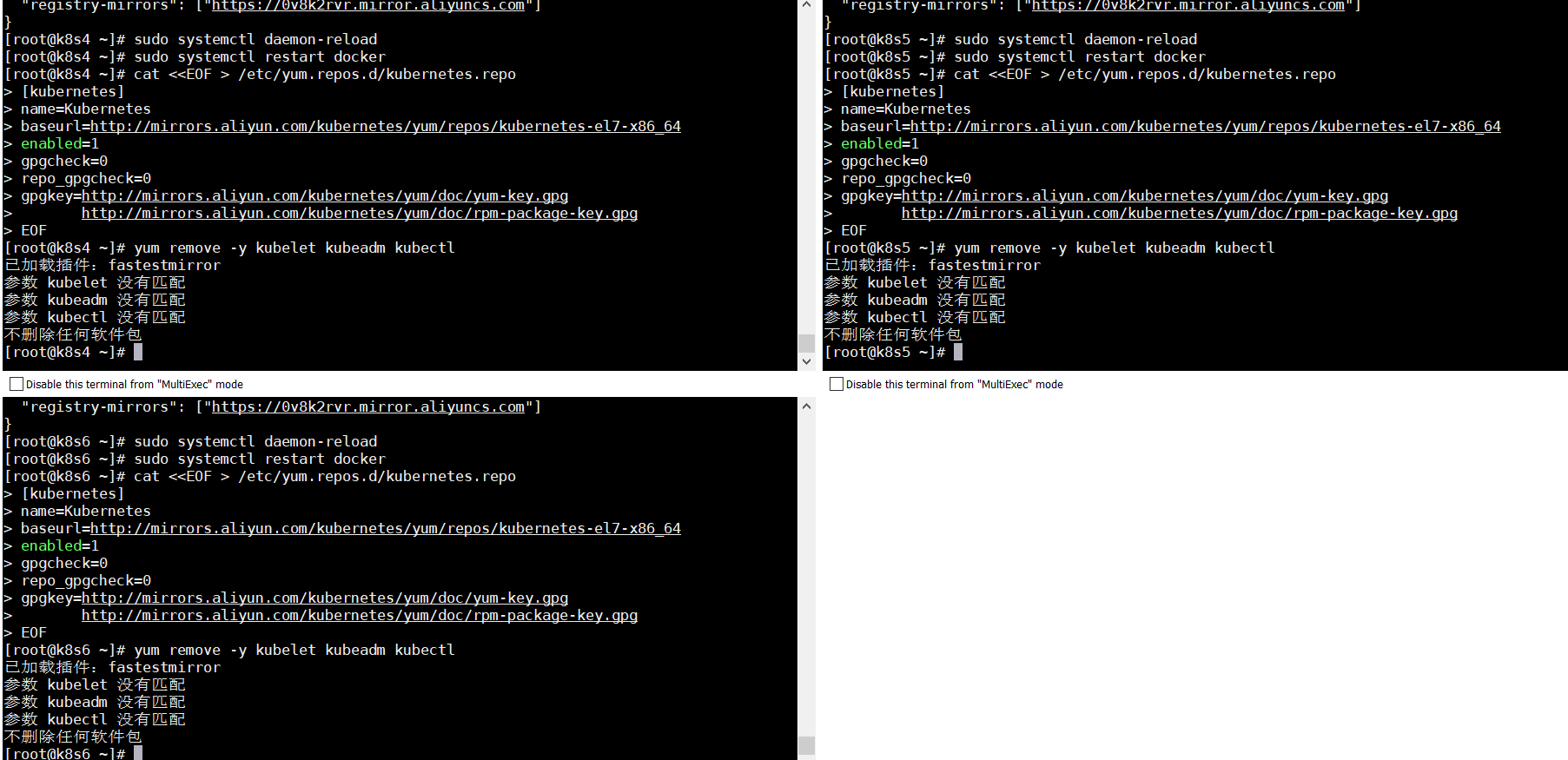

4. Kubernetes environment installation

4.1 Configure the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

4.2 Remove old versions

yum remove -y kubelet kubeadm kubectl

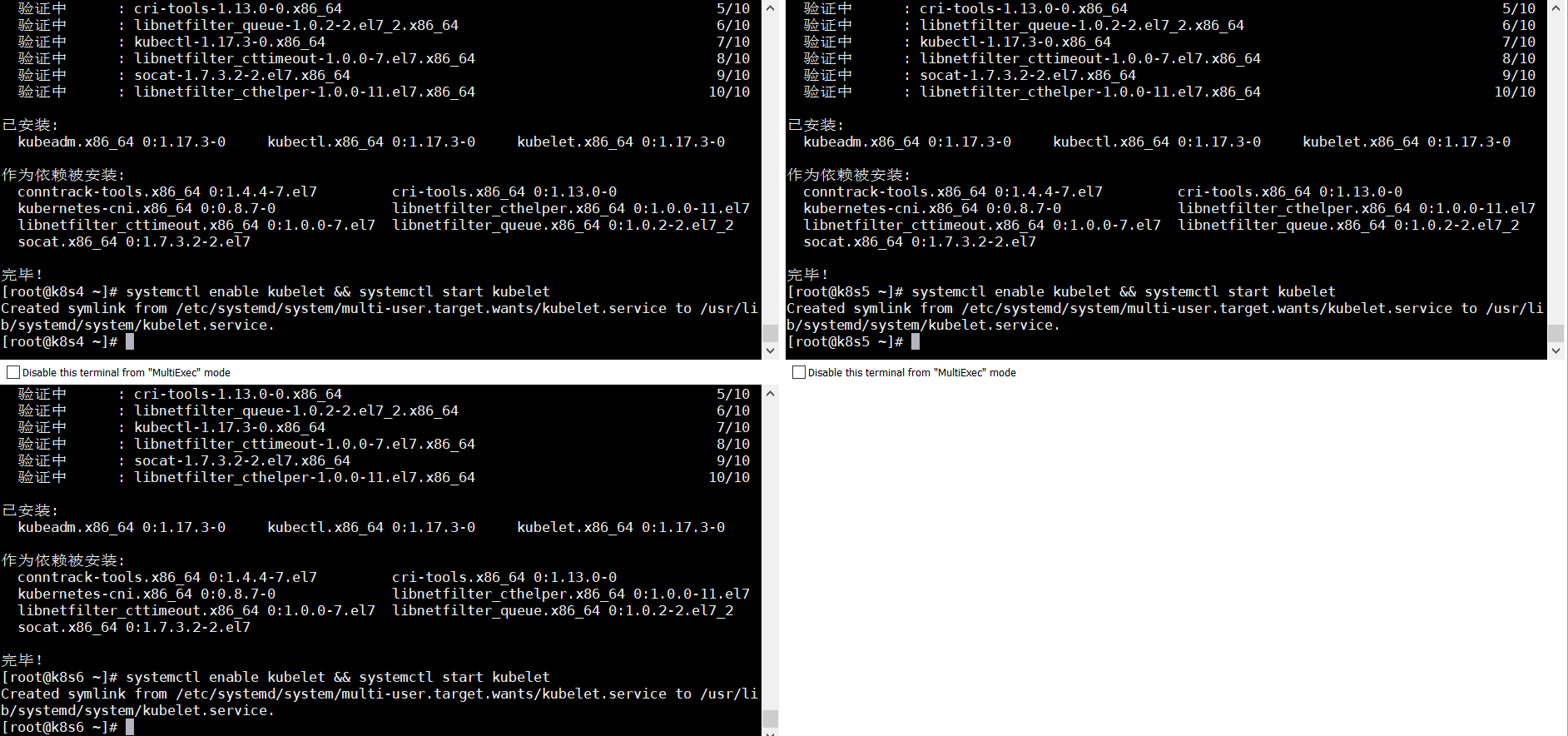

4.3 Install the Kubernetes components kubelet, kubeadm, and kubectl (all 3 nodes)

yum install -y kubelet-1.17.3 kubeadm-1.17.3 kubectl-1.17.3

4.4 Enable start on boot

systemctl enable kubelet && systemctl start kubelet

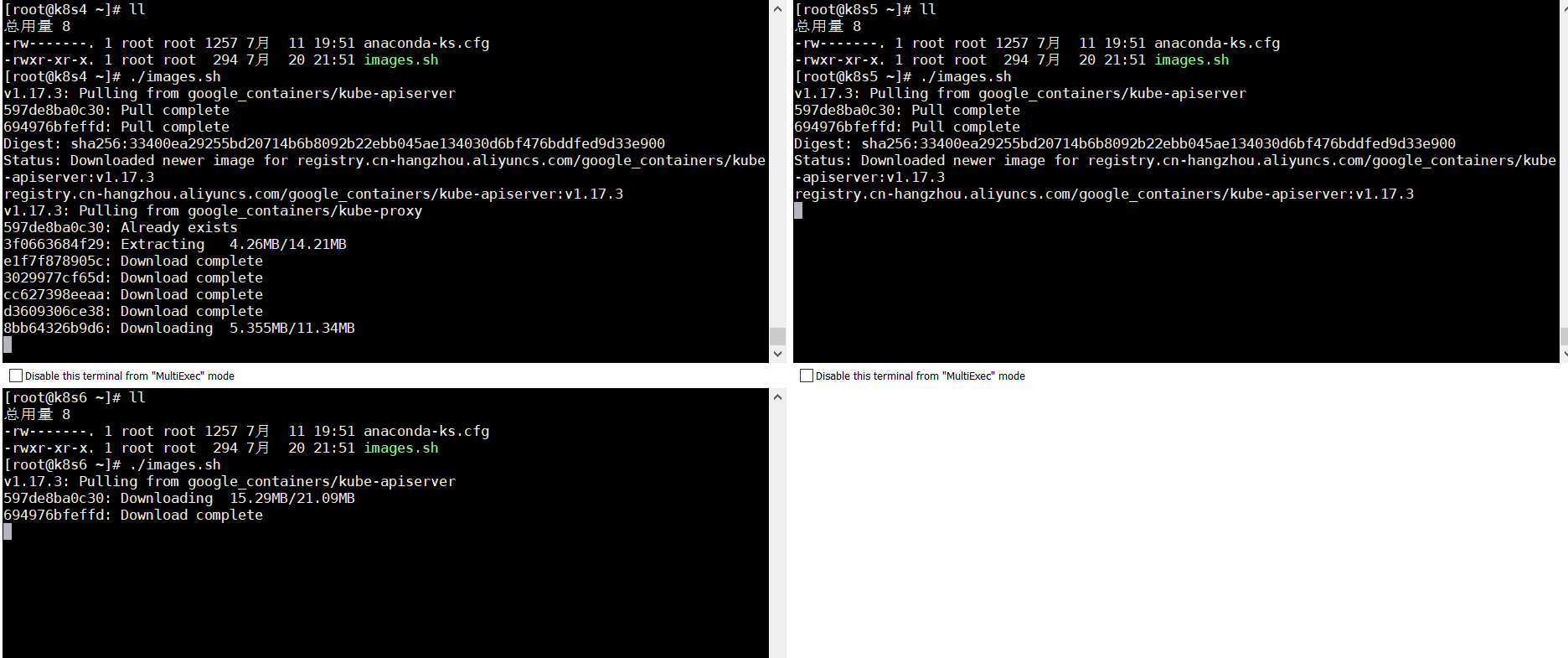

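4.5 Initialization: pull the images (all 3 nodes)

Create a new script:

vi images.sh

#!/bin/bash
# Pull the control-plane images for v1.17.3 from the Aliyun mirror.
images=(
  kube-apiserver:v1.17.3
  kube-proxy:v1.17.3
  kube-controller-manager:v1.17.3
  kube-scheduler:v1.17.3
  coredns:1.6.5
  etcd:3.4.3-0
  pause:3.1
)
for imageName in "${images[@]}"; do
  docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/$imageName
done

Then make the script executable and run it:

chmod +x images.sh && ./images.sh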

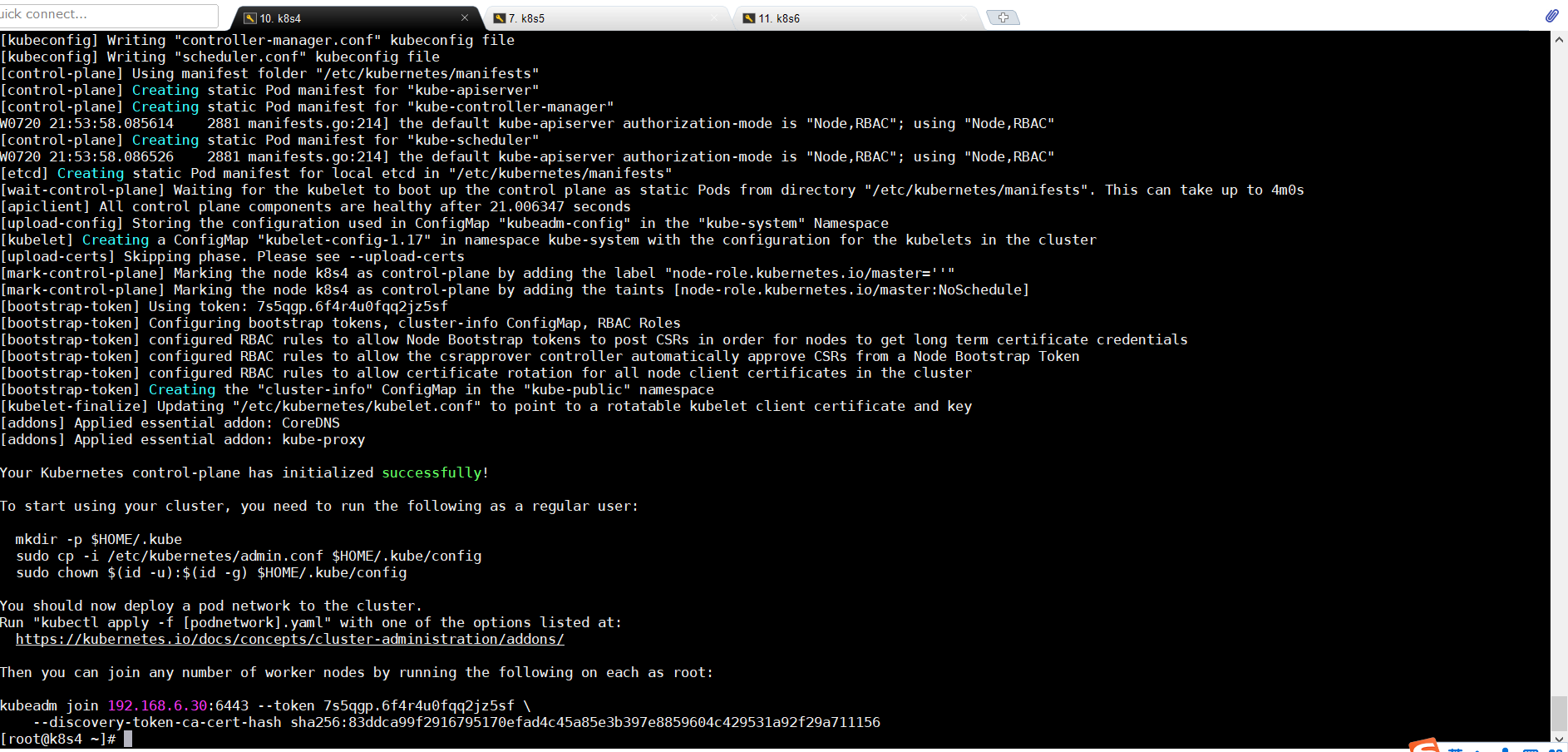

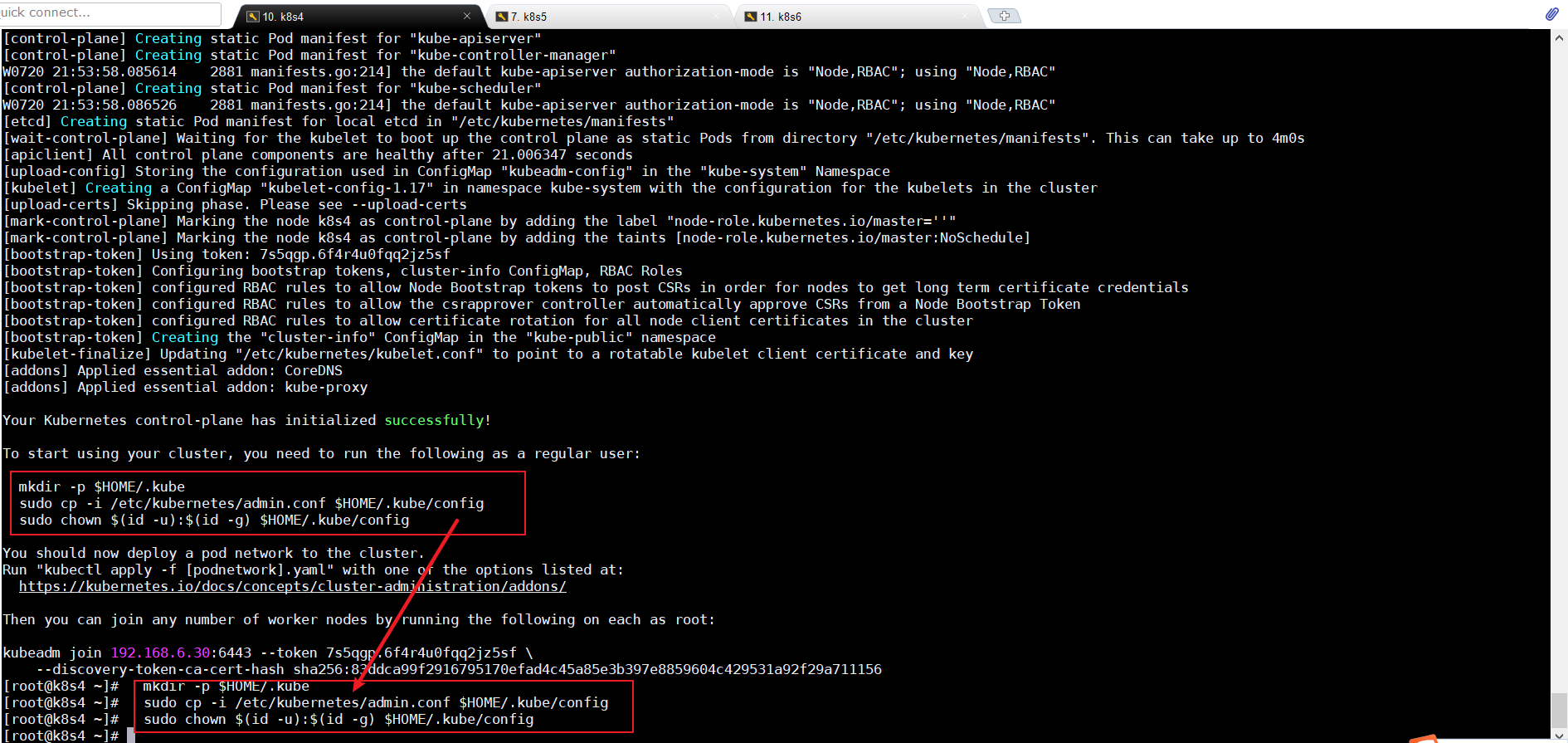

4.6 Initialize the master node (k8s4)

kubeadm init \
    --apiserver-advertise-address=192.168.6.30 \
    --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers \
    --kubernetes-version v1.17.3 \
    --service-cidr=10.96.0.0/16 \
    --pod-network-cidr=10.244.0.0/16

Note:

The IP in --apiserver-advertise-address=192.168.6.30 is the master node's IP.

4.7 Configure kubectl (master node)

These commands must be exactly the ones printed at the end of the previous step:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

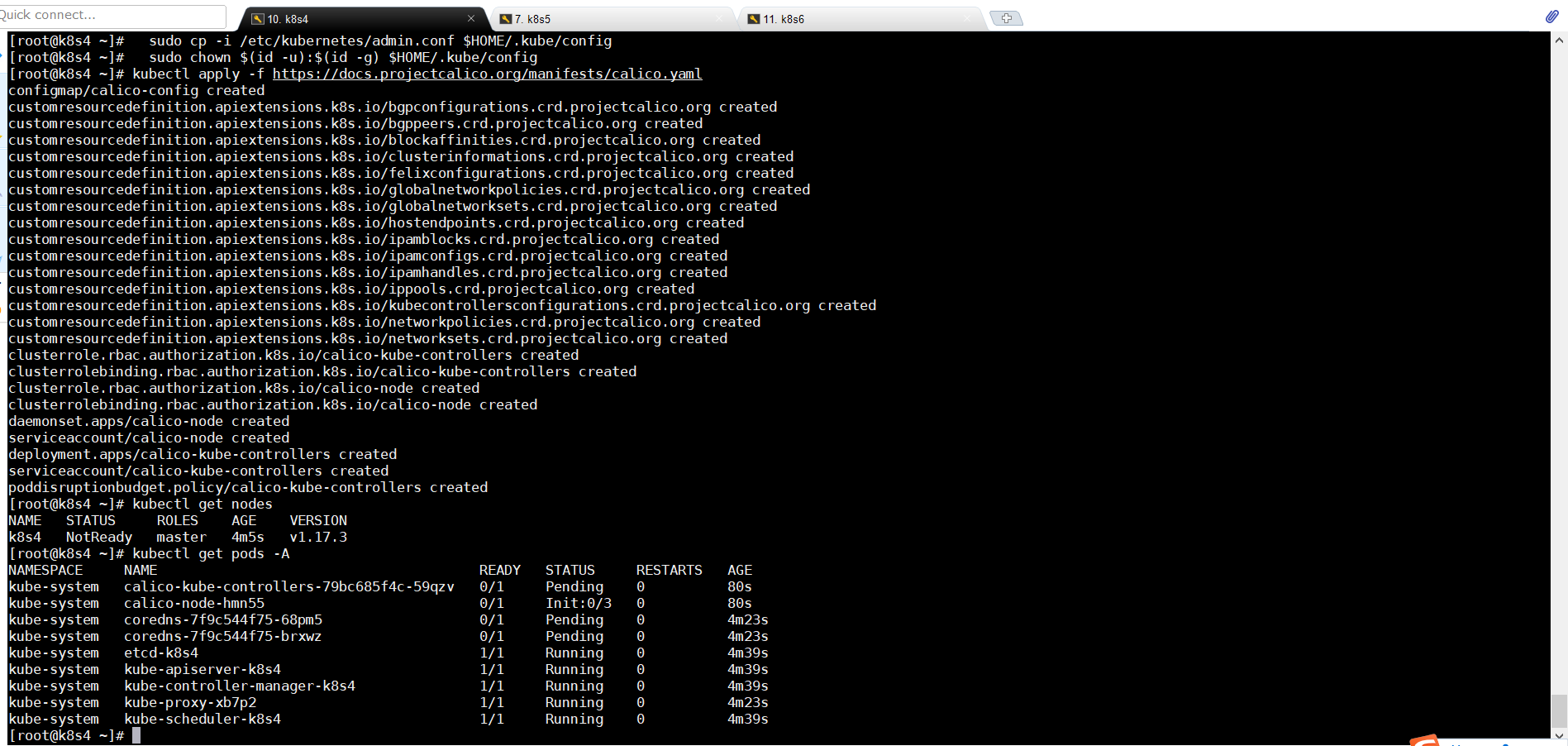

4.8 Deploy the network plugin (master)

kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml

4.9 Check the nodes and Pods

kubectl get nodes

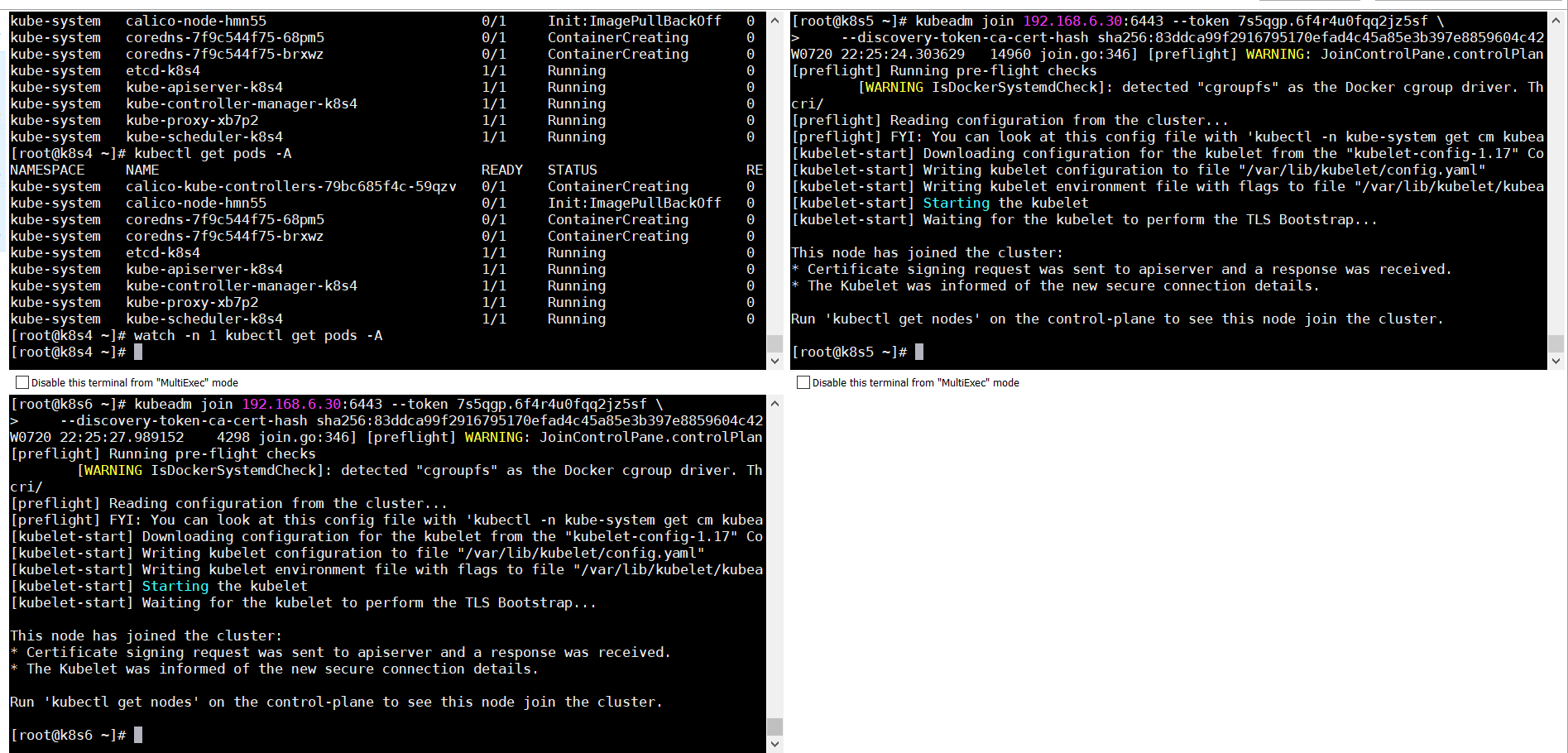

4.10 执行令牌(从节点)

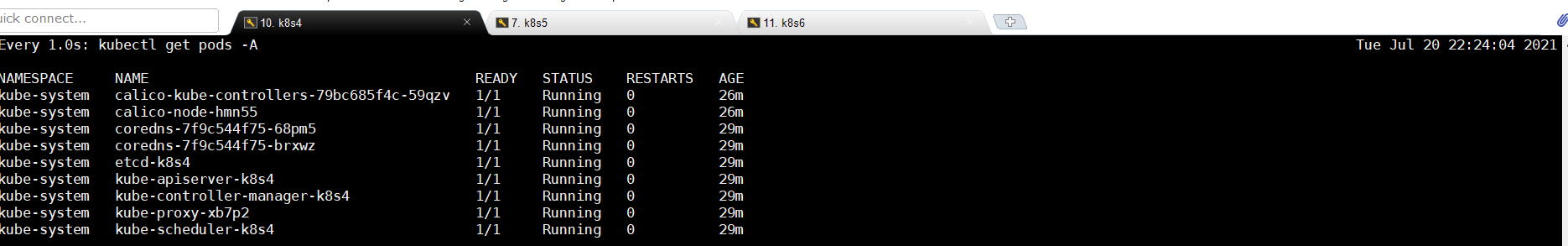

这里需要注意的是,必须等所有的状态为Runing才能进行下一步操作

kubeadm join 192.168.6.30:6443 --token 7s5qgp.6f4r4u0fqq2jz5sf \ --discovery-token-ca-cert-hash sha256:83ddca99f2916795170efad4c45a85e3b397e8859604c429531a92f29a711156

######初始化完成主节点后会生成

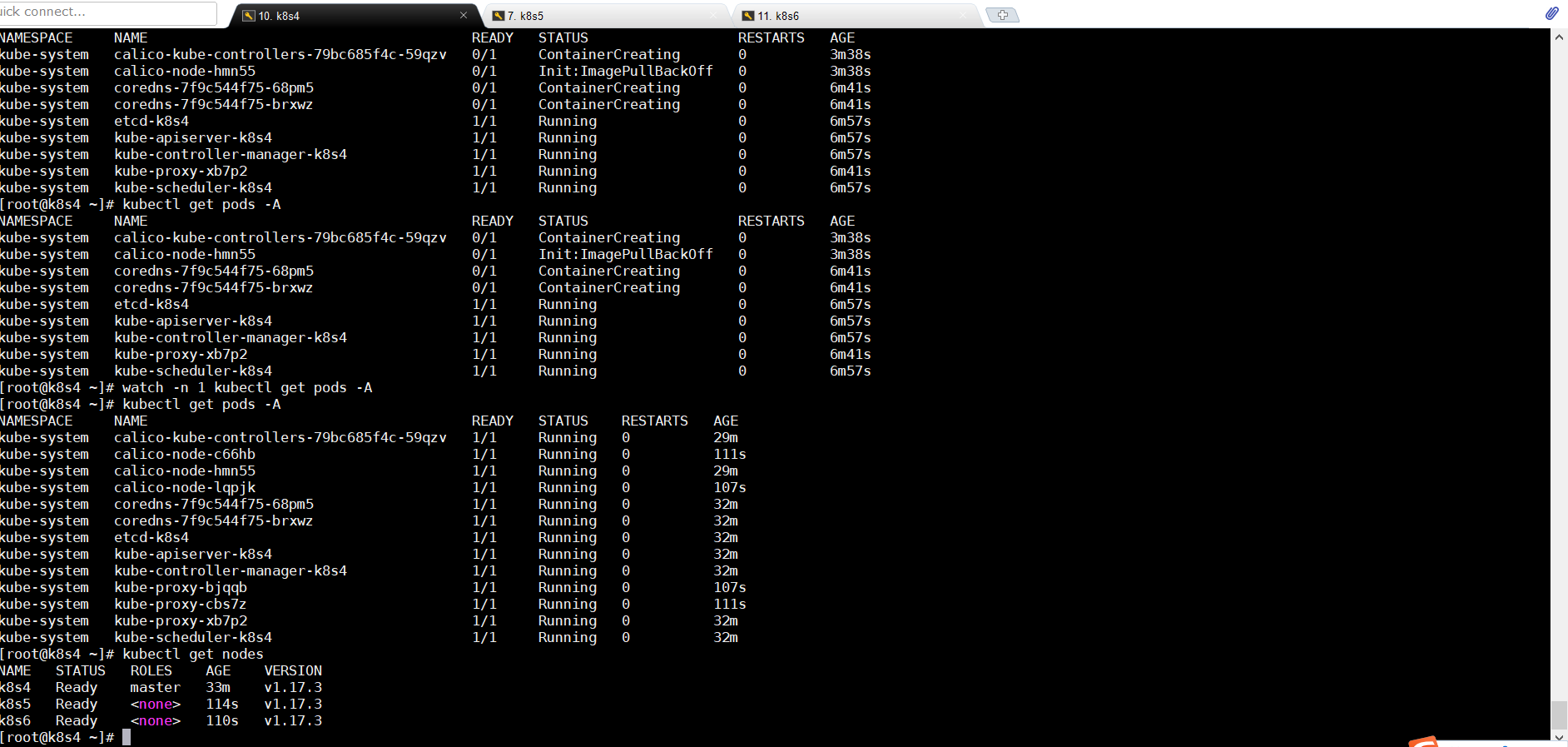

4.11 Check the cluster from the master node

kubectl get nodes

kubectl get pods -A

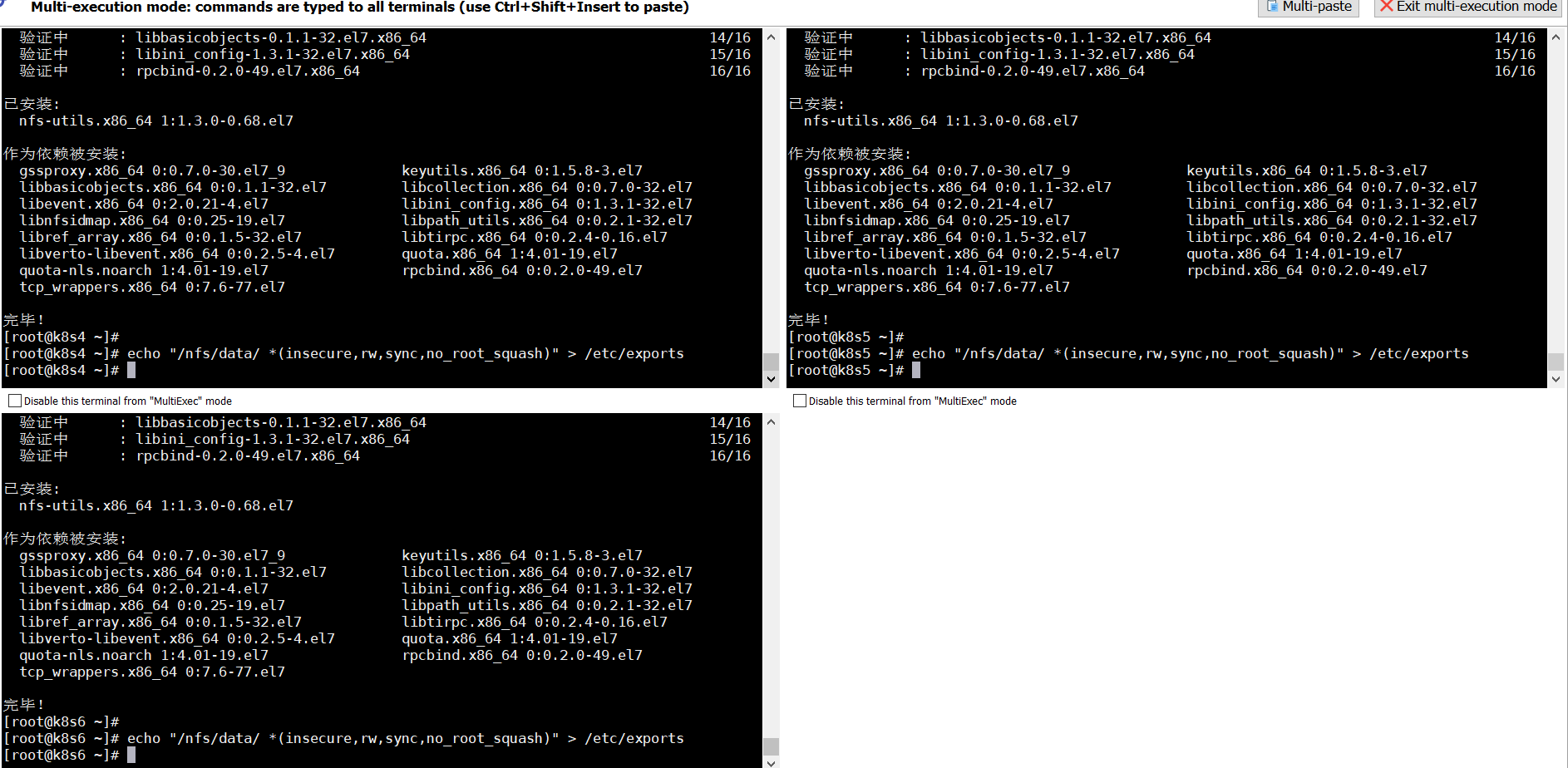
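The "wait until everything is Running" step can be scripted instead of re-running the command by eye. The sketch below parses the output of `kubectl get pods -A --no-headers`, where the fourth column is STATUS; `all_pods_running` is a hypothetical helper, and treating Completed as acceptable is an assumption made here for finished jobs.

```shell
#!/bin/sh
# all_pods_running: read "kubectl get pods -A --no-headers" output on stdin.
# Succeeds only if there is at least one pod and every STATUS (4th column)
# is Running or Completed.
all_pods_running() {
  awk '$4 != "Running" && $4 != "Completed" { bad = 1 }
       END { exit (bad || NR == 0) }'
}

# Usage on the master node:
#   until kubectl get pods -A --no-headers | all_pods_running; do
#     echo "waiting for pods..."; sleep 10
#   done
```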

5. Set up NFS as the default StorageClass (all 3 nodes)

5.1 Configure the NFS server

yum install -y nfs-utils

echo "/nfs/data/ *(insecure,rw,sync,no_root_squash)" > /etc/exports

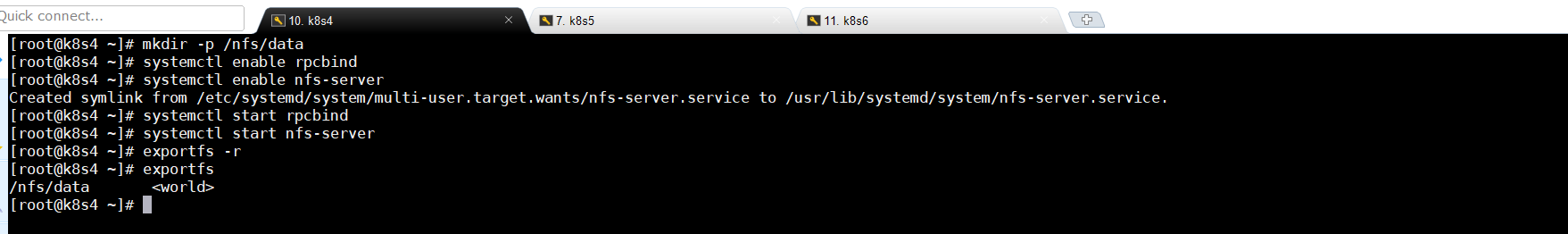

5.2 Create the NFS server directory and start NFS (the master node acts as the server; run on the master node)

mkdir -p /nfs/data

systemctl enable rpcbind

systemctl enable nfs-server

systemctl start rpcbind

systemctl start nfs-server

exportfs -r

exportfs    # check that the configuration took effect

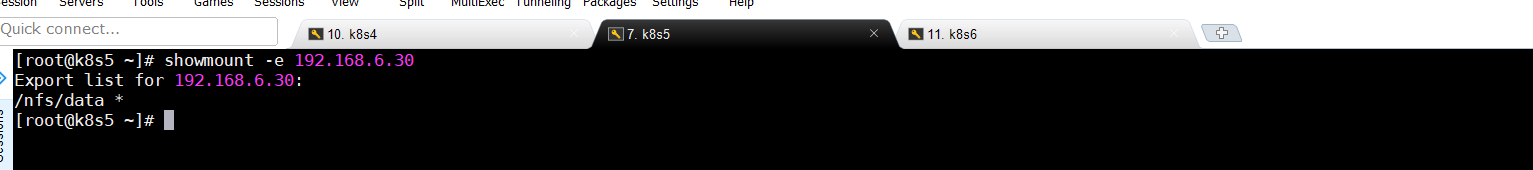

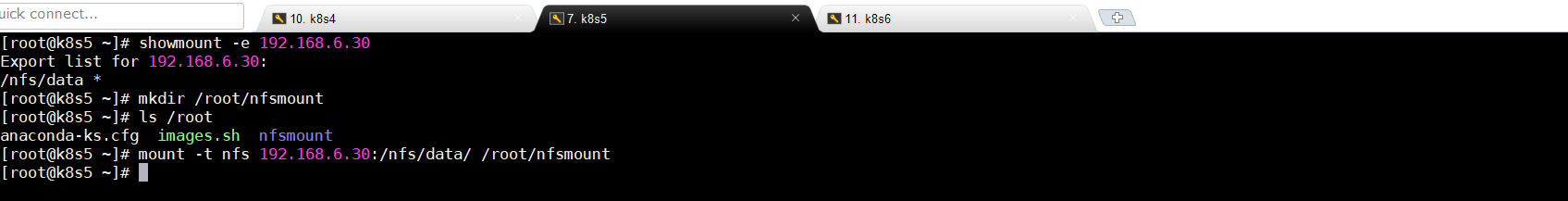

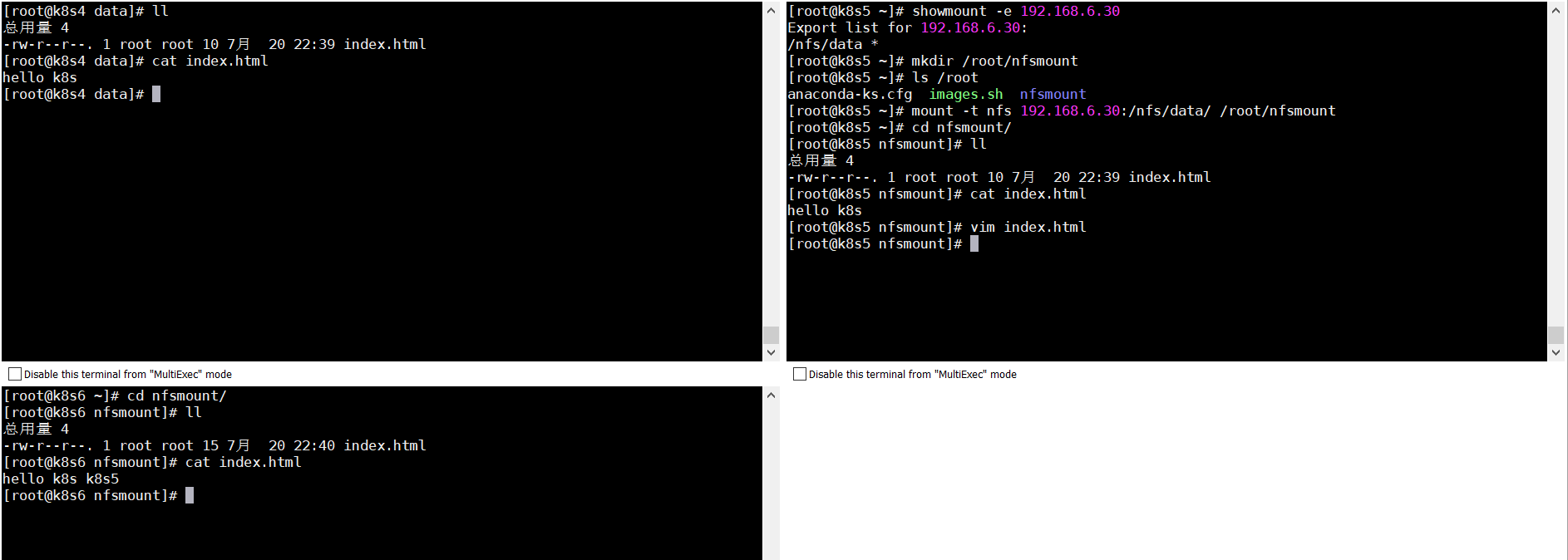

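5.3 Set up the NFS client (run on the worker nodes)

5.3.1 Check the client tools

The client tools (nfs-utils) were installed in 5.1. List the directories exported by the server; the IP is the master node's IP:

showmount -e 192.168.6.30

5.3.2 Create the sync directory (worker nodes)

mkdir /root/nfsmount

ls /root

5.3.3 Sync the client's /root/nfsmount with /nfs/data/ (worker nodes)

mount -t nfs 192.168.6.30:/nfs/data/ /root/nfsmount

5.3.4 Verify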

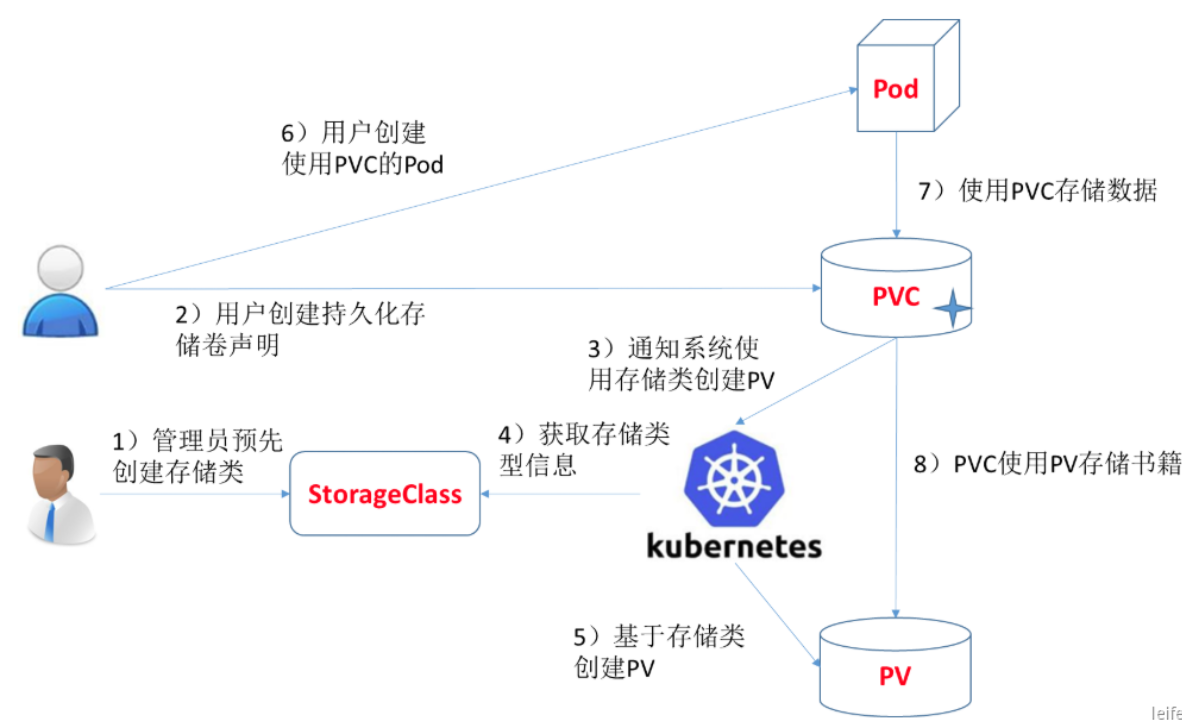

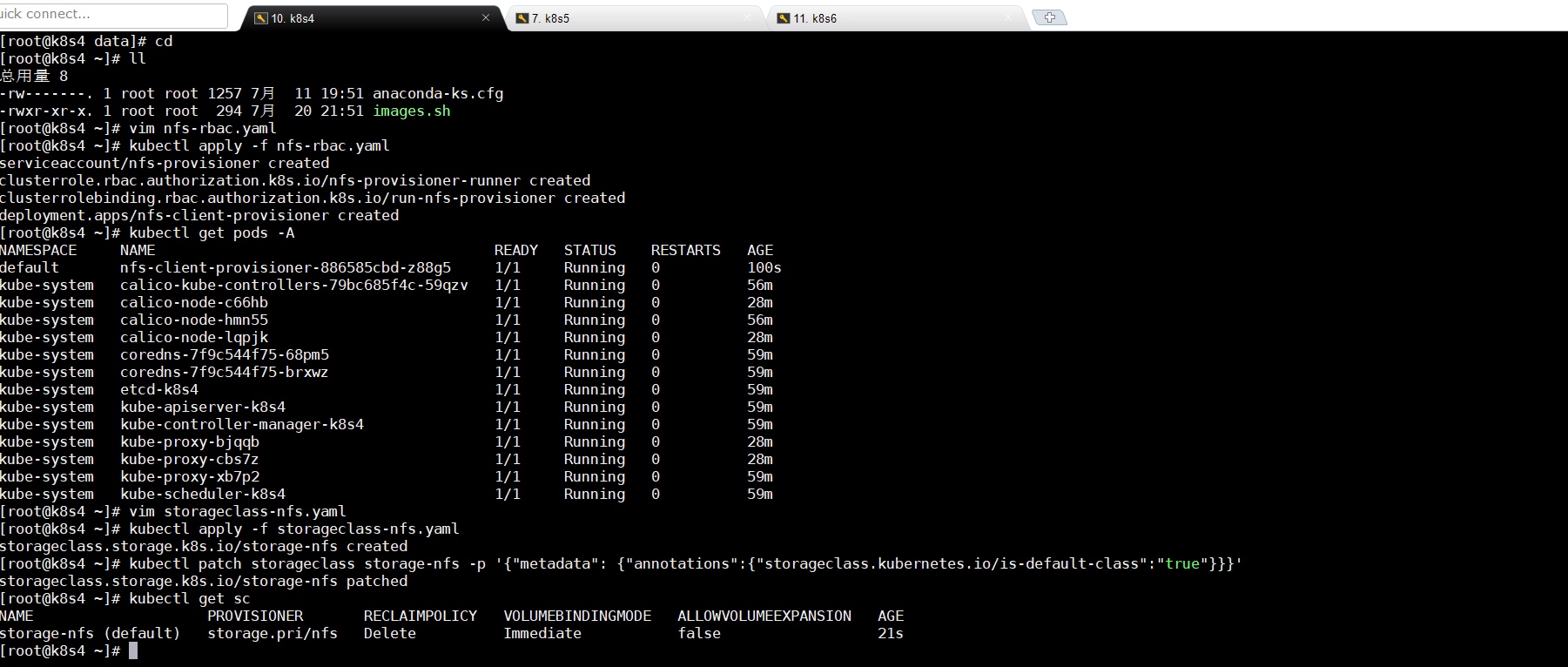
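Besides comparing directory listings as in the screenshots, you can confirm on a worker node that the mount point really is an NFS mount by looking it up in /proc/mounts, whose columns are device, mount point, and filesystem type. A minimal sketch, with `is_nfs_mount` as a hypothetical helper name:

```shell
#!/bin/sh
# is_nfs_mount MOUNTPOINT: read /proc/mounts-style lines on stdin and succeed
# if MOUNTPOINT is mounted with a filesystem type starting with "nfs".
is_nfs_mount() {
  awk -v mp="$1" '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }'
}

# Usage on a worker node:
#   is_nfs_mount /root/nfsmount < /proc/mounts && echo "NFS mount is active"
```

To test the sync itself, create a file under /nfs/data on the master and check that it appears under /root/nfsmount on the worker.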

6. Set up dynamic provisioning

6.1 Create the provisioner (the NFS environment was set up above)

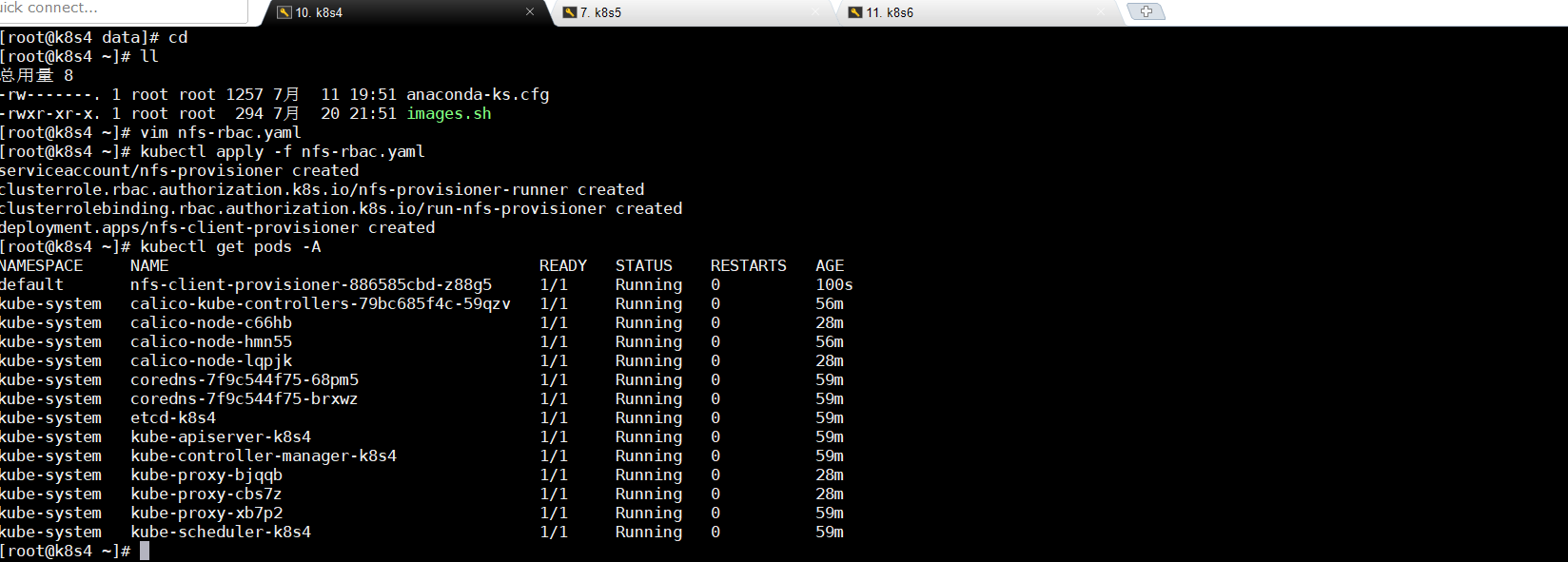

6.2 On the master node

Save the following as nfs-rbac.yaml (the file applied in 6.3):

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["watch", "create", "update", "patch"]
  - apiGroups: [""]
    resources: ["services", "endpoints"]
    verbs: ["get", "create", "list", "watch", "update"]
  - apiGroups: ["extensions"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["nfs-provisioner"]
    verbs: ["use"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccount: nfs-provisioner
      containers:
        - name: nfs-client-provisioner
          image: lizhenliang/nfs-client-provisioner
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: storage.pri/nfs
            - name: NFS_SERVER
              value: 192.168.6.30
            - name: NFS_PATH
              value: /nfs/data
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.6.30
            path: /nfs/data

6.3 Apply the NFS YAML file

kubectl apply -f nfs-rbac.yaml

kubectl get pods -A

If something fails, inspect the error with this command:

kubectl describe pod xxx -n kube-system

If the pod turns out to be broken and you want to delete it and run kubectl apply -f nfs-rbac.yaml again, you can refer to this blog post:

7.1 Create the YAML

vim storageclass-nfs.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: storage-nfs
provisioner: storage.pri/nfs
reclaimPolicy: Delete

7.2 Apply storageclass-nfs.yaml

kubectl apply -f storageclass-nfs.yaml

7.3 Make it the default StorageClass

kubectl patch storageclass storage-nfs -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

kubectl get sc

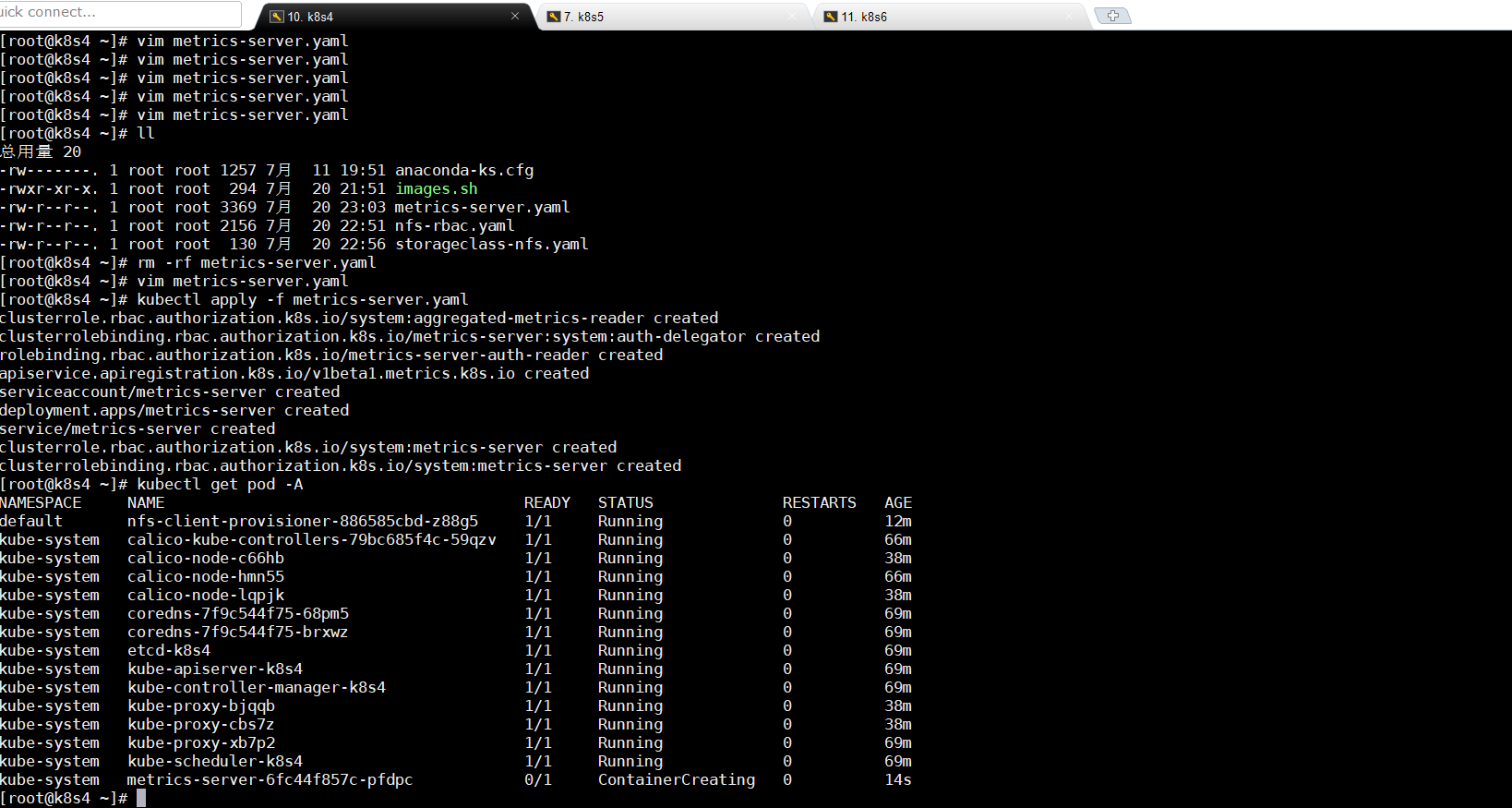

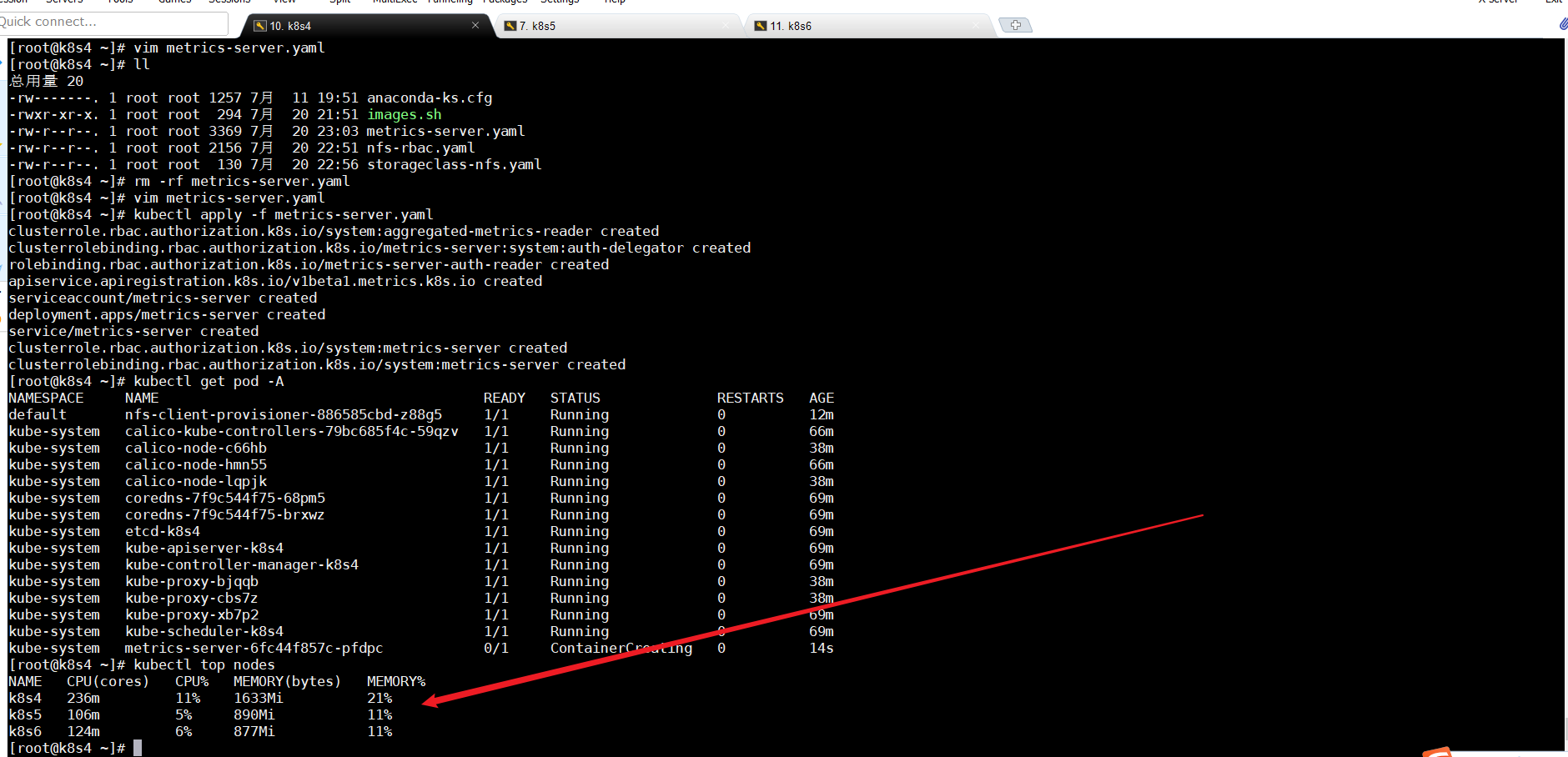
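KubeSphere's installer relies on a default StorageClass when storageClass is left empty, so it is worth confirming the patch took effect. In the `kubectl get sc` table the default class is marked with "(default)" right after its name; the sketch below extracts it. `default_storage_class` is a hypothetical helper.

```shell
#!/bin/sh
# default_storage_class: read "kubectl get sc" output on stdin and print the
# name of every class marked "(default)".
default_storage_class() {
  awk '$2 == "(default)" { print $1 }'
}

# Usage on the master node:
#   kubectl get sc | default_storage_class
```

With the class created above, the expected result is the single name storage-nfs; empty output means no default is set.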

8. Install metrics-server (master)

8.1 Prepare the metrics-server.yaml file

---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:aggregated-metrics-reader
  labels:
    rbac.authorization.k8s.io/aggregate-to-view: "true"
    rbac.authorization.k8s.io/aggregate-to-edit: "true"
    rbac.authorization.k8s.io/aggregate-to-admin: "true"
rules:
- apiGroups: ["metrics.k8s.io"]
  resources: ["pods", "nodes"]
  verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: mirrorgooglecontainers/metrics-server-amd64:v0.3.6
        imagePullPolicy: IfNotPresent
        args:
          - --cert-dir=/tmp
          - --secure-port=4443
          - --kubelet-insecure-tls
          - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        ports:
        - name: main-port
          containerPort: 4443
          protocol: TCP
        securityContext:
          readOnlyRootFilesystem: true
          runAsNonRoot: true
          runAsUser: 1000
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
      nodeSelector:
        kubernetes.io/os: linux
        kubernetes.io/arch: "amd64"
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: main-port
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  - configmaps
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system

8.2 Apply the manifest

kubectl apply -f metrics-server.yaml

kubectl get pod -A

8.3 Check the system's monitoring status

kubectl top nodes

If kubectl top nodes reports "metrics not available yet", the metrics are simply not ready; wait a little while and run it again.

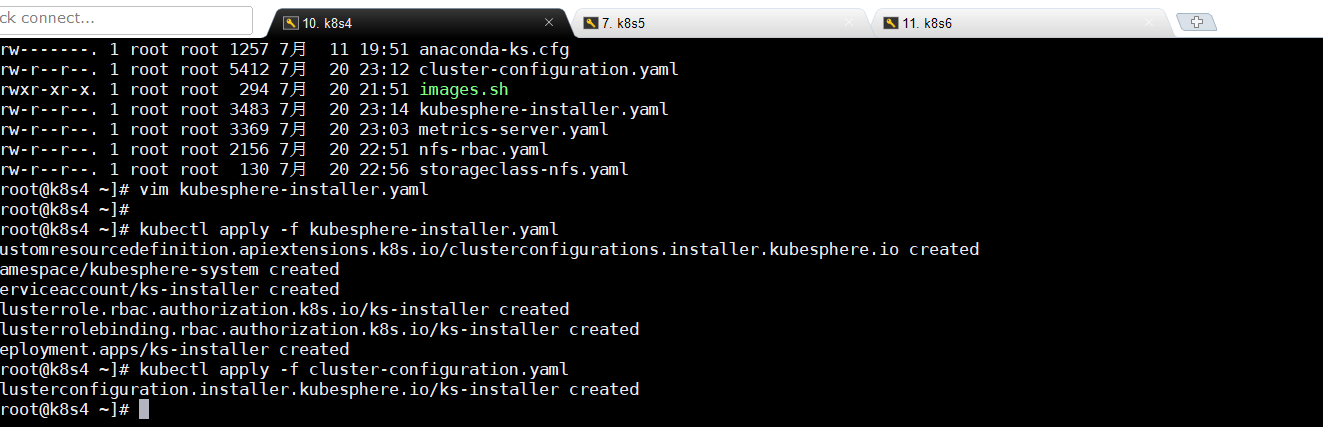
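Rather than re-typing the command until the metrics show up, a small retry loop can do the waiting. This is a generic sketch; `retry` is a hypothetical helper, and the attempt count and delay in the usage line are arbitrary values, not recommendations from the metrics-server project.

```shell
#!/bin/sh
# retry ATTEMPTS DELAY_SECONDS COMMAND...: run COMMAND until it succeeds,
# at most ATTEMPTS times, sleeping DELAY_SECONDS between attempts.
retry() {
  local attempts="$1" delay="$2" i=1
  shift 2
  while [ "$i" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Usage on the master node: try for up to two minutes.
#   retry 12 10 kubectl top nodes
```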

9. Install KubeSphere v3.0.0

Deployment documentation: https://kubesphere.com.cn/docs/quick-start/minimal-kubesphere-on-k8s/

9.1 Installation (master node)

9.1.1 Prepare cluster-configuration.yaml

vim cluster-configuration.yaml

Add the following content, changing "192.168.6.30" to your master node's IP:

---
apiVersion: installer.kubesphere.io/v1alpha1
kind: ClusterConfiguration
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    version: v3.0.0
spec:
  persistence:
    storageClass: ""        # If there is not a default StorageClass in your cluster, you need to specify an existing StorageClass here.
  authentication:
    jwtSecret: ""           # Keep the jwtSecret consistent with the host cluster. Retrieve the jwtSecret by executing "kubectl -n kubesphere-system get cm kubesphere-config -o yaml | grep -v "apiVersion" | grep jwtSecret" on the host cluster.
  etcd:
    monitoring: true        # Whether to enable etcd monitoring dashboard installation. You have to create a secret for etcd before you enable it.
    endpointIps: 192.168.6.30  # etcd cluster EndpointIps, it can be a bunch of IPs here.
    port: 2379              # etcd port
    tlsEnable: true
  common:
    mysqlVolumeSize: 20Gi # MySQL PVC size.
    minioVolumeSize: 20Gi # Minio PVC size.
    etcdVolumeSize: 20Gi  # etcd PVC size.
    openldapVolumeSize: 2Gi   # openldap PVC size.
    redisVolumSize: 2Gi # Redis PVC size.
    es:   # Storage backend for logging, events and auditing.
      # elasticsearchMasterReplicas: 1   # total number of master nodes, it's not allowed to use even number
      # elasticsearchDataReplicas: 1     # total number of data nodes.
      elasticsearchMasterVolumeSize: 4Gi   # Volume size of Elasticsearch master nodes.
      elasticsearchDataVolumeSize: 20Gi    # Volume size of Elasticsearch data nodes.
      logMaxAge: 7                     # Log retention time in built-in Elasticsearch, it is 7 days by default.
      elkPrefix: logstash              # The string making up index names. The index name will be formatted as ks-<elk_prefix>-log.
  console:
    enableMultiLogin: true  # enable/disable multiple sign-on, it allows an account can be used by different users at the same time.
    port: 30880
  alerting:                # (CPU: 0.3 Core, Memory: 300 MiB) Whether to install KubeSphere alerting system. It enables Users to customize alerting policies to send messages to receivers in time with different time intervals and alerting levels to choose from.
    enabled: true
  auditing:                # Whether to install KubeSphere audit log system. It provides a security-relevant chronological set of records,recording the sequence of activities happened in platform, initiated by different tenants.
    enabled: true
  devops:                  # (CPU: 0.47 Core, Memory: 8.6 G) Whether to install KubeSphere DevOps System. It provides out-of-box CI/CD system based on Jenkins, and automated workflow tools including Source-to-Image & Binary-to-Image.
    enabled: true
    jenkinsMemoryLim: 2Gi      # Jenkins memory limit.
    jenkinsMemoryReq: 1500Mi   # Jenkins memory request.
    jenkinsVolumeSize: 8Gi     # Jenkins volume size.
    jenkinsJavaOpts_Xms: 512m  # The following three fields are JVM parameters.
    jenkinsJavaOpts_Xmx: 512m
    jenkinsJavaOpts_MaxRAM: 2g
  events:                  # Whether to install KubeSphere events system. It provides a graphical web console for Kubernetes Events exporting, filtering and alerting in multi-tenant Kubernetes clusters.
    enabled: true
    ruler:
      enabled: true
      replicas: 2
  logging:                 # (CPU: 57 m, Memory: 2.76 G) Whether to install KubeSphere logging system. Flexible logging functions are provided for log query, collection and management in a unified console. Additional log collectors can be added, such as Elasticsearch, Kafka and Fluentd.
    enabled: true
    logsidecarReplicas: 2
  metrics_server:                    # (CPU: 56 m, Memory: 44.35 MiB) Whether to install metrics-server. IT enables HPA (Horizontal Pod Autoscaler).
    enabled: false
  monitoring:
    # prometheusReplicas: 1            # Prometheus replicas are responsible for monitoring different segments of data source and provide high availability as well.
    prometheusMemoryRequest: 400Mi   # Prometheus request memory.
    prometheusVolumeSize: 20Gi       # Prometheus PVC size.
    # alertmanagerReplicas: 1          # AlertManager Replicas.
  multicluster:
    clusterRole: none  # host | member | none  # You can install a solo cluster, or specify it as the role of host or member cluster.
  networkpolicy:       # Network policies allow network isolation within the same cluster, which means firewalls can be set up between certain instances (Pods).
    # Make sure that the CNI network plugin used by the cluster supports NetworkPolicy. There are a number of CNI network plugins that support NetworkPolicy, including Calico, Cilium, Kube-router, Romana and Weave Net.
    enabled: true
  notification:        # Email Notification support for the legacy alerting system, should be enabled/disabled together with the above alerting option.
    enabled: true
  openpitrix:          # (2 Core, 3.6 G) Whether to install KubeSphere Application Store. It provides an application store for Helm-based applications, and offer application lifecycle management.
    enabled: true
  servicemesh:         # (0.3 Core, 300 MiB) Whether to install KubeSphere Service Mesh (Istio-based). It provides fine-grained traffic management, observability and tracing, and offer visualization for traffic topology.
    enabled: true

9.1.2 Prepare kubesphere-installer.yaml

---
apiVersion: apiextensions.k8s.io/v1beta1
kind: CustomResourceDefinition
metadata:
  name: clusterconfigurations.installer.kubesphere.io
spec:
  group: installer.kubesphere.io
  versions:
  - name: v1alpha1
    served: true
    storage: true
  scope: Namespaced
  names:
    plural: clusterconfigurations
    singular: clusterconfiguration
    kind: ClusterConfiguration
    shortNames:
    - cc
---
apiVersion: v1
kind: Namespace
metadata:
  name: kubesphere-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: ks-installer
  namespace: kubesphere-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: ks-installer
rules:
- apiGroups:
  - ""
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apps
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - extensions
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - batch
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - rbac.authorization.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - apiextensions.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - tenant.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - certificates.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - devops.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - monitoring.coreos.com
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - logging.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - jaegertracing.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - admissionregistration.k8s.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - policy
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - autoscaling
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - networking.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - config.istio.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - iam.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - notification.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - auditing.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - events.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - core.kubefed.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - installer.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
- apiGroups:
  - storage.kubesphere.io
  resources:
  - '*'
  verbs:
  - '*'
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: ks-installer
subjects:
- kind: ServiceAccount
  name: ks-installer
  namespace: kubesphere-system
roleRef:
  kind: ClusterRole
  name: ks-installer
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ks-installer
  namespace: kubesphere-system
  labels:
    app: ks-install
spec:
  replicas: 1
  selector:
    matchLabels:
      app: ks-install
  template:
    metadata:
      labels:
        app: ks-install
    spec:
      serviceAccountName: ks-installer
      containers:
      - name: installer
        image: kubespheredev/ks-installer:latest
        imagePullPolicy: "Always"
        volumeMounts:
        - mountPath: /etc/localtime
          name: host-time
      volumes:
      - hostPath:
          path: /etc/localtime
          type: ""
        name: host-time

9.2 Apply the manifests

kubectl apply -f kubesphere-installer.yaml

kubectl apply -f cluster-configuration.yaml

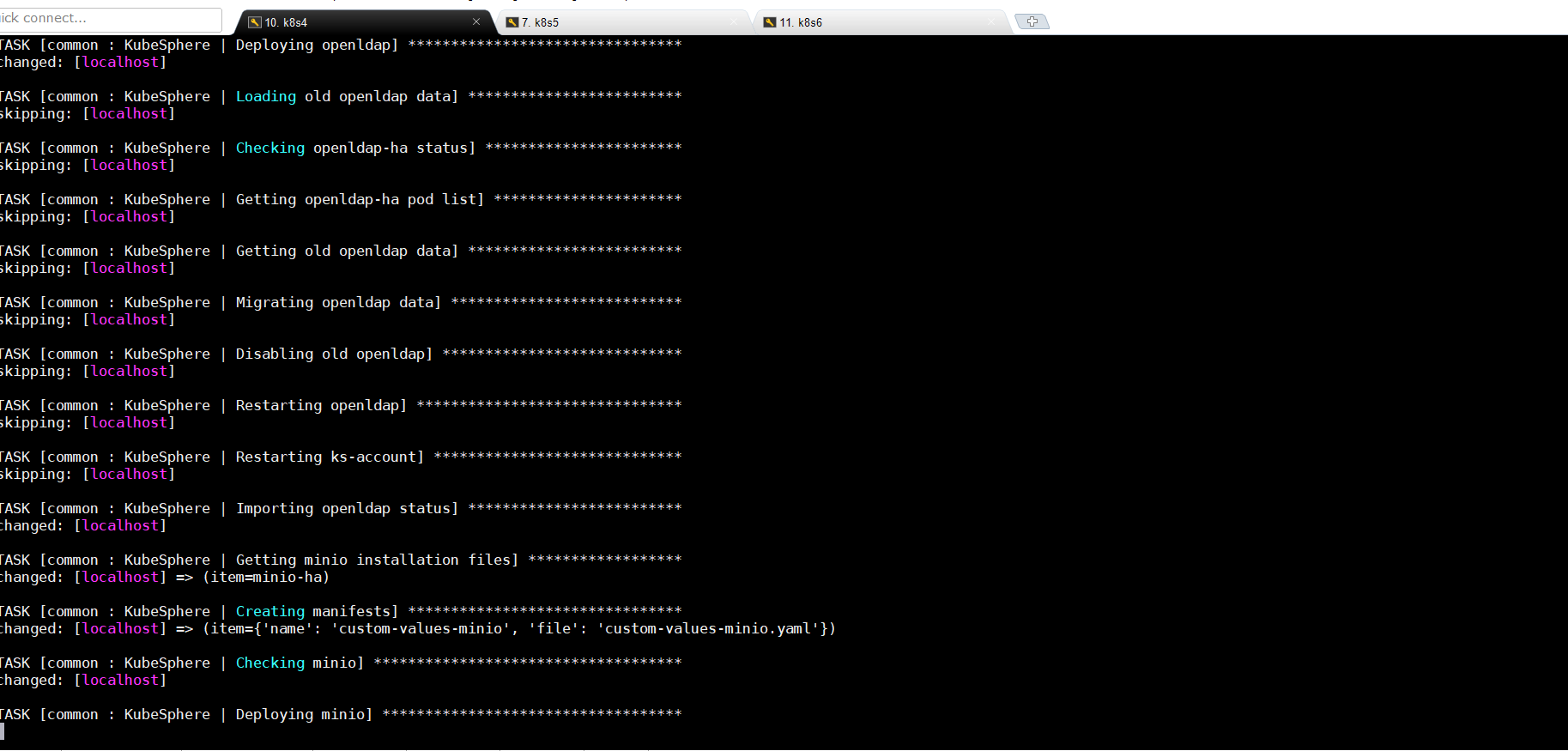

9.3 Watch the logs (a long wait)

kubectl logs -n kubesphere-system $(kubectl get pod -n kubesphere-system -l app=ks-install -o jsonpath='{.items[0].metadata.name}') -f

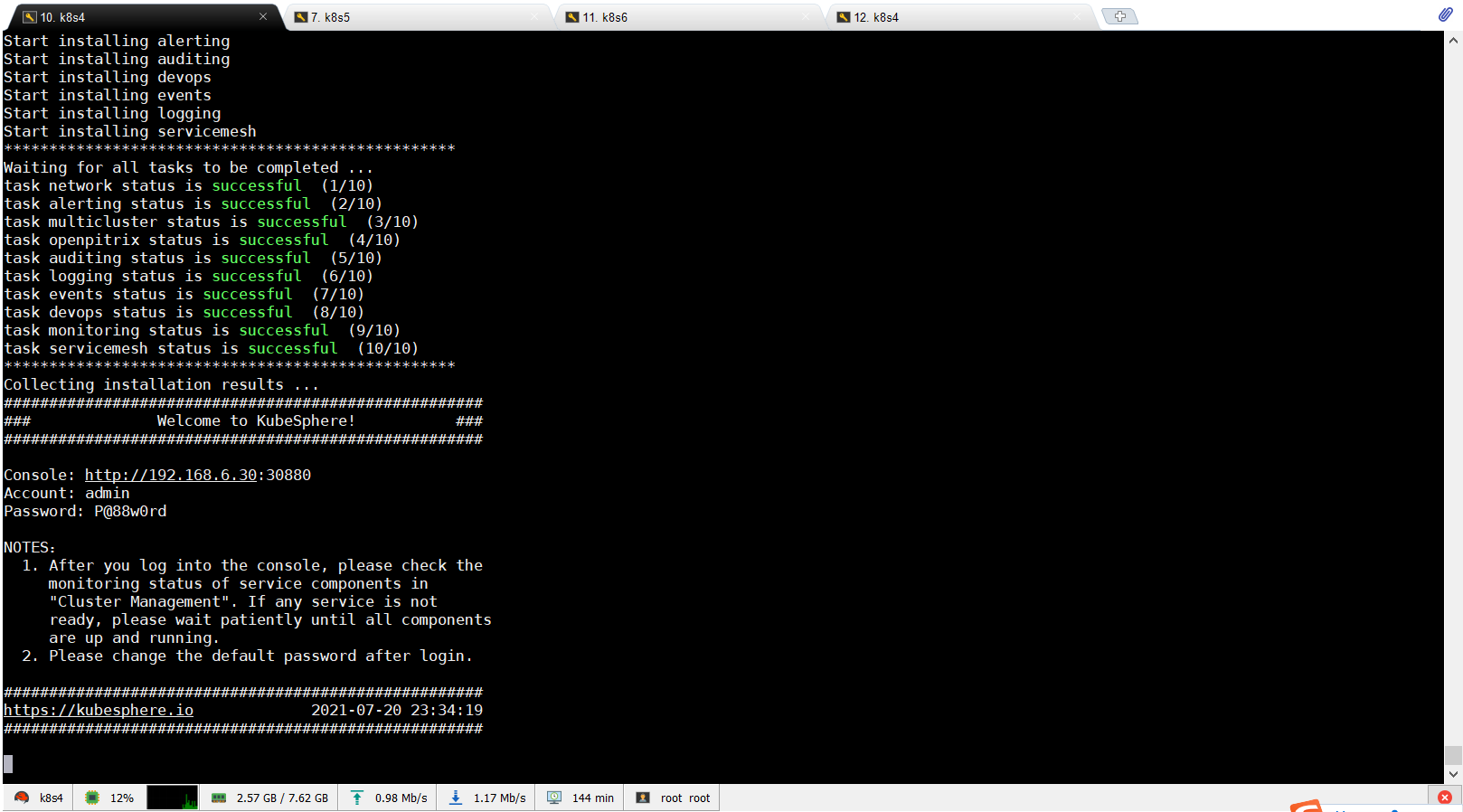

Check the pod startup status

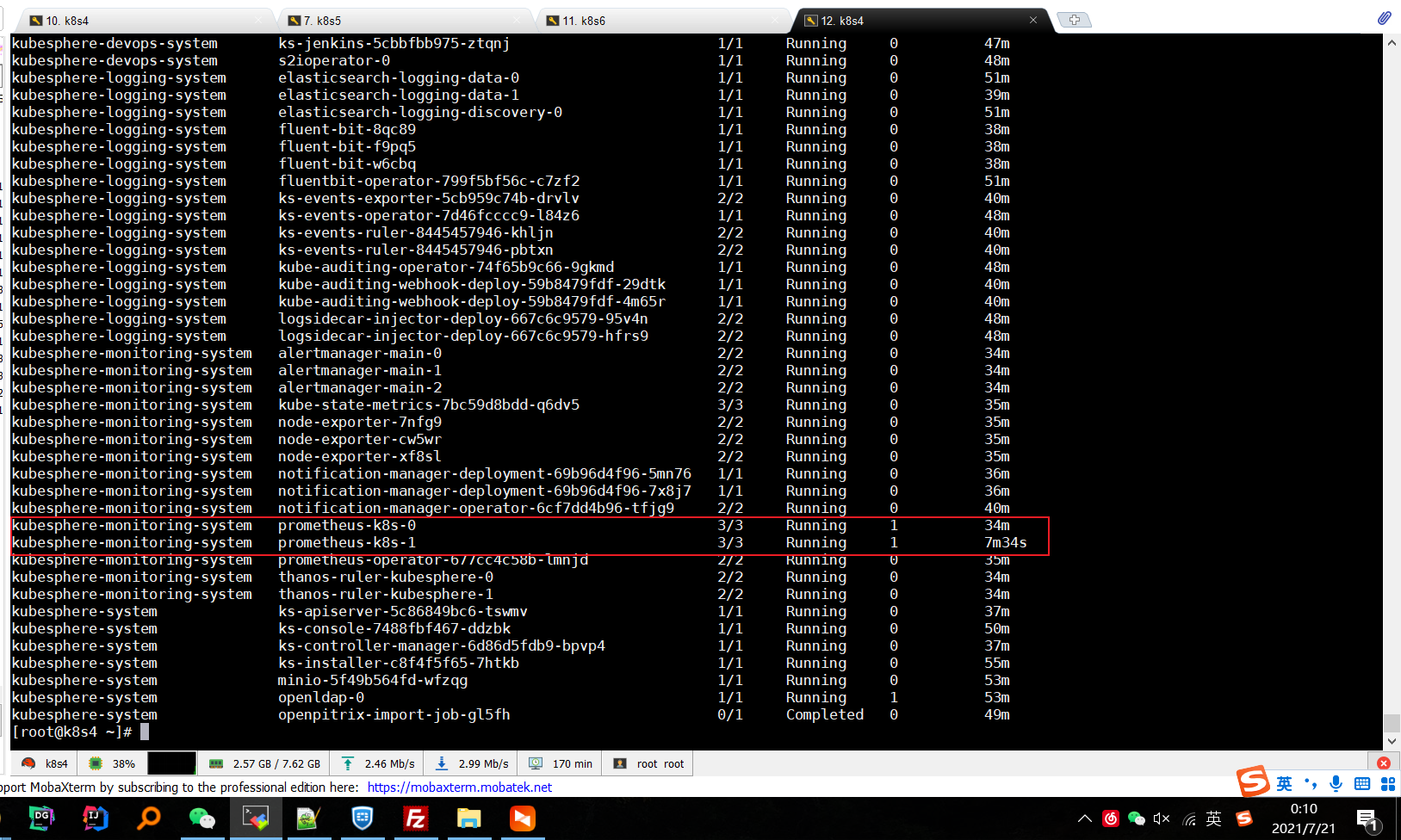

kubectl get pods -A

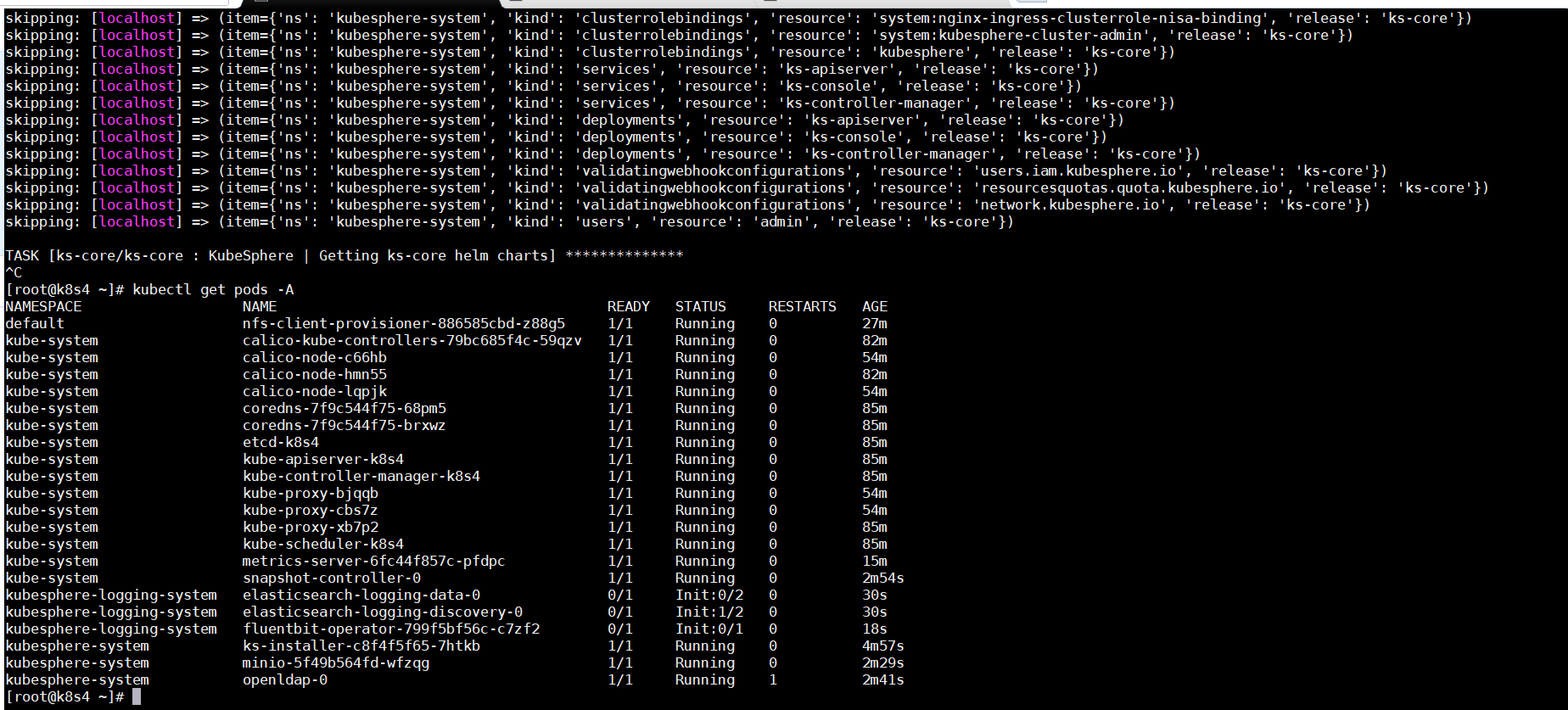

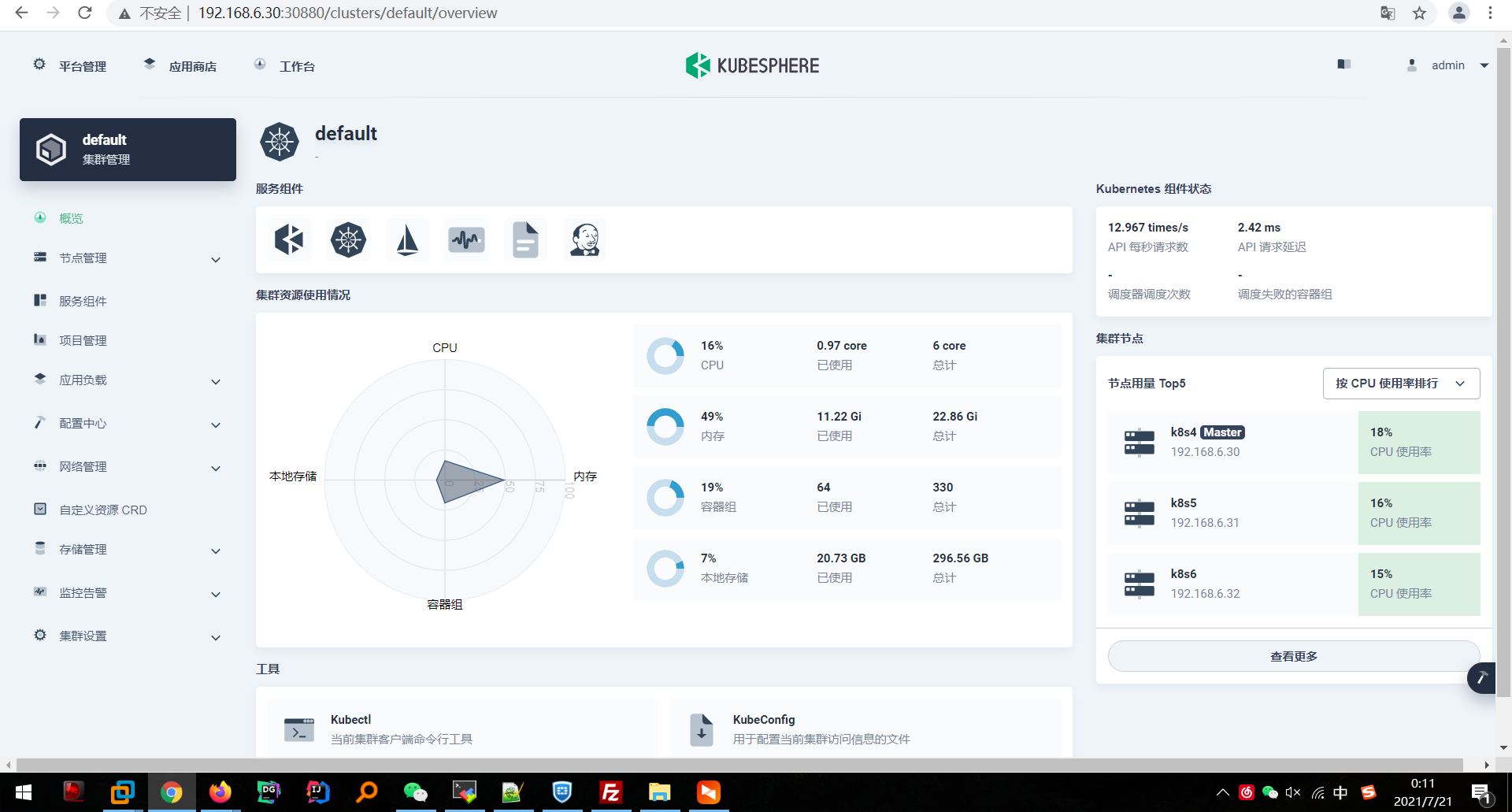

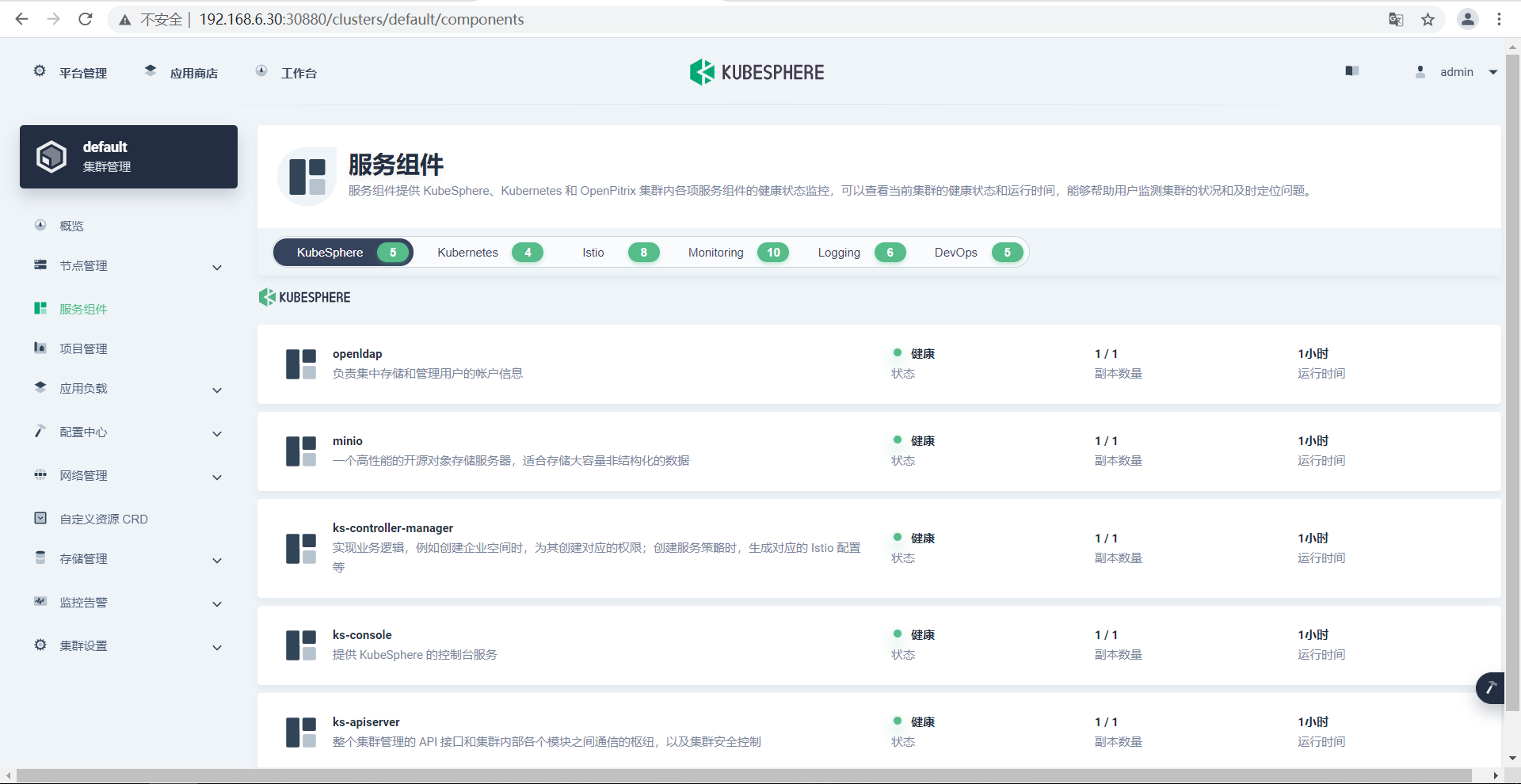

9.4 Access the console to verify the installation
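The configuration above sets the console port to 30880, so the console should answer on that port of the master node once the installer finishes. A quick reachability check from the command line might look like this; `console_url` is a hypothetical helper, and the sketch assumes the port was left at 30880 and that curl is installed.

```shell
#!/bin/sh
# console_url HOST [PORT]: print the console address (PORT defaults to 30880,
# the value of console.port in cluster-configuration.yaml).
console_url() {
  printf 'http://%s:%s\n' "$1" "${2:-30880}"
}

# Usage: print only the HTTP status code returned by the console.
#   curl -sS -o /dev/null -w '%{http_code}\n' "$(console_url 192.168.6.30)"
```

If the request succeeds, open the same address in a browser and log in with the account given in the deployment documentation linked above.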

10. Troubleshooting

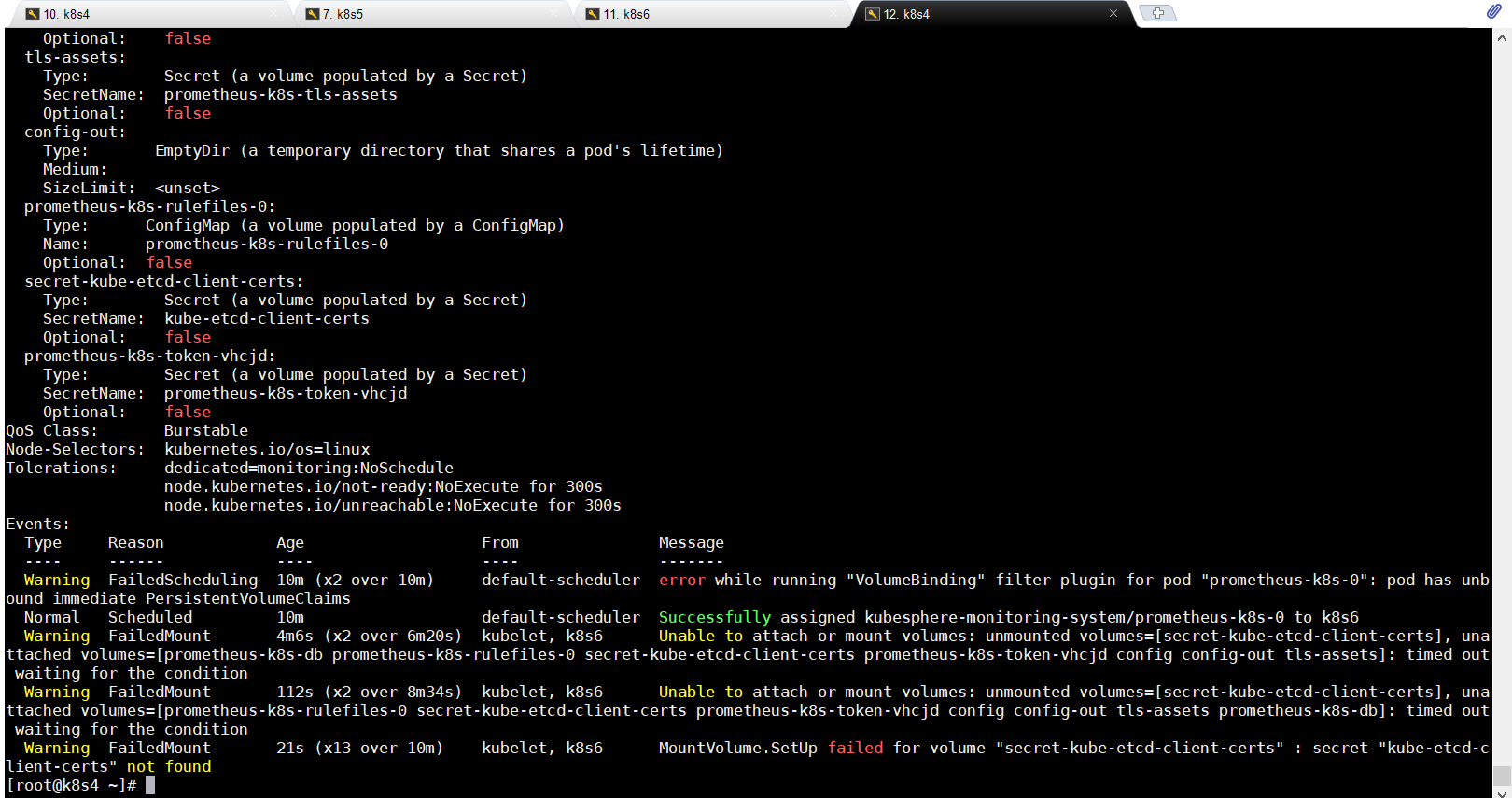

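10.1 Prometheus never reaches Running: missing certificate

If pods are still not Running after about half an hour, in particular the two monitoring pods (they provide the monitoring features), describe the pod:

kubectl describe pod prometheus-k8s-0 -n kubesphere-monitoring-system

The output says that the secret kube-etcd-client-certs, which holds the certificates, does not exist.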

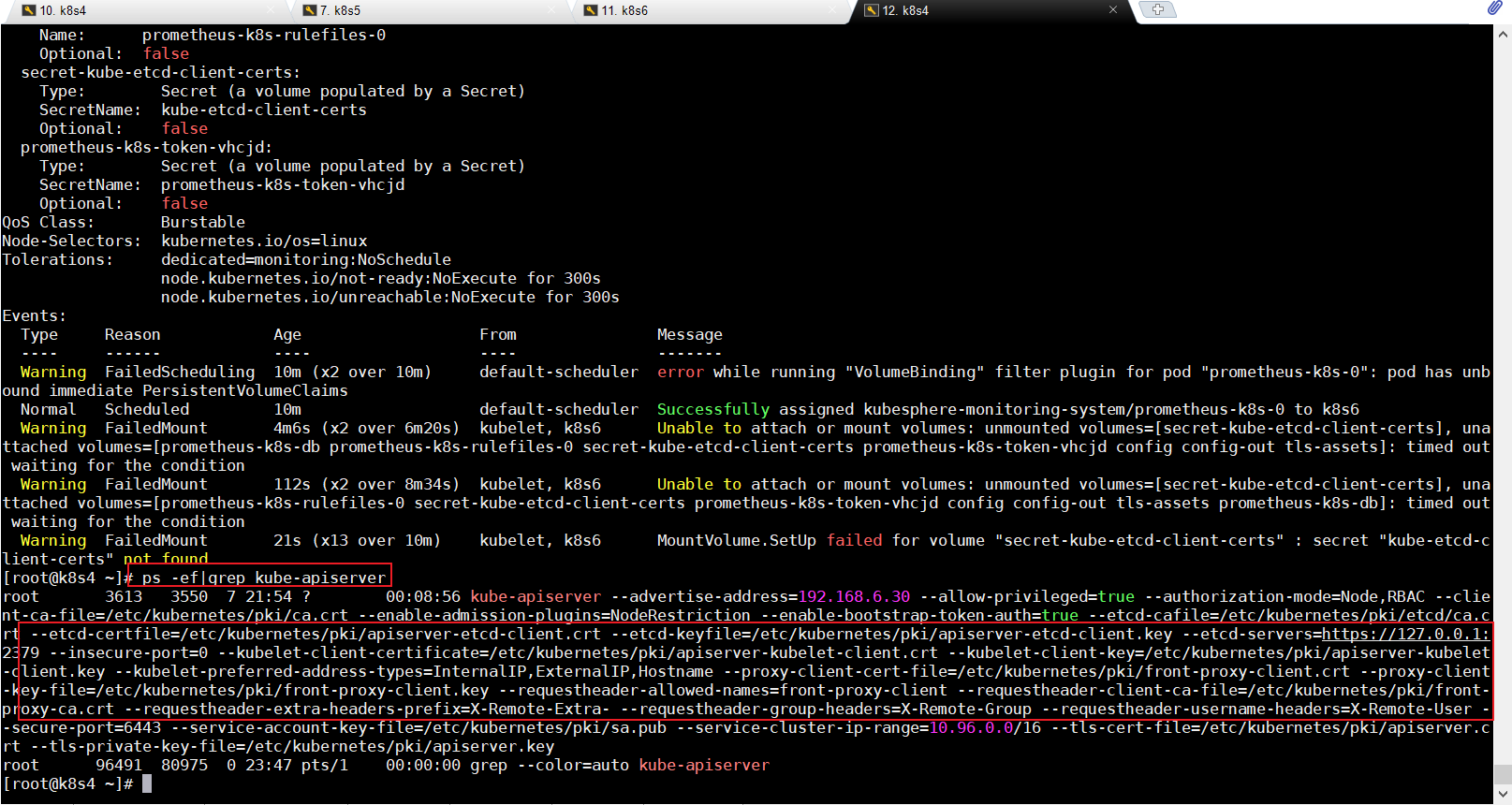

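Look at the kube-apiserver process:

ps -ef|grep kube-apiserver

The apiserver command line shows where the certificates are located.

So the certificate files do exist, but KubeSphere does not know about them; it depends on the ones already present in our system.

The certificate files are at these locations:

--etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt

--etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt

--etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key

Solution: copy the following command and run it on the master node.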

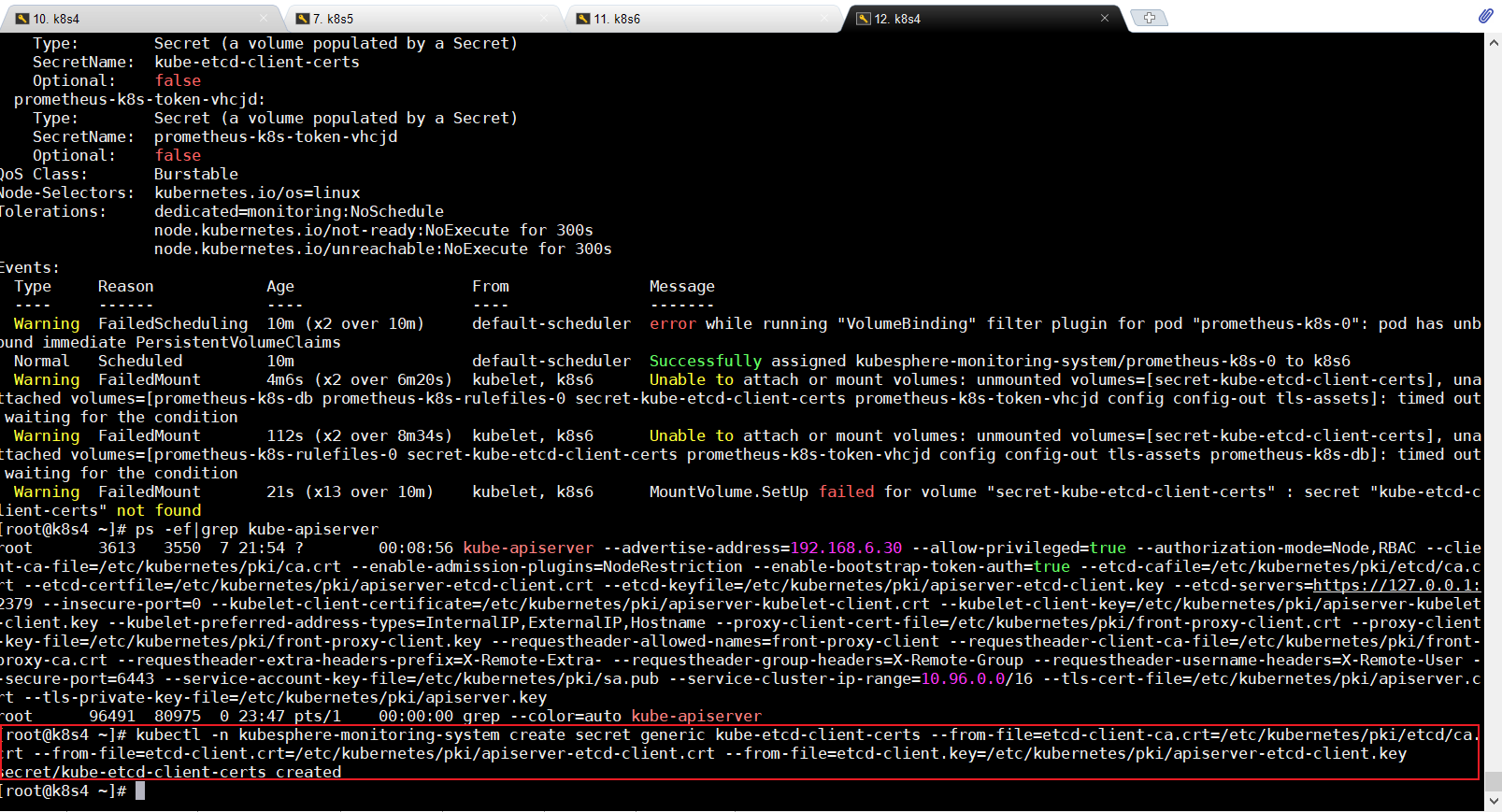

kubectl -n kubesphere-monitoring-system create secret generic kube-etcd-client-certs --from-file=etcd-client-ca.crt=/etc/kubernetes/pki/etcd/ca.crt --from-file=etcd-client.crt=/etc/kubernetes/pki/apiserver-etcd-client.crt --from-file=etcd-client.key=/etc/kubernetes/pki/apiserver-etcd-client.key

This output means the secret has been created.

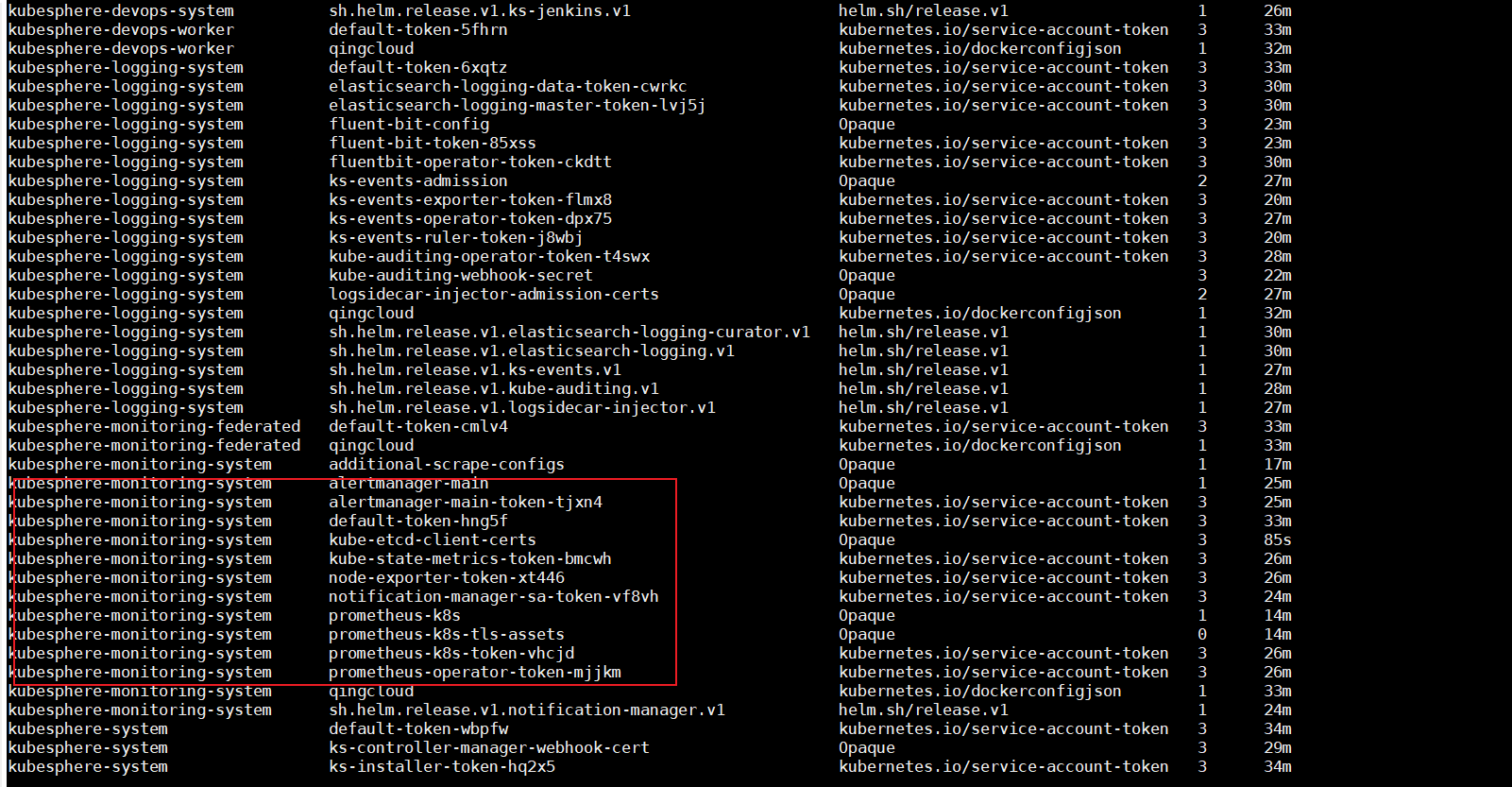
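If the create secret command fails instead, the usual cause is that one of the three files is not where the command expects it. A small pre-check can report which one; `missing_files` is a hypothetical helper, and the paths in the usage lines are the ones taken from the apiserver flags above.

```shell
#!/bin/sh
# missing_files FILE...: print "missing: PATH" for every path that is not a
# regular file; return non-zero if any file is missing.
missing_files() {
  local rc=0 f
  for f in "$@"; do
    if [ ! -f "$f" ]; then
      echo "missing: $f"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the master node, before creating the secret:
#   missing_files /etc/kubernetes/pki/etcd/ca.crt \
#       /etc/kubernetes/pki/apiserver-etcd-client.crt \
#       /etc/kubernetes/pki/apiserver-etcd-client.key
```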

You can check whether it was created with:

kubectl get secret -A    # lists every secret in the cluster

Once the certificate secret exists, prometheus recovers quickly.

If it still does not, delete the prometheus-k8s-0 pod:

kubectl delete pod prometheus-k8s-0 -n kubesphere-monitoring-system

and let it be recreated.

Then delete the prometheus-k8s-1 pod as well so that it is recreated too:

kubectl delete pod prometheus-k8s-1 -n kubesphere-monitoring-system

Questions and discussion are welcome.

