Unity 2017 Game Optimizations (Chris Dickinson 著)

1. Pursuing Performance Problems (已看)

Pursuing Performance Problems, provides an exploration of the Unity Profiler and a series of methods to profile our application, detect performance bottlenecks, and perform root cause analysis.

Scripting Strategies, deals with the best practices for our Unity C# Script code, minimizing MonoBehaviour callback overhead, improving interobject communication, and more.

3. The Benefits of Batching (已看)

The Benefits of Batching, explores Unity's Dynamic Batching and Static Batching systems, and how they can be utilized to ease the burden on the Rendering Pipeline.

Kickstart Your Art, helps you understand the underlying technology behind art assets and learn how to avoid common pitfalls with importing, compression, and encoding.

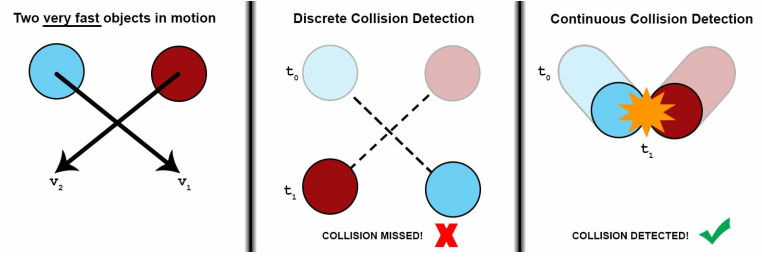

Faster Physics, is about investigating the nuances of Unity's internal Physics Engines for both 3D and 2D games, and how to properly organize our physics objects for improved performance.

6. Dynamic Graphics (已看)

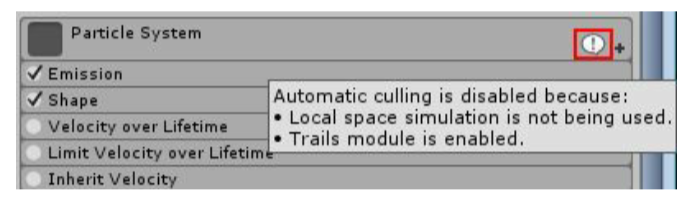

Dynamic Graphics, provides an in-depth exploration of the Rendering Pipeline, and how to improve applications that suffer rendering bottlenecks in the GPU, or CPU, how to optimize graphical effects such as lighting, shadows, and Particle Effects, ways in which to ptimize Shader code, and some specific techniques for mobile devices.

7. Virtual Velocity and Augmented Acceleration

Virtual Velocity and Augmented Acceleration, focuses on the new entertainment mediums of Virtual Reality (VR) and Augmented Reality (AR), and includes several techniques for optimizing performance that are unique to apps built for these platforms.

8. Masterful Memory Management (已看)

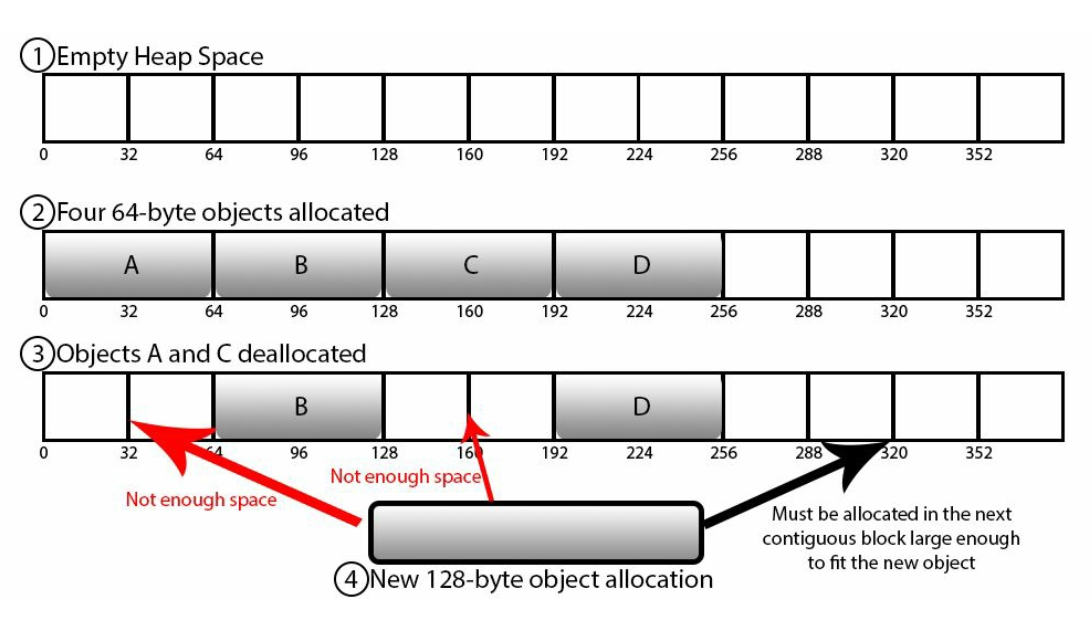

Masterful Memory Management, examines the inner workings of the Unity Engine, the Mono Framework, and how memory is managed within these components to protect our application from excessive heap allocations and runtime garbage collection.

Tactical Tips and Tricks, closes the book with a multitude of useful techniques used by Unity professionals to improve project workflow and scene management.

1. Pursuing Performance Problems

The Unity Profiler

The different subsystems it can gather data for are listed as follows:

- CPU consumption (per-major subsystem)

- Basic and detailed rendering and GPU information

- Runtime memory allocations and overall consumption

- Audio source/data usage

- Physics Engine (2D and 3D) usage

- Network messaging and operation usage

- Video playback usage

- Basic and detailed user interface performance (new in Unity 2017)

- Global Illumination statistics (new in Unity 2017)

There are generally two approaches to make use of a profiling tool: instrumentation and benchmarking (although, admittedly, the two terms are often used interchangeably).

Instrumentation typically means taking a close look into the inner workings of the application by observing the behavior of targeted function calls, where/how much memory is being allocated, and, generally getting an accurate picture of what is happening with the hope of finding the root cause of a problem. However, this is normally not an efficient way of starting to find performance problems because profiling of any application comes with a performance cost of its own.

When a Unity application is compiled in Development Mode (determined by the Development Build flag in the Build Settings menu), additional compiler flags are enabled causing the application to generate special events at runtime, which get logged and stored by the Profiler. Naturally, this will cause additional CPU and memory overhead at runtime due to all of the extraworkload the application takes on. Even worse, if the application is being profiled through the Unity Editor, then even more CPU and memory will be spent, ensuring that the Editor updates its interface, renders additional windows (such as the Scene window), and handles background tasks. This profiling cost is not always negligible. In excessively large projects, it can sometimes cause wildly inconsistent behavior when the Profiler is enabled. In some cases, the inconsistency is significant enough to cause completely unexpected behavior due to changes in event timings and potential race conditions in asynchronous behavior. This is a necessary price we pay for a deep analysis of our code's behavior at runtime, and we should always be aware of its presence.

Before we get ahead of ourselves and start analyzing every line of code in our application, it would be wiser to perform a surface-level measurement of the application. We should gather some rudimentary data and perform test scenarios during a runtime session of our game while it runs on the target hardware; the test case could simply be a few seconds of Gameplay, playback of a cut scene, a partial play through of a level, and so on. The idea of this activity is to get a general feel for what the user might experience and keep

watching for moments when performance becomes noticeably worse. Such problems may be severe enough to warrant further analysis.

This activity is commonly known as benchmarking, and the important metrics we're interested in are often the number of frames per-second (FPS) being rendered, overall memory consumption, how CPU activity behaves (looking for large spikes in activity), and sometimes CPU/GPU temperature. These are all relatively simple metrics to collect and can be used as a best first approach to performance analysis for one important reason; it will save us an enormous amount of time in the long run, since it ensures that we only

spend our time investigating problems that users would notice.

We should dig deeper into instrumentation only after a benchmarking test indicates that further analysis is required. It is also very important to benchmark by simulating actual platform behavior as much as possible if we want a realistic data sample. As such, we should never accept benchmarking data that was generated through Editor Mode as representative of real gameplay, since Editor Mode comes with some additional overhead costs thatmight mislead us, or hide potential race conditions in a real application. Instead, we should hook the profiling tool into the application while it is running in a standalone format on the target hardware

Many Unity developers are surprised to find that the Editor sometimes calculates the results of operations much faster than a standalone application does. This is particularly common when dealing with serialized data like audio files, Prefabs and Scriptable Objects. This is because the Editor will cache previously imported data and is able to access it much faster than a real application would

Launching the Profiler

Editor or standalone instances

ensure that the Development Build and Autoconnect Profiler flags are enabled

Connecting to a WebGL instance

Remote connection to an iOS device

https://docs.unity3d.com/Manual/TroubleShootingIPhone.html

Remote connection to an Android device

https://docs.unity3d.com/Manual/TroubleShootingAndroid.html

Editor profiling

We can profile the Editor itself. This is normally used when trying to profile the performance of custom Editor Scripts.

The Profiler window

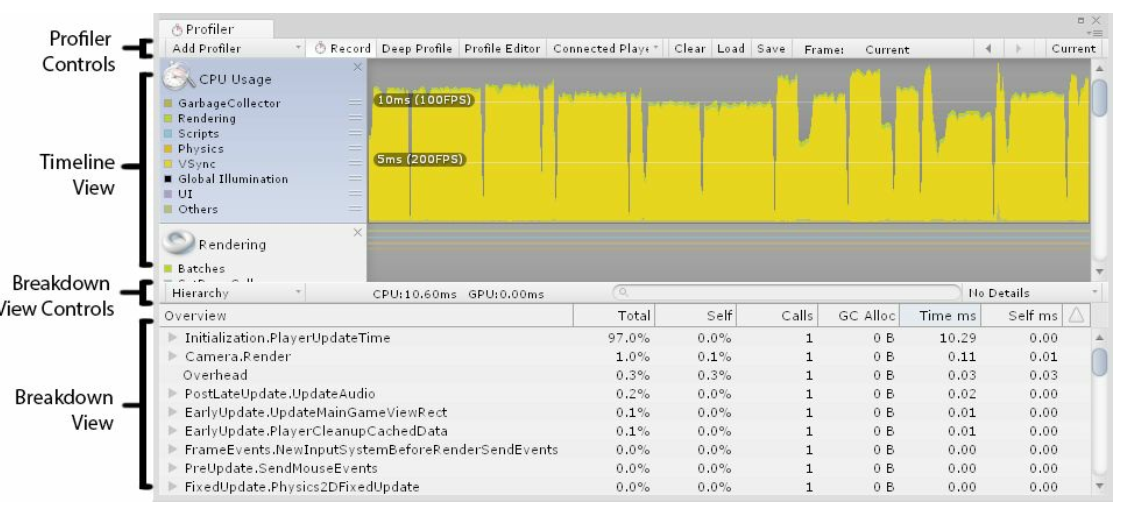

The Profiler window is split into four main sections

- Profiler Controls

- Timeline View

- Breakdown View Controls

- Breakdown View

Profiler controls

Add Profiler

Record

Deep Profile

Enabling the Deep Profile option re-compiles our scripts with much deeper level of instrumentation, allowing it to measure each and every invoked method

Profile Editor

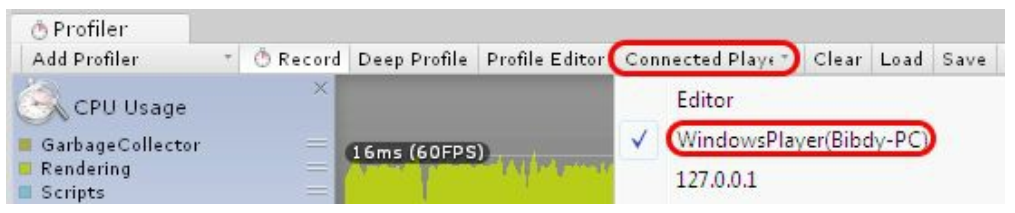

Connected Player

Clear

Load

Save

Frame Selection

Timeline View

Breakdown View Controls

Breakdown View

The CPU Usage Area

Hierarchy mode reveals most callstack invocations, while grouping similar data elements and global Unity function calls together for convenience. For instance, rendering delimiters, such as BeginGUI() and EndGUI() calls, are combined together in this mode. Hierarchy mode is helpful as an initial first step to determine which function calls cost the most CPU time to execute.

Raw Hierarchy mode is similar to Hierarchy mode, except it will separate global Unity function calls into separate entries rather than being combined into one bulk entry. This will tend to make the Breakdown View more difficult to read, but may be helpful if we're trying to count how many times a particular global method is invoked or determining whether one of these calls is costing more CPU/memory than expected. For example, each BeginGUI() and EndGUI() calls will be separated into different entries, making it more clear how many times each is being called compared to the Hierarchy mode.

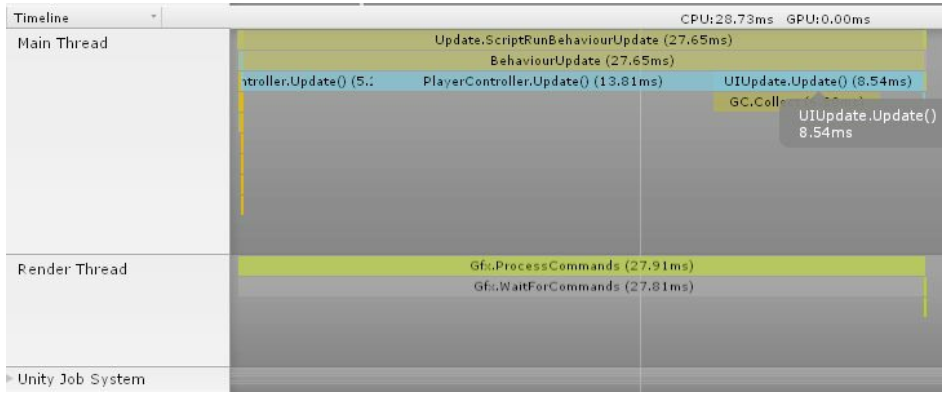

Perhaps, the most useful mode for the CPU Usage Area is the Timeline mode option (not to be confused with the main Timeline View). This mode organizes CPU usage during the current frame by how the call stack expanded and contracted during processing.

Timeline mode organizes the Breakdown View vertically into different sections that represent different threads at runtime, such as Main Thread, Render Thread, and various background job threads called Unity Job System,used for loading activity such as scenes and other assets. The horizontal axis represents time, so wider blocks are consuming more CPU time than narrower blocks. The horizontal size also represents relative time, making it easy to compare how much time one function call took compared to another. The vertical axis represents the callstack, so deeper chains represent more calls in the callstack at that time.

Under Timeline mode, blocks at the top of the Breakdown View are functions (or technically, callbacks) called by the Unity Engine at runtime (such as Start(), Awake(), or Update() ), whereas blocks underneath them are functions that those functions had called into, which can include functions on other Components or regular C# objects.

The Timeline mode offers a very clean and organized way to determine which particular method in the callstack consumes the most time and how that processing time measures up against other methods being called during the same frame. This allows us to gauge the method that is the biggest cause of performance problems with minimal effort.

For example, let's assume that we are looking at a performance problem in the following screenshot. We can tell, with a quick glance, that there are three methods that are causing a problem, and they each consume similar amounts of processing time, due to their similar widths:

In the previous screenshot, we have exceeded our 16.667 millisecond budget with calls to three different MonoBehaviour Components. The good news is that we have three possible methods through which we can find performance improvements, which means lots of opportunities to find code that can be improved. The bad news is that increasing the performance of one method will only improve about one-third of the total processing for that frame. Hence, all three methods may need to be examined and optimized in order get back under budget.

The GPU Usage Area

The GPU Usage Area is similar to the CPU Usage Area, except that it shows method calls and processing time as it occurs on the GPU. Relevant Unity method calls in this Area will relate to cameras, drawing, opaque and transparent geometry, lighting and shadows, and so on.

The GPU Usage Area offers hierarchical information similar to the CPU Usage Area and estimates time spent calling into various rendering functions such as Camera.Render() (provided rendering actually occurs during the frame currently selected in the Timeline View).

The Rendering Area

The Rendering Area provides some generic rendering statistics that tend to focus on activities related to preparing the GPU for rendering, which is a set of activities that occur on the CPU (as opposed to the act of rendering, which is activity handled within the GPU and is detailed in the GPU Usage Area). The Breakdown View offers useful information, such as the number of SetPass calls (otherwise known as Draw Calls), the total number of batches used to render the Scene, the number of batches saved from Dynamic Batching and Static Batching and how they are being generated, as well as memory consumed for textures.

The Memory Area

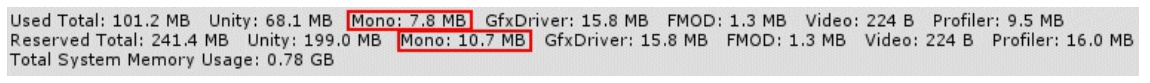

Simple mode provides only a high-level overview of memory consumption of subsystems. This include Unity's low-level Engine, the Mono framework (total heap size that is being watched by the Garbage Collector), graphical assets, audio assets and buffers, and even memory used to store data collected nby the Profiler.

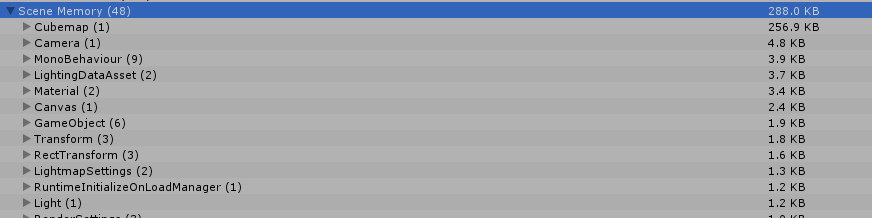

Detailed mode shows memory consumption of individual GameObjects and MonoBehaviours for both their Native and Managed representations. It also has a column explaining the reason why an object may be consuming memory and when it might be deallocated

The Audio Area

The Audio Area grants an overview of audio statistics and can be used both to measure CPU usage from the audio system and total memory consumed by Audio Sources (both for those that are playing or paused) and Audio Clips.

The Breakdown View provides lots of useful insight into how the Audio System is operating and how various audio channels and groups are being used.

The Physics 3D and Physics 2D Areas

There are two different Physics Areas, one for 3D physics (Nvidia's PhysX) and another for the 2D physics system (Box2D). This Area provides various physics statistics, such as Rigidbody, Collider, and Contact counts

The Network Messages and Network Operations Areas

These two Areas provide information about Unity's Networking System, which was introduced during the Unity 5 release cycle. The information present will depend on whether the application is using the High-Level API (HLAPI) or Transport Layer API (TLAPI) provided by Unity. The HLAPI is a more easy-to-use system for managing Player and GameObject network synchronization automatically, whereas the TLAPI is a thin layer that operates just above the socket level, allowing Unity developers to conjure up their own networking system

The Video Area

If our application happens to make use of Unity's VideoPlayer API, then we might find this Area useful for profiling video playback behavior

The UI and UI Details Areas

These Areas are new in Unity 2017 and provide insight into applications making use of Unity's built-in User Interface System.

The Global Illumination Area

The Global Illumination Area is another new Area in Unity 2017, and gives us a fantastic amount of detail into Unity's Global Illumination (GI) system

Best approaches to performance analysis

Verifying script presence

Sometimes, there are things we expect to see, but don't. These are usually easy to spot because the human brain is very good at pattern recognition and spotting differences we didn't expect. Meanwhile, there are times where we assume that something has been happening, but it didn't. These are generally more difficult to notice, because we're often scanning for the first kind of problem, and we’re assuming that the things we don’t see are working as intended. In the context of Unity, one problem that manifests itself this way is

verifying that the scripts we expect to be operating are actually present in the Scene

Verifying script count

Preventing casual mistakes such as this is essential for good productivity, since experience tells us that if we don't explicitly disallow something, then someone, somewhere, at some point, for whatever reason, will do it anyway. This is likely to cost us a frustrating afternoon hunting down a problem that eventually turned out to be caused by human-error

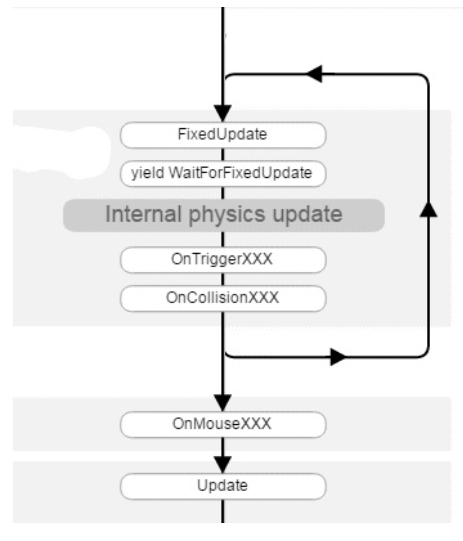

Verifying the order of events

Unity applications mostly operate as a series of callbacks from Native code to Managed code

Minimizing ongoing code changes

Minimizing internal distractions

Vertical Sync (otherwise known as VSync) is used to match the application's frame rate to the frame rate of the device it is being displayed to, for example, a monitor may run at 60 Hertz (60 cycles per-second), and if a rendering loop in our game is running faster than this then it will sit and wait until that time has elapsed before outputting the rendered frame. This feature reduces screen-tearing which occurs when a new image is pushed to the monitor before the previous image was finished, and for a brief moment part of the

new image overlaps the old image.

Executing the Profiler with VSync enabled will probably generate a lot of noisy spikes in the CPU Usage Area under the WaitForTargetFPS heading, asthe application intentionally slows itself down to match the frame rate of the display. These spikes often appear very large in Editor Mode since the Editor is typically rendering to a very small window, which doesn’t take a lot of CPU or GPU work to render.

This will generate unnecessary clutter, making it harder to spot the real issue(s). We should ensure that we disable the VSync checkbox under the CPU Usage Area when we're on the lookout for CPU spikes during performance tests. We can disable the VSync feature entirely by navigating to Edit | Project Settings | Quality and then to the sub-page for the currently selected platform.

We should also ensure that a drop in performance isn't a direct result of a massive number of exceptions and error messages appearing in the Editor Console window. Unity's Debug.Log() and similar methods, such as Debug.LogError() and Debug.LogWarning() are notoriously expensive in terms of CPU usage and heap memory consumption, which can then cause garbage collection to occur and even more lost CPU cycles (refer to Chapter 8, Masterful Memory Management, for more information on these topics).

Minimizing external distractions

Targeted profiling of code segments

Profiler script control

The Profiler can be controlled in script code through the Profiler class

Custom CPU Profiling

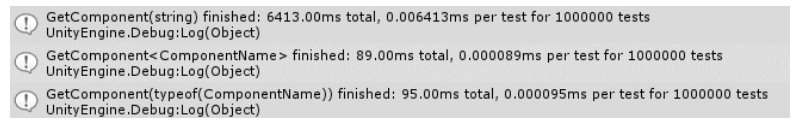

using System; using System.Diagnostics; public class CustomTimer : IDisposable { private string _timerName; private int _numTests; private Stopwatch _watch; // give the timer a name, and a count of the // number of tests we're running public CustomTimer(string timerName, int numTests) { _timerName = timerName; _numTests = numTests; if (_numTests <= 0) { _numTests = 1; } _watch = Stopwatch.StartNew(); } // automatically called when the 'using()' block ends public void Dispose() { _watch.Stop(); float ms = _watch.ElapsedMilliseconds; UnityEngine.Debug.Log(string.Format("{0} finished: {1:0.00} " + "milliseconds total, {2:0.000000} milliseconds per-test " + "for {3} tests", _timerName, ms, ms / _numTests, _numTests)); } } const int numTests = 1000; using (new CustomTimer("My Test", numTests)) { for(int i = 0; i < numTests; ++i) { TestFunction(); } } // the timer's Dispose() method is automatically called here

There are three things to note when using this approach.

Firstly, we are onlymaking an average of multiple method invocations. If processing time varies enormously between invocations, then that will not be well represented in the final average.

Secondly, if memory access is common, then repeatedly requesting the same blocks of memory will result in an artificially higher cache hit rate (where the CPU can find data in memory very quickly because it's accessed the same region recently), which will bring the average time down when compared to a typical invocation.

Thirdly, the effects of Just-In-Time (JIT) compilation will be effectively hidden for similarly artificial reasons, as it only affects the first invocation of the method.

Unity has a significant startup cost when a Scene begins, given the amount of data that needs to be loaded from disk, the initialization of complex subsystems, such as the Physics and Rendering Systems, and the number of calls to various Awake() and Start() callbacks that need to be resolved before anything else can happen

Final thoughts on Profiling and Analysis

One way of thinking about performance optimization is the act of stripping away unnecessary tasks that spend valuable resources

Understanding the Profiler

Don't let the Profiler trick us into thinking that big spikes are always bad. As always, it's only important if the user will notice it

Reducing noise

The classical definition of noise (at least in the realm of computer science) is meaningless data, and a batch of profiling data that was blindly captured with no specific target in mind is always full of data that won't interest us. More sources of data takes more time to mentally process and filter, which can be very distracting. One of the best methods to avoid this is to simply reduce the amount of data we need to process by stripping away any data deemed nonvital to the current situation

Focusing on the issue

Focus is the skill of not letting ourselves become distracted by inconsequential tasks and wild goose chases

Summary

2. Scripting Strategies

In this chapter, we will explore ways of applying performance enhancements to the following areas:

- Accessing Components

- Component callbacks (Update(), Awake(), and so on)

- Coroutines

- GameObject and Transform usage

- Interobject communication

- Mathematical calculations

- Deserialization such as Scene and Prefab loading

Obtain Components using the fastest method

Remove empty callback definitions

https://docs.unity3d.com/Manual/ExecutionOrder.html

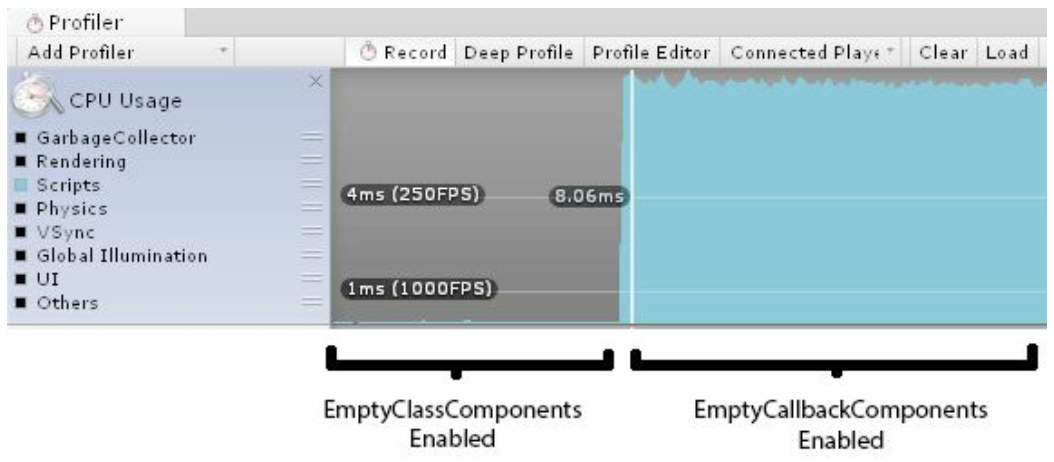

However, it is important to realize that Unity will hook into these callbacks even if the function body is empty

Cache Component references

private Rigidbody rigidbody; void Awake() { rigidbody = GetComponent<Rigidbody>(); } void Update() { rigidbody.xxx; }

Share calculation output

Update, Coroutines, and InvokeRepeating

Coroutines run on the main thread in a sequential manner such that only one Coroutine is handled at any given moment, and each Coroutine decides when to pause and resume via yield statements

Faster GameObject null reference checks

if (!System.Object.ReferenceEquals(gameObject, null)) { // do something }

Avoid retrieving string properties from GameObjects

Use appropriate data structures

Avoid re-parenting Transforms at runtime

GameObject.Instantiate(Object original, Transform parent);

transform.hierarchyCapacity;

Consider caching Transform changes

Avoid Find() and SendMessage() at runtime

Assigning references to pre-existing objects

Static Classes

using UnityEngine; public class EnemyCreatorComponent : MonoBehaviour { [SerializeField] private int _numEnemies; [SerializeField] private GameObject _enemyPrefab; [SerializeField] private EnemyManagerComponent _enemyManager; void Start() { for (int i = 0; i < _numEnemies; ++i) { CreateEnemy(); } } p ublic void CreateEnemy() { _enemyManager.CreateEnemy(_enemyPrefab); } }

Singleton Components

using UnityEngine; public class SingletonComponent<T> : MonoBehaviour where T : SingletonComponent<T> { private static T __Instance; protected static SingletonComponent<T> _Instance { get { if (!__Instance) { T[] managers = GameObject.FindObjectsOfType(typeof(T)) as T[]; if (managers != null) { if (managers.Length == 1) { __Instance = managers[0]; return __Instance; } else if (managers.Length > 1) { Debug.LogError("You have more than one " + typeof(T).Name + " in the Scene. You only need " + "one - it's a singleton!"); for (int i = 0; i < managers.Length; ++i) { T manager = managers[i]; Destroy(manager.gameObject); } } } GameObject go = new GameObject(typeof(T).Name, typeof(T)); __Instance = go.GetComponent<T>(); DontDestroyOnLoad(__Instance.gameObject); } return __Instance; } set { __Instance = value as T; } } } public class EnemyManagerSingletonComponent : SingletonComponent<EnemyManagerSingletonComponent> public static EnemyManagerSingletonComponent Instance { get { return ((EnemyManagerSingletonComponent)_Instance); } set { _Instance = value; } } public void CreateEnemy(GameObject prefab) { // same as StaticEnemyManager } public void KillAll() { // same as StaticEnemyManager } }

A global Messaging System

public class Message { public string type; public Message() { type = this.GetType().Name; } }

Moving on to our MessageSystem class, we should define its features by what kind of requirements we need it to fulfill:

- It should be globally accessible

- Any object (MonoBehaviour or not) should be able to register/deregister as linsteners to receive specific message types(that is, the Observer design pattern)

- Registering objects should provide a method to call when the given message is broadcasted from elsewhere

- The system should send the message to all listeners within a reasonable time frame, but not choke on too many requests at once

A globally accessible objectRegistration

Registration

public delegate bool MessageHandlerDelegate(Message message);

Message processing

Implementing the Messaging System

using System.Collections.Generic; using UnityEngine; public class MessagingSystem : SingletonComponent<MessagingSystem> { public static MessagingSystem Instance { get { return ((MessagingSystem)_Instance); } set { _Instance = value; } } private Dictionary<string, List<MessageHandlerDelegate>> _listenerDict = new Dictionary<string, List<MessageHandlerDelegate>>(); public bool AttachListener(System.Type type, MessageHandlerDelegate handler) { if (type == null) { Debug.Log("MessagingSystem: AttachListener failed due to having no " + "message type specified"); return false; } string msgType = type.Name; if (!_listenerDict.ContainsKey(msgType)) { _listenerDict.Add(msgType, new List<MessageHandlerDelegate>()); } List<MessageHandlerDelegate> listenerList = _listenerDict[msgType]; if (listenerList.Contains(handler)) { return false; // listener already in list } listenerList.Add(handler); return true; } }

Message queuing and processing

private Queue<Message> _messageQueue = new Queue<Message>(); public bool QueueMessage(Message msg) { if (!_listenerDict.ContainsKey(msg.type)) { return false; } _messageQueue.Enqueu(msg); return true; } private const int _maxQueueProcessingTime = 16667; private System.Diagnostics.Stopwatch timer = new System.Diagnostics.Stopwatch(); void Update() { timer.Start(); while (_messageQueue.Count > 0) { if (_maxQueueProcessingTime > 0.0f) { if (timer.Elapsed.Milliseconds > _maxQueueProcessingTime) { timer.Stop(); return; } } Message msg = _messageQueue.Dequeue(); if (!TriggerMessage(msg)) { Debug.Log("Error when processing message: " + msg.type); } } } public bool TriggerMessage(Message msg) { string msgType = msg.type; if (!_listenerDict.ContainKey(msgType)) { Debug.Log("MessagingSystem: Message \"" + msgType + "\" has no listeners!"); return false; } List<MessageHandlerDelegate> listenerList = _listenerDict[msgType]; for (int i = 0; i < listenerList.Count; ++i) { if (listenerList[i](msg)) { return true; } } return true; }

Implementing custom messages

public class CreateEnemyMessage : Message { } public class EnemyCreatedMessage : Message { public readonly GameObject enemyObject; public readonly string enemyName; public EnemyCreatedMessage(GameObject enemyObject, string enemyName) { this.enemyObject = enemyObject; this.enemyName = enemyName; } }

Message sending

public class EnemyCreatorComponent : MonoBehaviour { void Update() { if (Input.GetKeyDown(KeyCode.Space)) { MessagingSystem.Instance.QueueMessage(new CreateEnemyMessage()); } } }

Message registration

public class EnemyManagerWithMessagesComponent : MonoBehaviour { private List<GameObject> _enemies = new List<GameObject>(); [SerializeField] private GameObject _enemyPrefab; void Start() { MessagingSystem.Instance.AttachListener(typeof(CreateEnemyMessage),this.HandleCreateEnemy); } bool HandleCreateEnemy(Message msg) { CreateEnemyMessage castMsg = msg as CreateEnemyMessage; string[] names = { "Tom", "Dick", "Harry" }; GameObject enemy = GameObject.Instantiate(_enemyPrefab,5.0f * Random.insideUnitSphere,Quaternion.identity); string enemyName = names[Random.Range(0, names.Length)]; enemy.gameObject.name = enemyName; _enemies.Add(enemy); MessagingSystem.Instance.QueueMessage(new EnemyCreatedMessage(enemy,enemyName)); return true; } } public class EnemyCreatedListenerComponent : MonoBehaviour { void Start() { MessagingSystem.Instance.AttachListener(typeof(EnemyCreatedMessage), HandleEnemyCreated); } bool HandleEnemyCreated(Message msg) { EnemyCreatedMessage castMsg = msg as EnemyCreatedMessage; Debug.Log(string.Format("A new enemy was created! {0}", castMsg.enemyName)); return true; } }

Message cleanup

public bool DetachListener(System.Type type, MessageHandlerDelegate handler) { if (type == null) { Debug.Log("MessagingSystem: DetachListener failed due to having no " + "message type specified"); return false; } string msgType = type.Name; if (!_listenerDict.ContainsKey(type.Name)) { return false; } List<MessageHandlerDelegate> listenerList = _listenerDict[msgType]; if (!listenerList.Contains(handler)) { return false; } listenerList.Remove(handler); return true; } void OnDestroy() { if (MessagingSystem.IsAlive) { MessagingSystem.Instance.DetachListener(typeof(EnemyCreatedMessage),this.HandleCreateEnemy); } }

Wrapping up the Messaging System

Disable unused scripts and objects

Disabling objects by visibility

void OnBecameVisible() { enabled = true; } void OnBecameInvisible() { enabled = false; } void OnBecameVisible() { gameObject.SetActive(true); } void OnBecameInvisible() { gameObject.SetActive(false); }

Disabling objects by distance

[SerializeField] GameObject _target; [SerializeField] float _maxDistance; [SerializeField] int _coroutineFrameDelay; void Start() { StartCoroutine(DisableAtADistance()); } IEnumerator DisableAtADistance() { while (true) { float distSqrd = (transform.position - _target.transform.position).sqrMagnitude; if (distSqrd < _maxDistance * _maxDistance) { enabled = true; } else { enabled = false; } for(int i = 0; i < _coroutineFrameDelay; ++i) { yield return new WaitForEndOfFrame(); } } }

Consider using distance-squared over distance

Minimize Deserialization behavior

Unity's Serialization system is mainly used for Scenes, Prefabs,ScriptableObjects and various Asset types(which tend to derive from ScriptableObject).

When one of these object types is saved to disk, it is converted into a text file using the Yet Another Markup Language (YAML) format, which can be deserialized back into the original object type at a later time.

All GameObjects and their properties get serialized when a Prefab or Scene is serialized, including private and protected fields, all of their Components as well as its child GameObjects and their Components, and so on.

When our application is built, this serialized data is bundled together in large binary data files internally called Serialized Files in Unity.

Reading and deserializing this data from disk at runtime is an incredibly slow process (relatively speaking) and so all deserialization activity comes with a significant performance cost.

This kind of deserialization takes place any time we call Resources.Load() for a file path found under a folder named Resources.

Once the data has been loaded from disk into memory, then reloading the same reference later is much faster, but disk activity is always required the first time it is accessed.

Naturally, the larger the data set we need to deserialize, the longer this process takes.

Since every Component of a Prefab gets serialized, then the deeper the hierarchy is, the more data needs to be deserialized.

This can be a problem for Prefabs with very deep hierarchies, Prefabs with many empty GameObjects (since every GameObject always contains at least a Transform Component), and particularly problematic for User Interface(UI) Prefabs, since they tend to house many more Components than a typical Prefab.

Loading in large serialized data sets like these could cause a significant spike in CPU the first time they are loaded, which tend to increase loading time if they're needed immediately at the start of the Scene.

More importantly, they can cause frame drops if they are loaded at runtime.

There are a couple ofapproaches we can use to minimize the costs of deserialization.

Reduce serialized object size

Load serialized objects asynchronously

Keep previously loaded serialized objects in memory

Move common data into ScriptableObjects

Load scenes additively and asynchronously

Create a custom Update() layer

Earlier in this chapter, in the "Update, Coroutines and InvokeRepeating" section, we discussed the relative pros and cons of using these Unity Engine features as a means of avoiding excessive CPU workload during most of our frames.

Regardless of which of these approaches we might adopt, there is an additional risk of having lots of MonoBehaviours written to periodically call some function, which is having too many methods triggering in the same frame simultaneously.

Imagine thousands of MonoBehaviours that initialized together at the start of a Scene, each starting a Coroutine at the same time that will process their AI tasks every 500 milliseconds.

It is highly likely that they would all trigger within the same frame, causing a huge spike in its CPU usage for a moment, which settles down temporarily and then spikes again a few moments later when the next round of AI processing is due.

Ideally, we would want to spread these invocations out over time.

The following are the possible solutions to this problem: Generating a random time to wait each time the timer expires or Coroutine triggers Spread out Coroutine initialization so that only a handful of them are started at each frame Pass the responsibility of calling updates to some God Class that places a limit on the number of invocations that occur each frame

The first two options are appealing since they’re relatively simple and we know that Coroutines can potentially save us a lot of unnecessary overhead.

However, as we discussed, there are many dangers and unexpected side effects associated with such drastic design changes.

A potentially better approach to optimize updates is to not use Update() at all, or more accurately, to use it only once.

When Unity calls Update(), and in fact, any of its callbacks, it crosses the aforementioned Native-Managed Bridge,which can be a costly task.

In other words, the processing cost of executing 1,000 separate Update() callbacks will be more expensive than executing one Update() callback, which calls into 1,000 regular functions.

As we witnessed in the "Remove empty callback definitions" section, calling Update() thousands of times is not a trivial amount of work for the CPU to undertake, primarily because of the Bridge.

We can, therefore, minimize how often Unity needs to cross the Bridge by having a God Class MonoBehaviour use its own Update() callback to call our own custom updatestyle system used by our custom Components.

In fact, many Unity developers prefer implementing this design right from the start of their projects, as it gives them finer control over when and how updates propagate throughout the system; this can be used for things such as menu pausing, cool time manipulation effects, or prioritizing important tasks and/or suspending low priority tasks if we detect that we’re about to reach our CPU budget for the current frame.

All objects wanting to integrate with such a system must have a common entry point.

We can achieve this through an Interface Class with the interface keyword.

Interface Classes essentially set up a contract whereby any class that implements the Interface Class Class must provide a specific series of methods.

In other words, if we know the object implements an Interface Class, then we can be certain about what methods are available.

In C#, classes can only derive from a single base class, but they can implement any number of Interface Classes (this avoids the deadly diamond of death problem that C++ programmers will be familiar with).

The following Interface Class definition will suffice, which only requires the implementing class to define a single method called OnUpdate():

public interface IUpdateable {

void OnUpdate(float dt);

}

It’s common practice to start an Interface Class definition with a capital ‘I’ to make it clear that it is an Interface Class we’re dealing with.

The beauty of Interface Classes is that they improve the decoupling of our codebase, allowing huge subsystems to be replaced, and as long as the Interface Class isadhered to, we will have greater confidence that it will continue to function as intended.

Next, we'll define a custom MonoBehaviour type which implements this Interface Class:

public class UpdateableComponent : MonoBehaviour, IUpdateable {

public virtual void OnUpdate(float dt) {}

}

Note that we're naming the method OnUpdate() rather than Update().

We're defining a custom version of the same concept, but we want to avoid name collisions with the built-in Update() callback.

The OnUpdate() method of the UpdateableComponent class retrieves the current delta time (dt), which spares us from a bunch of unnecessary Time.deltaTime calls, which are commonly used in Update() callbacks.

We've also made the function virtual to allow derived classes to customize it.

This function will never be called as it's currently being written.

Unity automatically grabs and invokes methods defined with the Update() name, but has no concept of our OnUpdate() function, so we will need to implement something that will call this method when the time is appropriate.

For example, some kind of GameLogic God Class could be used for this purpose.

During the initialization of this Component, we should do something to notify our GameLogic object of both its existence and its destruction so that it knows when to start and stop calling its OnUpdate() function.

In the following example, we will assume that our GameLogic class is a SingletonComponent, as defined earlier in the "Singleton Components" section, and has appropriate static functions defined for registration and deregistration.

Bear in mind that it could just as easily use the aforementioned MessagingSystem to notify the GameLogic of its creation/destruction.

For MonoBehaviours to hook into this system, the most appropriate place is within their Start() and OnDestroy() callbacks:

void Start() {

GameLogic.Instance.RegisterUpdateableObject(this);

}

void OnDestroy() {

if (GameLogic.Instance.IsAlive) {

GameLogic.Instance.DeregisterUpdateableObject(this);

}

}

It is best to use the Start() method for the task of registration, since using Start() means that we can be certain all other pre-existing Components will have at least had their Awake() methods called prior to this moment.

This way, any critical initialization work will have already been done on the object before we start invoking updates on it.

Note that because we're using Start() in a MonoBehaviour base class, if we define a Start() method in a derived class, it will effectively override the base class definition, and Unity will grab the derived Start() method as a callback instead.

It would, therefore, be wise to implement a virtual Initialize() method so that derived classes can override it to customize initialization behavior without interfering with the base class's task of notifying the GameLogic object of our Component's existence.

The following code provides an example of how we might implement a virtual Initialize() method.

void Start() {

GameLogic.Instance.RegisterUpdateableObject(this);

Initialize();

}

protected virtual void Initialize() {

// derived classes should override this method for initialization code, and NOT reimple

}

Finally, we will need to implement the GameLogic class.

The implementation is effectively the same whether it is a SingletonComponent or a MonoBehaviour, and whether or not it uses the MessagingSystem.

Either way, our UpdateableComponent class must register and deregister as IUpdateable objects, and the GameLogic class must use its own Update() callback to iterate through every registered object and call their OnUpdate() function.

Here is the definition for our GameLogic class:

public class GameLogicSingletonComponent : SingletonComponent<GameLogicSingletonComponent> {

public static GameLogicSingletonComponent Instance {

get { return ((GameLogicSingletonComponent)_Instance); }

set { _Instance = value; }

}

List<IUpdateable> _updateableObjects = new List<IUpdateable>();

public void RegisterUpdateableObject(IUpdateable obj) {

if (!_updateableObjects.Contains(obj)) {

_updateableObjects.Add(obj);

}

}

public void DeregisterUpdateableObject(IUpdateable obj) {

if (_updateableObjects.Contains(obj)) {

_updateableObjects.Remove(obj);

}

}

void Update() {

float dt = Time.deltaTime;

for (int i = 0; i < _updateableObjects.Count; ++i) {

_updateableObjects[i].OnUpdate(dt);

}

}

}

If we make sure that all of our custom Components inherit from the UpdateableComponent class, then we've effectively replaced "N" invocations of the Update() callback with just one Update() callback, plus "N" virtual function calls.

This can save us a large amount of performance overhead because even though we're calling virtual functions (which cost a small overhead more than a non-virtual function call because it needs to redirect the call to the correct place), we're still keeping the overwhelming majority of update behavior inside our Managed code and avoiding the Native-Managed Bridge as much as possible.

This class can even be expanded to provide priority systems, to skip low-priority tasks if it detects that the current frame has taken too long, and many other possibilities.

Depending on how deep you already are into your current project, such changes can be incredibly daunting, time-consuming, and likely to introduce a lot of bugs as subsystems are updated to make use of a completely different set of dependencies.

However, the benefits can outweigh the risks if time is on your side.

It would be wise to do some testing on a group of objects in a Scene that is similarly designed to your current Scene files to verify that thebenefits outweigh the costs.

Summary

3. The Benefits of Batching

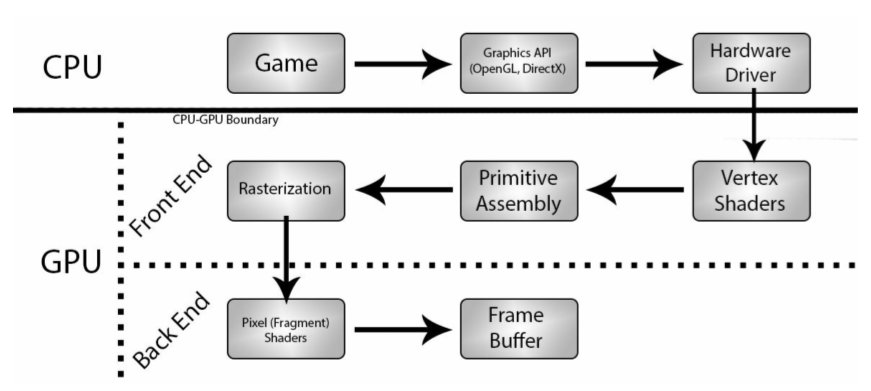

In 3D graphics and games, batching is a very general term describing the process of grouping a large number of wayward pieces of data together and processing them as a single, large block of data. This situation is ideal for CPUs, and particularly GPUs, which can handle simultaneous processing of multiple tasks with their multiple cores. Having a single core switching back and forth between different locations in memory takes time, so the less this needs to be done, the better.

In some cases, the act of batching refers to large sets of meshes, vertices, edges, UV coordinates, and other different data types that are used to represent a 3D object. However, the term could just as easily refer to the act of batching audio files, sprites, texture files, and other large datasets.

So, just to clear up any confusion, when the topic of batching is mentioned in Unity, it is usually referring to the two primary mechanisms it offers for batching mesh data: Dynamic Batching and Static Batching. These methods are essentially two different forms of geometry merging, where we combine mesh data of multiple objects together and render them all in a single instruction, as opposed to preparing and drawing each one separately.

The process of batching together multiple meshes into a single mesh is possible because there is no reason a mesh object must fill a contiguous volume of 3D space. The Rendering Pipeline is perfectly happy with accepting a collection of vertices that are not attached together with edges, and so we can take multiple separate meshes that might have resulted in multiple render instructions and combine them together into a single mesh, thus rendering it out using a single instruction.

We will cover the following topics in this chapter:

- A brief introduction to the Rendering Pipeline and the concept of Draw Calls

- How Unity's Materials and Shaders work together to render our objects

- Using the Frame Debugger to visualize rendering behavior

- How Dynamic Batching works, and how to optimize it

- How Static Batching works, and how to optimize it

Draw Calls

The primary goal of these batching methods is to reduce the number of Draw Calls required to render all objects in the current view. At its most basic form, a Draw Call is a request sent from the CPU to the GPU asking it to draw an object

Draw Call is the common industry vernacular for this process, although they are sometimes referred to as SetPass Calls in Unity, since some low-level methods are named as such

This utterly massive array of settings that must be configured to prepare the Rendering Pipeline just prior to rendering an object is often condensed into a single term known as the Render State.Until these Render State options are chagned, the GPU will maintain the same Render State for all incoming objects and render them in a similar fashion

Changing the Render State can be a time-consuming process. So, for exmpale, if we were to set the Render State to use a blue texture file and then ask it to render one gigantic mesh, then it would be rendered very rapidly with the whole mesh appearing blue. We could then render 9 more, completely different meshes, and they would all be rendered blue, since we haven't changed which texture is being used. If, however, we wanted to rendedr 10 meshes using 10 different textures, then this will take longer. This is because we will need to prepare the Render State with the new texture just prior to sending the Draw Call instruction for each mesh.

The texture being used to render the current object is effectively a global variable in the Graphics API, and changing a global variable within a parallel system is much easier said than done. In a massively parallel system such as a GPU, we must effectively wait until all of the current jobs have reached the same synchronization point(in other words, the fastest cores need to stop and wait for the slowest ones to catch up, wasting processing time that they could be using on other tasks) before we can make a Render State change, at which point we weill need to spin up all of the parallel jobs agian. This can waste a lot of time, so the less we need to ask the Render State to change, the faster the Graphics API will be able to proces our requests

Things that can trigger Render State synchronization include--but are not limited to--an immediate push of a new texture to the GPU and changing a Shader, lighting information, shadows, transparency, and pretty much any graphical setting we can think of.

Once the Render State is configured, the CPU must decide what mesh to draw, what textures and Shader it should use, and where to draw the object based on its position, rotation, and scale(all represented within a 4x4 matrix known as a transform, which is where the Transform Component gets its name from) and then send an instruction to the GPU to draw it. In order to keep the communication between CPU and GPU very dynamic, new instructions are pushed into a queue known as the Command Buffer. This queue contains instructions that the CPU has created and that the GPU pulls from each time it finishes the preceding command.

The trick to how batching improves the performance of this process is that a new Draw Call does not necessarily mean that a new Render State must be configured. If two objects share the exact same Render State information, then the GPU can immediately begin rendering the new object since the same Render State is maintained after the last object is finished. This eliminates the time wasted due to a Render State synchronization. It also serves to reduce the number of instructions that need to be pushed into the Command Buffer, reducing the workload on both the CPU and GPU.

Materials and Shaders

Render State in Unity is essentially exposed to us via Materials

If we want to minimize how often the Render State changes, then we can do so by reducing the number of Materials we use during a Scene. This would result in two performance improvements simultaneously; the CPU will spend less time generating and transmitting instructions to the GPU each frame and the GPU won't need to stop and re-synchronize state changes as often

Before we start, we should disable a few rendering options as they will contribute some extra Draw Calls, which might be distracting

- Window->Lighting->Scene->Environment-> set Skybox Material to null

- Edit->Project Settings->Quality-> set Shadows to Disable Shadows

- Edit->Project Settings->Player->Other Settings-> disable Static Batching and Dynamic Batching

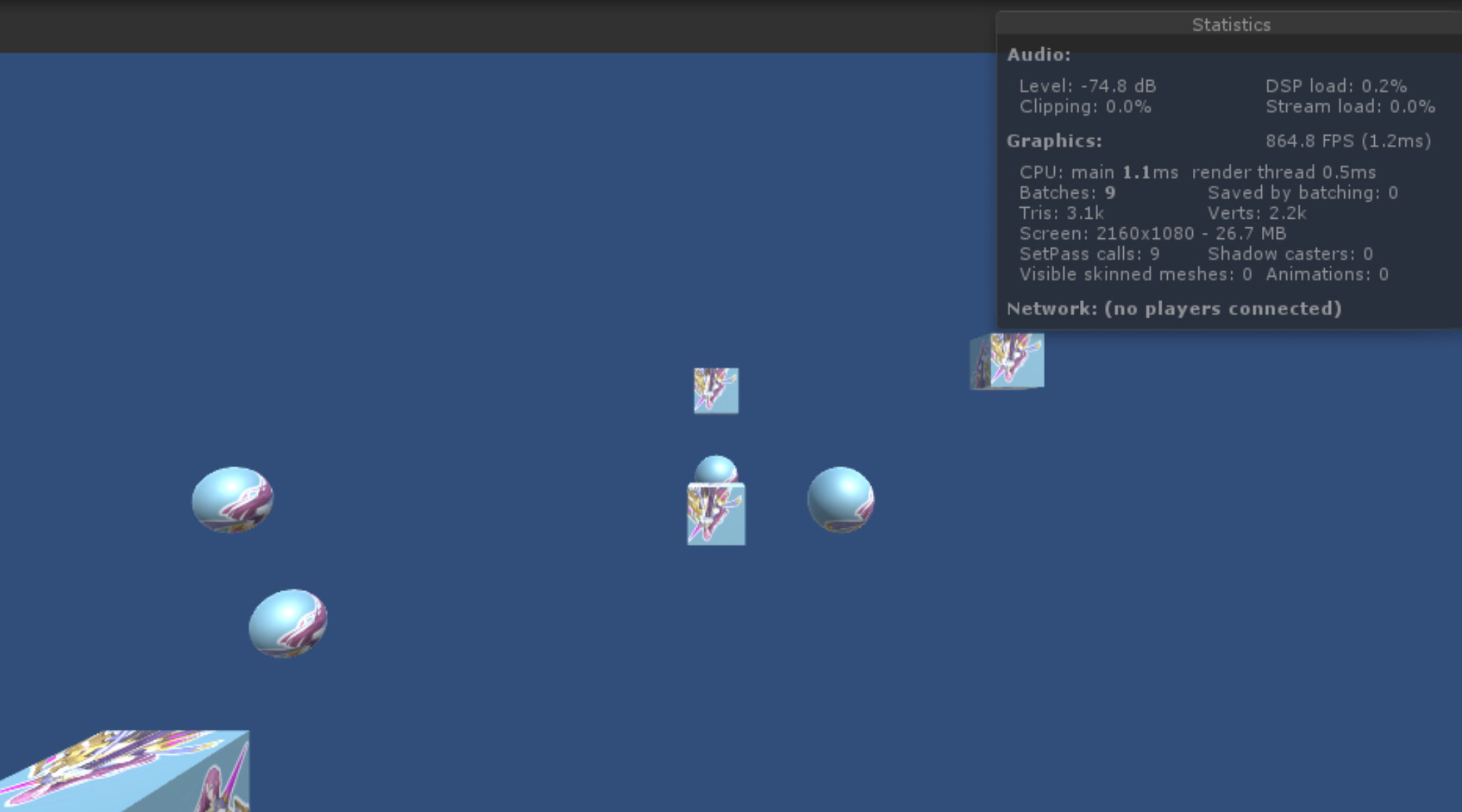

We can see 9 total batches. This value closely represents the number of Draw Calls used to render the Scene. The current view will consume one of these batches rendering the background of the scene, which could be set to Skybox or Solid Color.

The remainng 8 batches are used to draw out 8 objects. In each case, the Draw Call involves preparing the Rendering Pipeline using the Material's properties and asking the GPU to render the given mesh at its current transform.

We have ensured that each Material is unique by giving them each a unique texture file to render. Ergo, each mesh requires a different Render State, and, therefore, each of our 8 meshes requires a unique Draw Call

As previously mentioned, we can theoretically minimize the number of Draw Calls by reducing how often we cause the system to change Render State information. So, part of the goal is to reduce the amount of Materials we use.

However, if we set all objects to use the same Material, we still won't see any benefit and the number of batches remains at 9:

This is because we're not actually reducing the number of Render State changes nor efficiently grouping mesh information. Unfortunately, the Rendering Pipeline is not smart enough to realize we're overwriting the exact same Render State values, and then asking it to render the same meshes, over and over again.

The Frame Debugger

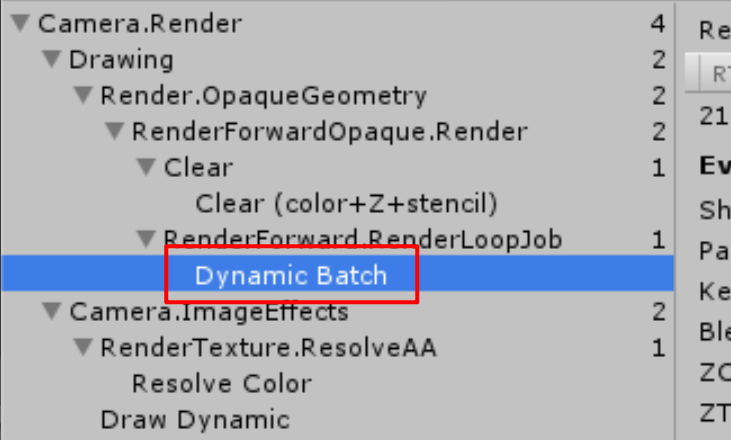

Drawing section which lists all of the Draw Calls in our Scene.

One Draw Call is being consumed to clear the screen (the item labelled Clear). and then our 8 meshes are being rendered in 8 separate Draw Calls (the item labelled RenderForward.RenderLoopJob)

Note that the number next to each item in the left-hand panel actually represents a Graphics API call, of which a Draw Call is but one type of API call. These can be seen in the Camera.Render, Camera.ImageEffects and RenderTexture.ResolveAA items. Any API call can be just as costly as a Draw Call, but the overwhelming majority of API calls we will make in a complex Scene is in the form of Draw Calls, so it is often best to focus on minimizing Draw Calls before worrying about the API communication overhead of things such as post-processing effects.

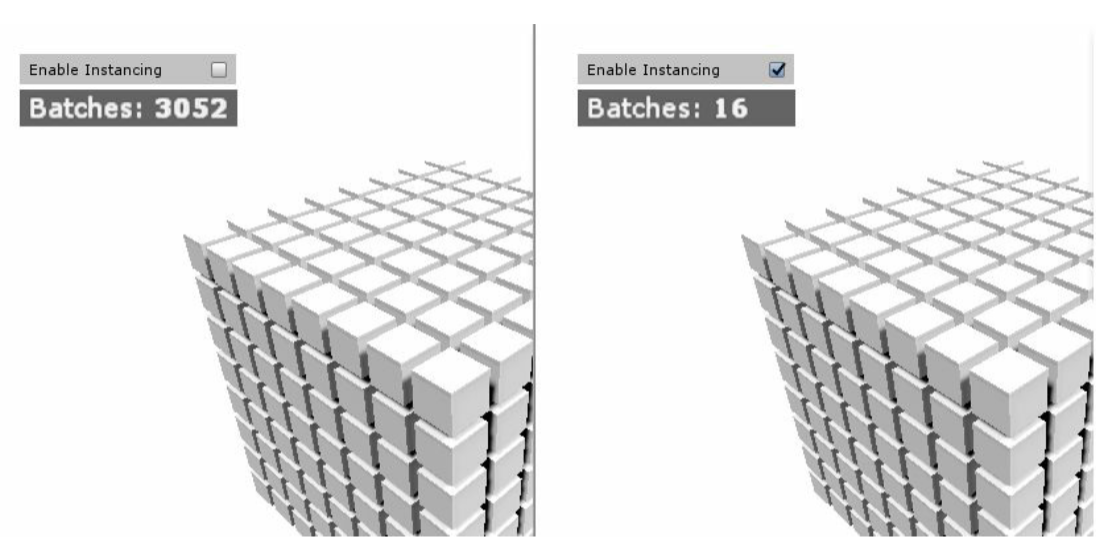

Dynamic Batching

Dynamic Batching has the following three important qualities:

- Batches are generated at runtime (batches are dynamically generated)

- The objects that are contained within a batch can vary from one frame to the next, depending on what meshes are currently visible to the Main Camera view(batch contents are dynamci)

- Even objects that can move around the Scene can be batched (it works on dynamic objects)

Hence, these attributes lead us to the name Dynamic Batching

Player Settings-> enable Dynamic Batching

Dynamic Batching automatically recognizes that our objects share Material and mesh information and is, therefore, combining some of them into a larger batch for processing.

The four spheres do not fit the requirements of Dynamic Batching. Despite the fact that they all use the same Material, there are many more requirements we must fulfill

https://docs.unity3d.com/Manual/DrawCallBatching.html

https://blogs.unity3d.com/2017/04/03/how-to-see-why-your-draw-calls-are-not-batched-in-5-6/

The following list covers the requirements to enable Dynamic Batching for a given mesh:

- All mesh instances must use the same Material reference

- Only ParticleSystem and MeshRenderer Components are dynamically batched. SkinnedMeshRenderer Components (for animated characters) and all other renderable Component types cannot be batched

- There is a limit of 300 vertices per mesh

- The total number of vertex attributes used by the Shader must be no greater than 900

- Either all mesh instances should use a uniform scale or all meshes should use a nonuniform scale, but not mixture of the two

- Mesh instances should refer to the same Lightmap file

- The Material's Shader should not depend on multiple passes

- Mesh instances must nopt receive real-time shadows

- There is an upper limit on the total number of mesh indices in the entire batch, which varies per-Graphics API and platform used, which is around 32k-64k indices

It is important to note the term Material references, because if we happen to use two different Materials with identical settings, the Rendering Pipeline is not smart enough to realize that, and they will be treated as different Materials and, therefore, will be disqualified from Dynamic Batching. Most of the rest of these requirements have either already been explained; however, a couple of these requirements are not completely intuitive or clear from the description, which merits additional explanation.

Vertex attributes

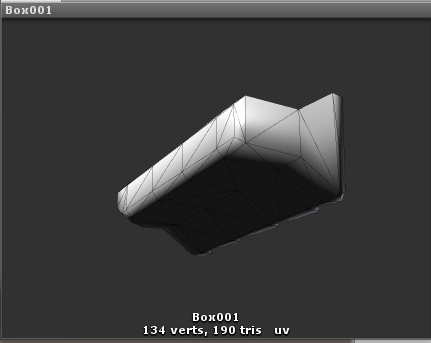

A vertex attribute is simply a piece of information contained within a mesh file on a per-vertex basis, and each is normally represented as a group of multiple floating-point values. This includes, but is not limited to, a vertex's position (relative to the root of the mesh), a normal vector (a vector pointing away from the object's surface, most often used in lighting calculations), one or more sets of texture UV coordinates (used to define how one or more textures wrap around the mesh), and possibly even color information per-vertex (normally used in custom lighting or for a flat-shaded, low-poly style object). Only meshes with less than 900 total vertex attributes used by the Shader can be included in Dynamic Batching.

Note that looking into a mesh's raw data file may contain less vertex attribute information than what Unity loads into memory because of how the engine converts mesh data from one of several raw data formats into an internal format. So, don't assume that the number of attributes our 3D modeling tool tells us the mesh uses will be the final count. The best way to verify the attribute count is to either drill down into the mesh object in the Project window until you find the MeshFilter Component and look at the verts value that appears in the Preview subsection of the Inspector window

Using more attribute data per vertex within the accompanying Shader will consume more from our 900-attribute budget and hence reduce the number of vertices the mesh is allowed to have before it can no longer be used in Dynamic Batching. For example, a simple diffuse Shader might only use 3 attributes per-vertex: position, normal, and a single set of UV coordinates. Dynamic Batching would therefore be able to support meshes using this Shader, which have a combined total of 300 vertices. However, a more complex Shader, requiring 5 attributes per-vertex, would only be able support Dynamic Batching with meshes using no more than 180 vertices. Also, note that even if we are using less than 3 vertex attributes per vertex in our Shader, Dynamic Batching still only supports meshes with a maximum of 300vertices, so only relatively simple objects are candidates for Dynamic Batching

Mesh scaling

Dynamic Batching summary

Dynamic Batching is a very useful tool when we want to render very large groups of simple meshes. The design of the system makes it ideal to use when we're making use of large numbers of simple meshes, which are nearly identical in appearance. Possible situations to apply Dynamic Batching could be as follows:

- A large forest filled with rocks, trees, and bushes

- A building, factory, or space station with many simple, common elements (computers, corridor pieces, pipes, and so on)

- A game featuring many dynamic, non-animated objects with simple geometry and particle effects (a game such as Geometry Wars springs to mind)

If the only requirement preventing two objects from being Dynamically Batched together is the fact that they use different texture fiels, be aware that it only takes a bit of development time and effort to combine textures, and regenerate mesh UVs so that they can be Dynamically Batched together (commonly known as Atlasing). This may cost us in texture quality or the overall size of a texture file (which can have drawbacks we will understand once we dive into the topic of GPU Memory Bandwith in Chapter 6, Dynamic Graphics), but it is worth considering.

Perhaps the only situation where Dynamic Batching may be a detriment on performance is if we were to set up a Scene with hundreds of simple objects, where only a few objects are put into each batch. In these cases, the overhead cost of detecting and generating so many small batches might cost more time than we'd save by just making a separate Draw Call for each mesh. Even still, this is unlikely

If anyting, we're far more likely to inflict performance losses on our application by simply assuming that Dynamic Batching is taking place, when we've actually forgotten one of the essential requirements. We can accidentally break the vertex limit by pushing a new version of a mesh, and in the process of Unity converting a raw Object(with the .obj extension) file into its own internal format, it generates more some Shader code or adding additional passes without realizing it would disqualify it from Dynamic Batching. We might even set up the object to enable shadows or Light Probes, which breaks another requirement.

Ultimately, every situation is unique, so it is worth experimenting with our mesh data, Materials, and Shaders to determine what can and cannot be dynamically batched, and performing some testing in our Scene from time to time to ensure that the number of Draw Calls we're using remains reasonable.

Static Batchings

The Static Batching system has its own set of requirements:

- As the name implies, the meshes must be flagged as Static (specifically, Batching Static)

- Additional memory must be set aside for each mesh being statically batched

- There is an upper limit on the number of vertices that can be combined in a static batch that varies per Graphic API and platform, which is around 32k-64k vertices

- The mesh instances can come from any source mesh, but they must share the same Material reference

The Static flag

Static Batching can only be applied to objects with the static flag enabled or, more specifically, the Batching Static subflag(these subflags are known as StaticEditorFlags). Clicking on the small down-pointing arrow next to the Static option for a GameObject will reveal a dropdown of the StaticEditorFlags, which can alter the object's behaviour for various Static processes.

An obvious side effect of this is that the object's transform cannot be changed, and, hence, any object wishing to make use of Static Batching cannot be moved, rotated, or scaled in any way

Memory requirements

The addtional memory requirement for Static Batching will vary, depending on the amount of replication occuring within the batched meshes. Static Batching works by copying the data for all flagged and visible meshes into a single, large mesh data buffer, and passing it into the Rendering Pipeline through a single Draw Call, while ignoring the original mesh. If all of the meshes being statically batched are unique, then this would cost us no addtional memory usage compared to rendering the objects normally, as the same amount of memory space is required to store the meshes.

However, since the data is effectively copied, these statically batched duplicates cost us addtional memroy equal to the number of meshes, multiplied by the size of the original mesh. Ordinarily, rendering one, ten, or a million clones of the same object costs us the same amount of memroy, because they're all referencing the same mesh data. The only difference between objects in this case is the transform of each object. However, because Static Batching needs to copy the data into a large buffer, this referencing is lost, since each duplicate of the original mesh is copied into the buffer with a unique set of data with a hardcoded transform baked into the vertex positions.

Therefore, using Static Batching to render 1000 identical tree objects will cost us 1000 times more memory than rendering the same trees without Static Batching. This causes some significatn memroy consumption and performance issues if Static Batching is not used wisely.

Material references

We are already aware that sharing Material references is a means of reducing Render State changes, so this requirement is fairly obvious. In addtion, sometimes, we statically batch meshes that require multiple Materials. In this case, all meshes using a different Material will be grouped together in their own static batch and for each unique Material being used.

The downside to this requirement is that, at best, Static Batching can only render all of the static meshes using a number of Draw Calls equal to the number of Materials they need.

Static Batching caveats

The Static Batching system has some addtional drawbacks. Owing to how it approaches the batching solution, by combining meshes into a single greater mesh, the Static Batching system has a few caveats that we need to be aware of.These concerns range from minor inconveniences to major drawbacks, depending on the Scene:

- Draw Call savings are not immediately visible from the Stats window until runtime

- Objects marked Batching Static introduced in the Scene at runtime will not be automatically included in Static Batching

Edit Mode debugging of Static Batching

Instantiating static meshes at runtime

Any new objects we add into the Scene at runtime will not be automatically combined into any existing batch by the Static Batching system, even if they were marked as Batching Static. To do so would cause an enormous runtime overhead between recalculating the mesh and synchronizing with the Rendering Pipeline, so Unity does not even attempt to do it automatically

For the most part, we should try to keep any meshes we want to be statically batched present in the original Scene file. However, if dynamic instantiation is necessary, or we are making use of additive Scene loading, then wen can control static batch eligibility with the StaticBatchUntility.Combine() method. This utility method has two overloads: either we provide a root GameObject, in which case all child GameObjects with meshes will be truned into new static batch groups (multiple could be created if they share multiple Materials) or we provide a list of GameObjects and a root GameObject, and it will automatically attach them as children to the root and generate new static batch groups in the same manner

We should profile our usage of this function, as it can be quite an expensive operation if there are many vertices to combine. It will also not combine the given meshes with any preexisting statically batched groups, even if they share the same Material. So we will not be able to save Draw Calls by instantiating or additively loading Static meshes that use the same Material as other statically batched groups already present in the Scene (it can only combine with meshes it was grouped with in the Combine() call)

Note that if any of the GameObjects we batch with the StaticBatchUtility.Combine() method are not marked as Static before batching, the GameObjects will remain non-Static, but the mesh itself will be Static. This means that we could accidentally move the GameObject, its Collider Component, and any other important objects, but the mesh will remain in the same location. Be careful about accidentally mixing Static and non-Static states in statically batched objects.

Static Batching summary

Static Batching is a powerful, but dangerous tool. If we don't use it wisely, we can vary easily inflict enormous performance losses via memory consumption(potentially leading to application crashes) and rendering costs on our application. It also takes a good amount of manual tweaking and configuration to ensure that batches are being properly generated, and that we aren't accidentally introducing any unintended side effects of using various Static flags. However, it does have a significant advantage in that it can be used on meshes of different shapes and enormous sizes, which Dynamic Batching cannot provide.

Summary

It is clear that the Dynamic Batching and Static Batching systems are not a silver bullet. We cannot blindly apply them to any given Scene and expect improvements. If our application and Scene happen to fit a particular set of parameters, then these methods are very effective at reducing CPU load and redering bottlenecks. However, if not, then some addtional work is required to prepare our Scene to meet batching feature requirements. Ultimately, only a good understanding of these batching systems and how they function can help us determine where and when this feature can be applied, and, hopefully, this chapter has given us all of the information we need to make informed decisions.

4. Kickstart Your Art

Audio

Importing audio files

Loading audio files

Encoding formats and quality levels

Audio performance enhancements

Minimize active Audio Source count

Enable Force to Mono for 3D sounds

Resample to lower frequencies

Consider all compression formats

Beware of streaming

Apply Filter Effects through Mixer Groups to reduce duplication

Use remote content streaming responsibly

Consider Audio Module files for background music

Texture files

The terms texture and sprite often get confused in game development, so it's worth making the distinction--a texture is simply an image file, a big list of color data telling the interpreting program what color each pixel of the image should be, whereas a sprite is the 2D equivalent of a mesh, which is often just a single quad(a pair of triangles combined to make a rectangular mesh) that renders flat against the current Camera.

There are also things called Sprite Sheets, which are large collections of individual images contained within a larger texture file, commonly used to contain the animations of a 2D character.

These files can be split apart by tools, such as Unity's Sprite Atlas tool, to form individual textures for the character's animated frames.

Both meshes and sprites use textures to render an image onto its surface.

Texture image files are typically generated in tools such as Adobe Photoshop or Gimp and then imported into our project in much the same way as audio files.

At runtime, these files are loaded into memory, pushed to the GPU's VRAM, and rendered by a Shader over the target sprite or mesh during a given Draw Call.

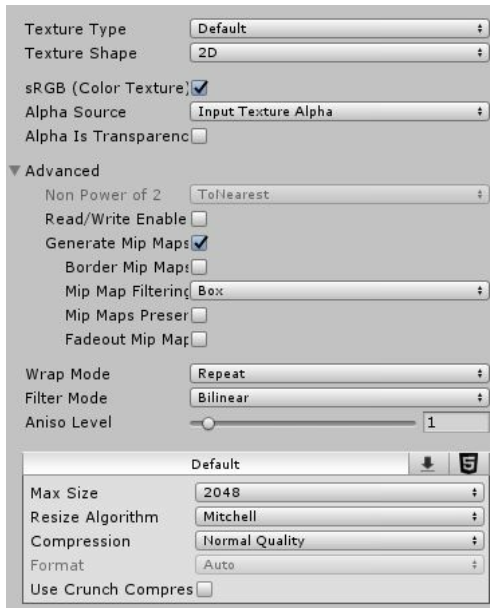

Texture compression formats

Texture performance enhancements

Reduce texture file size

The larger a given texture file, the more GPU Memory Bandwidth will be consumed, pushing the texture when it is needed. If the total memory pushed per second exceeds the graphics card's total Memory Bandwidth, then we will have a bottleneck, as the GPU must wait for all textures to be uploaded before the next rendering pass can begin. Smaller textures are naturally easier to push through the pipeline than larger textures, so we will need to find a good middle ground between high quality and performance.

A simple test to find out if we're bottlenecked in Memory Bandwidth is to reduce the resolution of our games most abundant and largest texture files and relaunch the Scene. If the frame rate suddenly improves, then the application was most likely bound by texture throughput. If the frame rate does not improve or improves very little, then either we still have some Memory Bandwidth to make use of or there are bottlenecks elsewhere in the Rendering Pipeline, preventing us from seeing any further improvement

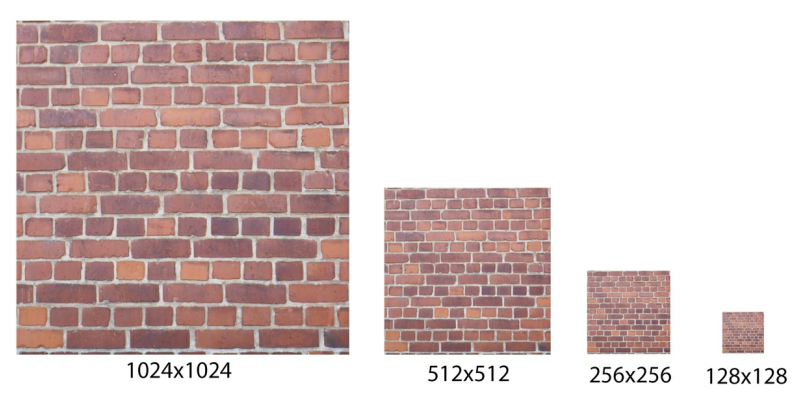

Use Mip Maps wisely

These images will be packed together to save space, essentially creating a final texture file that will be about 33 percent larger than the original image. This will cost some disk space and GPU Memory Bandwidth to upload

Remember that Mip Mapping is only useful if we have textures that need to be rendered at varying distances from the Camera

Manage resolution downscaling externally

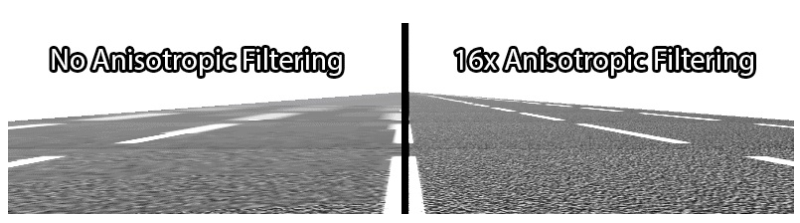

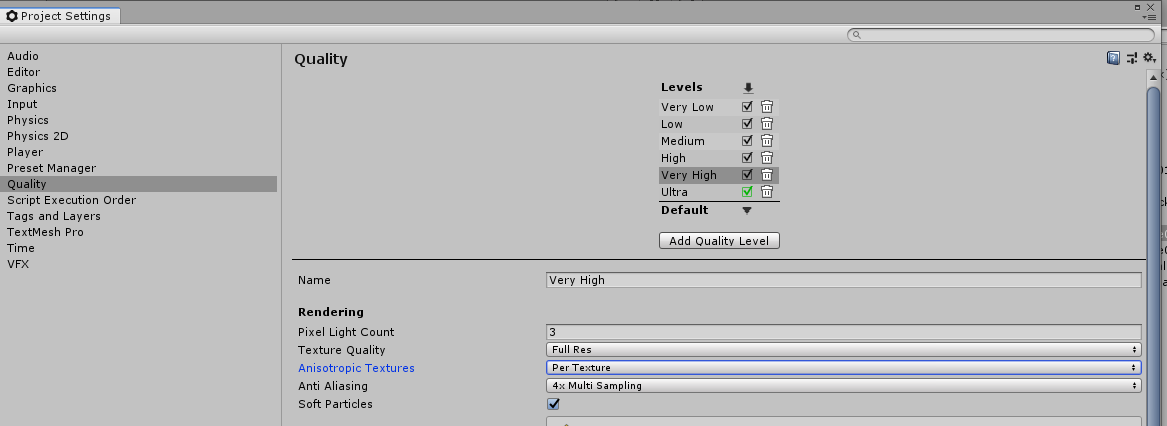

Adjust Anisotropic Filtering levels

Anisotropic Filtering is a feature that improves the image quality of textures when they are viewed at very oblique (shallow) angles

Much like Mip Mapping, this effect can be costly and, sometimes,unnecessary

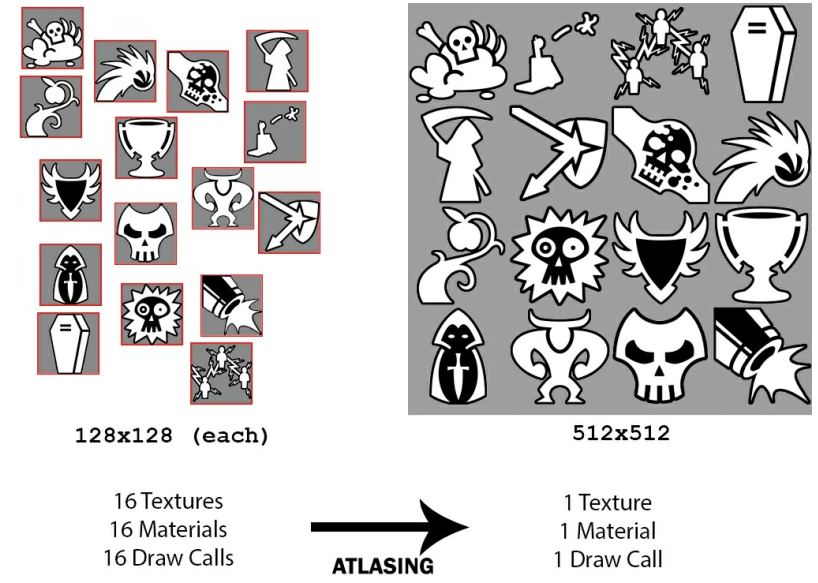

Consider Atlasing

Atlasing is the technique of combining lots of smaller, isolated textures together into a single, large texture file in order to minimize the number of Materials, and hence Draw Calls, we need to use

Each unique Material will require an additional Draw Call, but each Material only supports a single primary texture

Extra work is required to modify the UV coordinates used by the mesh or sprite object to only sample the portion of the larger texture file that it needs, but the benefits are clear; reducing Draw Calls results in reduction of CPU workload and improvement in the frame rate if our application isbottlenecked on CPU. Note that Atlasing does not result in reduced Memory Bandwidth consumption since the amount of data being pushed to the GPU would also be identical. It just happens to be bundled together in one bigger texture file

Atlasing is only an option when all of the given textures require the same Shader. If some of the textures need unique graphical effects applied through Shaders, then they must be isolated into their own Materials and Atlased in separate groups

However, because Dynamic Batching affects only non-animated meshes (that is, MeshRenderer, but not SkinnedMeshRenderer), there is no reason to combine texture files for animated characters into an Atlas. Since they are animated, the GPU needs to multiply each object's bones by the transform of the current animation state. This means a unique calculation is needed for each character, and they will result in an extra Draw Call regardless of any attempts we make to have them share Materials

Adjust compression rates for non-square textures

Texture files are normally stored in a square, power-of-two format, meaning that their height and width are equal in length, and its size is a power of two. For example, typical sizes are 256 x 256 pixels, 512 x 512, 1024 x 1024, and so on.

Sparse Textures

Procedural Materials

Asynchronous Texture Uploading

Mesh and animation files

These file types are essentially large arrays of vertex and skinned bone data

Reduce polygon count

This is the most obvious way to gain performance and should always be considered. In fact, since we cannot batch objects using Skinned Mesh Renderers, it's one of the good ways of reducing CPU and GPU runtime overhead for animated objects.

Reducing the polygon count is simple, straightforward, and provides both CPU and memory cost savings for the time required for artists to clean up the mesh. Much of an object's detail is provided almost entirely by detailed texturing and complex shading in this day and age, so we can often get away with stripping away a lot of vertices on modern meshes and most users would be unable to tell the difference.

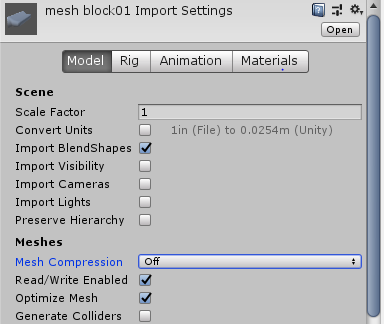

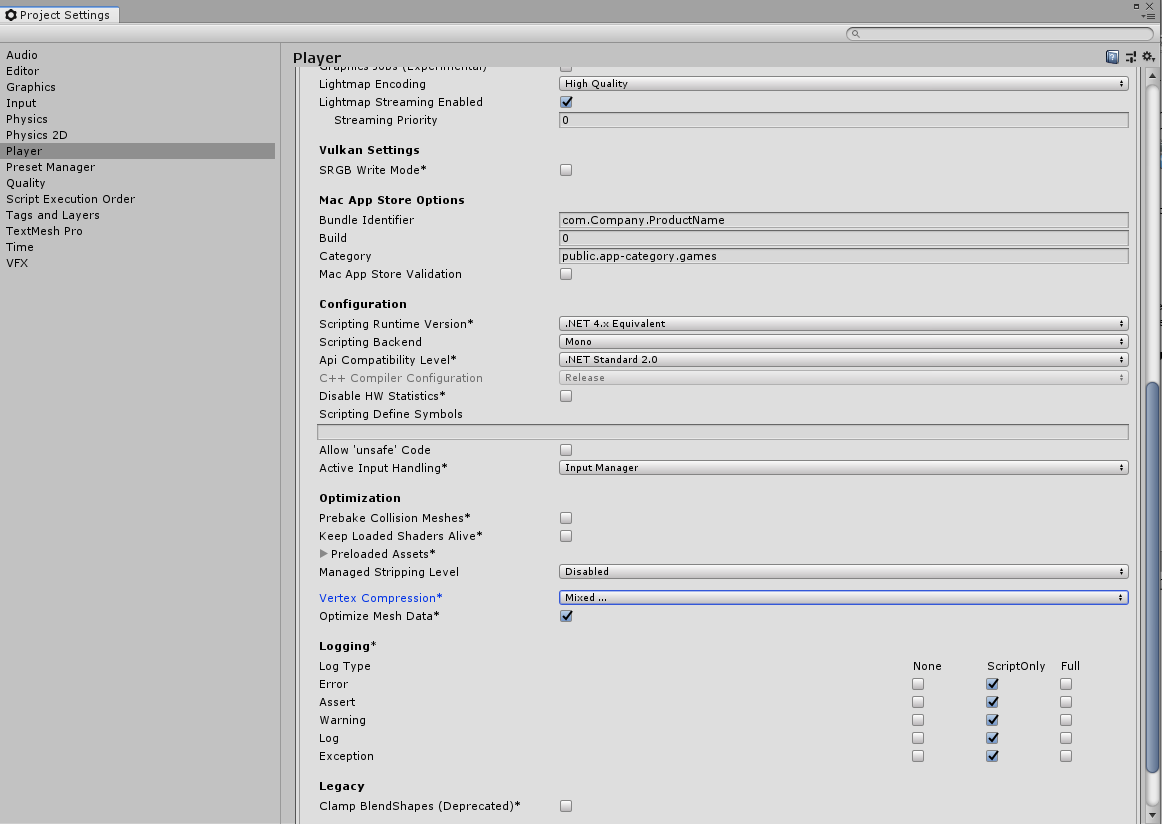

Tweak Mesh Compression

Unity offers four different Mesh Compression settings for imported mesh files: Off, Low, Medium, and High. Increasing this setting will convert floating-point data into fixed values, reducing the accuracy in vertex position/Normal direction, simplifying vertex color information, and so on

We can use the Vertex Compression option to configure the type of data that will be optimized when we import a mesh file with Mesh Compression enabled, so if we want accurate Normal data (for lighting), but have less worry over positional data, then we can configure it here. Unfortunately, this is a global setting and will affect all imported meshes (although it can be configured on a per-platform basis since it is a Player setting).

Enabling Optimize Mesh Data will strip away any data from the mesh that isn't required by the Material(s) assigned to it. So, if the mesh contains tangent information, but the Shader never requires it, then Unity will ignore it during build time

3D mesh building/animation tools often provide their own builtin ways of automated mesh optimization in the form of estimating the overall shape and stripping the mesh down tofewer total polygons. This can cause significant loss of quality and should be tested vigorously if used

Use Read-Write Enabled appropriately

The Read-Write Enabled flag allows changes to be made to the mesh at runtime either via Scripting or automatically by Unity during runtime, similar to how it is used for texture files. Internally, this means that it will keep the original mesh data in memory until we want to duplicate it and make changes dynamically. Disabling this option will allow Unity to discard the original mesh data from memory once it has determined the final mesh to use, since it knows it will never change.

If we use only a uniformly scaled version of a mesh throughout the entire game, then disabling this option will save runtime memory since we will no longer need the original mesh data to make further rescaled duplicates of the mesh (incidentally, this is how Unity organizes objects by scale factor when it comes to Dynamic Batching). Unity can, therefore, discard this unwanted data early since we will never need it again until the next time the application is launched.

However, if the mesh often reappears at runtime with different scales, then Unity needs to keep this data in memory so that it can recalculate a new mesh more quickly, hence it would be wise to enable the Read-Write Enabled flag. Disabling it will require Unity to not only reload the mesh data each time the mesh is reintroduced, but also make the rescaled duplicate at the same time, causing a potential performance hiccup.

Unity tries to detect the correct behavior for this setting at initialization time, but when meshes are instantiated and scaled in a dynamic fashion at runtime, we must force the issue by enabling this setting. This will improve instantiation speed of the objects, but cost some memory overhead since the original mesh data is kept around until it's needed

Note that this potential overhead cost also applies when using the Generate Colliders option

Consider baked animations

This tip will require changes in the asset through the 3D rigging and animation tool that we are using since Unity does not provide such tools itself. Animations are normally stored as key frame information where it keeps track of specific mesh positions and interpolates between them at runtime using skinning data (bone shapes, assignments, animation curves, and so on). Meanwhile, baking animations means effectively sampling and hardcoding each position of each vertex per-frame into the mesh/animation file without the need for interpolation and skinning data.

Using baked animations can, sometimes, result in much smaller file sizes and memory overhead than blended/skinned animations for some objects since skinning data can take up a surprisingly large amount of space to store. Thisis most likely to be the case for relatively simple objects, or objects with short animations, since we would effectively be replacing procedural data with a hardcoded series of vertex positions. So, if the mesh's polygon count is low enough where storing lots of vertex information is cheaper than skinning

data, then we may see some significant savings through this simple change.

In addition, how often the baked sample is taken can usually be customized by the exporting application. Different sample rates should be tested to find a good value where the key moments of the animation still shine-through what is essentially a simplified estimate.

Combine meshes

Forcefully combining meshes into a large, single mesh can be convenient to reduce Draw Calls, particularly if the meshes are too large for Dynamic Batching and don't play well with other statically batched groups. This is essentially the equivalent of Static Batching, but performed manually, so sometimes it's wasted effort if Static Batching could take care of the process for us.

Beware that if any single vertex of the mesh is visible in the Scene, then the entire object will be rendered together as one whole. This can lead to a lot of wasted processing if the mesh is only partially visible most of the time. This technique also comes with the drawback that it generates a whole new mesh asset file that we must deposit into our Scene, which means any changes we make to the original meshes will not be reflected in the combined one. This results in a lot of tedious workflow effort every time changes need to be made, so if Static Batching is an option, it should be used instead.

There are several tools available online, which can combine mesh files together for us in Unity. They are only an Asset Store or Google search away.

Asset Bundles and Resources

Resource System can be a great benefit during prototyping