Building a centralized log analysis platform with ELK (Elasticsearch + Logstash + Kibana) on CentOS 6.5: a hands-on walkthrough

Installing the Logstash ELK stack log management system on CentOS 6.5

概述:

日志主要包括系统日志、应用程序日志和安全日志。系统运维和开发人员可以通过日志了解服务器软硬件信息、检查配置过程中的错误及错误发生的原因。经常分析日志可以了解服务器的负荷,性能安全性,从而及时采取措施纠正错误。

通常,日志被分散的储存不同的设备上。如果你管理数十上百台服务器,你还在使用依次登录每台机器的传统方法查阅日志。这样是不是感觉很繁琐和效率低下。当务之急我们使用集中化的日志管理,例如:开源的syslog,将所有服务器上的日志收集汇总。

集中化管理日志后,日志的统计和检索又成为一件比较麻烦的事情,一般我们使用grep、awk和wc等Linux命令能实现检索和统计,但是对于要求更高的查询、排序和统计等要求和庞大的机器数量依然使用这样的方法难免有点力不从心。

开源实时日志分析ELK平台能够完美的解决我们上述的问题,ELK由ElasticSearch、Logstash和Kiabana三个开源工具组成。官方网站:https://www.elastic.co/products

Elasticsearch是个开源分布式搜索引擎,它的特点有:分布式,零配置,自动发现,索引自动分片,索引副本机制,restful风格接口,多数据源,自动搜索负载等。 它不是一个软件,而是Elasticsearch,Logstash,Kibana 开源软件的集合,对外是作为一个日志管理系统的开源方案。它可以从任何来源,任何格式进行日志搜索,分析获取数据,并实时进行展示。像盾牌(安全),监护者(警报)和Marvel(监测)一样为你的产品提供更多的可能。

Elasticsearch:搜索,提供分布式全文搜索引擎

Logstash: 日志收集,管理,存储

Kibana :日志的过滤web 展示

Filebeat:监控日志文件、转发

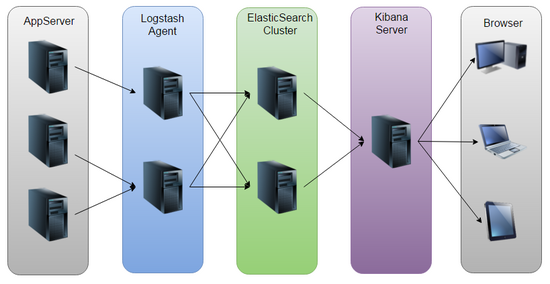

工作原理如下如所示:

操作系统 centos6.5 x86_64

elk server: 192.168.3.17

为避免干扰,关闭防火墙和selinux

service iptables off

setenforce 0

三台机器都需要修改hosts文件

cat /etc/hosts

192.168.3.17 elk.chinasoft.com

192.168.3.18 rsyslog.chinasoft.com

192.168.3.13 nginx.chinasoft.com

修改主机名:

hostname elk.chinasoft.com

mkdir -p /data/elk

下载elk相关的软件

cd /data/elk

wget -c https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/rpm/elasticsearch/2.3.3/elasticsearch-2.3.3.rpm

wget -c https://download.elastic.co/logstash/logstash/packages/centos/logstash-2.3.2-1.noarch.rpm

wget -c https://download.elastic.co/kibana/kibana/kibana-4.5.1-1.x86_64.rpm

wget -c https://download.elastic.co/beats/filebeat/filebeat-1.2.3-x86_64.rpm

服务器只需要安装e、l、k, 客户端只需要安装filebeat。

安装elasticsearch,先安装jdk,elk server 需要java 开发环境支持,由于客户端上使用的是filebeat软件,它不依赖java环境,所以不需要安装

rpm -ivh jdk-8u102-linux-x64.rpm

yum localinstall elasticsearch-2.3.3.rpm -y

启动服务

service elasticsearch start

chkconfig elasticsearch on

检查服务

rpm -qc elasticsearch

/etc/elasticsearch/elasticsearch.yml

/etc/elasticsearch/logging.yml

/etc/init.d/elasticsearch

/etc/sysconfig/elasticsearch

/usr/lib/sysctl.d/elasticsearch.conf

/usr/lib/systemd/system/elasticsearch.service

/usr/lib/tmpfiles.d/elasticsearch.conf

是否正常启动

netstat -nltp | grep java

tcp 0 0 ::ffff:127.0.0.1:9200 :::* LISTEN 1927/java

tcp 0 0 ::1:9200 :::* LISTEN 1927/java

tcp 0 0 ::ffff:127.0.0.1:9300 :::* LISTEN 1927/java

tcp 0 0 ::1:9300 :::* LISTEN 1927/java

2.安装kibana

yum localinstall kibana-4.5.1-1.x86_64.rpm -y

service kibana start

chkconfig kibana on

检查kibana服务运行(Kibana默认 进程名:node ,端口5601)

ss -tunlp|grep 5601

tcp LISTEN 0 128 *:5601 *:* users:(("node",2042,11))

日志观察

tail -f /var/log/kibana/kibana.stdout

这时,我们可以打开浏览器,测试访问一下kibana服务器http://192.168.3.17:5601/,确认没有问题,如下图:

安装logstash,以及添加配置文件

yum localinstall logstash-2.3.2-1.noarch.rpm -y

生成证书

cd /etc/pki/tls/

openssl req -subj '/CN=elk.chinasoft.com/' -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt

之后创建logstash 的配置文件,如下:

vim /etc/logstash/conf.d/01-logstash-initial.conf

input {

beats {

port => 5000

type => "logs"

ssl => true

ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"

ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"

}

}

filter {

if [type] == "syslog-beat" {

grok {

match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }

add_field => [ "received_at", "%{@timestamp}" ]

add_field => [ "received_from", "%{host}" ]

}

geoip {

source => "clientip"

}

syslog_pri {}

date {

match => [ "syslog_timestamp", "MMM d HH:mm:ss", "MMM dd HH:mm:ss" ]

}

}

}

output {

elasticsearch { }

stdout { codec => rubydebug }

}

启动logstash,并检查端口,配置文件里,此处写的是5000端口

service logstash start

ss -tunlp|grep 5000

tcp LISTEN 0 50 :::5000 :::* users:(("java",2238,14))

修改elasticsearch 配置文件

查看目录,创建文件夹es-01(名字不是必须的),logging.yml是自带的,elasticsearch.yml是创建的文件,具体如下:

cd /etc/elasticsearch/

ll

total 12

-rwxr-x--- 1 root elasticsearch 3189 May 12 21:24 elasticsearch.yml

-rwxr-x--- 1 root elasticsearch 2571 May 12 21:24 logging.yml

drwxr-x--- 2 root elasticsearch 4096 May 17 23:49 scripts

[root@centossz008 elasticsearch]# tree

├── elasticsearch.yml

├── es-01

│ ├── elasticsearch.yml

│ └── logging.yml

├── logging.yml

└── scripts

mkdir es-01

vim es-01/elasticsearch.yml

http:

port: 9200

network:

host: elk.chinasoft.com

node:

name: elk.chinasoft.com

path:

data: /etc/elasticsearch/data/es-01

重启elasticsearch、logstash服务。

service elasticsearch restart

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

# service logstash restart

Killing logstash (pid 2238) with SIGTERM

Waiting logstash (pid 2238) to die...

Waiting logstash (pid 2238) to die...

logstash stopped.

logstash started.

将fiebeat安装包拷贝到 rsyslog、nginx 客户端上

将 fiebeat安装包拷贝到 rsyslog、nginx 客户端上

scp filebeat-1.2.3-x86_64.rpm root@rsyslog.chinasoft.com:/root

scp filebeat-1.2.3-x86_64.rpm root@nginx.chinasoft.com:/root

scp /etc/pki/tls/certs/logstash-forwarder.crt rsyslog.chinasoft.com:/root

scp /etc/pki/tls/certs/logstash-forwarder.crt nginx.chinasoft.com:/root

filebeat客户端是一个轻量级的,从服务器上的文件收集日志资源的工具,这些日志转发到处理到Logstash服务器上。该Filebeat客户端使用安全的Beats协议与Logstash实例通信。lumberjack协议被设计为可靠性和低延迟。Filebeat使用托管源数据的计算机的计算资源,并且Beats输入插件尽量减少对Logstash的资源需求。

(node1 rsyslog.chinasoft.com)安装filebeat,拷贝证书,创建收集日志配置文件

yum localinstall filebeat-1.2.3-x86_64.rpm -y

#拷贝证书到本机指定目录中

[root@rsyslog elk]# cp logstash-forwarder.crt /etc/pki/tls/certs/.

[root@rsyslog elk]# cd /etc/filebeat/

tree

.

├── conf.d

│ ├── authlogs.yml

│ └── syslogs.yml

├── filebeat.template.json

├── filebeat.yml

└── filebeat.yml.bak

修改的文件有3个,filebeat.yml,是定义连接logstash 服务器的配置。conf.d目录下的2个配置文件是自定义监控日志的,下面看下各自的内容:

mkdir conf.d

vim filebeat.yml

------------------------------------------

filebeat:

spool_size: 1024

idle_timeout: 5s

registry_file: .filebeat

config_dir: /etc/filebeat/conf.d

output:

logstash:

hosts:

- elk.chinasoft.com:5000

tls:

certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]

enabled: true

shipper: {}

logging: {}

runoptions: {}

------------------------------------------

vim conf.d/authlogs.yml

------------------------------------------

filebeat:

prospectors:

- paths:

- /var/log/secure

encoding: plain

fields_under_root: false

input_type: log

ignore_older: 24h

document_type: syslog-beat

scan_frequency: 10s

harvester_buffer_size: 16384

tail_files: false

force_close_files: false

backoff: 1s

max_backoff: 1s

backoff_factor: 2

partial_line_waiting: 5s

max_bytes: 10485760

------------------------------------------

vim conf.d/syslogs.yml

------------------------------------------

filebeat:

prospectors:

- paths:

- /var/log/messages

encoding: plain

fields_under_root: false

input_type: log

ignore_older: 24h

document_type: syslog-beat

scan_frequency: 10s

harvester_buffer_size: 16384

tail_files: false

force_close_files: false

backoff: 1s

max_backoff: 1s

backoff_factor: 2

partial_line_waiting: 5s

max_bytes: 10485760

------------------------------------------

vim conf.d/flowsdk.yml

------------------------------------------

filebeat:

prospectors:

- paths:

- /data/logs/flowsdk.log

encoding: plain

fields_under_root: false

input_type: log

ignore_older: 24h

document_type: syslog-beat

scan_frequency: 10s

harvester_buffer_size: 16384

tail_files: false

force_close_files: false

backoff: 1s

max_backoff: 1s

backoff_factor: 2

partial_line_waiting: 5s

max_bytes: 10485760

------------------------------------------

修改完成后,启动filebeat服务

service filebeat start

chkconfig filebeat on

报错:

service filebeat start

Starting filebeat: 2016/10/12 14:05:46 No paths given. What files do you want me to watch?

分析:

需要监控的文件,不是最近24小时产生的,添加一个其他的文件即可如/var/logs/message

(node2 nginx.chinasoft.com)安装filebeat,拷贝证书,创建收集日志配置文件

yum localinstall filebeat-1.2.3-x86_64.rpm -y

cp logstash-forwarder.crt /etc/pki/tls/certs/.

cd /etc/filebeat/

tree

.

├── conf.d

│ ├── nginx.yml

│ └── syslogs.yml

├── filebeat.template.json

└── filebeat.yml

修改filebeat.yml 内容如下:

vim filebeat.yml

------------------------------------------

filebeat:

spool_size: 1024

idle_timeout: 5s

registry_file: .filebeat

config_dir: /etc/filebeat/conf.d

output:

logstash:

hosts:

- elk.chinasoft.com:5000

tls:

certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]

enabled: true

shipper: {}

logging: {}

runoptions: {}

------------------------------------------

syslogs.yml & nginx.yml

vim conf.d/syslogs.yml

------------------------------------------

filebeat:

prospectors:

- paths:

- /var/log/messages

encoding: plain

fields_under_root: false

input_type: log

ignore_older: 24h

document_type: syslog-beat

scan_frequency: 10s

harvester_buffer_size: 16384

tail_files: false

force_close_files: false

backoff: 1s

max_backoff: 1s

backoff_factor: 2

partial_line_waiting: 5s

max_bytes: 10485760

------------------------------------------

vim conf.d/nginx.yml

------------------------------------------

filebeat:

prospectors:

- paths:

- /var/log/nginx/access.log

encoding: plain

fields_under_root: false

input_type: log

ignore_older: 24h

document_type: syslog-beat

scan_frequency: 10s

harvester_buffer_size: 16384

tail_files: false

force_close_files: false

backoff: 1s

max_backoff: 1s

backoff_factor: 2

partial_line_waiting: 5s

max_bytes: 10485760

------------------------------------------

修改完成后,启动filebeat服务,并检查filebeat进程

重新载入 systemd,扫描新的或有变动的单元;启动并加入开机自启动

systemctl daemon-reload

systemctl start filebeat

systemctl enable filebeat

systemctl status filebeat

● filebeat.service - filebeat

Loaded: loaded (/usr/lib/systemd/system/filebeat.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2016-10-11 17:23:03 CST; 2min 56s ago

Docs: https://www.elastic.co/guide/en/beats/filebeat/current/index.html

Main PID: 22452 (filebeat)

CGroup: /system.slice/filebeat.service

└─22452 /usr/bin/filebeat -c /etc/filebeat/filebeat.yml

Oct 11 17:23:03 localhost.localdomain systemd[1]: Started filebeat.

Oct 11 17:23:03 localhost.localdomain systemd[1]: Starting filebeat...

Oct 11 17:23:31 localhost.localdomain systemd[1]: Started filebeat.

通过上面可以看出,客户端filebeat进程已经和 elk 服务器连接了。下面去验证。

vim /etc/init.d/elasticsearch

修改如下两行:

LOG_DIR="/data/elasticsearch/log"

DATA_DIR="/data/elasticsearch/data"

创建存储数据和本身日志的目录

mkdir /data/elasticsearch/data -p

mkdir /data/elasticsearch/log -p

chown -R elasticsearch.elasticsearch /data/elasticsearch/

重启服务生效

/etc/init.d/elasticsearch

如果是编译安装的,配置存储目录是elasticsearch.yml如下:

cat /usr/local/elasticsearch/config/elasticsearch.yml | egrep -v "^$|^#"

path.data: /tmp/elasticsearch/data

path.logs: /tmp/elasticsearch/logs

network.host: X.X.X.X

network.port: 9200

logstash日志存放目录修改:

vim /etc/init.d/logstash

LS_LOG_DIR=/data/logstash

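Overview:

Logs mainly comprise system logs, application logs, and security logs. Operations staff and developers use them to learn about a server's hardware and software, to find configuration errors, and to trace the cause of those errors. Analyzing logs regularly also reveals a server's load, performance, and security posture, so that problems can be corrected in time.

Logs are usually scattered across different devices. If you manage dozens or hundreds of servers and still read logs by logging in to each machine in turn, the work is tedious and inefficient. The first step is centralized log management, for example using the open-source syslog to collect the logs from every server in one place.

Once logs are centralized, searching and aggregating them becomes the next chore. Linux commands such as grep, awk, and wc handle basic searching and counting, but they struggle with more demanding queries, sorting, and statistics across a large number of machines.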
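As a small illustration of the grep/awk/wc style of analysis on a single machine, the sketch below counts log lines per program in syslog-formatted input. The function name `count_by_program` and the sample lines are made up for this example and are not part of the original setup.

```shell
#!/bin/sh
# Count log lines per program name in syslog-formatted input.
# Field 5 of a classic syslog line looks like "sshd[1234]:" or "kernel:".
count_by_program() {
  awk '{ prog = $5; sub(/\[[0-9]+\]:$|:$/, "", prog); count[prog]++ }
       END { for (p in count) print count[p], p }' "$@" | sort -k1,1nr -k2,2
}

# Hypothetical sample data, only for demonstration.
sample_log() {
  cat <<'EOF'
Oct 12 14:05:46 rsyslog sshd[2042]: Accepted password for root from 192.168.3.17
Oct 12 14:05:47 rsyslog sshd[2042]: pam_unix(sshd:session): session opened
Oct 12 14:06:01 rsyslog crond[2238]: (root) CMD (run-parts /etc/cron.hourly)
EOF
}

sample_log | count_by_program
```

On a real host the same function could be pointed at a file, for example `count_by_program /var/log/messages`. It works, but it has to be repeated on every machine, which is the limitation the paragraph above describes.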

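The open-source real-time log analysis platform ELK addresses the problems above. It consists of three open-source tools: Elasticsearch, Logstash, and Kibana. Official site: https://www.elastic.co/products

Elasticsearch is an open-source distributed search engine. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replication, a RESTful interface, multiple data sources, and automatic search load balancing. ELK itself is not a single program but a collection of the open-source tools Elasticsearch, Logstash, and Kibana, offered together as an open-source log management solution. It can take logs from any source in any format, search and analyze them, and display the results in real time. Add-ons such as Shield (security), Watcher (alerting), and Marvel (monitoring) extend what the stack can do.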

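Elasticsearch: search; provides the distributed full-text search engine

Logstash: collects, manages, and stores logs

Kibana: web interface for filtering and displaying logs

Filebeat: watches log files and forwards them

How the pieces work together is shown in the figure below: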

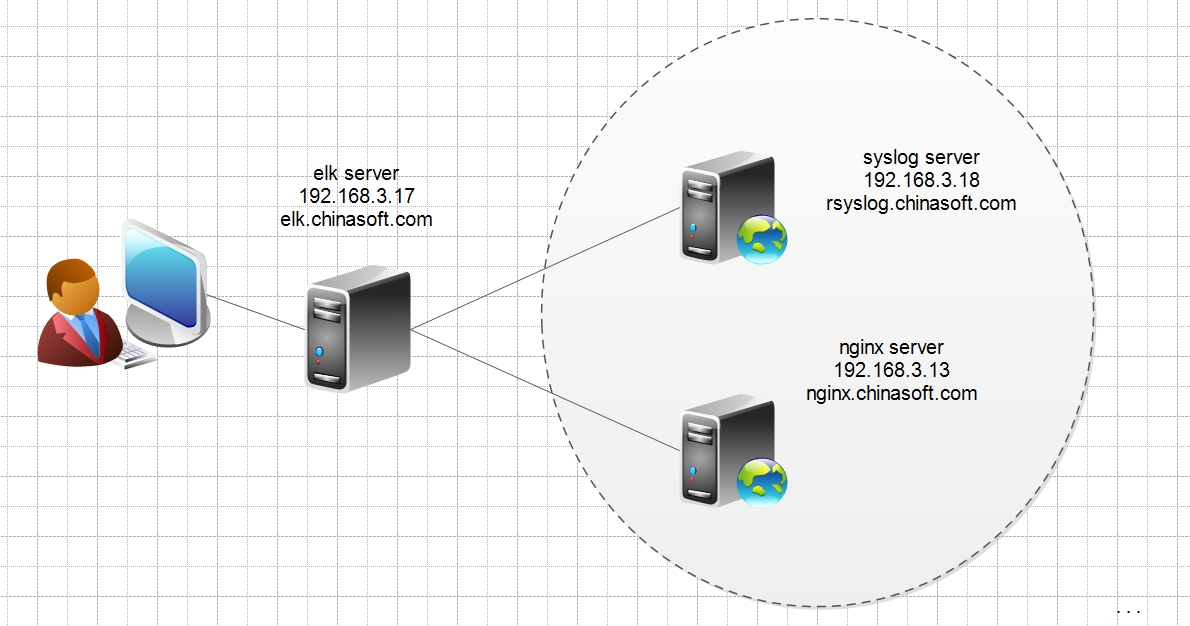

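I. Test environment layout

Operating system: CentOS 6.5 x86_64

ELK server: 192.168.3.17

To avoid interference, stop the firewall and put SELinux into permissive mode

service iptables stop

chkconfig iptables off

setenforce 0

Update the hosts file on all three machines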

cat /etc/hosts

192.168.3.17 elk.chinasoft.com

192.168.3.18 rsyslog.chinasoft.com

192.168.3.13 nginx.chinasoft.com
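Because the same three entries have to be added on every machine, a small helper that appends an entry only when the host name is missing can be convenient. This is a sketch; `add_host_entry` is a hypothetical helper name, and the demonstration writes to a scratch file rather than /etc/hosts.

```shell
#!/bin/sh
# Append "IP hostname" to a hosts file only if the host name is not already listed.
#   $1 = IP address, $2 = host name, $3 = hosts file (defaults to /etc/hosts)
add_host_entry() {
  hosts_ip="$1"; hosts_name="$2"; hosts_file="${3:-/etc/hosts}"
  # awk exits 0 when the name appears as a whole field after the IP column.
  if ! awk -v n="$hosts_name" \
       '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
       "$hosts_file" 2>/dev/null; then
    printf '%s %s\n' "$hosts_ip" "$hosts_name" >> "$hosts_file"
  fi
}

# Demonstration on a temporary file; running it twice adds nothing new.
scratch_hosts="$(mktemp)"
add_host_entry 192.168.3.17 elk.chinasoft.com     "$scratch_hosts"
add_host_entry 192.168.3.18 rsyslog.chinasoft.com "$scratch_hosts"
add_host_entry 192.168.3.13 nginx.chinasoft.com   "$scratch_hosts"
add_host_entry 192.168.3.17 elk.chinasoft.com     "$scratch_hosts"
cat "$scratch_hosts"
```

Dropping the third argument makes the function write to /etc/hosts, which requires root.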

Set the host name:

hostname elk.chinasoft.com

mkdir -p /data/elk

Download the ELK packages

cd /data/elk

wget -c https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/rpm/elasticsearch/2.3.3/elasticsearch-2.3.3.rpm

wget -c https://download.elastic.co/logstash/logstash/packages/centos/logstash-2.3.2-1.noarch.rpm

wget -c https://download.elastic.co/kibana/kibana/kibana-4.5.1-1.x86_64.rpm

wget -c https://download.elastic.co/beats/filebeat/filebeat-1.2.3-x86_64.rpm

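The server only needs Elasticsearch, Logstash, and Kibana; the clients only need Filebeat.

Before installing Elasticsearch, install the JDK: the ELK server needs a Java runtime. The clients run Filebeat, which does not depend on Java, so no JDK is needed there.

II. Operations on the ELK server

1. Install JDK 8 and Elasticsearch

rpm -ivh jdk-8u102-linux-x64.rpm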

yum localinstall elasticsearch-2.3.3.rpm -y

Start the service

service elasticsearch start

chkconfig elasticsearch on

List the configuration files installed by the package

rpm -qc elasticsearch

/etc/elasticsearch/elasticsearch.yml

/etc/elasticsearch/logging.yml

/etc/init.d/elasticsearch

/etc/sysconfig/elasticsearch

/usr/lib/sysctl.d/elasticsearch.conf

/usr/lib/systemd/system/elasticsearch.service

/usr/lib/tmpfiles.d/elasticsearch.conf

Confirm that it started normally

netstat -nltp | grep java

tcp 0 0 ::ffff:127.0.0.1:9200 :::* LISTEN 1927/java

tcp 0 0 ::1:9200 :::* LISTEN 1927/java

tcp 0 0 ::ffff:127.0.0.1:9300 :::* LISTEN 1927/java

tcp 0 0 ::1:9300 :::* LISTEN 1927/java

2. Install Kibana

yum localinstall kibana-4.5.1-1.x86_64.rpm -y

service kibana start

chkconfig kibana on

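Check that Kibana is running (by default the process is named node and it listens on port 5601)

ss -tunlp|grep 5601

tcp LISTEN 0 128 *:5601 *:* users:(("node",2042,11))

Watch the log

tail -f /var/log/kibana/kibana.stdout

At this point you can open a browser and visit the Kibana server at http://192.168.3.17:5601/ to confirm that it works, as shown in the figure below:

3. Install Logstash and add its configuration file

yum localinstall logstash-2.3.2-1.noarch.rpm -y

Generate the certificate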

cd /etc/pki/tls/

openssl req -subj '/CN=elk.chinasoft.com/' -x509 -days 3650 -batch -nodes -newkey rsa:2048 -keyout private/logstash-forwarder.key -out certs/logstash-forwarder.crt

enerating a 2048 bit RSA private key ..............................................+++ ........................................................................................................................................................+++ writing new private key to 'private/logstash-forwarder.key' -----
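The CN in the certificate should match the host name the clients use to reach Logstash (elk.chinasoft.com here), otherwise the TLS handshake from Filebeat is likely to fail. The sketch below prints a certificate's subject so the CN can be checked. `cert_subject` is a hypothetical helper, and the demonstration generates a throwaway certificate in a scratch directory with the same options as above.

```shell
#!/bin/sh
# Print the subject line of a certificate so its CN can be compared with
# the host name that clients will connect to.
cert_subject() {
  openssl x509 -in "$1" -noout -subject
}

# Demonstration: create a throwaway key and certificate in a scratch directory.
workdir="$(mktemp -d)"
openssl req -subj '/CN=elk.chinasoft.com/' -x509 -days 3650 -batch -nodes \
  -newkey rsa:2048 -keyout "$workdir/demo.key" -out "$workdir/demo.crt" 2>/dev/null
cert_subject "$workdir/demo.crt"
```

On the ELK server the same check would be `cert_subject /etc/pki/tls/certs/logstash-forwarder.crt`. The exact formatting of the subject line varies between OpenSSL versions.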

Next, create the Logstash configuration file as follows:

vim /etc/logstash/conf.d/01-logstash-initial.conf

input {
  # Receive events from Filebeat over TLS on port 5000
  beats {
    port => 5000
    type => "logs"
    ssl => true
    ssl_certificate => "/etc/pki/tls/certs/logstash-forwarder.crt"
    ssl_key => "/etc/pki/tls/private/logstash-forwarder.key"
  }
}
filter {
  # Only events whose Filebeat document_type is syslog-beat are parsed
  if [type] == "syslog-beat" {
    grok {
      match => { "message" => "%{SYSLOGTIMESTAMP:syslog_timestamp} %{SYSLOGHOST:syslog_hostname} %{DATA:syslog_program}(?:\[%{POSINT:syslog_pid}\])?: %{GREEDYDATA:syslog_message}" }
      add_field => [ "received_at", "%{@timestamp}" ]
      add_field => [ "received_from", "%{host}" ]
    }
    geoip {
      source => "clientip"
    }
    syslog_pri {}
    # Use the timestamp from the log line as the event time
    date {
      match => [ "syslog_timestamp", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
    }
  }
}
output {
  # With no options, events go to Elasticsearch on localhost
  elasticsearch { }
  stdout { codec => rubydebug }
}
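To get a feel for what the grok pattern in the filter extracts, the sketch below approximates it with a plain extended regular expression in sed. It is only an approximation of `%{SYSLOGTIMESTAMP}`, `%{SYSLOGHOST}`, `%{DATA}`, `%{POSINT}`, and `%{GREEDYDATA}`, not Logstash's real pattern definitions. `parse_syslog_line` is a hypothetical helper and the sample lines are invented.

```shell
#!/bin/sh
# Approximate the syslog grok pattern with a POSIX extended regex.
# Prints: timestamp|hostname|program|pid|message
# Prints _grokparsefailure (the tag grok adds on a failed match) otherwise.
parse_syslog_line() {
  parsed=$(printf '%s\n' "$1" | sed -nE \
    's/^([A-Z][a-z]{2} +[0-9]{1,2} [0-9]{2}:[0-9]{2}:[0-9]{2}) ([^ ]+) ([^ :[]+)(\[([0-9]+)\])?: (.*)$/\1|\2|\3|\5|\6/p')
  if [ -n "$parsed" ]; then
    printf '%s\n' "$parsed"
  else
    echo "_grokparsefailure"
    return 1
  fi
}

# A syslog-style line matches and is split into fields.
parse_syslog_line 'Oct 12 14:05:46 rsyslog sshd[2042]: Accepted password for root'

# An nginx access-log line does not look like syslog, so it fails to match.
parse_syslog_line '192.168.3.13 - - [12/Oct/2016:14:05:46 +0800] "GET / HTTP/1.1" 200 612' || true
```

The second call suggests that log files which are not in syslog format would need their own document_type and grok pattern if their fields are to be parsed.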

Start Logstash and check the port; the configuration file above uses port 5000

service logstash start

ss -tunlp|grep 5000

tcp LISTEN 0 50 :::5000 :::* users:(("java",2238,14))

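Modify the Elasticsearch configuration file

Look at the directory and create a folder named es-01 (the name is arbitrary). logging.yml ships with the package; elasticsearch.yml is a newly created file with the contents shown below: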

cd /etc/elasticsearch/

ll

total 12

-rwxr-x--- 1 root elasticsearch 3189 May 12 21:24 elasticsearch.yml

-rwxr-x--- 1 root elasticsearch 2571 May 12 21:24 logging.yml

drwxr-x--- 2 root elasticsearch 4096 May 17 23:49 scripts

[root@centossz008 elasticsearch]# tree

├── elasticsearch.yml

├── es-01

│ ├── elasticsearch.yml

│ └── logging.yml

├── logging.yml

└── scripts

mkdir es-01

vim es-01/elasticsearch.yml

http:
  port: 9200
network:
  host: elk.chinasoft.com
node:
  name: elk.chinasoft.com
path:
  data: /etc/elasticsearch/data/es-01

Restart the elasticsearch and logstash services.

service elasticsearch restart

Stopping elasticsearch: [ OK ]

Starting elasticsearch: [ OK ]

# service logstash restart

Killing logstash (pid 2238) with SIGTERM

Waiting logstash (pid 2238) to die...

Waiting logstash (pid 2238) to die...

logstash stopped.

logstash started.

Copy the Filebeat package and the certificate to the rsyslog and nginx clients

scp filebeat-1.2.3-x86_64.rpm root@rsyslog.chinasoft.com:/root

scp filebeat-1.2.3-x86_64.rpm root@nginx.chinasoft.com:/root

scp /etc/pki/tls/certs/logstash-forwarder.crt rsyslog.chinasoft.com:/root

scp /etc/pki/tls/certs/logstash-forwarder.crt nginx.chinasoft.com:/root

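III. Deploying Filebeat on the clients (run on the rsyslog and nginx clients)

Filebeat is a lightweight tool that collects logs from files on a server and forwards them to the Logstash server for processing. The Filebeat client talks to the Logstash instance over the secure Beats protocol; the underlying lumberjack protocol is designed for reliability and low latency. Filebeat uses the computing resources of the machine that hosts the source data, and the Beats input plugin keeps the resource demands on Logstash to a minimum.

(node1, rsyslog.chinasoft.com) Install Filebeat, copy the certificate, and create the log collection configuration files

yum localinstall filebeat-1.2.3-x86_64.rpm -y

# Copy the certificate to the expected directory on this machine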

[root@rsyslog elk]# cp logstash-forwarder.crt /etc/pki/tls/certs/.

[root@rsyslog elk]# cd /etc/filebeat/

tree

.

├── conf.d

│ ├── authlogs.yml

│ └── syslogs.yml

├── filebeat.template.json

├── filebeat.yml

└── filebeat.yml.bak

Three files are modified. filebeat.yml defines the connection to the Logstash server; the two files under conf.d define which logs to watch. Their contents follow:

mkdir conf.d

vim filebeat.yml

------------------------------------------

filebeat:
  spool_size: 1024
  idle_timeout: 5s
  registry_file: .filebeat
  config_dir: /etc/filebeat/conf.d
output:
  logstash:
    hosts:
      - elk.chinasoft.com:5000
    tls:
      certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]
    enabled: true
shipper: {}
logging: {}
runoptions: {}

------------------------------------------

vim conf.d/authlogs.yml

------------------------------------------

filebeat:
  prospectors:
    - paths:
        - /var/log/secure
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

------------------------------------------

vim conf.d/syslogs.yml

------------------------------------------

filebeat:
  prospectors:
    - paths:
        - /var/log/messages
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

------------------------------------------

vim conf.d/flowsdk.yml

------------------------------------------

filebeat:
  prospectors:
    - paths:
        - /data/logs/flowsdk.log
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

------------------------------------------
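The three prospector files above differ only in the watched path, so they could be generated from a small template instead of being edited by hand. The sketch below is one way to do that. `write_prospector` is a hypothetical helper, it writes only a subset of the options shown above, and the demonstration uses a scratch directory instead of /etc/filebeat/conf.d.

```shell
#!/bin/sh
# Write a minimal Filebeat 1.x prospector file.
#   $1 = output file, $2 = log path to watch, $3 = document_type
write_prospector() {
  cat > "$1" <<EOF
filebeat:
  prospectors:
    - paths:
        - $2
      encoding: plain
      input_type: log
      ignore_older: 24h
      document_type: $3
      scan_frequency: 10s
EOF
}

# Demonstration in a scratch directory; a real client would use /etc/filebeat/conf.d.
conf_dir="$(mktemp -d)"
write_prospector "$conf_dir/authlogs.yml" /var/log/secure        syslog-beat
write_prospector "$conf_dir/syslogs.yml"  /var/log/messages      syslog-beat
write_prospector "$conf_dir/flowsdk.yml"  /data/logs/flowsdk.log syslog-beat
ls "$conf_dir"
```

The third argument makes it easy to give a differently formatted log its own document_type, which the Logstash filter can then test with `if [type] == ...`.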

After the changes, start the filebeat service

service filebeat start

chkconfig filebeat on

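Error:

service filebeat start

Starting filebeat: 2016/10/12 14:05:46 No paths given. What files do you want me to watch?

Analysis:

The files to be watched had not been written to within the last 24 hours. Adding another file, such as /var/log/messages, resolved the error.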
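To check whether a watched file falls outside the 24h `ignore_older` window, it can help to list which files in a log directory were modified recently. The following is a sketch that assumes GNU find and touch. `recent_logs` is a hypothetical helper and the demonstration uses a scratch directory.

```shell
#!/bin/sh
# List regular files directly inside a directory that were modified within
# the last N minutes (default 1440 minutes = 24 hours).
#   $1 = directory, $2 = maximum age in minutes
recent_logs() {
  find "$1" -maxdepth 1 -type f -mmin "-${2:-1440}" | sort
}

# Demonstration: one fresh file and one file back-dated by two days.
log_dir="$(mktemp -d)"
touch "$log_dir/messages"
touch -d '2 days ago' "$log_dir/flowsdk.log"
recent_logs "$log_dir"
```

On a client, `recent_logs /var/log` or `recent_logs /data/logs` would show which of the configured paths are still being written to.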

(node2, nginx.chinasoft.com) Install Filebeat, copy the certificate, and create the log collection configuration files

yum localinstall filebeat-1.2.3-x86_64.rpm -y

cp logstash-forwarder.crt /etc/pki/tls/certs/.

cd /etc/filebeat/

tree

.

├── conf.d

│ ├── nginx.yml

│ └── syslogs.yml

├── filebeat.template.json

└── filebeat.yml

Change filebeat.yml to the following:

vim filebeat.yml

------------------------------------------

filebeat:
  spool_size: 1024
  idle_timeout: 5s
  registry_file: .filebeat
  config_dir: /etc/filebeat/conf.d
output:
  logstash:
    hosts:
      - elk.chinasoft.com:5000
    tls:
      certificate_authorities: ["/etc/pki/tls/certs/logstash-forwarder.crt"]
    enabled: true
shipper: {}
logging: {}
runoptions: {}

------------------------------------------

syslogs.yml & nginx.yml

vim conf.d/syslogs.yml

------------------------------------------

filebeat:
  prospectors:
    - paths:
        - /var/log/messages
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

------------------------------------------

vim conf.d/nginx.yml

------------------------------------------

filebeat:
  prospectors:
    - paths:
        - /var/log/nginx/access.log
      encoding: plain
      fields_under_root: false
      input_type: log
      ignore_older: 24h
      document_type: syslog-beat
      scan_frequency: 10s
      harvester_buffer_size: 16384
      tail_files: false
      force_close_files: false
      backoff: 1s
      max_backoff: 1s
      backoff_factor: 2
      partial_line_waiting: 5s
      max_bytes: 10485760

------------------------------------------

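After the changes, start the filebeat service and check the filebeat process

Reload systemd so that it picks up new or changed units, then start the service and enable it at boot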

systemctl daemon-reload

systemctl start filebeat

systemctl enable filebeat

systemctl status filebeat

● filebeat.service - filebeat

Loaded: loaded (/usr/lib/systemd/system/filebeat.service; enabled; vendor preset: disabled)

Active: active (running) since Tue 2016-10-11 17:23:03 CST; 2min 56s ago

Docs: https://www.elastic.co/guide/en/beats/filebeat/current/index.html

Main PID: 22452 (filebeat)

CGroup: /system.slice/filebeat.service

└─22452 /usr/bin/filebeat -c /etc/filebeat/filebeat.yml

Oct 11 17:23:03 localhost.localdomain systemd[1]: Started filebeat.

Oct 11 17:23:03 localhost.localdomain systemd[1]: Starting filebeat...

Oct 11 17:23:31 localhost.localdomain systemd[1]: Started filebeat.

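The output above shows that the filebeat process on the client is running and should now be shipping logs to the ELK server. The next step verifies this.

IV. Verification: open Kibana at http://192.168.3.17:5601

Before Kibana can be used, at least one index name or pattern must be configured; it tells Kibana which Elasticsearch indices to analyze. Here the default is used. Kibana automatically loads the fields of the documents in those indices and picks a suitable field as the time field for charts.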
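Kibana 4 defaults to the index pattern `logstash-*`. This matches what Logstash writes when the elasticsearch output has no index option, namely one index per day named `logstash-YYYY.MM.dd` in UTC. The sketch below computes the index name for a given day, assuming GNU date. `logstash_index_for` is a hypothetical helper.

```shell
#!/bin/sh
# Print the default daily Logstash index name for a date (UTC).
#   $1 = any date string GNU date understands; defaults to the current time
logstash_index_for() {
  date -u -d "${1:-now}" +logstash-%Y.%m.%d
}

logstash_index_for 2016-10-12
logstash_index_for
```

If Elasticsearch is reachable on 127.0.0.1:9200 as in the netstat output earlier, `curl 'http://127.0.0.1:9200/_cat/indices?v'` should list an index with today's name once the clients have shipped their first events.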

This concludes the ELK setup and a first look at using it.

vim /etc/init.d/elasticsearch

Change the following two lines:

LOG_DIR="/data/elasticsearch/log"

DATA_DIR="/data/elasticsearch/data"

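Create the directories for the data and for Elasticsearch's own logs

mkdir /data/elasticsearch/data -p

mkdir /data/elasticsearch/log -p

chown -R elasticsearch:elasticsearch /data/elasticsearch/

Restart the service for the change to take effect

/etc/init.d/elasticsearch restart

If Elasticsearch was installed manually rather than from the RPM, the storage directories are set in elasticsearch.yml, as follows: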

cat /usr/local/elasticsearch/config/elasticsearch.yml | egrep -v "^$|^#"

path.data: /tmp/elasticsearch/data

path.logs: /tmp/elasticsearch/logs

network.host: X.X.X.X

http.port: 9200

Changing the Logstash log directory:

vim /etc/init.d/logstash

LS_LOG_DIR=/data/logstash
