Docker for Quickly Clustering Front-End and Back-End Projects ⑤: Building a Percona MySQL PXC Cluster in Docker

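Running clusters of stateful services such as MySQL or Redis in Docker is not a sound choice for production. Database data is critical, the load is heavy and the performance requirements are high, and if the cluster crashes there is little you can do besides restarting Docker, whose impact on the business is hard to control.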

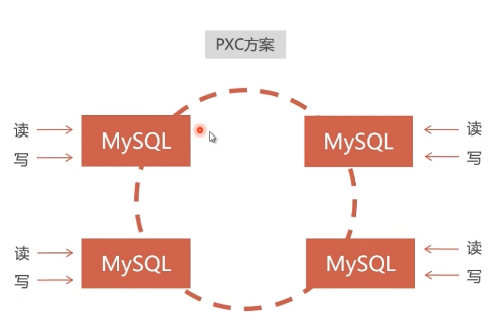

Deploying the PXC cluster (Percona DB)

Reference: https://hub.docker.com/r/percona/percona-xtradb-cluster/

# docker pull docker.io/percona/percona-xtradb-cluster

Give the image a shorter name (tag it as pxc)

[root@server01 ~]# docker images

[root@server01 ~]# docker tag docker.io/percona/percona-xtradb-cluster pxc

For security, create a dedicated internal Docker network for the PXC cluster.

Create the custom subnet 172.18.0.0/24; docker inspect net1 shows the network details.

[root@server01 ~]# docker network create --subnet=172.18.0.0/24 net1

[root@server01 ~]# docker inspect net1
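If you repeat these steps, docker network create fails once net1 already exists. A minimal idempotent sketch, where the function name ensure_net is hypothetical; it relies only on docker network inspect exiting non-zero for an unknown network:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a user-defined network only if it does not exist yet.
# Usage: ensure_net <name> <subnet>
ensure_net() {
  local name="$1" subnet="$2"
  # docker network inspect exits non-zero when the network is unknown
  if docker network inspect "$name" >/dev/null 2>&1; then
    echo "network $name already exists"
  else
    docker network create --subnet="$subnet" "$name"
  fi
}
```

Usage: ensure_net net1 172.18.0.0/24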

Docker storage

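Container data can usually be mapped directly to a host directory, but PXC cannot use a direct mapping and needs a Docker volume instead.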

# Create a volume

[root@server01 ~]# docker volume create v1

# Inspect the volume

[root@server01 ~]# docker inspect v1

# Remove the volume

[root@server01 ~]# docker volume rm v1

Creating the cluster

Create a volume for each PXC node

We build a 5-node PXC cluster

1. Create 5 volumes

[root@server01 ~]# docker volume create v1

[root@server01 ~]# docker volume create v2

[root@server01 ~]# docker volume create v3

[root@server01 ~]# docker volume create v4

[root@server01 ~]# docker volume create v5

[root@server01 ~]# docker volume ls

DRIVER VOLUME NAME

local v1

local v2

local v3

local v4

local v5
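The five docker volume create commands above can also be written as a loop. A sketch; the function name create_volumes and its count argument are assumptions for illustration:

```shell
#!/usr/bin/env bash
# Hypothetical helper: create volumes v1..vN, one per PXC node.
# Usage: create_volumes <count>
create_volumes() {
  local count="$1" i
  for i in $(seq 1 "$count"); do
    docker volume create "v$i"
  done
}
```

Usage: create_volumes 5 && docker volume ls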

Create the first node

[root@server01 ~]# docker run -d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -v v1:/var/lib/mysql --privileged --name=pxcnode01 --net=net1 --ip 172.18.0.2 pxc

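After creating the first PXC instance, wait until it is running and accepts client connections before going on: node2, node3 and the rest must sync with node1 when they start, and if the sync fails they exit right away.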

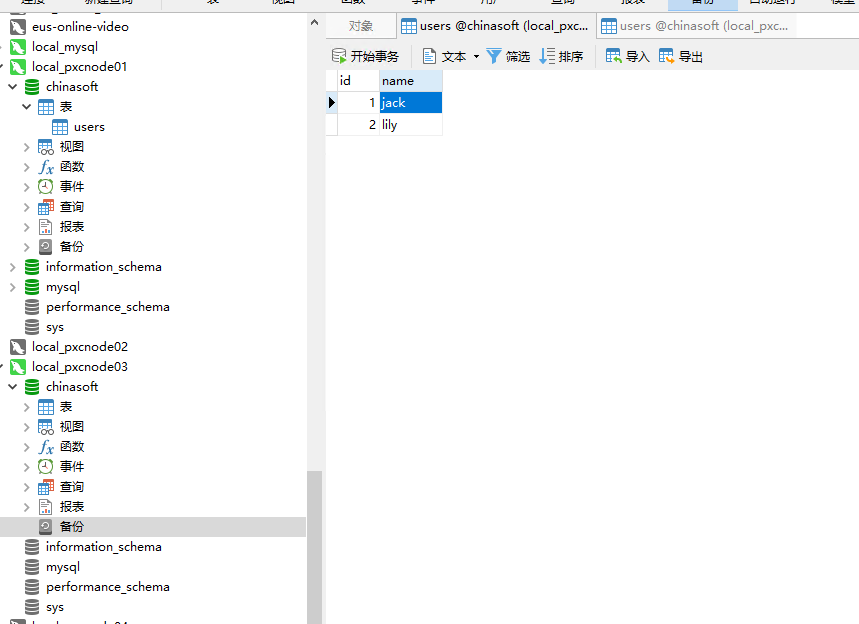

Use a client tool to check that the connection works.
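Instead of checking by hand, you can poll node 1 until it answers before starting the other nodes. A sketch with a hypothetical retry helper; it assumes the mysql client is available inside the pxc image and uses -h127.0.0.1 so the check goes over TCP rather than the local socket:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a command up to <attempts> times, sleeping <delay> seconds
# between tries; returns 0 on the first success, 1 if every attempt failed.
# Usage: retry <attempts> <delay> <command...>
retry() {
  local attempts="$1" delay="$2" n=1
  shift 2
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "giving up after $n attempts: $*" >&2
      return 1
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}
```

Usage, waiting up to about 150 seconds for node 1: retry 30 5 docker exec pxcnode01 mysql -h127.0.0.1 -uroot -proot123456 -e 'SELECT 1'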

Create the remaining 4 PXC nodes one after another (the first command repeats node 1 from above):

docker run -d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -v v1:/var/lib/mysql --privileged --name=pxcnode01 --net=net1 --ip 172.18.0.2 pxc

docker run -d -p 3307:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=node1 -v v2:/var/lib/mysql --privileged --name=pxcnode02 --net=net1 --ip 172.18.0.3 pxc

docker run -d -p 3308:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=node1 -v v3:/var/lib/mysql --privileged --name=pxcnode03 --net=net1 --ip 172.18.0.4 pxc

docker run -d -p 3309:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=node1 -v v4:/var/lib/mysql --privileged --name=pxcnode04 --net=net1 --ip 172.18.0.5 pxc

docker run -d -p 3310:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=node1 -v v5:/var/lib/mysql --privileged --name=pxcnode05 --net=net1 --ip 172.18.0.6 pxc

The image pulled by default was Percona 8.0, but the second node would not start and reported an SSL certificate error (most likely because PXC 8.0 encrypts cluster traffic by default, which requires all nodes to share the same certificates). So I switched to Percona XtraDB Cluster 5.7:

# docker pull percona/percona-xtradb-cluster:5.7

# docker tag percona/percona-xtradb-cluster:5.7 pxc

The volumes already contain data files written by Percona 8.0, and 5.7 will not start on them, so they must be emptied. docker volume rm refuses to delete a volume that is still used by a container, so remove the old containers first (for example docker rm -f pxcnode01), and recreate every volume an 8.0 node has already written to, not only v1:

# docker volume rm v1

# docker volume create v1

# Create the nodes again; CLUSTER_JOIN now names the first node's container, pxcnode01

docker run -d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -v v1:/var/lib/mysql --privileged --name=pxcnode01 --net=net1 --ip 172.18.0.2 pxc

docker run -d -p 3307:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=pxcnode01 -v v2:/var/lib/mysql --privileged --name=pxcnode02 --net=net1 --ip 172.18.0.3 pxc

docker run -d -p 3308:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=pxcnode01 -v v3:/var/lib/mysql --privileged --name=pxcnode03 --net=net1 --ip 172.18.0.4 pxc

docker run -d -p 3309:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=pxcnode01 -v v4:/var/lib/mysql --privileged --name=pxcnode04 --net=net1 --ip 172.18.0.5 pxc

docker run -d -p 3310:3306 -e MYSQL_ROOT_PASSWORD=root123456 -e CLUSTER_NAME=pxc01 -e XTRABACKUP_PASSWORD=root123456 -e CLUSTER_JOIN=pxcnode01 -v v5:/var/lib/mysql --privileged --name=pxcnode05 --net=net1 --ip 172.18.0.6 pxc

# In the end all nodes are running

[root@server01 ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

1561e31c4952 pxc "/entrypoint.sh my..." 6 seconds ago Up 5 seconds 4567-4568/tcp, 0.0.0.0:3310->3306/tcp pxcnode05

befc01d39968 pxc "/entrypoint.sh my..." 25 seconds ago Up 24 seconds 4567-4568/tcp, 0.0.0.0:3309->3306/tcp pxcnode04

0e56217ade2c pxc "/entrypoint.sh my..." 44 seconds ago Up 44 seconds 4567-4568/tcp, 0.0.0.0:3308->3306/tcp pxcnode03

39f47c1c7357 pxc "/entrypoint.sh my..." About a minute ago Up About a minute 4567-4568/tcp, 0.0.0.0:3307->3306/tcp pxcnode02

c989361c6d63 pxc "/entrypoint.sh my..." 5 minutes ago Up 5 minutes 0.0.0.0:3306->3306/tcp, 4567-4568/tcp pxcnode01

# Wait until all nodes have been created and accept client connections

Then verify that replication works: create a database and a table on node01, insert some data, and check that it appears on node03.
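The sync check described above can be scripted with docker exec and the mysql client inside the containers. A sketch that assumes the container names and root password used above; the database pxc_test and all function names are hypothetical. wsrep_cluster_size is the Galera status variable holding the number of nodes in the cluster, so it should report 5 here. The test table gets a primary key because PXC's default pxc_strict_mode rejects writes to tables without one.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the PXC cluster; container names and the root
# password follow the docker run commands above.
ROOT_PASSWORD="root123456"

# Run SQL on a node and print the result without column headers.
sql_on() {
  local node="$1" query="$2"
  docker exec "$node" mysql -h127.0.0.1 -uroot -p"$ROOT_PASSWORD" -N -e "$query"
}

# Print how many nodes the given node currently sees in the cluster.
cluster_size() {
  # SHOW STATUS prints "wsrep_cluster_size<TAB>5"; keep only the value column
  sql_on "$1" "SHOW STATUS LIKE 'wsrep_cluster_size'" | awk '{print $2}'
}

# Write a row on one node, then read it back on another node.
check_replication() {
  local writer="$1" reader="$2"
  sql_on "$writer" "CREATE DATABASE IF NOT EXISTS pxc_test;
    CREATE TABLE IF NOT EXISTS pxc_test.t (id INT PRIMARY KEY, note VARCHAR(32));
    REPLACE INTO pxc_test.t VALUES (1, 'hello from $writer');"
  sql_on "$reader" "SELECT note FROM pxc_test.t WHERE id = 1"
}
```

Usage: cluster_size pxcnode01 should print 5, and check_replication pxcnode01 pxcnode03 should print hello from pxcnode01 if the row was replicated to node03.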

Error:

[root@server01 ~]# docker start pxcnode03

Error response from daemon: driver failed programming external connectivity on endpoint pxcnode03 (982c64d5b204741d8b0b2c6ee1630756643e75199ad27ced845f011525593c81): (iptables failed: iptables --wait -t nat -A DOCKER -p tcp -d 0/0 --dport 3308 -j DNAT --to-destination 172.18.0.4:3306 ! -i br-a90d3111cc8b: iptables: No chain/target/match by that name. (exit status 1))

Error: failed to start containers: pxcnode03

The DOCKER chain in the nat table no longer exists, so Docker cannot add the port-forwarding rule (a frequent trigger is reloading firewall rules, for example restarting firewalld, after Docker has started). Fix: restart Docker, which recreates its iptables chains:

[root@server01 ~]# systemctl restart docker

Error when starting the cluster:

# docker logs pxcnode01

2020-07-28T21:41:01.087855Z 0 [ERROR] WSREP: failed to open gcomm backend connection: 131: No address to connect (FATAL) at gcomm/src/gmcast.cpp:connect_precheck():311

2020-07-28T21:41:01.087884Z 0 [ERROR] WSREP: gcs/src/gcs_core.cpp:gcs_core_open():209: Failed to open backend connection: -131 (State not recoverable)

2020-07-28T21:41:01.164568Z 0 [ERROR] WSREP: gcs/src/gcs.cpp:gcs_open():1514: Failed to open channel 'pxc01' at 'gcomm://pxcnode01': -131 (State not recoverable)

2020-07-28T21:41:01.164614Z 0 [ERROR] WSREP: gcs connect failed: State not recoverable

2020-07-28T21:41:01.164620Z 0 [ERROR] WSREP: Provider/Node (gcomm://pxcnode01) failed to establish connection with cluster (reason: 7)

2020-07-28T21:41:01.164623Z 0 [ERROR] Aborting

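Fix: Galera only lets a node bootstrap a new cluster when its grastate.dat contains safe_to_bootstrap: 1, and after all nodes go down at once (for example when the Docker daemon is restarted) the value is 0 on every node. What fixed it here was setting it to 1 in the first node's volume while the containers were stopped, then starting pxcnode01 first and the other nodes after it. Ideally, bootstrap from the node with the highest seqno, since it holds the most recent data.

[root@server01 ~]# docker inspect v1

[ { "Driver": "local", "Labels": {}, "Mountpoint": "/var/lib/docker/volumes/v1/_data", "Name": "v1", "Options": {}, "Scope": "local" } ]

[root@server01 ~]# cat /var/lib/docker/volumes/v1/_data/grastate.dat

# GALERA saved state

version: 2.1

uuid: 7751e544-ce81-11ea-9de6-3784633f45d7

seqno: 27

# changed from 0 to 1

safe_to_bootstrap: 1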
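The manual edit above can also be done with sed. A sketch assuming the default local volume driver, so each grastate.dat lives under /var/lib/docker/volumes/<volume>/_data as docker inspect shows; the function names and the VOLUME_ROOT variable are hypothetical. show_seqno prints each volume's seqno so you can pick the most advanced node. A seqno of -1 means the node did not shut down cleanly and its position has to be recovered first, which this sketch does not handle.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for restarting a PXC cluster whose nodes are all stopped.
# Assumes the local volume driver, i.e. /var/lib/docker/volumes/<volume>/_data.
VOLUME_ROOT="${VOLUME_ROOT:-/var/lib/docker/volumes}"

# Print "<volume> <seqno>" for each volume, read from its grastate.dat.
show_seqno() {
  local v
  for v in "$@"; do
    awk -v vol="$v" '$1 == "seqno:" {print vol, $2}' "$VOLUME_ROOT/$v/_data/grastate.dat"
  done
}

# Flip safe_to_bootstrap from 0 to 1 for the node that will be started first.
# Run this only while that node's container is stopped.
mark_safe_to_bootstrap() {
  sed -i 's/^safe_to_bootstrap: 0$/safe_to_bootstrap: 1/' "$VOLUME_ROOT/$1/_data/grastate.dat"
}
```

Usage: show_seqno v1 v2 v3 v4 v5, then mark_safe_to_bootstrap v1 (or whichever volume has the highest seqno) and docker start that node before the others.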
