Deploying a multi-node Kubernetes cluster with kubeadm

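Set the hostnames

Run the matching command on each machine:

    hostnamectl set-hostname master-1 && exec bash   # on the master
    hostnamectl set-hostname node-1 && exec bash     # on the first worker
    hostnamectl set-hostname node-2 && exec bash     # on the second worker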

Edit the hosts file

/etc/hosts with the three cluster entries added:

    127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
    192.168.10.29 master-1
    192.168.10.30 node-1
    192.168.10.31 node-2
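
Since the same three entries have to be present on every machine, an idempotent helper can be handy when the step is scripted. The following is only a sketch: the function name `add_host_entry` and the idea of passing the file as an argument are assumptions for illustration, not part of the original procedure.

```shell
# Append "IP NAME" to a hosts-style file unless NAME is already mapped there.
# add_host_entry is a hypothetical helper name, not a system command.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # Look for NAME as a whole field (column 2 or later) on a non-comment line.
    if awk -v n="$name" '
        !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
        END { exit !found }' "$file"; then
        return 0
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Calling `add_host_entry /etc/hosts 192.168.10.30 node-1` twice leaves a single node-1 line in the file.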

Set up passwordless SSH between the hosts

    ssh-keygen
    ssh-copy-id master-1
    ssh-copy-id node-1
    ssh-copy-id node-2

Disable swap

swapoff -a turns swap off immediately. Commenting out the swap line in /etc/fstab keeps it off after a reboot.

    swapoff -a
    vim /etc/fstab

    #
    # /etc/fstab
    # Created by anaconda on Sun Feb 7 10:14:45 2021
    #
    # Accessible filesystems, by reference, are maintained under '/dev/disk'
    # See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info
    #
    /dev/mapper/centos-root / xfs defaults 0 0
    UUID=ec65c557-715f-4f2b-beae-ec564c71b66b /boot xfs defaults 0 0
    #/dev/mapper/centos-swap swap swap defaults 0 0
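
The fstab edit above is done by hand in vim. A non-interactive variant could look roughly like the sketch below. The helper name `comment_out_swap` is an assumption for illustration, and it is worth trying on a copy of the file first, since it rewrites the file in place (a `.bak` backup is kept).

```shell
# Prefix '#' to every uncommented fstab line whose third field (the
# filesystem type) is "swap". comment_out_swap is a hypothetical helper name.
comment_out_swap() {
    sed -i.bak -E '/^[[:space:]]*#/!s/^([^[:space:]]+[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]].*)$/#\1/' "$1"
}
```

For example, `cp /etc/fstab /tmp/fstab.test && comment_out_swap /tmp/fstab.test` shows the result without touching the real file.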

Load the kernel module and set the kernel parameters

    modprobe br_netfilter
    echo "modprobe br_netfilter" >> /etc/profile
    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    EOF
    sysctl -p /etc/sysctl.d/k8s.conf
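
A quick way to confirm that the sysctl file really contains the three settings is a small check like the one below. This is a sketch only; `check_k8s_sysctl` is a made-up name, and it inspects the file content rather than the live kernel values.

```shell
# Verify that a sysctl-style file sets the three keys used in this guide to 1.
# check_k8s_sysctl is a hypothetical helper name.
check_k8s_sysctl() {
    file=$1
    for key in net.bridge.bridge-nf-call-ip6tables \
               net.bridge.bridge-nf-call-iptables \
               net.ipv4.ip_forward; do
        # Accept "key = 1" with optional whitespace around '='.
        grep -Eq "^[[:space:]]*${key}[[:space:]]*=[[:space:]]*1[[:space:]]*$" "$file" || {
            echo "missing or not 1: $key"
            return 1
        }
    done
    echo "ok"
}
```

Running `check_k8s_sysctl /etc/sysctl.d/k8s.conf` prints ok when all three lines are present.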

Disable the firewall

    systemctl disable firewalld.service
    systemctl stop firewalld.service

Configure the Docker repository

    # Step 1: install the required system tools
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Step 2: add the repository
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Step 3: refresh the cache and install Docker CE
    yum makecache fast
    yum -y install docker-ce
    # Step 4: start the Docker service
    service docker start

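Set up a scheduled time-sync job

    yum -y install ntpdate
    crontab -e
    crontab -l
    * */1 * * * /usr/sbin/ntpdate time.windows.com >/dev/null
    You have new mail in /var/spool/mail/root
    systemctl restart crond.service

Note that the schedule * */1 * * * fires every minute, because the minute field is *. For an hourly sync the entry would start with 0 */1 * * *.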

Load the IPVS modules

    cd /etc/sysconfig/modules/
    cat /etc/sysconfig/modules/ipvs.modules
    #!/bin/bash
    ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"
    for kernel_module in ${ipvs_modules}; do
      /sbin/modinfo -F filename ${kernel_module} > /dev/null 2>&1
      # $? is the exit status of modinfo: load the module only if it exists
      if [ $? -eq 0 ]; then
        /sbin/modprobe ${kernel_module}
      fi
    done
    chmod +x ipvs.modules
    bash ipvs.modules
    lsmod | grep ip_vs
    ip_vs_ftp              13079  0
    nf_nat                 26787  1 ip_vs_ftp
    ip_vs_sed              12519  0
    ip_vs_nq               12516  0
    ip_vs_sh               12688  0
    ip_vs_dh               12688  0
    ip_vs_lblcr            12922  0
    ip_vs_lblc             12819  0
    ip_vs_wrr              12697  0
    ip_vs_rr               12600  0
    ip_vs_wlc              12519  0
    ip_vs_lc               12516  0
    ip_vs                 145497  22 ip_vs_dh,ip_vs_lc,ip_vs_nq,ip_vs_rr,ip_vs_sh,ip_vs_ftp,ip_vs_sed,ip_vs_wlc,ip_vs_wrr,ip_vs_lblcr,ip_vs_lblc
    nf_conntrack          133095  2 ip_vs,nf_nat
    libcrc32c              12644  4 xfs,ip_vs,nf_nat,nf_conntrack
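
Reading the lsmod listing by eye is error-prone with thirteen modules. As a rough sketch, the comparison can be automated; `missing_modules` is a hypothetical helper that reads lsmod-style output on standard input and prints every wanted module that is not in the first column.

```shell
# Print each module from the space-separated list in $1 that does not appear
# in the first column of lsmod-style input. missing_modules is a made-up name.
missing_modules() {
    awk -v wanted="$1" '
        { loaded[$1] = 1 }
        END {
            n = split(wanted, w, " ")
            for (i = 1; i <= n; i++)
                if (!(w[i] in loaded)) print w[i]
        }'
}
```

A typical call would be `lsmod | missing_modules "ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack"`; empty output means everything in the list is loaded.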

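Configure a registry mirror and switch Docker's cgroup driver to systemd

    cat /etc/docker/daemon.json
    {
      "registry-mirrors": ["http://f1361db2.m.daocloud.io"],
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    # Docker was already started above, so restart it to pick up daemon.json
    systemctl restart docker.service && systemctl enable docker.service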

Configure the Kubernetes repository

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
    enabled=1
    gpgcheck=1
    repo_gpgcheck=1
    gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    setenforce 0

Install the packages needed to initialize Kubernetes

    yum install -y kubelet-1.20.6 kubeadm-1.20.6 kubectl-1.20.6

Enable kubelet at boot

    systemctl enable kubelet

Initialize the cluster with kubeadm (run on master-1)

    kubeadm init --kubernetes-version=1.20.6 --apiserver-advertise-address=192.168.10.29 --image-repository registry.aliyuncs.com/google_containers --pod-network-cidr=10.244.0.0/16 --ignore-preflight-errors=SystemVerification
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    Alternatively, if you are the root user, you can run:

      export KUBECONFIG=/etc/kubernetes/admin.conf

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.10.29:6443 --token dhwkd2.n73yo7rt3x6bsdm8 \
        --discovery-token-ca-cert-hash sha256:2a85fc7081360f0d61dbb9930800951a8aa7bca0163cff236ce5da9b8c35ad50

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config

Join the worker nodes to the cluster

    kubeadm join 192.168.10.29:6443 --token dhwkd2.n73yo7rt3x6bsdm8 \
        --discovery-token-ca-cert-hash sha256:2a85fc7081360f0d61dbb9930800951a8aa7bca0163cff236ce5da9b8c35ad50

Check the cluster status

    [root@master-1 modules]# kubectl get nodes
    NAME       STATUS     ROLES                  AGE     VERSION
    master-1   NotReady   control-plane,master   7m26s   v1.20.6
    node-1     NotReady   <none>                 2m      v1.20.6
    node-2     NotReady   <none>                 111s    v1.20.6

Label the worker nodes with a role

    [root@master-1 modules]# kubectl label node node-1 node-role.kubernetes.io/worker=worker
    node/node-1 labeled
    [root@master-1 modules]# kubectl label node node-2 node-role.kubernetes.io/worker=worker
    node/node-2 labeled
    [root@master-1 modules]# kubectl get nodes
    NAME       STATUS     ROLES                  AGE     VERSION
    master-1   NotReady   control-plane,master   10m     v1.20.6
    node-1     NotReady   worker                 4m51s   v1.20.6
    node-2     NotReady   worker                 4m42s   v1.20.6

All nodes above report NotReady because no network plugin has been installed yet.
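
When the node list grows, a small filter can pick out the nodes that still need attention. The sketch below assumes the default column layout of kubectl get nodes (NAME first, STATUS second); `not_ready_nodes` is a hypothetical helper name.

```shell
# Read `kubectl get nodes` output on stdin and print the NAME of every node
# whose STATUS column is not exactly "Ready". not_ready_nodes is a made-up name.
not_ready_nodes() {
    # NR > 1 skips the header row.
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

Used as `kubectl get nodes | not_ready_nodes`, it would list master-1, node-1 and node-2 for the output above. A combined status such as Ready,SchedulingDisabled is also reported, since it is not an exact match.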

Install the Kubernetes network plugin: Calico

    kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
    [root@master-1 modules]# kubectl get nodes
    NAME       STATUS   ROLES                  AGE     VERSION
    master-1   Ready    control-plane,master   13m     v1.20.6
    node-1     Ready    worker                 8m24s   v1.20.6
    node-2     Ready    worker                 8m15s   v1.20.6

Test that a pod created in the cluster can reach the network

    [root@master-1 modules]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh
    If you don't see a command prompt, try pressing enter.
    / # ping baidu.com
    PING baidu.com (220.181.38.148): 56 data bytes
    64 bytes from 220.181.38.148: seq=0 ttl=127 time=365.628 ms
    64 bytes from 220.181.38.148: seq=1 ttl=127 time=384.185 ms
    ^C
    --- baidu.com ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 365.628/374.906/384.185 ms

Test that CoreDNS works

    [root@master-1 modules]# kubectl run busybox --image busybox:1.28 --restart=Never --rm -it busybox -- sh
    If you don't see a command prompt, try pressing enter.
    / # ping baidu.com
    PING baidu.com (220.181.38.148): 56 data bytes
    64 bytes from 220.181.38.148: seq=0 ttl=127 time=365.628 ms
    64 bytes from 220.181.38.148: seq=1 ttl=127 time=384.185 ms
    ^C
    --- baidu.com ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 365.628/374.906/384.185 ms
    / # nslookup kubernetes.default.svc.cluster.local
    Server:    10.96.0.10
    Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

    Name:      kubernetes.default.svc.cluster.local
    Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

Install the dashboard

    [root@master-1 ~]# cat kubernetes-dashboard.yaml
    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    apiVersion: v1
    kind: Namespace
    metadata:
      name: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-csrf
      namespace: kubernetes-dashboard
    type: Opaque
    data:
      csrf: ""
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-key-holder
      namespace: kubernetes-dashboard
    type: Opaque
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-settings
      namespace: kubernetes-dashboard
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    rules:
      # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
      - apiGroups: [""]
        resources: ["secrets"]
        resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs", "kubernetes-dashboard-csrf"]
        verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
      - apiGroups: [""]
        resources: ["configmaps"]
        resourceNames: ["kubernetes-dashboard-settings"]
        verbs: ["get", "update"]
      # Allow Dashboard to get metrics.
      - apiGroups: [""]
        resources: ["services"]
        resourceNames: ["heapster", "dashboard-metrics-scraper"]
        verbs: ["proxy"]
      - apiGroups: [""]
        resources: ["services/proxy"]
        resourceNames: ["heapster", "http:heapster:", "https:heapster:", "dashboard-metrics-scraper", "http:dashboard-metrics-scraper"]
        verbs: ["get"]
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
    rules:
      # Allow Metrics Scraper to get metrics from the Metrics server
      - apiGroups: ["metrics.k8s.io"]
        resources: ["pods", "nodes"]
        verbs: ["get", "list", "watch"]
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: kubernetes-dashboard
    subjects:
      - kind: ServiceAccount
        name: kubernetes-dashboard
        namespace: kubernetes-dashboard
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
            - name: kubernetes-dashboard
              image: kubernetesui/dashboard:v2.0.0-beta8
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8443
                  protocol: TCP
              args:
                - --auto-generate-certificates
                - --namespace=kubernetes-dashboard
                # Uncomment the following line to manually specify Kubernetes API server Host
                # If not specified, Dashboard will attempt to auto discover the API server and connect
                # to it. Uncomment only if the default does not work.
                # - --apiserver-host=http://my-address:port
              volumeMounts:
                - name: kubernetes-dashboard-certs
                  mountPath: /certs
                  # Create on-disk volume to store exec logs
                - mountPath: /tmp
                  name: tmp-volume
              livenessProbe:
                httpGet:
                  scheme: HTTPS
                  path: /
                  port: 8443
                initialDelaySeconds: 30
                timeoutSeconds: 30
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          volumes:
            - name: kubernetes-dashboard-certs
              secret:
                secretName: kubernetes-dashboard-certs
            - name: tmp-volume
              emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "beta.kubernetes.io/os": linux
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
    ---
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      ports:
        - port: 8000
          targetPort: 8000
      selector:
        k8s-app: dashboard-metrics-scraper
    ---
    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
      name: dashboard-metrics-scraper
      namespace: kubernetes-dashboard
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: dashboard-metrics-scraper
      template:
        metadata:
          labels:
            k8s-app: dashboard-metrics-scraper
          annotations:
            seccomp.security.alpha.kubernetes.io/pod: 'runtime/default'
        spec:
          containers:
            - name: dashboard-metrics-scraper
              image: kubernetesui/metrics-scraper:v1.0.1
              imagePullPolicy: IfNotPresent
              ports:
                - containerPort: 8000
                  protocol: TCP
              livenessProbe:
                httpGet:
                  scheme: HTTP
                  path: /
                  port: 8000
                initialDelaySeconds: 30
                timeoutSeconds: 30
              volumeMounts:
                - mountPath: /tmp
                  name: tmp-volume
              securityContext:
                allowPrivilegeEscalation: false
                readOnlyRootFilesystem: true
                runAsUser: 1001
                runAsGroup: 2001
          serviceAccountName: kubernetes-dashboard
          nodeSelector:
            "beta.kubernetes.io/os": linux
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
          volumes:
            - name: tmp-volume
              emptyDir: {}
    [root@master-1 ~]# kubectl apply -f kubernetes-dashboard.yaml
    namespace/kubernetes-dashboard created
    serviceaccount/kubernetes-dashboard created
    service/kubernetes-dashboard created
    secret/kubernetes-dashboard-certs created
    secret/kubernetes-dashboard-csrf created
    secret/kubernetes-dashboard-key-holder created
    configmap/kubernetes-dashboard-settings created
    role.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created
    rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created
    deployment.apps/kubernetes-dashboard created
    service/dashboard-metrics-scraper created
    deployment.apps/dashboard-metrics-scraper created

Check the dashboard pods

    [root@master-1 ~]# kubectl get pods -n kubernetes-dashboard
    NAME                                         READY   STATUS    RESTARTS   AGE
    dashboard-metrics-scraper-7445d59dfd-kldp7   1/1     Running   0          38h
    kubernetes-dashboard-54f5b6dc4b-4v2np        1/1     Running   0          38h

Check the dashboard front-end Service

    [root@master-1 ~]# kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
    dashboard-metrics-scraper   ClusterIP   10.108.226.98   <none>        8000/TCP   38h
    kubernetes-dashboard        ClusterIP   10.108.44.41    <none>        443/TCP    38h

Change the Service type to NodePort

    kubectl edit svc kubernetes-dashboard -n kubernetes-dashboard
    # Please edit the object below. Lines beginning with a '#' will be ignored,
    # and an empty file will abort the edit. If an error occurs while saving this file will be
    # reopened with the relevant failures.
    #
    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
      creationTimestamp: "2021-12-15T14:27:58Z"
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
      resourceVersion: "2903"
      uid: 94758740-9210-46bb-939b-2b742e9b01e6
    spec:
      clusterIP: 10.108.44.41
      clusterIPs:
      - 10.108.44.41
      ports:
      - port: 443
        protocol: TCP
        targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      #type: ClusterIP
      type: NodePort
    status:
      loadBalancer: {}

Save, exit, and check the Service again

    [root@master-1 ~]# kubectl get svc -n kubernetes-dashboard
    NAME                        TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)         AGE
    dashboard-metrics-scraper   ClusterIP   10.108.226.98   <none>        8000/TCP        38h
    kubernetes-dashboard        NodePort    10.108.44.41    <none>        443:32341/TCP   38h
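
The node port (32341 here) is assigned by the cluster and will differ between installations. The sketch below pulls it out of the kubectl get svc listing, assuming the default column order with PORT(S) in the fifth column; `node_port_for` is a hypothetical helper name.

```shell
# Read `kubectl get svc` output on stdin and print the node port that is
# mapped to service port $2 of service $1. node_port_for is a made-up name.
node_port_for() {
    awk -v svc="$1" -v port="$2" '
        $1 == svc {
            # PORT(S) looks like "443:32341/TCP", possibly comma-separated.
            n = split($5, maps, ",")
            for (i = 1; i <= n; i++) {
                split(maps[i], parts, "[:/]")
                if (parts[1] == port && parts[2] ~ /^[0-9]+$/) print parts[2]
            }
        }'
}
```

For example: `kubectl get svc -n kubernetes-dashboard | node_port_for kubernetes-dashboard 443`. A ClusterIP service such as 8000/TCP has no node port, so nothing is printed for it.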

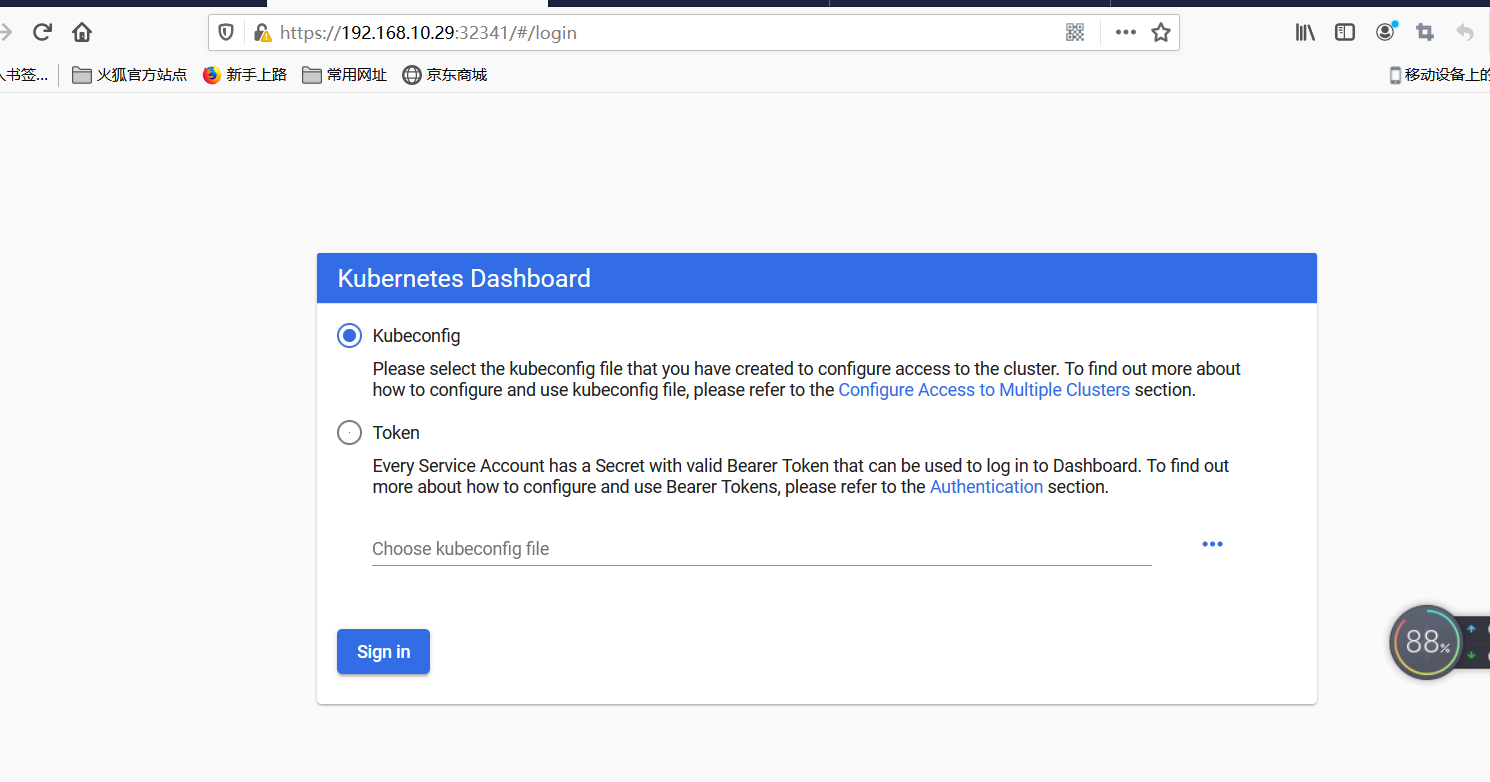

Open https://192.168.10.29:32341/#/login in a browser.

Access the dashboard with a token

Create an administrator token. It can view every namespace and manage all resource objects.

    [root@master-1 ~]# kubectl create clusterrolebinding dashboard-cluster-admin --clusterrole=cluster-admin --serviceaccount=kubernetes-dashboard:kubernetes-dashboard
    clusterrolebinding.rbac.authorization.k8s.io/dashboard-cluster-admin created
    [root@master-1 ~]# kubectl get secret -n kubernetes-dashboard
    NAME                               TYPE                                  DATA   AGE
    default-token-b8sqn                kubernetes.io/service-account-token   3      38h
    kubernetes-dashboard-certs         Opaque                                0      38h
    kubernetes-dashboard-csrf          Opaque                                1      38h
    kubernetes-dashboard-key-holder    Opaque                                2      38h
    kubernetes-dashboard-token-jh4sz   kubernetes.io/service-account-token   3      38h
    [root@master-1 ~]# kubectl describe secret kubernetes-dashboard-token-jh4sz -n kubernetes-dashboard
    Name:         kubernetes-dashboard-token-jh4sz
    Namespace:    kubernetes-dashboard
    Labels:       <none>
    Annotations:  kubernetes.io/service-account.name: kubernetes-dashboard
                  kubernetes.io/service-account.uid: 6a0320fb-d304-43ca-898b-59156d2ee4a9

    Type:  kubernetes.io/service-account-token

    Data
    ====
    token:      eyJhbGciOiJSUzI1NiIsImtpZCI6InlJM3BCZ2hXSGJNZVhMekFPaDlFRnJYVlhfeHpOWXVyODBOdlhrQlBCSXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1qaDRzeiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjZhMDMyMGZiLWQzMDQtNDNjYS04OThiLTU5MTU2ZDJlZTRhOSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.FMp3-Vudcl4UeTfZkNVDfrc3kGVQjKG1T6b3cufZG1T5t7BFMIhtBIUm2WeSlTy3tCrh95u-Ei5O-S_O6UolUAjZwDm4ncsjknBHenubAq_A9meAT9Jzvsp7g2pxpXobnNcF4_jJTt0FhQtSNwCzA7IgzxPwZLFOdY88p0YED0mpLk5wODZBnrmsj9rLNZ-u_vprFEnUhrJ5nRxk4W9-sHLFKzyZhyu2T4hf2E3Ahi0FzVT0kMBpg9lPcI02NTv4zq1GPe4y87IbzCP9Uwz8iJNkTQCB-DS8WWT_hs2Y0CUhgQimp39qLfoLX6Eqg2IG2vk0atLZFL5lft403-ONZA
    ca.crt:     1066 bytes
    namespace:  20 bytes

Note: text copied from the terminal may contain line breaks. The token has to be pasted as one continuous line:

    eyJhbGciOiJSUzI1NiIsImtpZCI6InlJM3BCZ2hXSGJNZVhMekFPaDlFRnJYVlhfeHpOWXVyODBOdlhrQlBCSXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1qaDRzeiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjZhMDMyMGZiLWQzMDQtNDNjYS04OThiLTU5MTU2ZDJlZTRhOSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.FMp3-Vudcl4UeTfZkNVDfrc3kGVQjKG1T6b3cufZG1T5t7BFMIhtBIUm2WeSlTy3tCrh95u-Ei5O-S_O6UolUAjZwDm4ncsjknBHenubAq_A9meAT9Jzvsp7g2pxpXobnNcF4_jJTt0FhQtSNwCzA7IgzxPwZLFOdY88p0YED0mpLk5wODZBnrmsj9rLNZ-u_vprFEnUhrJ5nRxk4W9-sHLFKzyZhyu2T4hf2E3Ahi0FzVT0kMBpg9lPcI02NTv4zq1GPe4y87IbzCP9Uwz8iJNkTQCB-DS8WWT_hs2Y0CUhgQimp39qLfoLX6Eqg2IG2vk0atLZFL5lft403-ONZA
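
If a copied token did pick up line breaks or stray spaces, they can be stripped before pasting. This is a minimal sketch; `join_token_lines` is a hypothetical name, and it relies on the fact that a service-account token contains no whitespace of its own.

```shell
# Remove newlines, carriage returns, spaces and tabs from stdin so that a
# wrapped token becomes a single line. join_token_lines is a made-up name.
join_token_lines() {
    tr -d '\n\r \t'
}
```

For instance, `join_token_lines < wrapped-token.txt` prints the token as one line, where wrapped-token.txt is whatever file the copied text was saved to.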

Access the dashboard with a kubeconfig file

    [root@master-1 ~]# cd /etc/kubernetes/pki
    [root@master-1 pki]# ls
    apiserver.crt              apiserver-etcd-client.key  apiserver-kubelet-client.crt  ca.crt  etcd                front-proxy-ca.key      front-proxy-client.key  sa.pub
    apiserver-etcd-client.crt  apiserver.key              apiserver-kubelet-client.key  ca.key  front-proxy-ca.crt  front-proxy-client.crt  sa.key
    [root@master-1 pki]# kubectl config set-cluster kubernetes --certificate-authority=./ca.crt --server="https://192.168.10.29:6443" --embed-certs=true --kubeconfig=/root/dashboard-admin.conf
    Cluster "kubernetes" set.
    [root@master-1 pki]# vim /root/dashboard-admin.conf
    [root@master-1 pki]# DEF_NS_ADMIN_TOKEN=$(kubectl get secret kubernetes-dashboard-token-jh4sz -n kubernetes-dashboard -o jsonpath={.data.token}|base64 -d)
    [root@master-1 pki]# kubectl config set-credentials dashboard-admin --token=$DEF_NS_ADMIN_TOKEN --kubeconfig=/root/dashboard-admin.conf
    User "dashboard-admin" set.
    [root@master-1 pki]# kubectl config set-context dashboard-admin@kubernetes --cluster=kubernetes --user=dashboard-admin --kubeconfig=/root/dashboard-admin.conf
    Context "dashboard-admin@kubernetes" created.
    [root@master-1 pki]# kubectl config use-context dashboard-admin@kubernetes --kubeconfig=/root/dashboard-admin.conf
    Switched to context "dashboard-admin@kubernetes".
    [root@master-1 pki]# cat /root/dashboard-admin.conf
    apiVersion: v1
    clusters:
    - cluster:
        certificate-authority-data: LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM1ekNDQWMrZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFWTVJNd0VRWURWUVFERXdwcmRXSmwKY201bGRHVnpNQjRYRFRJeE1USXhOVEUwTURFMU1sb1hEVE14TVRJeE16RTBNREUxTWxvd0ZURVRNQkVHQTFVRQpBeE1LYTNWaVpYSnVaWFJsY3pDQ0FTSXdEUVlKS29aSWh2Y05BUUVCQlFBRGdnRVBBRENDQVFvQ2dnRUJBS3hKCmhwMVR0VDlVSmttb2VVYy82YkRNRFR5emQ1bTZEVFVGVGtad3ZrVVJGbW9pMGlzakhZQmp6dWRFeDNnRmlCL08KTGtrOW5tKzMrVVg1TjRoYU1ta3NsdytFTGJpTEhTM3ZvZExRWElOVVJQSVpNREpiQTRJVWZLeVI3MlZFT0ZlKwoyYVVOZDBsK2I0bElvTTBxRkpzb25WVE5mdXBlc1pCVFQ0cXF3cVZzRzc3ZHpSeWR4Y0ZLeHVFd2JEd1R2K05oCmZRWDk4SndjcGdtem41VDVHQVhSeWJ4WVhkYmd4cjFHNnBXOFlMcExuU3VpTFNoUHB4WGQ2cEZzMG1zZTgyckEKcDJGYm1jZDZRTTVvNTd5K2dXMDNNTU5pSElnOXVIRWdvQm55MkI4NW1TSjFqNmFEQlBIRkpOeU80aVRJb0V2VQpxeVZxZjhmN3c4dWp3T3RhN3FNQ0F3RUFBYU5DTUVBd0RnWURWUjBQQVFIL0JBUURBZ0trTUE4R0ExVWRFd0VCCi93UUZNQU1CQWY4d0hRWURWUjBPQkJZRUZIU0xHTUtBSGU0NXdIU3NqSW9jVEJoRDhGWnZNQTBHQ1NxR1NJYjMKRFFFQkN3VUFBNElCQVFDb0xVUGJwWDRmN1N6VWFxS1RweDF4Q2RZSTRQRnVFY0lVZ0pHWndLYkdzVHZqdWRmRgpSTkMxTXgyV0VyejhmbXFYRmRKQmVidFp1b0ovMCtFd3FiQ1FNMUI0UWdmMHdYSkF0elJlbTlTbUJ0dUlJbGI5Ci9iVEw4RnBueWFuaEZ1eFRQZFBzTGc0VWJPaEZCUVo1V2hYNEJOWnIwelRoK2FZeEpuUTg0c2lxbktRVStwMWkKYUx6Ky9NekVocTMvYnF5NDJTdnVsaW1iaXF3VDN5VDhDSmRwcEhjZ0F0emtweWw1eW1PdUdQK0F5blR0Y0svZAp2Ky9vUVdJODRxV2tzeHpxYUxNdGpFK1psaW1iMGdGV1ZsM1BRUFhZSTRGTFFQNDVBU2JoQUxpZmswYjNUNEZRCmh5M2pCS0YxTUtGYUZyUm0zUkl3SmRJTVN3WnhISVJCVm0vYgotLS0tLUVORCBDRVJUSUZJQ0FURS0tLS0tCg==
        server: https://192.168.10.29:6443
      name: kubernetes
    contexts:
    - context:
        cluster: kubernetes
        user: dashboard-admin
      name: dashboard-admin@kubernetes
    current-context: dashboard-admin@kubernetes
    kind: Config
    preferences: {}
    users:
    - name: dashboard-admin
      user:
        token: eyJhbGciOiJSUzI1NiIsImtpZCI6InlJM3BCZ2hXSGJNZVhMekFPaDlFRnJYVlhfeHpOWXVyODBOdlhrQlBCSXcifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZC10b2tlbi1qaDRzeiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6IjZhMDMyMGZiLWQzMDQtNDNjYS04OThiLTU5MTU2ZDJlZTRhOSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDprdWJlcm5ldGVzLWRhc2hib2FyZCJ9.FMp3-Vudcl4UeTfZkNVDfrc3kGVQjKG1T6b3cufZG1T5t7BFMIhtBIUm2WeSlTy3tCrh95u-Ei5O-S_O6UolUAjZwDm4ncsjknBHenubAq_A9meAT9Jzvsp7g2pxpXobnNcF4_jJTt0FhQtSNwCzA7IgzxPwZLFOdY88p0YED0mpLk5wODZBnrmsj9rLNZ-u_vprFEnUhrJ5nRxk4W9-sHLFKzyZhyu2T4hf2E3Ahi0FzVT0kMBpg9lPcI02NTv4zq1GPe4y87IbzCP9Uwz8iJNkTQCB-DS8WWT_hs2Y0CUhgQimp39qLfoLX6Eqg2IG2vk0atLZFL5lft403-ONZA

Install the metrics-server component

Note: the --enable-aggregator-routing=true flag has to be added to the kube-apiserver manifest, as marked in the listing. According to the original notes, it is needed on Kubernetes 1.17 and later and can be left out on 1.16. The flag relates to the aggregation layer, which allows the Kubernetes API to be extended without modifying the Kubernetes core code.

    [root@master-1 ~]# cat /etc/kubernetes/manifests/kube-apiserver.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      annotations:
        kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 192.168.10.29:6443
      creationTimestamp: null
      labels:
        component: kube-apiserver
        tier: control-plane
      name: kube-apiserver
      namespace: kube-system
    spec:
      containers:
      - command:
        - kube-apiserver
        - --advertise-address=192.168.10.29
        - --allow-privileged=true
        - --authorization-mode=Node,RBAC
        - --client-ca-file=/etc/kubernetes/pki/ca.crt
        - --enable-admission-plugins=NodeRestriction
        - --enable-bootstrap-token-auth=true
        - --enable-aggregator-routing=true # add this flag
        - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
        - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
        - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
        - --etcd-servers=https://127.0.0.1:2379
        - --insecure-port=0
        - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
        - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
        - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
        - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
        - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
        - --requestheader-allowed-names=front-proxy-client
        - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
        - --requestheader-extra-headers-prefix=X-Remote-Extra-
        - --requestheader-group-headers=X-Remote-Group
        - --requestheader-username-headers=X-Remote-User
        - --secure-port=6443
        - --service-account-issuer=https://kubernetes.default.svc.cluster.local
        - --service-account-key-file=/etc/kubernetes/pki/sa.pub
        - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
        - --service-cluster-ip-range=10.96.0.0/12
        - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
        - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
        image: registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.6
        imagePullPolicy: IfNotPresent
        livenessProbe:
          failureThreshold: 8
          httpGet:
            host: 192.168.10.29
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        name: kube-apiserver
        readinessProbe:
          failureThreshold: 3
          httpGet:
            host: 192.168.10.29
            path: /readyz
            port: 6443
            scheme: HTTPS
          periodSeconds: 1
          timeoutSeconds: 15
        resources:
          requests:
            cpu: 250m
        startupProbe:
          failureThreshold: 24
          httpGet:
            host: 192.168.10.29
            path: /livez
            port: 6443
            scheme: HTTPS
          initialDelaySeconds: 10
          periodSeconds: 10
          timeoutSeconds: 15
        volumeMounts:
        - mountPath: /etc/ssl/certs
          name: ca-certs
          readOnly: true
        - mountPath: /etc/pki
          name: etc-pki
          readOnly: true
        - mountPath: /etc/kubernetes/pki
          name: k8s-certs
          readOnly: true
      hostNetwork: true
      priorityClassName: system-node-critical
      volumes:
      - hostPath:
          path: /etc/ssl/certs
          type: DirectoryOrCreate
        name: ca-certs
      - hostPath:
          path: /etc/pki
          type: DirectoryOrCreate
        name: etc-pki
      - hostPath:
          path: /etc/kubernetes/pki
          type: DirectoryOrCreate
        name: k8s-certs
    status: {}
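
Before moving on, it can help to confirm that the flag really made it into the manifest. The sketch below is a plain text check on the file, not a query against the running API server; `has_apiserver_flag` is a hypothetical helper name.

```shell
# Succeed if the manifest $1 has a command-line entry "- --$2", optionally
# followed by "=value" or whitespace. has_apiserver_flag is a made-up name.
has_apiserver_flag() {
    grep -Eq "^[[:space:]]*-[[:space:]]+--$2(=|[[:space:]]|\$)" "$1"
}
```

Example: `has_apiserver_flag /etc/kubernetes/manifests/kube-apiserver.yaml enable-aggregator-routing && echo present`.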

Reload the apiserver configuration

The kubelet watches /etc/kubernetes/manifests and recreates the static pod kube-apiserver-master-1 on its own once the file is saved. The kubectl apply below additionally creates a regular pod named kube-apiserver, which ends up in CrashLoopBackOff and has to be deleted afterwards.

    [root@master-1 ~]# kubectl apply -f /etc/kubernetes/manifests/kube-apiserver.yaml
    pod/kube-apiserver created
    [root@master-1 ~]# kubectl get pods -n kube-system
    NAME                                       READY   STATUS             RESTARTS   AGE
    calico-kube-controllers-558995777d-7g9x7   1/1     Running            0          2d12h
    calico-node-2qrpn                          1/1     Running            0          2d12h
    calico-node-6zh2p                          1/1     Running            0          2d12h
    calico-node-thfh7                          1/1     Running            0          2d12h
    coredns-7f89b7bc75-jbl2h                   1/1     Running            0          2d12h
    coredns-7f89b7bc75-nr24n                   1/1     Running            0          2d12h
    etcd-master-1                              1/1     Running            0          2d12h
    kube-apiserver                             0/1     CrashLoopBackOff   1          12s
    kube-apiserver-master-1                    1/1     Running            0          2m1s
    kube-controller-manager-master-1           1/1     Running            1          2d12h
    kube-proxy-5h29b                           1/1     Running            0          2d12h
    kube-proxy-thxv4                           1/1     Running            0          2d12h
    kube-proxy-txm52                           1/1     Running            0          2d12h
    kube-scheduler-master-1                    1/1     Running            1          2d12h
    [root@master-1 ~]# kubectl delete pods kube-apiserver -n kube-system   # delete the pod stuck in CrashLoopBackOff
    pod "kube-apiserver" deleted

Review metrics.yaml and apply it

    [root@master-1 ~]# cat metrics.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: metrics-server:system:auth-delegator
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: metrics-server-auth-reader
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:metrics-server
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - deployments
      verbs:
      - get
      - list
      - update
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:metrics-server
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: metrics-server-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        k8s-app: metrics-server
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v0.3.6
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
          version: v0.3.6
      template:
        metadata:
          name: metrics-server
          labels:
            k8s-app: metrics-server
            version: v0.3.6
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          containers:
          - name: metrics-server
            image: k8s.gcr.io/metrics-server-amd64:v0.3.6
            imagePullPolicy: IfNotPresent
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-preferred-address-types=InternalIP
            - --kubelet-insecure-tls
            ports:
            - containerPort: 443
              name: https
              protocol: TCP
          - name: metrics-server-nanny
            image: k8s.gcr.io/addon-resizer:1.8.4
            imagePullPolicy: IfNotPresent
            resources:
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 5m
                memory: 50Mi
            env:
            - name: MY_POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: MY_POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: metrics-server-config-volume
              mountPath: /etc/config
            command:
            - /pod_nanny
            - --config-dir=/etc/config
            - --cpu=300m
            - --extra-cpu=20m
            - --memory=200Mi
            - --extra-memory=10Mi
            - --threshold=5
            - --deployment=metrics-server
            - --container=metrics-server
            - --poll-period=300000
            - --estimator=exponential
            - --minClusterSize=2
          volumes:
          - name: metrics-server-config-volume
            configMap:
              name: metrics-server-config
          tolerations:
          - key: "CriticalAddonsOnly"
            operator: "Exists"
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "Metrics-server"
    spec:
      selector:
        k8s-app: metrics-server
      ports:
      - port: 443
        protocol: TCP
        targetPort: https
    ---
    apiVersion: apiregistration.k8s.io/v1
    kind: APIService
    metadata:
      name: v1beta1.metrics.k8s.io
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      service:
        name: metrics-server
        namespace: kube-system
      group: metrics.k8s.io
      version: v1beta1
      insecureSkipTLSVerify: true
      groupPriorityMinimum: 100
      versionPriority: 100
    [root@master-1 ~]# kubectl apply -f metrics.yaml
    clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created
    rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created
    serviceaccount/metrics-server created
    clusterrole.rbac.authorization.k8s.io/system:metrics-server created
    clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created
    configmap/metrics-server-config created
    deployment.apps/metrics-server created
    service/metrics-server created
    apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created
    [root@master-1 ~]# kubectl get pods -n kube-system | grep metrics
    metrics-server-6595f875d6-jhf9p   2/2   Running   0   14s

Testing the kubectl top command

[root@master-1 ~]# kubectl top pods -n kube-system
NAME                                       CPU(cores)   MEMORY(bytes)
calico-kube-controllers-558995777d-7g9x7   2m           16Mi
calico-node-2qrpn                          33m          81Mi
calico-node-6zh2p                          23m          94Mi
calico-node-thfh7                          40m          108Mi
coredns-7f89b7bc75-jbl2h                   4m           25Mi
coredns-7f89b7bc75-nr24n                   3m           9Mi
etcd-master-1                              14m          122Mi
kube-apiserver-master-1                    112m         380Mi
kube-controller-manager-master-1           21m          48Mi
kube-proxy-5h29b                           1m           29Mi
kube-proxy-thxv4                           1m           17Mi
kube-proxy-txm52                           1m           15Mi
kube-scheduler-master-1                    3m           20Mi
metrics-server-6595f875d6-jhf9p            1m           16Mi
[root@master-1 ~]# kubectl top node
NAME       CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
master-1   181m         9%     2049Mi          55%
node-1     81m          4%     1652Mi          45%
node-2     97m          4%     1610Mi          43%

Binding the scheduler and controller-manager ports to the host's address

[root@master-1 ~]# kubectl get cs
Warning: v1 ComponentStatus is deprecated in v1.19+
NAME                 STATUS      MESSAGE                                                                                       ERROR
scheduler            Unhealthy   Get "http://127.0.0.1:10251/healthz": dial tcp 127.0.0.1:10251: connect: connection refused
controller-manager   Unhealthy   Get "http://127.0.0.1:10252/healthz": dial tcp 127.0.0.1:10252: connect: connection refused
etcd-0               Healthy     {"health":"true"}

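From v1.19 onward, ports 10251 (scheduler) and 10252 (controller-manager) are bound to 127.0.0.1 by default. Prometheus cannot scrape them through the loopback address from elsewhere, so no data is collected. To monitor these components, bind the ports to the host's own IP.

This can be done as follows: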

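vim /etc/kubernetes/manifests/kube-scheduler.yaml

Make the following changes:

Change --bind-address=127.0.0.1 to --bind-address=192.168.10.29

Under each httpGet: field, change host from 127.0.0.1 to 192.168.10.29

Delete the --port=0 line

# Note: 192.168.10.29 is the IP of master-1, the control-plane node of this cluster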

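vim /etc/kubernetes/manifests/kube-controller-manager.yaml

Change --bind-address=127.0.0.1 to --bind-address=192.168.10.29

Under each httpGet: field, change host from 127.0.0.1 to 192.168.10.29

If this manifest also contains a --port=0 line, delete it here as well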

[root@master-1 ~]# cat /etc/kubernetes/manifests/kube-scheduler.yaml
apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    component: kube-scheduler
    tier: control-plane
  name: kube-scheduler
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-scheduler
    - --authentication-kubeconfig=/etc/kubernetes/scheduler.conf
    - --authorization-kubeconfig=/etc/kubernetes/scheduler.conf
    - --bind-address=192.168.10.29
    - --kubeconfig=/etc/kubernetes/scheduler.conf
    - --leader-elect=true
    image: registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.6
    imagePullPolicy: IfNotPresent
    livenessProbe:
      failureThreshold: 8
      httpGet:
        host: 192.168.10.29
        path: /healthz
        port: 10259
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    name: kube-scheduler
    resources:
      requests:
        cpu: 100m
    startupProbe:
      failureThreshold: 24
      httpGet:
        host: 192.168.10.29
        path: /healthz
        port: 10259
        scheme: HTTPS
      initialDelaySeconds: 10
      periodSeconds: 10
      timeoutSeconds: 15
    volumeMounts:
    - mountPath: /etc/kubernetes/scheduler.conf
      name: kubeconfig
      readOnly: true
  hostNetwork: true
  priorityClassName: system-node-critical
  volumes:
  - hostPath:
      path: /etc/kubernetes/scheduler.conf
      type: FileOrCreate
    name: kubeconfig
status: {}
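The manual edits to the two manifests can also be scripted instead of made in vim. The following is a minimal sketch, assuming the manifests still carry the kubeadm defaults (`--bind-address=127.0.0.1`, `host: 127.0.0.1`, and possibly `--port=0`). `rebind_manifest` is a hypothetical helper name chosen for illustration, not a kubeadm or kubectl command.

```shell
#!/usr/bin/env bash
# Sketch: apply the manual manifest edits with sed.
# rebind_manifest is a hypothetical helper, not part of kubeadm.
rebind_manifest() {
  local manifest="$1" host_ip="$2" tmp
  tmp="$(mktemp)" || return 1
  # 1) point --bind-address at the host IP
  # 2) point the probes' httpGet host at the same IP
  # 3) drop the --port=0 flag if it is present
  if sed -e "s/--bind-address=127\.0\.0\.1/--bind-address=${host_ip}/" \
         -e "s/host: 127\.0\.0\.1/host: ${host_ip}/" \
         -e '/--port=0/d' \
         "$manifest" > "$tmp"; then
    # Overwrite the content in place so the file keeps its owner and mode.
    cat "$tmp" > "$manifest"
  fi
  rm -f "$tmp"
}

# Example (run as root on master-1):
# rebind_manifest /etc/kubernetes/manifests/kube-scheduler.yaml 192.168.10.29
# rebind_manifest /etc/kubernetes/manifests/kube-controller-manager.yaml 192.168.10.29
```

If a backup copy is wanted, it is safer to keep it outside /etc/kubernetes/manifests, since kubelet reads that directory for static pod manifests.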

After making these changes, restart kubelet on each node of the cluster

systemctl restart kubelet
[root@master-1 ~]# ss -antulp | grep 10251
tcp LISTEN 0 128 :::10251 :::* users:(("kube-scheduler",pid=7512,fd=7))
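The same check can be done in a script rather than by reading the `ss` output by eye. This is a sketch under stated assumptions: `listens_beyond_loopback` is a hypothetical helper, and it expects input in the `ss -antulp` column layout shown above, with the socket state in column 2 and the local address:port in column 5.

```shell
#!/usr/bin/env bash
# Sketch: succeed if a port is listening on a non-loopback address.
# listens_beyond_loopback is a hypothetical helper; it reads
# `ss -antulp`-style lines on stdin.
listens_beyond_loopback() {
  local port="$1"
  awk -v port="$port" '
    $2 == "LISTEN" {
      # The port is whatever follows the last colon of column 5.
      n = split($5, parts, ":")
      if (parts[n] != port) next
      # Everything before that last colon is the listening address.
      addr = substr($5, 1, length($5) - length(parts[n]) - 1)
      if (addr != "127.0.0.1" && addr != "::1" && addr != "[::1]") found = 1
    }
    END { exit (found ? 0 : 1) }
  '
}

# Example (on master-1, after kubelet has restarted the static pods):
# ss -antulp | listens_beyond_loopback 10251 && echo "10251 is bound to a non-loopback address"
```

The helper only inspects the local socket table. It does not confirm that Prometheus can actually reach the port across the network.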
