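Kafka Introduction

Types of messaging systems

Peer-to-peer (point-to-point)

1. Receivers usually get messages by pulling or polling.

2. A message sent to a queue can be received by only one receiver, even when several receivers are listening on the same queue.

3. Supports both asynchronous fire-and-forget messaging and synchronous request/reply messaging.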
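The delivery rule in point 2 can be pictured with a small bash sketch. It is a toy model, not a real broker: the receiver names and the round-robin hand-out are assumptions chosen for illustration.

```bash
#!/usr/bin/env bash
# Point-to-point sketch: one queue, several competing receivers.
# Receivers take turns pulling the head of the queue (round-robin is an
# assumed policy here); a pulled message is removed, so no one else gets it.
p2p_dispatch() {
  local -a queue=("$@")            # messages waiting in the queue
  local -a receivers=(c1 c2 c3)    # hypothetical receivers on the same queue
  local i=0 receiver msg
  while ((${#queue[@]} > 0)); do
    receiver=${receivers[i % ${#receivers[@]}]}
    msg=${queue[0]}
    queue=("${queue[@]:1}")        # pop the head: the message leaves the queue
    echo "$receiver $msg"
    i=$((i + 1))
  done
}

p2p_dispatch m1 m2 m3 m4
```

Four messages go to three receivers, yet each message shows up in exactly one output line.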

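Publish/subscribe

1. A message published to a topic can be received by multiple subscribers.

2. Subscribers can consume data either by push or by pull/polling.

3. Decoupling is stronger than in the P2P model.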
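By contrast, the fan-out in point 1 gives every subscriber its own copy of each message. A toy bash sketch, with hypothetical topic and subscriber names:

```bash
#!/usr/bin/env bash
# Publish/subscribe sketch: every subscriber of a topic receives every message.
publish() {
  local topic=$1; shift
  local -a subscribers=(s1 s2)     # hypothetical subscribers of $topic
  local msg sub
  for msg in "$@"; do
    for sub in "${subscribers[@]}"; do
      echo "$sub $topic $msg"      # each subscriber gets its own copy
    done
  done
}

publish orders m1 m2
```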

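Messaging system use cases

1. Decoupling: systems exchange data through the messaging system, which acts as a single unified interface, so they do not need to know about one another.

2. Redundancy: some messaging systems persist messages, which avoids the risk of losing them before they are processed.

3. Scalability: since the messaging system is the unified data interface, each system can scale independently.

4. Peak handling: the messaging system absorbs traffic spikes, and business systems fetch and process requests at whatever rate they can sustain.

5. Recoverability: the failure of some components does not take down the whole system; once a failed component recovers, it can still fetch data from the messaging system and process it.

6. Asynchronous communication: when a request does not need immediate handling, put it into the messaging system and take it out for processing at a suitable time.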
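The peak-handling idea in point 4 comes down to simple arithmetic: requests that arrive faster than the consumer can handle them wait in the messaging system as a backlog, which drains at the consumer's own pace. A toy bash simulation; the per-tick rate and the arrival numbers are made-up inputs:

```bash
#!/usr/bin/env bash
# shave_peak RATE ARRIVALS...
# Each argument after RATE is the number of requests arriving in one tick.
# The consumer processes at most RATE requests per tick; the rest wait.
shave_peak() {
  local rate=$1; shift
  local backlog=0 tick=0 arrived done_now
  for arrived in "$@"; do
    backlog=$((backlog + arrived))                  # buffered in the queue
    done_now=$(( backlog < rate ? backlog : rate )) # consumer's fixed capacity
    backlog=$((backlog - done_now))
    echo "tick=$tick arrived=$arrived processed=$done_now backlog=$backlog"
    tick=$((tick + 1))
  done
}

# A burst of 10 requests, then silence; the consumer handles 3 per tick.
shave_peak 3 10 0 0 0 0
```

The burst never exceeds the consumer's capacity of 3 per tick; instead the backlog goes 7, 4, 1, 0 and the work is simply spread over time.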

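Comparison of common messaging systems

1. RabbitMQ: written in Erlang; supports multiple protocols (AMQP, XMPP, SMTP, STOMP), load balancing and data persistence; supports both peer-to-peer and publish/subscribe.

2. Redis: a key-value NoSQL database that also offers MQ features and can be used as a lightweight queue service. As a queue, Redis handles short messages (under 10 KB) faster than RabbitMQ, but handles long messages more slowly.

3. ZeroMQ: lightweight and peer-to-peer; it needs no separate message server or middleware because the application itself plays that role. It is essentially a library, so developers have to assemble several technologies themselves, which makes it complex to use.

4. ActiveMQ: a JMS implementation; peer-to-peer; supports persistence and XA transactions.

5. Kafka/Jafka: a high-performance, cross-language, distributed publish/subscribe messaging system; persists data, is fully distributed, and supports both online and offline processing.

6. MetaQ/RocketMQ: implemented in pure Java; a publish/subscribe messaging system that supports local transactions and XA distributed transactions.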

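Kafka design goals

1. High throughput: a single commodity machine should be able to read and write 1 million messages per second.

2. Message persistence: all messages are persisted to disk so that none are lost, and messages can be replayed.

3. Fully distributed: Producers, Brokers and Consumers all scale horizontally.

4. Suitable for both online stream processing and offline batch processing.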
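Point 2 is what separates Kafka's log from the P2P queue described earlier: consuming a message does not delete it, and a reader chooses the offset it starts from, so replay is just reading again from an earlier offset. A toy bash model under that simplification (one in-memory array stands in for one partition; retention, segments and disk storage are ignored):

```bash
#!/usr/bin/env bash
# Toy append-only log: reading never removes a message; a reader just picks
# the offset it wants to start from, so replaying means re-reading from an
# earlier offset.
LOG=()

append() { LOG+=("$1"); }          # producers only ever append to the end

read_from() {                      # print "offset message" from offset $1 on
  local off
  for ((off = $1; off < ${#LOG[@]}; off++)); do
    echo "$off ${LOG[off]}"
  done
}

append a; append b; append c
read_from 0      # first pass over the log
read_from 1      # replay from offset 1: the messages are still there
```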

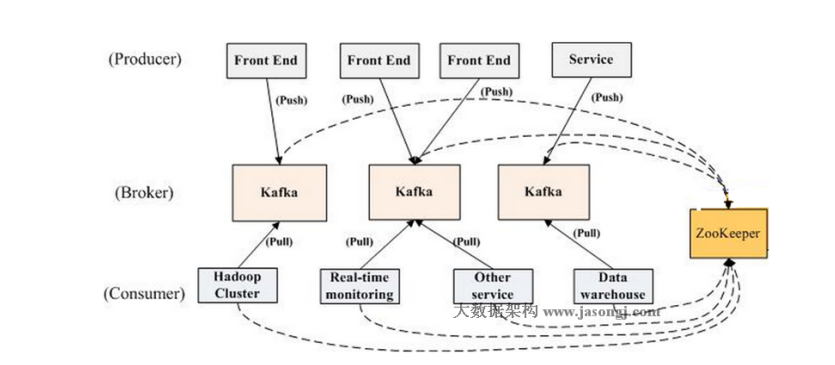

Kafka architecture diagram

Deploying Kafka

Installing Kafka

[root@mail ~]# hostnamectl set-hostname kafka && exec bash

[root@kafka ~]# yum -y install jdk-8u171-linux-x64.rpm
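Kafka 2.x needs Java 8 or newer (Java 7 support was dropped in Kafka 2.0), which is why a JDK 8 package is installed here. If you want a scripted check instead of reading java -version by eye, here is a small bash sketch; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# java_major VERSION: print the Java major version for strings such as
# "1.8.0_171" (legacy 1.x scheme) or "11.0.2" (scheme used since Java 9).
java_major() {
  local v=$1
  case $v in
    1.*) v=${v#1.} ;;              # 1.8.0_171 -> 8.0_171
  esac
  echo "${v%%[._]*}"               # keep everything before the first "." or "_"
}

# On a real host (java prints its version to stderr):
#   v=$(java -version 2>&1 | awk -F'"' '/version/ {print $2}')
#   [ "$(java_major "$v")" -ge 8 ] || echo "Kafka 2.1 needs Java 8 or newer" >&2
```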

[root@kafka ~]# wget http://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.1.0/kafka_2.12-2.1.0.tgz

--2019-01-21 14:47:03-- http://mirrors.tuna.tsinghua.edu.cn/apache/kafka/2.1.0/kafka_2.12-2.1.0.tgz

Resolving mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)... 101.6.8.193, 2402:f000:1:408:8100::1

Connecting to mirrors.tuna.tsinghua.edu.cn (mirrors.tuna.tsinghua.edu.cn)|101.6.8.193|:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 55201623 (53M) [application/octet-stream]

Saving to: ‘kafka_2.12-2.1.0.tgz’

100%[=====================================================================================================================================================>] 55,201,623 602KB/s in 90s

2019-01-21 14:48:39 (597 KB/s) - ‘kafka_2.12-2.1.0.tgz’ saved [55201623/55201623]

[root@kafka ~]# tar xf kafka_2.12-2.1.0.tgz -C /usr/local/

[root@kafka ~]# cd /usr/local/kafka_2.12-2.1.0/

[root@kafka kafka_2.12-2.1.0]# ls

bin config libs LICENSE NOTICE site-docs
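The archive name encodes two versions: in kafka_2.12-2.1.0.tgz, 2.12 is the Scala version the broker was built with and 2.1.0 is the Kafka release. Mirrors such as the TUNA one above typically keep only current releases, and older ones are usually moved to archive.apache.org. A bash sketch that splits the name and builds an archive URL, assuming the archive.apache.org/dist/kafka/<version>/ layout (verify it before scripting downloads against it):

```bash
#!/usr/bin/env bash
# kafka_pkg_info FILE: split a Kafka binary archive name into its versions.
kafka_pkg_info() {
  local file=$1                    # e.g. kafka_2.12-2.1.0.tgz
  local name=${file%.tgz}          # kafka_2.12-2.1.0
  local versions=${name#kafka_}    # 2.12-2.1.0
  local scala=${versions%%-*}      # before the first "-": Scala version
  local kafka=${versions#*-}       # after the first "-": Kafka version
  # Assumed URL layout of the Apache release archive.
  echo "scala=$scala kafka=$kafka url=https://archive.apache.org/dist/kafka/$kafka/$file"
}

kafka_pkg_info kafka_2.12-2.1.0.tgz
```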

[root@kafka kafka_2.12-2.1.0]# cd bin

[root@kafka bin]# ./zookeeper-server-start.sh ../config/zookeeper.properties

[2019-01-21 14:51:47,738] INFO Reading configuration from: ../config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2019-01-21 14:51:47,766] INFO autopurge.snapRetainCount set to 3 (org.apache.zookeeper.server.DatadirCleanupManager)

[2019-01-21 14:51:47,793] INFO autopurge.purgeInterval set to 0 (org.apache.zookeeper.server.DatadirCleanupManager)

[2019-01-21 14:51:47,794] INFO Purge task is not scheduled. (org.apache.zookeeper.server.DatadirCleanupManager)

[2019-01-21 14:51:47,794] WARN Either no config or no quorum defined in config, running in standalone mode (org.apache.zookeeper.server.quorum.QuorumPeerMain)

[2019-01-21 14:51:48,013] INFO Reading configuration from: ../config/zookeeper.properties (org.apache.zookeeper.server.quorum.QuorumPeerConfig)

[2019-01-21 14:51:48,021] INFO Starting server (org.apache.zookeeper.server.ZooKeeperServerMain)

[2019-01-21 14:51:58,260] INFO Server environment:zookeeper.version=3.4.13-2d71af4dbe22557fda74f9a9b4309b15a7487f03, built on 06/29/2018 00:39 GMT (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,261] INFO Server environment:host.name=kafka (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,261] INFO Server environment:java.version=1.8.0_171 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,261] INFO Server environment:java.vendor=Oracle Corporation (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,262] INFO Server environment:java.home=/usr/java/jdk1.8.0_171-amd64/jre (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,263] INFO Server environment:java.class.path=/usr/local/kafka_2.12-2.1.0/bin/../libs/activation-1.1.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/aopalliance-repackaged-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/argparse4j-0.7.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/audience-annotations-0.5.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/commons-lang3-3.5.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/compileScala.mapping:/usr/local/kafka_2.12-2.1.0/bin/../libs/compileScala.mapping.asc:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-api-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-basic-auth-extension-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-file-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-json-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-runtime-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-transforms-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/guava-20.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-api-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-locator-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-utils-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-annotations-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-core-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-databind-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-jaxrs-base-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-jaxrs-json-provider-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-module-jaxb-annotations-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javassist-3.22.0-CR2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.annotation-api-1.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.inject-1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.inject-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.servlet-api-3.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.ws.rs-api-2.1.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.ws.rs-api-2.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jaxb-api-2.3.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-client-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-common-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-container-servlet-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-container-servlet-core-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-hk2-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-media-jaxb-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-server-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-client-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-continuation-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-http-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-io-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-security-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-server-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-servlet-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-servlets-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-util-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jopt-simple-5.0.4.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka_2.12-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka_2.12-2.1.0-sources.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-clients-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-log4j-appender-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-examples-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-scala_2.12-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-test-utils-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-tools-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/log4j-1.2.17.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/lz4-java-1.5.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/maven-artifact-3.5.4.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/metrics-core-2.2.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/osgi-resource-locator-1.0.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/plexus-utils-3.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/reflections-0.9.11.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/rocksdbjni-5.14.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-library-2.12.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-logging_2.12-3.9.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-reflect-2.12.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/slf4j-api-1.7.25.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/slf4j-log4j12-1.7.25.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/snappy-java-1.1.7.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/validation-api-1.1.0.Final.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zkclient-0.10.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zookeeper-3.4.13.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zstd-jni-1.3.5-4.jar (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,264] INFO Server environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,264] INFO Server environment:java.io.tmpdir=/tmp (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,264] INFO Server environment:java.compiler=<NA> (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,264] INFO Server environment:os.name=Linux (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,265] INFO Server environment:os.arch=amd64 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,265] INFO Server environment:os.version=3.10.0-862.el7.x86_64 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,265] INFO Server environment:user.name=root (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,265] INFO Server environment:user.home=/root (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,266] INFO Server environment:user.dir=/usr/local/kafka_2.12-2.1.0/bin (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,659] INFO tickTime set to 3000 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,659] INFO minSessionTimeout set to -1 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,659] INFO maxSessionTimeout set to -1 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-01-21 14:51:58,700] INFO Using org.apache.zookeeper.server.NIOServerCnxnFactory as server connection factory (org.apache.zookeeper.server.ServerCnxnFactory)

[2019-01-21 14:51:58,719] INFO binding to port 0.0.0.0/0.0.0.0:2181 (org.apache.zookeeper.server.NIOServerCnxnFactory)

ZooKeeper keeps running in the foreground, so check its listening ports from a second terminal:

[root@mail ~]# ss -lntp | grep java

LISTEN 0 50 :::2181 :::* users:(("java",pid=13854,fd=99))

LISTEN 0 50 :::44536 :::* users:(("java",pid=13854,fd=86))
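Running ZooKeeper in the foreground ties up a terminal. Both zookeeper-server-start.sh and kafka-server-start.sh also accept -daemon as their first argument to run in the background; you then need some way other than watching the log to know when each service is ready. A bash sketch that polls a port, relying on bash's /dev/tcp redirection (the helper name and the 30-attempt default are assumptions):

```bash
#!/usr/bin/env bash
# wait_for_port HOST PORT [ATTEMPTS]
# Returns 0 as soon as HOST:PORT accepts a TCP connection, 1 after ATTEMPTS
# failed tries spaced one second apart. /dev/tcp/HOST/PORT is a bash feature,
# not a real file.
wait_for_port() {
  local host=$1 port=$2 attempts=${3:-30} i
  for ((i = 0; i < attempts; i++)); do
    # Open fd 3 in a subshell; the subshell exits non-zero if the connect fails.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Example with the paths used in this walkthrough:
#   cd /usr/local/kafka_2.12-2.1.0
#   bin/zookeeper-server-start.sh -daemon config/zookeeper.properties
#   wait_for_port localhost 2181 && bin/kafka-server-start.sh -daemon config/server.properties
#   wait_for_port localhost 9092 && echo "broker is accepting connections"
```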

[root@kafka ~]# /usr/local/kafka_2.12-2.1.0/bin/kafka-server-start.sh /usr/local/kafka_2.12-2.1.0/config/server.properties

[2019-01-21 14:55:25,229] INFO Registered kafka:type=kafka.Log4jController MBean (kafka.utils.Log4jControllerRegistration$)

[2019-01-21 14:55:28,772] INFO starting (kafka.server.KafkaServer)

[2019-01-21 14:55:28,779] INFO Connecting to zookeeper on localhost:2181 (kafka.server.KafkaServer)

[2019-01-21 14:55:28,999] INFO [ZooKeeperClient] Initializing a new session to localhost:2181. (kafka.zookeeper.ZooKeeperClient)

[2019-01-21 14:55:39,074] INFO Client environment:zookeeper.version=3.4.13-2d71af4dbe22557fda74f9a9b4309b15a7487f03, built on 06/29/2018 00:39 GMT (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,074] INFO Client environment:host.name=kafka (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,074] INFO Client environment:java.version=1.8.0_171 (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,075] INFO Client environment:java.vendor=Oracle Corporation (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,075] INFO Client environment:java.home=/usr/java/jdk1.8.0_171-amd64/jre (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,075] INFO Client environment:java.class.path=/usr/local/kafka_2.12-2.1.0/bin/../libs/activation-1.1.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/aopalliance-repackaged-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/argparse4j-0.7.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/audience-annotations-0.5.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/commons-lang3-3.5.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/compileScala.mapping:/usr/local/kafka_2.12-2.1.0/bin/../libs/compileScala.mapping.asc:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-api-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-basic-auth-extension-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-file-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-json-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-runtime-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/connect-transforms-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/guava-20.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-api-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-locator-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/hk2-utils-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-annotations-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-core-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-databind-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-jaxrs-base-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-jaxrs-json-provider-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jackson-module-jaxb-annotations-2.9.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javassist-3.22.0-CR2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.annotation-api-1.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.inject-1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.inject-2.5.0-b42.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.servlet-api-3.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.ws.rs-api-2.1.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/javax.ws.rs-api-2.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jaxb-api-2.3.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-client-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-common-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-container-servlet-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-container-servlet-core-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-hk2-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-media-jaxb-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jersey-server-2.27.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-client-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-continuation-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-http-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-io-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-security-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-server-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-servlet-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-servlets-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jetty-util-9.4.12.v20180830.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/jopt-simple-5.0.4.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka_2.12-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka_2.12-2.1.0-sources.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-clients-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-log4j-appender-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-examples-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-scala_2.12-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-streams-test-utils-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/kafka-tools-2.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/log4j-1.2.17.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/lz4-java-1.5.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/maven-artifact-3.5.4.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/metrics-core-2.2.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/osgi-resource-locator-1.0.1.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/plexus-utils-3.1.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/reflections-0.9.11.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/rocksdbjni-5.14.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-library-2.12.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-logging_2.12-3.9.0.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/scala-reflect-2.12.7.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/slf4j-api-1.7.25.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/slf4j-log4j12-1.7.25.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/snappy-java-1.1.7.2.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/validation-api-1.1.0.Final.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zkclient-0.10.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zookeeper-3.4.13.jar:/usr/local/kafka_2.12-2.1.0/bin/../libs/zstd-jni-1.3.5-4.jar (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:java.io.tmpdir=/tmp (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:java.compiler=<NA> (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:os.name=Linux (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:os.arch=amd64 (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,077] INFO Client environment:os.version=3.10.0-862.el7.x86_64 (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,078] INFO Client environment:user.name=root (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,078] INFO Client environment:user.home=/root (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,078] INFO Client environment:user.dir=/root (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,094] INFO Initiating client connection, connectString=localhost:2181 sessionTimeout=6000 watcher=kafka.zookeeper.ZooKeeperClient$ZooKeeperClientWatcher$@2e4b8173 (org.apache.zookeeper.ZooKeeper)

[2019-01-21 14:55:39,146] INFO [ZooKeeperClient] Waiting until connected. (kafka.zookeeper.ZooKeeperClient)

[2019-01-21 14:55:39,585] INFO Opening socket connection to server localhost/0:0:0:0:0:0:0:1:2181. Will not attempt to authenticate using SASL (unknown error) (org.apache.zookeeper.ClientCnxn)

[2019-01-21 14:55:39,648] INFO Socket connection established to localhost/0:0:0:0:0:0:0:1:2181, initiating session (org.apache.zookeeper.ClientCnxn)

[2019-01-21 14:55:39,815] INFO Session establishment complete on server localhost/0:0:0:0:0:0:0:1:2181, sessionid = 0x100064d444d0000, negotiated timeout = 6000 (org.apache.zookeeper.ClientCnxn)

[2019-01-21 14:55:39,835] INFO [ZooKeeperClient] Connected. (kafka.zookeeper.ZooKeeperClient)

[2019-01-21 14:55:42,087] INFO Cluster ID = rNMz6rWkRq-0gkfvlpnmOw (kafka.server.KafkaServer)

[2019-01-21 14:55:42,211] WARN No meta.properties file under dir /tmp/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[2019-01-21 14:55:42,603] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 300000

group.min.session.timeout.ms = 6000

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.1-IV2

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = null

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /tmp/kafka-logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 2.1-IV2

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1000012

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port = 9092

principal.builder.class = null

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.consumer.default = 9223372036854775807

quota.producer.default = 9223372036854775807

quota.window.num = 11

quota.window.size.seconds = 1

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 10000

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 60000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 1

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms = 6000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 6000

zookeeper.set.acl = false

zookeeper.sync.time.ms = 2000

(kafka.server.KafkaConfig)

[2019-01-21 14:55:42,642] INFO KafkaConfig values:

advertised.host.name = null

advertised.listeners = null

advertised.port = null

alter.config.policy.class.name = null

alter.log.dirs.replication.quota.window.num = 11

alter.log.dirs.replication.quota.window.size.seconds = 1

authorizer.class.name =

auto.create.topics.enable = true

auto.leader.rebalance.enable = true

background.threads = 10

broker.id = 0

broker.id.generation.enable = true

broker.rack = null

client.quota.callback.class = null

compression.type = producer

connection.failed.authentication.delay.ms = 100

connections.max.idle.ms = 600000

controlled.shutdown.enable = true

controlled.shutdown.max.retries = 3

controlled.shutdown.retry.backoff.ms = 5000

controller.socket.timeout.ms = 30000

create.topic.policy.class.name = null

default.replication.factor = 1

delegation.token.expiry.check.interval.ms = 3600000

delegation.token.expiry.time.ms = 86400000

delegation.token.master.key = null

delegation.token.max.lifetime.ms = 604800000

delete.records.purgatory.purge.interval.requests = 1

delete.topic.enable = true

fetch.purgatory.purge.interval.requests = 1000

group.initial.rebalance.delay.ms = 0

group.max.session.timeout.ms = 300000

group.min.session.timeout.ms = 6000

host.name =

inter.broker.listener.name = null

inter.broker.protocol.version = 2.1-IV2

kafka.metrics.polling.interval.secs = 10

kafka.metrics.reporters = []

leader.imbalance.check.interval.seconds = 300

leader.imbalance.per.broker.percentage = 10

listener.security.protocol.map = PLAINTEXT:PLAINTEXT,SSL:SSL,SASL_PLAINTEXT:SASL_PLAINTEXT,SASL_SSL:SASL_SSL

listeners = null

log.cleaner.backoff.ms = 15000

log.cleaner.dedupe.buffer.size = 134217728

log.cleaner.delete.retention.ms = 86400000

log.cleaner.enable = true

log.cleaner.io.buffer.load.factor = 0.9

log.cleaner.io.buffer.size = 524288

log.cleaner.io.max.bytes.per.second = 1.7976931348623157E308

log.cleaner.min.cleanable.ratio = 0.5

log.cleaner.min.compaction.lag.ms = 0

log.cleaner.threads = 1

log.cleanup.policy = [delete]

log.dir = /tmp/kafka-logs

log.dirs = /tmp/kafka-logs

log.flush.interval.messages = 9223372036854775807

log.flush.interval.ms = null

log.flush.offset.checkpoint.interval.ms = 60000

log.flush.scheduler.interval.ms = 9223372036854775807

log.flush.start.offset.checkpoint.interval.ms = 60000

log.index.interval.bytes = 4096

log.index.size.max.bytes = 10485760

log.message.downconversion.enable = true

log.message.format.version = 2.1-IV2

log.message.timestamp.difference.max.ms = 9223372036854775807

log.message.timestamp.type = CreateTime

log.preallocate = false

log.retention.bytes = -1

log.retention.check.interval.ms = 300000

log.retention.hours = 168

log.retention.minutes = null

log.retention.ms = null

log.roll.hours = 168

log.roll.jitter.hours = 0

log.roll.jitter.ms = null

log.roll.ms = null

log.segment.bytes = 1073741824

log.segment.delete.delay.ms = 60000

max.connections.per.ip = 2147483647

max.connections.per.ip.overrides =

max.incremental.fetch.session.cache.slots = 1000

message.max.bytes = 1000012

metric.reporters = []

metrics.num.samples = 2

metrics.recording.level = INFO

metrics.sample.window.ms = 30000

min.insync.replicas = 1

num.io.threads = 8

num.network.threads = 3

num.partitions = 1

num.recovery.threads.per.data.dir = 1

num.replica.alter.log.dirs.threads = null

num.replica.fetchers = 1

offset.metadata.max.bytes = 4096

offsets.commit.required.acks = -1

offsets.commit.timeout.ms = 5000

offsets.load.buffer.size = 5242880

offsets.retention.check.interval.ms = 600000

offsets.retention.minutes = 10080

offsets.topic.compression.codec = 0

offsets.topic.num.partitions = 50

offsets.topic.replication.factor = 1

offsets.topic.segment.bytes = 104857600

password.encoder.cipher.algorithm = AES/CBC/PKCS5Padding

password.encoder.iterations = 4096

password.encoder.key.length = 128

password.encoder.keyfactory.algorithm = null

password.encoder.old.secret = null

password.encoder.secret = null

port = 9092

principal.builder.class = null

producer.purgatory.purge.interval.requests = 1000

queued.max.request.bytes = -1

queued.max.requests = 500

quota.consumer.default = 9223372036854775807

quota.producer.default = 9223372036854775807

quota.window.num = 11

quota.window.size.seconds = 1

replica.fetch.backoff.ms = 1000

replica.fetch.max.bytes = 1048576

replica.fetch.min.bytes = 1

replica.fetch.response.max.bytes = 10485760

replica.fetch.wait.max.ms = 500

replica.high.watermark.checkpoint.interval.ms = 5000

replica.lag.time.max.ms = 10000

replica.socket.receive.buffer.bytes = 65536

replica.socket.timeout.ms = 30000

replication.quota.window.num = 11

replication.quota.window.size.seconds = 1

request.timeout.ms = 30000

reserved.broker.max.id = 1000

sasl.client.callback.handler.class = null

sasl.enabled.mechanisms = [GSSAPI]

sasl.jaas.config = null

sasl.kerberos.kinit.cmd = /usr/bin/kinit

sasl.kerberos.min.time.before.relogin = 60000

sasl.kerberos.principal.to.local.rules = [DEFAULT]

sasl.kerberos.service.name = null

sasl.kerberos.ticket.renew.jitter = 0.05

sasl.kerberos.ticket.renew.window.factor = 0.8

sasl.login.callback.handler.class = null

sasl.login.class = null

sasl.login.refresh.buffer.seconds = 300

sasl.login.refresh.min.period.seconds = 60

sasl.login.refresh.window.factor = 0.8

sasl.login.refresh.window.jitter = 0.05

sasl.mechanism.inter.broker.protocol = GSSAPI

sasl.server.callback.handler.class = null

security.inter.broker.protocol = PLAINTEXT

socket.receive.buffer.bytes = 102400

socket.request.max.bytes = 104857600

socket.send.buffer.bytes = 102400

ssl.cipher.suites = []

ssl.client.auth = none

ssl.enabled.protocols = [TLSv1.2, TLSv1.1, TLSv1]

ssl.endpoint.identification.algorithm = https

ssl.key.password = null

ssl.keymanager.algorithm = SunX509

ssl.keystore.location = null

ssl.keystore.password = null

ssl.keystore.type = JKS

ssl.protocol = TLS

ssl.provider = null

ssl.secure.random.implementation = null

ssl.trustmanager.algorithm = PKIX

ssl.truststore.location = null

ssl.truststore.password = null

ssl.truststore.type = JKS

transaction.abort.timed.out.transaction.cleanup.interval.ms = 60000

transaction.max.timeout.ms = 900000

transaction.remove.expired.transaction.cleanup.interval.ms = 3600000

transaction.state.log.load.buffer.size = 5242880

transaction.state.log.min.isr = 1

transaction.state.log.num.partitions = 50

transaction.state.log.replication.factor = 1

transaction.state.log.segment.bytes = 104857600

transactional.id.expiration.ms = 604800000

unclean.leader.election.enable = false

zookeeper.connect = localhost:2181

zookeeper.connection.timeout.ms = 6000

zookeeper.max.in.flight.requests = 10

zookeeper.session.timeout.ms = 6000

zookeeper.set.acl = false

zookeeper.sync.time.ms = 2000

(kafka.server.KafkaConfig)
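Many of the values in the dump above are raw millisecond counts. Some are Java's `Long.MAX_VALUE` (9223372036854775807), which Kafka uses as a "no limit" value. For example, `quota.consumer.default` and `quota.producer.default` are in bytes per second, and at this value no client quota applies. The following is a minimal Python sketch for eyeballing a few of the millisecond settings copied from the dump as readable durations. The helper name `describe_ms` is hypothetical and is not part of Kafka.

```python
from datetime import timedelta

# Java's Long.MAX_VALUE; Kafka uses it as a "no limit" sentinel in several settings
JAVA_LONG_MAX = 2**63 - 1

def describe_ms(value: int) -> str:
    """Render a millisecond setting from the KafkaConfig dump as a readable duration."""
    if value == JAVA_LONG_MAX:
        # timedelta cannot represent this many milliseconds, so report the sentinel
        return "unlimited (Long.MAX_VALUE)"
    return str(timedelta(milliseconds=value))

# A few millisecond values copied from the dump above
settings = {
    "replica.lag.time.max.ms": 10000,
    "transaction.max.timeout.ms": 900000,
    "transaction.remove.expired.transaction.cleanup.interval.ms": 3600000,
    "transactional.id.expiration.ms": 604800000,
}

for name, ms in settings.items():
    print(f"{name} = {describe_ms(ms)}")
```

For example, `transactional.id.expiration.ms = 604800000` works out to 7 days and `transaction.max.timeout.ms = 900000` to 15 minutes.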

[2019-01-21 14:55:42,822] INFO [ThrottledChannelReaper-Produce]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-01-21 14:55:42,831] INFO [ThrottledChannelReaper-Request]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-01-21 14:55:42,823] INFO [ThrottledChannelReaper-Fetch]: Starting (kafka.server.ClientQuotaManager$ThrottledChannelReaper)

[2019-01-21 14:55:43,094] INFO Log directory /tmp/kafka-logs not found, creating it. (kafka.log.LogManager)

[2019-01-21 14:55:43,144] INFO Loading logs. (kafka.log.LogManager)

[2019-01-21 14:55:43,178] INFO Logs loading complete in 34 ms. (kafka.log.LogManager)

[2019-01-21 14:55:43,227] INFO Starting log cleanup with a period of 300000 ms. (kafka.log.LogManager)

[2019-01-21 14:55:43,250] INFO Starting log flusher with a default period of 9223372036854775807 ms. (kafka.log.LogManager)

[2019-01-21 14:55:47,878] INFO Awaiting socket connections on 0.0.0.0:9092. (kafka.network.Acceptor)

[2019-01-21 14:55:48,005] INFO [SocketServer brokerId=0] Started 1 acceptor threads (kafka.network.SocketServer)

[2019-01-21 14:55:48,103] INFO [ExpirationReaper-0-Produce]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:48,106] INFO [ExpirationReaper-0-Fetch]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:48,146] INFO [ExpirationReaper-0-DeleteRecords]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:48,221] INFO [LogDirFailureHandler]: Starting (kafka.server.ReplicaManager$LogDirFailureHandler)

[2019-01-21 14:55:58,523] INFO Creating /brokers/ids/0 (is it secure? false) (kafka.zk.KafkaZkClient)

[2019-01-21 14:55:58,543] INFO Result of znode creation at /brokers/ids/0 is: OK (kafka.zk.KafkaZkClient)

[2019-01-21 14:55:58,560] INFO Registered broker 0 at path /brokers/ids/0 with addresses: ArrayBuffer(EndPoint(kafka,9092,ListenerName(PLAINTEXT),PLAINTEXT)) (kafka.zk.KafkaZkClient)

[2019-01-21 14:55:58,568] WARN No meta.properties file under dir /tmp/kafka-logs/meta.properties (kafka.server.BrokerMetadataCheckpoint)

[2019-01-21 14:55:58,917] INFO [ExpirationReaper-0-topic]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:58,951] INFO [ExpirationReaper-0-Heartbeat]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:59,016] INFO [ExpirationReaper-0-Rebalance]: Starting (kafka.server.DelayedOperationPurgatory$ExpiredOperationReaper)

[2019-01-21 14:55:59,044] INFO Successfully created /controller_epoch with initial epoch 0 (kafka.zk.KafkaZkClient)

[2019-01-21 14:55:59,405] INFO [GroupCoordinator 0]: Starting up. (kafka.coordinator.group.GroupCoordinator)

[2019-01-21 14:55:59,421] INFO [GroupCoordinator 0]: Startup complete. (kafka.coordinator.group.GroupCoordinator)

[2019-01-21 14:55:59,496] INFO [GroupMetadataManager brokerId=0] Removed 0 expired offsets in 62 milliseconds. (kafka.coordinator.group.GroupMetadataManager)

[2019-01-21 14:55:59,547] INFO [ProducerId Manager 0]: Acquired new producerId block (brokerId:0,blockStartProducerId:0,blockEndProducerId:999) by writing to Zk with path version 1 (kafka.coordinator.transaction.ProducerIdManager)

[2019-01-21 14:55:59,718] INFO [TransactionCoordinator id=0] Starting up. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-01-21 14:55:59,733] INFO [TransactionCoordinator id=0] Startup complete. (kafka.coordinator.transaction.TransactionCoordinator)

[2019-01-21 14:55:59,771] INFO [Transaction Marker Channel Manager 0]: Starting (kafka.coordinator.transaction.TransactionMarkerChannelManager)

[2019-01-21 14:56:00,314] INFO [/config/changes-event-process-thread]: Starting (kafka.common.ZkNodeChangeNotificationListener$ChangeEventProcessThread)

[2019-01-21 14:56:00,392] INFO [SocketServer brokerId=0] Started processors for 1 acceptors (kafka.network.SocketServer)

[2019-01-21 14:56:00,446] INFO Kafka version : 2.1.0 (org.apache.kafka.common.utils.AppInfoParser)

[2019-01-21 14:56:00,447] INFO Kafka commitId : 809be928f1ae004e (org.apache.kafka.common.utils.AppInfoParser)

[2019-01-21 14:56:00,453] INFO [KafkaServer id=0] started (kafka.server.KafkaServer)

[root@kafka ~]# ss -lntp | grep 9092

LISTEN 0 50 :::9092 :::* users:(("java",pid=14408,fd=104))
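The `ss` check above only works on the broker host itself. From another machine or from a script, one minimal option is to attempt a plain TCP connection to the listener port. This only shows that something accepts connections on port 9092. It does not confirm that the Kafka protocol is healthy. The helper name `port_is_open` is hypothetical.

```python
import socket

def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        # create_connection resolves the host and tries each address it returns
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, unreachable host, timeout, and DNS failures
        return False
```

Usage, assuming the hostname `kafka` set earlier with `hostnamectl` resolves from the client: `port_is_open("kafka", 9092)`.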

Create a topic

[root@kafka bin]# ./kafka-topics.sh --zookeeper 127.0.0.1:2181 --create --topic top-1 --partitions 3 --replication-factor 1

Created topic "top-1".

[root@kafka bin]# ./kafka-topics.sh --zookeeper 127.0.0.1:2181 --describe --topic top-1

Topic:top-1 PartitionCount:3 ReplicationFactor:1 Configs:

Topic: top-1 Partition: 0 Leader: 0 Replicas: 0 Isr: 0

Topic: top-1 Partition: 1 Leader: 0 Replicas: 0 Isr: 0

Topic: top-1 Partition: 2 Leader: 0 Replicas: 0 Isr: 0
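`top-1` was created with 3 partitions. When a producer sends a record that has a key, the Java client's default partitioner in the 2.x line hashes the serialized key bytes with murmur2. It then clears the sign bit and takes the result modulo the partition count, so a given key always lands on the same partition. The Python port below is an illustrative sketch of that scheme and is not part of Kafka. It assumes string keys serialized as UTF-8, which is what `StringSerializer` does by default. The helper names are hypothetical. Records without a key are spread across partitions differently and are not covered here.

```python
def murmur2(data: bytes) -> int:
    """32-bit murmur2 as ported from Kafka's Java client, returned as a signed int."""
    m = 0x5BD1E995
    r = 24
    length = len(data)
    h = (0x9747B28C ^ length) & 0xFFFFFFFF

    # Process the input four bytes at a time, little-endian
    for i in range(length // 4):
        k = int.from_bytes(data[4 * i:4 * i + 4], "little")
        k = (k * m) & 0xFFFFFFFF
        k ^= k >> r
        k = (k * m) & 0xFFFFFFFF
        h = (h * m) & 0xFFFFFFFF
        h ^= k

    # Mix in the remaining 1-3 bytes (mirrors the Java switch fall-through)
    tail = length & ~3
    rem = length % 4
    if rem == 3:
        h ^= data[tail + 2] << 16
    if rem >= 2:
        h ^= data[tail + 1] << 8
    if rem >= 1:
        h ^= data[tail]
        h = (h * m) & 0xFFFFFFFF

    # Final avalanche
    h ^= h >> 13
    h = (h * m) & 0xFFFFFFFF
    h ^= h >> 15
    # Reinterpret as a signed 32-bit value, as Java's int would be
    return h - (1 << 32) if h & 0x80000000 else h

def to_positive(n: int) -> int:
    # Clear the sign bit instead of using abs(), so Integer.MIN_VALUE is safe
    return n & 0x7FFFFFFF

def partition_for_key(key: str, num_partitions: int) -> int:
    """Partition a keyed record would map to under the murmur2 scheme above."""
    return to_positive(murmur2(key.encode("utf-8"))) % num_partitions

for key in ["user-1", "user-2", "user-3"]:
    print(key, "->", partition_for_key(key, 3))
```

Because the mapping depends on `num_partitions`, adding partitions to `top-1` later changes where existing keys land. This matters if consumers rely on per-key ordering.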

浙公网安备 33010602011771号