ChatGPT API Development Notes 3: Speech Recognition API

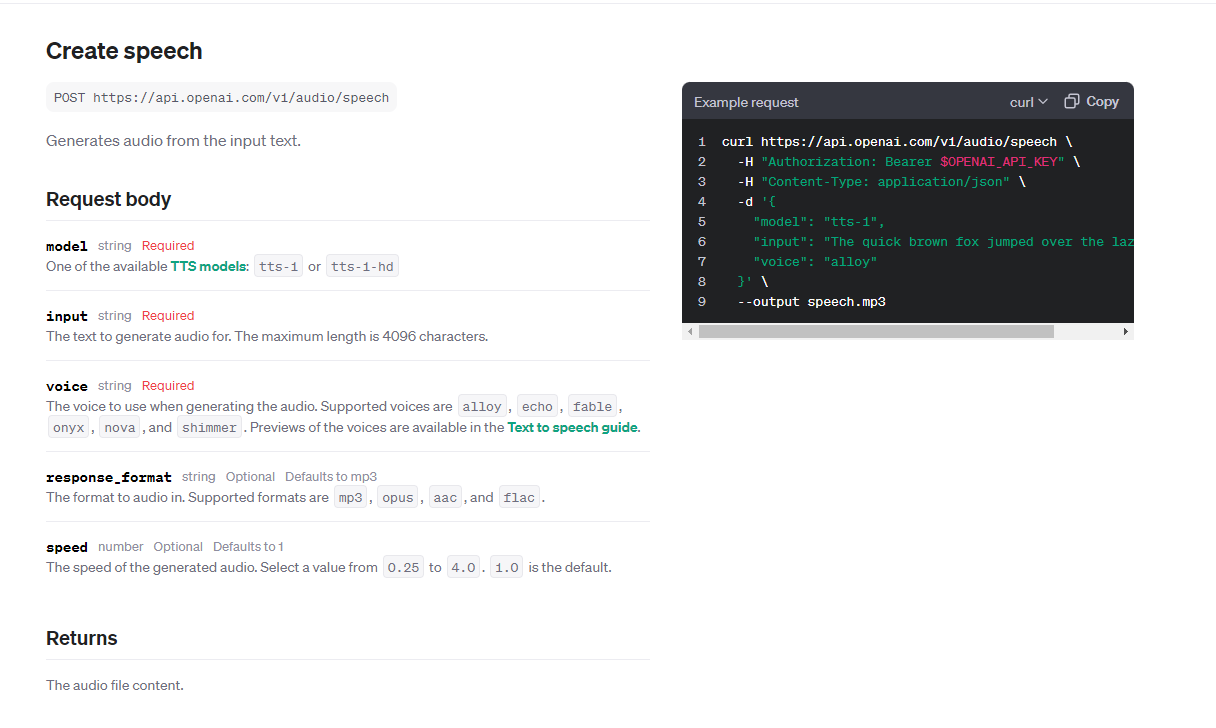

1. Text to speech

1. Understand the API parameters

Endpoint:

POST https://api.openai.com/v1/audio/speech

The API documentation describes the request as follows:

Parameters:

    {
      "model": "tts-1",
      "input": "你好,我是饶坤,我是terramours gpt的开发者",
      "voice": "alloy"
    }

- model: the model to use

- input: the text to convert to speech

- voice: the voice style
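
As a minimal sketch, the request body above could be sent from a shell with curl. The output file name `speech.mp3` and the guard on the `OPENAI_API_KEY` environment variable are assumptions made for illustration; they are not part of the original notes.

```shell
# JSON body with the three parameters described above.
payload='{"model": "tts-1", "input": "你好,我是饶坤,我是terramours gpt的开发者", "voice": "alloy"}'

# Only call the API when a key is available.
if [ -n "${OPENAI_API_KEY:-}" ]; then
  # The response body is binary audio, so write it to a file.
  # speech.mp3 is an arbitrary file name chosen for this sketch.
  curl https://api.openai.com/v1/audio/speech \
    -H "Authorization: Bearer $OPENAI_API_KEY" \
    -H "Content-Type: application/json" \
    -d "$payload" \
    --output speech.mp3
else
  echo "OPENAI_API_KEY is not set; skipping the request" >&2
fi
```

If the call succeeds, the saved file can be played back to check the result.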

2. Test with Postman

3. Result:

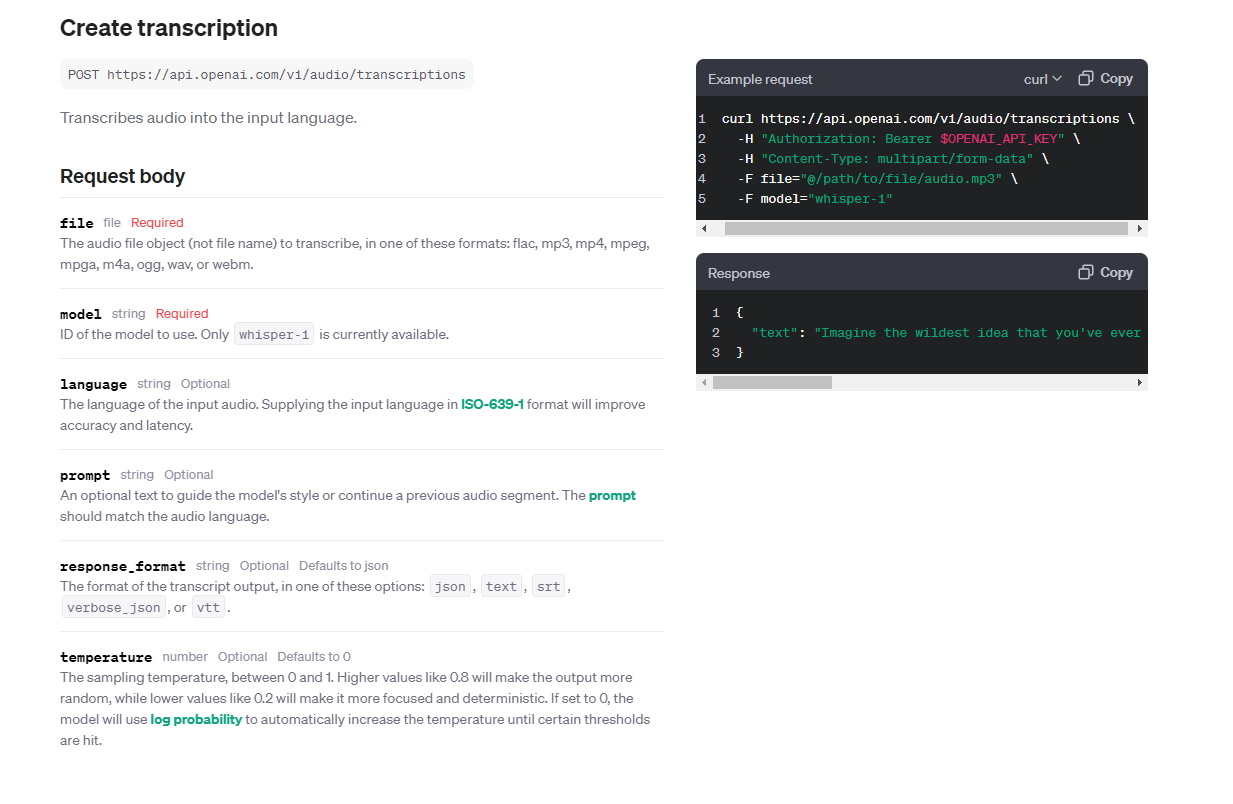

2. Speech to text

1. Understand the API parameters

Endpoint:

POST https://api.openai.com/v1/audio/transcriptions

Documentation:

Parameters:

    curl https://api.openai.com/v1/audio/transcriptions \
      -H "Authorization: Bearer $OPENAI_API_KEY" \
      -H "Content-Type: multipart/form-data" \
      -F file="@/path/to/file/audio.mp3" \
      -F model="whisper-1"

- file: the audio file to transcribe

- model: the model type

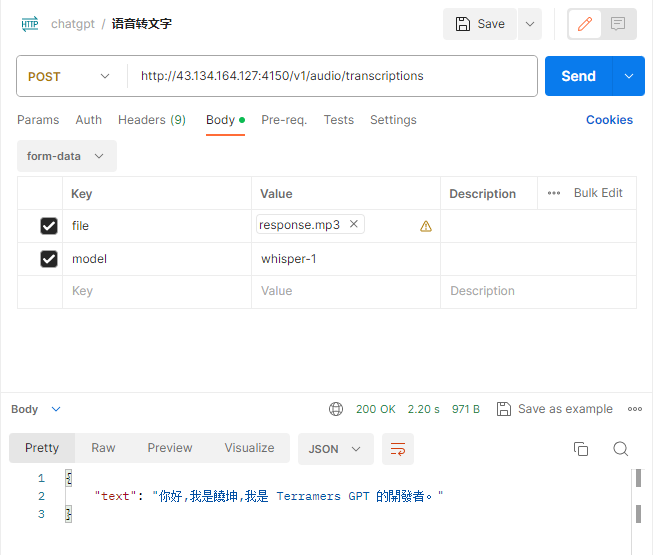

2. Test with Postman

3. Result:

    {
      "text": "你好,我是饒坤,我是 Terramers GPT 的開發者。"
    }
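
Note that the transcript came back in Traditional Chinese characters and with the product name misspelled. As a hedged sketch, the endpoint also accepts the optional `language` field (an ISO-639-1 code) and `prompt` field, which may help steer the output. The prompt text below and the guard conditions are assumptions for illustration, and neither field guarantees a particular script or spelling.

```shell
# Placeholder path taken from the curl example above.
audio_file="/path/to/file/audio.mp3"
model="whisper-1"
# Optional hint: ISO-639-1 code of the spoken language.
language="zh"
# Optional hint written in Simplified Chinese (illustrative text only).
prompt="以下是普通话的句子。"

# Only call the API when a key is set and the audio file exists.
if [ -n "${OPENAI_API_KEY:-}" ] && [ -f "$audio_file" ]; then
  curl https://api.openai.com/v1/audio/transcriptions \
    -H "Authorization: Bearer $OPENAI_API_KEY" \
    -F file="@${audio_file}" \
    -F model="$model" \
    -F language="$language" \
    -F prompt="$prompt"
else
  echo "Missing OPENAI_API_KEY or audio file; skipping the request" >&2
fi
```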

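SDK development

I will add the corresponding speech endpoints to the SDK. C# developers can find it by searching for AllInAI.Sharp.API on NuGet.

The SDK is an open-source project. The code is at: https://github.com/raokun/AllInAI.Sharp.API

First, install the SDK in your project:

    Install-Package AllInAI.Sharp.API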

1. Speech

1. OpenAI

    public async Task OpenAISpeechTest() {
        try {
            // Configure the API key and base URL for OpenAI
            AuthOption authOption = new AuthOption() { Key = "sk-**", BaseUrl = "https://api.openai.com", AIType = Enums.AITypeEnum.OpenAi };
            AudioService audioService = new AudioService(authOption);
            AudioSpeechReq req = new AudioSpeechReq() { Model = "tts-1", Input = "你好,我是饶坤,我是AllInAI.Sharp.API的开发者", Voice = "alloy" };
            // Request the synthesized audio as a stream
            var res = await audioService.Speech<Stream>(req);
            if (res.Data != null) {
                // Save the audio as an .mp3 file (the D:/test directory must already exist)
                var filePath = $"D:/test/{Guid.NewGuid()}.mp3";
                using (FileStream fileStream = File.Create(filePath)) {
                    await res.Data.CopyToAsync(fileStream);
                }
            }
        }
        catch (Exception e) {
            Console.WriteLine(e.Message);
        }
    }

2. Transcriptions

1. OpenAI

    public async Task OpenAITranscriptionsTest() {
        try {
            // Configure the API key and base URL for OpenAI
            AuthOption authOption = new AuthOption() { Key = "sk-**", BaseUrl = "https://api.openai.com", AIType = Enums.AITypeEnum.OpenAi };
            // Read the binary content of the audio file
            byte[] audioData = File.ReadAllBytes("C:/Users/Administrator/Desktop/response.mp3");
            AudioService audioService = new AudioService(authOption);
            // Language = "zh" tells the model that the audio is in Chinese
            AudioCreateTranscriptionReq req = new AudioCreateTranscriptionReq() { File = audioData, FileName = "response.mp3", Model = "whisper-1", Language = "zh" };
            // Send the audio and wait for the transcription result
            AudioTranscriptionRes res = await audioService.Transcriptions(req);
        }
        catch (Exception e) {
            Console.WriteLine(e.Message);
        }
    }

If you run into formatting problems while reading, please visit my personal blog: https://www.raokun.top

Embrace ChatGPT: https://first.terramours.site