kubeadm Deployment

I. kubeadm Deployment

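Kubernetes can be deployed in two ways. The first is from binaries, which is customizable but complex to deploy and error-prone. The second is with the kubeadm tool, which is simple to deploy but offers little customization. This guide uses kubeadm.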

1. System and software versions

| Software | Version |
|---|---|
| CentOS | CentOS Linux release 7.7.1908 (Core) |
| Docker | 19.03.12 |
| Kubernetes | v1.19.1 |
| Flannel | v0.13.0 |
| kernel-lt | kernel-lt-4.4.245-1.el7.elrepo.x86_64.rpm |
| kernel-lt-devel | kernel-lt-devel-4.4.245-1.el7.elrepo.x86_64.rpm |

2. Node planning

| Hostname | IP | Kernel version |
|---|---|---|
| k8s-master-01 | 192.168.12.11 | 4.4.245-1.el7.elrepo.x86_64 |
| k8s-node-01 | 192.168.12.12 | 4.4.245-1.el7.elrepo.x86_64 |
| k8s-node-02 | 192.168.12.13 | 4.4.245-1.el7.elrepo.x86_64 |

Configure a static IP and an internal IP

## View IP information

[root@kubernetes-master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8c:63:0c brd ff:ff:ff:ff:ff:ff

inet 192.168.12.11/24 brd 192.168.12.255 scope global noprefixroute ens33

valid_lft forever preferred_lft forever

inet6 fe80::4c9d:ce6a:b0d:2b71/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens37: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8c:63:16 brd ff:ff:ff:ff:ff:ff

inet 172.16.0.11/16 brd 172.16.255.255 scope global noprefixroute ens37

valid_lft forever preferred_lft forever

inet6 fe80::2d8:177b:c488:526b/64 scope link noprefixroute

valid_lft forever preferred_lft forever

# Change the external IP and the internal IP

[root@kubernetes-master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens33

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens33

UUID=71edb611-0964-44bb-bb4a-b15dd5c9d297

DEVICE=ens33

ONBOOT=yes

IPADDR=192.168.12.11 # external IP

PREFIX=24

GATEWAY=192.168.12.2

DNS1=114.114.114.114

IPV6_PRIVACY=no

# Restart the network service

[root@kubernetes-master ~]# systemctl restart network

# Change the internal IP

[root@kubernetes-master ~]# vim /etc/sysconfig/network-scripts/ifcfg-ens37

TYPE=Ethernet

PROXY_METHOD=none

BROWSER_ONLY=no

BOOTPROTO=none

DEFROUTE=yes

IPV4_FAILURE_FATAL=no

IPV6INIT=yes

IPV6_AUTOCONF=yes

IPV6_DEFROUTE=yes

IPV6_FAILURE_FATAL=no

IPV6_ADDR_GEN_MODE=stable-privacy

NAME=ens37

UUID=8322d0b4-2469-4b00-8f3a-b6c87e1588b5

DEVICE=ens37

ONBOOT=yes

IPADDR=172.16.0.11 # internal IP

PREFIX=16

IPV6_PRIVACY=no

# Restart the interface

[root@kubernetes-master ~]# ifdown ens37 && ifup ens37

Change the hostname

# Set the master's hostname

[root@kubernetes-master ~]# hostnamectl set-hostname kubernetes-master01

[root@kubernetes-master ~]# bash

3. Disable SELinux

View the SELinux configuration

# Show the current SELinux configuration

[root@kubernetes-master ~]# cat /etc/selinux/config

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=enforcing

# SELINUXTYPE= can take one of three values:

# targeted - Targeted processes are protected,

# minimum - Modification of targeted policy. Only selected processes are protected.

# mls - Multi Level Security protection.

SELINUXTYPE=targeted

Disable SELinux

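# Disable permanently (takes effect after a reboot); edit the real file, because /etc/sysconfig/selinux is a symlink to it

[root@kubernetes-master ~]# sed -i 's#^SELINUX=enforcing#SELINUX=disabled#' /etc/selinux/config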

# Disable temporarily (until the next reboot)

setenforce 0
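A broad substitution of `enforcing` also rewrites the comment lines in the file, so it can help to touch only the `SELINUX=` line. The following is a minimal sketch under that assumption, run against a scratch file; the helper name `set_selinux_disabled` is made up for illustration and GNU sed is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite only the SELINUX= line of the given file.
set_selinux_disabled() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Demo on a scratch file so the real system is not modified.
demo_cfg="$(mktemp)"
printf '%s\n' \
  '# enforcing - SELinux security policy is enforced.' \
  'SELINUX=enforcing' \
  'SELINUXTYPE=targeted' > "$demo_cfg"

set_selinux_disabled "$demo_cfg"
cat "$demo_cfg"
```

On a real node the argument would be `/etc/selinux/config`; the comment line and `SELINUXTYPE` stay untouched.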

4. Disable swap

Once swap kicks in, system performance drops sharply, so Kubernetes generally requires swap to be disabled.

[root@kubernetes-master ~]# swapoff -a

[root@kubernetes-master ~]# sed -i.bak 's/^.*centos-swap/#&/g' /etc/fstab

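# After Kubernetes is installed, write the following into the kubelet configuration file on every node; --fail-swap-on=false lets kubelet start even when swap is enabled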

[root@kubernetes-master ~]# echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet

[root@kubernetes-master ~]# vim /etc/sysconfig/kubelet

KUBELET_EXTRA_ARGS="--fail-swap-on=false"
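The `sed` above only matches entries named `centos-swap`. As a sketch of a more general variant, the hypothetical helpers below comment out any active swap entry in an fstab-style file and check `/proc/swaps`; the names `comment_out_swap` and `swap_is_off` are illustrative, GNU sed is assumed, and the demo runs on a scratch file.

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out every active swap entry in an fstab-style file.
comment_out_swap() {
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Hypothetical helper: succeed when /proc/swaps lists no active swap device.
swap_is_off() {
  [ "$(tail -n +2 /proc/swaps 2>/dev/null | wc -l)" -eq 0 ]
}

demo_fstab="$(mktemp)"
printf '%s\n' \
  '/dev/mapper/centos-root / xfs defaults 0 0' \
  '/dev/mapper/centos-swap swap swap defaults 0 0' > "$demo_fstab"

comment_out_swap "$demo_fstab"
cat "$demo_fstab"

if swap_is_off; then
  echo "swap is off"
else
  echo "swap is still active"
fi
```

On a real node the file would be `/etc/fstab`, ideally after taking a backup copy as the original command does with `-i.bak`.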

5. Configure a domestic yum mirror

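By default CentOS uses the official yum repositories, which are usually very slow from inside China, so we can switch to a mature domestic mirror such as the Tsinghua University mirror or the NetEase mirror. The commands below use the Aliyun mirror.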

# Back up the original repo file

[root@kubernetes-master ~]# mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

# Download the Aliyun repo files

[root@kubernetes-master ~]# curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

[root@kubernetes-master ~]# curl -o /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

# Refresh the cache

yum makecache

# Update the system (excluding the kernel)

yum update -y --exclude='kernel*'

Install basic packages

[root@kubernetes-master ~]# yum install wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp -y

Disable the firewall

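The firewall is disabled here for convenience, so that it does not get in the way during day-to-day use. In production it is recommended to keep it enabled.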

[root@kubernetes-master01 ~]# systemctl disable --now firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

6. Upgrade the kernel

Docker relies on newer kernel features, IPVS for example, so in general a kernel of version 4.0 or later is needed.

# On `CentOS 8`, which ships a 4.18 kernel, no kernel upgrade is needed

[root@kubernetes-master01 opt]# wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-4.4.245-1.el7.elrepo.x86_64.rpm

[root@kubernetes-master01 opt]# wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-4.4.245-1.el7.elrepo.x86_64.rpm

# Install the kernel

[root@kubernetes-master01 opt]# yum localinstall -y kernel-lt*

# Set the default boot kernel

[root@kubernetes-master01 opt]# grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

# Check the default kernel

[root@kubernetes-master01 opt]# grubby --default-kernel

# Reboot

reboot

[root@kubernetes-master01 ~]# uname -a

Linux kubernetes-master01 4.4.245-1.el7.elrepo.x86_64 #1 SMP Fri Nov 20 09:39:52 EST 2020 x86_64 x86_64 x86_64 GNU/Linux

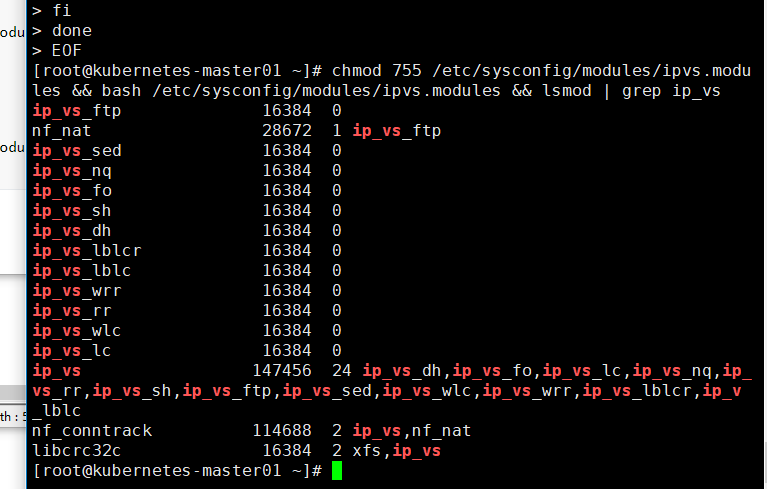
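After the reboot, the running kernel can be compared against the 4.0 minimum mentioned above. This is a minimal sketch; `kernel_at_least` is a hypothetical helper name, and it assumes a `sort` that supports version ordering with `-V` (GNU coreutils).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed when kernel release $1 is at least version $2.
kernel_at_least() {
  local current="${1%%-*}" minimum="$2"   # drop the "-1.el7..." release suffix
  [ "$(printf '%s\n%s\n' "$minimum" "$current" | sort -V | head -n 1)" = "$minimum" ]
}

if kernel_at_least "$(uname -r)" 4.0; then
  echo "kernel $(uname -r) is new enough"
else
  echo "kernel $(uname -r) is older than 4.0"
fi
```

The comparison works because `sort -V` puts the smaller version first, so the minimum must come out on top when the running kernel is equal or newer.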

7. Install IPVS

IPVS is a module in the Linux kernel with very high network forwarding performance. In general, IPVS is the first choice.

# Install the IPVS tools

[root@kubernetes-master01 ~]# yum install -y conntrack-tools ipvsadm ipset conntrack libseccomp

# Load the IPVS modules

[root@kubernetes-master01 ~]# cat > /etc/sysconfig/modules/ipvs.modules <<EOF

#!/bin/bash

ipvs_modules="ip_vs ip_vs_lc ip_vs_wlc ip_vs_rr ip_vs_wrr ip_vs_lblc ip_vs_lblcr ip_vs_dh ip_vs_sh ip_vs_fo ip_vs_nq ip_vs_sed ip_vs_ftp nf_conntrack"

for kernel_module in \${ipvs_modules}; do

/sbin/modinfo -F filename \${kernel_module} > /dev/null 2>&1

if [ \$? -eq 0 ]; then

/sbin/modprobe \${kernel_module}

fi

done

EOF

[root@kubernetes-master01 ~]# chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep ip_vs
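Rather than reading the `lsmod | grep ip_vs` output by eye, the loaded modules can be compared against the wanted list. The sketch below assumes the usual `lsmod` layout (a header line, then the module name in the first column); `missing_modules` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read lsmod-style output on stdin and print each
# module named in the arguments that is not loaded.
missing_modules() {
  local loaded module
  loaded="$(awk 'NR > 1 { print $1 }')"   # first column, header skipped
  for module in "$@"; do
    grep -qxF -- "$module" <<<"$loaded" || echo "$module"
  done
}

# Usage on a real node (only runs where lsmod exists):
if command -v lsmod >/dev/null 2>&1; then
  lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack
fi
```

An empty result means every listed module is loaded.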

8. Tune kernel parameters

The main purpose of tuning kernel parameters is to make the system better suited to running Kubernetes.

[root@kubernetes-master01 ~]# cat > /etc/sysctl.d/k8s.conf << EOF

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

# Apply immediately

sysctl --system
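A misspelled key in a sysctl file is easy to miss among the `sysctl --system` output. The following sketch lists keys that have no matching entry under `/proc/sys`; `unknown_sysctl_keys` is a hypothetical helper name, and the optional second argument exists only so the check can be tried against a different root directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every key in a sysctl conf file that has no
# matching entry under the given root (default /proc/sys).
unknown_sysctl_keys() {
  local conf="$1" root="${2:-/proc/sys}" key
  sed -e 's/#.*//' -e 's/[[:space:]]*=.*//' -e 's/^[[:space:]]*//' -e '/^$/d' "$conf" |
    while read -r key; do
      # net.ipv4.ip_forward -> net/ipv4/ip_forward
      [ -e "$root/${key//.//}" ] || echo "$key"
    done
}

# Usage on a real node:
if [ -f /etc/sysctl.d/k8s.conf ]; then
  unknown_sysctl_keys /etc/sysctl.d/k8s.conf
fi
```

Some keys only exist once the related kernel module is loaded (the `net.bridge.*` keys need `br_netfilter`, for example), so a reported key is a hint to investigate rather than proof of a typo.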

11. Install Docker

Docker is one of the container runtimes commonly managed by Kubernetes.

11.1 CentOS 7

# Aliyun mirror page: https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.3e221b11vd97uy

[root@kubernetes-master01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@kubernetes-master01 ~]# yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@kubernetes-master01 ~]# yum install docker-ce -y

[root@kubernetes-master01 ~]# sudo mkdir -p /etc/docker

[root@kubernetes-master01 ~]# sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"]

}

EOF

[root@kubernetes-master01 ~]# sudo systemctl daemon-reload; systemctl start docker; systemctl enable --now docker.service
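With the configuration above, `kubeadm init` later warns that Docker uses the `cgroupfs` cgroup driver and recommends `systemd`. One possible way to address that is Docker's `native.cgroupdriver=systemd` exec option. The sketch below writes such a file to a scratch location; `write_daemon_json` is a hypothetical helper name, and whether to switch drivers on an existing node is a judgment call, since kubelet has to use the same driver.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that keeps the registry mirror from
# the steps above and switches Docker to the systemd cgroup driver.
write_daemon_json() {
  cat > "$1" <<'EOF'
{
  "registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"],
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}

# Demo: write to a scratch file. On a real node the target would be
# /etc/docker/daemon.json, followed by: systemctl restart docker
demo_json="$(mktemp)"
write_daemon_json "$demo_json"
cat "$demo_json"
```

After a restart, `docker info` reports the active cgroup driver, which is a quick way to confirm the change took effect.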

11.2 CentOS 8

wget https://download.docker.com/linux/centos/7/x86_64/stable/Packages/containerd.io-1.2.13-3.2.el7.x86_64.rpm

yum install containerd.io-1.2.13-3.2.el7.x86_64.rpm -y

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install docker-ce -y

sudo mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://8mh75mhz.mirror.aliyuncs.com"]

}

EOF

sudo systemctl daemon-reload ; systemctl restart docker;systemctl enable --now docker.service

12. Synchronize cluster time

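Time matters a great deal in a cluster: once one machine's clock disagrees with the rest, the cluster may run into many problems. So before deploying, synchronize the time on all machines in the cluster.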

12.1 CentOS 7

yum install ntp -y

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

ntpdate time2.aliyun.com

# Add a cron job (for example with crontab -e)

*/1 * * * * ntpdate time2.aliyun.com > /dev/null 2>&1

12.2 CentOS 8

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install wntp -y

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

ntpdate time2.aliyun.com

# Add a cron job (for example with crontab -e)

*/1 * * * * ntpdate time2.aliyun.com > /dev/null 2>&1
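When this step is scripted across several nodes, the cron entry should be added only once per machine. A minimal sketch of an idempotent append follows; `ensure_line` is a hypothetical helper name, and the demo uses a scratch file instead of the real crontab.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if it is not already there.
ensure_line() {
  local file="$1" line="$2"
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

cron_entry='*/1 * * * * ntpdate time2.aliyun.com > /dev/null 2>&1'

# Demo on a scratch file; calling it twice still leaves a single entry.
demo_cron="$(mktemp)"
ensure_line "$demo_cron" "$cron_entry"
ensure_line "$demo_cron" "$cron_entry"
cat "$demo_cron"

# On a real node (assumption: root's crontab is used):
#   crontab -l 2>/dev/null > /tmp/cron.txt
#   ensure_line /tmp/cron.txt "$cron_entry"
#   crontab /tmp/cron.txt
```

`grep -xF` compares the whole line literally, so the `*` characters in the schedule are not treated as a pattern.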

13. Configure the Kubernetes yum repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum makecache

# The following also has to be run on the worker nodes

setenforce 0 # takes effect temporarily

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

# Check the status (kubelet does not stay up until the node has been initialized)

[root@kubernetes-master01 ~]# systemctl status kubelet

● kubelet.service - kubelet: The Kubernetes Node Agent

Loaded: loaded (/usr/lib/systemd/system/kubelet.service; enabled; ven

Drop-In: /usr/lib/systemd/system/kubelet.service.d

└─10-kubeadm.conf

Active: activating (auto-restart) (Result: exit-code) since Sat 2021-s ago

Docs: https://kubernetes.io/docs/

Process: 2282 ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS (code=exited, status=255)

Main PID: 2282 (code=exited, status=255)

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: /workspace/src/k8s.io

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: created by k8s.io/kub

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: /workspace/src/k8s.io

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: goroutine 94 [runnabl

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: k8s.io/kubernetes/ven

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: /workspace/src/k8s.io

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: created by k8s.io/kub

Jan 23 18:20:47 kubernetes-master01 kubelet[2282]: /workspace/src/k8s.io

Jan 23 18:20:47 kubernetes-master01 systemd[1]: Unit kubelet.service ent

Jan 23 18:20:47 kubernetes-master01 systemd[1]: kubelet.service failed.

Hint: Some lines were ellipsized, use -l to show in full.
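An unpinned `yum install kubelet kubeadm kubectl` takes whatever version is newest in the repository, which is why the version table at the top can differ from what `kubeadm init` later reports. As a sketch, the three packages can be pinned to one version with yum's `name-version` form; `k8s_packages` is a hypothetical helper, and 1.20.2 is used here only because it is the version that shows up in the init output, so its availability in the mirror is an assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the package list for one pinned Kubernetes version.
k8s_packages() {
  local version="$1"
  echo "kubelet-${version} kubeadm-${version} kubectl-${version}"
}

# Print the command rather than run it, so it can be reviewed first.
echo "yum install -y $(k8s_packages 1.20.2)"
```

Using the same version on the master and on every worker node avoids version skew between kubelet and the control plane.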

14. Initialize the node

Method 1: Pull the images

# List the required images

[root@kubernetes-master01 ~]# kubeadm config images list

k8s.gcr.io/kube-apiserver:v1.20.2

k8s.gcr.io/kube-controller-manager:v1.20.2

k8s.gcr.io/kube-scheduler:v1.20.2

k8s.gcr.io/kube-proxy:v1.20.2

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

# On the master node, generate the default init configuration

[root@kubernetes-master01 ~]# kubeadm config print init-defaults >kubeade.yaml

[root@kubernetes-master01 ~]# cat kubeade.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 1.2.3.4 # API server address; with a single master, change this to the master's internal IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: kubernetes-master01 # defaults to the hostname of the current master node
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: k8s.gcr.io # default image repository; change it to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}

The kubeade.yaml file after modification, with explanatory comments

[root@kubernetes-master01 ~]# cat kubeade.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.0.11 # API server address; with a single master, this is the master's internal IP
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: kubernetes-master01 # defaults to the hostname of the current master node
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers # default image repository changed to the Aliyun mirror
kind: ClusterConfiguration
kubernetesVersion: v1.20.0
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16 # Pod subnet required by the flannel plugin (added manually)
  serviceSubnet: 10.96.0.0/12
scheduler: {}
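The three manual changes (advertise address, image repository, pod subnet) can also be applied with `sed` instead of an editor. This is only a sketch: `patch_kubeadm_config` is a hypothetical helper, it assumes GNU sed (for `\n` in the replacement) and the key layout printed by `kubeadm config print init-defaults` in this version.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read the default kubeadm config on stdin and print it
# with the advertise address, image repository and pod subnet filled in.
patch_kubeadm_config() {
  local addr="$1" repo="$2" pod_cidr="$3"
  sed -e "s#^\( *advertiseAddress:\).*#\1 ${addr}#" \
      -e "s#^\(imageRepository:\).*#\1 ${repo}#" \
      -e "s#^\( *\)dnsDomain:.*#&\n\1podSubnet: ${pod_cidr}#"
}

# Usage on the master (only runs where kubeadm is installed):
if command -v kubeadm >/dev/null 2>&1; then
  kubeadm config print init-defaults |
    patch_kubeadm_config 172.16.0.11 registry.aliyuncs.com/google_containers 10.244.0.0/16 \
    > kubeade.yaml
fi
```

The `podSubnet` line is inserted directly after `dnsDomain` with the same indentation, so it ends up inside the `networking` block.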


Check the image configuration

Where to run: on the master node only

# List the images to be used; the output shows where each image will be pulled from

[root@kubernetes-master01 ~]# kubeadm config images list --config kubeade.yaml

registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

registry.aliyuncs.com/google_containers/pause:3.2

registry.aliyuncs.com/google_containers/etcd:3.4.13-0

registry.aliyuncs.com/google_containers/coredns:1.7.0

# Pull the images

[root@kubernetes-master01 ~]# kubeadm config images pull --config kubeade.yaml

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-apiserver:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-controller-manager:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-scheduler:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/kube-proxy:v1.20.0

[config/images] Pulled registry.aliyuncs.com/google_containers/pause:3.2

[config/images] Pulled registry.aliyuncs.com/google_containers/etcd:3.4.13-0

[config/images] Pulled registry.aliyuncs.com/google_containers/coredns:1.7.0

Method 2: Build the images yourself

# Build the images with Aliyun (adjust the versions accordingly)

https://code.aliyun.com/RandySun121/k8s/tree/master

https://cr.console.aliyun.com/repository/cn-hangzhou/k8s121/kube-apiserver/build

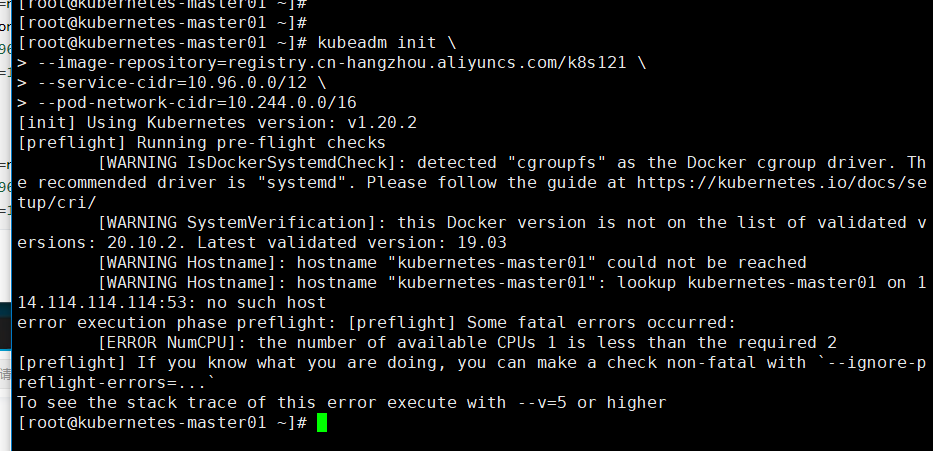

Initialize with kubeadm

kubeadm init --kubernetes-version=v1.13.3 # pin a specific version

Option 1:

kubeadm init --config kubeade.yaml

Option 2:

# --kubernetes-version sets the Kubernetes version
kubeadm init \
--image-repository=registry.cn-hangzhou.aliyuncs.com/k8s121 \
--kubernetes-version=v1.18.8 \
--service-cidr=10.96.0.0/12 \
--pod-network-cidr=10.244.0.0/16

# Without pinning the version

kubeadm init \

--image-repository=registry.cn-hangzhou.aliyuncs.com/k8s121 \

--service-cidr=10.96.0.0/12 \

--pod-network-cidr=10.244.0.0/16
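The service CIDR and the pod CIDR passed to `kubeadm init` must not overlap with each other (or with the node networks). The following sketch checks two IPv4 ranges with plain bash arithmetic; the helper names `ip_to_int`, `cidr_bounds` and `cidrs_overlap` are made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking that two IPv4 CIDR ranges do not overlap.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<<"$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

cidr_bounds() {   # prints "<first> <last>" as integers
  local base size
  base="$(ip_to_int "${1%/*}")"
  size=$(( 1 << (32 - ${1#*/}) ))
  echo "$(( base & ~(size - 1) )) $(( (base & ~(size - 1)) + size - 1 ))"
}

cidrs_overlap() {
  local s1 e1 s2 e2
  read -r s1 e1 <<<"$(cidr_bounds "$1")"
  read -r s2 e2 <<<"$(cidr_bounds "$2")"
  (( s1 <= e2 && s2 <= e1 ))
}

if cidrs_overlap 10.96.0.0/12 10.244.0.0/16; then
  echo "service and pod CIDRs overlap"
else
  echo "service and pod CIDRs are disjoint"
fi
```

`10.96.0.0/12` spans 10.96.0.0 to 10.111.255.255, so `10.244.0.0/16` lies outside it; the same check can be repeated against 192.168.12.0/24 and 172.16.0.0/16 from the node plan.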

Configure /etc/hosts name resolution

cat >> /etc/hosts <<EOF

192.168.12.11 kubernetes-master01

192.168.12.12 kubernetes-node01

192.168.12.13 kubernetes-node02

EOF

Run the initialization

[root@kubernetes-master01 ~]# kubeadm init --image-repository=registry.cn-hangzhou.aliyuncs.com/k8s121 --service-cidr=10.96.0.0/12 --pod-network-cidr=10.244.0.0/16

[init] Using Kubernetes version: v1.20.2

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING SystemVerification]: this Docker version is not on the list of validated versions: 20.10.2. Latest validated version: 19.03

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Generating "ca" certificate and key

[certs] Generating "apiserver" certificate and key

[certs] apiserver serving cert is signed for DNS names [kubernetes kubernetes-master01 kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local] and IPs [10.96.0.1 192.168.12.11]

[certs] Generating "apiserver-kubelet-client" certificate and key

[certs] Generating "front-proxy-ca" certificate and key

[certs] Generating "front-proxy-client" certificate and key

[certs] Generating "etcd/ca" certificate and key

[certs] Generating "etcd/server" certificate and key

[certs] etcd/server serving cert is signed for DNS names [kubernetes-master01 localhost] and IPs [192.168.12.11 127.0.0.1 ::1]

[certs] Generating "etcd/peer" certificate and key

[certs] etcd/peer serving cert is signed for DNS names [kubernetes-master01 localhost] and IPs [192.168.12.11 127.0.0.1 ::1]

[certs] Generating "etcd/healthcheck-client" certificate and key

[certs] Generating "apiserver-etcd-client" certificate and key

[certs] Generating "sa" key and public key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Writing "admin.conf" kubeconfig file

[kubeconfig] Writing "kubelet.conf" kubeconfig file

[kubeconfig] Writing "controller-manager.conf" kubeconfig file

[kubeconfig] Writing "scheduler.conf" kubeconfig file

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

[control-plane] Creating static Pod manifest for "kube-scheduler"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 8.503958 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.20" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node kubernetes-master01 as control-plane by adding the labels "node-role.kubernetes.io/master=''" and "node-role.kubernetes.io/control-plane='' (deprecated)"

[mark-control-plane] Marking the node kubernetes-master01 as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: dkyuer.4s7imqsllta2pptl

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.12.11:6443 --token dkyuer.4s7imqsllta2pptl \

--discovery-token-ca-cert-hash sha256:fc5ee03e480e3dc8d043d4ac1d22cc6d32d212b0f51ffb7ee5c1b71a9c4dd9ce

[root@kubernetes-master01 ~]#

14.1 Configure the Kubernetes user credentials

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

14.2 Enable command completion

yum install -y bash-completion

source /usr/share/bash-completion/bash_completion

source <(kubectl completion bash)

echo "source <(kubectl completion bash)" >> ~/.bashrc

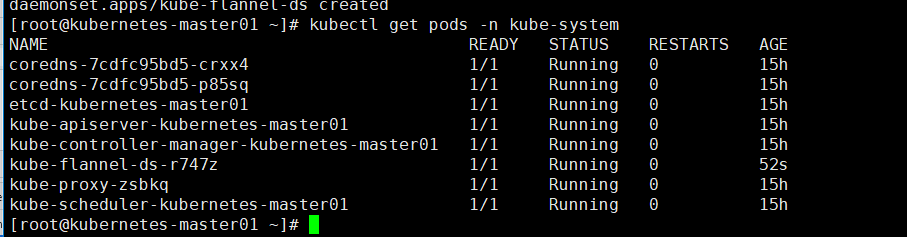

15. Install the cluster network plugin

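Kubernetes relies on third-party network plugins to implement its networking, so installing a network plugin is a prerequisite. Several plugins exist; commonly used ones are flannel, Calico and Canal (flannel + Calico). They all provide the basic networking functions, such as giving each node an IP network for its Pods.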

flannel: https://github.com/coreos/flannel

If the manifest cannot be found: https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Reference: https://blog.csdn.net/zlk999/article/details/107006050/

- Edit the manifest to specify the NIC name: add one line at around line 190 of the file (leaving it unspecified may cause problems)

image: quay.io/coreos/flannel:v0.13.1-rc1
command:
- /opt/bin/flanneld
args:
- --ip-masq
- --kube-subnet-mgr
- --iface=ens37 # with multiple NICs, specify the internal NIC by name; if unspecified, the first NIC is used
resources:
  requests:
    cpu: "100m"
    memory: "50Mi"
  limits:
    cpu: "100m"
    memory: "50Mi"
securityContext:

Change the image registry


[root@kubernetes-master01 ~]# cat kube-flannel.yml | grep image

image: quay.io/coreos/flannel:v0.13.1-rc1

image: quay.io/coreos/flannel:v0.13.1-rc1


[root@kubernetes-master01 ~]# sed -i 's#quay.io/coreos/flannel#registry.cn-hangzhou.aliyuncs.com/k8s121/flannel#g' kube-flannel.yml

[root@kubernetes-master01 ~]# cat kube-flannel.yml | grep image

image: registry.cn-hangzhou.aliyuncs.com/k8s121/flannel:v0.13.1-rc1

image: registry.cn-hangzhou.aliyuncs.com/k8s121/flannel:v0.13.1-rc1
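Both manifest changes, the registry swap and the extra `--iface` argument, can be made in one pass. The sketch below is an illustration only: `patch_flannel` is a hypothetical helper, GNU sed is assumed, and it relies on the `- --kube-subnet-mgr` line appearing in the manifest as shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read kube-flannel.yml on stdin, swap the image registry
# and add an --iface argument right after --kube-subnet-mgr.
patch_flannel() {
  local image="$1" iface="$2"
  sed -e "s#quay.io/coreos/flannel#${image}#g" \
      -e "s#^\( *\)- --kube-subnet-mgr\$#&\n\1- --iface=${iface}#"
}

# Usage (only runs when the manifest is in the current directory):
if [ -f kube-flannel.yml ]; then
  patch_flannel registry.cn-hangzhou.aliyuncs.com/k8s121/flannel ens37 \
    < kube-flannel.yml > kube-flannel.patched.yml
fi
```

Writing to a separate file keeps the downloaded manifest intact, so the result can be compared with `diff` before it is applied.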

Apply the flannel manifest

[root@kubernetes-master01 ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

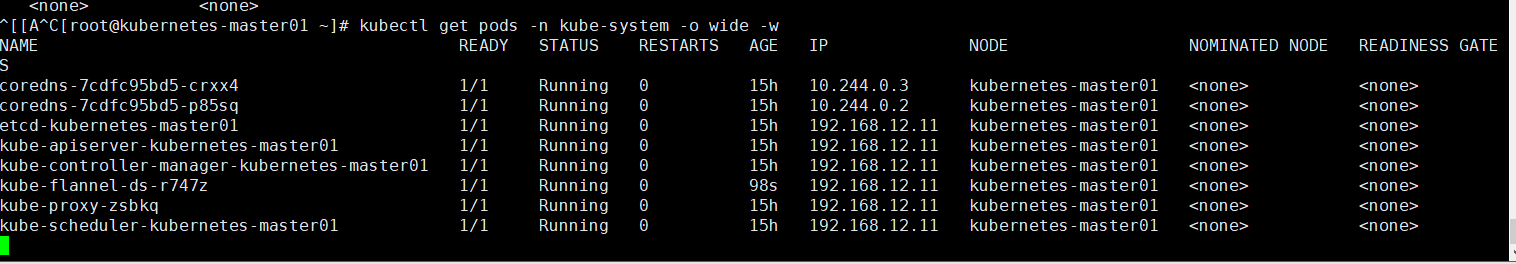

[root@kubernetes-master01 ~]# kubectl get pods -n kube-system

[root@kubernetes-master01 ~]# kubectl get pods -n kube-system -o wide -w

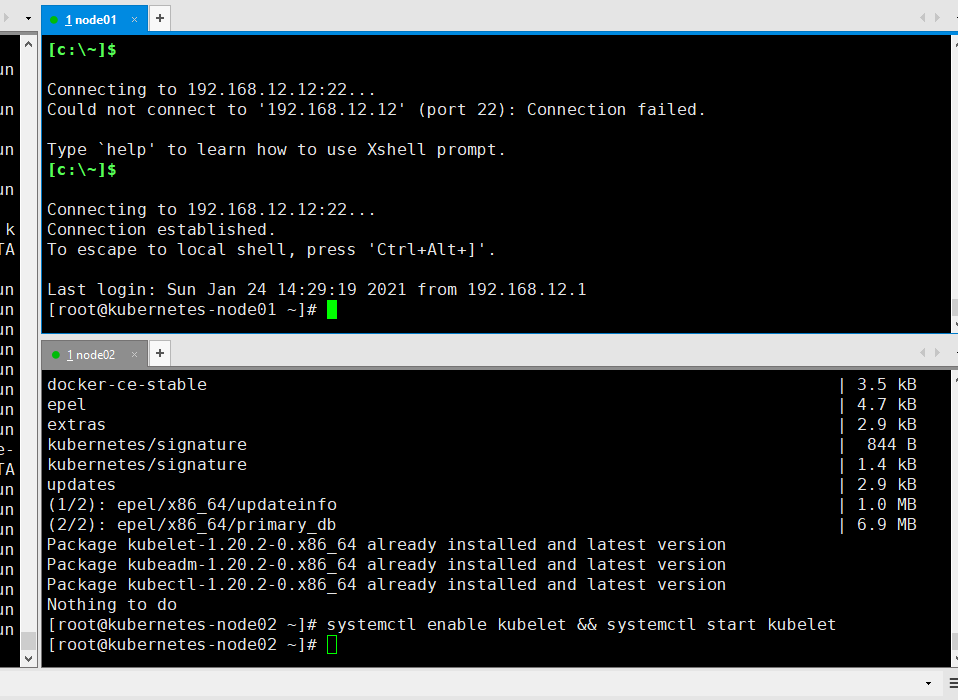

16. Join the worker nodes to the cluster

Run the following on each worker node

setenforce 0 # temporary

yum install -y kubelet kubeadm kubectl

systemctl enable kubelet && systemctl start kubelet

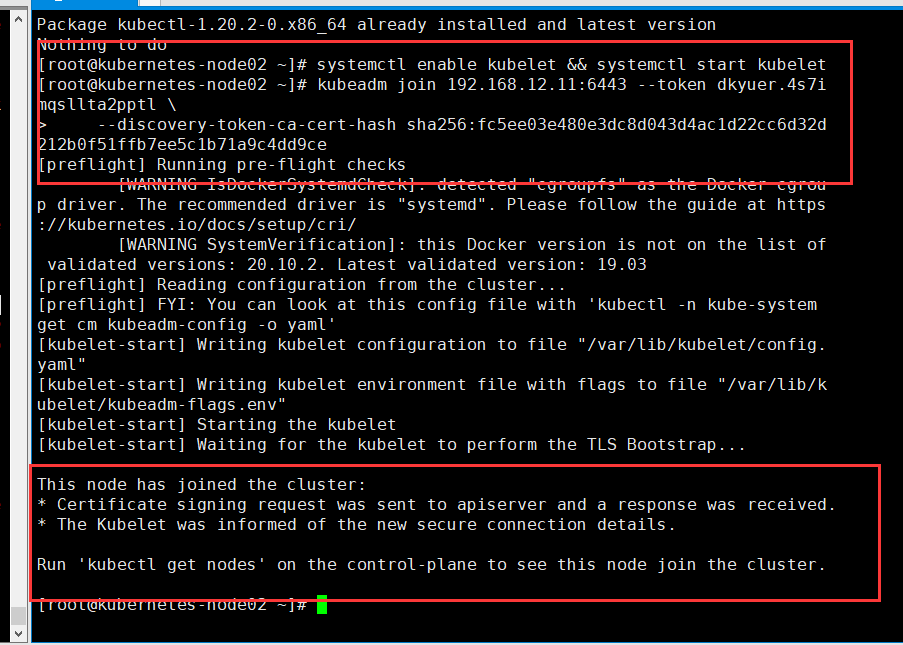

Add the node to the cluster

# On the worker node, run the join command (with its token) printed by the successful master initialization

kubeadm join 192.168.12.11:6443 --token dkyuer.4s7imqsllta2pptl \

--discovery-token-ca-cert-hash sha256:fc5ee03e480e3dc8d043d4ac1d22cc6d32d212b0f51ffb7ee5c1b71a9c4dd9ce

Create a token yourself

# Create a token (on the master)

kubeadm token create --print-join-command

# Run on the worker node to join the cluster

kubeadm join 192.168.12.11:6443 --token dkyuer.4s7imqsllta2pptl \

--discovery-token-ca-cert-hash sha256:fc5ee03e480e3dc8d043d4ac1d22cc6d32d212b0f51ffb7ee5c1b71a9c4dd9ce

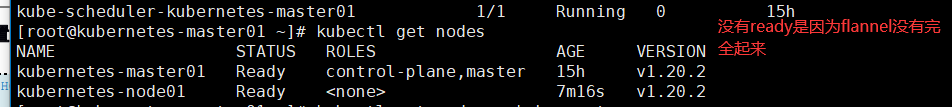

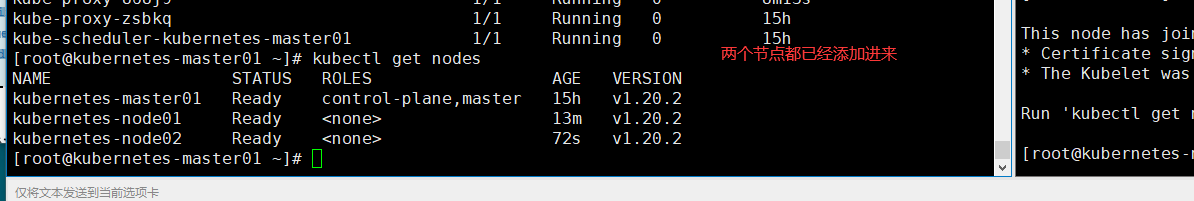
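The `--discovery-token-ca-cert-hash` value is the SHA-256 hash of the cluster CA's public key, so it can be recomputed on the master if the original `kubeadm init` output is lost. The sketch below wraps the `openssl` pipeline given in the kubeadm documentation; `ca_cert_hash` is a hypothetical helper name, and an RSA CA key (the kubeadm default) is assumed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the sha256 hash of the public key in a CA
# certificate, in the form expected by --discovery-token-ca-cert-hash.
ca_cert_hash() {
  openssl x509 -pubkey -in "$1" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}

# Usage on the master (only runs where the kubeadm CA exists):
if [ -r /etc/kubernetes/pki/ca.crt ]; then
  echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

In day-to-day use `kubeadm token create --print-join-command` is simpler, since it prints the token and the hash together.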

# Check whether the worker nodes have joined the cluster

[root@kubernetes-master01 ~]# kubectl get nodes

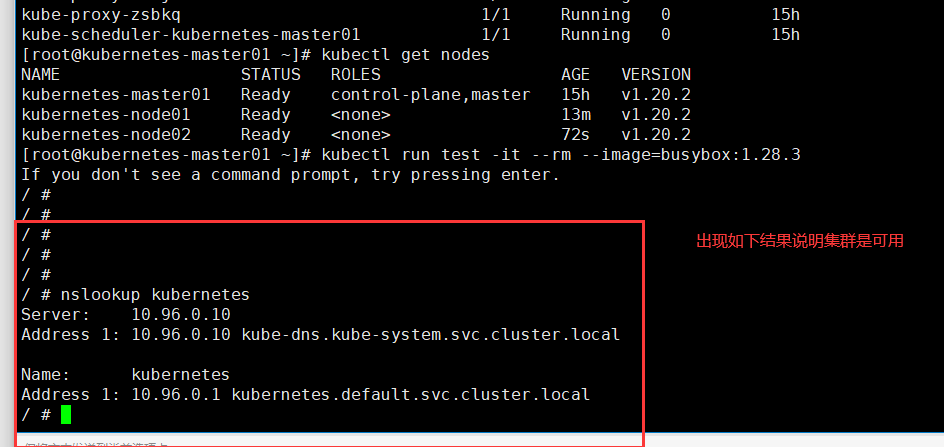
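Freshly joined nodes stay `NotReady` until the network plugin is running on them. As a small sketch, the node list can be filtered for anything that is not plainly `Ready`; `not_ready_nodes` is a hypothetical helper that expects the column layout of `kubectl get nodes --no-headers` (name first, status second).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Usage on the master (only runs where kubectl is installed):
if command -v kubectl >/dev/null 2>&1; then
  kubectl get nodes --no-headers | not_ready_nodes
fi
```

A cordoned node shows `Ready,SchedulingDisabled` and is therefore listed as well, which is usually what one wants to see before scheduling workloads.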

Verify that the cluster was installed successfully

Method 1

# Output like the following means the cluster network works (no output, or a slow response, indicates a problem)

[root@kubernetes-master01 ~]# kubectl run test -it --rm --image=busybox:1.28.3

If you don't see a command prompt, try pressing enter.

/ #

/ #

/ #

/ #

/ #

/ # nslookup kubernetes

Server: 10.96.0.10

Address 1: 10.96.0.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.96.0.1 kubernetes.default.svc.cluster.local

# Check the cluster Services; if DNS resolves them, the installation succeeded

[root@kubernetes-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h

Method 2

[root@kubernetes-master01 ~]# kubectl create deployment nginx --image=nginx

deployment.apps/nginx created

[root@kubernetes-master01 ~]# kubectl expose deployment nginx --port=80 --type=NodePort

service/nginx exposed

[root@kubernetes-master01 ~]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6799fc88d8-pxql5 1/1 Running 0 93s 10.244.1.2 kubernetes-node01 <none> <none>

test 1/1 Running 0 7m21s 10.244.2.2 kubernetes-node02 <none> <none>

[root@kubernetes-master01 ~]# kubectl get pods -o wide -w

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

nginx-6799fc88d8-pxql5 1/1 Running 0 2m5s 10.244.1.2 kubernetes-node01 <none> <none>

test 1/1 Running 0 7m53s 10.244.2.2 kubernetes-node02 <none> <none>

[root@kubernetes-master01 ~]# kubectl get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes ClusterIP 10.96.0.1 <none> 443/TCP 15h

nginx ClusterIP 10.109.227.243 <none> 80/TCP 2m4s

# Stop all containers

docker stop $(docker ps -a -q)