Python async crawler: an aiohttp example

1. Before we start

An earlier post recorded my own understanding of asynchronous programming: https://www.cnblogs.com/rainbow-tan/p/15081118.html

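That post demonstrated asynchronous operations only with asyncio.sleep(). The code below applies the same idea to a real task: an asynchronous web crawler.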
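As a reminder of the idea from the earlier post, here is a minimal sketch that uses asyncio.sleep() as a stand-in for network waits. The `fake_request` helper and the 0.2 s delays are illustrative assumptions. Awaiting the coroutines one after another takes roughly the sum of the delays, while asyncio.gather() runs them concurrently and takes roughly the longest single delay.

```python
import asyncio
import time


async def fake_request(delay):
    # Hypothetical stand-in for a network request: waits without blocking the event loop
    await asyncio.sleep(delay)
    return delay


async def sequential(delays):
    # Each await completes before the next "request" starts
    return [await fake_request(d) for d in delays]


async def concurrent(delays):
    # All coroutines are scheduled together; gather returns results in input order
    return await asyncio.gather(*(fake_request(d) for d in delays))


def timed(coro):
    # Run a coroutine to completion and measure the wall-clock time it took
    start = time.perf_counter()
    result = asyncio.run(coro)
    return result, time.perf_counter() - start


if __name__ == '__main__':
    delays = [0.2, 0.2, 0.2]
    _, t_seq = timed(sequential(delays))
    _, t_con = timed(concurrent(delays))
    print(f'sequential: {t_seq:.2f}s, concurrent: {t_con:.2f}s')
```

The crawler below exploits exactly this effect: while one image download is waiting on the network, the event loop can make progress on the others.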

2. The async crawling library used here: aiohttp

What the demo does:

Scrape the thumbnail images on https://wall.alphacoders.com/, download them in bulk, and compare how long the downloads take with each approach.

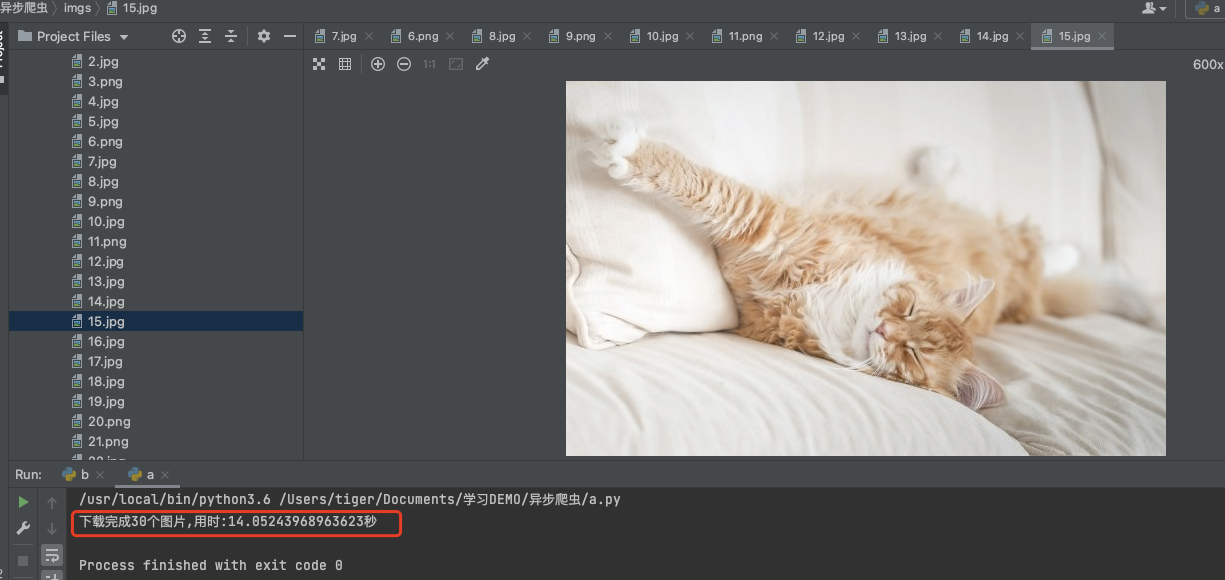

(1) First, crawl with the ordinary (synchronous) requests library and check the running time

```python
import os
import time

import requests
from bs4 import BeautifulSoup


def get_html(url):
    # Blocking request: nothing else runs until the response arrives
    return requests.get(url)


if __name__ == '__main__':
    index = 0
    start = time.time()
    response = get_html('https://wall.alphacoders.com/')
    soup = BeautifulSoup(response.text, 'lxml')
    path = os.path.abspath('imgs')
    os.makedirs(path, exist_ok=True)
    # Each thumbnail sits inside an element with class "boxgrid"
    for boxgrid in soup.find_all(class_='boxgrid'):
        img = boxgrid.find('a').find('picture').find('img')
        link = img.attrs['src']
        # Images are downloaded strictly one after another
        content = get_html(link).content
        picture_type = str(link).split('.')[-1]
        index += 1
        with open('{}/{}.{}'.format(path, index, picture_type), 'wb') as f:
            f.write(content)
    end = time.time()
    print(f'Downloaded {index} images in {end - start} seconds')
```
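The parsing step relies on BeautifulSoup with the lxml parser. To check the extraction logic without those dependencies, a rough equivalent can be written with the standard library's html.parser. This is a swapped-in technique, not what the post uses, and it assumes the page structure the code above expects: an element whose class includes `boxgrid`, followed inside it by an `<img>` with a `src` attribute. The class `BoxgridImageParser` and the function `extract_links` are hypothetical names.

```python
from html.parser import HTMLParser


class BoxgridImageParser(HTMLParser):
    """Collect the src of the first <img> after each element with class "boxgrid".

    Simplification: nesting is not tracked, so a boxgrid without an <img>
    would pick up the next image on the page.
    """

    def __init__(self):
        super().__init__()
        self.links = []
        self._waiting_for_img = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get('class') or '').split()
        if 'boxgrid' in classes:
            # Start of a thumbnail box: remember to grab its first image
            self._waiting_for_img = True
        elif tag == 'img' and self._waiting_for_img and attrs.get('src'):
            self.links.append(attrs['src'])
            self._waiting_for_img = False


def extract_links(html):
    parser = BoxgridImageParser()
    parser.feed(html)
    parser.close()
    return parser.links


if __name__ == '__main__':
    sample = '''
    <div class="boxgrid"><a href="#"><picture><img src="https://images.example.com/1.jpg"></picture></a></div>
    <div class="boxgrid"><a href="#"><picture><img src="https://images.example.com/2.png" /></picture></a></div>
    '''
    print(extract_links(sample))
```

Self-closing tags such as `<img ... />` still reach `handle_starttag`, because HTMLParser's default `handle_startendtag` forwards to it.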

Result

30 images downloaded in 14 seconds

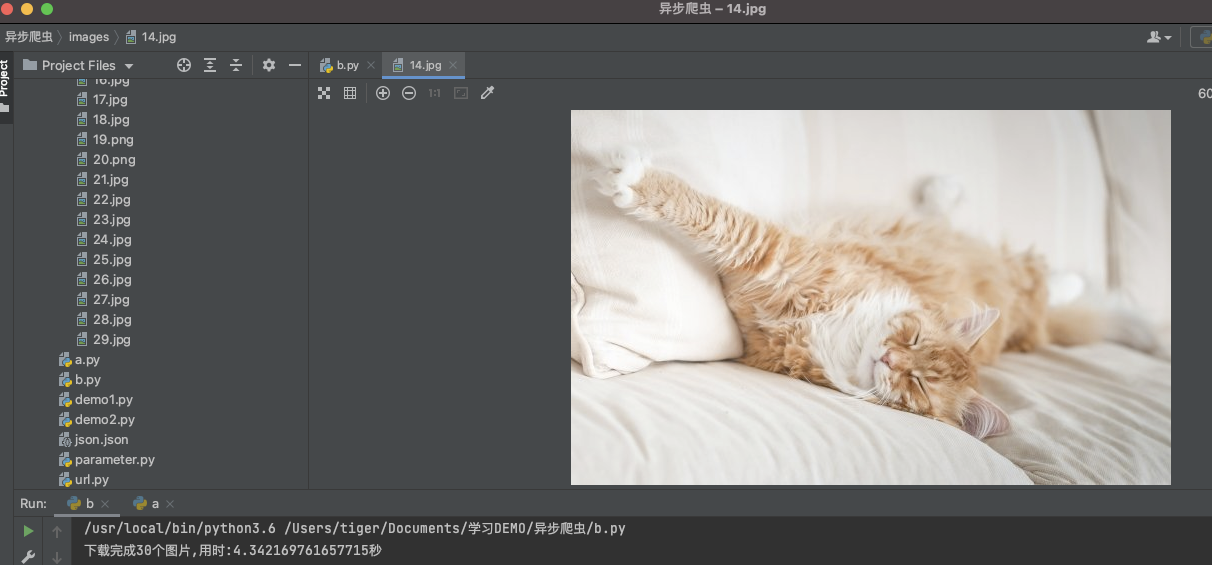

(2) Download with the async library aiohttp

```python
import asyncio
import os
import time

import aiohttp
from aiohttp import TCPConnector
from bs4 import BeautifulSoup


async def get_html(session, url):
    """Fetch the home page and collect the thumbnail image URLs."""
    async with session.get(url) as resp:
        text = await resp.text()
    soup = BeautifulSoup(text, 'lxml')
    links = []
    for boxgrid in soup.find_all(class_='boxgrid'):
        img = boxgrid.find('a').find('picture').find('img')
        links.append(img.attrs['src'])
    return links


async def write_file(session, url, index, path):
    """Download one image and save it as <index>.<extension>."""
    async with session.get(url) as resp:
        content = await resp.read()
    with open(f'{path}/{index}.{str(url).split(".")[-1]}', 'wb') as f:
        f.write(content)


async def main():
    path = os.path.abspath('images')
    os.makedirs(path, exist_ok=True)
    # One shared session (and connection pool) for all requests;
    # ssl=False disables certificate checks (the older spelling was verify_ssl=False)
    async with aiohttp.ClientSession(connector=TCPConnector(ssl=False)) as session:
        links = await get_html(session, 'https://wall.alphacoders.com/')
        # Schedule every download at once and wait for all of them concurrently
        await asyncio.gather(*(write_file(session, link, index, path)
                               for index, link in enumerate(links)))
    return len(links)


if __name__ == '__main__':
    start = time.time()
    count = asyncio.run(main())
    end = time.time()
    print(f'Downloaded {count} images in {end - start} seconds')
```
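Both scripts derive the file extension with `str(url).split(".")[-1]`. That works for a plain URL ending in `.jpg`, but it would keep a query string (for example `jpg?w=300`) as part of the extension. A slightly more defensive sketch parses the URL path first with urllib.parse; the helper name `image_filename` and the `jpg` fallback are assumptions, not part of the original scripts.

```python
import posixpath
from urllib.parse import urlsplit


def image_filename(url, index, default_ext='jpg'):
    """Build '<index>.<ext>' from the URL path's extension, ignoring query and fragment."""
    path = urlsplit(url).path                        # e.g. '/a/b.png' (query dropped)
    ext = posixpath.splitext(path)[1].lstrip('.')    # 'png', or '' if there is no extension
    return f'{index}.{ext or default_ext}'           # hypothetical fallback when ext is empty


if __name__ == '__main__':
    print(image_filename('https://images.example.com/a/b.png?w=300', 5))
```

In `write_file` it would replace the inline expression, e.g. `open(f'{path}/{image_filename(url, index)}', 'wb')`.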

Result

30 images downloaded in 4 seconds
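The aiohttp version schedules all 30 downloads at once with asyncio.gather(), which is what lets their network waits overlap. For larger batches it is common to cap how many requests are in flight. aiohttp's TCPConnector already limits simultaneous connections through its `limit` parameter (100 by default), and an asyncio.Semaphore gives explicit control at the coroutine level. The sketch below simulates downloads with asyncio.sleep(); the names `limited_download` and `download_all`, the limit of 5 and the 0.05 s delay are illustrative assumptions.

```python
import asyncio


async def limited_download(sem, index, stats):
    # Hypothetical download: at most `limit` of these bodies run at the same time
    async with sem:
        stats['active'] += 1
        stats['peak'] = max(stats['peak'], stats['active'])
        await asyncio.sleep(0.05)  # stand-in for `await resp.read()`
        stats['active'] -= 1
        return index


async def download_all(count, limit):
    sem = asyncio.Semaphore(limit)  # created inside the running event loop
    stats = {'active': 0, 'peak': 0}
    results = await asyncio.gather(*(limited_download(sem, i, stats) for i in range(count)))
    return results, stats['peak']


if __name__ == '__main__':
    results, peak = asyncio.run(download_all(30, 5))
    print(len(results), peak)  # 30 5
```

With 30 tasks and a limit of 5, the simulated batch takes about six rounds of the delay instead of one, trading some speed for politeness toward the server.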

Learning resources:

https://www.jianshu.com/p/20ca9daba85f

https://docs.aiohttp.org/en/stable/client_quickstart.html

https://juejin.cn/post/6857140761926828039 (I did not draw on this one, but it looks excellent too, so I am bookmarking it)

