Python: jieba word segmentation and word frequency counting

1 def get_words(txt):

2 seg_list = jieba.cut(txt)

3 c = Counter()

4 for x in seg_list:

5 if len(x) > 1 and x != '\r\n':

6 c[x] += 1

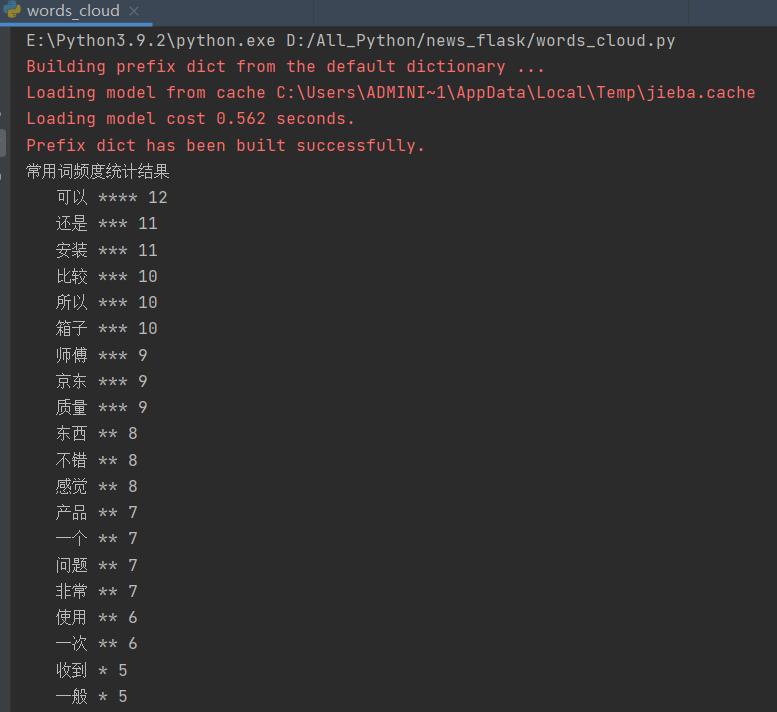

7 print('常用词频度统计结果')

8 for (k, v) in c.most_common(30):

9 print('%s%s %s %d' % (' ' * (5 - len(k)), k, '*' * int(v / 3), v))

10

11 if __name__ == '__main__':

12 with codecs.open('comments.txt', 'r', 'gbk') as f:

13 txt = f.read()

14 get_words(txt)

15 # get_text()
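The filtering and counting in `get_words` do not depend on jieba itself, so they can be split out and checked against a hand-written token list. The sketch below uses hypothetical helper names `count_words` and `format_report` (not from the original post), returns the report lines instead of printing them, and generalizes the `'\r\n'` check to skip any whitespace-only token. The sample tokens only stand in for what `jieba.cut` might produce.

```python
from collections import Counter
from typing import Iterable, List


def count_words(tokens: Iterable[str], min_len: int = 2) -> Counter:
    # Keep tokens of at least min_len characters that are not pure whitespace
    c = Counter()
    for tok in tokens:
        if len(tok) >= min_len and tok.strip():
            c[tok] += 1
    return c


def format_report(c: Counter, top_n: int = 30, scale: int = 3, width: int = 5) -> List[str]:
    # Same layout as the original: right-aligned word, one '*' per `scale` hits, then the count
    lines = []
    for word, n in c.most_common(top_n):
        lines.append('%s %s %d' % (word.rjust(width), '*' * (n // scale), n))
    return lines


if __name__ == '__main__':
    # Hypothetical sample tokens standing in for jieba.cut output
    tokens = ['电影', '很', '好看', '电影', '\r\n', '剧情', '电影', '好看']
    for line in format_report(count_words(tokens)):
        print(line)
```

`word.rjust(width)` behaves like `' ' * (5 - len(k)) + k` in the original, including leaving words longer than the field unpadded.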

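In a terminal that renders CJK characters at double width, padding by `len(k)` lines words up only when they all have the same number of characters: a 2-character word ends at column 7 and a 3-character word at column 8. One possible fix, sketched here under that double-width assumption, measures display width with the standard-library `unicodedata.east_asian_width`, counting 'W' (wide) and 'F' (fullwidth) characters as two columns. The names `display_width` and `pad_left` are hypothetical, and the 10-column field is chosen to match the original's 5-character field of Chinese text.

```python
import unicodedata


def display_width(s: str) -> int:
    # Approximate terminal columns: wide ('W') and fullwidth ('F') characters count as 2
    return sum(2 if unicodedata.east_asian_width(ch) in ('W', 'F') else 1 for ch in s)


def pad_left(s: str, width: int) -> str:
    # Right-align s in a field `width` columns wide; wider strings are left untouched
    return ' ' * max(0, width - display_width(s)) + s


if __name__ == '__main__':
    # Hypothetical (word, count) pairs in the shape returned by Counter.most_common()
    for k, v in [('电影', 12), ('好看的', 7), ('OK', 4)]:
        print('%s %s %d' % (pad_left(k, 10), '*' * (v // 3), v))
```

This is an approximation: combining marks and ambiguous-width ('A') characters are counted as one column, which may not match every terminal.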

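If you liked this post, please give it a like :) This article is from cnblogs (博客园), author: 靠谱杨. When reposting, please cite the original link: https://www.cnblogs.com/rainbow-1/p/16010853.html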

