Advanced Docker

Overview

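Gunpowder has no power until it is made into guns and cannons. In the same way, Docker used only as single containers, without orchestration, does not mean much. As a rule of thumb, clusters of fewer than about 10 nodes use Docker Swarm for container orchestration, and larger ones use k8s. The future is cloud native: most of the feature development done today is pointless, because the industry already has mature templates for it.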

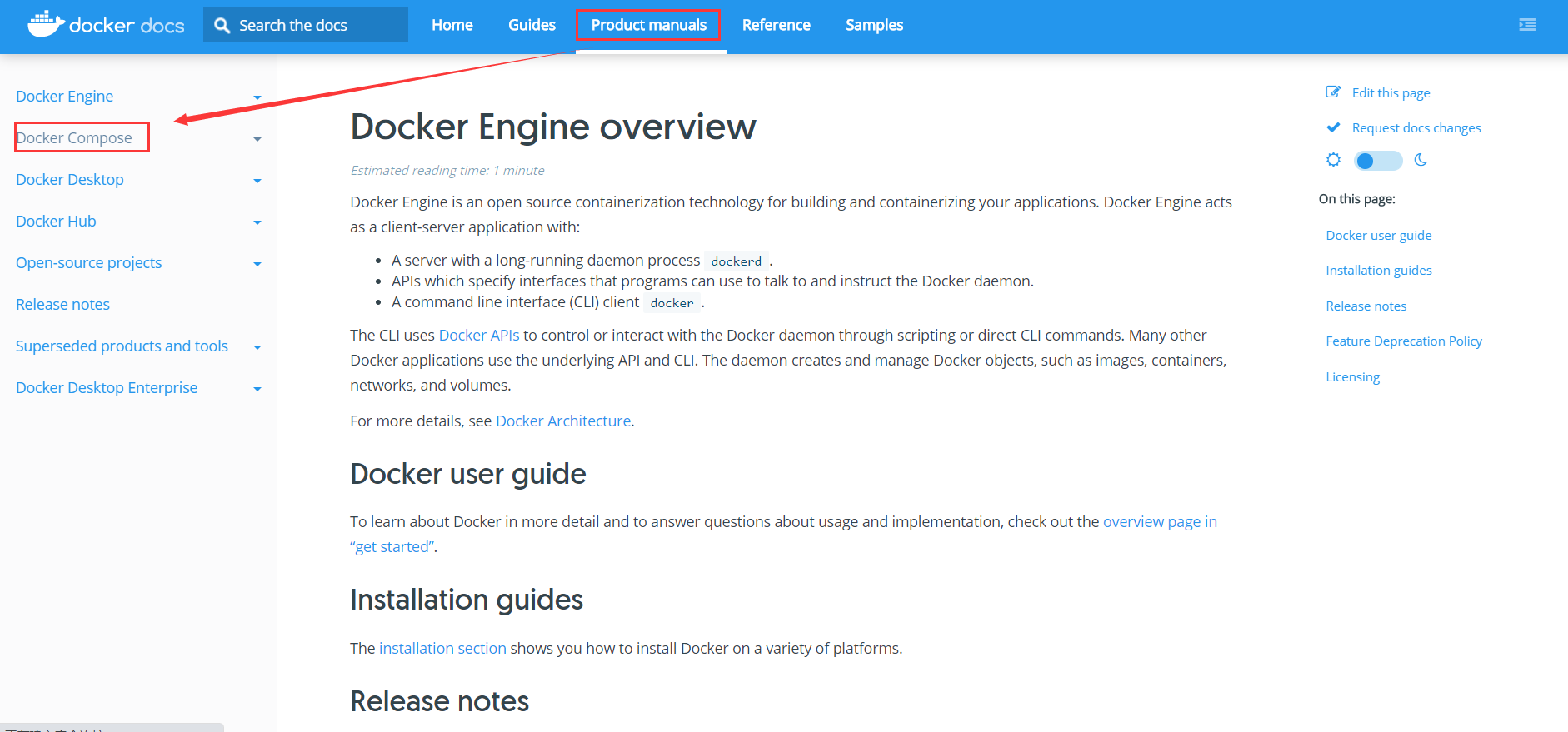

Docker Compose

Docker Swarm

Docker Stack / Docker Secret / Docker Config

All of the above lays the groundwork for k8s.

Docker Compose

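What it is

Docker Compose defines and runs multi-container applications. It is batch container orchestration: roughly, the startup order of a project's parts is written into a config file. It is an official open-source Docker project and has to be installed separately.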

How to use it

Define your app’s environment with a Dockerfile so it can be reproduced anywhere. Define the services that make up your app in docker-compose.yml so they can be run together in an isolated environment. Run docker-compose up and Compose starts and runs your entire app.

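The problem it solves

With Dockerfiles alone, you spend ages building and running each container by hand, over and over. Compose builds and starts everything in one batch from a single config file (the file format is covered below).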

Installing Docker Compose

Download

From the official release page:

    sudo curl -L "https://github.com/docker/compose/releases/download/1.27.3/docker-compose-$(uname -s)-$(uname -m)" -o /usr/local/bin/docker-compose

Or from a mirror in China (set the version to match the official release):

    curl -L https://get.daocloud.io/docker/compose/releases/download/1.27.3/docker-compose-`uname -s`-`uname -m` > /usr/local/bin/docker-compose

Make it executable

    sudo chmod +x /usr/local/bin/docker-compose

Verify

    [root@10-9-48-229 bin]# docker-compose version
    docker-compose version 1.27.3, build 4092ae5d
    docker-py version: 4.3.1
    CPython version: 3.7.7
    OpenSSL version: OpenSSL 1.1.0l  10 Sep 2019

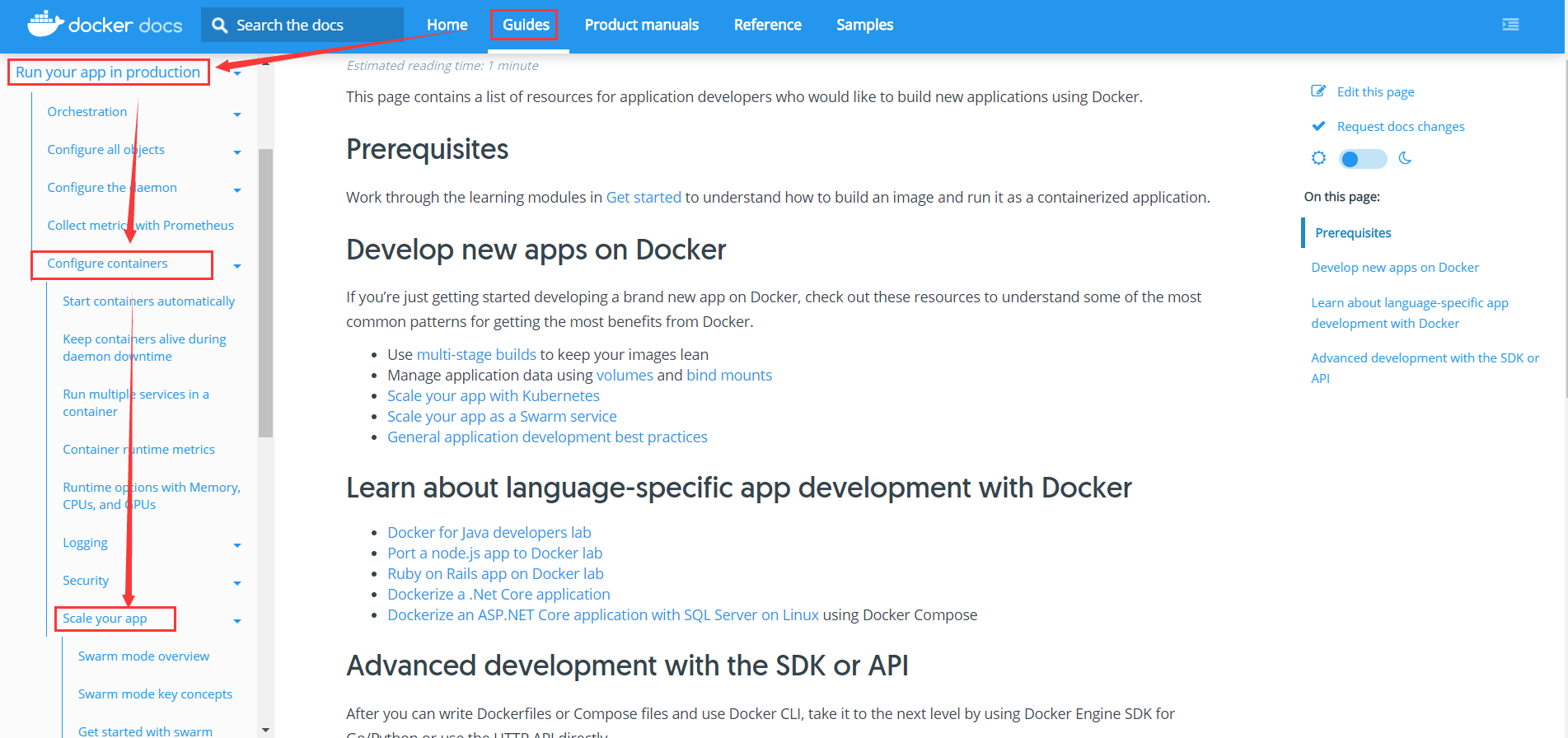

Next, try Docker Compose by following https://docs.docker.com/compose/gettingstarted/ (a hit counter built with Python + Redis).

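This produces 4 files in /home/composetest:

- app.py: the application
- requirements.txt: the dependencies the project needs
- Dockerfile: builds the image, packaging the project as an image (visible afterwards in `docker images`)
- docker-compose.yml: orchestrates the project, defining the environment, services and rules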

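From the /home/composetest directory, build and start it (the downloads take a long time...):

    docker-compose build
    docker-compose up

`docker-compose up` ends up starting two containers, because `services` in docker-compose.yml defines two services:

    Starting composetest_redis_1 ... done
    Starting composetest_web_1   ... done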

In a second (cloned) session, check the containers and test the app:

    [root@10-9-48-229 ~]# docker ps
    CONTAINER ID   IMAGE             COMMAND                  CREATED        STATUS         PORTS                    NAMES
    0e0bdd4498cb   composetest_web   "flask run"              11 hours ago   Up 4 minutes   0.0.0.0:5000->5000/tcp   composetest_web_1
    0bbca033781a   redis:alpine      "docker-entrypoint.s…"   11 hours ago   Up 4 minutes   6379/tcp                 composetest_redis_1
    [root@10-9-48-229 ~]# curl localhost:5000
    Hello World! I have been seen 1 times.
    [root@10-9-48-229 ~]# curl localhost:5000
    Hello World! I have been seen 2 times.

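Services

Default container name: `<project>_<service>_<num>`. The project name defaults to the name of the directory holding docker-compose.yml (here composetest), and `num` is the replica index (1, 2, ...), not the number of replicas.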
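To make the naming rule concrete, here is a small sketch that rebuilds the names seen in this post. It assumes the project name is the directory's basename, lowercased, with characters other than letters, digits, `-` and `_` dropped, which approximates Compose 1.x; `project_name` and `default_container_name` are hypothetical helpers, not part of Compose. (Compose v2 joins the parts with `-` instead of `_`.)

```python
import os
import re


def project_name(project_dir: str) -> str:
    """Approximate Compose 1.x default project name (an assumption): the
    directory's basename, lowercased, keeping only letters, digits, '-' and '_'."""
    base = os.path.basename(os.path.normpath(project_dir))
    return re.sub(r"[^-_a-z0-9]", "", base.lower())


def default_container_name(project_dir: str, service: str, index: int) -> str:
    """Build '<project>_<service>_<index>'; index is the 1-based replica number."""
    if index < 1:
        raise ValueError("replica index starts at 1")
    return f"{project_name(project_dir)}_{service}_{index}"


if __name__ == "__main__":
    print(default_container_name("/home/composetest", "web", 1))
    print(default_container_name("/home/my_wordpress", "wordpress", 1))
```

With the directories used in this post it yields `composetest_web_1` and `my_wordpress_wordpress_1`, matching the `docker ps` output.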

Networking: all containers in the project are attached to the same network and reach each other by domain name (the service name) rather than by IP address, which supports HA (high availability).

    [root@10-9-48-229 ~]# docker network ls
    NETWORK ID     NAME                  DRIVER    SCOPE
    0965bf78dff5   bridge                bridge    local
    b180d2b16863   composetest_default   bridge    local
    1e34f8f42875   host                  host      local
    804e7b4c9290   none                  null      local
    [root@10-9-48-229 ~]# docker network inspect composetest_default
    [
        {
            "Name": "composetest_default",
            "Id": "b180d2b1686314666b146a2c68922f25911e8f2fbe2608e7d7973cf66e42fa05",
            "Created": "2020-09-18T21:43:00.921622396+08:00",
            "Scope": "local",
            "Driver": "bridge",
            "EnableIPv6": false,
            "IPAM": {
                "Driver": "default",
                "Options": null,
                "Config": [
                    {
                        "Subnet": "172.18.0.0/16",
                        "Gateway": "172.18.0.1"
                    }
                ]
            },
            "Internal": false,
            "Attachable": true,
            "Ingress": false,
            "ConfigFrom": {
                "Network": ""
            },
            "ConfigOnly": false,
            "Containers": {
                "0bbca033781a7c8c1a45747707db71f9a30fcd829c0c3deecb7ec048e258b4a1": {
                    "Name": "composetest_redis_1",
                    "EndpointID": "4aaa46b588bd8efb761b51c20820387a307d7ee7e5a0e358d06fcbd606110f0c",
                    "MacAddress": "02:42:ac:12:00:03",
                    "IPv4Address": "172.18.0.3/16",
                    "IPv6Address": ""
                },
                "0e0bdd4498cbdcd154fc4f61cbeb46abe69c553a649f44caf1ac65465dd6500e": {
                    "Name": "composetest_web_1",
                    "EndpointID": "cc3879fabde56428c8db4b90ab8f21e1355610b3d0680bedf2aca17c92928f04",
                    "MacAddress": "02:42:ac:12:00:02",
                    "IPv4Address": "172.18.0.2/16",
                    "IPv6Address": ""
                }
            },
            "Options": {},
            "Labels": {
                "com.docker.compose.network": "default",
                "com.docker.compose.project": "composetest",
                "com.docker.compose.version": "1.27.3"
            }
        }
    ]

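Stopping the project

Run this in /home/composetest, or press Ctrl+C in the session running `docker-compose up`. Ctrl+C only stops the containers, while `down` also removes them along with the default network:

    docker-compose down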

The docker-compose.yaml file in Docker Compose

https://docs.docker.com/compose/compose-file/#compose-file-structure-and-examples

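docker-compose.yaml rules: write more and read more, and you will get the hang of it.

    # 3 layers
    version: ''          # layer 1: file format version
    services:            # layer 2: services
      web:               # service 1
        # service config: image, build, networks, ...
        depends_on:      # these services are started first
          - db
          - redis
        # ...
      redis:             # service 2
        # ...
    # layer 3: other config such as networks, volumes and global rules
    volumes:
    networks:
    configs: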
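In the skeleton above, `web` lists `db` and `redis` under `depends_on`, so those two are started before it. Here is a minimal sketch of how a start order can be derived from `depends_on` lists, using Kahn's topological sort over a plain dict. It is a simplified model, not Compose's own code. Note that `depends_on` only orders container startup. It does not wait for the database inside `db` to be ready to accept connections.

```python
from typing import Dict, List


def start_order(depends_on: Dict[str, List[str]]) -> List[str]:
    """Order services so each one comes after everything it depends on.

    Kahn's algorithm; ties are broken alphabetically so the result is
    deterministic. Raises ValueError on an undefined dependency or a cycle.
    """
    for svc, deps in depends_on.items():
        for dep in deps:
            if dep not in depends_on:
                raise ValueError(f"{svc} depends on undefined service {dep}")
    # number of not-yet-started dependencies per service
    remaining = {svc: len(set(deps)) for svc, deps in depends_on.items()}
    # reverse edges: dependency -> services waiting on it
    dependents: Dict[str, List[str]] = {svc: [] for svc in depends_on}
    for svc, deps in depends_on.items():
        for dep in set(deps):
            dependents[dep].append(svc)
    ready = sorted(svc for svc, n in remaining.items() if n == 0)
    order: List[str] = []
    while ready:
        svc = ready.pop(0)
        order.append(svc)
        for waiting in dependents[svc]:
            remaining[waiting] -= 1
            if remaining[waiting] == 0:
                ready.append(waiting)
        ready.sort()
    if len(order) != len(depends_on):
        raise ValueError("dependency cycle among services")
    return order


if __name__ == "__main__":
    print(start_order({"web": ["db", "redis"], "db": [], "redis": []}))
```

For the skeleton's services this returns `['db', 'redis', 'web']`. A cycle such as `a -> b -> a` raises `ValueError`.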

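One-command deployment of a standalone WP blog with docker-compose

The official docs provide a demo for deploying a WordPress blog at https://docs.docker.com/compose/wordpress/. WordPress is an open-source blogging platform written in PHP.

Create the directory /var/www/html. Then create /usr/local/etc/php/conf.d (`mkdir /usr/local/etc/php/conf.d`) and create the file uploads.ini in it with `vi uploads.ini`. This was an attempt to fix WordPress failing to upload and install plugins. Note that the compose file below mounts ./uploads.ini from the project directory, so that copy is the one the container actually reads.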

Contents of uploads.ini:

    file_uploads = On
    memory_limit = 128M
    upload_max_filesize = 512M
    post_max_size = 128M
    max_execution_time = 600
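One thing worth checking in this file: PHP rejects request bodies larger than `post_max_size`, and a file upload travels in the request body. With the values above, uploads are therefore effectively capped at 128M, not 512M, and raising `upload_max_filesize` alone does not help. The sketch below computes that effective limit. `to_bytes` and `effective_upload_limit` are hypothetical helpers, and they only understand the K/M/G shorthand.

```python
import configparser

UPLOADS_INI = """\
file_uploads = On
memory_limit = 128M
upload_max_filesize = 512M
post_max_size = 128M
max_execution_time = 600
"""

_UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}


def to_bytes(value: str) -> int:
    """Convert PHP shorthand such as '128M' to bytes (K/M/G, case-insensitive)."""
    value = value.strip()
    suffix = value[-1].upper()
    if suffix in _UNITS:
        return int(value[:-1]) * _UNITS[suffix]
    return int(value)


def effective_upload_limit(ini_text: str) -> int:
    """An uploaded file must fit within both upload_max_filesize and
    post_max_size, so the usable limit is the smaller of the two."""
    parser = configparser.ConfigParser()
    # uploads.ini has no [section] header, so prepend one for configparser
    parser.read_string("[php]\n" + ini_text)
    php = parser["php"]
    return min(to_bytes(php["upload_max_filesize"]), to_bytes(php["post_max_size"]))


if __name__ == "__main__":
    print(effective_upload_limit(UPLOADS_INI) // 1024 ** 2, "MB")
```

For the file above it prints `128 MB`. If 512M uploads are really wanted, `post_max_size` has to be at least that large as well.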

As it turned out later, the plugin installation problem was solved by installing or updating nss:

    yum install nss    # or: yum update nss

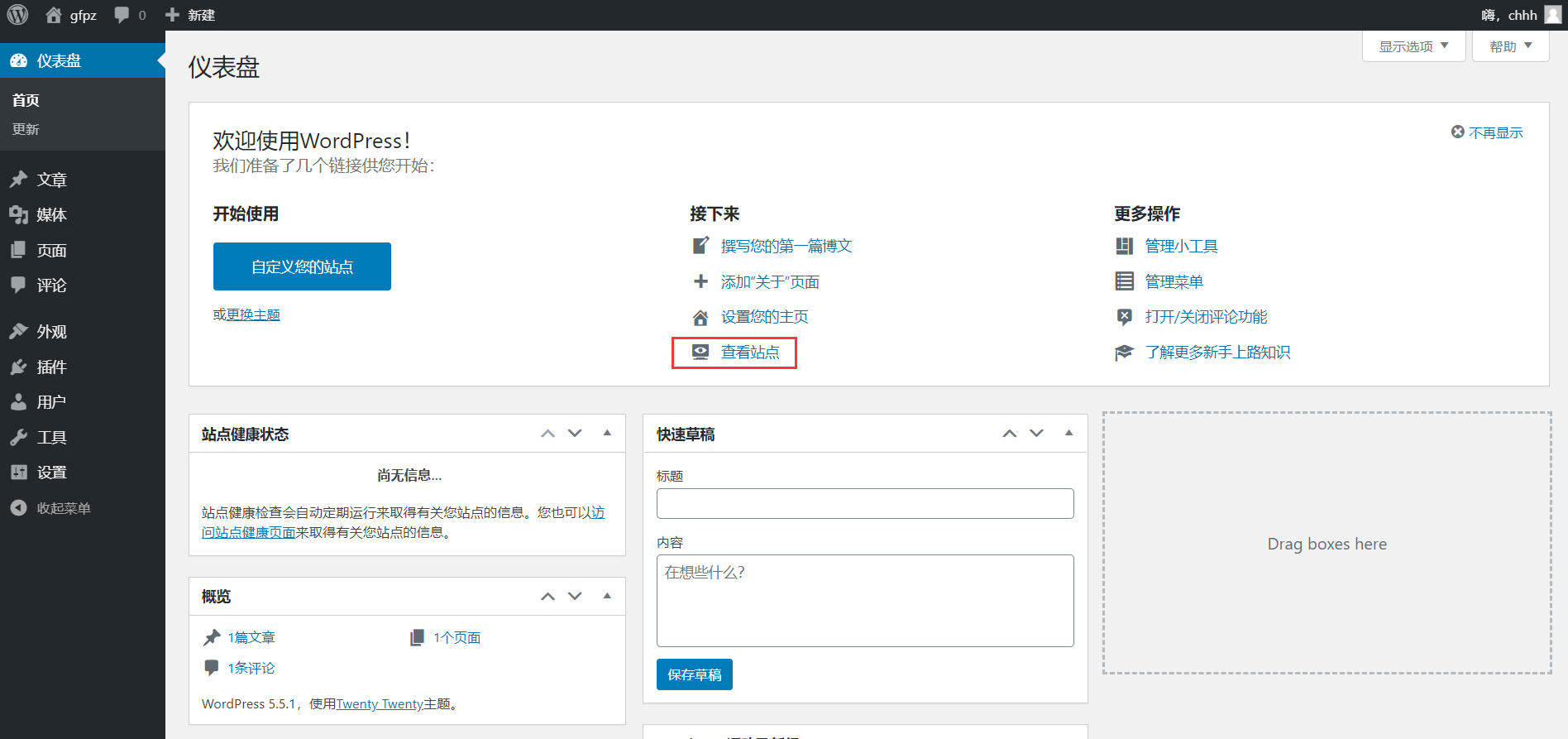

The procedure is simple: the config file is already written and everything is pulled from the internet. The /data/my_wordpress directory was changed later; the transcript below uses /home/my_wordpress.

    [root@10-9-48-229 home]# mkdir my_wordpress
    [root@10-9-48-229 home]# cd /home/my_wordpress
    [root@10-9-48-229 my_wordpress]# vim docker-compose.yml

Contents of docker-compose.yml:

    version: '3.3'
    services:
      db:
        image: mysql:5.7
        volumes:
          - db_data:/var/lib/mysql
        restart: always
        # command: --default-authentication-plugin=mysql_native_password   # for MySQL 8, at the same level as restart
        ports:                # without a port mapping, MySQL cannot be reached from outside
          - "3306:3306"
        environment:
          MYSQL_ROOT_PASSWORD: root
          MYSQL_DATABASE: wordpress
          MYSQL_USER: wordpress
          MYSQL_PASSWORD: wordpress
      wordpress:
        depends_on:           # depends on the db service above
          - db
        image: wordpress:latest
        ports:
          - "8000:80"
        volumes:
          - ./wp_site:/var/www/html
          - ./uploads.ini:/usr/local/etc/php/conf.d/uploads.ini
        restart: always
        environment:
          WORDPRESS_DB_HOST: db:3306
          WORDPRESS_DB_USER: wordpress
          WORDPRESS_DB_PASSWORD: wordpress
          WORDPRESS_DB_NAME: wordpress
    volumes:
      db_data: {}

Save, then deploy and start:

    "docker-compose.yml" [New] 29L, 592C written
    [root@10-9-48-229 my_wordpress]# docker-compose up    # deploy & start; docker-compose up -d starts it in the background
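In the Compose short syntax, a port entry reads `HOST:CONTAINER`, optionally prefixed with a host IP. So `"8000:80"` publishes the container's port 80 on host port 8000. The sketch below parses that short syntax. It ignores port ranges, `/udp` suffixes and IPv6 host addresses, and `parse_port` is a hypothetical helper.

```python
from typing import NamedTuple, Optional


class PortMapping(NamedTuple):
    host_ip: Optional[str]
    host_port: int       # 0 here means "Docker picks a random host port"
    container_port: int


def parse_port(spec: str) -> PortMapping:
    """Parse 'CONTAINER', 'HOST:CONTAINER' or 'IP:HOST:CONTAINER'."""
    parts = spec.split(":")
    if len(parts) == 1:
        # only the container port is given; the host port is chosen by Docker
        return PortMapping(None, 0, int(parts[0]))
    if len(parts) == 2:
        return PortMapping(None, int(parts[0]), int(parts[1]))
    if len(parts) == 3:
        return PortMapping(parts[0], int(parts[1]), int(parts[2]))
    raise ValueError(f"unsupported port spec: {spec!r}")


if __name__ == "__main__":
    for spec in ("8000:80", "3306:3306", "127.0.0.1:3310:3306"):
        print(spec, "->", parse_port(spec))
```

Reading entries this way also explains the `0.0.0.0:3310->3306/tcp` shown by `docker ps` below: the MySQL container port is always 3306, and only the host side of the mapping differs.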

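Check the running containers (open port 8000 on the cloud host first):

    [root@10-9-48-229 my_wordpress]# docker ps
    CONTAINER ID   IMAGE              COMMAND                  CREATED         STATUS         PORTS                               NAMES
    4a2a089d7ac7   wordpress:latest   "docker-entrypoint.s…"   3 minutes ago   Up 3 minutes   0.0.0.0:8000->80/tcp                my_wordpress_wordpress_1
    791e8fe8733e   mysql:5.7          "docker-entrypoint.s…"   3 minutes ago   Up 3 minutes   33060/tcp, 0.0.0.0:3310->3306/tcp   my_wordpress_db_1

In this output MySQL is published on host port 3310 (`0.0.0.0:3310->3306/tcp`). This run evidently used "3310:3306" rather than the "3306:3306" shown in the file above.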

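If a database client has trouble connecting, try the following:

    # enter the mysql container
    docker-compose exec db bash
    # log in; the password is MYSQL_ROOT_PASSWORD from docker-compose.yml
    # (root in the file above; the official demo uses somewordpress)
    mysql -u root -p
    use mysql;
    # allow root to connect remotely
    grant all on *.* to 'root'@'%';
    # set the password and disable password expiry
    alter user 'root'@'localhost' identified by 'root' password expire never;
    # switch root@'%' to the mysql_native_password plugin and set its password
    alter user 'root'@'%' identified with mysql_native_password by 'root';
    # reload the privilege tables
    flush privileges;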

Installing Nginx (not in Docker this time; one step at a time)

    wget -c http://nginx.org/download/nginx-1.18.0.tar.gz
    tar -zxvf nginx-1.18.0.tar.gz
    gcc -v
    yum -y install gcc
    yum install -y pcre pcre-devel
    yum install -y zlib zlib-devel
    yum install -y openssl openssl-devel
    # run the following in the nginx-1.18.0 directory
    cd nginx-1.18.0
    ./configure --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module
    make
    make install
    cd /usr/local/nginx    # then edit the config file, conf/nginx.conf

Config file (/usr/local/nginx/conf/nginx.conf):

    worker_processes  1;

    events {
        worker_connections  1024;
    }

    http {
        include       mime.types;
        default_type  application/octet-stream;
        sendfile        on;
        keepalive_timeout  65;

        upstream wordpress {
            server 106.75.32.166:8000;  #fail_timeout=10s
        }

        server {
            listen       80;
            server_name  blog.possible2dream.cn;
            #rewrite ^/(.*)$ https://blog.possible2dream.cn/$1 permanent;

            location / {
                proxy_pass http://wordpress;
            }

            error_page   500 502 503 504  /50x.html;
            location = /50x.html {
                root   html;
            }
        }

        # over https the pages render garbled; cause unknown
        #server {
        #    listen       443 ssl;
        #    server_name  blog.possible2dream.cn;
        #
        #    ssl_certificate      /etc/certs/Nginx/blog.possible2dream.cn.crt;
        #    ssl_certificate_key  /etc/certs/Nginx/blog.possible2dream.cn.key;
        #    ssl_session_cache    shared:SSL:1m;
        #    ssl_session_timeout  5m;
        #    ssl_ciphers  HIGH:!aNULL:!MD5;
        #    ssl_prefer_server_ciphers  on;
        #
        #    location / {
        #        proxy_pass http://wordpress;
        #        proxy_set_header Host $host;
        #        proxy_set_header X-Real-IP $remote_addr;
        #        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        #    }
        #}
    }

Start nginx

    cd /usr/local/nginx/sbin
    ./nginx
    ps -ef | grep nginx

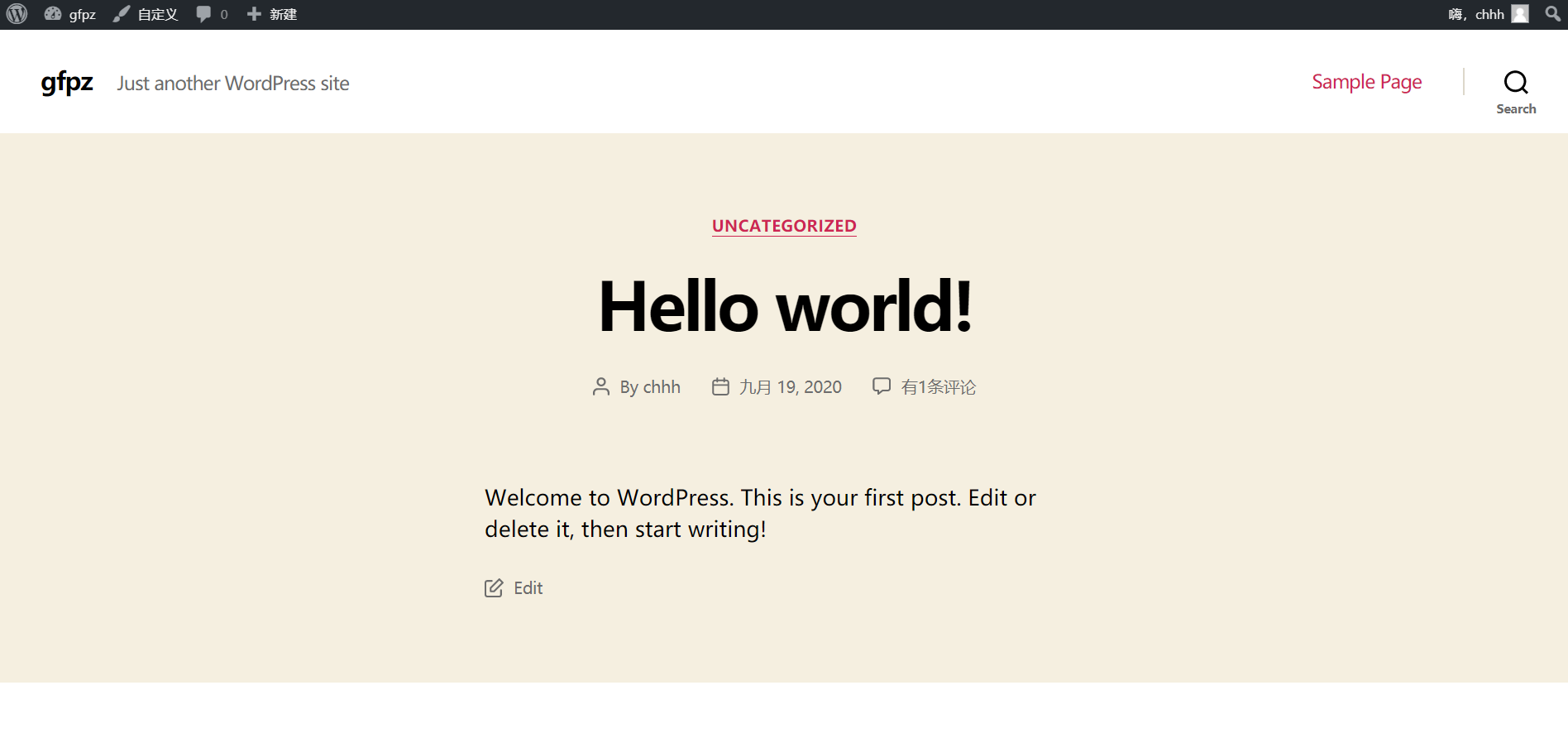

Open 106.75.32.166:8000/wp-admin/ in a browser to reach the admin dashboard (user chhh; the password is saved in the browser).

Open http://possible2dream.cn:8000/ or follow the link to my blog.

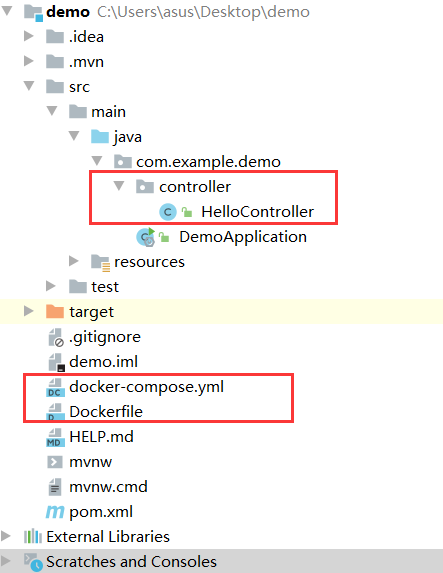

Writing and deploying your own microservice

(a counter implemented with Spring Boot)

HelloController

    package com.example.demo.controller;

    import org.springframework.beans.factory.annotation.Autowired;
    import org.springframework.data.redis.core.StringRedisTemplate;
    import org.springframework.web.bind.annotation.GetMapping;
    import org.springframework.web.bind.annotation.RestController;

    @RestController
    public class HelloController {

        @Autowired
        StringRedisTemplate redisTemplate;

        @GetMapping("/hello")
        public String hello() {
            // atomically increments the "views:" key in Redis and returns the new count
            Long views = redisTemplate.opsForValue().increment("views:");
            return "hello,world丶views:" + views;
        }
    }
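`redisTemplate.opsForValue().increment(key)` sends Redis `INCR`. That command treats a missing key as 0, adds 1 and returns the new value atomically, which is why the first request prints `views:1`. The sketch below models just that behaviour with an in-memory dict. `FakeRedis` is a hypothetical stand-in, not a Redis client, and unlike Redis it is not safe to share between threads. One thing the post does not show: for the container to reach Redis at all, the jar's configuration presumably sets `spring.redis.host=redis` (the Compose service name). Spring Boot's default of `localhost` would point at the app container itself.

```python
from typing import Dict


class FakeRedis:
    """In-memory stand-in for the one Redis command the controller uses.
    Real Redis runs INCR atomically on the server; this toy is single-threaded."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def incr(self, key: str) -> int:
        # INCR treats a missing key as 0, adds 1 and returns the new value;
        # Redis stores the number as a string, so this model does the same
        value = int(self._data.get(key, "0")) + 1
        self._data[key] = str(value)
        return value


def hello(redis: FakeRedis) -> str:
    """Mirror of HelloController.hello(): bump the counter, then format the reply."""
    views = redis.incr("views:")
    return "hello,world丶views:" + str(views)


if __name__ == "__main__":
    r = FakeRedis()
    print(hello(r))
    print(hello(r))
```

The two calls print `hello,world丶views:1` and `hello,world丶views:2`, the same sequence as the `curl` test below.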

Dockerfile

    FROM java:8
    COPY *.jar /app.jar
    CMD ["--server.port=8080"]
    EXPOSE 8080
    ENTRYPOINT ["java","-jar","/app.jar"]
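Both `ENTRYPOINT` and `CMD` use the exec (JSON array) form. In that case `CMD` only supplies default arguments that are appended to `ENTRYPOINT`, so the container runs `java -jar /app.jar --server.port=8080`. Any arguments passed after the image name in `docker run` replace `CMD`. `EXPOSE 8080` only documents the port; it is the `ports` entry in docker-compose.yml that actually publishes it. The function below models the combination rule. It is a hypothetical helper, and shell-form instructions are not handled.

```python
from typing import List


def effective_command(entrypoint: List[str], cmd: List[str], run_args: List[str]) -> List[str]:
    """Exec-form rule: the process is ENTRYPOINT followed by arguments, where
    arguments given to `docker run IMAGE ...` replace CMD entirely."""
    args = run_args if run_args else cmd
    return entrypoint + args


if __name__ == "__main__":
    entrypoint = ["java", "-jar", "/app.jar"]
    cmd = ["--server.port=8080"]
    print(" ".join(effective_command(entrypoint, cmd, [])))
    print(" ".join(effective_command(entrypoint, cmd, ["--server.port=9090"])))
```

The first line prints `java -jar /app.jar --server.port=8080`. The second shows a run-time override switching the port to 9090.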

docker-compose.yml

    version: '3.8'
    services:
      gfpz:
        build: .
        image: gfpz
        depends_on:
          - redis
        ports:
          - "8080:8080"
      redis:
        image: "library/redis:alpine"

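Upload to the server, then deploy and start it. (Damn, this network is truly awful: I even reinstalled the cloud host's OS, and file transfers were still painfully slow, so it really was the network, fuck.)

    [root@10-9-48-229 gfpz_app]# pwd
    /home/gfpz_app
    [root@10-9-48-229 gfpz_app]# ls
    demo-0.0.1-SNAPSHOT.jar  docker-compose.yml  Dockerfile
    [root@10-9-48-229 gfpz_app]# docker-compose up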

Test access (the 404 on / is expected, since the controller only maps /hello):

    [root@10-9-48-229 gfpz_app]# curl localhost:8080
    {"timestamp":"2020-09-20T02:43:41.071+00:00","status":404,"error":"Not Found","message":"","path":"/"}
    [root@10-9-48-229 gfpz_app]# curl localhost:8080/hello
    hello,world丶views:1
    [root@10-9-48-229 gfpz_app]# curl localhost:8080/hello
    hello,world丶views:2

Docker Swarm

Docker Compose, covered above, still only solves the single-host problem.

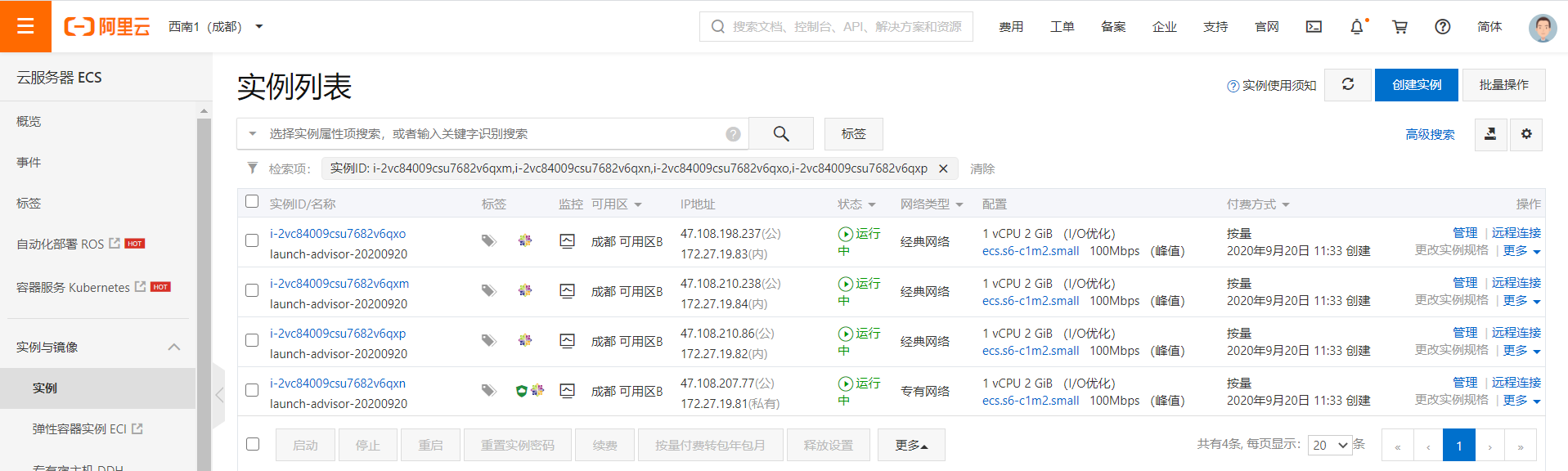

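Docker Swarm is what makes cluster deployment possible. (Buy 4 servers with 1 core and 2 GB each and release them once the experiment is done. It costs only a few yuan, and the money can be refunded after 24 hours.)

Install Docker on all 4.

Building the cluster

Initialize the swarm on the docker-1 server, advertising its private (internal) IP to save money: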

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker network ls
    NETWORK ID     NAME      DRIVER    SCOPE
    4a1c8a38a18e   bridge    bridge    local
    50909c9b5d34   host      host      local
    d6ebf3a70ebb   none      null      local
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker swarm --help

    Usage:  docker swarm COMMAND

    Manage Swarm

    Commands:
      ca          Display and rotate the root CA
      init        Initialize a swarm
      join        Join a swarm as a node and/or manager
      join-token  Manage join tokens
      leave       Leave the swarm
      unlock      Unlock swarm
      unlock-key  Manage the unlock key
      update      Update the swarm

    Run 'docker swarm COMMAND --help' for more information on a command.
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker swarm init --help

    Usage:  docker swarm init [OPTIONS]

    Initialize a swarm

    Options:
          --advertise-addr string                  Advertised address (format: <ip|interface>[:port])
          --autolock                               Enable manager autolocking (requiring an unlock key to start a stopped manager)
          --availability string                    Availability of the node ("active"|"pause"|"drain") (default "active")
          --cert-expiry duration                   Validity period for node certificates (ns|us|ms|s|m|h) (default 2160h0m0s)
          --data-path-addr string                  Address or interface to use for data path traffic (format: <ip|interface>)
          --data-path-port uint32                  Port number to use for data path traffic (1024 - 49151). If no value is set or is set to 0, the default port (4789) is used.
          --default-addr-pool ipNetSlice           default address pool in CIDR format (default [])
          --default-addr-pool-mask-length uint32   default address pool subnet mask length (default 24)
          --dispatcher-heartbeat duration          Dispatcher heartbeat period (ns|us|ms|s|m|h) (default 5s)
          --external-ca external-ca                Specifications of one or more certificate signing endpoints
          --force-new-cluster                      Force create a new cluster from current state
          --listen-addr node-addr                  Listen address (format: <ip|interface>[:port]) (default 0.0.0.0:2377)
          --max-snapshots uint                     Number of additional Raft snapshots to retain
          --snapshot-interval uint                 Number of log entries between Raft snapshots (default 10000)
          --task-history-limit int                 Task history retention limit (default 5)
    [root@iZ2vc84009csu7682v6qxoZ ~]# ip addr
    1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
        inet6 ::1/128 scope host
           valid_lft forever preferred_lft forever
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
        link/ether 00:16:3e:01:60:de brd ff:ff:ff:ff:ff:ff
        inet 172.27.19.83/20 brd 172.27.31.255 scope global dynamic eth0
           valid_lft 315357477sec preferred_lft 315357477sec
        inet6 fe80::216:3eff:fe01:60de/64 scope link
           valid_lft forever preferred_lft forever
    3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:b6:ec:09:4a brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker swarm init --advertise-addr 172.27.19.83
    Swarm initialized: current node (7f6pz3yw48b478hpue9avtmz9) is now a manager.

    To add a worker to this swarm, run the following command:

        docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-8xpwrpn9wn9fjt62ygw7bm8uj 172.27.19.83:2377

    To add a manager to this swarm, run 'docker swarm join-token manager' and follow the instructions.

    [root@iZ2vc84009csu7682v6qxoZ ~]#

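Running `docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-8xpwrpn9wn9fjt62ygw7bm8uj 172.27.19.83:2377` on another node recruits a slave (worker). To recruit a manager, run `docker swarm join-token manager` first.

You can also print the join tokens again at any time:

    # get a join token; whoever holds it can join as a manager or as a slave (worker)
    docker swarm join-token manager
    docker swarm join-token worker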

Make docker-2 a worker in this cluster by running this on docker-2:

    [root@iZ2vc84009csu7682v6qxmZ ~]# docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-8xpwrpn9wn9fjt62ygw7bm8uj 172.27.19.83:2377
    This node joined a swarm as a worker.
    [root@iZ2vc84009csu7682v6qxmZ ~]#

Back on docker-1, there is now a slave:

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker node ls
    ID                            HOSTNAME                  STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    o89a6golnfx4dphdxnqwlimtk     iZ2vc84009csu7682v6qxmZ   Ready     Active                          19.03.13
    7f6pz3yw48b478hpue9avtmz9 *   iZ2vc84009csu7682v6qxoZ   Ready     Active         Leader           19.03.13
    [root@iZ2vc84009csu7682v6qxoZ ~]#

Following the same routine, make docker-3 a slave too:

    [root@iZ2vc84009csu7682v6qxpZ ~]# docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-8xpwrpn9wn9fjt62ygw7bm8uj 172.27.19.83:2377
    This node joined a swarm as a worker.
    [root@iZ2vc84009csu7682v6qxpZ ~]#

The landlord, docker-1, finds it has gained another slave:

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker node ls
    ID                            HOSTNAME                  STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    o89a6golnfx4dphdxnqwlimtk     iZ2vc84009csu7682v6qxmZ   Ready     Active                          19.03.13
    7f6pz3yw48b478hpue9avtmz9 *   iZ2vc84009csu7682v6qxoZ   Ready     Active         Leader           19.03.13
    us7so7o9h5wqqs54cgiho3r02     iZ2vc84009csu7682v6qxpZ   Ready     Active                          19.03.13
    [root@iZ2vc84009csu7682v6qxoZ ~]#

Recruit a manager as well:

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker swarm join-token manager
    To add a manager to this swarm, run the following command:

        docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-apmys0ij0kwdndqag8s49nnih 172.27.19.83:2377

    [root@iZ2vc84009csu7682v6qxoZ ~]#
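Compare the worker token printed earlier with the manager token above. Both have the form `SWMTKN-1-<...>-<...>`, share the same middle segment, and differ only in the last segment, which decides whether the joining node becomes a worker or a manager. The middle segment is reportedly derived from the swarm's root CA certificate and the last segment is a secret, but treat those labels as assumptions. `parse_join_token` and `same_swarm` are hypothetical helpers.

```python
from typing import NamedTuple


class JoinToken(NamedTuple):
    version: str       # "1" in SWMTKN-1-...
    swarm_digest: str  # shared by the worker and manager tokens of one swarm
    secret: str        # role-specific part


def parse_join_token(token: str) -> JoinToken:
    """Split 'SWMTKN-<version>-<digest>-<secret>' into its parts."""
    parts = token.split("-")
    if len(parts) != 4 or parts[0] != "SWMTKN":
        raise ValueError("not a swarm join token")
    return JoinToken(parts[1], parts[2], parts[3])


def same_swarm(a: str, b: str) -> bool:
    """True if two tokens carry the same swarm digest."""
    return parse_join_token(a).swarm_digest == parse_join_token(b).swarm_digest


if __name__ == "__main__":
    worker = "SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-8xpwrpn9wn9fjt62ygw7bm8uj"
    manager = "SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-apmys0ij0kwdndqag8s49nnih"
    print(same_swarm(worker, manager))
```

For the two tokens in this transcript it prints `True`. Because the secret alone separates the roles, the manager token deserves the same care as a password.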

docker-4 joins the management team:

    [root@iZ2vc84009csu7682v6qxnZ ~]# docker swarm join --token SWMTKN-1-10m40b4ub1k77krt753li2u43cl5gc7scvytgd5j6b2zd9xnl0-apmys0ij0kwdndqag8s49nnih 172.27.19.83:2377
    This node joined a swarm as a manager.
    [root@iZ2vc84009csu7682v6qxnZ ~]#

The landlord, docker-1, inspects its estate:

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker node ls
    ID                            HOSTNAME                  STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    o89a6golnfx4dphdxnqwlimtk     iZ2vc84009csu7682v6qxmZ   Ready     Active                          19.03.13   <- recruited slave
    xw0xcv5uep7ck1gbz342rpas8     iZ2vc84009csu7682v6qxnZ   Ready     Active         Reachable        19.03.13   <- recruited manager
    7f6pz3yw48b478hpue9avtmz9 *   iZ2vc84009csu7682v6qxoZ   Ready     Active         Leader           19.03.13   <- the landlord itself
    us7so7o9h5wqqs54cgiho3r02     iZ2vc84009csu7682v6qxpZ   Ready     Active                          19.03.13   <- recruited slave
    [root@iZ2vc84009csu7682v6qxoZ ~]#

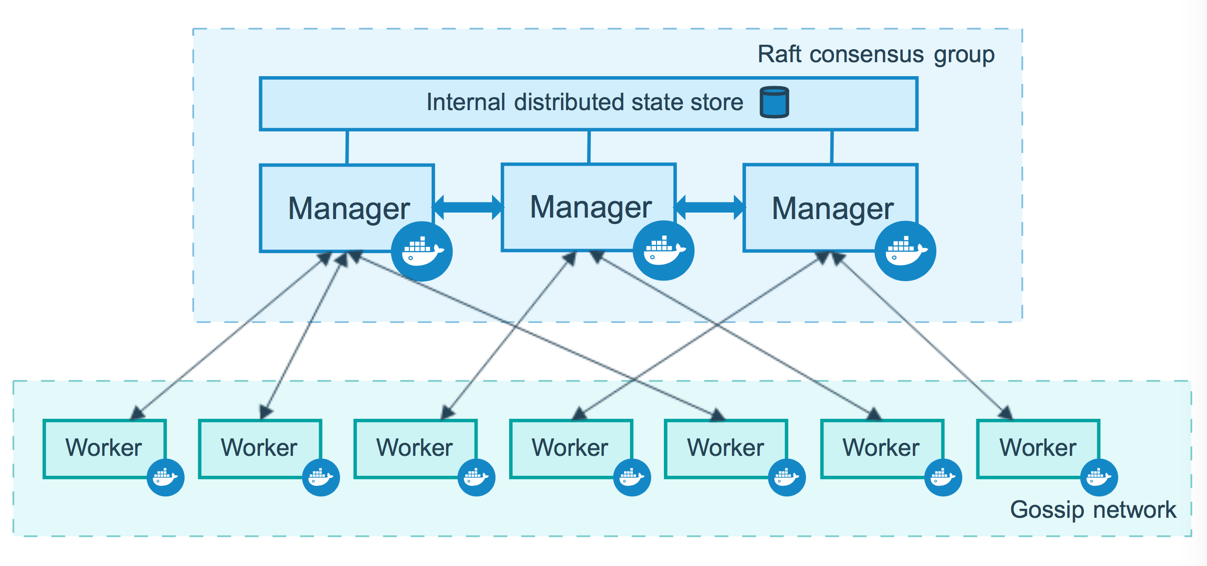

Swarm summary

    # Step 1: init a landlord
    # Step 2: join managers and slaves

Normally you need 3 manager nodes.

The Raft protocol

If the landlord is restarted, it loses its landlord status. In the output below, the other manager (docker-4, hostname ending in nZ) has become Leader, and docker-1 is now only Reachable:

    [root@iZ2vc84009csu7682v6qxoZ ~]# systemctl stop docker
    [root@iZ2vc84009csu7682v6qxoZ ~]# systemctl start docker
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker node ls
    ID                            HOSTNAME                  STATUS    AVAILABILITY   MANAGER STATUS   ENGINE VERSION
    o89a6golnfx4dphdxnqwlimtk     iZ2vc84009csu7682v6qxmZ   Ready     Active                          19.03.13
    xw0xcv5uep7ck1gbz342rpas8     iZ2vc84009csu7682v6qxnZ   Unknown   Active         Leader           19.03.13
    7f6pz3yw48b478hpue9avtmz9 *   iZ2vc84009csu7682v6qxoZ   Ready     Active         Reachable        19.03.13
    us7so7o9h5wqqs54cgiho3r02     iZ2vc84009csu7682v6qxpZ   Ready     Active                          19.03.13
    [root@iZ2vc84009csu7682v6qxoZ ~]#

When a node wants to leave the cluster:

    [root@iZ2vc84009csu7682v6qxpZ ~]# docker swarm leave
    Node left the swarm.
    [root@iZ2vc84009csu7682v6qxpZ ~]#

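With 3 manager nodes, if one goes down, 2 remain and the swarm still works. With 2 managers, if one goes down, only 1 remains and the swarm can no longer be managed. Raft needs a majority (more than half) of the managers alive, and 1 out of 2 is not a majority. Containers that are already running keep running, but services and nodes can no longer be changed. So you need at least 3 managers, with at least 2 of them alive.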
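The rule above is the Raft majority. With `n` managers, a quorum of `n // 2 + 1` must be alive, so at most `(n - 1) // 2` can fail. A few lines of Python make the pattern visible (the function names are illustrative).

```python
def quorum(managers: int) -> int:
    """Raft majority: more than half of the managers must be reachable."""
    if managers < 1:
        raise ValueError("need at least one manager")
    return managers // 2 + 1


def tolerated_failures(managers: int) -> int:
    """How many managers can fail while a majority is still alive."""
    return managers - quorum(managers)  # equals (managers - 1) // 2


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} managers: quorum {quorum(n)}, can lose {tolerated_failures(n)}")
```

This is why odd manager counts are recommended. 4 managers need a quorum of 3 and still survive only 1 failure, the same as 3 managers, while 2 managers tolerate no failure at all.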

Elastic service creation in a Swarm cluster

Key concepts: swarm, service, node, task, container. A service is made up of tasks, and each task runs one container on some node.

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker service --help

    Usage:  docker service COMMAND

    Manage services

    Commands:
      create      Create a new service
      inspect     Display detailed information on one or more services
      logs        Fetch the logs of a service or task
      ls          List services
      ps          List the tasks of one or more services
      rm          Remove one or more services
      rollback    Revert changes to a service's configuration
      scale       Scale one or multiple replicated services
      update      Update a service

    Run 'docker service COMMAND --help' for more information on a command.

Create services, scale them dynamically, update them dynamically, and do gray releases (canary releases).
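Gray or canary releases in Swarm build on rolling updates. `docker service update` replaces a service's tasks in batches of `--update-parallelism` tasks (default 1; 0 means all at once) and waits `--update-delay` between batches, so a new image reaches a few replicas before the rest. The toy model below only computes the batches, in replica-slot order for illustration. The real order, health checks and failure handling are up to Swarm, and `update_batches` is a hypothetical helper.

```python
from typing import List


def update_batches(replicas: int, parallelism: int) -> List[List[int]]:
    """Group replica slots 1..replicas into batches of at most `parallelism`.
    parallelism=0 means 'all at once', as with --update-parallelism 0."""
    if replicas < 0 or parallelism < 0:
        raise ValueError("counts must be non-negative")
    slots = list(range(1, replicas + 1))
    size = parallelism or replicas or 1  # avoid a zero step when both are 0
    return [slots[i:i + size] for i in range(0, replicas, size)]


if __name__ == "__main__":
    print(update_batches(5, 2))
```

With 5 replicas and a parallelism of 2 it prints `[[1, 2], [3, 4], [5]]`. A parallelism of 1 with a generous delay is the closest this mechanism gets to a canary: one replica runs the new version first.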

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker service create -p 8888:80 --name my-nginx nginx    # think of it as roughly similar to docker run
    ulytx1jkslmx3tjy5asmsm2ls
    overall progress: 1 out of 1 tasks
    1/1: running   [==================================================>]
    verify: Service converged
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker service ps my-nginx
    ID             NAME         IMAGE          NODE                      DESIRED STATE   CURRENT STATE                ERROR     PORTS
    6iyzhc27wx5z   my-nginx.1   nginx:latest   iZ2vc84009csu7682v6qxnZ   Running         Running about a minute ago
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker service ls
    ID             NAME       MODE         REPLICAS   IMAGE          PORTS
    ulytx1jkslmx   my-nginx   replicated   1/1        nginx:latest   *:8888->80/tcp
    [root@iZ2vc84009csu7682v6qxoZ ~]#

As shown above, the service runs only one replica. Now update the replica count. (Even though there are only 4 nodes, you can start 10 replicas: some machines simply get more than one!)

    [root@iZ2vc84009csu7682v6qxoZ ~]# docker service update --replicas 3 my-nginx
    my-nginx
    overall progress: 3 out of 3 tasks
    1/3: running   [==================================================>]
    2/3: running   [==================================================>]
    3/3: running   [==================================================>]
    verify: Service converged
    [root@iZ2vc84009csu7682v6qxoZ ~]# docker ps
    CONTAINER ID   IMAGE          COMMAND                  CREATED          STATUS          PORTS     NAMES
    9212374a3948   nginx:latest   "/docker-entrypoint.…"   41 seconds ago   Up 39 seconds   80/tcp    my-nginx.2.vt9c4mm18n8ydcs4ztwpbd78f
    [root@iZ2vc84009csu7682v6qxoZ ~]#

`docker ps` on docker-1 lists only one of the three replicas, because the other two run on other nodes.
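How do 10 replicas fit on 4 nodes? The scheduler simply puts more than one task on some nodes. Swarm's default placement spreads tasks across the eligible nodes. The sketch below is a simplified spread model that always picks the node with the fewest tasks so far; real Swarm also weighs resource reservations, constraints and other services. `spread` is a hypothetical helper, and it uses the node names from this post.

```python
from typing import Dict, List


def spread(replicas: int, nodes: List[str]) -> Dict[str, int]:
    """Place replicas one at a time on the node running the fewest tasks so far
    (ties go to the earlier node in the list): a simplified spread strategy."""
    if not nodes:
        raise ValueError("no nodes available")
    load: Dict[str, int] = {node: 0 for node in nodes}
    for _ in range(replicas):
        target = min(nodes, key=lambda n: load[n])
        load[target] += 1
    return load


if __name__ == "__main__":
    print(spread(10, ["docker-1", "docker-2", "docker-3", "docker-4"]))
```

For 10 replicas on docker-1 to docker-4 it returns 3, 3, 2 and 2 tasks respectively.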

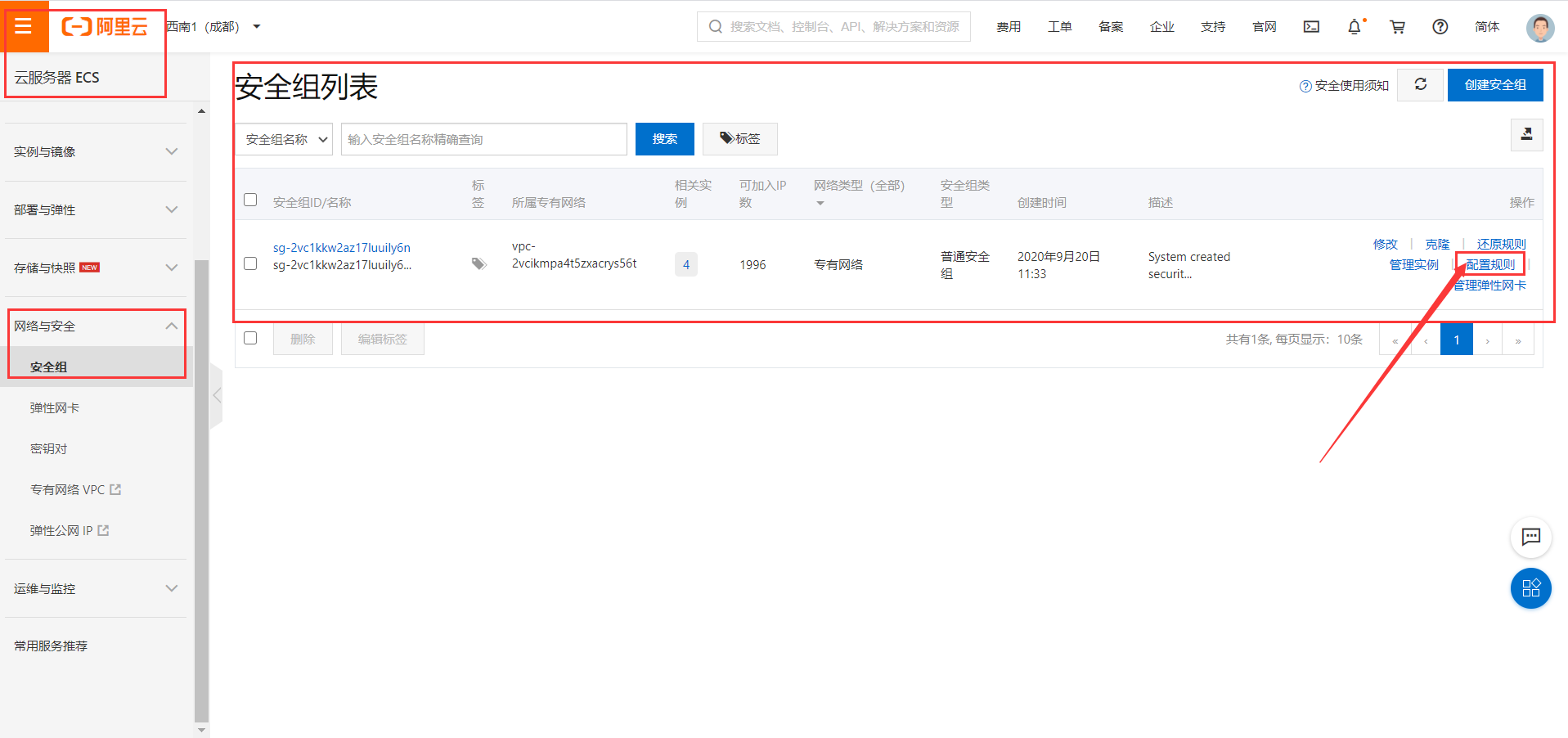

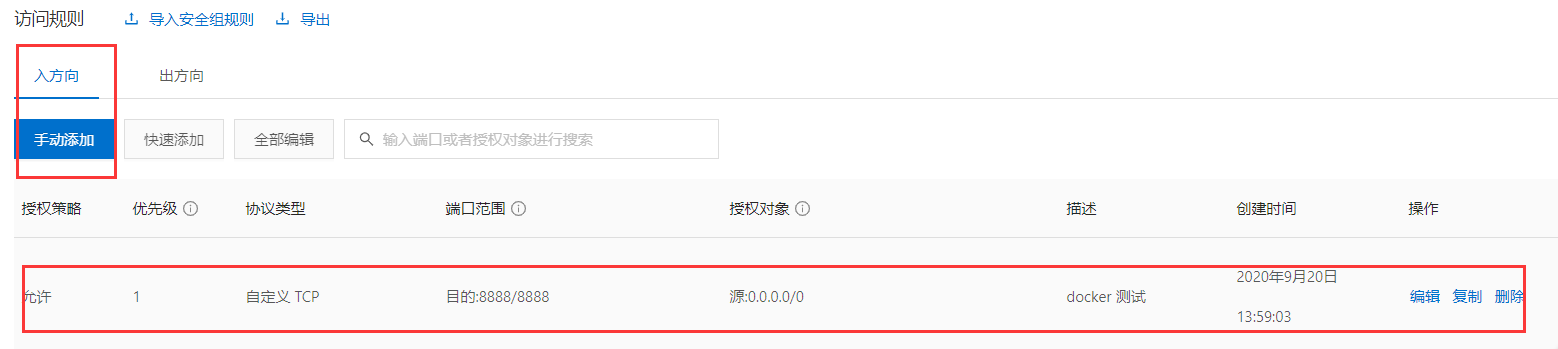

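Open port 8888 on the cloud hosts.

Visiting any node, e.g. http://47.108.198.237:8888/, reaches this cluster service. How many instances run on each machine is Swarm's own business: its routing mesh forwards a request arriving at any node's published port to a running replica.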

Dynamic scaling up and down (`docker service scale my-nginx=5` has the same effect as `docker service update --replicas 5 my-nginx`):

    [root@iZ2vc84009csu7682v6qxnZ ~]# docker service scale my-nginx=5
    my-nginx scaled to 5
    overall progress: 5 out of 5 tasks
    1/5: running   [==================================================>]
    2/5: running   [==================================================>]
    3/5: running   [==================================================>]
    4/5: running   [==================================================>]
    5/5: running   [==================================================>]
    verify: Service converged
    [root@iZ2vc84009csu7682v6qxnZ ~]#

Removing the service

    [root@iZ2vc84009csu7682v6qxnZ ~]# docker service rm my-nginx
    my-nginx
    [root@iZ2vc84009csu7682v6qxnZ ~]# docker service ls
    ID        NAME      MODE      REPLICAS   IMAGE     PORTS

Cloud native is here. We're done for, totally done for!

k8s, Go (a language built for concurrency)...

浙公网安备 33010602011771号
