Scraping Weibo images with Python, Scrapy, Selenium and PhantomJS

1. Create the project

```bash
scrapy startproject weibo  # create the project
cd weibo
scrapy genspider -t basic weibo.com weibo.com  # create the spider (spiders/weibo_com.py)
```

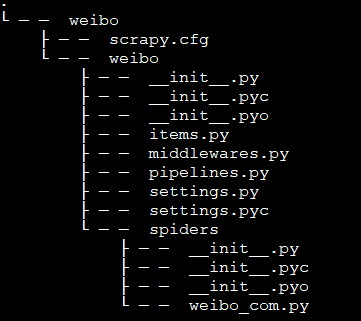

Directory structure

Define the Items

Edit items.py:

```python
import scrapy


class WeiboItem(scrapy.Item):
    # URLs of all images in one Weibo post
    image_urls = scrapy.Field()
    # Sub-directory name: a hash that uniquely identifies the image group
    dirname = scrapy.Field()
```

Edit pipelines.py:

```python
# -*- coding: utf-8 -*-
# Define your item pipelines here.
# Don't forget to add your pipeline to the ITEM_PIPELINES setting.
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html
import hashlib
import os
import urllib.request

import redis
from scrapy.utils.python import to_bytes

# Scrapy's built-in ImagesPipeline converts every image to JPEG (RGB mode),
# so animated GIFs lose their animation. The ImagesPipeline-based version
# below was therefore abandoned in favor of a custom downloader.
#
# from scrapy.pipelines.images import ImagesPipeline
# from scrapy.http import Request
#
# class WeboPipeline(ImagesPipeline):
#     def get_media_requests(self, item, info):
#         for image_url in item['image_urls']:
#             request = Request('http:' + image_url)
#             request.meta['item'] = {'dirname': item['dirname']}
#             yield request
#
#     # Define the storage directory and file extension
#     def file_path(self, request, response=None, info=None):
#         url = request.url
#         item = request.meta['item']
#         image_guid = hashlib.sha1(to_bytes(url)).hexdigest()
#         ext = url.split('.')[-1]
#         return 'full/%s/%s.%s' % (item['dirname'], image_guid, ext)
#
#     def item_completed(self, results, item, info):
#         return item


class WeboPipeline(object):
    """Download every image of an item; skip groups already recorded in Redis."""

    def open_spider(self, spider):
        # De-duplication with Redis: simple, if a bit crude
        self.client = redis.Redis(host='127.0.0.1', port=6379)

    def process_item(self, item, spider):
        dirname = item['dirname']
        # The hash of each post's image URLs is the unique key
        if self.client.get(dirname) is not None:
            spider.logger.info('Already downloaded: %s', dirname)
            return item
        for image_url in item['image_urls']:
            # Weibo image URLs are protocol-relative ("//...")
            imageurl = 'http:' + image_url
            savepath = self.get_file_path(dirname, imageurl)
            spider.logger.info('%s -> %s', imageurl, savepath)
            try:
                # Download the image to the target path
                urllib.request.urlretrieve(imageurl, savepath)
            except Exception as e:
                spider.logger.error('Download failed %s: %s', imageurl, e)
        # Mark this image group as downloaded
        self.client.set(dirname, 1)
        return item

    def get_file_path(self, dirname, url):
        """Build a new file name: <sha1 of the URL>.<extension>."""
        image_guid = hashlib.sha1(to_bytes(url)).hexdigest()
        ext = url.split('.')[-1]
        # The storage directory is hard-coded; it could be moved into settings.py
        file_dir = './full/%s' % dirname
        os.makedirs(file_dir, exist_ok=True)
        return '%s/%s.%s' % (file_dir, image_guid, ext)
```
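`get_file_path` takes the extension as everything after the last `.` in the URL, so an address with a query string (for example `.../a.gif?x=1`) would be saved with the extension `gif?x=1`. A minimal sketch of a more defensive variant, using a hypothetical helper `build_save_path` (not part of the original project) that reads the extension from the URL path only and falls back to `.jpg`, which is an assumption, when the path has none:

```python
import hashlib
import os
from urllib.parse import urlparse


def build_save_path(base_dir, dirname, url):
    """Hypothetical helper: return <base_dir>/<dirname>/<sha1(url)><ext>.

    The extension comes from the URL path only, so query strings and
    fragments are ignored. URLs without an extension get '.jpg' (assumption).
    """
    guid = hashlib.sha1(url.encode('utf-8')).hexdigest()
    ext = os.path.splitext(urlparse(url).path)[1] or '.jpg'
    file_dir = os.path.join(base_dir, dirname)
    os.makedirs(file_dir, exist_ok=True)  # no error if it already exists
    return os.path.join(file_dir, guid + ext)
```

The hash is still computed over the full URL, so two URLs that differ only in their query string are kept as separate files.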

Write the spider

spiders/weibo_com.py

```python
# -*- coding: utf-8 -*-
import hashlib
import json
import os
import re
import time
from urllib.parse import unquote

import scrapy
from scrapy.selector import Selector
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support import expected_conditions as EC
from selenium.webdriver.support.ui import WebDriverWait

from weibo.items import WeiboItem


class WebComSpider(scrapy.Spider):
    name = 'weibo.com'
    allowed_domains = ['weibo.com']
    start_urls = ['https://weibo.com/']
    # Where the cookies are stored
    cookie_file_path = './cookies.json'
    # IDs of the bloggers to scrape
    uids = ['5139152205']

    def saveCookie(self, cookie):
        """Save the cookies to a file."""
        with open(self.cookie_file_path, 'w') as outputfile:
            json.dump(cookie, outputfile)

    def getCookie(self):
        """Load the cookies from the file (None if there is no file yet)."""
        if not os.path.exists(self.cookie_file_path):
            self.cookie = None
            return
        with open(self.cookie_file_path, 'r') as inputfile:
            self.cookie = json.load(inputfile)

    def start_requests(self):
        """Log in if needed, then request each blogger's image posts."""
        self.getCookie()
        # No saved cookies: simulate a login to obtain them
        if self.cookie is None:
            # Emulate a browser with PhantomJS (requires Selenium 3.x;
            # PhantomJS support was removed in Selenium 4)
            driver = webdriver.PhantomJS(executable_path='/data/software/phantomjs-2.1.1-linux-x86_64/bin/phantomjs')
            try:
                driver.get('https://weibo.com')
                # The window size matters: without it the elements below are not found
                driver.set_window_size(1920, 1080)
                # Wait until the "loginname" element is present before continuing
                userElen = WebDriverWait(driver, 10).until(
                    EC.presence_of_element_located((By.ID, 'loginname'))
                )
                # Pause to look less like a bot
                time.sleep(3)
                # Enter the login user name
                userElen.send_keys('your_username')
                time.sleep(5)
                pasElen = driver.find_element_by_xpath('//*[@id="pl_login_form"]/div/div[3]/div[2]/div/input')
                # Enter the login password
                pasElen.send_keys('your_password')
                time.sleep(1)
                sumbButton = driver.find_element_by_xpath('//*[@id="pl_login_form"]/div/div[3]/div[6]/a')
                # Log in
                sumbButton.click()
                # Once the page title contains "我的首页" (My Home), the login succeeded
                WebDriverWait(driver, 10).until(EC.title_contains(u'我的首页'))
                # Collect the cookies as {name: value} and save them for later runs
                self.cookie = {c['name']: c['value'] for c in driver.get_cookies()}
                self.saveCookie(self.cookie)
            except Exception as e:
                self.logger.error('Login failed: %s', e)
            finally:
                driver.quit()

        # Use the cookies to fetch the image posts of each blogger
        for uid in self.uids:
            # The blogger's posts that contain images; adjust to your needs
            url = 'https://weibo.com/u/%s?profile_ftype=1&is_pic=1#_0' % (uid,)
            yield scrapy.Request(url=url, cookies=self.cookie, callback=self.parse,
                                 meta={'item': {'uid': uid, 'page_num': 1}})
```
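The cookie handling above boils down to two steps: Selenium's `driver.get_cookies()` returns a list of dicts (each with at least `name` and `value` keys), which is flattened into the `{name: value}` dict that `scrapy.Request(cookies=...)` accepts, and that dict is round-tripped through a JSON file. A standalone sketch of those steps; `cookies_to_dict`, `save_cookie` and `load_cookie` are hypothetical names, not part of the original spider:

```python
import json
import os


def cookies_to_dict(selenium_cookies):
    """Flatten Selenium's list of cookie dicts into {name: value} for scrapy.Request."""
    return {c['name']: c['value'] for c in selenium_cookies}


def save_cookie(path, cookie):
    """Write the cookie dict to a JSON file."""
    with open(path, 'w') as f:
        json.dump(cookie, f)


def load_cookie(path):
    """Return the saved cookie dict, or None if no file exists yet."""
    if not os.path.exists(path):
        return None
    with open(path) as f:
        return json.load(f)
```

Because the spider only logs in when the cookie file is missing, deleting `cookies.json` is the simplest way to force a fresh login once the saved cookies expire.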

```python
    def parse(self, response):
        """Parse a profile page.

        Weibo pages through posts in two ways: full page loads and Ajax.
        After each page load, two more Ajax requests fetch the rest of that
        page's posts. The HTML is generated by JavaScript from strings embedded
        in the page, so XPath/CSS selectors cannot be used; the content is
        located with regular expressions instead.
        """
        title = response.xpath('//title/text()').get()
        self.logger.info('Page title: %s', title)
        selector = Selector(text=response.text)
        # Parameters needed for the Ajax requests
        pageId = selector.re(r"\$CONFIG\['page_id'\]='(\d+)'")[0]
        domain = selector.re(r"\$CONFIG\['domain'\]='(\d+)'")[0]
        # Extract images from the HTML of the page itself
        for itemObj in self.parse_content(selector):
            yield itemObj

        # Request the rest of the page via Ajax
        item = response.meta['item']
        ajaxUrl = ('https://weibo.com/p/aj/v6/mblog/mbloglist?ajwvr=6&domain=%s&profile_ftype=1'
                   '&is_pic=1&pagebar=%s&pl_name=Pl_Official_MyProfileFeed__21&id=%s'
                   '&script_uri=/u/%s&feed_type=0&page=%s&pre_page=1&domain_op=%s&__rnd=%s')
        for num in range(0, 2):
            rand = str(int(time.time() * 1000))
            url = ajaxUrl % (domain, num, pageId, item['uid'], item['page_num'], domain, rand)
            self.logger.info('Ajax request: %s', url)
            # Wait 10 s between requests (note: time.sleep() blocks Scrapy's event loop)
            time.sleep(10)
            yield scrapy.Request(url=url, cookies=self.cookie, callback=self.parse_ajax)

        # Move on to the next page (there is no explicit stop condition)
        item['page_num'] += 1
        nexpage = ('https://weibo.com/u/%s?is_search=0&visible=0&is_pic=1&is_tag=0'
                   '&profile_ftype=1&page=%s#feedtop' % (item['uid'], item['page_num']))
        yield scrapy.Request(url=nexpage, cookies=self.cookie, callback=self.parse,
                             meta={'item': item})
```
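The Ajax step can be isolated from Scrapy as a plain function: pull `page_id` and `domain` out of the `$CONFIG` assignments in the page source, then build one URL per `pagebar` value (0 and 1). A sketch under these assumptions: `build_ajax_urls` is a hypothetical name, the query parameters are copied from the spider's URL template, and `urlencode` replaces `%` formatting so that values are escaped (with `/` left unescaped to match the original `script_uri`):

```python
import re
import time
from urllib.parse import urlencode

PAGE_ID_RE = re.compile(r"\$CONFIG\['page_id'\]='(\d+)'")
DOMAIN_RE = re.compile(r"\$CONFIG\['domain'\]='(\d+)'")
AJAX_BASE = 'https://weibo.com/p/aj/v6/mblog/mbloglist'


def build_ajax_urls(html, uid, page_num):
    """Return the two Ajax URLs (pagebar=0 and pagebar=1) for one profile page.

    Raises AttributeError if the $CONFIG values are missing from html.
    """
    page_id = PAGE_ID_RE.search(html).group(1)
    domain = DOMAIN_RE.search(html).group(1)
    urls = []
    for pagebar in range(2):
        params = {
            'ajwvr': 6, 'domain': domain, 'profile_ftype': 1, 'is_pic': 1,
            'pagebar': pagebar, 'pl_name': 'Pl_Official_MyProfileFeed__21',
            'id': page_id, 'script_uri': '/u/%s' % uid, 'feed_type': 0,
            'page': page_num, 'pre_page': 1, 'domain_op': domain,
            # Millisecond timestamp, as in the spider
            '__rnd': int(time.time() * 1000),
        }
        urls.append(AJAX_BASE + '?' + urlencode(params, safe='/'))
    return urls
```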

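```python
    def parse_ajax(self, response):
        """Parse the Ajax response (JSON whose "data" field holds HTML)."""
        bodyObj = json.loads(response.text)
        selector = Selector(text=bodyObj['data'])
        for itemObj in self.parse_content(selector):
            yield itemObj

    def parse_content(self, selector):
        """Extract the image URLs."""
        # Value of clear_picSrc, up to the first '&', backslash or double quote
        pre = re.compile(r'clear_picSrc=(.*?)[&\\"]')
        imagelist = selector.re(pre)
        for row in imagelist:
            # The MD5 of each image group's (still URL-encoded) address list is
            # both its unique ID and its sub-directory name
            hs = hashlib.md5(row.encode('utf-8'))
            row = unquote(row)
            imgset = row.split(',')
            yield WeiboItem(image_urls=imgset, dirname=hs.hexdigest())
```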
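`parse_content` works on the raw page text rather than on parsed elements. Isolated from Scrapy, the same idea looks like this: find each `clear_picSrc=` value (stopping at `&`, a backslash, or a double quote), hash the still-encoded value with MD5 to name the group's directory, then URL-decode it and split on commas to get the individual, protocol-relative image URLs. `extract_image_groups` is a hypothetical name and the sample string is made up:

```python
import hashlib
import re
from urllib.parse import unquote

# Everything after "clear_picSrc=" up to the first '&', backslash or double quote
PIC_RE = re.compile(r'clear_picSrc=(.*?)[&\\"]')


def extract_image_groups(html):
    """Yield (dirname, [image URLs]) for every clear_picSrc value in html."""
    for raw in PIC_RE.findall(html):
        # Hash of the still-encoded value names the group's directory
        dirname = hashlib.md5(raw.encode('utf-8')).hexdigest()
        yield dirname, unquote(raw).split(',')


if __name__ == '__main__':
    # Made-up sample in the shape the spider expects
    sample = ('clear_picSrc=%2F%2Fwx1.sinaimg.cn%2Fmw690%2Fa.jpg,'
              '%2F%2Fwx2.sinaimg.cn%2Fmw690%2Fb.gif&pic_ids=1')
    for dirname, urls in extract_image_groups(sample):
        print(urls)  # ['//wx1.sinaimg.cn/mw690/a.jpg', '//wx2.sinaimg.cn/mw690/b.gif']
```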

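Edit settings.py

```python
ROBOTSTXT_OBEY = False  # do not obey robots.txt rules
```

Register the pipeline (the class defined in pipelines.py is `WeboPipeline`):

```python
ITEM_PIPELINES = {
    'weibo.pipelines.WeboPipeline': 300,
}
```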
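`parse` calls `time.sleep(10)` to slow the crawl down, but Scrapy runs all callbacks in a single Twisted event loop, so a sleep there stalls every other request as well. An alternative sketch is to let Scrapy space out requests with its built-in download settings; the values below are assumptions and have not been tuned against Weibo:

```python
# settings.py (sketch): let Scrapy throttle requests instead of time.sleep()
DOWNLOAD_DELAY = 10                 # seconds between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True     # wait 0.5x to 1.5x DOWNLOAD_DELAY (Scrapy's default)
CONCURRENT_REQUESTS_PER_DOMAIN = 1  # at most one request in flight per domain
```

With these settings in place, the `time.sleep()` calls in `parse` could be removed.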

Run the spider (the pipeline expects a Redis server on 127.0.0.1:6379):

```bash
scrapy crawl weibo.com
```