How Kafka assigns partitions and replicas

bin/kafka-topics.sh --create //operation type --zookeeper localhost:2181 //address of the ZooKeeper instance Kafka depends on --replication-factor 2 //replication factor --partitions 1 //number of partitions --topic test //topic name

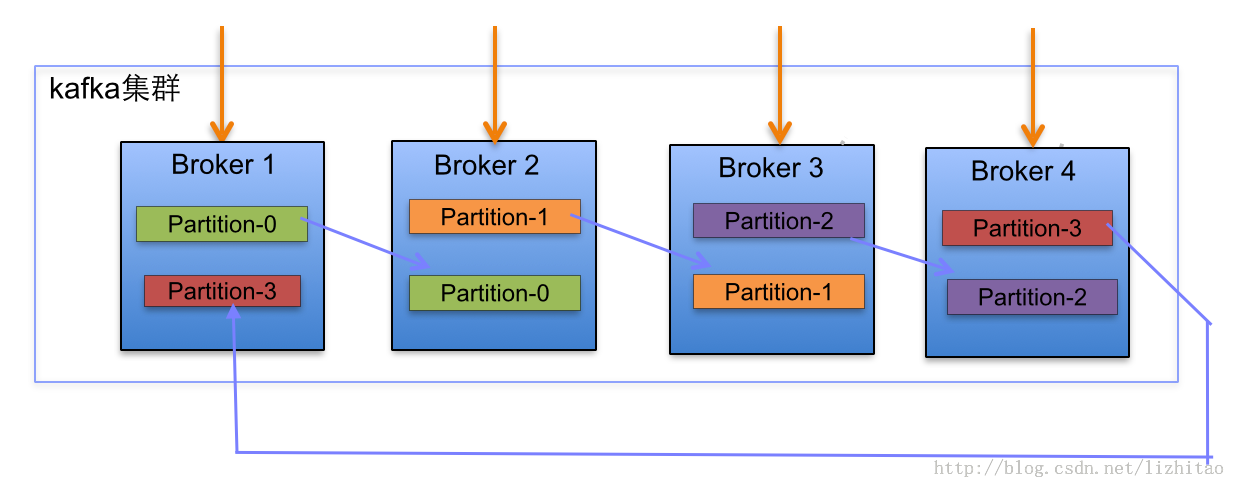

The figure below shows how partitions and replicas are assigned:

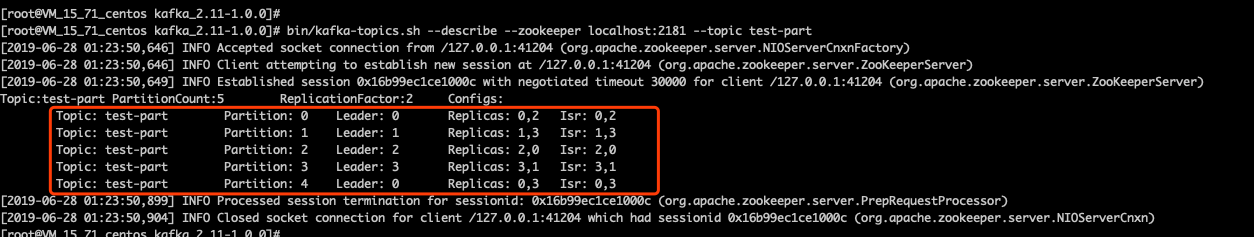

The figure alone may leave you confused. Since we already know the command, let's run it and see how the assignment actually works.

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 2 --partitions 5 --topic test-part

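The command creates topic test-part with 5 partitions and a replication factor of 2, meaning each partition has two replicas in total: the leader plus one follower copy. Describing topic test-part gives the following: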

[root@VM_15_71_centos kafka_2.11-1.0.0]# bin/kafka-topics.sh --describe --zookeeper localhost:2181 --topic test-part

[2019-06-28 01:23:50,646] INFO Accepted socket connection from /127.0.0.1:41204 (org.apache.zookeeper.server.NIOServerCnxnFactory)

[2019-06-28 01:23:50,646] INFO Client attempting to establish new session at /127.0.0.1:41204 (org.apache.zookeeper.server.ZooKeeperServer)

[2019-06-28 01:23:50,649] INFO Established session 0x16b99ec1ce1000c with negotiated timeout 30000 for client /127.0.0.1:41204 (org.apache.zookeeper.server.ZooKeeperServer)

Topic:test-part PartitionCount:5 ReplicationFactor:2 Configs:

Topic: test-part Partition: 0 Leader: 0 Replicas: 0,2 Isr: 0,2

Topic: test-part Partition: 1 Leader: 1 Replicas: 1,3 Isr: 1,3

Topic: test-part Partition: 2 Leader: 2 Replicas: 2,0 Isr: 2,0

Topic: test-part Partition: 3 Leader: 3 Replicas: 3,1 Isr: 3,1

Topic: test-part Partition: 4 Leader: 0 Replicas: 0,3 Isr: 0,3

[2019-06-28 01:23:50,899] INFO Processed session termination for sessionid: 0x16b99ec1ce1000c (org.apache.zookeeper.server.PrepRequestProcessor)

[2019-06-28 01:23:50,904] INFO Closed socket connection for client /127.0.0.1:41204 which had sessionid 0x16b99ec1ce1000c (org.apache.zookeeper.server.NIOServerCnxn)

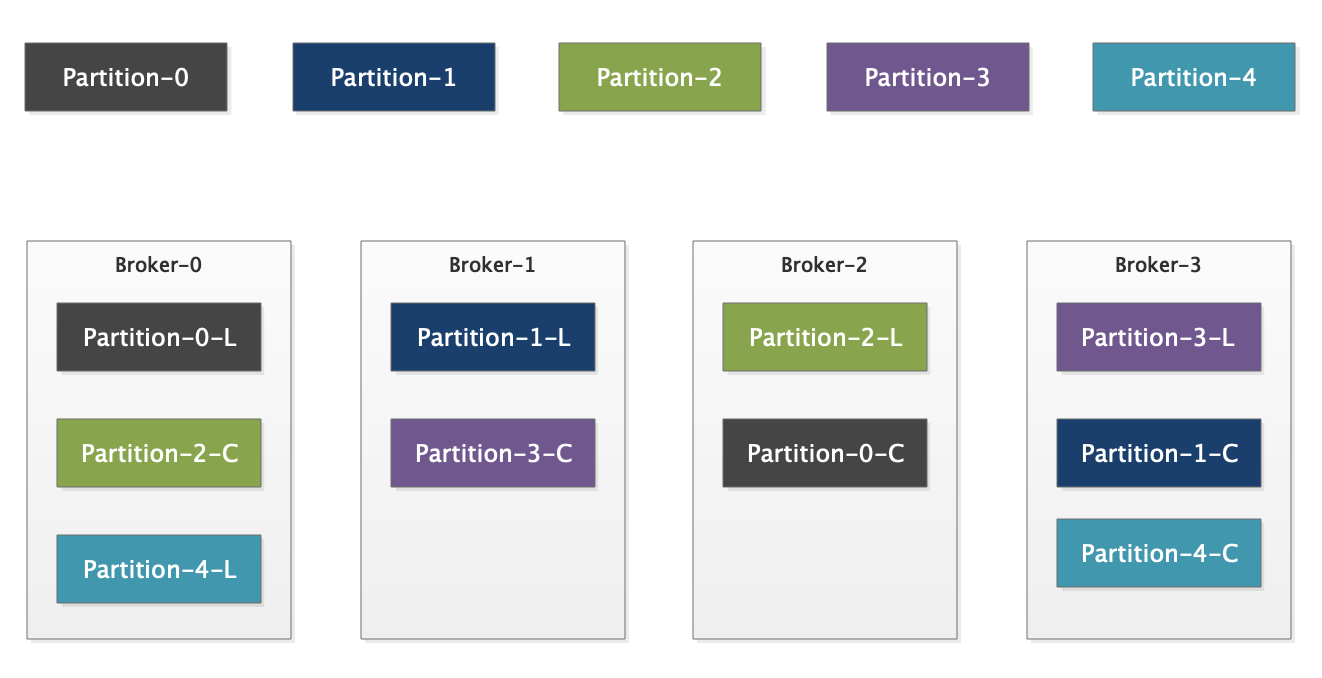

From the describe output above we get the assignment shown in the figure, where L marks the leader and C marks the replica (the follower copy).
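The same leader/replica mapping can be read directly off the describe output. The following is a minimal Python sketch, not part of any Kafka tooling: the helper name `parse_describe` is hypothetical, and the input is simply the five partition lines copied from the output above.

```python
import re

# Partition lines copied from the --describe output above.
DESCRIBE_OUTPUT = """\
Topic: test-part Partition: 0 Leader: 0 Replicas: 0,2 Isr: 0,2
Topic: test-part Partition: 1 Leader: 1 Replicas: 1,3 Isr: 1,3
Topic: test-part Partition: 2 Leader: 2 Replicas: 2,0 Isr: 2,0
Topic: test-part Partition: 3 Leader: 3 Replicas: 3,1 Isr: 3,1
Topic: test-part Partition: 4 Leader: 0 Replicas: 0,3 Isr: 0,3
"""

LINE_RE = re.compile(r"Partition:\s*(\d+)\s+Leader:\s*(\d+)\s+Replicas:\s*([\d,]+)")


def parse_describe(text):
    """Return {partition: (leader, [replica broker ids])} (hypothetical helper)."""
    result = {}
    for line in text.splitlines():
        match = LINE_RE.search(line)
        if match:
            partition = int(match.group(1))
            leader = int(match.group(2))
            replicas = [int(b) for b in match.group(3).split(",")]
            result[partition] = (leader, replicas)
    return result


assignment = parse_describe(DESCRIBE_OUTPUT)
for partition, (leader, replicas) in sorted(assignment.items()):
    # Every replica that is not the leader is a follower copy (C in the figure).
    followers = [b for b in replicas if b != leader]
    print(f"partition {partition}: L=broker {leader}, C=brokers {followers}")
```

Each partition lists exactly two brokers under Replicas, which matches the replication factor of 2 given at creation time.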

Next, I ran the following command:

bin/kafka-topics.sh --create --zookeeper localhost:2181 --replication-factor 2 --partitions 6 --topic test-part-1

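The resulting assignment is compared with that of the first topic below:

| | First run (5 partitions, replication factor 2): test-part | Second run (6 partitions, replication factor 2): test-part-1 |
| --- | --- | --- |
| Broker hosting partition 0 | 0 | 3 |
| Relationship between replica and leader | leader's broker.id + 2 = replica's broker.id | leader's broker.id + 2 = replica's broker.id |

Note that in the test-part output the offset wraps around the broker ids (leader 2 has its replica on broker 0, leader 3 on broker 1), and partition 4 (Replicas: 0,3) does not follow the +2 pattern.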
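The pattern in this comparison can be reproduced with a short simulation. The sketch below is modeled on the rack-unaware assignment logic in Kafka's `AdminUtils` source as I understand it; it is not the actual implementation. The function name `assign_replicas`, the broker list `[0, 1, 2, 3]` (inferred from the broker ids in the describe output), and the explicit `start_index` and `replica_shift` arguments are assumptions. Kafka picks both values at random, and they are pinned here to values consistent with what was observed above.

```python
def assign_replicas(n_partitions, replication_factor, brokers, start_index, replica_shift):
    """Simplified, rack-unaware assignment sketch (not Kafka's actual code).

    start_index and replica_shift are random in Kafka; they are explicit
    parameters here so the result is reproducible.
    """
    n = len(brokers)
    assignment = {}
    for partition in range(n_partitions):
        # After each full pass over the brokers, shift the followers by one more.
        if partition > 0 and partition % n == 0:
            replica_shift += 1
        # The first replica (preferred leader) is placed round-robin.
        first = (partition + start_index) % n
        replicas = [brokers[first]]
        for j in range(replication_factor - 1):
            # Followers are placed a fixed offset after the first replica.
            shift = 1 + (replica_shift + j) % (n - 1)
            replicas.append(brokers[(first + shift) % n])
        assignment[partition] = replicas
    return assignment


BROKERS = [0, 1, 2, 3]  # assumption: the broker ids seen in the describe output

# test-part: 5 partitions, partition 0 on broker 0 (assumed random values: 0 and 1)
test_part = assign_replicas(5, 2, BROKERS, start_index=0, replica_shift=1)
# test-part-1: 6 partitions, partition 0 on broker 3 (assumed random values: 3 and 1)
test_part_1 = assign_replicas(6, 2, BROKERS, start_index=3, replica_shift=1)

for name, result in (("test-part", test_part), ("test-part-1", test_part_1)):
    for partition, replicas in result.items():
        print(name, partition, replicas)
```

In this sketch the follower sits `1 + replica_shift % (n - 1)` brokers after the leader, modulo the number of brokers, so the "+2" relationship corresponds to `replica_shift = 1`. A different random shift would give a different offset, and the shift is bumped once the partition number wraps past the broker count, which is why partition 4 of test-part lands on brokers 0 and 3.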

Read the fucking manual and source code