[Translation] QEMU Internals: Big Picture Overview


Original article: http://blog.vmsplice.net/2011/03/qemu-internals-big-picture-overview.html

Original publication date: March 9, 2011

About the author: Stefan Hajnoczi is on Red Hat's virtualization team, where he develops and maintains QEMU's block layer, network subsystem, and tracing subsystem.

He currently works on multi-core device emulation in QEMU and host/guest file sharing using vsock. In the past he has worked on disk image formats, storage migration, and I/O performance optimization.

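QEMU Internals: Big Picture Overview

In the previous post, [Translation] QEMU Internals: Overall Architecture and Threading Model, I went straight into QEMU's threading model without describing what the overall architecture looks like.

This post gives an overview of QEMU's top-level architecture so that the threading model is easier to understand.

How a guest runs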

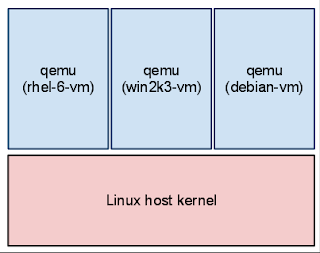

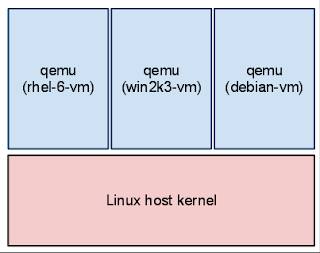

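A guest is created by running the qemu program, usually named qemu-kvm or kvm. On a host running 3 virtual machines you will see 3 corresponding qemu processes:

When a guest shuts down, the qemu process exits. For convenience, a reboot is performed without restarting the qemu process, although shutting down and then starting qemu again works just as well.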

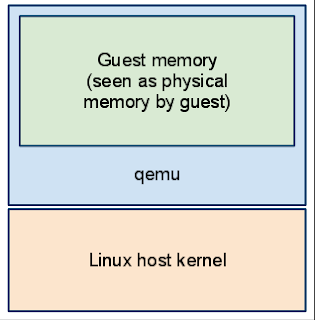

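Guest RAM

Guest RAM is allocated when qemu starts up. The -mem-path option makes it possible to use file-backed memory, for example on hugetlbfs. Either way, the RAM is mapped into the qemu process's address space, and from the guest's point of view it is the guest's physical memory.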
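The idea that guest RAM is simply a region of the qemu process's address space can be sketched in C. This is a minimal illustration under stated assumptions, not QEMU's implementation: `struct ram_block`, `ram_block_alloc()` and `gpa_to_hva()` are hypothetical names, the memory is anonymous rather than file-backed (with -mem-path it would instead be a mapping of a file, for example on hugetlbfs), and the guest-physical base address is arbitrary.

```c
#define _DEFAULT_SOURCE
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <sys/mman.h>

/* Hypothetical model of one guest RAM block: the guest-physical range
 * [gpa_base, gpa_base + size) is backed by host memory at hva. */
struct ram_block {
    uint64_t gpa_base;
    size_t size;
    uint8_t *hva;
};

/* Allocate guest RAM as anonymous memory in this process.
 * Returns 0 on success, -1 if mmap() fails. */
static int ram_block_alloc(struct ram_block *rb, uint64_t gpa_base, size_t size)
{
    void *p = mmap(NULL, size, PROT_READ | PROT_WRITE,
                   MAP_PRIVATE | MAP_ANONYMOUS, -1, 0);
    if (p == MAP_FAILED)
        return -1;
    rb->gpa_base = gpa_base;
    rb->size = size;
    rb->hva = p;
    return 0;
}

/* Translate a guest-physical address into a host pointer,
 * or return NULL if the address is outside this block. */
static uint8_t *gpa_to_hva(const struct ram_block *rb, uint64_t gpa)
{
    if (gpa < rb->gpa_base || gpa - rb->gpa_base >= rb->size)
        return NULL;
    return rb->hva + (gpa - rb->gpa_base);
}

static void ram_block_free(struct ram_block *rb)
{
    munmap(rb->hva, rb->size);
    rb->hva = NULL;
}
```

Under KVM, qemu would additionally register such a host range with the kernel (the KVM_SET_USER_MEMORY_REGION ioctl) so that the guest sees it as physical memory.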

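QEMU supports both big-endian and little-endian target architectures, so QEMU code must access guest memory with care. Endian conversion is performed by helper functions instead of by accessing guest RAM directly. This makes it possible to run a guest whose endianness differs from the host's.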
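Why such helpers are needed can be shown with a sketch of 32-bit loads and stores for either byte order. The function names are hypothetical (QEMU has its own family of helpers for this); assembling the value byte by byte gives the same result whether the host is little- or big-endian.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical helpers: access a 32-bit value stored in guest RAM in a
 * given byte order, independent of the host's own byte order. */
static uint32_t guest_ld32_le(const uint8_t *p)
{
    return (uint32_t)p[0] | (uint32_t)p[1] << 8 |
           (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
}

static uint32_t guest_ld32_be(const uint8_t *p)
{
    return (uint32_t)p[0] << 24 | (uint32_t)p[1] << 16 |
           (uint32_t)p[2] << 8 | (uint32_t)p[3];
}

static void guest_st32_le(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)v;
    p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16);
    p[3] = (uint8_t)(v >> 24);
}

static void guest_st32_be(uint8_t *p, uint32_t v)
{
    p[0] = (uint8_t)(v >> 24);
    p[1] = (uint8_t)(v >> 16);
    p[2] = (uint8_t)(v >> 8);
    p[3] = (uint8_t)v;
}
```

A little-endian guest storing 0x11223344 leaves the bytes 44 33 22 11 in its RAM; reading them back with the wrong helper yields 0x44332211, which is exactly the bug that direct pointer casts would introduce on a mismatched host.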

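KVM virtualization

KVM is a virtualization feature of the Linux kernel that lets a program like qemu safely execute guest code directly on the host CPU. This is only possible when the host CPU supports it. KVM is currently supported on x86, ARMv8, ppc, s390, and MIPS CPUs.

To execute guest code with KVM, the qemu process opens the /dev/kvm device and issues the KVM_RUN ioctl. The KVM kernel module uses the hardware virtualization extensions provided by Intel and AMD CPUs to execute guest code directly. When the guest accesses a hardware device register, halts the guest CPU, or performs other special operations, KVM hands control back to qemu. At that point qemu can emulate the desired outcome of the operation or, in the case of a halted guest CPU, simply wait for the next guest interrupt.

The basic flow of a guest CPU is as follows: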

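```c
/* Sketch: includes, error handling and guest memory setup omitted */
int kvm  = open("/dev/kvm", O_RDWR);              /* system-wide KVM handle */
int vm   = ioctl(kvm, KVM_CREATE_VM, 0);          /* one fd per VM */
int vcpu = ioctl(vm, KVM_CREATE_VCPU, 0);         /* one fd per vcpu */
int size = ioctl(kvm, KVM_GET_VCPU_MMAP_SIZE, 0);
struct kvm_run *run = mmap(NULL, size, PROT_READ | PROT_WRITE,
                           MAP_SHARED, vcpu, 0);  /* shared exit info */

for (;;) {
    ioctl(vcpu, KVM_RUN, 0);          /* run guest code until it exits */
    switch (run->exit_reason) {
    case KVM_EXIT_IO:  /* ... emulate the I/O port access ... */ break;
    case KVM_EXIT_HLT: /* ... wait for the next interrupt ... */ break;
    }
}
```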
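To make the control flow of this loop executable without /dev/kvm, the sketch below replaces ioctl(KVM_RUN) with a hypothetical `fake_kvm_run()` that replays a scripted list of exits. The `EXIT_*` values are stand-ins for the real `KVM_EXIT_*` constants from `<linux/kvm.h>`, and `EXIT_SHUTDOWN` is used here only to end the demo once the script runs out.

```c
#include <assert.h>
#include <stddef.h>

/* Stand-ins for the KVM_EXIT_* exit reasons. */
enum exit_reason { EXIT_IO, EXIT_HLT, EXIT_SHUTDOWN };

struct fake_vcpu {
    const enum exit_reason *script; /* exits to replay, in order */
    size_t pos, len;
    int io_emulated;                /* I/O exits handled */
    int halts;                      /* times the vcpu waited for an interrupt */
};

/* Hypothetical replacement for ioctl(vcpu_fd, KVM_RUN, 0): "runs" the
 * guest until the next scripted exit and returns its reason. */
static enum exit_reason fake_kvm_run(struct fake_vcpu *v)
{
    return v->pos < v->len ? v->script[v->pos++] : EXIT_SHUTDOWN;
}

/* Enter the guest, handle whatever caused the exit, and go round again. */
static void vcpu_loop(struct fake_vcpu *v)
{
    for (;;) {
        switch (fake_kvm_run(v)) {
        case EXIT_IO:
            v->io_emulated++;   /* qemu would emulate the device access */
            break;
        case EXIT_HLT:
            v->halts++;         /* qemu would block until the next interrupt */
            break;
        case EXIT_SHUTDOWN:
            return;
        }
    }
}
```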

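The host's view of a running guest

The host kernel schedules qemu like any other process. Multiple guests run side by side without any knowledge of each other. Applications such as Firefox or Apache also compete with qemu for host resources, although resource controls can be used to isolate qemu and give it higher priority.

Because qemu emulates a complete virtual machine inside a userspace process, the host has no direct visibility into which processes are running inside the guest. Put another way, qemu provides guest RAM, the ability to execute guest code, and emulated hardware devices, so any operating system (or none at all) can run inside the guest. The host cannot peek inside an arbitrary guest.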

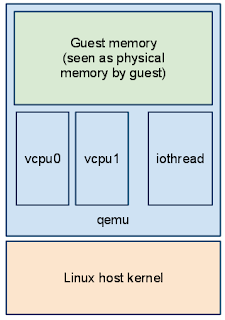

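A guest has one vcpu thread per virtual CPU. A dedicated iothread runs a select(2) event loop to process I/O such as network packets and disk I/O completion. The details were covered in the previous post, [Translation] QEMU Internals: Overall Architecture and Threading Model.

The following diagram shows the qemu process as seen from the host: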
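The thread layout (one thread per vcpu plus an iothread running a select(2) loop) can be sketched with POSIX threads. This is a toy under heavy assumptions: the vcpu threads do not run guest code, each one just writes a byte to a hypothetical notification pipe to stand for an I/O request, and the calling thread plays the role of the iothread. Build with -pthread.

```c
#include <assert.h>
#include <pthread.h>
#include <sys/select.h>
#include <unistd.h>

#define MAX_VCPUS 8

/* Hypothetical notification channel: vcpu threads write, iothread reads. */
static int notify_pipe[2];

/* vcpu thread: in QEMU this would loop on ioctl(KVM_RUN); here it
 * only "submits" one I/O request by writing a single byte. */
static void *vcpu_thread(void *arg)
{
    char id = (char)(long)arg;
    if (write(notify_pipe[1], &id, 1) != 1)
        return (void *)1;
    return NULL;
}

/* iothread: a select(2) event loop that sleeps until the pipe is
 * readable, then drains what arrived. Stops after `expected` events
 * so the demo terminates. */
static int iothread_loop(int expected)
{
    int handled = 0;
    while (handled < expected) {
        fd_set rfds;
        FD_ZERO(&rfds);
        FD_SET(notify_pipe[0], &rfds);
        if (select(notify_pipe[0] + 1, &rfds, NULL, NULL, NULL) < 0)
            return -1;
        if (FD_ISSET(notify_pipe[0], &rfds)) {
            char buf[MAX_VCPUS];
            ssize_t n = read(notify_pipe[0], buf, sizeof(buf));
            if (n > 0)
                handled += (int)n;
        }
    }
    return handled;
}

/* Start one thread per virtual CPU and run the event loop in the
 * calling thread. Returns the number of I/O events handled, or -1. */
static int run_guest(int nvcpus)
{
    pthread_t tids[MAX_VCPUS];
    int created = 0;

    if (nvcpus < 1 || nvcpus > MAX_VCPUS || pipe(notify_pipe) < 0)
        return -1;
    for (long i = 0; i < nvcpus; i++)
        if (pthread_create(&tids[created], NULL, vcpu_thread, (void *)i) == 0)
            created++;

    int handled = iothread_loop(created);
    for (int i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    close(notify_pipe[0]);
    close(notify_pipe[1]);
    return handled;
}
```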

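Further information

I hope this post has made you familiar with the qemu and KVM architecture.

Here are two presentations on KVM architecture that go into more detail:

- Jan Kiszka's Linux Kongress 2010 presentation: Architecture of the Kernel-based Virtual Machine (KVM)

- My own KVM Architecture Overview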

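===Selected Comments===

->Who opens the /dev/kvm device: a vcpu thread or the iothread?

It is opened by the main thread when qemu starts. Note that each vcpu has its own file descriptor, whereas /dev/kvm (and the VM file descriptor obtained from it with KVM_CREATE_VM) is global rather than belonging to any particular vcpu.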

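->How does a vcpu thread create the illusion of a CPU? Does it have to save and restore CPU context on context switches? Or does it require hardware virtualization support?

Yes, hardware support is required. The KVM module's ioctl interface lets userspace get and set the vcpu register state. Just as a physical CPU starts with an initial register state when the machine powers on, QEMU initializes the vcpus on reset.

KVM requires hardware support, called VMX on Intel CPUs and SVM on AMD CPUs. The two are not compatible, so KVM contains separate code for each.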
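As an illustration of "initial register state", the sketch below puts a hypothetical, trimmed-down x86 register set into the architectural reset state: CS selector 0xF000 with base 0xFFFF0000, RIP 0xFFF0 and RFLAGS 0x2, so the first instruction is fetched from 0xFFFFFFF0. A real VMM would write these values through struct kvm_regs / struct kvm_sregs and the KVM_SET_REGS / KVM_SET_SREGS ioctls; the struct and function names here are assumptions.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical subset of an x86 vcpu's register file. */
struct vcpu_regs {
    uint64_t rip;
    uint64_t rflags;
    uint16_t cs_selector;
    uint64_t cs_base;
};

/* Put the vcpu into the x86 power-on/reset state. */
static void vcpu_reset(struct vcpu_regs *r)
{
    r->rip = 0xfff0;
    r->rflags = 0x2;          /* bit 1 is reserved and always set */
    r->cs_selector = 0xf000;
    r->cs_base = 0xffff0000;
}

/* Linear address of the first instruction fetched after reset. */
static uint64_t vcpu_first_fetch(const struct vcpu_regs *r)
{
    return r->cs_base + r->rip;
}
```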

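->How can the hypervisor and the guest communicate directly?

It depends on what kind of interaction you need. virtio-serial can be used for any kind of guest/host communication.

The qemu guest agent is built on top of virtio-serial and provides a JSON-based RPC API.

It lets the host run a set of commands inside the guest (for example, querying IP addresses or backing up applications).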
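The guest agent protocol exchanges JSON objects over the virtio-serial channel: a request names the command under an "execute" key, for example {"execute": "guest-ping"}, and a successful reply carries a "return" key. The helper below is a hypothetical sketch of the host side building such a request line; it does not implement JSON escaping, so it rejects command names containing quotes or backslashes.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Build one guest agent request line, e.g. {"execute": "guest-ping"}.
 * Returns the number of bytes written (excluding the NUL), or -1 if
 * the command name would need JSON escaping or buf is too small. */
static int qga_request(char *buf, size_t len, const char *cmd)
{
    if (strpbrk(cmd, "\"\\") != NULL)
        return -1;              /* escaping is not implemented here */
    int n = snprintf(buf, len, "{\"execute\": \"%s\"}\n", cmd);
    if (n < 0 || (size_t)n >= len)
        return -1;              /* output error or truncation */
    return n;
}
```

Commands such as guest-network-get-interfaces (query the guest's IP addresses) or guest-fsfreeze-freeze (quiesce file systems, e.g. before a backup) are sent the same way.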

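->Could you explain the I/O flow for an application running inside a guest?

There are several different code paths, depending on whether KVM or TCG is used, whether the access is MMIO or PIO, and whether ioeventfd is enabled.

The basic flow is that a guest memory or I/O read/write "traps" into the KVM kernel module. KVM then returns from the KVM_RUN ioctl and hands the exit over to QEMU for handling.

QEMU finds the emulated device responsible for that address and calls its handler function. When the device has finished, QEMU re-enters the guest with the KVM_RUN ioctl.

Without passthrough, the guest sees an emulated NIC, and neither KVM nor QEMU reads or writes the physical NIC directly. Instead, they hand packets to the Linux network stack (for example, via a tap device).

virtio devices (network, storage, and so on) use a number of optimizations, but the basic principle is still the emulation of a "real" device.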
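The device lookup step in this flow (find the emulated device that owns the accessed address, then call its handler) can be sketched as a table search. QEMU itself models this with its MemoryRegion API and a faster lookup structure; the struct, the linear scan and the function names below are hypothetical simplifications.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical emulated device claiming guest-physical [base, base + size). */
struct mmio_device {
    const char *name;
    uint64_t base, size;
    void (*write_fn)(struct mmio_device *dev, uint64_t offset, uint32_t val);
    uint32_t last_val;   /* demo state: last value written */
    uint64_t last_off;   /* demo state: offset of last write */
};

/* Example handler that just records the access. */
static void record_write(struct mmio_device *dev, uint64_t offset, uint32_t val)
{
    dev->last_off = offset;
    dev->last_val = val;
}

/* Handle one MMIO write exit: find the device whose range covers addr
 * and call its handler with the offset into that range.
 * Returns 0 if a device handled it, -1 if nothing is mapped there. */
static int dispatch_mmio_write(struct mmio_device *devs, size_t ndevs,
                               uint64_t addr, uint32_t val)
{
    for (size_t i = 0; i < ndevs; i++) {
        struct mmio_device *d = &devs[i];
        if (addr >= d->base && addr - d->base < d->size) {
            d->write_fn(d, addr - d->base, val);
            return 0;
        }
    }
    return -1;
}
```

After the handler returns, the vcpu thread would issue KVM_RUN again to re-enter the guest.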

->How does the KVM kernel module catch a virtqueue kick?

The ioeventfd is registered as a device on KVM's I/O bus, and KVM notifies it through ioeventfd_write().

The call trace is:

```
vmx_handle_exit with EXIT_REASON_IO_INSTRUCTION
  --> handle_io
  --> emulate_instruction
  --> x86_emulate_instruction
  --> x86_emulate_insn
  --> writeback
  --> segmented_write
  --> emulator_write_emulated
  --> emulator_read_write_onepage
  --> vcpu_mmio_write
  --> ioeventfd_write
```

This shows how the ioeventfd gets signaled when the guest kicks the host.

The original text follows:
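The ioeventfd mechanism relies on the Linux eventfd(2) counter. The sketch below shows its semantics from userspace: each kick adds 1 to the counter, and a read returns the accumulated value and resets it, so several kicks between two reads arrive as a single notification. With a real ioeventfd the increment is done by KVM inside the kernel (via ioeventfd_write()) and the read happens in QEMU's event loop; `guest_kick()`, `iothread_consume()` and `kick_and_drain()` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>
#include <sys/eventfd.h>
#include <unistd.h>

/* Stand-in for the kernel side: signal the eventfd once per kick. */
static int guest_kick(int efd)
{
    return eventfd_write(efd, 1);
}

/* iothread side: read and reset the counter. Returns the number of
 * kicks coalesced since the last read, or -1 if there were none
 * (the fd is non-blocking) or the read failed. */
static long iothread_consume(int efd)
{
    eventfd_t count;
    if (eventfd_read(efd, &count) < 0)
        return -1;
    return (long)count;
}

/* Kick nkicks times, then let the "iothread" drain the eventfd once. */
static long kick_and_drain(int nkicks)
{
    int efd = eventfd(0, EFD_NONBLOCK);
    if (efd < 0)
        return -1;
    for (int i = 0; i < nkicks; i++)
        if (guest_kick(efd) < 0)
            break;
    long seen = iothread_consume(efd);
    close(efd);
    return seen;
}
```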


QEMU Internals: Big picture overview


The story of a guest

When a guest shuts down the qemu process exits. Reboot can be performed without restarting the qemu process for convenience although it would be fine to shut down and then start qemu again.


Guest RAM

QEMU supports both big-endian and little-endian target architectures so guest memory needs to be accessed with care from QEMU code. Endian conversion is performed by helper functions instead of accessing guest RAM directly. This makes it possible to run a target with a different endianness from the host.


KVM virtualization

In order to execute guest code using KVM, the qemu process opens /dev/kvm and issues the KVM_RUN ioctl. The KVM kernel module uses hardware virtualization extensions found on modern Intel and AMD CPUs to directly execute guest code. When the guest accesses a hardware device register, halts the guest CPU, or performs other special operations, KVM exits back to qemu. At that point qemu can emulate the desired outcome of the operation or simply wait for the next guest interrupt in the case of a halted guest CPU.

The basic flow of a guest CPU is as follows:

```
open("/dev/kvm")
ioctl(KVM_CREATE_VM)
ioctl(KVM_CREATE_VCPU)
for (;;) {
    ioctl(KVM_RUN)
    switch (exit_reason) {
    case KVM_EXIT_IO:  /* ... */
    case KVM_EXIT_HLT: /* ... */
    }
}
```


The host's view of a running guest

Since qemu system emulation provides a full virtual machine inside the qemu userspace process, the details of what processes are running inside the guest are not directly visible from the host. One way of understanding this is that qemu provides a slab of guest RAM, the ability to execute guest code, and emulated hardware devices; therefore any operating system (or no operating system at all) can run inside the guest. There is no ability for the host to peek inside an arbitrary guest.

Guests have a so-called vcpu thread per virtual CPU. A dedicated iothread runs a select(2) event loop to process I/O such as network packets and disk I/O completion. For more details and possible alternate configuration, see the threading model post.

The following diagram illustrates the qemu process as seen from the host:


Further information

Here are two presentations on KVM architecture that cover similar areas if you are interested in reading more:

- Jan Kiszka's Linux Kongress 2010 presentation on the Architecture of the Kernel-based Virtual Machine (KVM). Very good material.

- My own attempt at presenting a KVM Architecture Overview from 2010.