Connecting Filebeat to Kafka

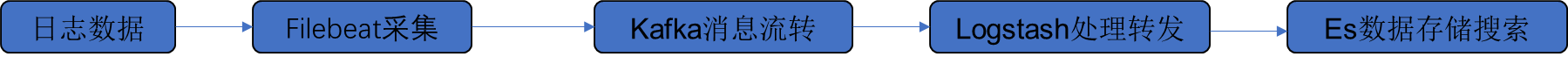

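Log collection is usually done with the ELK stack. To improve collection performance and efficiency, Filebeat collects the logs and sends them to Kafka, and Logstash consumes them from Kafka and stores them in Elasticsearch. This post is mainly a record of the setup, so I don't forget it.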

1. Kafka cluster deployment

Just deploy the cluster the standard way.

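Create two topics, one for each Filebeat input:

```shell
./bin/kafka-topics.sh --create --zookeeper 127.0.0.1:3000 --replication-factor 2 --partitions 3 --topic topic-test
./bin/kafka-topics.sh --create --zookeeper 127.0.0.1:3000 --replication-factor 2 --partitions 3 --topic topic-test1
```

On Kafka 3.0 and later, `kafka-topics.sh` no longer accepts `--zookeeper`; pass `--bootstrap-server` with a broker address instead.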
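With three brokers (kafka01 to kafka03 in the Filebeat output below), `--partitions 3 --replication-factor 2` works out to six partition replicas, two per broker, and every partition keeps a copy if any single broker goes down. The Python sketch below illustrates that arithmetic with a simplified ring placement. This is an assumption for illustration and not Kafka's actual assignment algorithm, which among other things randomizes the starting broker. `place_replicas` is a hypothetical helper.

```python
"""Toy model: 3 partitions x replication factor 2 on 3 brokers.

Simplified ring placement for illustration; NOT Kafka's real assignment
algorithm, which also randomizes the starting broker.
"""
from collections import Counter


def place_replicas(brokers, partitions, replication_factor):
    """Return {partition: [leader, follower, ...]} using a simple ring."""
    if replication_factor > len(brokers):
        # Kafka also refuses a replication factor larger than the broker count.
        raise ValueError("replication factor exceeds number of brokers")
    return {
        p: [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        for p in range(partitions)
    }


if __name__ == "__main__":
    brokers = ["kafka01", "kafka02", "kafka03"]
    layout = place_replicas(brokers, partitions=3, replication_factor=2)
    for p, replicas in layout.items():
        print(f"partition {p}: leader={replicas[0]} follower={replicas[1]}")
    # 3 partitions * 2 replicas / 3 brokers = 2 replicas per broker.
    print(Counter(b for replicas in layout.values() for b in replicas))
    # Losing any one broker still leaves a copy of every partition.
    for down in brokers:
        assert all(any(b != down for b in r) for r in layout.values())
```

A replication factor of 2 is the smallest value that tolerates one broker failure; it cannot exceed the number of brokers.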

2. Filebeat configuration

```yaml
filebeat.inputs:
- type: log
  enabled: true
  paths:
    - /data/logs/catalina.out.*
  # Custom field used below to pick the Kafka topic for this input.
  fields:
    log_topic: topic-test
  exclude_files: ['\.gz$']
  # A line starting with a yyyy-MM-dd date begins a new event;
  # other lines (e.g. stack traces) are appended to the previous one.
  multiline.pattern: '^\d{4}\-\d{2}-\d{2}'
  multiline.negate: true
  multiline.match: after
  ignore_older: 5m
- type: log
  enabled: true
  paths:
    - /data/logs/catalina.out.*
  fields:
    log_topic: topic-test1
  exclude_files: ['\.gz$']
  multiline.pattern: '^\d{4}\-\d{2}-\d{2}'
  multiline.negate: true
  multiline.match: after
  ignore_older: 5m

processors:
  - drop_fields:
      fields: ["agent", "@version", "log", "input", "ecs", "version"]

filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false

setup.template.settings:
  index.number_of_shards: 3

filebeat.registry.path: /data/filebeat/registry

output.kafka:
  hosts: ["kafka01:8101", "kafka02:8102", "kafka03:8103"]
  # Route each event to the topic named in its fields.log_topic.
  topic: '%{[fields.log_topic]}'
  partition.round_robin:
    reachable_only: true
  required_acks: 1
  compression: gzip
  max_message_bytes: 1000000
  worker: 2
```
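To make the multiline and topic settings concrete, here is a minimal Python sketch. It assumes the documented meaning of `negate: true` with `match: after`: a new event begins at every line that starts with a `yyyy-MM-dd` date, and any other line (such as a Java stack-trace line) is appended to the event before it. `group_multiline` and `resolve_topic` are hypothetical helpers that mimic this behavior, not Filebeat code, and the sample log lines are made up.

```python
r"""Sketch of the Filebeat multiline and topic settings (illustration only).

Assumed semantics of multiline.pattern '^\d{4}\-\d{2}-\d{2}' with
negate: true and match: after: a date-prefixed line starts a new event and
every other line is appended to the event before it.
"""
import re

# Same regex as multiline.pattern in filebeat.yml.
MULTILINE_PATTERN = re.compile(r"^\d{4}\-\d{2}-\d{2}")
# Matches format-string references such as %{[fields.log_topic]}.
FIELD_REF = re.compile(r"%\{\[([^\]]+)\]\}")


def group_multiline(lines):
    """Join continuation lines onto the preceding date-prefixed line."""
    events, current = [], []
    for line in lines:
        if MULTILINE_PATTERN.match(line) and current:
            # A new date-prefixed line closes the event collected so far.
            events.append("\n".join(current))
            current = []
        current.append(line)
    if current:
        events.append("\n".join(current))
    return events


def resolve_topic(template, event):
    """Replace each %{[a.b]} reference with the value at event["a"]["b"]."""
    def lookup(match):
        value = event
        for key in match.group(1).split("."):
            value = value[key]
        return str(value)
    return FIELD_REF.sub(lookup, template)


if __name__ == "__main__":
    raw = [
        "2024-03-05 10:00:01 INFO  Server startup",
        "2024-03-05 10:00:02 ERROR Request failed",
        "java.lang.NullPointerException",
        "    at com.example.Foo.bar(Foo.java:42)",
        "2024-03-05 10:00:03 INFO  Recovered",
    ]
    for message in group_multiline(raw):
        # fields.log_topic comes from the input's "fields" setting.
        event = {"message": message, "fields": {"log_topic": "topic-test"}}
        print(resolve_topic("%{[fields.log_topic]}", event), "<-", repr(message))
```

With these sample lines, the exception and its stack trace stay together in one Kafka message instead of being split into three, which is why the multiline options are set for `catalina.out`.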

3. Logstash configuration

```conf
input {
  kafka {
    enable_auto_commit => true
    auto_commit_interval_ms => "500"
    # Filebeat's Kafka output writes events as JSON.
    codec => "json"
    bootstrap_servers => "kafka01:8101,kafka02:8102,kafka03:8103"
    group_id => "group-01"
    # With no committed offset yet, start from new messages only.
    auto_offset_reset => "latest"
    topics => ["topic-test"]
    # Matches the 3 partitions of topic-test.
    consumer_threads => 3
  }
}

output {
  elasticsearch {
    #action => "index"
    hosts => ["127.0.0.1:8200"]
    index => "topic-test-%{+YYYY.MM.dd}"
    user => "xxxx"
    password => "xxxx"
  }
}
```
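One detail worth knowing about `index => "topic-test-%{+YYYY.MM.dd}"`: Logstash formats the date from the event's `@timestamp` in UTC, not in the server's local time. The Python sketch below mimics that with a hypothetical `daily_index` helper, assuming a local timezone of UTC+8. It shows that events stamped before 08:00 local time land in the previous day's index.

```python
"""Sketch: which daily index a Logstash event lands in (illustration only).

Assumes index => "<prefix>-%{+YYYY.MM.dd}" is expanded from @timestamp in UTC.
"""
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, timestamp: datetime) -> str:
    """Mimic "<prefix>-%{+YYYY.MM.dd}" for a timezone-aware timestamp."""
    utc = timestamp.astimezone(timezone.utc)
    return f"{prefix}-{utc:%Y.%m.%d}"


if __name__ == "__main__":
    local_tz = timezone(timedelta(hours=8))  # assumed server timezone (UTC+8)
    samples = (
        datetime(2024, 3, 6, 7, 59, tzinfo=local_tz),
        datetime(2024, 3, 6, 8, 0, tzinfo=local_tz),
    )
    for local in samples:
        print(local.isoformat(), "->", daily_index("topic-test", local))
```

If per-day indices need to follow local dates instead, the index date has to be derived from a local-time field rather than from `@timestamp` directly.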
