k8s study notes

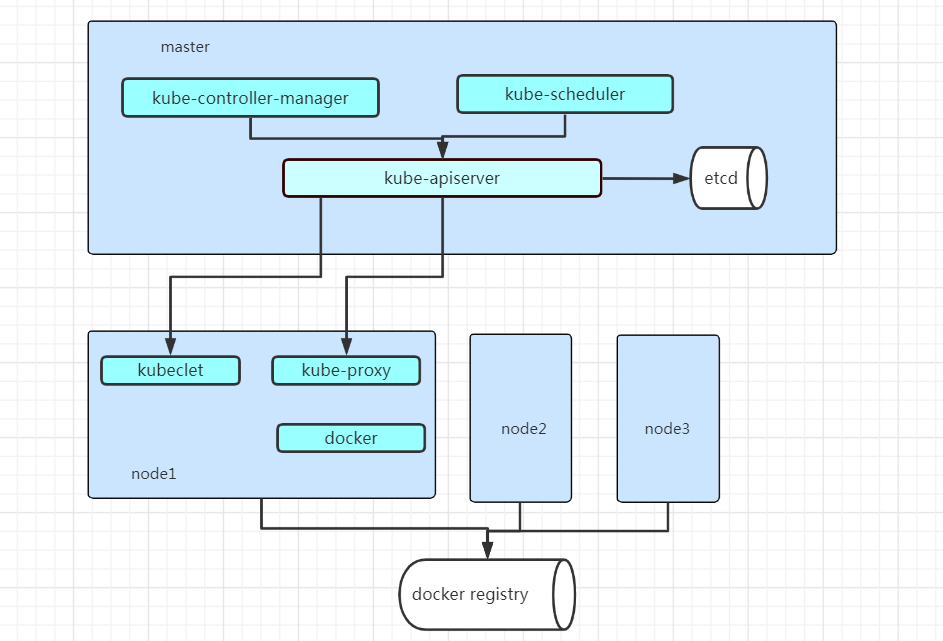

1. Basic architecture

Master node: apiserver, scheduler, controller-manager

Worker node: kubelet, kube-proxy, docker

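Master node components:

API Server: the entry point for every request to k8s. It receives all requests, whether from a UI or from CLI tools such as kubectl, and based on each request it gets the other components to do the work. In practice those components watch the API Server for changes and act on them.

Scheduler: the scheduler for all Nodes. When a user deploys a service, the Scheduler picks the most suitable Node (server) to run it.

Controller Manager: watches everything deployed on the Nodes. Its controllers monitor the state of the services running on the Nodes and correct it. For example, if the user asks for 2 replicas of service A and one of them dies, a controller immediately creates a replacement, and the Scheduler picks a Node for it.

etcd: the storage service of k8s. etcd holds the cluster's key configuration and the user configuration. Only the API Server reads and writes etcd directly. Every other component must go through the API Server's interface.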

2. The three kinds of network in k8s

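The node network, the Service network and the Pod network. In the lab cluster used in these notes they appear as follows:

- the node address 192.168.149.145
- Service ClusterIPs such as 10.96.96.96
- Pod IPs such as 10.244.0.5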
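The three networks can be told apart by address range. The sketch below uses Python's `ipaddress` module. The CIDRs are assumptions that are not stated in these notes: flannel's default pod network 10.244.0.0/16 and kubeadm's default Service range 10.96.0.0/12. They were chosen because they agree with the addresses seen here. The node range 192.168.149.0/24 is inferred from the NFS exports later in the notes.

```python
import ipaddress

# Assumed CIDRs for this lab cluster (not stated in the notes):
NETWORKS = {
    "node": ipaddress.ip_network("192.168.149.0/24"),  # host IPs, e.g. 192.168.149.145
    "pod": ipaddress.ip_network("10.244.0.0/16"),       # flannel's default pod CIDR
    "service": ipaddress.ip_network("10.96.0.0/12"),    # kubeadm's default Service CIDR
}


def classify(ip: str) -> str:
    """Return which of the three k8s networks an address falls into."""
    addr = ipaddress.ip_address(ip)
    for name, net in NETWORKS.items():
        if addr in net:
            return name
    return "unknown"


for ip in ["192.168.149.145", "10.244.0.5", "10.96.96.96"]:
    print(ip, "->", classify(ip))
```

Pod and Service addresses come from separate ranges so that they can never collide with each other or with the node network.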

3. The two kinds of pod probes in k8s

livenessProbe (liveness probe): checks whether the container is healthy according to rules defined by the user.

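readinessProbe (readiness probe): checks whether the container has finished starting and can serve requests. In other words, it decides whether the pod's Ready condition (its expected state) becomes True.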

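Liveness is mainly used to decide when to restart a container. The kubelet stores liveness results in its livenessManager. When the kubelet runs syncPod and finds that a container's liveness probe has failed, it adds that container to the list of containers to start, and a later step recreates the container.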

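Readiness decides whether a container is ready. The kubelet treats a Pod as ready only when all of its containers are ready, i.e. when the Pod's Ready condition is True. Pod readiness controls which Pods act as backends of a Service: Pods that are not ready are removed from the Service's endpoints.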

Both kinds of probe support three handler types: exec, HTTP (httpGet) and TCP (tcpSocket).
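Individual probe results only trigger action after a run of consecutive failures. The sketch below is a simplified model of that counting, written for these notes and not taken from kubelet code. It assumes the defaults failureThreshold=3 and successThreshold=1, which kubectl describe prints as `#failure=3 #success=1` in the exec example below. It also uses that example's `initialDelaySeconds: 1` and `periodSeconds: 3`. The model ignores container start-up time and probe timeouts.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ProbeState:
    """Simplified model of how consecutive probe results become a verdict."""
    failure_threshold: int = 3   # k8s default failureThreshold (#failure=3)
    success_threshold: int = 1   # k8s default successThreshold (#success=1)
    healthy: bool = True         # liveness starts out healthy; readiness would start False
    _fails: int = field(default=0, init=False, repr=False)
    _successes: int = field(default=0, init=False, repr=False)

    def record(self, ok: bool) -> bool:
        """Feed one probe result and return the current verdict."""
        if ok:
            self._successes += 1
            self._fails = 0
            if self._successes >= self.success_threshold:
                self.healthy = True
        else:
            self._fails += 1
            self._successes = 0
            if self._fails >= self.failure_threshold:
                self.healthy = False
        return self.healthy


def first_failure_verdict(initial_delay: int, period: int, file_removed_at: int,
                          horizon: int = 300) -> Optional[int]:
    """Seconds after container start at which the liveness verdict flips to
    unhealthy, when /tmp/healthy is removed after `file_removed_at` seconds."""
    state = ProbeState()
    t = initial_delay
    while t <= horizon:
        # `test -e /tmp/healthy` succeeds only while the file still exists
        if not state.record(t < file_removed_at):
            return t
        t += period
    return None


print(first_failure_verdict(initial_delay=1, period=3, file_removed_at=30))  # 37
```

In this model the probes at 31 s, 34 s and 37 s fail in a row, so the container is judged unhealthy at 37 s. The kubelet then kills and restarts it.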

livenessProbe example: exec probe

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-exec-pod
  namespace: default
spec:
  containers:
  - name: liveness-exec-container
    image: busybox:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/sh","-c","touch /tmp/healthy;sleep 30;rm -f /tmp/healthy;sleep 3600"]
    livenessProbe:
      exec:
        command: ["test","-e","/tmp/healthy"]
      initialDelaySeconds: 1
      periodSeconds: 3
```

Create the pod: `kubectl create -f liveness-exec.yml`

Listing the pods shows that it was created successfully:

```
[root@localhost ~]# kubectl create -f liveness-exec.yml
pod/liveness-exec-pod created
[root@localhost ~]# kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
liveness-exec-pod   1/1     Running   0          10s
mynginx             1/1     Running   0          23h
```

```
[root@localhost ~]# kubectl describe pod liveness-exec-pod
Name:         liveness-exec-pod
Namespace:    default
Priority:     0
Node:         localhost.localdomain/192.168.149.145
Start Time:   Fri, 22 Jan 2021 01:22:22 -0800
Labels:       <none>
Annotations:  <none>
Status:       Running
IP:           10.244.0.5
IPs:
  IP:  10.244.0.5
Containers:
  liveness-exec-container:
    Container ID:  docker://89bd5240e4607470f45ce2447b9231e4135adf3959be8ac4e86cbc13d8c21679
    Image:         busybox:latest
    Image ID:      docker-pullable://busybox@sha256:c5439d7db88ab5423999530349d327b04279ad3161d7596d2126dfb5b02bfd1f
    Port:          <none>
    Host Port:     <none>
    Command:
      /bin/sh
      -c
      touch /tmp/healthy;sleep 30;rm -f /tmp/healthy;sleep 3600
    State:          Running
      Started:      Fri, 22 Jan 2021 01:22:27 -0800
    Ready:          True
    Restart Count:  0
    Liveness:       exec [test -e /tmp/healthy] delay=1s timeout=1s period=3s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-9rggj (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-9rggj:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-9rggj
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age   From                            Message
  ----    ------     ----  ----                            -------
  Normal  Scheduled  32s   default-scheduler               Successfully assigned default/liveness-exec-pod to localhost.localdomain
  Normal  Pulling    30s   kubelet, localhost.localdomain  Pulling image "busybox:latest"
  Normal  Pulled     28s   kubelet, localhost.localdomain  Successfully pulled image "busybox:latest"
  Normal  Created    28s   kubelet, localhost.localdomain  Created container liveness-exec-container
  Normal  Started    26s   kubelet, localhost.localdomain  Started container liveness-exec-container
```

After a while, listing the pods again shows that the container has restarted once:

```
[root@localhost ~]# kubectl get pods
NAME                READY   STATUS    RESTARTS   AGE
liveness-exec-pod   1/1     Running   1          84s
mynginx             1/1     Running   0          23h
```

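The pod details also show the failed probes and a restart count of 2. This output comes from a later run of the same pod, which is why its start time and IP differ from the first describe.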

```
[root@localhost ~]# kubectl describe pod liveness-exec-pod
Name:         liveness-exec-pod
Namespace:    default
Priority:     0
Node:         localhost.localdomain/192.168.149.145
Start Time:   Fri, 22 Jan 2021 01:39:54 -0800
Labels:       <none>
Annotations:  <none>
Status:       Running
IP:           10.244.0.6
IPs:
  IP:  10.244.0.6
Containers:
  liveness-exec-container:
    Container ID:  docker://69b1679bc8051d5118889954fb5e20b1563677830f284bc92110318ce1074af0
    Image:         busybox:latest
    Image ID:      docker-pullable://busybox@sha256:c5439d7db88ab5423999530349d327b04279ad3161d7596d2126dfb5b02bfd1f
    Port:          <none>
    Host Port:     <none>
    Command:
      /bin/sh
      -c
      touch /tmp/healthy;sleep 30;rm -f /tmp/healthy;sleep 3600
    State:          Running
      Started:      Fri, 22 Jan 2021 01:42:22 -0800
    Last State:     Terminated
      Reason:       Error
      Exit Code:    137
      Started:      Fri, 22 Jan 2021 01:41:12 -0800
      Finished:     Fri, 22 Jan 2021 01:42:20 -0800
    Ready:          True
    Restart Count:  2
    Liveness:       exec [test -e /tmp/healthy] delay=1s timeout=1s period=3s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-9rggj (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-9rggj:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-9rggj
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                  From                            Message
  ----     ------     ----                 ----                            -------
  Normal   Scheduled  2m39s                default-scheduler               Successfully assigned default/liveness-exec-pod to localhost.localdomain
  Warning  Unhealthy  42s (x6 over 117s)   kubelet, localhost.localdomain  Liveness probe failed:
  Normal   Killing    42s (x2 over 111s)   kubelet, localhost.localdomain  Container liveness-exec-container failed liveness probe, will be restarted
  Normal   Pulled     11s (x3 over 2m35s)  kubelet, localhost.localdomain  Container image "busybox:latest" already present on machine
  Normal   Created    11s (x3 over 2m34s)  kubelet, localhost.localdomain  Created container liveness-exec-container
  Normal   Started    10s (x3 over 2m30s)  kubelet, localhost.localdomain  Started container liveness-exec-container
```
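The Last State above reports `Exit Code: 137` for the container that was killed after failing its liveness probe. The HTTP example further down instead reports `Exit Code: 0` with `Reason: Completed`. By the usual Unix convention, a status above 128 means the process was ended by signal number (status - 128), so 137 points to signal 9, SIGKILL. The helper below decodes such codes. It is a sketch for these notes, and the function name is our own.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Interpret a container exit code with the shell convention:
    codes above 128 mean the process was terminated by signal (code - 128)."""
    if code == 0:
        return "exited normally"
    if code > 128:
        try:
            return f"killed by {signal.Signals(code - 128).name}"
        except ValueError:
            pass  # not a signal number known on this platform
    return f"exited with status {code}"


print(describe_exit_code(137))  # killed by SIGKILL
print(describe_exit_code(0))    # exited normally
```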

livenessProbe example: HTTP probe

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: liveness-httpget-pod
  namespace: default
spec:
  containers:
  - name: liveness-httpget-container
    image: ikubernetes/myapp:v1
    imagePullPolicy: IfNotPresent
    ports:
    - name: http
      containerPort: 80
    livenessProbe:
      httpGet:
        port: http
        path: /index.html
      initialDelaySeconds: 1
      periodSeconds: 3
```

Create the pod: `kubectl create -f liveness-httpget.yml`

Listing the pods shows the pod going from ContainerCreating to Running:

```
[root@localhost ~]# kubectl get pod
NAME                   READY   STATUS              RESTARTS   AGE
liveness-httpget-pod   0/1     ContainerCreating   0          21s
mynginx                1/1     Running             0          4d23h
[root@localhost ~]# kubectl get pod
NAME                   READY   STATUS    RESTARTS   AGE
liveness-httpget-pod   1/1     Running   0          31s
mynginx                1/1     Running   0          4d23h
```

Exec into the pod and rename index.html so that the HTTP request returns 404. This simulates a probe failure:

```
[root@localhost ~]# kubectl exec -it liveness-httpget-pod -- /bin/sh
/usr/share/nginx/html # cd /usr/share/nginx/html/
/usr/share/nginx/html # mv index.html index.html-bak
```

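After a while the pod shows one restart. The restarted container starts again from the image's filesystem, so index.html is back and the probe passes: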

```
[root@localhost ~]# kubectl get pod
NAME                   READY   STATUS    RESTARTS   AGE
liveness-httpget-pod   1/1     Running   1          9m8s
mynginx                1/1     Running   0          4d23h
```

View the pod details:

```
[root@localhost ~]# kubectl describe pod liveness-httpget-pod
Name:         liveness-httpget-pod
Namespace:    default
Priority:     0
Node:         localhost.localdomain/192.168.149.145
Start Time:   Tue, 26 Jan 2021 01:08:12 -0800
Labels:       <none>
Annotations:  <none>
Status:       Running
IP:           10.244.0.8
IPs:
  IP:  10.244.0.8
Containers:
  liveness-httpget-container:
    Container ID:   docker://71f57bd895aaa55fa954138dd1de63a9a163c01c495a027db5f47e2ef61a4fb9
    Image:          ikubernetes/myapp:v1
    Image ID:       docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Tue, 26 Jan 2021 01:16:29 -0800
    Last State:     Terminated
      Reason:       Completed
      Exit Code:    0
      Started:      Tue, 26 Jan 2021 01:08:34 -0800
      Finished:     Tue, 26 Jan 2021 01:16:27 -0800
    Ready:          True
    Restart Count:  1
    Liveness:       http-get http://:http/index.html delay=1s timeout=1s period=3s #success=1 #failure=3
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-9rggj (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-9rggj:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-9rggj
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type     Reason     Age                    From                            Message
  ----     ------     ----                   ----                            -------
  Normal   Scheduled  <unknown>              default-scheduler               Successfully assigned default/liveness-httpget-pod to localhost.localdomain
  Normal   Pulling    14m                    kubelet, localhost.localdomain  Pulling image "ikubernetes/myapp:v1"
  Normal   Pulled     14m                    kubelet, localhost.localdomain  Successfully pulled image "ikubernetes/myapp:v1"
  Warning  Unhealthy  6m20s (x3 over 6m26s)  kubelet, localhost.localdomain  Liveness probe failed: HTTP probe failed with statuscode: 404
  Normal   Killing    6m20s                  kubelet, localhost.localdomain  Container liveness-httpget-container failed liveness probe, will be restarted
  Normal   Created    6m19s (x2 over 14m)    kubelet, localhost.localdomain  Created container liveness-httpget-container
  Normal   Pulled     6m19s                  kubelet, localhost.localdomain  Container image "ikubernetes/myapp:v1" already present on machine
  Normal   Started    6m18s (x2 over 14m)    kubelet, localhost.localdomain  Started container liveness-httpget-container
  Warning  Unhealthy  5m2s (x6 over 9m57s)   kubelet, localhost.localdomain  Liveness probe failed: Get http://10.244.0.8:80/index.html: net/http: request canceled (Client.Timeout exceeded while awaiting headers)
```

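Note: the pods above are defined directly in YAML and created with kubectl create. If you delete such a pod by hand, e.g. with `kubectl delete pod liveness-httpget-pod`, k8s does not create a new one. To get automatic re-creation, create pods through a controller.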

4. Pod controllers

The main pod controllers are:

ReplicationController

ReplicaSet

Deployment

DaemonSet

Job

CronJob

StatefulSet

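RC (ReplicationController) keeps the number of replicas of a containerized application equal to the number the user defined. If a container exits abnormally, a new Pod is created automatically to replace it. If there are surplus Pods, they are reclaimed automatically.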
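The behaviour described above is a control loop: compare the desired count with the pods that exist, then create or delete pods to close the gap. The sketch below models one pass of such a loop under simplifying assumptions. The function names and the suffix alphabet are hypothetical, although real pod names look similar (e.g. `myrs-648nt`).

```python
import random
import string
from typing import List, Tuple


def random_suffix(length: int = 5) -> str:
    """Hypothetical name suffix; real controllers use their own alphabet."""
    return "".join(random.choices(string.ascii_lowercase + string.digits, k=length))


def reconcile(owner: str, desired: int, running: List[str]) -> Tuple[List[str], List[str]]:
    """One pass of a simplified replica control loop.
    Returns (pods_to_create, pods_to_delete)."""
    missing = desired - len(running)
    if missing > 0:
        return [f"{owner}-{random_suffix()}" for _ in range(missing)], []
    if missing < 0:
        # Sketch only: drop the surplus at the end of the list. The real
        # controller ranks pods first (e.g. not-ready pods are deleted first).
        return [], running[desired:]
    return [], []


# After `kubectl delete pod myrs-648nt`, only two pods are left:
print(reconcile("myrs", 3, ["myrs-jg7sz", "myrs-m5crx"]))
```

Running the loop repeatedly against the live pod list is what makes the controller self-healing: any deviation, in either direction, is corrected on the next pass.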

Creating and managing pods through a ReplicaSet: the rs controls the number of pods, among other things.

```
[root@localhost ~]# cat rs-pod.yml
apiVersion: apps/v1
kind: ReplicaSet
metadata:
  name: myrs
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      release: canary
  template:
    metadata:
      name: mayapp-pod
      labels:
        app: myapp
        release: canary
        enviroment: qa
    spec:
      containers:
      - name: myapp-container
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
```

Create it:

```
[root@localhost ~]# kubectl create -f rs-pod.yml
replicaset.apps/myrs created
```

Listing the pods shows 3 pods. Each pod name is the rs name plus a random string:

```
[root@localhost ~]# kubectl get pods -o wide
NAME         READY   STATUS    RESTARTS   AGE     IP           NODE                    NOMINATED NODE   READINESS GATES
myrs-648nt   1/1     Running   0          3m59s   10.244.0.4   localhost.localdomain   <none>           <none>
myrs-jg7sz   1/1     Running   0          3m59s   10.244.0.6   localhost.localdomain   <none>           <none>
myrs-m5crx   1/1     Running   0          3m59s   10.244.0.5   localhost.localdomain   <none>           <none>
```

List the rs. myrs is the rs name defined in the yml:

```
[root@localhost ~]# kubectl get rs
NAME   DESIRED   CURRENT   READY   AGE
myrs   3         3         3       17s
```

A request to any of the pod IPs gets a normal response:

```
[root@localhost ~]# curl 10.244.0.4
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
```

`kubectl describe` shows details of the pods and the rs:

```
[root@localhost ~]# kubectl describe rs myrs
Name:         myrs
Namespace:    default
Selector:     app=myapp,release=canary
Labels:       <none>
Annotations:  <none>
Replicas:     3 current / 3 desired
Pods Status:  3 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=myapp
           enviroment=qa
           release=canary
  Containers:
   myapp-container:
    Image:        ikubernetes/myapp:v1
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Events:
  Type    Reason            Age    From                   Message
  ----    ------            ----   ----                   -------
  Normal  SuccessfulCreate  5m56s  replicaset-controller  Created pod: myrs-m5crx
  Normal  SuccessfulCreate  5m56s  replicaset-controller  Created pod: myrs-jg7sz
  Normal  SuccessfulCreate  5m56s  replicaset-controller  Created pod: myrs-648nt
```

```
[root@localhost ~]# kubectl describe pods myrs-648nt
Name:         myrs-648nt
Namespace:    default
Priority:     0
Node:         localhost.localdomain/192.168.149.145
Start Time:   Tue, 26 Jan 2021 23:30:13 -0800
Labels:       app=myapp
              enviroment=qa
              release=canary
Annotations:  <none>
Status:       Running
IP:           10.244.0.4
IPs:
  IP:           10.244.0.4
Controlled By:  ReplicaSet/myrs
Containers:
  myapp-container:
    Container ID:   docker://f1be2e7978228c8c072e1d52e1ea667a29bbdfa70faa8c0e26c9010052910b56
    Image:          ikubernetes/myapp:v1
    Image ID:       docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Tue, 26 Jan 2021 23:30:15 -0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-rvlg6 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-rvlg6:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-rvlg6
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age   From                            Message
  ----    ------     ----  ----                            -------
  Normal  Scheduled  8m9s  default-scheduler               Successfully assigned default/myrs-648nt to localhost.localdomain
  Normal  Pulled     8m7s  kubelet, localhost.localdomain  Container image "ikubernetes/myapp:v1" already present on machine
  Normal  Created    8m7s  kubelet, localhost.localdomain  Created container myapp-container
  Normal  Started    8m7s  kubelet, localhost.localdomain  Started container myapp-container
```

The pod details show that the pod is controlled by the rs:

```
Controlled By:  ReplicaSet/myrs
```

The rs details show that the desired number of pods is 3:

```
Replicas:     3 current / 3 desired
Pods Status:  3 Running / 0 Waiting / 0 Succeeded / 0 Failed
```

If we now delete a pod, the rs creates a new one in its place:

```
[root@localhost ~]# kubectl get pod
NAME         READY   STATUS    RESTARTS   AGE
myrs-648nt   1/1     Running   0          12m
myrs-jg7sz   1/1     Running   0          12m
myrs-m5crx   1/1     Running   0          12m
[root@localhost ~]# kubectl delete pod myrs-648nt
pod "myrs-648nt" deleted
[root@localhost ~]# kubectl get pod
NAME         READY   STATUS    RESTARTS   AGE
myrs-jg7sz   1/1     Running   0          13m
myrs-m5crx   1/1     Running   0          13m
myrs-xvsh9   1/1     Running   0          15s
```

Running `kubectl get pods -w` in a second terminal during the deletion shows the old pod terminating and the new one being created:

```
[root@localhost ~]# kubectl get pods -w
NAME         READY   STATUS              RESTARTS   AGE
myrs-648nt   1/1     Running             0          11m
myrs-jg7sz   1/1     Running             0          11m
myrs-m5crx   1/1     Running             0          11m
myrs-648nt   1/1     Terminating         0          12m
myrs-xvsh9   0/1     Pending             0          0s
myrs-xvsh9   0/1     Pending             0          0s
myrs-xvsh9   0/1     ContainerCreating   0          1s
myrs-648nt   0/1     Terminating         0          12m
myrs-xvsh9   1/1     Running             0          3s
myrs-648nt   0/1     Terminating         0          12m
myrs-648nt   0/1     Terminating         0          12m
```

Rolling version update: run `kubectl edit rs myrs` and change the image in the rs to `image: ikubernetes/myapp:v2`. The rs now reports the v2 image:

```
[root@localhost ~]# kubectl get rs -o wide
NAME   DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
myrs   3         3         3       21m   myapp-container   ikubernetes/myapp:v2   app=myapp,release=canary
```

A request to an existing pod, however, still returns v1:

```
[root@localhost ~]# curl 10.244.0.6
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
```

This happens because a ReplicaSet does not touch pods that already exist. The new template is used only for pods created from then on. After a pod is deleted, the pod the rs creates in its place serves v2:

```
[root@localhost ~]# kubectl delete pod myrs-jg7sz
pod "myrs-jg7sz" deleted
[root@localhost ~]# kubectl get pod -o wide
NAME         READY   STATUS    RESTARTS   AGE   IP           NODE                    NOMINATED NODE   READINESS GATES
myrs-m5crx   1/1     Running   0          24m   10.244.0.5   localhost.localdomain   <none>           <none>
myrs-x9jx4   1/1     Running   0          20s   10.244.0.8   localhost.localdomain   <none>           <none>
myrs-xvsh9   1/1     Running   0          11m   10.244.0.7   localhost.localdomain   <none>           <none>
[root@localhost ~]# curl 10.244.0.8
Hello MyApp | Version: v2 | <a href="hostname.html">Pod Name</a>
```

By deleting pods one at a time and letting the rs recreate them, we can carry out a rolling version update by hand.

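A Deployment provides a declarative way to manage Pods and ReplicaSets. This controller does not manage pods directly. Instead, it manages ReplicaSets, which in turn manage the Pods: Deployment manages ReplicaSet, and ReplicaSet manages Pod. That is why a Deployment is more capable than a ReplicaSet.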

Main features:

Manages ReplicaSets, and so provides everything a ReplicaSet does

Rolling updates and rollbacks

Pausing and resuming a rollout

A simple example

```
[root@localhost ~]# cat deploy-demo.yml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deploy
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      release: canary
  # pod-related settings
  template:
    metadata:
      labels:
        app: myapp
        release: canary
        enviroment: qa
    spec:
      containers:
      - name: myapp-container
        image: ikubernetes/myapp:v1
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
```

```
[root@localhost ~]# kubectl apply -f deploy-demo.yml
deployment.apps/my-deploy created
```

List the deploy. Its name is the one defined in the yml:

```
[root@localhost ~]# kubectl get deployment -o wide
NAME        READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS        IMAGES                 SELECTOR
my-deploy   3/3     3            3           11m   myapp-container   ikubernetes/myapp:v1   app=myapp,release=canary
```

List the rs. Its name is the deploy name plus a suffix:

```
[root@localhost ~]# kubectl get rs -o wide
NAME                  DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
my-deploy-d57c6c888   3         3         3       13m   myapp-container   ikubernetes/myapp:v1   app=myapp,pod-template-hash=d57c6c888,release=canary
```

List the pods. Each pod name is the rs name plus a random string:

```
[root@localhost ~]# kubectl get pod -o wide
NAME                        READY   STATUS    RESTARTS   AGE   IP            NODE                    NOMINATED NODE   READINESS GATES
my-deploy-d57c6c888-6zn92   1/1     Running   0          13m   10.244.0.10   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-m6fpx   1/1     Running   0          13m   10.244.0.9    localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-xxzjz   1/1     Running   0          13m   10.244.0.11   localhost.localdomain   <none>           <none>
```

Notes:

1. The ReplicaSet name always has the form [DEPLOYMENT-NAME]-[SUFFIX]. The suffix is derived from the pod template and is the same value as the pod-template-hash label, here d57c6c888. That value is also used in the rs selector and labels.
2. The Deployment controller adds the pod-template-hash label to every ReplicaSet that the Deployment creates or adopts. This label keeps the child ReplicaSets of a Deployment from overlapping, so it must not be modified.
3. The deploy controls the rs, and the rs controls the pods.
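Note 2 follows from how `matchLabels` works: a selector matches a pod when every key/value in the selector appears in the pod's labels. The sketch below is a simplified model that ignores `matchExpressions`. It uses the labels of two pods from these notes: a v1 pod, and a v3 pod that appears after the update further down. The Deployment's own selector matches both pods. Adding `pod-template-hash` makes each ReplicaSet's selector match only its own pods.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """matchLabels semantics: every selector key/value must be present in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def select(selector: Labels, pods: Dict[str, Labels]) -> List[str]:
    """Names of the pods a selector picks, sorted for stable output."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


pods = {
    "my-deploy-d57c6c888-m6fpx": {"app": "myapp", "release": "canary",
                                  "enviroment": "qa", "pod-template-hash": "d57c6c888"},
    "my-deploy-6d86c996f4-pcd5x": {"app": "myapp", "release": "canary",
                                   "enviroment": "qa", "pod-template-hash": "6d86c996f4"},
}

deploy_selector = {"app": "myapp", "release": "canary"}
rs_selector = {**deploy_selector, "pod-template-hash": "d57c6c888"}

print(select(deploy_selector, pods))  # both pods
print(select(rs_selector, pods))      # only the d57c6c888 pod
```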

View the details of the deploy, rs and pod resources:

```
[root@localhost ~]# kubectl describe deploy my-deploy
Name:                   my-deploy
Namespace:              default
CreationTimestamp:      Wed, 27 Jan 2021 00:09:33 -0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 1
Selector:               app=myapp,release=canary
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=myapp
           enviroment=qa
           release=canary
  Containers:
   myapp-container:
    Image:        ikubernetes/myapp:v1
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   my-deploy-d57c6c888 (3/3 replicas created)
Events:
  Type    Reason             Age    From                   Message
  ----    ------             ----   ----                   -------
  Normal  ScalingReplicaSet  4m50s  deployment-controller  Scaled up replica set my-deploy-d57c6c888 to 3
```

```
[root@localhost ~]# kubectl describe rs my-deploy-d57c6c888
Name:           my-deploy-d57c6c888
Namespace:      default
Selector:       app=myapp,pod-template-hash=d57c6c888,release=canary
Labels:         app=myapp
                enviroment=qa
                pod-template-hash=d57c6c888
                release=canary
Annotations:    deployment.kubernetes.io/desired-replicas: 3
                deployment.kubernetes.io/max-replicas: 4
                deployment.kubernetes.io/revision: 1
Controlled By:  Deployment/my-deploy
Replicas:       3 current / 3 desired
Pods Status:    3 Running / 0 Waiting / 0 Succeeded / 0 Failed
Pod Template:
  Labels:  app=myapp
           enviroment=qa
           pod-template-hash=d57c6c888
           release=canary
  Containers:
   myapp-container:
    Image:        ikubernetes/myapp:v1
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Events:
  Type    Reason            Age   From                   Message
  ----    ------            ----  ----                   -------
  Normal  SuccessfulCreate  6m7s  replicaset-controller  Created pod: my-deploy-d57c6c888-m6fpx
  Normal  SuccessfulCreate  6m7s  replicaset-controller  Created pod: my-deploy-d57c6c888-xxzjz
  Normal  SuccessfulCreate  6m7s  replicaset-controller  Created pod: my-deploy-d57c6c888-6zn92
```

```
[root@localhost ~]# kubectl describe pod my-deploy-d57c6c888-m6fpx
Name:         my-deploy-d57c6c888-m6fpx
Namespace:    default
Priority:     0
Node:         localhost.localdomain/192.168.149.145
Start Time:   Wed, 27 Jan 2021 00:09:34 -0800
Labels:       app=myapp
              enviroment=qa
              pod-template-hash=d57c6c888
              release=canary
Annotations:  <none>
Status:       Running
IP:           10.244.0.9
IPs:
  IP:           10.244.0.9
Controlled By:  ReplicaSet/my-deploy-d57c6c888
Containers:
  myapp-container:
    Container ID:   docker://6182700f3930c920fe6b8421f64d67174bd33fd16148618222e529c875d39f5a
    Image:          ikubernetes/myapp:v1
    Image ID:       docker-pullable://ikubernetes/myapp@sha256:9c3dc30b5219788b2b8a4b065f548b922a34479577befb54b03330999d30d513
    Port:           80/TCP
    Host Port:      0/TCP
    State:          Running
      Started:      Wed, 27 Jan 2021 00:09:36 -0800
    Ready:          True
    Restart Count:  0
    Environment:    <none>
    Mounts:
      /var/run/secrets/kubernetes.io/serviceaccount from default-token-rvlg6 (ro)
Conditions:
  Type              Status
  Initialized       True
  Ready             True
  ContainersReady   True
  PodScheduled      True
Volumes:
  default-token-rvlg6:
    Type:        Secret (a volume populated by a Secret)
    SecretName:  default-token-rvlg6
    Optional:    false
QoS Class:       BestEffort
Node-Selectors:  <none>
Tolerations:     node.kubernetes.io/not-ready:NoExecute for 300s
                 node.kubernetes.io/unreachable:NoExecute for 300s
Events:
  Type    Reason     Age    From                            Message
  ----    ------     ----   ----                            -------
  Normal  Scheduled  7m49s  default-scheduler               Successfully assigned default/my-deploy-d57c6c888-m6fpx to localhost.localdomain
  Normal  Pulled     7m47s  kubelet, localhost.localdomain  Container image "ikubernetes/myapp:v1" already present on machine
  Normal  Created    7m47s  kubelet, localhost.localdomain  Created container myapp-container
  Normal  Started    7m46s  kubelet, localhost.localdomain  Started container myapp-container
```

Updating a Deployment

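During an update, a Deployment can limit two things: how many pods may exist above the desired count (maxSurge), and how many may be unavailable (maxUnavailable). `kubectl explain deploy.spec.strategy.rollingUpdate` lists both fields (excerpt of the output):

```
FIELDS:
   maxSurge       <string>
   maxUnavailable <string>
```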
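Both fields accept either an absolute number or a percentage of the desired replicas. Per the Deployment documentation, a maxSurge percentage is rounded up and a maxUnavailable percentage is rounded down. The sketch below reproduces that arithmetic with integer maths. The helper names are our own. With 3 replicas and the default 25%/25%, it gives at most 4 pods and at least 3 available during an update. This agrees with the `deployment.kubernetes.io/max-replicas: 4` annotation in the rs describe output above.

```python
from typing import Tuple


def resolve(value: str, replicas: int, round_up: bool) -> int:
    """Turn an int-or-percent string such as '25%' or '1' into a pod count."""
    if value.endswith("%"):
        scaled = replicas * int(value[:-1])
        return -(-scaled // 100) if round_up else scaled // 100  # ceil / floor of scaled/100
    return int(value)


def rolling_bounds(replicas: int, max_surge: str = "25%",
                   max_unavailable: str = "25%") -> Tuple[int, int]:
    """Return (max pods during the update, min available pods)."""
    surge = resolve(max_surge, replicas, round_up=True)                # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)   # maxUnavailable rounds down
    if surge == 0 and unavailable == 0:
        # Rounding can leave both at 0, which would block the rollout;
        # Kubernetes then allows 1 unavailable pod.
        unavailable = 1
    return replicas + surge, replicas - unavailable


print(rolling_bounds(3))   # (4, 3)
print(rolling_bounds(10))  # (13, 8)
```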

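It is best to update a Deployment by editing its yaml file rather than from the command line. Rolling back to any version is then easy, and changes are easier to trace. Change the image in the yml file to v3 (`image: ikubernetes/myapp:v3`) and run kubectl apply:

```
[root@localhost ~]# kubectl apply -f deploy-demo.yml --record
deployment.apps/my-deploy configured
```

The `--record` flag records the command that caused the change; it is deprecated in newer kubectl releases. The recorded commands can be queried with `kubectl rollout history deployment my-deploy`:

```
[root@localhost ~]# kubectl rollout history deployment my-deploy
deployment.apps/my-deploy
REVISION  CHANGE-CAUSE
1         <none>
2         kubectl apply --filename=deploy-demo.yml --record=true
3         kubectl apply --filename=deploy-demo.yml --record=true
```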

Check the update status:

```
[root@localhost ~]# kubectl rollout status deployment my-deploy
Waiting for deployment "my-deploy" rollout to finish: 1 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 1 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 1 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "my-deploy" rollout to finish: 1 old replicas are pending termination...
deployment "my-deploy" successfully rolled out
```

Pod and ReplicaSet information during the update:

```
[root@localhost ~]# kubectl get pod -o wide
NAME                         READY   STATUS              RESTARTS   AGE   IP            NODE                    NOMINATED NODE   READINESS GATES
my-deploy-6d86c996f4-mfmmg   0/1     ContainerCreating   0          3s    <none>        localhost.localdomain   <none>           <none>
my-deploy-6d86c996f4-pcd5x   1/1     Running             0          8s    10.244.0.15   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-6zn92    1/1     Running             0          30m   10.244.0.10   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-m6fpx    1/1     Running             0          30m   10.244.0.9    localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-xxzjz    1/1     Terminating         0          30m   10.244.0.11   localhost.localdomain   <none>           <none>
[root@localhost ~]# kubectl get rs -o wide
NAME                   DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
my-deploy-6d86c996f4   3         3         3       14s   myapp-container   ikubernetes/myapp:v3   app=myapp,pod-template-hash=6d86c996f4,release=canary
my-deploy-d57c6c888    0         0         0       30m   myapp-container   ikubernetes/myapp:v1   app=myapp,pod-template-hash=d57c6c888,release=canary
```

Deployment, ReplicaSet and Pod information after the update has completed:

```
[root@localhost ~]# kubectl get deployment -o wide
NAME        READY   UP-TO-DATE   AVAILABLE   AGE   CONTAINERS        IMAGES                 SELECTOR
my-deploy   3/3     3            3           36m   myapp-container   ikubernetes/myapp:v3   app=myapp,release=canary
[root@localhost ~]# kubectl get rs -o wide
NAME                   DESIRED   CURRENT   READY   AGE     CONTAINERS        IMAGES                 SELECTOR
my-deploy-6d86c996f4   3         3         3       6m39s   myapp-container   ikubernetes/myapp:v3   app=myapp,pod-template-hash=6d86c996f4,release=canary
my-deploy-d57c6c888    0         0         0       36m     myapp-container   ikubernetes/myapp:v1   app=myapp,pod-template-hash=d57c6c888,release=canary
[root@localhost ~]# kubectl get pod -o wide
NAME                         READY   STATUS    RESTARTS   AGE     IP            NODE                    NOMINATED NODE   READINESS GATES
my-deploy-6d86c996f4-jwjst   1/1     Running   0          6m39s   10.244.0.17   localhost.localdomain   <none>           <none>
my-deploy-6d86c996f4-mfmmg   1/1     Running   0          6m42s   10.244.0.16   localhost.localdomain   <none>           <none>
my-deploy-6d86c996f4-pcd5x   1/1     Running   0          6m47s   10.244.0.15   localhost.localdomain   <none>           <none>
```

The Deployment details show how the pods were replaced:

```
[root@localhost ~]# kubectl describe deploy my-deploy
Name:                   my-deploy
Namespace:              default
CreationTimestamp:      Wed, 27 Jan 2021 00:09:33 -0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 3
                        kubernetes.io/change-cause: kubectl apply --filename=deploy-demo.yml --record=true
Selector:               app=myapp,release=canary
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=myapp
           enviroment=qa
           release=canary
  Containers:
   myapp-container:
    Image:        ikubernetes/myapp:v3
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:       <none>
  Volumes:        <none>
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   my-deploy-6d86c996f4 (3/3 replicas created)
Events:
  Type    Reason             Age    From                   Message
  ----    ------             ----   ----                   -------
  Normal  ScalingReplicaSet  38m    deployment-controller  Scaled up replica set my-deploy-d57c6c888 to 3
  Normal  ScalingReplicaSet  11m    deployment-controller  Scaled up replica set my-deploy-844bcf589b to 1
  Normal  ScalingReplicaSet  8m31s  deployment-controller  Scaled down replica set my-deploy-844bcf589b to 0
  Normal  ScalingReplicaSet  8m31s  deployment-controller  Scaled up replica set my-deploy-6d86c996f4 to 1
  Normal  ScalingReplicaSet  8m26s  deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 2
  Normal  ScalingReplicaSet  8m26s  deployment-controller  Scaled up replica set my-deploy-6d86c996f4 to 2
  Normal  ScalingReplicaSet  8m23s  deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 1
  Normal  ScalingReplicaSet  8m23s  deployment-controller  Scaled up replica set my-deploy-6d86c996f4 to 3
  Normal  ScalingReplicaSet  8m19s  deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 0
```

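The update process above shows that a deploy can control several rs. After the update, the deploy points to the new rs, and the new rs controls the new pods; that is how the version gets updated. The old rs is kept, scaled to 0, which is what makes a rollback possible.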
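The order of the ScalingReplicaSet events in the describe output above follows from the rolling-update bounds. With 3 replicas, at most 4 pods may exist and at least 3 must be available. The controller therefore adds one new pod, removes one old pod, and repeats. The sketch below is a simplified model of this, not the deployment controller itself. It assumes every new pod becomes Ready immediately, and it ignores minReadySeconds and probes. It reproduces the six events for my-deploy-6d86c996f4 and my-deploy-d57c6c888.

```python
from typing import List


def simulate_rollout(replicas: int, max_total: int, min_available: int,
                     old_rs: str, new_rs: str) -> List[str]:
    """Simplified rolling update; assumes new pods are Ready as soon as they exist."""
    old, new = replicas, 0
    events = []
    while new < replicas or old > 0:
        progressed = False
        room = max_total - (old + new)            # extra pods allowed by maxSurge
        if room > 0 and new < replicas:
            new += min(room, replicas - new)
            events.append(f"Scaled up replica set {new_rs} to {new}")
            progressed = True
        removable = (old + new) - min_available   # pods that may go away under maxUnavailable
        if removable > 0 and old > 0:
            old -= min(removable, old)
            events.append(f"Scaled down replica set {old_rs} to {old}")
            progressed = True
        if not progressed:
            raise RuntimeError("rollout cannot progress with these bounds")
    return events


# 3 replicas with 25% maxSurge / 25% maxUnavailable -> at most 4 pods, at least 3 available
for event in simulate_rollout(3, 4, 3, "my-deploy-d57c6c888", "my-deploy-6d86c996f4"):
    print(event)
```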

Roll back to the previous version:

```
[root@localhost ~]# kubectl rollout undo deployment my-deploy
deployment.apps/my-deploy rolled back
```

Roll back to a specific revision:

```
[root@localhost ~]# kubectl rollout undo deployment my-deploy --to-revision=1
deployment.apps/my-deploy rolled back
```

The rollback status can be checked the same way, with `kubectl rollout status deployment my-deploy`:

```
[root@localhost ~]# kubectl rollout status deployment my-deploy
Waiting for deployment "my-deploy" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "my-deploy" rollout to finish: 2 old replicas are pending termination...
Waiting for deployment "my-deploy" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "my-deploy" rollout to finish: 1 old replicas are pending termination...
deployment "my-deploy" successfully rolled out
```

Scaling out and in. After the rollback to revision 1, the active rs is the v1 one (d57c6c888), and scaling to 5 replicas adds pods to it:

```
[root@localhost ~]# kubectl scale deployment my-deploy --replicas=5
deployment.apps/my-deploy scaled
[root@localhost ~]# kubectl get rs -o wide
NAME                   DESIRED   CURRENT   READY   AGE   CONTAINERS        IMAGES                 SELECTOR
my-deploy-6d86c996f4   0         0         0       20m   myapp-container   ikubernetes/myapp:v3   app=myapp,pod-template-hash=6d86c996f4,release=canary
my-deploy-d57c6c888    5         5         5       50m   myapp-container   ikubernetes/myapp:v1   app=myapp,pod-template-hash=d57c6c888,release=canary
[root@localhost ~]# kubectl get pod -o wide
NAME                        READY   STATUS    RESTARTS   AGE     IP            NODE                    NOMINATED NODE   READINESS GATES
my-deploy-d57c6c888-lkct6   1/1     Running   0          2m58s   10.244.0.21   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-nb86k   1/1     Running   0          9s      10.244.0.24   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-nlzxp   1/1     Running   0          2m52s   10.244.0.23   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-v8m7z   1/1     Running   0          2m55s   10.244.0.22   localhost.localdomain   <none>           <none>
my-deploy-d57c6c888-vx7mt   1/1     Running   0          9s      10.244.0.25   localhost.localdomain   <none>           <none>
```

5. Basic use of Services

Defining a Service resource in k8s is also straightforward:

```
[root@localhost ~]# cat svc-demo.yml
apiVersion: v1
kind: Service
metadata:
  name: my-svc
  namespace: default
spec:
  selector:
    app: myapp
    release: canary
  clusterIP: 10.96.96.96
  type: ClusterIP
  ports:
  - port: 8080       # Service port
    targetPort: 80   # pod (container) port
```

```
[root@localhost ~]# kubectl apply -f svc-demo.yml
service/my-svc created
```

View the svc details:

```
[root@localhost ~]# kubectl get svc -o wide
NAME         TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE   SELECTOR
kubernetes   ClusterIP   10.96.0.1     <none>        443/TCP    24h   <none>
my-svc       ClusterIP   10.96.96.96   <none>        8080/TCP   4s    app=myapp,release=canary
[root@localhost ~]# kubectl describe svc my-svc
Name:              my-svc
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp,release=canary
Type:              ClusterIP
IP:                10.96.96.96
Port:              <unset>  8080/TCP
TargetPort:        80/TCP
Endpoints:         10.244.0.21:80
Session Affinity:  None
Events:            <none>
[root@localhost ~]# kubectl describe svc my-svc
Name:              my-svc
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp,release=canary
Type:              ClusterIP
IP:                10.96.96.96
Port:              <unset>  8080/TCP
TargetPort:        80/TCP
Endpoints:         10.244.0.21:80,10.244.0.26:80
Session Affinity:  None
Events:            <none>
```

The second describe, run a little later, lists two pod IP:port pairs under Endpoints. A request to the Service ends up at one of these pods.

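A Service is not linked to Pods directly. An Endpoints object sits in between: it records the IP:port of every ready Pod that matches the Service's selector, and kube-proxy forwards traffic sent to the Service to those addresses. That is why the output shows Endpoints rather than Pods.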
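The Endpoints line can be modelled as follows: keep the pods whose labels match the Service selector and that are ready, then pair each pod IP with the targetPort. The sketch below is a simplified model, not the endpoints controller's code. The first two pods are taken from the describe output above. The not-ready pod and the pod with different labels are hypothetical, added to show what gets filtered out. Readiness matters here because, as noted in the probe section, pods that are not ready are left out of a Service's endpoints.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """matchLabels-style check: every selector key/value must appear in the labels."""
    return all(labels.get(k) == v for k, v in selector.items())


def endpoints(selector: Dict[str, str], target_port: int, pods: List[dict]) -> str:
    """Ready pods matching the selector, formatted like kubectl describe's Endpoints line."""
    addrs = [f"{p['ip']}:{target_port}" for p in pods
             if matches(selector, p["labels"]) and p["ready"]]
    return ",".join(addrs)


labels = {"app": "myapp", "release": "canary", "enviroment": "qa"}
pods = [
    {"ip": "10.244.0.21", "labels": labels, "ready": True},
    {"ip": "10.244.0.26", "labels": labels, "ready": True},
    {"ip": "10.244.0.27", "labels": labels, "ready": False},           # hypothetical: failing readiness
    {"ip": "10.244.0.99", "labels": {"app": "other"}, "ready": True},  # hypothetical: other app
]
print(endpoints({"app": "myapp", "release": "canary"}, 80, pods))
# 10.244.0.21:80,10.244.0.26:80
```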

A request to the Service IP gets a normal response:

```
[root@localhost ~]# curl 10.96.96.96:8080
Hello MyApp | Version: v1 | <a href="hostname.html">Pod Name</a>
```

After the pod replica count is raised to 3, the Service picks up the change immediately, as the Endpoints line shows:

```
[root@localhost ~]# kubectl scale deployment my-deploy --replicas=3
deployment.apps/my-deploy scaled
[root@localhost ~]# kubectl describe svc my-svc
Name:              my-svc
Namespace:         default
Labels:            <none>
Annotations:
Selector:          app=myapp,release=canary
Type:              ClusterIP
IP:                10.96.96.96
Port:              <unset>  8080/TCP
TargetPort:        80/TCP
Endpoints:         10.244.0.21:80,10.244.0.26:80,10.244.0.27:80
Session Affinity:  None
Events:            <none>
```

6. Ingress

7. Storage

Basic usage: a hostPath volume added to deploy-demo.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-deploy
  namespace: default
spec:
  replicas: 3
  selector:
    matchLabels:
      app: myapp
      release: canary
  # pod-related settings
  template:
    metadata:
      labels:
        app: myapp
        release: canary
        enviroment: qa
    spec:
      containers:
      - name: myapp-container
        image: ikubernetes/myapp:v3
        imagePullPolicy: IfNotPresent
        ports:
        - name: http
          containerPort: 80
        volumeMounts:
        - mountPath: /data    # path inside the container
          name: test-vm
      volumes:
      - name: test-vm
        hostPath:
          path: /data         # path on the node
          type: Directory     # /data must already exist on the node
```

```
[root@localhost ~]# kubectl apply -f deploy-demo.yml
deployment.apps/my-deploy configured
[root@localhost ~]# kubectl get pods
NAME                         READY   STATUS              RESTARTS   AGE
my-deploy-798fd88bd7-hwpg4   1/1     Running             0          27s
my-deploy-798fd88bd7-s2t4p   1/1     Running             0          23s
my-deploy-798fd88bd7-x6nzh   0/1     ContainerCreating   0          11s
my-deploy-d57c6c888-6vbbs    0/1     Terminating         0          8d
my-deploy-d57c6c888-lkct6    1/1     Running             0          8d
[root@localhost ~]# kubectl get pods
NAME                         READY   STATUS        RESTARTS   AGE
my-deploy-798fd88bd7-hwpg4   1/1     Running       0          32s
my-deploy-798fd88bd7-s2t4p   1/1     Running       0          28s
my-deploy-798fd88bd7-x6nzh   1/1     Running       0          16s
my-deploy-d57c6c888-lkct6    0/1     Terminating   0          8d
[root@localhost ~]# kubectl get pods
NAME                         READY   STATUS    RESTARTS   AGE
my-deploy-798fd88bd7-hwpg4   1/1     Running   0          35s
my-deploy-798fd88bd7-s2t4p   1/1     Running   0          31s
my-deploy-798fd88bd7-x6nzh   1/1     Running   0          19s
```

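`kubectl describe deploy` shows the storage settings. If you exec into a pod, create a file under /data and write something into it, the same file appears under /data on the node.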

```
[root@localhost ~]# kubectl describe deploy my-deploy
Name:                   my-deploy
Namespace:              default
CreationTimestamp:      Wed, 27 Jan 2021 00:09:33 -0800
Labels:                 <none>
Annotations:            deployment.kubernetes.io/revision: 6
Selector:               app=myapp,release=canary
Replicas:               3 desired | 3 updated | 3 total | 3 available | 0 unavailable
StrategyType:           RollingUpdate
MinReadySeconds:        0
RollingUpdateStrategy:  25% max unavailable, 25% max surge
Pod Template:
  Labels:  app=myapp
           enviroment=qa
           release=canary
  Containers:
   myapp-container:
    Image:        ikubernetes/myapp:v3
    Port:         80/TCP
    Host Port:    0/TCP
    Environment:  <none>
    Mounts:
      /data from test-vm (rw)
  Volumes:
   test-vm:
    Type:          HostPath (bare host directory volume)
    Path:          /data
    HostPathType:  Directory
Conditions:
  Type           Status  Reason
  ----           ------  ------
  Available      True    MinimumReplicasAvailable
  Progressing    True    NewReplicaSetAvailable
OldReplicaSets:  <none>
NewReplicaSet:   my-deploy-798fd88bd7 (3/3 replicas created)
Events:
  Type    Reason             Age   From                   Message
  ----    ------             ----  ----                   -------
  Normal  ScalingReplicaSet  2m7s  deployment-controller  Scaled up replica set my-deploy-798fd88bd7 to 1
  Normal  ScalingReplicaSet  2m3s  deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 2
  Normal  ScalingReplicaSet  2m3s  deployment-controller  Scaled up replica set my-deploy-798fd88bd7 to 2
  Normal  ScalingReplicaSet  111s  deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 1
  Normal  ScalingReplicaSet  111s  deployment-controller  Scaled up replica set my-deploy-798fd88bd7 to 3
  Normal  ScalingReplicaSet  99s   deployment-controller  Scaled down replica set my-deploy-d57c6c888 to 0
```

Using NFS for storage

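First deploy an NFS server (the exported directories /mnt/data1 to /mnt/data4 must already exist on it):

    yum install nfs-utils rpcbind -y
    systemctl restart rpcbind    # rpcbind must be running: the NFS service registers its ports with rpcbind
    systemctl enable rpcbind
    systemctl start nfs-server
    systemctl enable nfs-server

Edit /etc/exports (vim /etc/exports) and add:

    /mnt/data1 192.168.149.0/24(rw,no_root_squash)
    /mnt/data2 192.168.149.0/24(rw,no_root_squash)
    /mnt/data3 192.168.149.0/24(rw,no_root_squash)
    /mnt/data4 192.168.149.0/24(rw,no_root_squash)

Reload the export configuration:

    exportfs -rv

The Kubernetes nodes that mount these volumes also need the NFS client (nfs-utils) installed.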

Define a Pod:

    [root@localhost ~]# cat volum-nfs.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-vol-nfs
      namespace: default
    spec:
      containers:
      - name: myapp-vol
        image: ikubernetes/myapp:v1
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: html
        nfs:
          path: /mnt/data1
          server: 192.168.149.136

    [root@localhost ~]# kubectl apply -f volum-nfs.yml
    pod/pod-vol-nfs configured

Check the pods:

    [root@localhost ~]# kubectl get pods -o wide
    NAME                         READY   STATUS    RESTARTS   AGE     IP            NODE                    NOMINATED NODE   READINESS GATES
    my-deploy-798fd88bd7-hwpg4   1/1     Running   0          3d22h   10.244.0.28   localhost.localdomain   <none>           <none>
    my-deploy-798fd88bd7-s2t4p   1/1     Running   0          3d22h   10.244.0.29   localhost.localdomain   <none>           <none>
    my-deploy-798fd88bd7-x6nzh   1/1     Running   0          3d22h   10.244.0.30   localhost.localdomain   <none>           <none>
    pod-vol-nfs                  1/1     Running   0          2m39s   10.244.0.31   localhost.localdomain   <none>           <none>

On the NFS server, create /mnt/data1/index.html and write some content into it.

Request the pod's IP address to test; the content is returned:

    [root@localhost ~]# curl 10.244.0.31
    nfs test

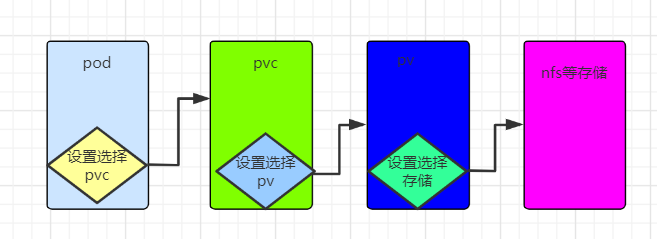

Using PVC and PV

NFS is used as the storage backend.

Define the PVs:

    [root@localhost ~]# cat pv-demo.yml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv1
      labels:
        name: pv1
    spec:
      nfs:
        path: /mnt/data1
        server: 192.168.149.136
      accessModes: ["ReadWriteMany","ReadWriteOnce"]
      capacity:
        storage: 1Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv2
      labels:
        name: pv2
    spec:
      nfs:
        path: /mnt/data2
        server: 192.168.149.136
      accessModes: ["ReadWriteMany","ReadWriteOnce"]
      capacity:
        storage: 2Gi
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: pv3
      labels:
        name: pv3
    spec:
      nfs:
        path: /mnt/data3
        server: 192.168.149.136
      accessModes: ["ReadWriteMany","ReadWriteOnce"]
      capacity:
        storage: 3Gi

Create and list them:

    [root@localhost ~]# kubectl apply -f pv-demo.yml
    persistentvolume/pv1 created
    [root@localhost ~]# kubectl get pv -o wide
    NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM   STORAGECLASS   REASON   AGE     VOLUMEMODE
    pv1    1Gi        RWO,RWX        Retain           Available                                   5m38s   Filesystem
    pv2    2Gi        RWO,RWX        Retain           Available                                   5m38s   Filesystem
    pv3    3Gi        RWO,RWX        Retain           Available                                   5m38s   Filesystem

Show the details of a PV:

    [root@localhost ~]# kubectl describe pv pv1
    Name:            pv1
    Labels:          name=pv1
    Annotations:
    Finalizers:      [kubernetes.io/pv-protection]
    StorageClass:
    Status:          Available
    Claim:
    Reclaim Policy:  Retain
    Access Modes:    RWO,RWX
    VolumeMode:      Filesystem
    Capacity:        1Gi
    Node Affinity:   <none>
    Message:
    Source:
        Type:      NFS (an NFS mount that lasts the lifetime of a pod)
        Server:    192.168.149.136
        Path:      /mnt/data1
        ReadOnly:  false
    Events:          <none>

Define a PVC (kubectl explain pvc.spec lists the available fields):

    [root@localhost ~]# cat pvc-demo.yml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: mypvc
      namespace: default
    spec:
      accessModes: ["ReadWriteMany"]
      resources:
        requests:
          storage: 1Gi

Create it and check:

    [root@localhost ~]# kubectl apply -f pvc-demo.yml
    persistentvolumeclaim/mypvc created
    [root@localhost ~]# kubectl get pvc -o wide
    NAME    STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS   AGE    VOLUMEMODE
    mypvc   Bound    pv1      1Gi        RWO,RWX                       111s   Filesystem

The claim is bound to pv1. List the PVs again:

    [root@localhost ~]# kubectl get pv
    NAME   CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS      CLAIM           STORAGECLASS   REASON   AGE
    pv1    1Gi        RWO,RWX        Retain           Bound       default/mypvc                           15m
    pv2    2Gi        RWO,RWX        Retain           Available                                           15m
    pv3    3Gi        RWO,RWX        Retain           Available                                           15m

Show the details of the PVC and of pv1:

    [root@localhost ~]# kubectl describe pvc mypvc
    Name:          mypvc
    Namespace:     default
    StorageClass:
    Status:        Bound
    Volume:        pv1
    Labels:        <none>
    Annotations:   pv.kubernetes.io/bind-completed: yes
                   pv.kubernetes.io/bound-by-controller: yes
    Finalizers:    [kubernetes.io/pvc-protection]
    Capacity:      1Gi
    Access Modes:  RWO,RWX
    VolumeMode:    Filesystem
    Mounted By:    <none>
    Events:        <none>
    [root@localhost ~]# kubectl describe pv pv1
    Name:            pv1
    Labels:          name=pv1
    Annotations:     pv.kubernetes.io/bound-by-controller: yes
    Finalizers:      [kubernetes.io/pv-protection]
    StorageClass:
    Status:          Bound
    Claim:           default/mypvc
    Reclaim Policy:  Retain
    Access Modes:    RWO,RWX
    VolumeMode:      Filesystem
    Capacity:        1Gi
    Node Affinity:   <none>
    Message:
    Source:
        Type:      NFS (an NFS mount that lasts the lifetime of a pod)
        Server:    192.168.149.136
        Path:      /mnt/data1
        ReadOnly:  false
    Events:          <none>

Create a pod that uses the PVC:

    [root@localhost ~]# cat pod-pvc-demo.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-pvc-demo
      namespace: default
    spec:
      containers:
      - name: myapp-pvc
        image: ikubernetes/myapp:v1
        volumeMounts:
        - name: html
          mountPath: /usr/share/nginx/html/
      volumes:
      - name: html
        persistentVolumeClaim:
          claimName: mypvc

Requesting the pod's address returns the expected content:

    [root@localhost ~]# curl 10.244.0.32
    nfs test pvc pv

The test above can be represented by a diagram.

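To use persistent storage, a pod requests it through a PVC. Kubernetes then looks for a PV that satisfies the claim (enough capacity, matching access modes and the same storage class); if one is found, the PV is bound to the PVC. The binding is one-to-one: once bound, a PV is not available to any other PVC. In this test the 1Gi ReadWriteMany request was bound to pv1, the smallest PV that satisfied it.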
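When a claim should land on one particular PV rather than on whichever matching PV the controller picks, the labels that pv-demo.yml puts on each PV can be used in a PVC selector. A sketch, assuming a hypothetical claim named mypvc-2 that targets pv2:

```yaml
# Hypothetical PVC "mypvc-2": pinned to pv2 through the "name" label
# defined on each PV in pv-demo.yml.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mypvc-2
  namespace: default
spec:
  storageClassName: ""      # empty string: bind only to PVs without a StorageClass
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Gi          # pv2 offers 2Gi, which still satisfies a 1Gi request
  selector:
    matchLabels:
      name: pv2             # only PVs labelled name=pv2 are candidates
```

Setting storageClassName to an empty string matters on clusters that have a default StorageClass: without it, the claim would be given the default class and would no longer match these class-less NFS PVs.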

8. Using ConfigMap

Creating a ConfigMap directly from the command line

    [root@localhost ~]# kubectl create configmap nginx-cm --from-literal=nginx_port=80 --from-literal=nginx_server=a.cmvideo.cn
    configmap/nginx-cm created

View the ConfigMap:

    [root@localhost ~]# kubectl get cm
    NAME       DATA   AGE
    nginx-cm   2      9s
    [root@localhost ~]# kubectl describe cm nginx-cm
    Name:         nginx-cm
    Namespace:    default
    Labels:       <none>
    Annotations:  <none>
    Data
    ====
    nginx_port:
    ----
    80
    nginx_server:
    ----
    a.cmvideo.cn
    Events:  <none>
    [root@localhost ~]# kubectl get cm nginx-cm -o yaml
    apiVersion: v1
    data:
      nginx_port: "80"
      nginx_server: a.cmvideo.cn
    kind: ConfigMap
    metadata:
      creationTimestamp: "2021-03-23T07:41:35Z"
      managedFields:
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:data:
            .: {}
            f:nginx_port: {}
            f:nginx_server: {}
        manager: kubectl
        operation: Update
        time: "2021-03-23T07:41:35Z"
      name: nginx-cm
      namespace: default
      resourceVersion: "65306"
      selfLink: /api/v1/namespaces/default/configmaps/nginx-cm
      uid: d146ec64-61ef-47bc-a8aa-e9290cd63ed8
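The same ConfigMap can also be declared in a manifest and created with kubectl apply -f. A minimal sketch derived from the -o yaml output above, leaving out the fields the server fills in (creationTimestamp, managedFields, resourceVersion, selfLink, uid):

```yaml
# Declarative equivalent of:
#   kubectl create configmap nginx-cm --from-literal=nginx_port=80 --from-literal=nginx_server=a.cmvideo.cn
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-cm
  namespace: default
data:
  nginx_port: "80"            # ConfigMap values are strings, so 80 must be quoted
  nginx_server: a.cmvideo.cn
```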

Creating a ConfigMap from a file (the file content becomes the value)

    [root@localhost ~]# cat www.conf
    server {
        server_name myapp.com;
        port 80;
        root /data/html;
    }
    [root@localhost ~]# kubectl create configmap nginx-cm-from-file --from-file=./www.conf
    configmap/nginx-cm-from-file created
    [root@localhost ~]# kubectl get cm
    NAME                 DATA   AGE
    nginx-cm             2      11m
    nginx-cm-from-file   1      14s
    [root@localhost ~]# kubectl get cm nginx-cm-from-file -o yaml
    apiVersion: v1
    data:
      www.conf: |
        server {
            server_name myapp.com;
            port 80;
            root /data/html;
        }
    kind: ConfigMap
    metadata:
      creationTimestamp: "2021-03-23T07:53:04Z"
      managedFields:
      - apiVersion: v1
        fieldsType: FieldsV1
        fieldsV1:
          f:data:
            .: {}
            f:www.conf: {}
        manager: kubectl
        operation: Update
        time: "2021-03-23T07:53:04Z"
      name: nginx-cm-from-file
      namespace: default
      resourceVersion: "66693"
      selfLink: /api/v1/namespaces/default/configmaps/nginx-cm-from-file
      uid: 5a1b9ea3-8f6e-4a55-9064-969273d1668e

Created this way, the key is the file name and the value is the content of the file.
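Likewise, nginx-cm-from-file can be written as a manifest: the file name becomes the key, and a YAML literal block (|) keeps the file's line breaks. One deliberate change in this sketch: port 80; is not an nginx directive, so it uses listen 80;, which is also what the file inside the container shows further down.

```yaml
# Declarative sketch of nginx-cm-from-file (key = file name, value = file content).
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-cm-from-file
  namespace: default
data:
  www.conf: |
    server {
        server_name myapp.com;
        listen 80;            # nginx uses "listen", not "port"
        root /data/html;
    }
```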

Create a pod that references the ConfigMap nginx-cm created above through environment variables:

    [root@localhost ~]# cat cm-1.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-cm
      namespace: default
    spec:
      containers:
      - name: myapp-cm-1
        image: ikubernetes/myapp:v1
        ports:
        - name: http
          containerPort: 80
        env:
        - name: nginx_port
          valueFrom:
            configMapKeyRef:
              name: nginx-cm
              key: nginx_port
        - name: nginx_server
          valueFrom:
            configMapKeyRef:
              name: nginx-cm
              key: nginx_server
    [root@localhost ~]# kubectl apply -f cm-1.yml
    pod/myapp-cm created
    [root@localhost ~]# kubectl get pod
    NAME                         READY   STATUS    RESTARTS   AGE
    my-deploy-798fd88bd7-hwpg4   1/1     Running   0          45d
    my-deploy-798fd88bd7-s2t4p   1/1     Running   0          45d
    my-deploy-798fd88bd7-x6nzh   1/1     Running   0          45d
    myapp-cm                     1/1     Running   0          10m

Enter the pod and check the environment variables:

    [root@localhost ~]# kubectl exec -it myapp-cm -- /bin/sh
    / # env|grep nginx_port
    nginx_port=80
    / # env|grep nginx
    nginx_port=80
    nginx_server=a.cmvideo.cn
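Listing every key under env with configMapKeyRef works, but when all keys of a ConfigMap should become environment variables, envFrom is shorter. A sketch, assuming a hypothetical pod name myapp-cm-envfrom:

```yaml
# Hypothetical pod: imports every key of nginx-cm as an environment variable.
apiVersion: v1
kind: Pod
metadata:
  name: myapp-cm-envfrom
  namespace: default
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    envFrom:
    - configMapRef:
        name: nginx-cm        # yields nginx_port=80 and nginx_server=a.cmvideo.cn
```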

Using the ConfigMap in a pod through a volume

    [root@localhost ~]# cat cm-2.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: myapp-cm1
      namespace: default
    spec:
      containers:
      - name: myapp-cm1
        image: ikubernetes/myapp:v2
        ports:
        - name: http
          containerPort: 8081
        volumeMounts:
        - name: nginx-conf
          mountPath: /etc/nginx/conf.d/
      volumes:
      - name: nginx-conf
        configMap:
          name: nginx-cm-from-file
    [root@localhost ~]# kubectl create -f cm-2.yml
    pod/myapp-cm1 created
    [root@localhost ~]# kubectl get pod
    NAME                         READY   STATUS    RESTARTS   AGE
    my-deploy-798fd88bd7-hwpg4   1/1     Running   0          46d
    my-deploy-798fd88bd7-s2t4p   1/1     Running   0          46d
    my-deploy-798fd88bd7-x6nzh   1/1     Running   0          46d
    myapp-cm1                    1/1     Running   0          19s

If the pod fails to start, check its logs with kubectl logs myapp-cm1.

Check the file inside the container:

    [root@localhost ~]# kubectl exec -it myapp-cm1 -- /bin/sh
    / # cat /etc/nginx/conf.d/www.conf
    server {
        server_name myapp.com;
        listen 80;
        root /data/html;
    }
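By default every key of the ConfigMap becomes a file named after the key. The items field selects specific keys and lets the file be renamed. A sketch of a variant of cm-2.yml, where the target file name myapp.conf is a hypothetical choice:

```yaml
# Hypothetical variant of cm-2.yml: project only the www.conf key,
# written as /etc/nginx/conf.d/myapp.conf.
apiVersion: v1
kind: Pod
metadata:
  name: myapp-cm1
  namespace: default
spec:
  containers:
  - name: myapp-cm1
    image: ikubernetes/myapp:v2
    volumeMounts:
    - name: nginx-conf
      mountPath: /etc/nginx/conf.d/   # the volume hides whatever the image already had here
  volumes:
  - name: nginx-conf
    configMap:
      name: nginx-cm-from-file
      items:
      - key: www.conf                 # key inside the ConfigMap
        path: myapp.conf              # file name created under mountPath
```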

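Change the port in the ConfigMap to 8082 with kubectl edit cm nginx-cm-from-file. After a short delay, the mounted file inside the container reflects the change:

    [root@localhost ~]# kubectl exec -it myapp-cm1 -- /bin/sh
    / # cat /etc/nginx/conf.d/www.conf
    server {
        server_name myapp.com;
        listen 8082;
        root /data/html;
    }

Only files from a ConfigMap volume are refreshed this way. Values injected as environment variables (as in myapp-cm) keep their old values until the pod is recreated, and nginx still has to reload its configuration before it actually listens on 8082.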

9. Permissions (RBAC)

Create a Role

    [root@localhost ~]# cat role-demo.yml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: Role
    metadata:
      name: pods-reader
      namespace: default
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      verbs:
      - get
      - list
      - watch

    [root@localhost ~]# kubectl apply -f role-demo.yml
    role.rbac.authorization.k8s.io/pods-reader created
    [root@localhost ~]# kubectl get role
    NAME          CREATED AT
    pods-reader   2021-03-29T06:36:23Z
    [root@localhost ~]# kubectl describe role pods-reader
    Name:         pods-reader
    Labels:       <none>
    Annotations:
    PolicyRule:
      Resources  Non-Resource URLs  Resource Names  Verbs
      ---------  -----------------  --------------  -----
      pods       []                 []              [get list watch]
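A Role only describes permissions; it takes effect once a RoleBinding grants it to a subject. A minimal sketch, assuming a hypothetical user testuser and a hypothetical binding name pods-reader-binding:

```yaml
# Hypothetical RoleBinding: grants the pods-reader Role to user "testuser"
# in the default namespace only.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pods-reader-binding
  namespace: default
subjects:
- kind: User
  name: testuser                       # hypothetical user name
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role                           # a Role (namespaced), not a ClusterRole
  name: pods-reader
  apiGroup: rbac.authorization.k8s.io
```

After applying it, kubectl auth can-i list pods --as testuser -n default should answer yes, while kubectl auth can-i delete pods --as testuser -n default should answer no, since delete is not among the Role's verbs.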