实验五:全连接神经网络手写数字识别实验

【实验目的】

理解神经网络原理,掌握神经网络前向推理和后向传播方法;

掌握使用pytorch框架训练和推理全连接神经网络模型的编程实现方法。

【实验内容】

1.使用pytorch框架,设计一个全连接神经网络,实现Mnist手写数字字符集的训练与识别。

【实验报告要求】

修改神经网络结构,改变层数观察层数对训练和检测时间,准确度等参数的影响;

修改神经网络的学习率,观察对训练和检测效果的影响;

修改神经网络结构,增强或减少神经元的数量,观察对训练的检测效果的影响。

【实验代码及结果截图】

#导入包

import torch

import torch.nn.functional as functional

import torch.optim as optim

from torch.utils.data import DataLoader

from torchvision import datasets

from torchvision import transforms

BATCH_SIZE = 64

MNIST_PATH = "../../../Data/MNIST"#定义路径

#softmax归一化

transform = transforms.Compose([

transforms.ToTensor(),

# 均值 标准差

transforms.Normalize((0.1307,), (0.3081,))

])

#定义数据,并下载数据集

train_dataset = datasets.MNIST(root=MNIST_PATH,

train=True,

download=True,

transform=transform)

test_dataset = datasets.MNIST(root=MNIST_PATH,

train=False,

download=True,

transform=transform)

#载入数据集

train_loader = DataLoader(train_dataset,shuffle=True,batch_size=BATCH_SIZE)

test_loader = DataLoader(test_dataset,shuffle=False,batch_size=BATCH_SIZE)

#全连接神经网络

class FullyNeuralNetwork(torch.nn.Module):

def __init__(self):

super().__init__()

# 建立5层的全连接层

self.layer_1 = torch.nn.Linear(784, 512)

self.layer_2 = torch.nn.Linear(512, 256)

self.layer_3 = torch.nn.Linear(256, 128)

self.layer_4 = torch.nn.Linear(128, 64)

self.layer_5 = torch.nn.Linear(64, 10)

#forward函数

def forward(self, data):

x = data.view(-1, 784)

x = functional.relu(self.layer_1(x))

x = functional.relu(self.layer_2(x))#使用relu函数作为激活函数

x = functional.relu(self.layer_4(x))

x = self.layer_5(x)

return x

#训练数据

def train(epoch, model, criterion, optimizer):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 100 == 0:

print('[%d, %5d] loss: %.3f' % (epoch, batch_idx, running_loss / 100))

running_loss = 0.0

#测试数据

def test(model):

correct = 0

total = 0

with torch.no_grad():

for images, labels in test_loader:

outputs = model(images)

_, predicated = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicated == labels).sum().item()

print("Accuracy on test set: %d %%" % (100 * correct / total))

if __name__ == "__main__":

model = FullyNeuralNetwork()

criterion = torch.nn.CrossEntropyLoss()

optimizer = optim.SGD(model.parameters(), lr=0.1, momentum=0.5)

for epoch in range(5):

train(epoch, model, criterion, optimizer)

test(model)

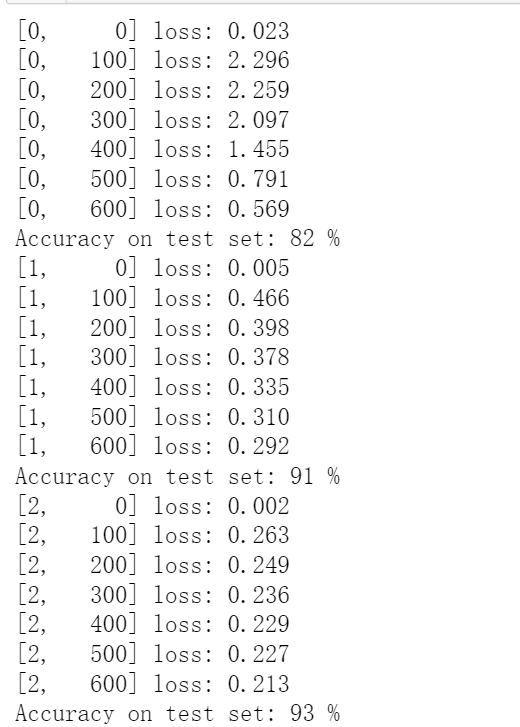

输出结果如下:

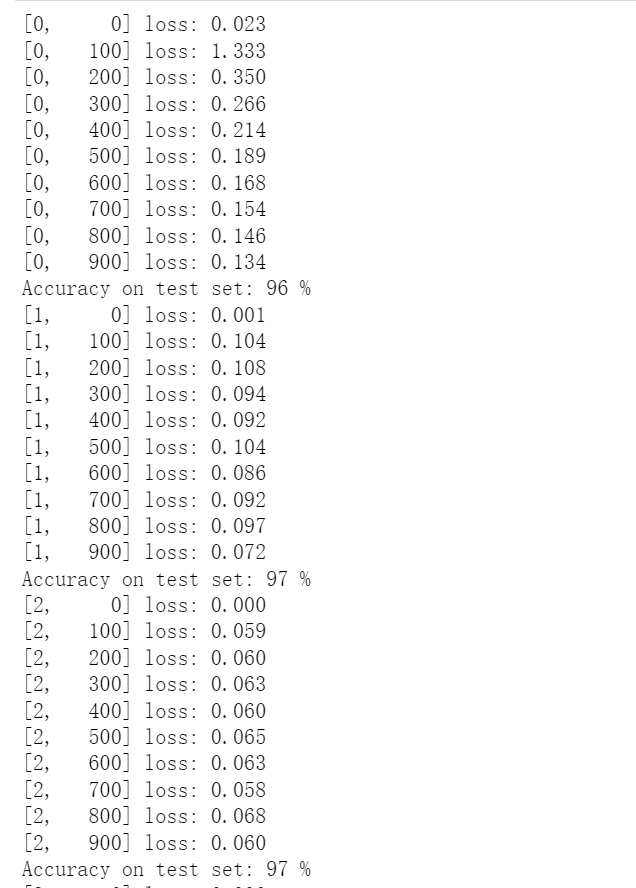

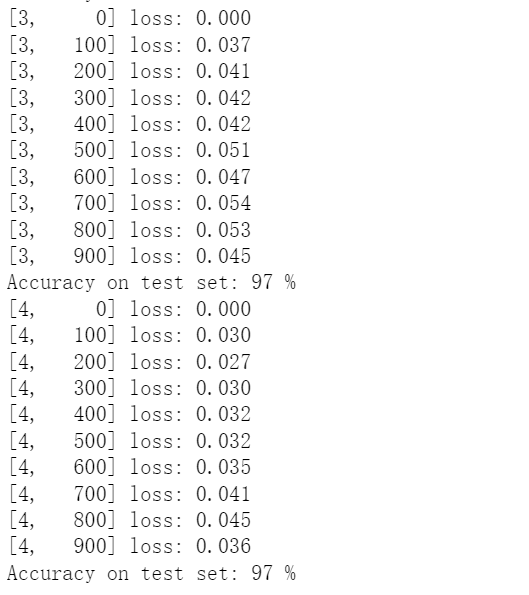

修改学习率为0.01,得出的结果如下所示: