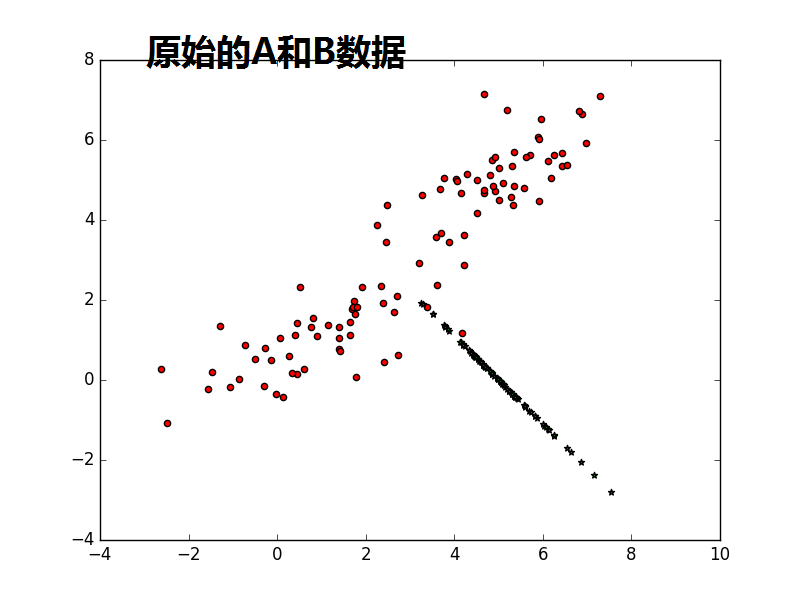

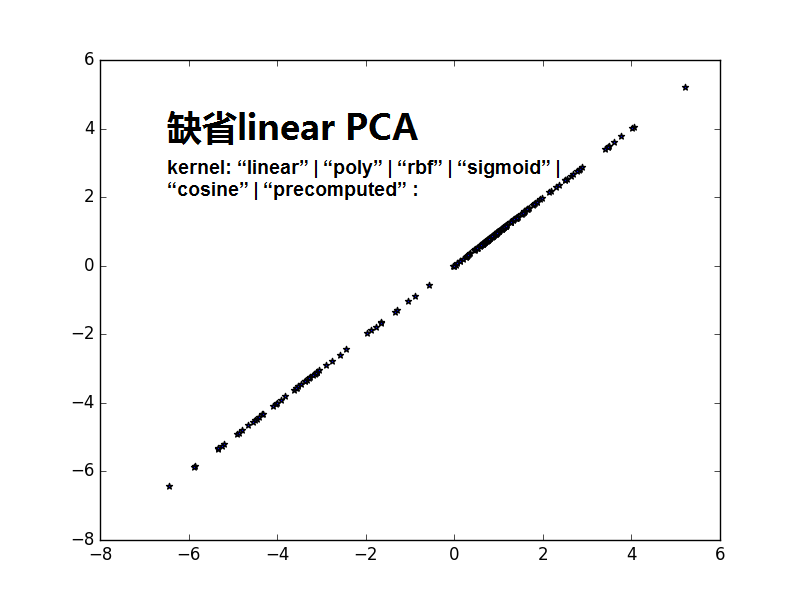

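    import numpy as np
    import matplotlib.pyplot as plt
    from sklearn import decomposition

    # Class A: two Gaussian blobs of 50 points each.
    # The covariance matrices are kept as originally written; they are not
    # symmetric, so NumPy may emit a RuntimeWarning when sampling.
    A1_mean = [1, 1]
    A1_cov = [[2, .99], [1, 1]]
    A1 = np.random.multivariate_normal(A1_mean, A1_cov, 50)

    A2_mean = [5, 5]
    A2_cov = [[2, .99], [1, 1]]
    A2 = np.random.multivariate_normal(A2_mean, A2_cov, 50)

    # A1 is 50x2 and A2 is 50x2; vstack stacks them row-wise into a 100x2 array.
    A = np.vstack((A1, A2))

    # Class B: a single Gaussian blob of 100 points.
    B_mean = [5, 0]
    B_cov = [[.5, -1], [-0.9, .5]]
    B = np.random.multivariate_normal(B_mean, B_cov, 100)

    # Plot the two classes in the original 2-D space.
    plt.scatter(A[:, 0], A[:, 1], c='r', marker='o')
    plt.scatter(B[:, 0], B[:, 1], c='g', marker='*')
    plt.show()

    # A naive idea: merge A and B, then project onto one dimension
    # and see whether the classes become separable.
    kpca = decomposition.KernelPCA(kernel='cosine', n_components=1)
    AB = np.vstack((A, B))
    AB_transformed = kpca.fit_transform(AB)
    # The single component is plotted against itself, so the points lie on a diagonal.
    plt.scatter(AB_transformed, AB_transformed, c='b', marker='*')
    plt.show()

    # Same projection with the default kernel (linear), for comparison.
    kpca = decomposition.KernelPCA(n_components=1)
    AB_transformed = kpca.fit_transform(AB)
    plt.scatter(AB_transformed, AB_transformed, c='b', marker='*')
    plt.show()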

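Note 1: The book says that cosine kernel PCA works better here than the default linear PCA. Is that because the cosine projection is more compact, so the data is less spread out?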
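One way to probe this question is to put a number on how well the two classes separate in the 1-D projection. The sketch below is only an illustration, not the book's method: the `separation` helper is hypothetical (squared gap between class means divided by the summed class variances), the seed is fixed for reproducibility, and the covariance matrices are symmetric stand-ins rather than the values used above. For intuition, the cosine kernel is k(x, y) = x·y / (‖x‖‖y‖), so it depends only on the angle between points and ignores their distance from the origin.

```python
import numpy as np
from sklearn.decomposition import KernelPCA

rng = np.random.default_rng(0)  # fixed seed: an assumption, for reproducibility

# Symmetric, positive-definite covariances chosen for this sketch only;
# they are NOT the values from the original code.
cov_a = [[2.0, 0.99], [0.99, 1.0]]
cov_b = [[0.5, -0.45], [-0.45, 0.5]]

A = np.vstack((
    rng.multivariate_normal([1, 1], cov_a, 50),
    rng.multivariate_normal([5, 5], cov_a, 50),
))
B = rng.multivariate_normal([5, 0], cov_b, 100)
AB = np.vstack((A, B))
labels = np.array([0] * len(A) + [1] * len(B))  # 0 = class A, 1 = class B


def separation(z, labels):
    """Hypothetical helper: squared gap between class means over summed class variances."""
    z0, z1 = z[labels == 0], z[labels == 1]
    return (z0.mean() - z1.mean()) ** 2 / (z0.var() + z1.var())


scores = {}
for kernel in ("linear", "cosine"):
    # Project the merged data onto a single kernel principal component.
    z = KernelPCA(kernel=kernel, n_components=1).fit_transform(AB).ravel()
    scores[kernel] = separation(z, labels)
    print(f"{kernel:>6}: separation = {scores[kernel]:.3f}")
```

A larger score means the class means are further apart relative to the within-class spread. Which kernel wins depends on the data, so it is worth rerunning with other seeds and with your own samples before drawing a conclusion.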

I still can't work out when to use it and when not to.

fit(X, y=None)

Fit the model from data in X.

Parameters: X : array-like, shape (n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.

fit_transform(X, y=None, **params)

Fit the model from data in X and transform X.

Parameters: X : array-like, shape (n_samples, n_features)

Training vector, where n_samples is the number of samples and n_features is the number of features.
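The difference between the two methods is easier to see side by side. The following is a minimal sketch on made-up random data (`X_train` and `X_new` are illustrative names, not from the original post): `fit_transform` learns the projection and returns the projected training data in one call, while `fit` only learns the projection, so a separate `transform` call is needed, which also works on data that was not seen during fitting. In both cases `y` is ignored, since KernelPCA is unsupervised.

```python
import numpy as np
from sklearn.decomposition import KernelPCA

rng = np.random.default_rng(0)        # fixed seed: an assumption, for reproducibility
X_train = rng.normal(size=(100, 2))   # illustrative training data
X_new = rng.normal(size=(10, 2))      # illustrative unseen data

# Option 1: fit and project the training data in a single call.
kpca_a = KernelPCA(kernel="cosine", n_components=1)
Z_train_a = kpca_a.fit_transform(X_train)

# Option 2: fit first, then project explicitly.
kpca_b = KernelPCA(kernel="cosine", n_components=1).fit(X_train)
Z_train_b = kpca_b.transform(X_train)

# Both routes should agree up to floating-point error.
print(np.allclose(Z_train_a, Z_train_b, atol=1e-6))

# A fitted model can project new samples without refitting.
Z_new = kpca_b.transform(X_new)
print(Z_train_a.shape, Z_new.shape)
```

As a rule of thumb, `fit_transform` is convenient when only the training data needs projecting, and `fit` followed by `transform` is the pattern to use when the same projection must be applied to other data later.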
