Scraping letter information (updated)

    import requests
    from bs4 import BeautifulSoup

    url = 'http://www.beijing.gov.cn/hudong/hdjl/com.web.search.mailList.flow'
    headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:73.0) Gecko/20100101 Firefox/73.0'}

    resp = requests.post(url, headers=headers)
    # print(resp)
    resp.encoding = resp.apparent_encoding  # decode the response with the auto-detected character set
    html = resp.text
    # Parse the HTML into a BeautifulSoup object
    soup = BeautifulSoup(html, 'html.parser')
    # print(soup)
    result = soup.find('body').find('div', {'class': 'conouter'}).find('div', {'id': 'mailul'}).find_all('div', {
        'class': 'row clearfix my-2 list-group o-border-bottom2 p-3'})
    # print(result)
    for info in result:
        leixing = info.find('a').find('font').get_text()  # letter type
        biaoti = info.find('a').find('span').get_text()  # title
        shijian = info.find('div', {'class': 'o-font2 col-md-3 col-xs-12 text-muted'}).get_text()  # time
        bumen = info.find('div', {'class': 'o-font2 col-md-5 col-xs-12 text-muted'}).get_text()  # department
        # Build the dict directly instead of joining the fields and re-splitting on
        # spaces, which breaks when a title contains a space. The names str, list
        # and dict are avoided because they shadow Python built-ins.
        letter = {
            'leixing': leixing[2:4].strip(),
            'biaoti': biaoti.strip(),
            'shijian': shijian[5:].strip(),
            'bumen': bumen[6:].strip(),
        }
        print(letter)
    # Pagination block, printed for inspection
    page = soup.find('body').find_all('div', {'id': 'pageDiv1'})
    print(page)

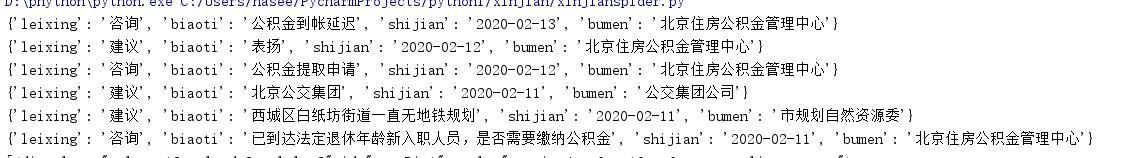

运行结果:

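For now this only scrapes a single page. Tomorrow I plan to look into crawling across multiple pages and saving the results to a MySQL database.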
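As a starting point for the database step, here is a minimal sketch of how the scraped dicts might be written through a DB-API connection. It is only an illustration under several assumptions: the table name `letters`, the `save_letters` helper and the sample rows are all made up, and the standard-library `sqlite3` module is used as a stand-in for MySQL so the snippet runs without a server. With a MySQL driver such as PyMySQL the placeholder would be `%s` rather than `?`, and the connection would come from that driver instead.

```python
import sqlite3

# Hypothetical table; the column names simply mirror the dict keys used by the scraper.
CREATE_SQL = """
CREATE TABLE IF NOT EXISTS letters (
    leixing TEXT,
    biaoti  TEXT,
    shijian TEXT,
    bumen   TEXT
)
"""


def save_letters(conn, letters, placeholder="?"):
    """Insert a list of letter dicts through a DB-API connection.

    placeholder is "?" for sqlite3; MySQL drivers use "%s" (assumption to adjust).
    """
    marks = ", ".join([placeholder] * 4)
    sql = f"INSERT INTO letters (leixing, biaoti, shijian, bumen) VALUES ({marks})"
    rows = [(d["leixing"], d["biaoti"], d["shijian"], d["bumen"]) for d in letters]
    cur = conn.cursor()
    cur.executemany(sql, rows)  # parameterized, so values are never pasted into the SQL text
    conn.commit()
    cur.close()
    return len(rows)


# In-memory SQLite database, used here only as a stand-in for MySQL.
conn = sqlite3.connect(":memory:")
conn.execute(CREATE_SQL)

# Made-up sample rows, not real scraped data.
sample = [
    {"leixing": "type A", "biaoti": "sample title with spaces",
     "shijian": "2020-01-01", "bumen": "sample department"},
    {"leixing": "type B", "biaoti": "another sample title",
     "shijian": "2020-01-02", "bumen": "another department"},
]

saved = save_letters(conn, sample)
stored = conn.execute(
    "SELECT leixing, biaoti, shijian, bumen FROM letters ORDER BY shijian"
).fetchall()
print(saved, stored)
```

In the scraper, the dicts built inside the loop could be collected into a list and passed to a helper like this once per page, instead of only being printed.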