13 Spark cluster testing and basic commands

I. Starting the environment

Startup script: bigdata-start.sh

#!/bin/bash
# Start ZooKeeper on every node
echo -e "\n========Start run zkServer========\n"
for nodeZk in node180 node181 node182; do
  ssh $nodeZk "zkServer.sh start; jps"
done
sleep 10
# Start the HDFS JournalNode on this node
echo -e "\n========Start run journalnode========\n"
hdfs --daemon start journalnode
jps
sleep 5
echo -e "\n========Start run dfs========\n"
start-dfs.sh
jps
sleep 5
echo -e "\n========Start run yarn========\n"
start-yarn.sh
sleep 5
echo -e "\n========Start run hbase========\n"
start-hbase.sh
sleep 5
# Start a Kafka broker on every node; -daemon runs it in the background
echo -e "\n========Start run kafka========\n"
for nodeKa in node180 node181 node182; do
  ssh $nodeKa "kafka-server-start.sh -daemon /opt/module/kafka_2.12-2.0.1/config/server.properties; jps"
done
sleep 15
echo -e "\n========Start run spark========\n"
cd /opt/module/spark-3.0.0-hadoop3.2/sbin/
./start-all.sh
sleep 5
jps
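The startup script prints `jps` output after each step, but the process lists still have to be checked by eye. As a rough sketch, a small helper can scan `jps` output for the main-class names you expect. The function name `check_daemons` is hypothetical, and which daemons should be running on which node is an assumption that depends on your own cluster layout.

```shell
#!/bin/bash
# Hypothetical helper: reads `jps` output on stdin and prints every expected
# daemon name (passed as arguments) that does not appear in it.
check_daemons() {
  local jps_output
  local name
  local missing=0
  jps_output=$(cat)
  for name in "$@"; do
    # jps prints "<pid> <MainClass>", so compare the second column exactly
    if ! printf '%s\n' "$jps_output" |
        awk -v n="$name" '$2 == n { found = 1 } END { exit !found }'; then
      echo "missing: $name"
      missing=1
    fi
  done
  return $missing
}

# Canned sample input (made-up PIDs) so the sketch runs without a cluster
sample='1201 QuorumPeerMain
1350 NameNode
1422 DataNode
1980 Master
2051 Worker
2102 Jps'
printf '%s\n' "$sample" |
  check_daemons QuorumPeerMain NameNode DataNode Master Worker Kafka ||
  echo "some expected daemons are not running"
```

On a real node the input would come from `jps` itself, for example `jps | check_daemons QuorumPeerMain Kafka Master Worker`.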

II. Test preparation

1. Create the Spark input path on the HDFS cluster

hdfs dfs -mkdir -p /spark/input

2. Create the test data locally

3. Upload it to the HDFS cluster
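The original does not show what the local test file contains. A minimal sketch, assuming a plain-text file named `wordcount` (the name used by the upload command) holding a few lines of space-separated words; the three sample lines here are an assumption chosen to match the walkthrough later in this section:

```shell
# Assumed sample content: three lines of space-separated words
cat > wordcount <<'EOF'
word1 word2 word
word1 word3 word
word1 word4 word
EOF

# Quick sanity check before uploading
cat wordcount
```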

hdfs dfs -put wordcount /spark/input

III. Commands

A. Spark Standalone run mode

1. Run a Spark-Shell test on node180

cd /opt/module/spark-3.0.0-hadoop3.2/bin

./spark-shell --master spark://node180:7077

scala> val mapRdd=sc.textFile("/spark/input").flatMap(_.split(" ")).map(word=>(word,1)).reduceByKey(_+_).map(entry=>(entry._2,entry._1))

mapRdd: org.apache.spark.rdd.RDD[(Int, String)] = MapPartitionsRDD[5] at map at <console>:24

# If the parallelism of the sort below is set to 2, memory is exhausted: each Executor (Task (JVM)) needs at least 480 MB, and a parallelism of 2 doubles the memory required

scala> val sortRdd=mapRdd.sortByKey(false,1)

sortRdd: org.apache.spark.rdd.RDD[(Int, String)] = ShuffledRDD[6] at sortByKey at <console>:26

scala> val mapRdd2=sortRdd.map(entry=>(entry._2,entry._1))

mapRdd2: org.apache.spark.rdd.RDD[(String, Int)] = MapPartitionsRDD[7] at map at <console>:28

# The save step below is also very memory-intensive

scala> mapRdd2.saveAsTextFile("/spark/output")

scala> :quit

Or, as a single chained expression:

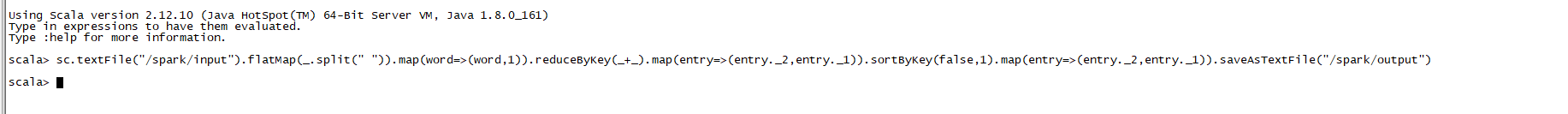

sc.textFile("/spark/input").flatMap(_.split(" ")).map(word=>(word,1)).reduceByKey(_+_).map(entry=>(entry._2,entry._1)).sortByKey(false,1).map(entry=>(entry._2,entry._1)).saveAsTextFile("/spark/output")

Press Enter to run the command.

Explanation of each step:

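sc (the Spark context, available as 'sc' in the shell)

textFile (reads the file, one element per line)

line1

line2

line3

_.split(" ") (splits each line on spaces)

[[word1,word2,word],[word1,word3,word],[word1,word4,word]]

flatMap (flattens the per-line arrays into one collection of words)

[word1,word2,word,word1,word3,word,word1,word4,word]

map (a map task that turns each word into a (word, 1) pair)

(word1,1),(word2,1),(word,1),(word1,1),(word3,1),(word,1),(word1,1),(word4,1),(word,1)

reduceByKey (sums the counts for each key)

[(word1,3),(word2,1),(word,3),(word3,1),(word4,1)]

map (a map task that swaps each pair to (count, word))

[(3,word1),(1,word2),(3,word),(1,word3),(1,word4)]

sortByKey (sorts by key; the first argument, false, requests descending order and the second is the number of partitions. If the parallelism is set to 2, memory is exhausted: each Executor (Task (JVM)) needs at least 480 MB, and a parallelism of 2 doubles the memory required)

[(3,word1),(3,word),(1,word2),(1,word3),(1,word4)]

map (a map task that swaps each pair back to (word, count))

[(word1,3),(word,3),(word2,1),(word3,1),(word4,1)]

saveAsTextFile (saves the result to the given directory)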

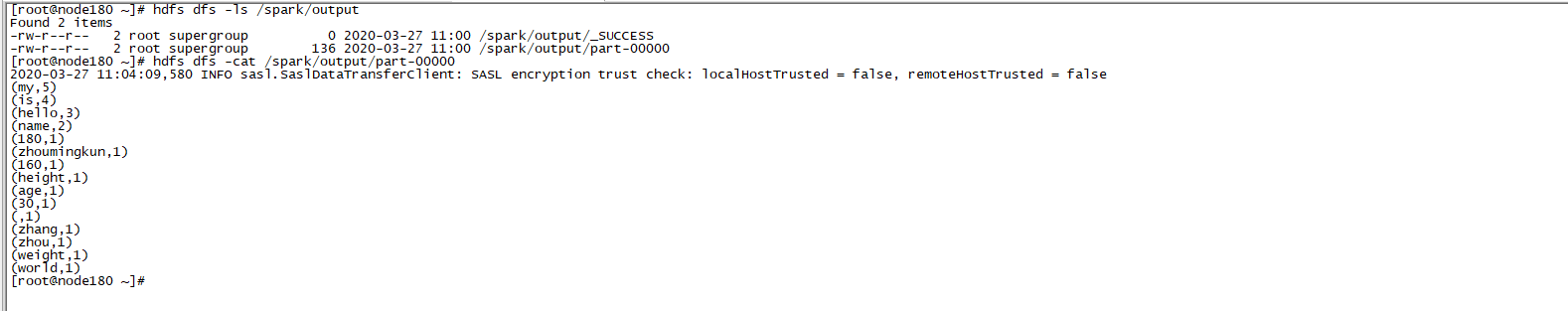
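The pipeline explained above can be mirrored locally with standard Unix tools, which gives a quick way to cross-check the counts in the Spark output. This is only a sketch: the function name `local_wordcount` is hypothetical, the sample lines are the ones assumed in the walkthrough, and the order of words with equal counts may differ from what Spark writes.

```shell
#!/bin/bash
# Hypothetical local equivalent of the Spark word count; reads text on stdin
local_wordcount() {
  tr -s ' ' '\n' |                      # flatMap(_.split(" ")): one word per line
    sort |                              # bring identical words together
    uniq -c |                           # reduceByKey(_+_): "<count> <word>"
    sort -k1,1nr |                      # sortByKey(false): highest count first
    awk '{ print "(" $2 "," $1 ")" }'   # final map: back to (word,count)
}

printf '%s\n' 'word1 word2 word' 'word1 word3 word' 'word1 word4 word' |
  local_wordcount
```

To compare against the cluster result, the same function can be fed the local input file, for example `local_wordcount < wordcount`, and the lines checked against the contents of `/spark/output`.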

Check whether the output now exists on the HDFS cluster

hdfs dfs -ls /spark/output

Print the file to the console

hdfs dfs -cat /spark/output/part-00000

2. Run Spark-Submit in Standalone mode, setting the master parameter to spark://node180:7077

spark-submit --class org.apache.spark.examples.JavaSparkPi --master spark://node180:7077 ../examples/jars/spark-examples_2.12-3.0.0-preview2.jar

2020-03-27 11:09:57,181 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-03-27 11:09:57,619 INFO spark.SparkContext: Running Spark version 3.0.0-preview2

2020-03-27 11:09:57,721 INFO resource.ResourceUtils: ==============================================================

2020-03-27 11:09:57,725 INFO resource.ResourceUtils: Resources for spark.driver:

2020-03-27 11:09:57,725 INFO resource.ResourceUtils: ==============================================================

2020-03-27 11:09:57,726 INFO spark.SparkContext: Submitted application: JavaSparkPi

2020-03-27 11:09:57,849 INFO spark.SecurityManager: Changing view acls to: root

2020-03-27 11:09:57,849 INFO spark.SecurityManager: Changing modify acls to: root

2020-03-27 11:09:57,849 INFO spark.SecurityManager: Changing view acls groups to:

2020-03-27 11:09:57,849 INFO spark.SecurityManager: Changing modify acls groups to:

2020-03-27 11:09:57,849 INFO spark.SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(root); groups with view permissions: Set(); users with modify permissions: Set(root); groups with modify permissions: Set()

2020-03-27 11:09:58,285 INFO util.Utils: Successfully started service 'sparkDriver' on port 46828.

2020-03-27 11:09:58,308 INFO spark.SparkEnv: Registering MapOutputTracker

2020-03-27 11:09:58,336 INFO spark.SparkEnv: Registering BlockManagerMaster

2020-03-27 11:09:58,352 INFO storage.BlockManagerMasterEndpoint: Using org.apache.spark.storage.DefaultTopologyMapper for getting topology information

2020-03-27 11:09:58,352 INFO storage.BlockManagerMasterEndpoint: BlockManagerMasterEndpoint up

2020-03-27 11:09:58,374 INFO spark.SparkEnv: Registering BlockManagerMasterHeartbeat

2020-03-27 11:09:58,384 INFO storage.DiskBlockManager: Created local directory at /tmp/blockmgr-9b0a1b6a-1682-4454-ad47-037957bfa1cb

2020-03-27 11:09:58,403 INFO memory.MemoryStore: MemoryStore started with capacity 366.3 MiB

2020-03-27 11:09:58,416 INFO spark.SparkEnv: Registering OutputCommitCoordinator

2020-03-27 11:09:58,513 INFO util.log: Logging initialized @4411ms to org.sparkproject.jetty.util.log.Slf4jLog

2020-03-27 11:09:58,577 INFO server.Server: jetty-9.4.z-SNAPSHOT; built: 2019-04-29T20:42:08.989Z; git: e1bc35120a6617ee3df052294e433f3a25ce7097; jvm 1.8.0_161-b12

2020-03-27 11:09:58,594 INFO server.Server: Started @4493ms

2020-03-27 11:09:58,614 INFO server.AbstractConnector: Started ServerConnector@7957dc72{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2020-03-27 11:09:58,614 INFO util.Utils: Successfully started service 'SparkUI' on port 4040.

2020-03-27 11:09:58,632 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@344561e0{/jobs,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,634 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2f40a43{/jobs/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,634 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@69c43e48{/jobs/job,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,637 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1c807b1d{/jobs/job/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,637 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1b39fd82{/stages,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,638 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@21680803{/stages/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,638 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@c8b96ec{/stages/stage,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,640 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7048f722{/stages/stage/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,640 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@58a55449{/stages/pool,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,641 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6e0ff644{/stages/pool/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,641 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2a2bb0eb{/storage,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,642 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@2d0566ba{/storage/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,642 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@7728643a{/storage/rdd,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,643 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@5167268{/storage/rdd/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,643 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@28c0b664{/environment,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,644 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@1af7f54a{/environment/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,644 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@436390f4{/executors,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,645 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@68ed96ca{/executors/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,645 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@3228d990{/executors/threadDump,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,650 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@50b8ae8d{/executors/threadDump/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,656 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@51c929ae{/static,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,657 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@33a2499c{/,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,658 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@33c2bd{/api,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,659 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@4d4d8fcf{/jobs/job/kill,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,660 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6f0628de{/stages/stage/kill,null,AVAILABLE,@Spark}

2020-03-27 11:09:58,662 INFO ui.SparkUI: Bound SparkUI to 0.0.0.0, and started at http://node180:4040

2020-03-27 11:09:58,681 INFO spark.SparkContext: Added JAR file:/opt/module/spark-3.0.0-hadoop3.2/bin/../examples/jars/spark-examples_2.12-3.0.0-preview2.jar at spark://node180:46828/jars/spark-examples_2.12-3.0.0-preview2.jar with timestamp 1585278598681

2020-03-27 11:09:59,003 INFO client.StandaloneAppClient$ClientEndpoint: Connecting to master spark://node180:7077...

2020-03-27 11:09:59,054 INFO client.TransportClientFactory: Successfully created connection to node180/192.168.0.180:7077 after 30 ms (0 ms spent in bootstraps)

2020-03-27 11:09:59,691 INFO cluster.StandaloneSchedulerBackend: Connected to Spark cluster with app ID app-20200327110959-0001

2020-03-27 11:09:59,697 INFO util.Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 33573.

2020-03-27 11:09:59,698 INFO netty.NettyBlockTransferService: Server created on node180:33573

2020-03-27 11:09:59,699 INFO storage.BlockManager: Using org.apache.spark.storage.RandomBlockReplicationPolicy for block replication policy

2020-03-27 11:09:59,707 INFO storage.BlockManagerMaster: Registering BlockManager BlockManagerId(driver, node180, 33573, None)

2020-03-27 11:09:59,721 INFO storage.BlockManagerMasterEndpoint: Registering block manager node180:33573 with 366.3 MiB RAM, BlockManagerId(driver, node180, 33573, None)

2020-03-27 11:09:59,724 INFO storage.BlockManagerMaster: Registered BlockManager BlockManagerId(driver, node180, 33573, None)

2020-03-27 11:09:59,725 INFO storage.BlockManager: Initialized BlockManager: BlockManagerId(driver, node180, 33573, None)

2020-03-27 11:09:59,859 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20200327110959-0001/0 on worker-20200327100412-192.168.0.182-44876 (192.168.0.182:44876) with 2 core(s)

2020-03-27 11:09:59,867 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20200327110959-0001/0 on hostPort 192.168.0.182:44876 with 2 core(s), 1024.0 MiB RAM

2020-03-27 11:09:59,870 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20200327110959-0001/1 on worker-20200327100437-192.168.0.180-36332 (192.168.0.180:36332) with 2 core(s)

2020-03-27 11:09:59,870 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20200327110959-0001/1 on hostPort 192.168.0.180:36332 with 2 core(s), 1024.0 MiB RAM

2020-03-27 11:09:59,929 INFO handler.ContextHandler: Started o.s.j.s.ServletContextHandler@6ff37443{/metrics/json,null,AVAILABLE,@Spark}

2020-03-27 11:09:59,930 INFO client.StandaloneAppClient$ClientEndpoint: Executor added: app-20200327110959-0001/2 on worker-20200327100423-192.168.0.181-34422 (192.168.0.181:34422) with 2 core(s)

2020-03-27 11:09:59,930 INFO cluster.StandaloneSchedulerBackend: Granted executor ID app-20200327110959-0001/2 on hostPort 192.168.0.181:34422 with 2 core(s), 1024.0 MiB RAM

2020-03-27 11:09:59,933 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20200327110959-0001/0 is now RUNNING

2020-03-27 11:09:59,962 INFO cluster.StandaloneSchedulerBackend: SchedulerBackend is ready for scheduling beginning after reached minRegisteredResourcesRatio: 0.0

2020-03-27 11:10:00,056 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20200327110959-0001/2 is now RUNNING

2020-03-27 11:10:00,997 INFO spark.SparkContext: Starting job: reduce at JavaSparkPi.java:54

2020-03-27 11:10:01,010 INFO scheduler.DAGScheduler: Got job 0 (reduce at JavaSparkPi.java:54) with 2 output partitions

2020-03-27 11:10:01,010 INFO scheduler.DAGScheduler: Final stage: ResultStage 0 (reduce at JavaSparkPi.java:54)

2020-03-27 11:10:01,011 INFO scheduler.DAGScheduler: Parents of final stage: List()

2020-03-27 11:10:01,012 INFO scheduler.DAGScheduler: Missing parents: List()

2020-03-27 11:10:01,016 INFO scheduler.DAGScheduler: Submitting ResultStage 0 (MapPartitionsRDD[1] at map at JavaSparkPi.java:50), which has no missing parents

2020-03-27 11:10:01,089 INFO memory.MemoryStore: Block broadcast_0 stored as values in memory (estimated size 3.9 KiB, free 366.3 MiB)

2020-03-27 11:10:01,129 INFO memory.MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 2.1 KiB, free 366.3 MiB)

2020-03-27 11:10:01,132 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on node180:33573 (size: 2.1 KiB, free: 366.3 MiB)

2020-03-27 11:10:01,134 INFO spark.SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1206

2020-03-27 11:10:01,145 INFO scheduler.DAGScheduler: Submitting 2 missing tasks from ResultStage 0 (MapPartitionsRDD[1] at map at JavaSparkPi.java:50) (first 15 tasks are for partitions Vector(0, 1))

2020-03-27 11:10:01,146 INFO scheduler.TaskSchedulerImpl: Adding task set 0.0 with 2 tasks

2020-03-27 11:10:09,546 INFO client.StandaloneAppClient$ClientEndpoint: Executor updated: app-20200327110959-0001/1 is now RUNNING

2020-03-27 11:10:11,028 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Registered executor NettyRpcEndpointRef(spark-client://Executor) (192.168.0.180:60514) with ID 1

2020-03-27 11:10:11,196 INFO storage.BlockManagerMasterEndpoint: Registering block manager 192.168.0.180:36496 with 366.3 MiB RAM, BlockManagerId(1, 192.168.0.180, 36496, None)

2020-03-27 11:10:11,322 INFO scheduler.TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, 192.168.0.180, executor 1, partition 0, PROCESS_LOCAL, 1007348 bytes)

2020-03-27 11:10:11,414 INFO scheduler.TaskSetManager: Starting task 1.0 in stage 0.0 (TID 1, 192.168.0.180, executor 1, partition 1, PROCESS_LOCAL, 1007353 bytes)

2020-03-27 11:10:11,891 INFO storage.BlockManagerInfo: Added broadcast_0_piece0 in memory on 192.168.0.180:36496 (size: 2.1 KiB, free: 366.3 MiB)

2020-03-27 11:10:12,375 INFO scheduler.TaskSetManager: Finished task 1.0 in stage 0.0 (TID 1) in 1047 ms on 192.168.0.180 (executor 1) (1/2)

2020-03-27 11:10:12,380 INFO scheduler.TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1147 ms on 192.168.0.180 (executor 1) (2/2)

2020-03-27 11:10:12,381 INFO scheduler.TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

2020-03-27 11:10:12,386 INFO scheduler.DAGScheduler: ResultStage 0 (reduce at JavaSparkPi.java:54) finished in 11.350 s

2020-03-27 11:10:12,389 INFO scheduler.DAGScheduler: Job 0 is finished. Cancelling potential speculative or zombie tasks for this job

2020-03-27 11:10:12,389 INFO scheduler.TaskSchedulerImpl: Killing all running tasks in stage 0: Stage finished

2020-03-27 11:10:12,402 INFO scheduler.DAGScheduler: Job 0 finished: reduce at JavaSparkPi.java:54, took 11.404438 s

Pi is roughly 3.13894

2020-03-27 11:10:12,408 INFO server.AbstractConnector: Stopped Spark@7957dc72{HTTP/1.1,[http/1.1]}{0.0.0.0:4040}

2020-03-27 11:10:12,438 INFO ui.SparkUI: Stopped Spark web UI at http://node180:4040

2020-03-27 11:10:12,452 INFO cluster.StandaloneSchedulerBackend: Shutting down all executors

2020-03-27 11:10:12,453 INFO cluster.CoarseGrainedSchedulerBackend$DriverEndpoint: Asking each executor to shut down

2020-03-27 11:10:12,511 INFO spark.MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!

2020-03-27 11:10:12,554 INFO memory.MemoryStore: MemoryStore cleared

2020-03-27 11:10:12,554 INFO storage.BlockManager: BlockManager stopped

2020-03-27 11:10:12,559 INFO storage.BlockManagerMaster: BlockManagerMaster stopped

2020-03-27 11:10:12,563 INFO scheduler.OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!

2020-03-27 11:10:12,570 INFO spark.SparkContext: Successfully stopped SparkContext

2020-03-27 11:10:12,574 INFO util.ShutdownHookManager: Shutdown hook called

2020-03-27 11:10:12,574 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-faf020e1-8d74-415d-ae02-a1713b0eb57a

2020-03-27 11:10:12,576 INFO util.ShutdownHookManager: Deleting directory /tmp/spark-6d587ff5-d80f-479c-9d60-3e3280324118

Reference: https://www.cnblogs.com/mmzs/p/8202836.html