IV. Hadoop Cluster Deployment

1. Prepare the environment

CentOS 7.4

Hadoop 3.2.1 (http://www.apache.org/dyn/closer.cgi/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz)

JDK 1.8.x

2. Configure environment variables

Command: vi /etc/profile

#hadoop
export HADOOP_HOME=/opt/module/hadoop-3.2.1
export PATH=$PATH:$HADOOP_HOME/bin
export PATH=$PATH:$HADOOP_HOME/sbin
export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_YARN_HOME=$HADOOP_HOME
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib/native"
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

Command: :wq (save and exit vi)

Command: source /etc/profile (reloads the profile so the new variables take effect in the current shell)
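After reloading the profile it can help to confirm that the variables are actually set. The following is a minimal sketch, not part of the original setup; check_hadoop_env is a hypothetical helper name, and the list of variables simply mirrors the exports above.

```shell
#!/bin/sh
# Report which of the variables exported in /etc/profile are unset or empty.
# check_hadoop_env is a hypothetical helper, not a Hadoop tool.
check_hadoop_env() {
    missing=0
    for name in HADOOP_HOME HADOOP_CONF_DIR HADOOP_COMMON_HOME \
                HADOOP_HDFS_HOME HADOOP_MAPRED_HOME HADOOP_YARN_HOME; do
        # Indirect lookup of the variable called $name (POSIX-compatible).
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name"
            missing=1
        else
            echo "ok: $name=$value"
        fi
    done
    # Return 0 only when every variable is set.
    return "$missing"
}
```

Calling check_hadoop_env in the shell where /etc/profile was sourced prints one line per variable and returns a non-zero status if any is missing.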

3. Create directories

(Run each command separately)

mkdir /root/hadoop
mkdir /root/hadoop/tmp
mkdir /root/hadoop/var
mkdir /root/hadoop/dfs
mkdir /root/hadoop/dfs/name
mkdir /root/hadoop/dfs/data
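The same directory tree can be created in one step with mkdir -p, which creates missing parent directories and does not fail when a directory already exists. This is a small sketch; make_hadoop_dirs is a hypothetical helper name, and the base path defaults to the /root/hadoop location used above.

```shell
#!/bin/sh
# make_hadoop_dirs is a hypothetical helper; the default base path is the
# /root/hadoop location used in this guide.
make_hadoop_dirs() {
    base=${1:-/root/hadoop}
    # -p creates missing parents and is safe to run repeatedly.
    mkdir -p "$base/tmp" "$base/var" "$base/dfs/name" "$base/dfs/data"
}
```

Running make_hadoop_dirs with no argument creates the six directories listed above; passing another base path is handy for trying it out somewhere harmless first.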

4. Edit the configuration in etc/hadoop

(1) Edit core-site.xml

Add the following inside the <configuration> element:

<!-- Default file system URI. With a single NameNode this is hdfs:// followed by the host name or IP address and the port. -->
<!-- For the HA cluster it is replaced by a logical cluster name. -->
<!--
<property>
  <name>fs.default.name</name>
  <value>hdfs://node180:9000</value>
</property>
-->
<property>
  <name>fs.defaultFS</name>
  <value>hdfs://mycluster</value>
</property>
<!-- Hadoop temporary directory -->
<property>
  <name>hadoop.tmp.dir</name>
  <value>/root/hadoop/tmp</value>
  <description>A base for other temporary directories.</description>
</property>
<!-- ZooKeeper quorum that manages HDFS failover -->
<property>
  <name>ha.zookeeper.quorum</name>
  <value>node180:2181,node181:2181,node182:2181</value>
</property>
<!-- Optional IPC settings in core-site.xml that prevent a ConnectException when connecting to the JournalNode service
<property>
  <name>ipc.client.connect.max.retries</name>
  <value>100</value>
  <description>Indicates the number of retries a client will make to establish a server connection.</description>
</property>
<property>
  <name>ipc.client.connect.retry.interval</name>
  <value>10000</value>
  <description>Indicates the number of milliseconds a client will wait for before retrying to establish a server connection.</description>
</property>
-->

(2) Edit hdfs-site.xml

Add the following inside the <configuration> element:

<!-- dfs.name.dir is the deprecated alias of dfs.namenode.name.dir -->
<property>
  <name>dfs.name.dir</name>
  <value>/root/hadoop/dfs/name</value>
  <description>Path on the local filesystem where the NameNode stores the namespace and transaction logs persistently.</description>
</property>
<!-- dfs.data.dir is the deprecated alias of dfs.datanode.data.dir -->
<property>
  <name>dfs.data.dir</name>
  <value>/root/hadoop/dfs/data</value>
  <description>Comma separated list of paths on the local filesystem of a DataNode where it should store its blocks.</description>
</property>
<!-- Number of replicas kept for each block -->
<property>
  <name>dfs.replication</name>
  <value>2</value>
</property>
<!-- Permission checking is switched off here (dfs.permissions is the deprecated alias of dfs.permissions.enabled) -->
<property>
  <name>dfs.permissions</name>
  <value>false</value>
  <description>need not permissions</description>
</property>
<!-- Logical name of the cluster; must match the name used in core-site.xml -->
<property>
  <name>dfs.nameservices</name>
  <value>mycluster</value>
</property>
<!-- NameNodes that belong to the cluster -->
<property>
  <name>dfs.ha.namenodes.mycluster</name>
  <value>nn1,nn2</value>
</property>
<!-- RPC address of nn1 -->
<property>
  <name>dfs.namenode.rpc-address.mycluster.nn1</name>
  <value>node180:9000</value>
</property>
<!-- RPC address of nn2 -->
<property>
  <name>dfs.namenode.rpc-address.mycluster.nn2</name>
  <value>node181:9000</value>
</property>
<!-- HTTP address of nn1 -->
<property>
  <name>dfs.namenode.http-address.mycluster.nn1</name>
  <value>node180:50070</value>
</property>
<!-- HTTP address of nn2 -->
<property>
  <name>dfs.namenode.http-address.mycluster.nn2</name>
  <value>node181:50070</value>
</property>
<!-- Enable automatic failover -->
<property>
  <name>dfs.ha.automatic-failover.enabled</name>
  <value>true</value>
</property>
<!-- Where the NameNode edit log is stored on the JournalNodes -->
<property>
  <name>dfs.namenode.shared.edits.dir</name>
  <value>qjournal://node180:8485;node181:8485;node182:8485/mycluster</value>
</property>
<!-- Proxy provider that clients use to find the active NameNode of mycluster and to fail over automatically -->
<property>
  <name>dfs.client.failover.proxy.provider.mycluster</name>
  <value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>
</property>
<!-- Local storage directory of the JournalNode -->
<property>
  <name>dfs.journalnode.edits.dir</name>
  <value>/root/hadoop/hdpdata/jn</value>
</property>
<!-- Fencing methods, one per line; fencing ensures that only one NameNode serves clients at any time -->
<property>
  <name>dfs.ha.fencing.methods</name>
  <value>
sshfence
shell(/bin/true)
  </value>
</property>
<!-- sshfence requires passwordless SSH login -->
<property>
  <name>dfs.ha.fencing.ssh.private-key-files</name>
  <value>/root/.ssh/id_rsa</value>
</property>
<property>
  <name>dfs.ha.fencing.ssh.connect-timeout</name>
  <value>10000</value>
</property>
<property>
  <name>dfs.namenode.handler.count</name>
  <value>100</value>
</property>

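With dfs.permissions set to false, files can be created on HDFS without any permission check. That is convenient, but it also makes accidental deletion easier. To guard against that, set it to true or remove the property altogether, since true is the default.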
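Because the logical cluster name must be identical in core-site.xml and hdfs-site.xml, a quick comparison of the two effective values can catch a typo early. The sketch below relies on the hdfs getconf -confKey command, which prints the effective value of a configuration key; check_nameservice is a hypothetical helper name, and the comparison assumes a single nameservice, as configured here.

```shell
#!/bin/sh
# check_nameservice is a hypothetical helper. It assumes a single nameservice
# and uses `hdfs getconf -confKey <key>` to read the effective configuration.
check_nameservice() {
    default_fs=$(hdfs getconf -confKey fs.defaultFS) || return 2
    nameservices=$(hdfs getconf -confKey dfs.nameservices) || return 2
    # Strip the hdfs:// scheme so only the logical cluster name remains.
    cluster=${default_fs#hdfs://}
    if [ "$cluster" = "$nameservices" ]; then
        echo "ok: fs.defaultFS and dfs.nameservices both use '$cluster'"
    else
        echo "mismatch: fs.defaultFS=$default_fs dfs.nameservices=$nameservices"
        return 1
    fi
}
```

With the configuration above, check_nameservice should report that both keys use mycluster.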

(3) Edit mapred-site.xml

Add the following inside the <configuration> element:

<!-- Run MapReduce on YARN (the default is local execution) -->
<property>
  <name>mapreduce.framework.name</name>
  <value>yarn</value>
</property>
<!--
<property>
  <name>mapreduce.jobhistory.address</name>
  <value>node180:10020</value>
</property>
<property>
  <name>mapreduce.jobhistory.webapp.address</name>
  <value>node180:19888</value>
</property>
-->
<property>
  <name>yarn.app.mapreduce.am.env</name>
  <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>
<property>
  <name>mapreduce.map.env</name>
  <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>
<property>
  <name>mapreduce.reduce.env</name>
  <value>HADOOP_MAPRED_HOME=${HADOOP_HOME}</value>
</property>

(4) Edit yarn-site.xml

Add the following inside the <configuration> element:

<!-- Auxiliary service run by the NodeManager; it must be mapreduce_shuffle for MapReduce jobs to run -->
<property>
  <name>yarn.nodemanager.aux-services</name>
  <value>mapreduce_shuffle</value>
</property>
<!-- Log aggregation -->
<property>
  <name>yarn.log-aggregation-enable</name>
  <value>true</value>
</property>
<!-- Job history server -->
<property>
  <name>yarn.log.server.url</name>
  <value>http://node180:19888/jobhistory/logs/</value>
</property>
<property>
  <name>yarn.log-aggregation.retain-seconds</name>
  <value>86400</value>
</property>
<!-- Enable ResourceManager high availability -->
<property>
  <name>yarn.resourcemanager.ha.enabled</name>
  <value>true</value>
</property>
<!-- Name of the ResourceManager cluster -->
<property>
  <name>yarn.resourcemanager.cluster-id</name>
  <value>cluster-yarn1</value>
</property>
<!-- The two ResourceManagers and their addresses -->
<property>
  <name>yarn.resourcemanager.ha.rm-ids</name>
  <value>rm1,rm2</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm1</name>
  <value>node180</value>
</property>
<property>
  <name>yarn.resourcemanager.hostname.rm2</name>
  <value>node181</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address.rm1</name>
  <value>node180:8088</value>
</property>
<property>
  <name>yarn.resourcemanager.webapp.address.rm2</name>
  <value>node181:8088</value>
</property>
<!-- Address of the ZooKeeper cluster -->
<property>
  <name>yarn.resourcemanager.zk-address</name>
  <value>node180:2181,node181:2181,node182:2181</value>
</property>
<!-- Enable automatic recovery -->
<property>
  <name>yarn.resourcemanager.recovery.enabled</name>
  <value>true</value>
</property>
<!-- Store the ResourceManager state in the ZooKeeper cluster -->
<property>
  <name>yarn.resourcemanager.store.class</name>
  <value>org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore</value>
</property>
<!--
<property>
  <description>Address of the ResourceManager (non-HA setup)</description>
  <name>yarn.resourcemanager.hostname</name>
  <value>node180</value>
</property>
<property>
  <description>Maximum memory for a single container request, in MB; the default is 8192 MB</description>
  <name>yarn.scheduler.maximum-allocation-mb</name>
  <value>1024</value>
</property>
-->
<property>
  <name>yarn.nodemanager.vmem-check-enabled</name>
  <value>false</value>
</property>

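Note: setting yarn.nodemanager.vmem-check-enabled to false disables the virtual memory check. This is very useful when Hadoop is installed on virtual machines, where it avoids problems in later steps. On physical machines with plenty of memory the setting can be removed.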

(5) Edit the workers file

Change its content to one host name per line:

node180
node181
node182
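The workers file is read line by line, so it is worth generating it rather than typing it by hand on a larger cluster. A minimal sketch follows; write_workers is a hypothetical helper name, and it overwrites the target file.

```shell
#!/bin/sh
# write_workers is a hypothetical helper: the first argument is the target
# file, the remaining arguments are the worker host names.
write_workers() {
    target=$1
    shift
    # printf repeats the format for every argument: one host per line.
    printf '%s\n' "$@" > "$target"
}
```

For this cluster the call would be write_workers "$HADOOP_CONF_DIR/workers" node180 node181 node182.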

(6) Edit hadoop-env.sh, mapred-env.sh, and yarn-env.sh

Add the JDK path to each file:

# jdk
export JAVA_HOME=/opt/module/jdk1.8.0_161

In hadoop-env.sh, also add:

#export HADOOP_PREFIX=/opt/module/hadoop-3.2.1
export HDFS_DATANODE_SECURE_USER=root
#export HDFS_NAMENODE_USER=root
#export HDFS_DATANODE_USER=root
export HDFS_SECONDARYNAMENODE_USER=root
#export YARN_RESOURCEMANAGER_USER=root
#export YARN_NODEMANAGER_USER=root

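(Note: with the settings above the DataNode did not start. According to the logs the cause was that JSVC_HOME was not configured: when HDFS_DATANODE_SECURE_USER is set, the DataNode is launched as a secure daemon through jsvc, which needs JSVC_HOME. The fix was to comment out every line in hadoop-env.sh:

#export HADOOP_PREFIX=/opt/module/hadoop-3.2.1
#export HDFS_DATANODE_SECURE_USER=root
#export HDFS_NAMENODE_USER=root
#export HDFS_DATANODE_USER=root
#export HDFS_SECONDARYNAMENODE_USER=root
#export YARN_RESOURCEMANAGER_USER=root
#export YARN_NODEMANAGER_USER=root

)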

5. Edit the scripts in sbin

(1) Edit start-dfs.sh and stop-dfs.sh

Add the following at the top of each file, right after the shebang line:

#!/usr/bin/env bash
HDFS_DATANODE_USER=root
#HADOOP_SECURE_DN_USER=hdfs
HDFS_DATANODE_SECURE_USER=hdfs
HDFS_NAMENODE_USER=root
HDFS_SECONDARYNAMENODE_USER=root
HDFS_ZKFC_USER=root
HDFS_JOURNALNODE_USER=root

(2) Edit start-yarn.sh and stop-yarn.sh

Add the following at the top of each file, right after the shebang line:

#!/usr/bin/env bash
YARN_RESOURCEMANAGER_USER=root
HADOOP_SECURE_DN_USER=yarn
YARN_NODEMANAGER_USER=root

6. Sync the files to every node

(1) Sync the Hadoop directory (run from /opt/module)

scp -r hadoop-3.2.1/ root@192.168.0.181:/opt/module
scp -r hadoop-3.2.1/ root@192.168.0.182:/opt/module

(2) Sync the data directory

scp -r /root/hadoop/ root@192.168.0.181:/root
scp -r /root/hadoop/ root@192.168.0.182:/root
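The copy commands follow one pattern per host, so they can be wrapped in a loop. This is only a sketch under the assumptions of this guide (root login over SSH, the same target path on every node); sync_to_nodes is a hypothetical helper name.

```shell
#!/bin/sh
# sync_to_nodes is a hypothetical helper: copy SRC into DEST_DIR on each host
# given after the first two arguments, logging in as root as in this guide.
sync_to_nodes() {
    src=$1
    dest_dir=$2
    shift 2
    for host in "$@"; do
        echo "copying $src to $host:$dest_dir"
        # Stop at the first failure so a half-synced cluster is noticed.
        scp -r "$src" "root@$host:$dest_dir" || return 1
    done
}
```

The two steps above would then be sync_to_nodes /opt/module/hadoop-3.2.1 /opt/module 192.168.0.181 192.168.0.182 followed by sync_to_nodes /root/hadoop /root 192.168.0.181 192.168.0.182.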

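6. Start Hadoop

(1) Start the JournalNode on every node

hdfs --daemon start journalnode

(The older form, hadoop-daemon.sh start journalnode, still works in Hadoop 3.x but is deprecated.)

Verify with jps: node180, node181, and node182 should each show a new JournalNode process.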
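The jps check can be scripted so it is easy to repeat on each node. A minimal sketch: daemon_running is a hypothetical helper name, and it relies on jps printing one line per JVM with the process ID followed by the main class name.

```shell
#!/bin/sh
# daemon_running is a hypothetical helper. jps (shipped with the JDK) prints
# one "<pid> <main class>" line per running JVM.
daemon_running() {
    # Exit status 0 only if some line has exactly the given class name.
    jps | awk -v name="$1" '$2 == name { found = 1 } END { exit !found }'
}
```

For example, daemon_running JournalNode && echo "JournalNode is up" can be run on each of the three nodes.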

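(2) Format the NameNode

hdfs namenode -format

(Check the output for errors.)

(2.1) Sync the other NameNode

(2.1.1) For an HA setup like this one, run the following on the NameNode that still needs to be synced. The NameNode that was just formatted must already be running (hdfs --daemon start namenode), because the standby copies its metadata from it.

hdfs namenode -bootstrapStandby

(2.1.2) If an existing non-HA NameNode is being converted to HA, initialize the shared edits on the JournalNodes instead:

hdfs namenode -initializeSharedEdits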

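(3) Format the ZKFC state in ZooKeeper

Run on the master node (node180 in this setup); ZooKeeper must already be running:

hdfs zkfc -formatZK

(4) Start HDFS

start-dfs.sh

(6) Start YARN

start-yarn.sh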
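Once HDFS and YARN are up, the HA state of both NameNodes and both ResourceManagers can be queried from the command line. The sketch below uses the hdfs haadmin -getServiceState and yarn rmadmin -getServiceState admin commands with the IDs configured earlier (nn1, nn2, rm1, rm2); show_ha_state is a hypothetical helper name.

```shell
#!/bin/sh
# show_ha_state is a hypothetical helper built on two admin commands:
#   hdfs haadmin -getServiceState <namenode id>
#   yarn rmadmin -getServiceState <resourcemanager id>
# The IDs are the ones configured in hdfs-site.xml and yarn-site.xml.
show_ha_state() {
    for nn_id in nn1 nn2; do
        echo "NameNode $nn_id: $(hdfs haadmin -getServiceState "$nn_id")"
    done
    for rm_id in rm1 rm2; do
        echo "ResourceManager $rm_id: $(yarn rmadmin -getServiceState "$rm_id")"
    done
}
```

In a healthy cluster one NameNode and one ResourceManager should report active and the other standby.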

7. Test Hadoop

https://blog.csdn.net/weixin_38763887/article/details/79157652

https://blog.csdn.net/s1078229131/article/details/93846369

https://www.pianshen.com/article/611428756/;jsessionid=295150BF82C9DD503455970EF76AB2E9

http://www.voidcn.com/article/p-ycxptkwf-brt.html

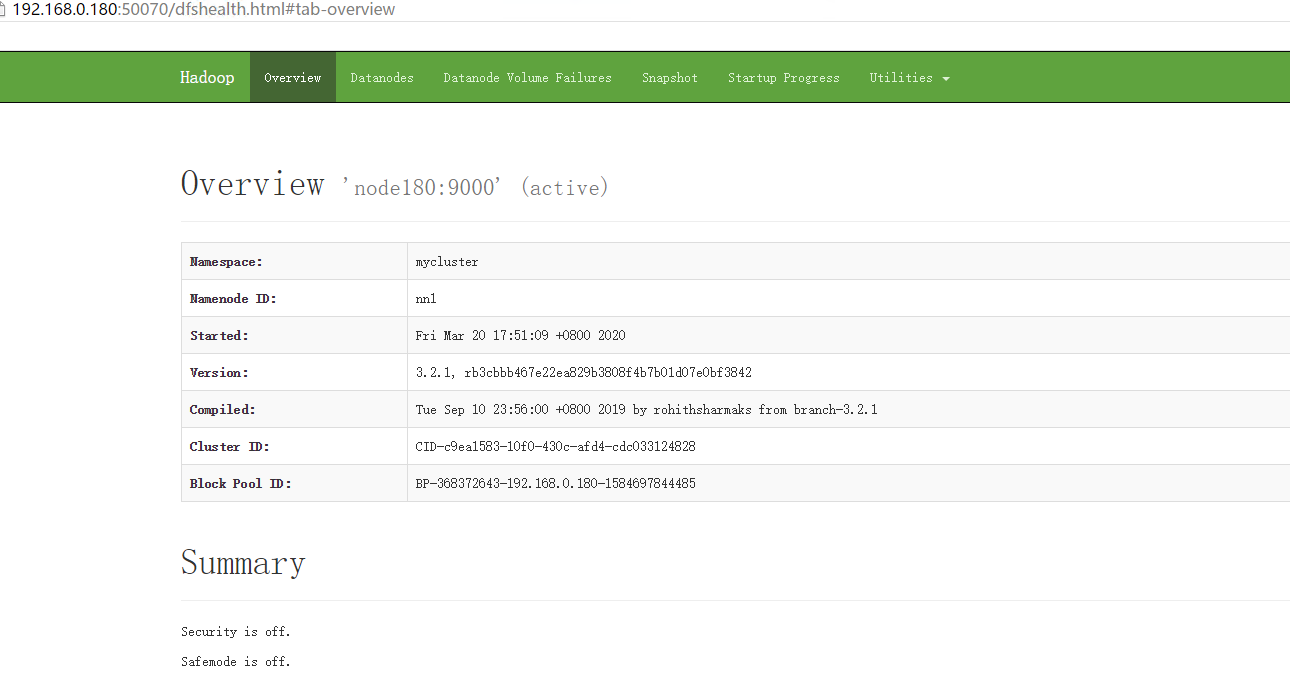

Open the NameNode web UI: http://192.168.0.180:50070/

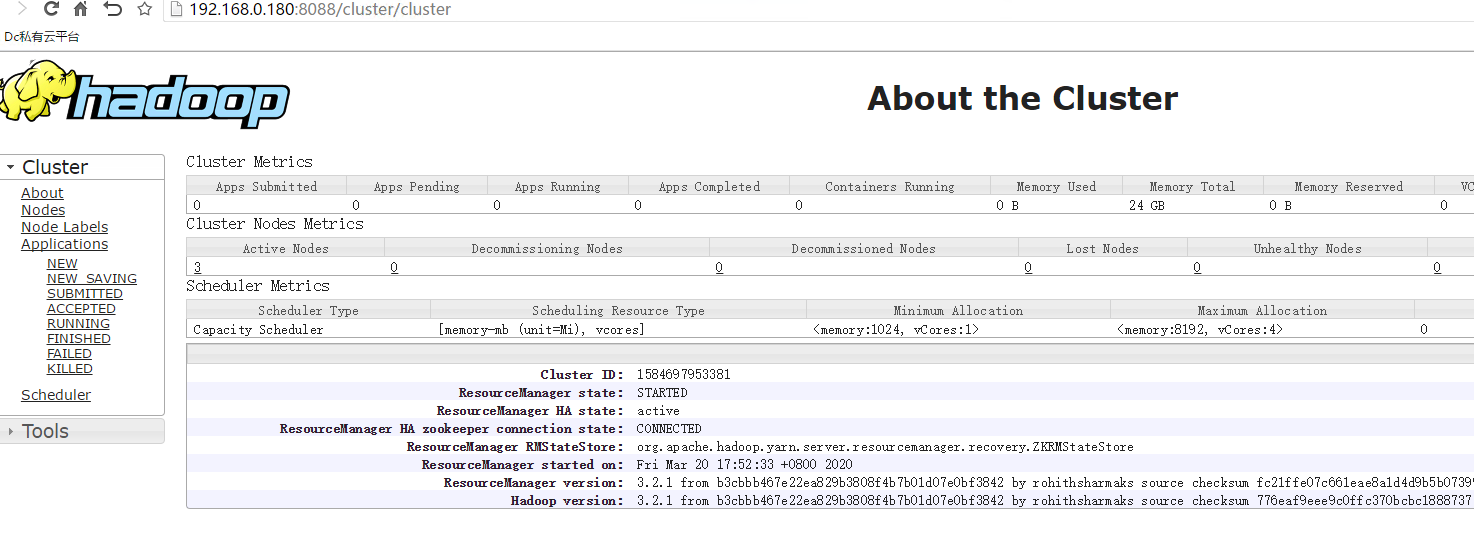

Open the ResourceManager web UI: http://192.168.0.180:8088/

8. Run a test job

Create the directory:

hdfs dfs -mkdir -p /user/root

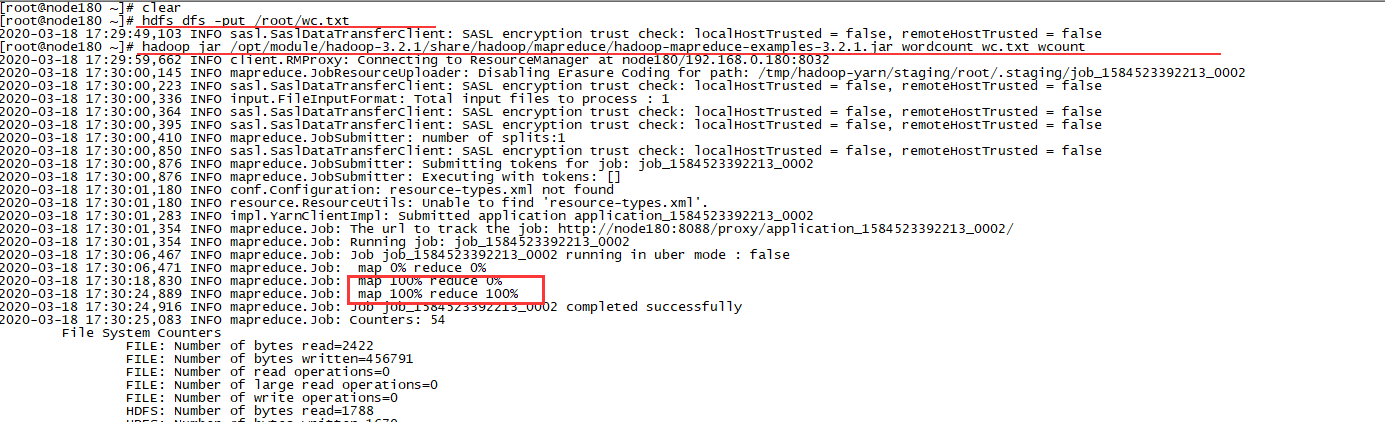

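Upload the word-count input file wc.txt to the Hadoop server.

Then copy it into HDFS (with no destination given, the file goes to the current user's HDFS home directory, /user/root):

hdfs dfs -put /root/wc.txt

Run the word-count job: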

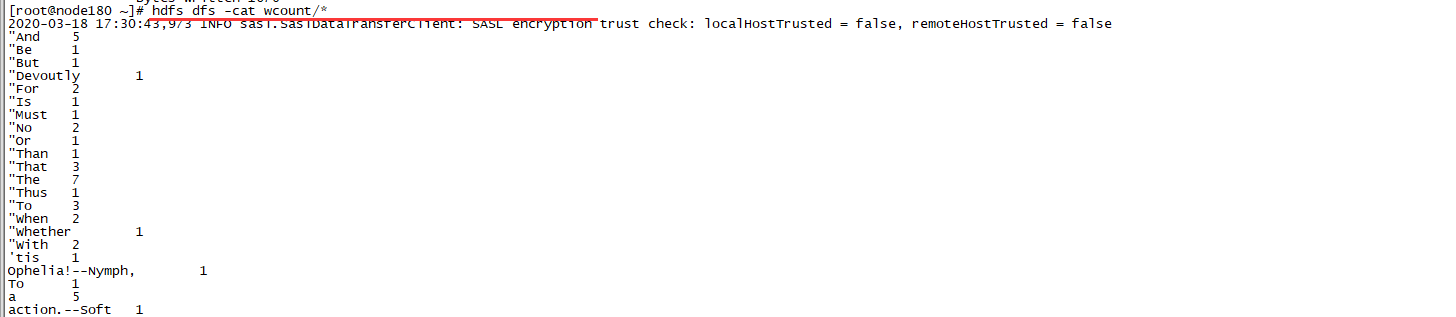

hadoop jar /opt/module/hadoop-3.2.1/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount wc.txt wcount

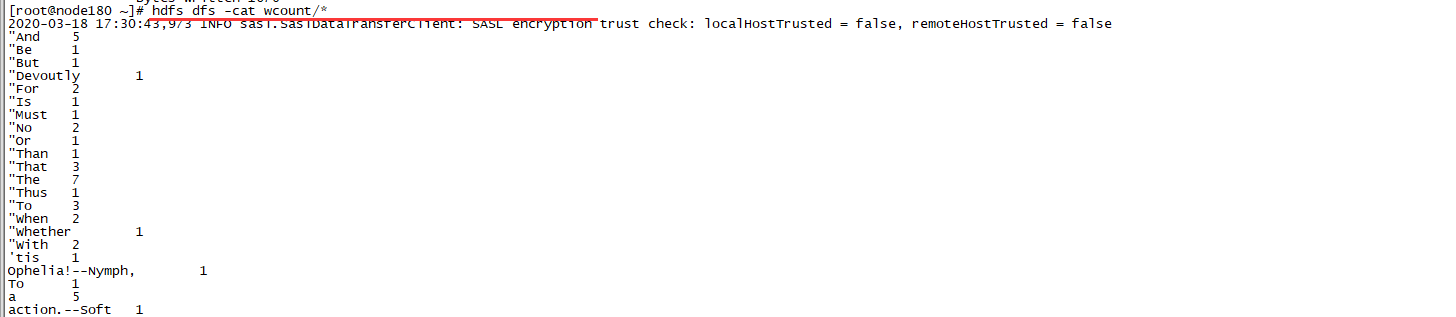

View the result:

hdfs dfs -cat wcount/*
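To sanity-check the job output, the same count can be computed locally on the input file. This is a rough sketch, not part of the original walkthrough: local_wordcount is a hypothetical helper name, and it assumes the example job splits on whitespace and writes one word, a tab, and the count per line, sorted by word in byte order.

```shell
#!/bin/sh
# local_wordcount is a hypothetical helper that mimics the example job locally:
# split on whitespace, count each token, print "word<TAB>count" in byte order.
local_wordcount() {
    tr -s '[:space:]' '\n' < "$1" |
        grep -v '^$' |
        LC_ALL=C sort |
        uniq -c |
        awk '{ print $2 "\t" $1 }'
}
```

For plain ASCII input and a single reducer, the output of local_wordcount /root/wc.txt should match what hdfs dfs -cat wcount/* prints.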

The result is as shown in the figure:

Troubleshooting:

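1.

org.apache.hadoop.util.ExitUtil: Exiting with status 1: org.apache.hadoop.yarn.exceptions.YarnRuntimeException: java.lang.NullPointerException

Hadoop refuses the connection to the history server on port 19888, so the logs of completed jobs cannot be viewed.

Cause: start-all.sh starts the Hadoop daemons but not the historyserver, which has to be started manually. The start and stop commands are:

Start: mr-jobhistory-daemon.sh start historyserver
Stop: mr-jobhistory-daemon.sh stop historyserver

(In Hadoop 3.x this script is deprecated; the equivalent commands are mapred --daemon start historyserver and mapred --daemon stop historyserver.)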

(References:

https://blog.csdn.net/u014326004/article/details/80332126

https://blog.csdn.net/qq_38337823/article/details/80580422

)