Part 5: Setting up a distributed Flink cluster

1. Prepare three servers

Set the hostname of each server:

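```shell
# on the first server (192.168.0.180)
hostnamectl set-hostname node180
# on the second server (192.168.0.181)
hostnamectl set-hostname node181
# on the third server (192.168.0.182)
hostnamectl set-hostname node182
```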

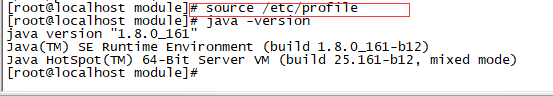

2. Install JDK 1.8.x

Extract the archive to /opt/module:

```shell
tar zxvf jdk-8u161-linux-x64.gz -C /opt/module
```

Configure the environment variables:

```shell
vi /etc/profile

# append the following lines
JAVA_HOME=/opt/module/jdk1.8.0_161
JRE_HOME=$JAVA_HOME/jre
PATH=$PATH:$JAVA_HOME/bin:$JRE_HOME/bin
CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar
export JAVA_HOME JRE_HOME PATH CLASSPATH
```
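To confirm that the variables point at a real JDK after running source /etc/profile, it is enough to check that $JAVA_HOME/bin contains executable java and javac files. Below is a minimal sketch; check_java_home is a hypothetical helper, not a JDK tool, and it only inspects the directory layout rather than running Java.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the given directory (default: $JAVA_HOME)
# looks like a JDK, i.e. it contains executable bin/java and bin/javac files.
# The function body runs in a subshell, so its variables stay local.
check_java_home() (
    dir=${1:-$JAVA_HOME}
    if [ -z "$dir" ]; then
        echo "JAVA_HOME is not set" >&2
        exit 1
    fi
    for tool in java javac; do
        if [ ! -x "$dir/bin/$tool" ]; then
            echo "missing $dir/bin/$tool" >&2
            exit 1
        fi
    done
    echo "JDK found at $dir"
)
```

Example: after reloading the profile, run check_java_home with no argument to check $JAVA_HOME, then java -version to confirm that the version is 1.8.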

3. Let the Linux hosts on the LAN reach each other by hostname

```shell
vi /etc/hosts

# add one line per node
192.168.0.180 node180
192.168.0.181 node181
192.168.0.182 node182
```
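Editing /etc/hosts by hand on three machines makes it easy to add an entry twice. The sketch below uses a hypothetical helper, add_hosts_entries, that appends each IP/hostname pair only if the hostname is not already mapped, so it can be rerun safely.

```shell
#!/bin/sh
# Hypothetical helper: append "IP HOSTNAME" pairs to a hosts file, skipping
# any hostname that is already present, so repeated runs add no duplicates.
# Usage: add_hosts_entries FILE IP1 NAME1 [IP2 NAME2 ...]
add_hosts_entries() (
    file=$1
    shift
    while [ "$#" -ge 2 ]; do
        ip=$1
        name=$2
        shift 2
        # Look for the hostname as a whole field, ignoring comment lines.
        if awk -v n="$name" '
            /^[ \t]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit (found ? 0 : 1) }' "$file" 2>/dev/null
        then
            echo "skip: $name already in $file"
        else
            printf '%s %s\n' "$ip" "$name" >> "$file"
            echo "added: $ip $name"
        fi
    done
)
```

Run it as root on every node: add_hosts_entries /etc/hosts 192.168.0.180 node180 192.168.0.181 node181 192.168.0.182 node182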

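Configure each host's IP address with ifconfig and iwconfig, then verify from a terminal that the hosts can reach each other by pinging them by hostname (for example, ping node181).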

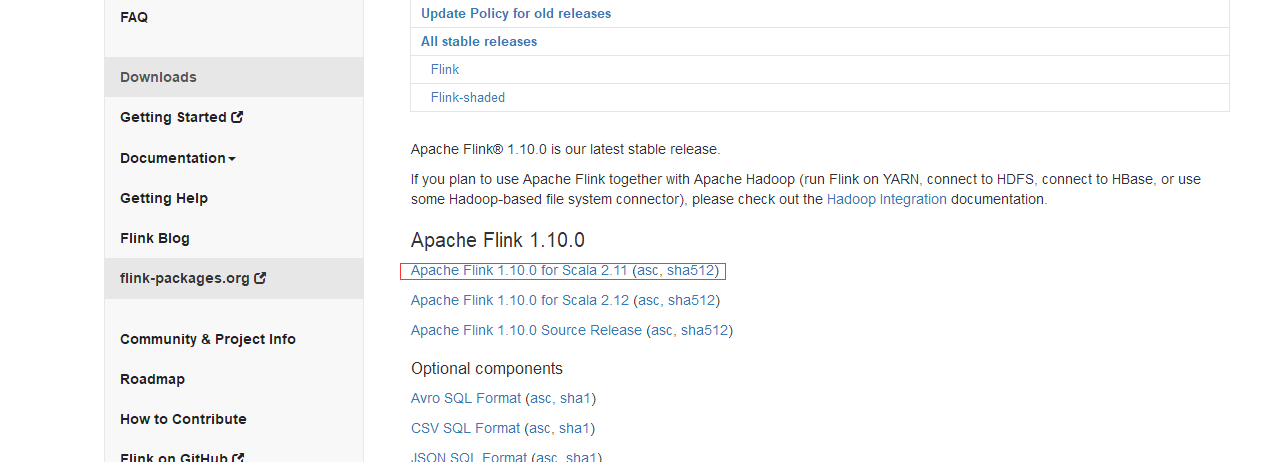

5. Flink

Download Flink: http://flink.apache.org/downloads.html

The downloaded archive is kept in /usr/software.

Extract it to /opt/module:

```shell
cd /usr/software
tar zxvf flink-1.10.0-bin-scala_2.12.tgz -C /opt/module
```

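Configure the master and worker nodes in the flink-1.10.0/conf directory. The masters file lists the JobManager host with its web UI port; the slaves file lists one TaskManager host per line:

```shell
vi masters

node180:8081
```

```shell
vi slaves

node181
node182
```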
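In Flink 1.10, bin/start-cluster.sh reads conf/masters (one host:webui-port per line) and conf/slaves (one TaskManager host per line); later Flink releases renamed the slaves file to workers. The hypothetical helper below writes both files in one step, which is convenient when the same layout has to be reproduced on several machines.

```shell
#!/bin/sh
# Hypothetical helper: write the masters and slaves files that
# bin/start-cluster.sh reads into a Flink conf directory.
# Usage: write_cluster_files CONF_DIR MASTER_HOST:WEBUI_PORT WORKER...
write_cluster_files() (
    conf=$1
    master=$2
    shift 2
    printf '%s\n' "$master" > "$conf/masters"
    # printf repeats the format for each argument: one worker per line.
    printf '%s\n' "$@" > "$conf/slaves"
)
```

Example: write_cluster_files /opt/module/flink-1.10.0/conf node180:8081 node181 node182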

Edit conf/flink-conf.yaml to configure ZooKeeper-based high availability and the HDFS-backed state backend:

```yaml
#jobmanager.rpc.address: node180
high-availability: zookeeper
high-availability.zookeeper.quorum: node180:2181,node181:2181,node182:2181
high-availability.storageDir: hdfs:///flink/ha/
#high-availability.zookeeper.path.root: /flink
#high-availability.cluster-id: /flinkCluster
state.backend: filesystem
state.checkpoints.dir: hdfs:///flink/checkpoints
# set each key only once
state.savepoints.dir: hdfs:///flink/checkpoints
```
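flink-conf.yaml is read as flat key: value lines, so a key that appears twice is easy to miss, and only one of the values can take effect. A small check like the one below (a hypothetical helper built on awk) lists keys that are set more than once, ignoring commented-out and blank lines.

```shell
#!/bin/sh
# Hypothetical helper: print every key that is defined more than once in a
# flink-conf.yaml style file. Comment and blank lines are ignored.
find_duplicate_keys() {
    awk '
        /^[ \t]*#/ || /^[ \t]*$/ { next }    # skip comments and blank lines
        {
            key = $0
            sub(/:.*/, "", key)               # keep the text before the first colon
            gsub(/^[ \t]+|[ \t]+$/, "", key)  # trim surrounding whitespace
            if (++seen[key] == 2) print key   # report each duplicate once
        }
    ' "$1"
}
```

Example: find_duplicate_keys /opt/module/flink-1.10.0/conf/flink-conf.yaml prints nothing when every key is unique.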

Copy the Flink installation to the other two nodes:

```shell
cd /opt/module
scp -r flink-1.10.0/ root@192.168.0.181:/opt/module
scp -r flink-1.10.0/ root@192.168.0.182:/opt/module
```
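With more workers, the per-host scp commands can be generated by a loop. A minimal sketch, assuming root SSH access as in the commands above; distribute and the DRY_RUN switch are hypothetical names, and DRY_RUN=1 only prints the commands so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical helper: copy a directory to the same parent path on several
# hosts with scp. Set DRY_RUN=1 to print the commands instead of running them.
# Usage: distribute SRC_DIR DEST_PARENT HOST...
distribute() (
    src=$1
    dest=$2
    shift 2
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $src root@$host:$dest"
        else
            # Stop at the first host that fails.
            scp -r "$src" "root@$host:$dest" || exit 1
        fi
    done
)
```

Example: cd /opt/module && distribute flink-1.10.0/ /opt/module 192.168.0.181 192.168.0.182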

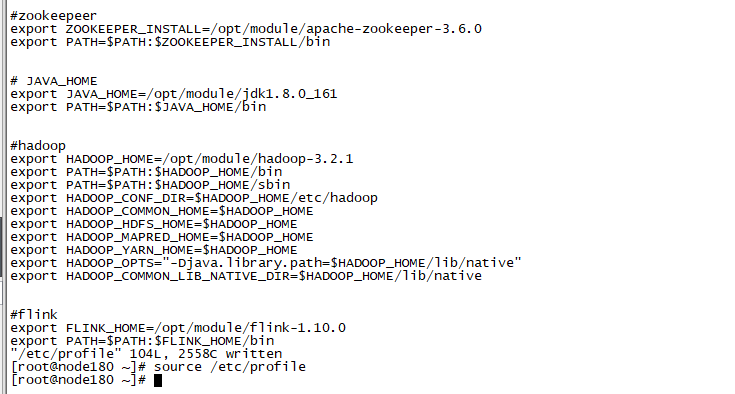

Configure the environment variables by appending the following to /etc/profile:

```shell
# flink
export FLINK_HOME=/opt/module/flink-1.10.0
export PATH=$PATH:$FLINK_HOME/bin
```

Reload the profile:

```shell
source /etc/profile
```

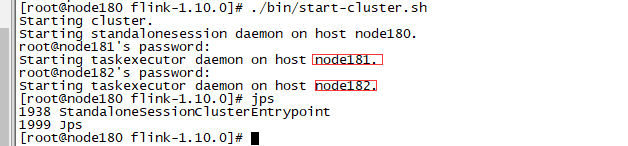

Start Flink from the installation directory on the master node (node180):

```shell
./bin/start-cluster.sh
```

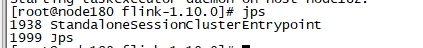

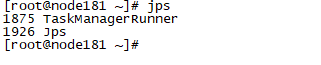

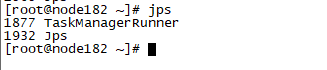

Check the running processes with jps:

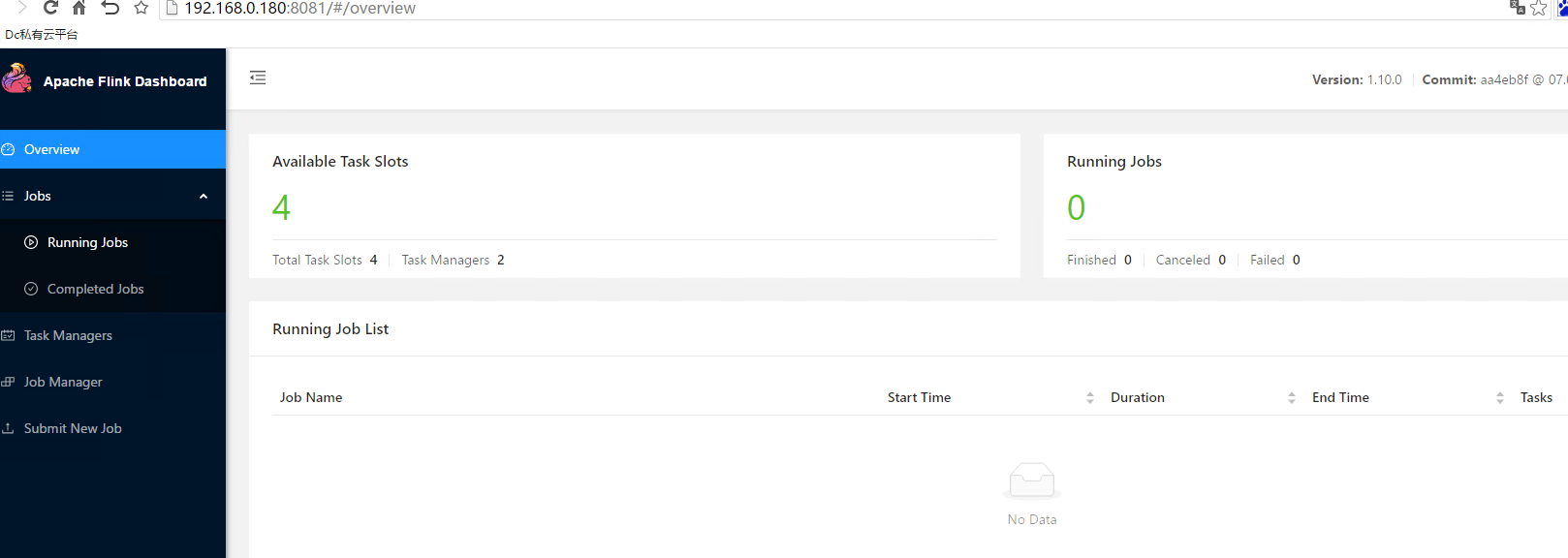
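In a Flink 1.10 standalone session cluster, the JobManager shows up in jps as StandaloneSessionClusterEntrypoint and each TaskManager as TaskManagerRunner. The hypothetical helper below reads jps output on stdin and reports any expected process that is missing, which is a sketch for scripted checks rather than a Flink tool.

```shell
#!/bin/sh
# Hypothetical helper: read `jps` output on stdin and fail if any of the
# expected main-class names is absent. Usage: jps | expect_processes NAME...
expect_processes() (
    out=$(cat)
    missing=0
    for name in "$@"; do
        # jps prints "PID MainClass"; compare the second field exactly.
        if printf '%s\n' "$out" | awk -v n="$name" '$2 == n { found = 1 } END { exit (found ? 0 : 1) }'; then
            echo "ok: $name"
        else
            echo "missing: $name"
            missing=1
        fi
    done
    exit "$missing"
)
```

Example: on node180 run jps | expect_processes StandaloneSessionClusterEntrypoint, and on node181 and node182 run jps | expect_processes TaskManagerRunner.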

Check the web UI at http://node180:8081.
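The web UI port (8081 here) also serves Flink's REST API, so the number of registered TaskManagers can be checked from a terminal. This sketch assumes the /overview endpoint returns JSON containing a "taskmanagers" field; taskmanager_count is a hypothetical helper that extracts that number with sed.

```shell
#!/bin/sh
# Hypothetical helper: extract the "taskmanagers" count from the JSON
# returned by Flink's REST endpoint /overview (read from stdin).
taskmanager_count() {
    sed -n 's/.*"taskmanagers"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p'
}
```

Example: curl -s http://node180:8081/overview | taskmanager_count is expected to print 2 once the TaskManagers on node181 and node182 have registered.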

Sync the Flink configuration files to the other nodes:

```shell
cd /opt/module
scp -r flink-1.10.0/conf/. root@192.168.0.181:/opt/module/flink-1.10.0/conf
scp -r flink-1.10.0/conf/. root@192.168.0.182:/opt/module/flink-1.10.0/conf
```
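Since conf/slaves already lists the workers, the sync step can read its host list from that file instead of hard-coding IP addresses. A sketch with a hypothetical sync_conf helper, assuming root SSH access and that the hostnames in conf/slaves resolve through /etc/hosts; DRY_RUN=1 prints the commands without copying anything.

```shell
#!/bin/sh
# Hypothetical helper: push the local conf/ directory to every worker listed
# in conf/slaves. Set DRY_RUN=1 to print the scp commands instead.
# Usage: sync_conf FLINK_DIR   (e.g. /opt/module/flink-1.10.0)
sync_conf() (
    flink_dir=$1
    # Skip blank lines and comments in the slaves file.
    grep -v -e '^[[:space:]]*$' -e '^[[:space:]]*#' "$flink_dir/conf/slaves" |
    while read -r host; do
        if [ "${DRY_RUN:-0}" = 1 ]; then
            echo "scp -r $flink_dir/conf/. root@$host:$flink_dir/conf"
        else
            # </dev/null keeps scp from consuming the host list on stdin.
            scp -r "$flink_dir/conf/." "root@$host:$flink_dir/conf" </dev/null || exit 1
        fi
    done
)
```

Example: review with DRY_RUN=1 sync_conf /opt/module/flink-1.10.0, then run sync_conf /opt/module/flink-1.10.0 to copy.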

Problems

1. org.apache.flink.core.fs.UnsupportedFileSystemSchemeException: Could not find a file system implementation for scheme 'hdfs'. The scheme is not directly supported by Flink and no Hadoop file system to support this scheme could be loaded.

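Cause: the Flink 1.10 binary distribution does not bundle Hadoop, so the jar flink-shaded-hadoop-2-uber-2.6.5-10.0.jar is missing from Flink's lib directory. Download that jar, upload it to the lib folder, and restart Flink.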
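Because this error only surfaces when the cluster starts, it can help to check each node's lib directory beforehand; the TaskManagers also write checkpoints to the hdfs:// paths, so every node needs the jar. A minimal sketch with a hypothetical has_hadoop_jar helper that only looks for a file matching flink-shaded-hadoop-*.jar.

```shell
#!/bin/sh
# Hypothetical helper: report whether a Flink lib/ directory contains a
# flink-shaded-hadoop jar, which the hdfs:// paths in flink-conf.yaml need.
has_hadoop_jar() (
    lib=$1
    for jar in "$lib"/flink-shaded-hadoop-*.jar; do
        # If nothing matches, the unexpanded pattern is left in $jar.
        if [ -e "$jar" ]; then
            echo "found: ${jar##*/}"
            exit 0
        fi
    done
    echo "no flink-shaded-hadoop jar in $lib" >&2
    exit 1
)
```

Example: has_hadoop_jar /opt/module/flink-1.10.0/lib on each of node180, node181 and node182.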
