GBDT(Gradient Boosting Decision Tree)粗探

GBDT(Gradient Boosting Decision Tree)粗探

\[\begin{array}{l}{\tilde{y}_{i}=-\left[\frac{\partial L\left(y_{i}, F\left(x_{i}\right)\right)}{\partial F\left(x_{i}\right)}\right]_{F(x)=F_{t-1}(x)}, i=1,2, \ldots N} \\ {w^{*}=\underset{w}{\arg \min } \sum_{i=1}^{N}\left(\tilde{y}_{i}-h_{t}\left(x_{i} ; w\right)\right)^{2}} \\ {\rho^{*}=\underset{\rho}{\arg \min } \sum_{i=1}^{N} L\left(y_{i}, F_{t-1}\left(x_{i}\right)+\rho h_{t}\left(x_{i} ; w^{*}\right)\right)} \\{F_{t}=F_{t-1}+f_{t}}\end{array}

\]

\[\mathcal{L}(\phi)=\sum_{i} l\left(\hat{y}_{i}, y_{i}\right)+\sum_{k} \Omega\left(f_{k}\right)

\]

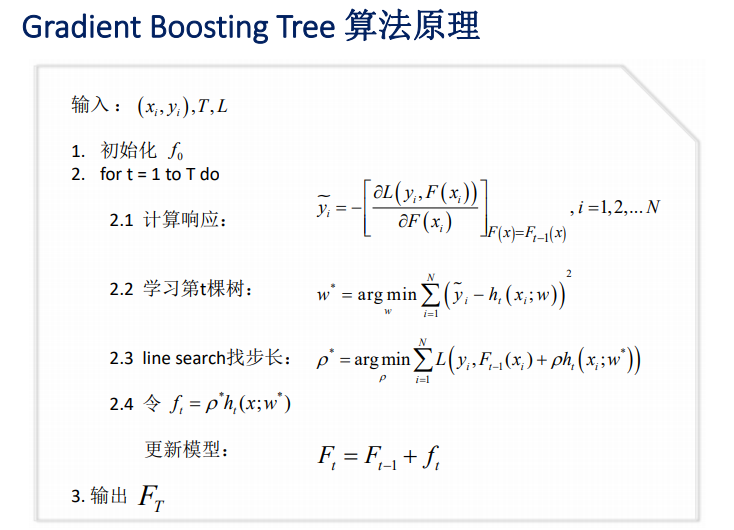

对每个样本$ i $ ,利用损失函数$ L\left(y_{i}, F\left(x_{i}\right)\right)$ 关于前一轮分类器预测$ F_{t-1}\left(x_{i}\right)$ 的梯度(梯度由此而来)算得一个残差$ \tilde{y}_{i} $ ,具体计算如下,

\[\tilde{y}_{i}=-\left[\frac{\partial L\left(y_{i}, F\left(x_{i}\right)\right)}{\partial F\left(x_{i}\right)}\right]_{F(x)=F_{t-1}(x)}, i=1,2, \ldots N

\]

将残差作为新的要回归或预测的变量,在特征和残差$ \tilde{y}{i} $ 构成的新数据集上学到一颗新树$ h\left(x ; w^{*}\right)$ (树由此而来),并以损失最小化为目标确定即将要整合进去整体模型中树的权重,

\[{w^{*}=\underset{w}{\arg \min } \sum_{i=1}^{N}\left(\tilde{y}_{i}-h_{t}\left(x_{i} ; w\right)\right)^{2}}

\]

\[{\rho^{*}=\underset{\rho}{\arg \min } \sum_{i=1}^{N} L\left(y_{i}, F_{t-1}\left(x_{i}\right)+\rho h_{t}\left(x_{i} ; w^{*}\right)\right)}

\]

令 $ f_{t}=\rho^{}h_{t}\left(x ; w^{}\right)$ ,将最新学到的树和最优系数整合进前一轮整体模型$ F_{t-1}$ 中,

\[{F_{t}=F_{t-1}+f_{t}}

\]

下面是当前一棵树的叶子个数,与上面总树的个数不相干。

\[\Omega(f)=\gamma T+\frac{1}{2} \lambda\|w\|^{2}

\]