Fixing the error when debugging a Spark program in the IDE

Debugging a Spark program in IDEA fails with the following error:

18/05/16 07:33:51 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

18/05/16 07:33:51 ERROR SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: A master URL must be set in your configuration

at org.apache.spark.SparkContext.<init>(SparkContext.scala:401)

at LineAgeDemo$.main(LineAgeDemo.scala:12)

at LineAgeDemo.main(LineAgeDemo.scala)

18/05/16 07:33:52 INFO SparkContext: Successfully stopped SparkContext

Exception in thread "main" org.apache.spark.SparkException: A master URL must be set in your configuration

at org.apache.spark.SparkContext.<init>(SparkContext.scala:401)

at LineAgeDemo$.main(LineAgeDemo.scala:12)

at LineAgeDemo.main(LineAgeDemo.scala)

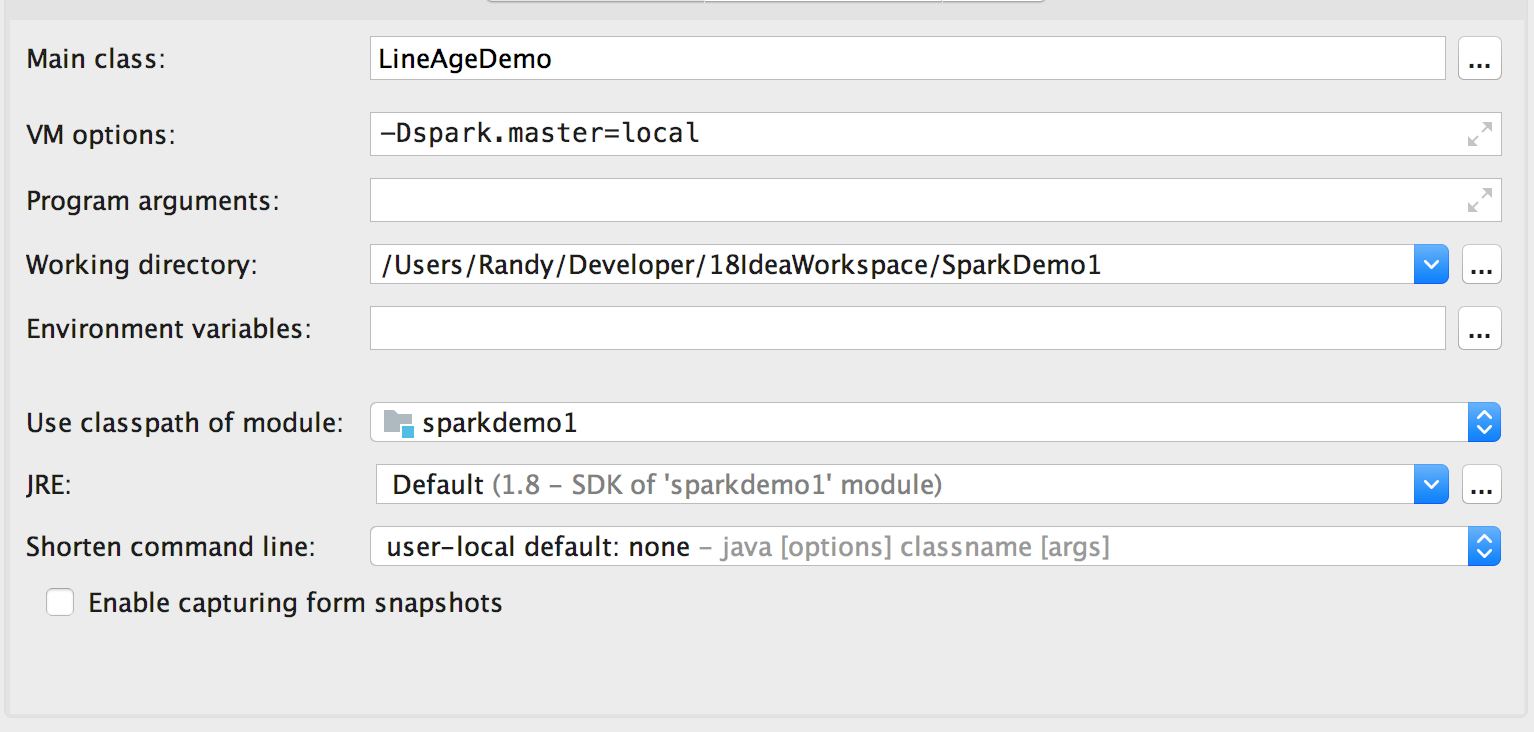

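The log shows that no master URL was set for the SparkContext. The fix is to add -Dspark.master=local to the VM options of the run configuration in IDEA.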
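The VM option works because a default `SparkConf` reads JVM system properties whose names start with `spark.`. As an alternative, the fallback can be placed in the code itself. The following is a minimal sketch, not the original `LineAgeDemo` source (which is not shown in this post): the `buildConf` helper, the `local[*]` fallback, and the placeholder job are assumptions made for illustration.

```scala
import org.apache.spark.{SparkConf, SparkContext}

object LineAgeDemo {
  // Hypothetical helper: builds the configuration used by main.
  def buildConf(): SparkConf =
    // new SparkConf() loads JVM system properties starting with "spark.",
    // so a -Dspark.master=... VM option is picked up here.
    new SparkConf()
      .setAppName("LineAgeDemo")
      // Assumed fallback: use local mode only when no master was supplied.
      .setIfMissing("spark.master", "local[*]")

  def main(args: Array[String]): Unit = {
    val sc = new SparkContext(buildConf())
    try {
      // Placeholder job standing in for the real program logic.
      val n = sc.parallelize(1 to 10).count()
      println(s"element count = $n")
    } finally {
      sc.stop()
    }
  }
}
```

Using `setIfMissing` rather than `setMaster` means a master passed on the command line or through the VM options still takes precedence, so the same code can run in the IDE and on a cluster.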

WeChat official account:

Randy的技术笔记

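The copyright of this article is shared by the author and 博客园 (cnblogs). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be placed prominently on the page.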