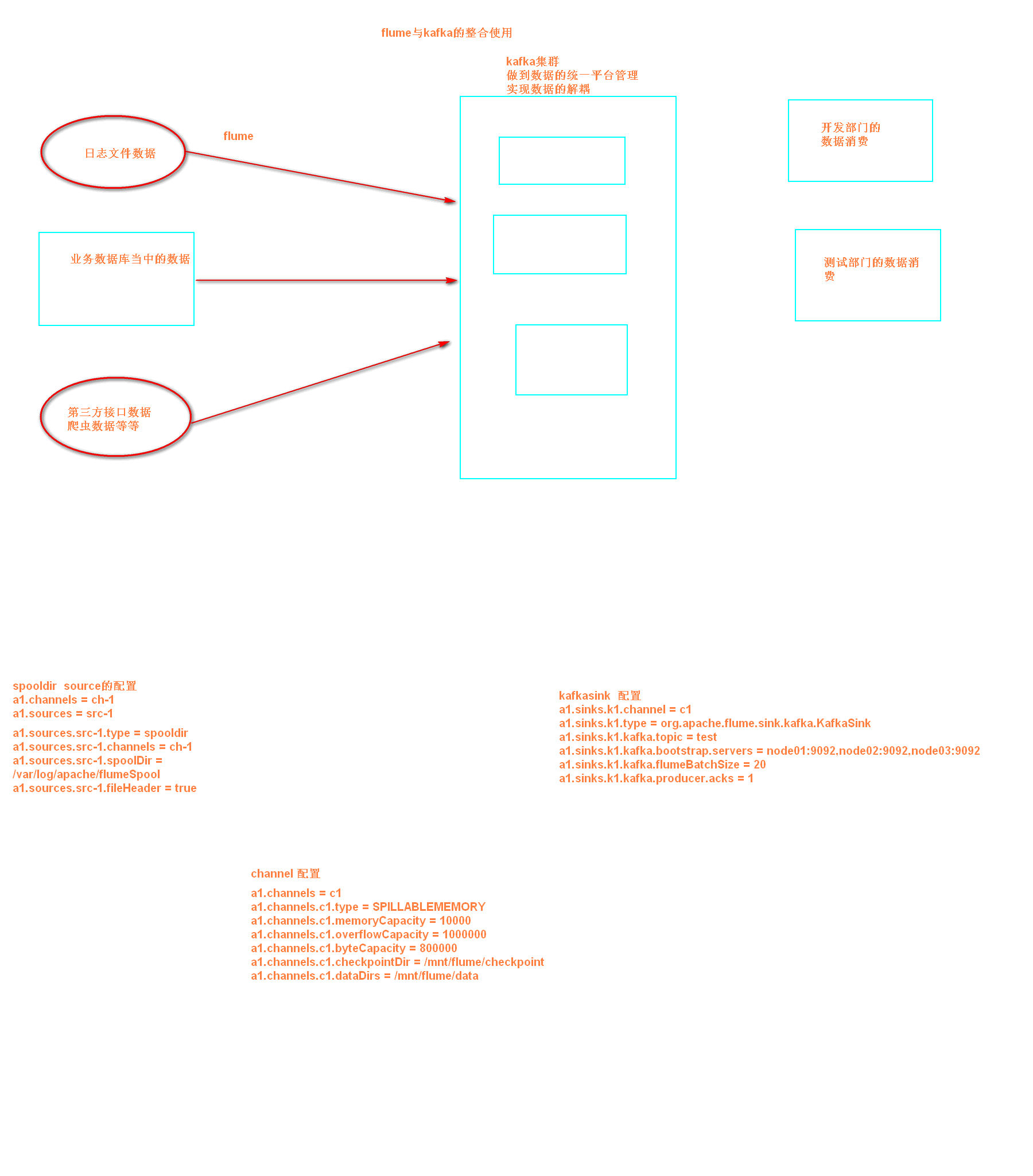

Writing the conf file for log collection with Kafka and Flume integrated

Configure flume.conf

Name the source, channel, and sink

a1.sources = r1

a1.channels = c1

a1.sinks = k1

Specify which channel the source sends its collected data to

a1.sources.r1.channels = c1

Specify the source's data collection strategy

a1.sources.r1.type = spooldir

a1.sources.r1.spoolDir = /export/servers/flumedata

a1.sources.r1.deletePolicy = never

a1.sources.r1.fileSuffix = .COMPLETED

a1.sources.r1.ignorePattern = ^(.)*\.tmp$

a1.sources.r1.inputCharset = GBK
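
The spooldir source expects each file to be complete and unchanged once it appears in the directory, and the `ignorePattern` above makes Flume skip anything ending in `.tmp`. One way to take advantage of that is to copy a file in under a `.tmp` name and rename it when the copy finishes. The following is a minimal sketch; `spool_put` is a hypothetical helper name, not part of Flume.

```shell
# Hypothetical helper: stage a log file into a Flume spooling directory.
# Usage: spool_put <source-file> <spool-dir>
spool_put() {
  src="$1"
  spool_dir="$2"
  name="$(basename "$src")"
  # Copy under a .tmp name so the ignorePattern hides the partial file from Flume.
  cp "$src" "$spool_dir/$name.tmp" || return 1
  # A rename within one filesystem is atomic, so Flume only sees the complete file.
  mv "$spool_dir/$name.tmp" "$spool_dir/$name"
}
```

With the configuration in this section it could be called as `spool_put /path/to/app.log /export/servers/flumedata`, where the source path is a placeholder. File names should not be reused, since the spooldir source treats a repeated name as an error.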

Set the channel type to memory, meaning all events are buffered in memory

a1.channels.c1.type = memory

Set the sink to a Kafka sink, and specify which channel the sink reads data from

a1.sinks.k1.channel = c1

a1.sinks.k1.type = org.apache.flume.sink.kafka.KafkaSink

a1.sinks.k1.kafka.topic = test

a1.sinks.k1.kafka.bootstrap.servers = node01:9092,node02:9092,node03:9092

a1.sinks.k1.flumeBatchSize = 20

a1.sinks.k1.kafka.producer.acks = 1

Start Flume

bin/flume-ng agent --conf conf --conf-file conf/flume.conf --name a1 -Dflume.root.logger=INFO,console
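
Once the agent is running, a quick way to check the pipeline is to read the `test` topic with Kafka's console consumer and then drop a file into the spooling directory. The sketch below assumes a Kafka version whose console consumer accepts `--bootstrap-server` (older releases used `--zookeeper` instead); the `KAFKA_HOME` default and the function name `consume_test_topic` are assumptions for illustration, not taken from the original setup.

```shell
# Hypothetical helper: tail the topic that the Flume Kafka sink writes to.
# KAFKA_HOME is an assumed install path; adjust it to your environment.
consume_test_topic() {
  kafka_home="${KAFKA_HOME:-/export/servers/kafka}"
  "$kafka_home/bin/kafka-console-consumer.sh" \
    --bootstrap-server node01:9092,node02:9092,node03:9092 \
    --topic test \
    --from-beginning
}
```

Run `consume_test_topic` in a second terminal. Each line of a file placed in `/export/servers/flumedata` should then show up as one message.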