K8s in practice: running a monolithic Java service (Jenkins)

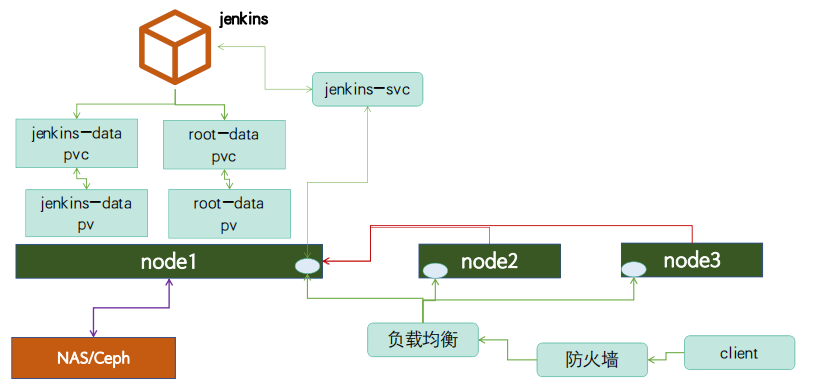

1. Jenkins architecture

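Java applications are started with the `java` command from a war or jar package. This example deploys Jenkins from jenkins.war, and Jenkins's data must be kept on external storage (NFS or a PVC). Whether other Java applications need external storage depends on their actual requirements.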

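As the architecture diagram above shows, Jenkins reaches external storage through a PV/PVC in Kubernetes and is exposed through a Service (svc). Inside the cluster, Jenkins is reached directly through the Service; clients outside the cluster reach Jenkins through an external load balancer.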
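Inside the cluster, "accessing the svc" means using the Service's DNS name. A minimal sketch of a hypothetical helper that builds that name, assuming the cluster uses the default `cluster.local` domain (check your cluster's DNS configuration). The Service and namespace names are the ones defined in the manifest in section 4.

```bash
#!/usr/bin/env bash
# svc_fqdn (hypothetical helper): print the cluster DNS name of a Service.
# Usage: svc_fqdn <service> <namespace> [cluster-domain]
# The cluster domain defaults to cluster.local, which is an assumption about this cluster.
svc_fqdn() {
  local svc="$1" ns="$2" domain="${3:-cluster.local}"
  printf '%s.%s.svc.%s\n' "$svc" "$ns" "$domain"
}
```

From a Pod, `curl -sI "http://$(svc_fqdn magedu-jenkins-service magedu)/login"` goes to Service port 80, which forwards to container port 8080. Pods in the same `magedu` namespace can also use the short name `magedu-jenkins-service`.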

2. Preparing the image

2.1 Files in the Jenkins image directory

```
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins# tree
.
├── Dockerfile
├── build-command.sh
├── jenkins-2.319.2.war
└── run_jenkins.sh

0 directories, 4 files
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins#
```

2.2 Building the Jenkins image

2.2.1 Dockerfile for the Jenkins image

```
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins# cat Dockerfile
#Jenkins Version 2.319.2
FROM harbor.ik8s.cc/pub-images/jdk-base:v8.212

ADD jenkins-2.319.2.war /apps/jenkins/jenkins.war
ADD run_jenkins.sh /usr/bin/

EXPOSE 8080

CMD ["/usr/bin/run_jenkins.sh"]
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins#
```

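The Dockerfile above builds on a jdk-base image that already provides a Java runtime. On top of it, it adds the Jenkins war package and the startup script, exposes port 8080, and finally sets CMD to the script that starts Jenkins.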

2.2.2 Jenkins startup script

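```
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins# cat run_jenkins.sh
#!/bin/bash
# exec replaces the shell with the JVM, so java runs as PID 1 and receives SIGTERM when the Pod is stopped
cd /apps/jenkins && exec java -server -Xms1024m -Xmx1024m -Xss512k -jar jenkins.war --webroot=/apps/jenkins/jenkins-data --httpPort=8080
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins#
```

`--webroot` only sets where Jenkins unpacks the war file. Because JENKINS_HOME is not set, Jenkins keeps its configuration, jobs and plugins in the default `~/.jenkins`, which is `/root/.jenkins` when it runs as root; this is why both directories are later mounted from NFS.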

2.2.3 Image build script

```
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins# cat build-command.sh
#!/bin/bash
# Docker-based alternative, kept for reference:
#docker build -t harbor.ik8s.cc/magedu/jenkins:v2.319.2 .
#echo "Image built, about to upload it to the Harbor server"
#sleep 1
#docker push harbor.ik8s.cc/magedu/jenkins:v2.319.2
#echo "Image upload complete"

IMAGE=harbor.ik8s.cc/magedu/jenkins:v2.319.2

# Print a 3-2-1 countdown before each step
countdown() { echo 3 && sleep 1 && echo 2 && sleep 1 && echo 1; }

echo "Image build is about to start, please wait!" && countdown
if nerdctl build -t "$IMAGE" .; then
    echo "Image upload is about to start, please wait!" && countdown
    if nerdctl push "$IMAGE"; then
        echo "Image uploaded successfully!"
    else
        echo "Image upload failed" >&2
        exit 1
    fi
else
    echo "Image build failed, please check the build output!" >&2
    exit 1
fi
root@k8s-master01:~/k8s-data/dockerfile/web/magedu/jenkins#
```

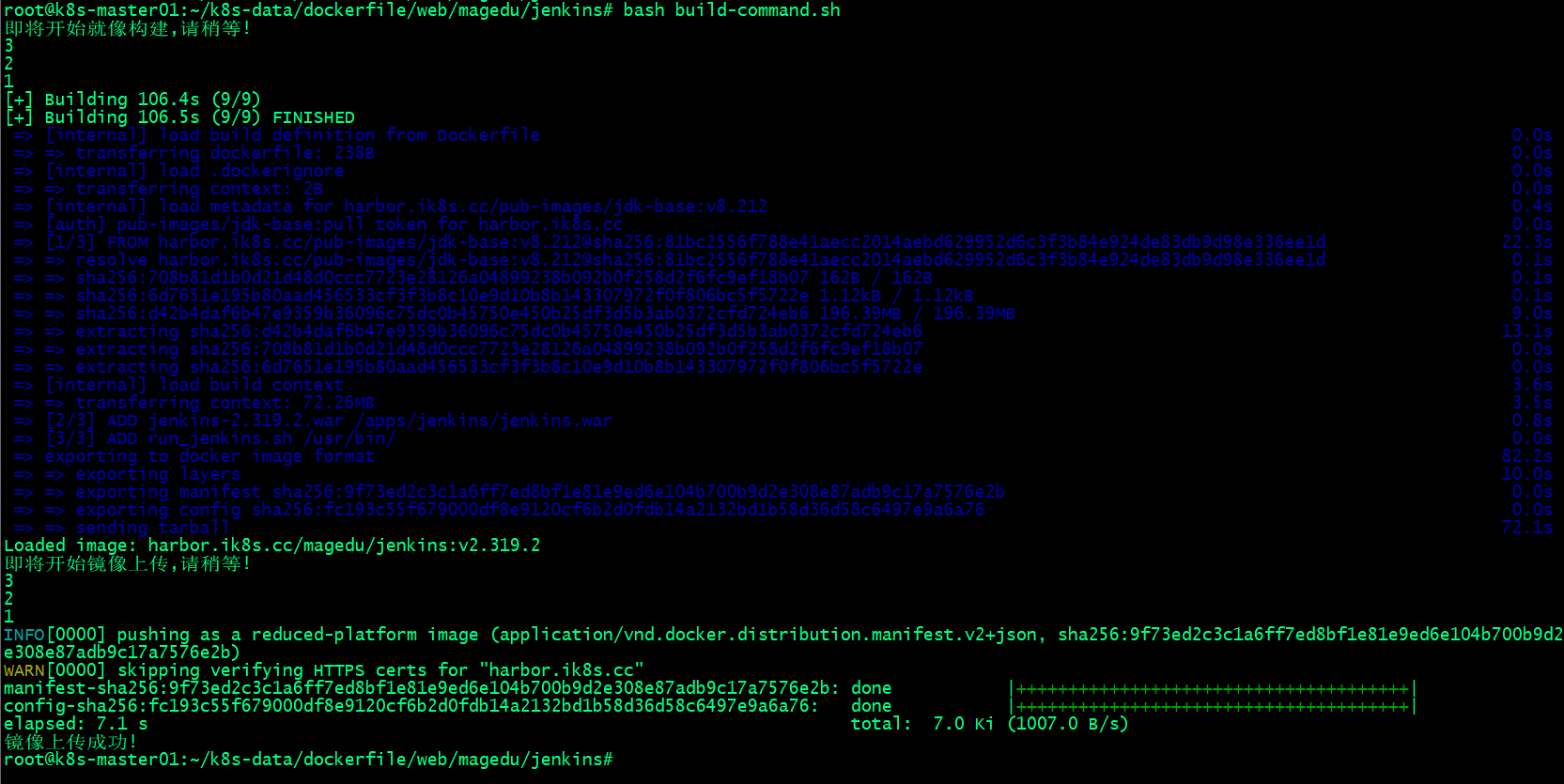

Running the script to build the image

2.3 Verifying the Jenkins image

2.3.1 Check in Harbor that the Jenkins image was uploaded

2.3.2 Test that the Jenkins image runs

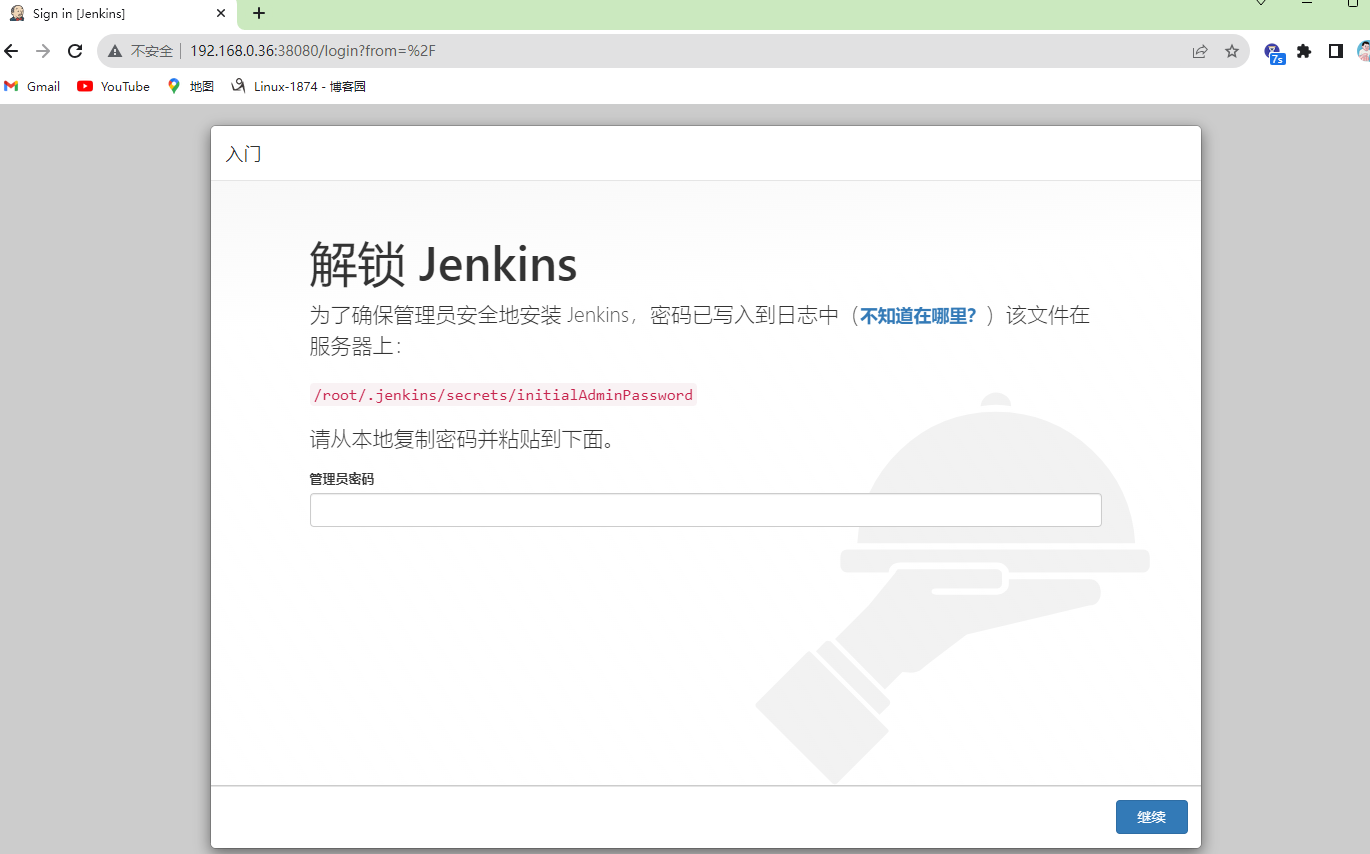

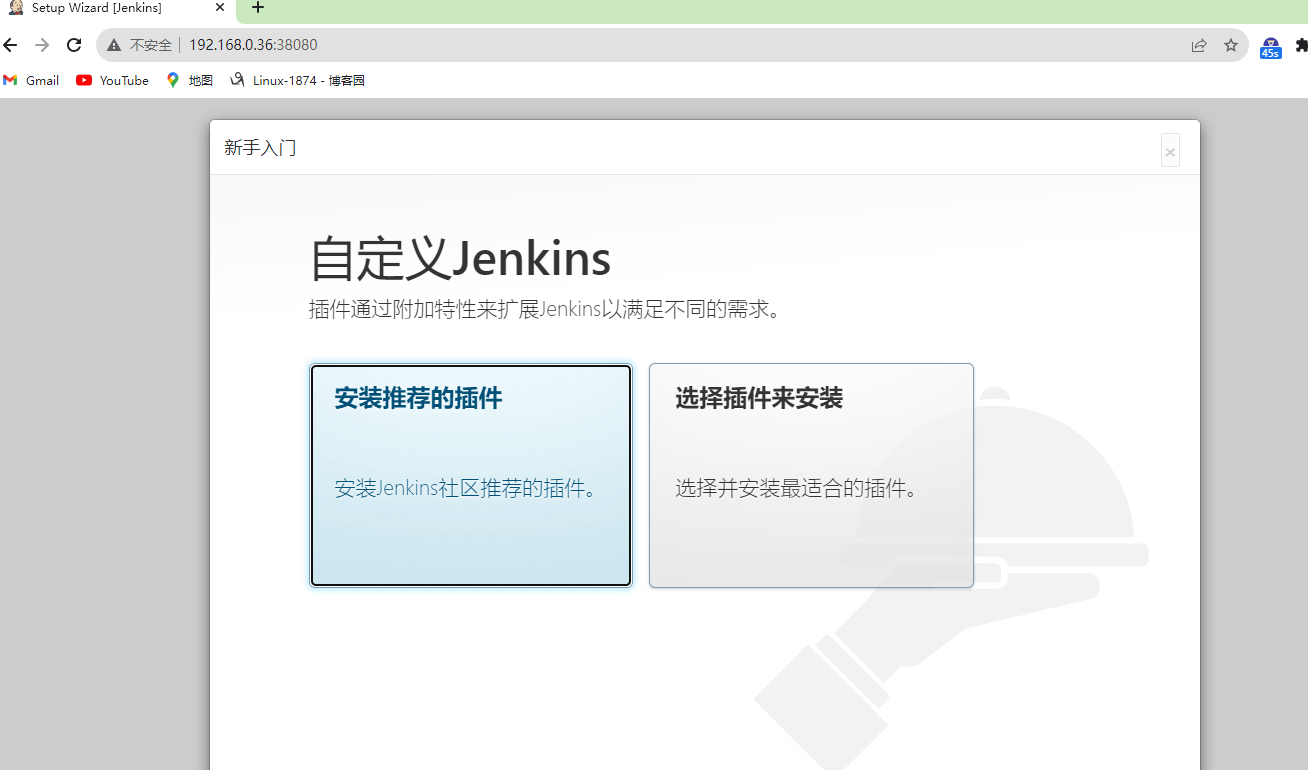

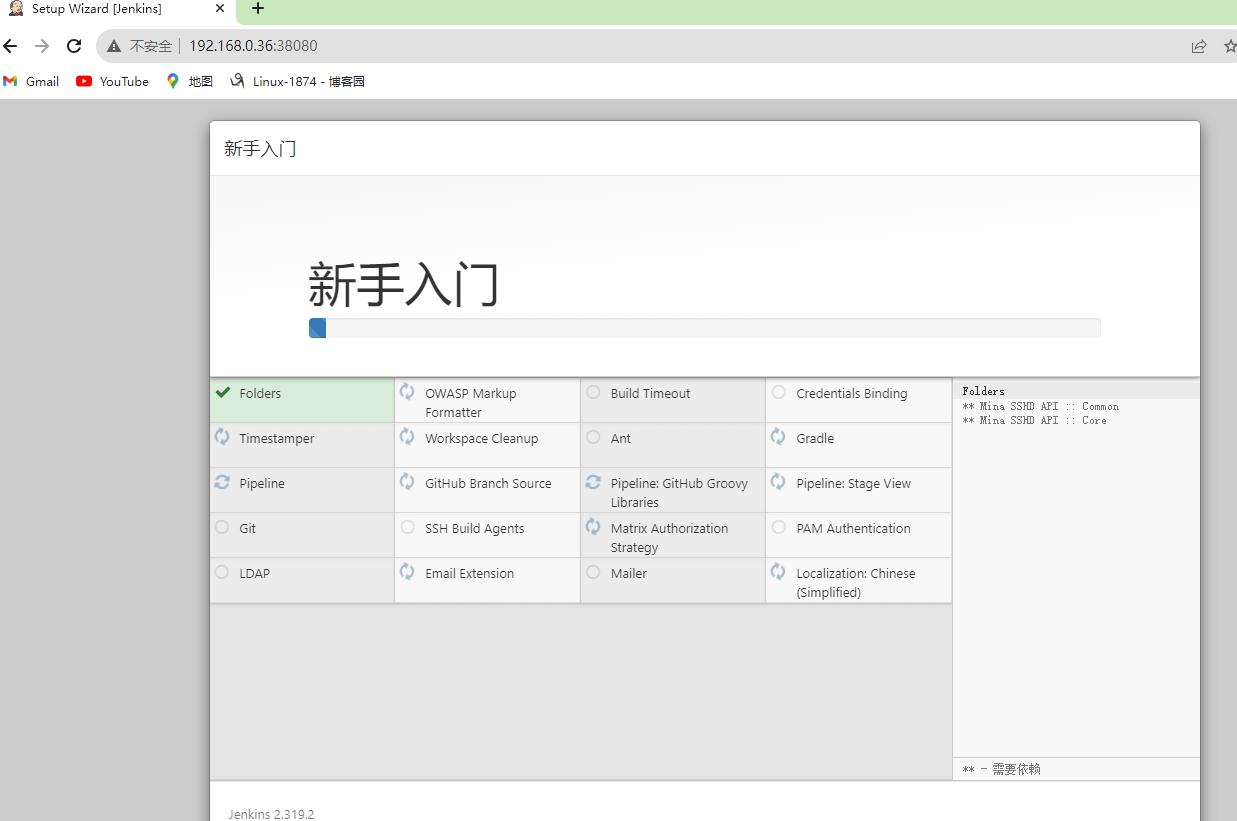

2.3.3 Check that Jenkins is reachable from a browser

Seeing the page above confirms that the Jenkins image was built correctly.
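The article does not show how the test container was started. To repeat the check from the command line, here is a minimal sketch assuming nerdctl (as used by build-command.sh) and a free host port 8080; the container name `jenkins-test` and the helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Container runtime binary; defaults to nerdctl, override with RUNTIME=docker if needed.
RUNTIME="${RUNTIME:-nerdctl}"
IMAGE="harbor.ik8s.cc/magedu/jenkins:v2.319.2"

# run_jenkins_test (hypothetical helper): start the image in the background,
# publishing container port 8080 on host port 8080. "jenkins-test" is a hypothetical name.
run_jenkins_test() {
  "$RUNTIME" run -d --name jenkins-test -p 8080:8080 "$IMAGE"
}

# jenkins_test_logs (hypothetical helper): follow the startup log;
# on first start Jenkins prints the initial admin password there.
jenkins_test_logs() {
  "$RUNTIME" logs -f jenkins-test
}
```

After `run_jenkins_test`, browse to `http://<build-host>:8080`, and remove the container with `nerdctl rm -f jenkins-test` when done.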

3. Preparing the PV/PVC

3.1 Create the Jenkins data directories on the NFS server

```
root@harbor:~# mkdir -p /data/k8sdata/magedu/{jenkins-data,jenkins-root-data}
root@harbor:~# ll /data/k8sdata/magedu/{jenkins-data,jenkins-root-data}
/data/k8sdata/magedu/jenkins-data:
total 8
drwxr-xr-x  2 root root 4096 Aug  6 03:35 ./
drwxr-xr-x 21 root root 4096 Aug  6 03:35 ../

/data/k8sdata/magedu/jenkins-root-data:
total 8
drwxr-xr-x  2 root root 4096 Aug  6 03:35 ./
drwxr-xr-x 21 root root 4096 Aug  6 03:35 ../
root@harbor:~# tail /etc/exports
/data/k8sdata/magedu/mysql-datadir-1 *(rw,no_root_squash)
/data/k8sdata/magedu/mysql-datadir-2 *(rw,no_root_squash)
/data/k8sdata/magedu/mysql-datadir-3 *(rw,no_root_squash)
/data/k8sdata/magedu/mysql-datadir-4 *(rw,no_root_squash)
/data/k8sdata/magedu/mysql-datadir-5 *(rw,no_root_squash)
/data/k8sdata/magedu/jenkins-data *(rw,no_root_squash)
/data/k8sdata/magedu/jenkins-root-data *(rw,no_root_squash)
```

```
root@harbor:~# exportfs -av
exportfs: /etc/exports [1]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/kuboard".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [2]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/volumes".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [3]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/pod-vol".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [4]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/myserver".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [5]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/mysite".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [7]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/images".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [8]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/static".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [11]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-1".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [12]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-2".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [13]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/zookeeper-datadir-3".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [16]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis-datadir-1".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [18]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis0".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [19]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis1".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [20]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis2".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [21]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis3".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [22]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis4".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [23]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/redis5".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [27]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/mysql-datadir-1".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [28]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/mysql-datadir-2".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [29]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/mysql-datadir-3".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [30]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/mysql-datadir-4".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [31]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/mysql-datadir-5".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [34]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/jenkins-data".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exportfs: /etc/exports [35]: Neither 'subtree_check' or 'no_subtree_check' specified for export "*:/data/k8sdata/magedu/jenkins-root-data".
  Assuming default behaviour ('no_subtree_check').
  NOTE: this default has changed since nfs-utils version 1.0.x
exporting *:/data/k8sdata/magedu/jenkins-root-data
exporting *:/data/k8sdata/magedu/jenkins-data
exporting *:/data/k8sdata/magedu/mysql-datadir-5
exporting *:/data/k8sdata/magedu/mysql-datadir-4
exporting *:/data/k8sdata/magedu/mysql-datadir-3
exporting *:/data/k8sdata/magedu/mysql-datadir-2
exporting *:/data/k8sdata/magedu/mysql-datadir-1
exporting *:/data/k8sdata/magedu/redis5
exporting *:/data/k8sdata/magedu/redis4
exporting *:/data/k8sdata/magedu/redis3
exporting *:/data/k8sdata/magedu/redis2
exporting *:/data/k8sdata/magedu/redis1
exporting *:/data/k8sdata/magedu/redis0
exporting *:/data/k8sdata/magedu/redis-datadir-1
exporting *:/data/k8sdata/magedu/zookeeper-datadir-3
exporting *:/data/k8sdata/magedu/zookeeper-datadir-2
exporting *:/data/k8sdata/magedu/zookeeper-datadir-1
exporting *:/data/k8sdata/magedu/static
exporting *:/data/k8sdata/magedu/images
exporting *:/data/k8sdata/mysite
exporting *:/data/k8sdata/myserver
exporting *:/pod-vol
exporting *:/data/volumes
exporting *:/data/k8sdata/kuboard
root@harbor:~#
```
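The `exportfs -av` warnings appear only because none of the entries name `subtree_check` or `no_subtree_check`; as the output itself says, `no_subtree_check` is already assumed, so writing it explicitly silences the warnings without changing behavior. A minimal sketch of a hypothetical helper that appends an export line with that option, and skips paths that are already exported so re-running it does not create duplicates:

```bash
#!/usr/bin/env bash
# add_nfs_export (hypothetical helper): append an export entry unless the path is already listed.
# Usage: add_nfs_export <exports-file> <path> [client(options)]
# Default options: the ones used above plus an explicit no_subtree_check.
add_nfs_export() {
  local exports_file="$1" path="$2" spec="${3:-*(rw,no_root_squash,no_subtree_check)}"
  # The first field of each exports line is the exported path; awk exits 0 if it is found.
  if awk -v p="$path" '$1 == p { found = 1 } END { exit !found }' "$exports_file" 2>/dev/null; then
    return 0  # already exported, leave the file untouched
  fi
  printf '%s %s\n' "$path" "$spec" >> "$exports_file"
}
```

For example, `add_nfs_export /etc/exports /data/k8sdata/magedu/jenkins-data && exportfs -ra` adds the entry once and re-exports everything.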

3.2 Create the PVs on k8s

```yaml
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-datadir-pv
  namespace: magedu  # has no effect: PersistentVolumes are cluster-scoped
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/jenkins-data
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: jenkins-root-datadir-pv
  namespace: magedu  # has no effect: PersistentVolumes are cluster-scoped
spec:
  capacity:
    storage: 100Gi
  accessModes:
    - ReadWriteOnce
  nfs:
    server: 192.168.0.42
    path: /data/k8sdata/magedu/jenkins-root-data
```

```
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins/pv# kubectl apply -f jenkins-persistentvolume.yaml
persistentvolume/jenkins-datadir-pv created
persistentvolume/jenkins-root-datadir-pv created
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins/pv#
```

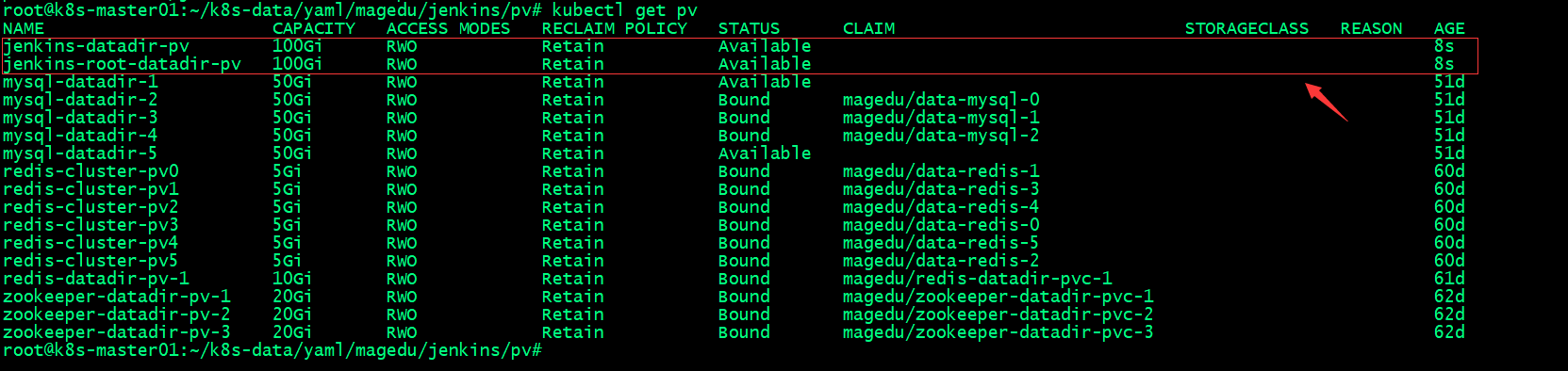

3.3 Verify the PVs
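To check the PVs from the command line rather than by eye, a minimal sketch with hypothetical helper names: it reads each PV's `status.phase` through kubectl's jsonpath output. A new, unclaimed PV should be `Available`, and it becomes `Bound` once its PVC is created. `KUBECTL` can point at another kubectl binary.

```bash
#!/usr/bin/env bash
KUBECTL="${KUBECTL:-kubectl}"

# pv_phase (hypothetical helper): print the status.phase of one PersistentVolume.
pv_phase() {
  "$KUBECTL" get pv "$1" -o jsonpath='{.status.phase}'
}

# check_pvs (hypothetical helper): print each PV's phase and
# return non-zero if any PV is neither Available nor Bound (or does not exist).
check_pvs() {
  local pv phase rc=0
  for pv in "$@"; do
    phase="$(pv_phase "$pv")"
    echo "$pv: ${phase:-<not found>}"
    case "$phase" in
      Available|Bound) ;;
      *) rc=1 ;;
    esac
  done
  return "$rc"
}
```

Usage: `check_pvs jenkins-datadir-pv jenkins-root-datadir-pv`.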

3.4 Create the PVCs on k8s

```yaml
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-datadir-pvc
  namespace: magedu
spec:
  volumeName: jenkins-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: jenkins-root-data-pvc
  namespace: magedu
spec:
  volumeName: jenkins-root-datadir-pv
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 80Gi
```

```
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins/pv# kubectl apply -f jenkins-persistentvolumeclaim.yaml
persistentvolumeclaim/jenkins-datadir-pvc created
persistentvolumeclaim/jenkins-root-data-pvc created
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins/pv#
```

3.5 Verify the PVCs
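Because both claims set `volumeName`, each one may bind only to the named PV. A claim bound to a larger PV receives the whole volume, so `kubectl get pvc` should report 100Gi rather than the requested 80Gi (plain NFS does not enforce either size). A common reason for a claim stuck in `Pending` here is a default StorageClass being assigned to the claim, which then no longer matches these class-less PVs; setting `storageClassName: ""` on the claims avoids that. A minimal sketch, with hypothetical helper names, that checks each claim is `Bound` to the expected PV:

```bash
#!/usr/bin/env bash
KUBECTL="${KUBECTL:-kubectl}"

# pvc_binding (hypothetical helper): print "<volumeName> <phase>" for a PVC in a namespace.
pvc_binding() {
  local ns="$1" pvc="$2"
  "$KUBECTL" get pvc "$pvc" -n "$ns" -o jsonpath='{.spec.volumeName} {.status.phase}'
}

# expect_bound (hypothetical helper): succeed only if the PVC is Bound to the given PV.
expect_bound() {
  local ns="$1" pvc="$2" pv="$3"
  [ "$(pvc_binding "$ns" "$pvc")" = "$pv Bound" ]
}
```

Usage: `expect_bound magedu jenkins-datadir-pvc jenkins-datadir-pv && expect_bound magedu jenkins-root-data-pvc jenkins-root-datadir-pv && echo "both PVCs are bound"`.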

4. Prepare the YAML manifest for running Jenkins on k8s

```yaml
kind: Deployment
#apiVersion: extensions/v1beta1
apiVersion: apps/v1
metadata:
  labels:
    app: magedu-jenkins
  name: magedu-jenkins-deployment
  namespace: magedu
spec:
  replicas: 1
  selector:
    matchLabels:
      app: magedu-jenkins
  template:
    metadata:
      labels:
        app: magedu-jenkins
    spec:
      containers:
        - name: magedu-jenkins-container
          image: harbor.ik8s.cc/magedu/jenkins:v2.319.2
          #imagePullPolicy: IfNotPresent
          imagePullPolicy: Always
          ports:
            - containerPort: 8080
              protocol: TCP
              name: http
          volumeMounts:
            - mountPath: "/apps/jenkins/jenkins-data/"
              name: jenkins-datadir-magedu
            - mountPath: "/root/.jenkins"
              name: jenkins-root-datadir
      volumes:
        - name: jenkins-datadir-magedu
          persistentVolumeClaim:
            claimName: jenkins-datadir-pvc
        - name: jenkins-root-datadir
          persistentVolumeClaim:
            claimName: jenkins-root-data-pvc
---
kind: Service
apiVersion: v1
metadata:
  labels:
    app: magedu-jenkins
  name: magedu-jenkins-service
  namespace: magedu
spec:
  type: NodePort
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 8080
      nodePort: 38080  # outside the default range 30000-32767; needs a wider --service-node-port-range
  selector:
    app: magedu-jenkins
```
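`nodePort: 38080` is accepted only because this cluster's kube-apiserver runs with a widened `--service-node-port-range`; with the default range of 30000-32767, `kubectl apply` rejects the Service. Before reusing this manifest elsewhere, a minimal sketch of a hypothetical check that a port fits a given range:

```bash
#!/usr/bin/env bash
# port_in_range (hypothetical helper): succeed if PORT lies within RANGE ("min-max").
# The default RANGE is the kube-apiserver default for --service-node-port-range.
port_in_range() {
  local port="$1" range="${2:-30000-32767}"
  local min="${range%-*}" max="${range#*-}"
  (( port >= min && port <= max ))
}
```

For example, `port_in_range 38080 || echo "38080 needs a custom --service-node-port-range"` prints the warning on a default cluster; pass the cluster's actual range as the second argument if it was changed.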

5. Apply the manifest to run Jenkins

```
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins# kubectl apply -f jenkins.yaml
deployment.apps/magedu-jenkins-deployment created
service/magedu-jenkins-service created
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins#
```

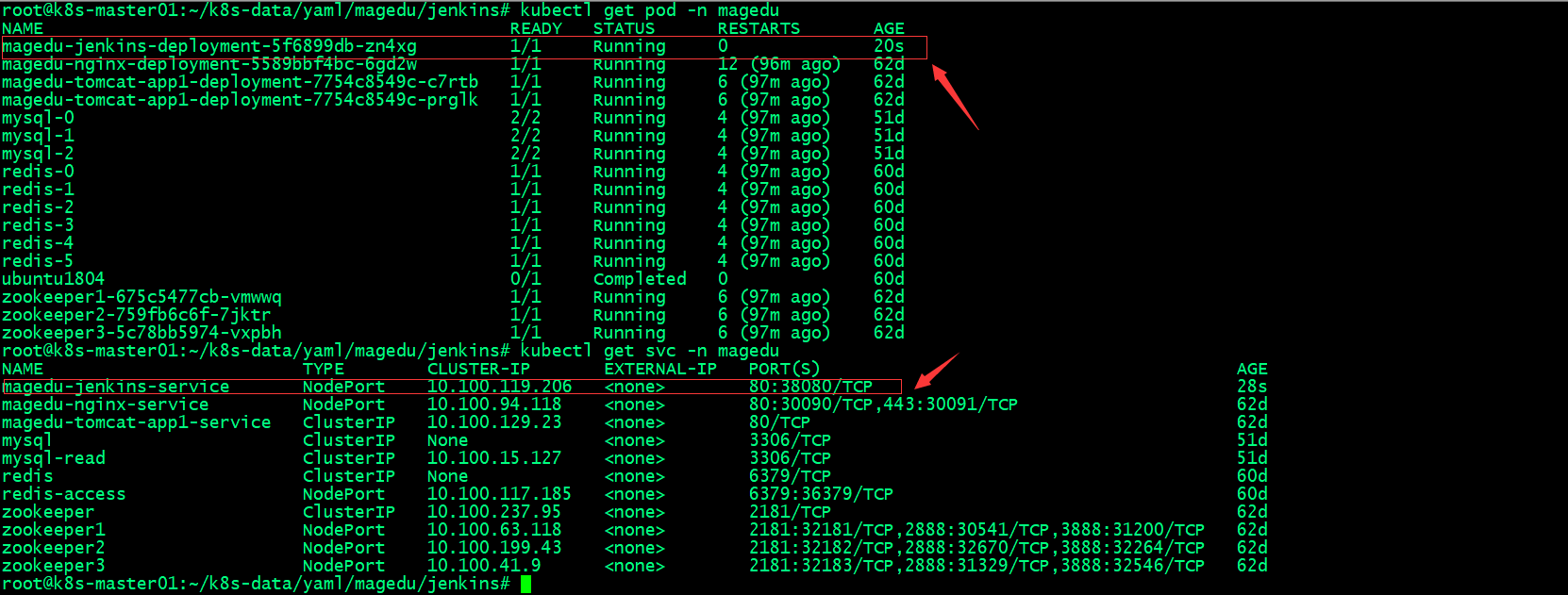

6. Verification

6.1 Check that the Jenkins Pod is running
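To run this check from the command line, a minimal sketch (the helper name is hypothetical; `KUBECTL` can point at another kubectl binary) that waits for the Deployment rollout and then lists the Pods carrying the `app: magedu-jenkins` label from jenkins.yaml. Because the manifest defines no readiness probe, a finished rollout only means the container has started; Jenkins itself may still be initializing.

```bash
#!/usr/bin/env bash
KUBECTL="${KUBECTL:-kubectl}"

# wait_jenkins_ready (hypothetical helper): block until the rollout completes or the
# timeout expires, then list the Jenkins Pods with the node they were scheduled on.
wait_jenkins_ready() {
  local ns="${1:-magedu}" timeout="${2:-300s}"
  "$KUBECTL" rollout status deployment/magedu-jenkins-deployment -n "$ns" --timeout="$timeout" &&
    "$KUBECTL" get pods -n "$ns" -l app=magedu-jenkins -o wide
}
```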

6.2 Check that Jenkins is reachable from a browser

Retrieve the Jenkins initial admin password:

```
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins# kubectl get pods -n magedu
NAME READY STATUS RESTARTS AGE
magedu-jenkins-deployment-5f6899db-zn4xg 1/1 Running 0 11m
magedu-nginx-deployment-5589bbf4bc-6gd2w 1/1 Running 12 (107m ago) 62d
magedu-tomcat-app1-deployment-7754c8549c-c7rtb 1/1 Running 6 (108m ago) 62d
magedu-tomcat-app1-deployment-7754c8549c-prglk 1/1 Running 6 (108m ago) 62d
mysql-0 2/2 Running 4 (108m ago) 51d
mysql-1 2/2 Running 4 (108m ago) 51d
mysql-2 2/2 Running 4 (108m ago) 51d
redis-0 1/1 Running 4 (108m ago) 60d
redis-1 1/1 Running 4 (108m ago) 60d
redis-2 1/1 Running 4 (108m ago) 60d
redis-3 1/1 Running 4 (108m ago) 60d
redis-4 1/1 Running 4 (108m ago) 60d
redis-5 1/1 Running 4 (108m ago) 60d
ubuntu1804 0/1 Completed 0 60d
zookeeper1-675c5477cb-vmwwq 1/1 Running 6 (108m ago) 62d
zookeeper2-759fb6c6f-7jktr 1/1 Running 6 (108m ago) 62d
zookeeper3-5c78bb5974-vxpbh 1/1 Running 6 (108m ago) 62d
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins# kubectl exec -it magedu-jenkins-deployment-5f6899db-zn4xg -n magedu cat /root/.jenkins/secrets/initialAdminPassword
kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.
8c4a17a8ecfe4fb88ed8701cb18340df
root@k8s-master01:~/k8s-data/yaml/magedu/jenkins#
```
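The warning above comes from the old `kubectl exec POD COMMAND` form. A minimal sketch of the current form, wrapped in a hypothetical helper: `--` separates kubectl's flags from the command run in the container, and `deploy/<name>` lets kubectl pick a Pod of the Deployment so the generated Pod name is not needed. Since `/root/.jenkins` is mounted from NFS, the same file is also at `/data/k8sdata/magedu/jenkins-root-data/secrets/initialAdminPassword` on the NFS server.

```bash
#!/usr/bin/env bash
KUBECTL="${KUBECTL:-kubectl}"

# jenkins_initial_password (hypothetical helper): print the setup-wizard password.
jenkins_initial_password() {
  local ns="${1:-magedu}"
  "$KUBECTL" exec -n "$ns" deploy/magedu-jenkins-deployment -- \
    cat /root/.jenkins/secrets/initialAdminPassword
}
```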

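OK, the Jenkins Pod is reachable from a web browser, so the Jenkins service is now running on k8s. Next, Jenkins can be published through the external load balancer so that clients outside the cluster can reach it.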

7. Publishing Jenkins on the external load balancer

ha01

```
root@k8s-ha01:~# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state MASTER
    interface ens160
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.111 dev ens160 label ens160:0
        192.168.0.112 dev ens160 label ens160:1
    }
}
```

```
root@k8s-ha01:~# cat /etc/haproxy/haproxy.cfg
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen k8s_apiserver_6443
    bind 192.168.0.111:6443
    mode tcp
    #balance leastconn
    server k8s-master01 192.168.0.31:6443 check inter 2000 fall 3 rise 5
    server k8s-master02 192.168.0.32:6443 check inter 2000 fall 3 rise 5
    server k8s-master03 192.168.0.33:6443 check inter 2000 fall 3 rise 5

listen jenkins_80
    bind 192.168.0.112:80
    mode tcp
    server k8s-node01 192.168.0.34:38080 check inter 2000 fall 3 rise 5
    server k8s-node02 192.168.0.35:38080 check inter 2000 fall 3 rise 5
    server k8s-node03 192.168.0.36:38080 check inter 2000 fall 3 rise 5
```

```
root@k8s-ha01:~# systemctl restart keepalived haproxy
root@k8s-ha01:~# ss -tnl
State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
LISTEN 0 4096 192.168.0.111:6443 0.0.0.0:*
LISTEN 0 4096 192.168.0.112:80 0.0.0.0:*
LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*
LISTEN 0 128 0.0.0.0:22 0.0.0.0:*
root@k8s-ha01:~#
```
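The `jenkins_80` backend lists every worker node because kube-proxy opens a NodePort on all nodes, so haproxy may forward to any of them; ha02 has to carry exactly the same list. A minimal sketch of a hypothetical helper that prints those `server` lines from one node list, so both copies can be generated from a single source:

```bash
#!/usr/bin/env bash
# gen_haproxy_servers (hypothetical helper): print haproxy "server" lines for a NodePort backend.
# Usage: gen_haproxy_servers <nodePort> <name=ip> [<name=ip> ...]
# Health-check settings match the ones used in the article's haproxy.cfg.
gen_haproxy_servers() {
  local port="$1" node
  shift
  for node in "$@"; do
    printf '    server %s %s:%s check inter 2000 fall 3 rise 5\n' "${node%%=*}" "${node#*=}" "$port"
  done
}
```

For example, `gen_haproxy_servers 38080 k8s-node01=192.168.0.34 k8s-node02=192.168.0.35 k8s-node03=192.168.0.36` reproduces the three backend lines above. After pasting them into `listen jenkins_80` on both load balancers, `haproxy -c -f /etc/haproxy/haproxy.cfg` validates the file before `systemctl restart haproxy`.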

ha02

```
root@k8s-ha02:~# cat /etc/keepalived/keepalived.conf
! Configuration File for keepalived

global_defs {
   notification_email {
     acassen
   }
   notification_email_from Alexandre.Cassen@firewall.loc
   smtp_server 192.168.200.1
   smtp_connect_timeout 30
   router_id LVS_DEVEL
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens160
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority 70
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        192.168.0.111 dev ens160 label ens160:0
        192.168.0.112 dev ens160 label ens160:1
    }
}
```

```
root@k8s-ha02:~# cat /etc/haproxy/haproxy.cfg
global
    log /dev/log local0
    log /dev/log local1 notice
    chroot /var/lib/haproxy
    stats socket /run/haproxy/admin.sock mode 660 level admin expose-fd listeners
    stats timeout 30s
    user haproxy
    group haproxy
    daemon

    # Default SSL material locations
    ca-base /etc/ssl/certs
    crt-base /etc/ssl/private

    # See: https://ssl-config.mozilla.org/#server=haproxy&server-version=2.0.3&config=intermediate
    ssl-default-bind-ciphers ECDHE-ECDSA-AES128-GCM-SHA256:ECDHE-RSA-AES128-GCM-SHA256:ECDHE-ECDSA-AES256-GCM-SHA384:ECDHE-RSA-AES256-GCM-SHA384:ECDHE-ECDSA-CHACHA20-POLY1305:ECDHE-RSA-CHACHA20-POLY1305:DHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384
    ssl-default-bind-ciphersuites TLS_AES_128_GCM_SHA256:TLS_AES_256_GCM_SHA384:TLS_CHACHA20_POLY1305_SHA256
    ssl-default-bind-options ssl-min-ver TLSv1.2 no-tls-tickets

defaults
    log global
    mode http
    option httplog
    option dontlognull
    timeout connect 5000
    timeout client 50000
    timeout server 50000
    errorfile 400 /etc/haproxy/errors/400.http
    errorfile 403 /etc/haproxy/errors/403.http
    errorfile 408 /etc/haproxy/errors/408.http
    errorfile 500 /etc/haproxy/errors/500.http
    errorfile 502 /etc/haproxy/errors/502.http
    errorfile 503 /etc/haproxy/errors/503.http
    errorfile 504 /etc/haproxy/errors/504.http

listen k8s_apiserver_6443
    bind 192.168.0.111:6443
    mode tcp
    #balance leastconn
    server k8s-master01 192.168.0.31:6443 check inter 2000 fall 3 rise 5
    server k8s-master02 192.168.0.32:6443 check inter 2000 fall 3 rise 5
    server k8s-master03 192.168.0.33:6443 check inter 2000 fall 3 rise 5

listen jenkins_80
    bind 192.168.0.112:80
    mode tcp
    server k8s-node01 192.168.0.34:38080 check inter 2000 fall 3 rise 5
    server k8s-node02 192.168.0.35:38080 check inter 2000 fall 3 rise 5
    server k8s-node03 192.168.0.36:38080 check inter 2000 fall 3 rise 5
```

```
root@k8s-ha02:~# systemctl restart keepalived haproxy
root@k8s-ha02:~#
root@k8s-ha02:~# ss -tnl
State Recv-Q Send-Q Local Address:Port Peer Address:Port Process
LISTEN 0 4096 192.168.0.111:6443 0.0.0.0:*
LISTEN 0 4096 192.168.0.112:80 0.0.0.0:*
LISTEN 0 4096 127.0.0.53%lo:53 0.0.0.0:*
LISTEN 0 128 0.0.0.0:22 0.0.0.0:*
root@k8s-ha02:~#
```
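ha02 is the keepalived BACKUP, so it does not hold 192.168.0.111 or 192.168.0.112, yet haproxy is listening on both. A process can bind an address the host does not own when the kernel setting `net.ipv4.ip_nonlocal_bind` is 1, so this output most likely means that setting was enabled on these hosts (the article does not show that step). A minimal sketch of a hypothetical check, assuming the standard procfs path; the path is a parameter only so the function can be tested against a plain file:

```bash
#!/usr/bin/env bash
# nonlocal_bind_enabled (hypothetical helper): succeed if net.ipv4.ip_nonlocal_bind is 1.
nonlocal_bind_enabled() {
  local path="${1:-/proc/sys/net/ipv4/ip_nonlocal_bind}"
  [ "$(cat "$path" 2>/dev/null)" = "1" ]
}

# Example use on a load balancer host (run as root):
#   if ! nonlocal_bind_enabled; then
#     echo 'net.ipv4.ip_nonlocal_bind = 1' >> /etc/sysctl.conf && sysctl -p
#   fi
```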

7.1 Is Jenkins reachable through the load balancer's VIP?

Jenkins is reachable through the load balancer's VIP, which confirms that the load balancer is successfully proxying traffic to the Jenkins service.
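The same check can be scripted from a client outside the cluster. Because `jenkins_80` runs in `mode tcp`, haproxy does not generate HTTP responses of its own for this listener, so receiving any HTTP status at all means a Jenkins Pod answered. A minimal sketch with hypothetical helper names; `CURL` can point at another curl binary:

```bash
#!/usr/bin/env bash
CURL="${CURL:-curl}"

# http_code (hypothetical helper): print only the HTTP status code for URL;
# curl prints 000 when no HTTP response was received.
http_code() {
  "$CURL" -s -o /dev/null -w '%{http_code}' --max-time 5 "$1"
}

# vip_reachable (hypothetical helper): report the status and succeed on any real HTTP status.
vip_reachable() {
  local code
  code="$(http_code "$1")"
  echo "$1 -> HTTP $code"
  [[ "$code" =~ ^[1-5][0-9]{2}$ ]]
}
```

Usage: `vip_reachable http://192.168.0.112/login`.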

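Author: Linux-1874

Copyright of this article belongs to the author and cnblogs (博客园). Reposting is welcome, but unless the author agrees otherwise, this notice must be retained and a link to the original article must be given in a prominent place on the page; otherwise the author reserves the right to pursue legal liability.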
