Container Orchestration System K8s: Access Control -- Admission Control

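In the previous post we covered RBAC, the authorization plugin that forms the second checkpoint of access control in k8s; for a recap see https://www.cnblogs.com/qiuhom-1874/p/14216634.html. Today we look at the third checkpoint: admission control.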

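Before discussing admission control, let's recap authentication and authorization. In k8s both mechanisms are extended through plugins: to use a particular authentication or authorization mechanism, you enable the corresponding plugin. The two work on the same "one-vote pass" logic. When several plugins are enabled, a user passes authentication as soon as any one plugin accepts the credentials, and the same holds for authorization. Authorization in k8s is explicit: a request is allowed only if some defined rule grants it, so a user who has authenticated but holds no explicit grant is still refused.

Admission control is implemented by admission controllers. Each admission controller corresponds to one plugin, and to use a controller you must enable its plugin. Authentication, authorization, and admission control each ship with some built-in plugins that are enabled by default, so for those we only need to write the rules. Unlike authentication and authorization, admission control works on a "one-vote veto" basis: among multiple admission rules, failing any single one causes the request to be rejected.

Admission controllers come in two types, mutating and validating. A mutating controller fills in fields we left unspecified when submitting a resource to the apiserver, or rewrites non-conforming attribute values into conforming ones, so that the submitted object satisfies the API specification. A validating controller checks whether the resource we are creating is reasonable and satisfies the rules we defined, and rejects the request outright if it does not; this type is mainly used to restrict how resources on k8s are used.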
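The difference between "one-vote pass" and "one-vote veto" can be sketched in a few lines of Python. This is only a toy model under stated assumptions, not how the apiserver is implemented: the function names (`one_vote_pass`, `admit`, `default_cpu`, `cap_cpu`, `require_name`) and the `cpu_millicores` field are hypothetical and exist only for illustration.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

Obj = Dict[str, Any]


def one_vote_pass(plugins: List[Callable[[Obj], bool]], request: Obj) -> bool:
    """Authentication/authorization style: one accepting plugin is enough."""
    return any(plugin(request) for plugin in plugins)


def admit(mutators: List[Callable[[Obj], Obj]],
          validators: List[Callable[[Obj], Optional[str]]],
          request: Obj) -> Tuple[Optional[Obj], Optional[str]]:
    """Admission style: mutate first, then every validator must accept."""
    obj = dict(request)
    for mutate in mutators:
        obj = mutate(obj)
    for validate in validators:
        error = validate(obj)
        if error is not None:
            return None, error  # one-vote veto: the first failure rejects
    return obj, None


def default_cpu(obj: Obj) -> Obj:
    """Hypothetical mutating rule: fill in a missing CPU value."""
    patched = dict(obj)
    patched.setdefault("cpu_millicores", 500)
    return patched


def require_name(obj: Obj) -> Optional[str]:
    """Hypothetical validating rule: the object must have a name."""
    return None if obj.get("name") else "name is required"


def cap_cpu(obj: Obj) -> Optional[str]:
    """Hypothetical validating rule: CPU may not exceed 2000m."""
    return "cpu above 2000m" if obj["cpu_millicores"] > 2000 else None
```

In this sketch a request that omits the CPU value is patched by the mutating step and then accepted, while a request asking for 2500m passes `require_name` but is vetoed by `cap_cpu`.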

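k8s has many admission controller modules. Commonly used ones include LimitRanger, ResourceQuota, ServiceAccount, and PodSecurityPolicy. The first three are enabled by default, so we only need to define the corresponding rules. PodSecurityPolicy is not enabled by default, and its rules take effect only after it is enabled. One caveat for the PSP admission controller: write the policies and bind them to the right permissions first, and only then enable the controller. If the controller is enabled with no policies in place, it rejects pod creation, because in k8s anything not explicitly allowed is denied, and the system-level pods already running on the cluster may stop working. So use PSP with care: with sufficiently fine-grained policies and permissions it greatly improves cluster security; otherwise it can leave the whole cluster unusable.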

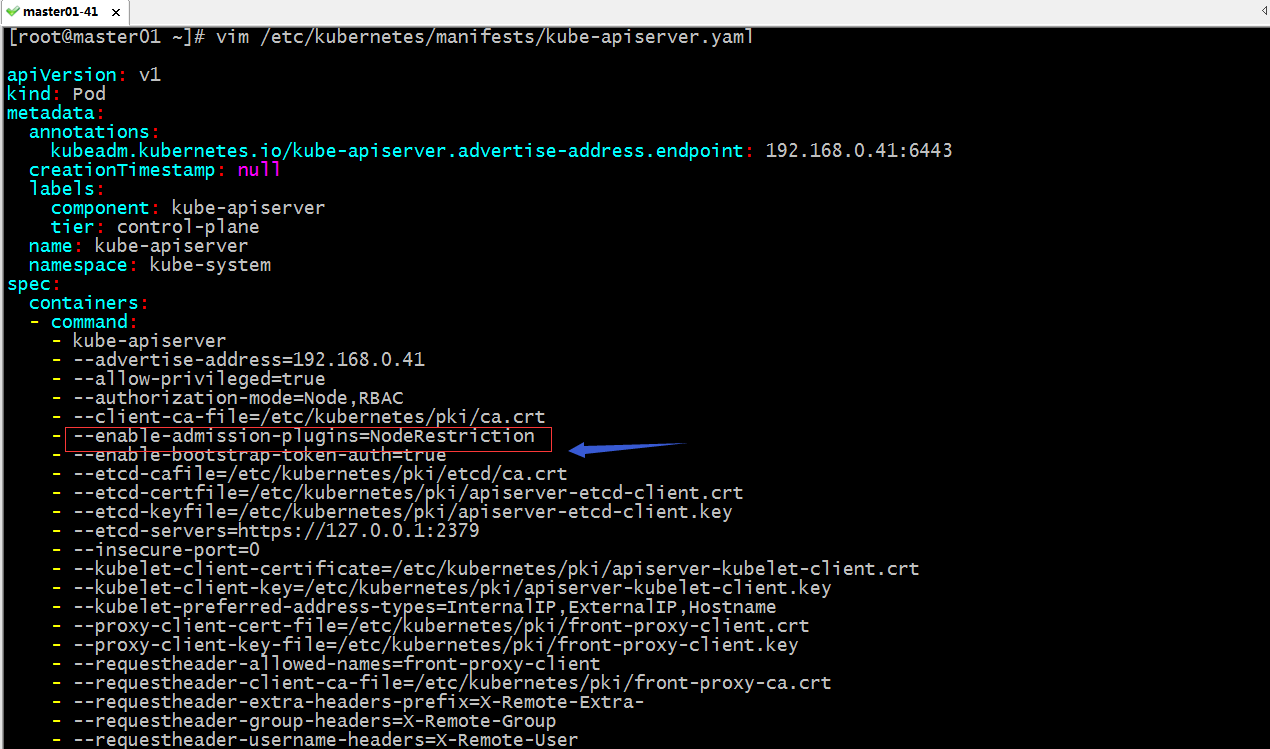

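Viewing the admission controllers enabled on the apiserver

Note: admission plugins are enabled on the apiserver with the --enable-admission-plugins option, which takes a comma-separated list of plugin names. Here the configuration file explicitly enables the NodeRestriction plugin. Built-in admission controllers that are enabled by default, such as LimitRanger, ResourceQuota, and ServiceAccount, are active even though they are not listed here. Which controllers are built in and which are enabled by default varies by k8s version, so consult the official documentation for your version. Controllers that are not enabled can be turned on by adding them to this option, separated by commas.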

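The LimitRanger controller

LimitRanger is a built-in admission controller, and LimitRange is a standard k8s resource that restricts the CPU and memory that a pod, or the containers in a pod, may use within a namespace. It can define lower and upper bounds for the CPU and memory of pods created in the namespace, as well as default requests and limits. If a pod declares requests or limits that fall outside the bounds in the LimitRange, LimitRanger rejects the pod. If the LimitRange defines defaults and the pod declares nothing, the defaults are applied. LimitRanger can constrain a pod as a whole or each container in a pod, the latter being more precise. Either way, it only ever constrains the resource usage of a single pod.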

Defining a LimitRange rule

    [root@master01 ~]# cat LimitRang-demo.yaml
    apiVersion: v1
    kind: Namespace
    metadata:
      name: myns
    ---
    apiVersion: v1
    kind: LimitRange
    metadata:
      name: cpu-memory-limit-range
      namespace: myns
    spec:
      limits:
      - default:
          cpu: 1000m
          memory: 1000Mi
        defaultRequest:
          cpu: 500m
          memory: 500Mi
        min:
          cpu: 500m
          memory: 500Mi
        max:
          cpu: 2000m
          memory: 2000Mi
        maxLimitRequestRatio:
          cpu: 4
          memory: 4
        type: Container
    [root@master01 ~]#

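Note: the manifest above defines two resources: the myns namespace, and a LimitRange named cpu-memory-limit-range inside it. default sets the limit applied to a container that declares none; defaultRequest sets the request applied to a container that declares none; min is the smallest request a user may specify; max is the largest limit a user may specify; maxLimitRequestRatio is the maximum allowed ratio of limit to request, that is, how many times larger than the request the limit may be. type is the level the constraint applies to, commonly Pod or Container. With this manifest, a container created in the namespace without any resource settings gets a request of 0.5 CPU core and 500Mi of memory, and a limit of 1 core and 1000Mi. If we set resources by hand, the request may not be lower than 0.5 core and 500Mi, and the limit may not exceed 2 cores and 2000Mi. In addition, the limit may be at most 4 times the request: with a CPU limit of 2000m the request cannot be below 500m, and with a CPU request of 500m the limit cannot exceed 2000m. The same logic applies to memory.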
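The defaulting and checking rules described above can be modeled roughly as follows for the CPU values of a single container. This is a simplified sketch, not the LimitRanger source: `CpuRange` and `apply_and_check` are hypothetical names, values are plain millicores, and the rule that a container with only a limit gets a request equal to that limit is an assumption based on Kubernetes' general defaulting behavior.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class CpuRange:
    """CPU part of the Container-type LimitRange item, in millicores."""
    default_limit: int = 1000
    default_request: int = 500
    minimum: int = 500
    maximum: int = 2000
    max_ratio: float = 4.0


def apply_and_check(rng: CpuRange,
                    request: Optional[int] = None,
                    limit: Optional[int] = None) -> Tuple[int, int, List[str]]:
    """Fill in missing values, then collect every violated constraint."""
    # Assumption: a container that sets only a limit gets request == limit.
    if request is None and limit is not None:
        request = limit
    if request is None:
        request = rng.default_request
    if limit is None:
        limit = rng.default_limit

    errors: List[str] = []
    if request < rng.minimum:
        errors.append(f"request {request}m is below min {rng.minimum}m")
    if limit > rng.maximum:
        errors.append(f"limit {limit}m is above max {rng.maximum}m")
    if request > 0 and limit / request > rng.max_ratio:
        errors.append(
            f"limit/request ratio {limit / request:g} exceeds {rng.max_ratio:g}")
    return request, limit, errors
```

Under this model, a container with no settings ends up with 500m/1000m and no errors, while `apply_and_check(CpuRange(), request=200)` reports two violations: the request is below the minimum, and the default limit of 1000m makes the ratio 5.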

Applying the manifest

    [root@master01 ~]# kubectl apply -f LimitRang-demo.yaml
    namespace/myns created
    limitrange/cpu-memory-limit-range created
    [root@master01 ~]# kubectl get limitrange -n myns
    NAME                     CREATED AT
    cpu-memory-limit-range   2021-01-01T08:01:24Z
    [root@master01 ~]# kubectl describe limitrange cpu-memory-limit-range -n myns
    Name:       cpu-memory-limit-range
    Namespace:  myns
    Type        Resource  Min    Max     Default Request  Default Limit  Max Limit/Request Ratio
    ----        --------  ---    ---     ---------------  -------------  -----------------------
    Container   cpu       500m   2       500m             1              4
    Container   memory    500Mi  2000Mi  500Mi            1000Mi         4
    [root@master01 ~]#

Note: if the manifest sets maxLimitRequestRatio, pay attention to the units used in min and max. If the ratio given is larger than max/min, the LimitRange is rejected, as shown below:

    [root@master01 ~]# kubectl apply -f LimitRang-demo.yaml
    namespace/myns unchanged
    The LimitRange "cpu-limit-range" is invalid: spec.limits[0].maxLimitRequestRatio[memory]: Invalid value: resource.Quantity{i:resource.int64Amount{value:4, scale:0}, d:resource.infDecAmount{Dec:(*inf.Dec)(nil)}, s:"4", Format:"DecimalSI"}: ratio 4 is greater than max/min = 3.814697
    [root@master01 ~]#

Note: a maxLimitRequestRatio set in the manifest is checked against max/min, so its value must not exceed that quotient.
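To see how mixed units can push max/min below the intended ratio, here is a small arithmetic sketch. The helper names `parse_quantity` and `max_over_min` are hypothetical, and the parser handles only a handful of suffixes. The pair `2G` and `500Mi` is an assumed combination that happens to reproduce the 3.814697 in the message above; the source does not show the manifest that actually triggered the error.

```python
# Multipliers for a few common quantity suffixes (binary and decimal).
SUFFIXES = {
    "Ki": 1024, "Mi": 1024 ** 2, "Gi": 1024 ** 3,
    "k": 1000, "M": 1000 ** 2, "G": 1000 ** 3,
}


def parse_quantity(text: str) -> float:
    """Convert a quantity such as '500Mi', '2G' or '500m' to a plain number."""
    # Two-letter binary suffixes are checked before one-letter decimal ones.
    for suffix in ("Ki", "Mi", "Gi", "k", "M", "G"):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) * SUFFIXES[suffix]
    if text.endswith("m"):  # milli, e.g. 500m CPU
        return float(text[:-1]) / 1000
    return float(text)


def max_over_min(maximum: str, minimum: str) -> float:
    """The quotient that a maxLimitRequestRatio value must not exceed."""
    return parse_quantity(maximum) / parse_quantity(minimum)
```

With matching units, `max_over_min("2000Mi", "500Mi")` is exactly 4, so a ratio of 4 is accepted. With `max_over_min("2G", "500Mi")` the quotient drops to about 3.8147, and a ratio of 4 is too large.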

Verification: create a pod in the myns namespace without any resource settings and see whether the LimitRange rule adds them automatically.

    [root@master01 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
    [root@master01 manifests]# kubectl apply -f pod-demo.yaml
    pod/nginx-pod-demo created
    [root@master01 manifests]# kubectl get pods -n myns
    NAME             READY   STATUS    RESTARTS   AGE
    nginx-pod-demo   1/1     Running   0          15s

    [root@master01 manifests]# kubectl describe pod nginx-pod-demo -n myns
    Name:         nginx-pod-demo
    Namespace:    myns
    Priority:     0
    Node:         node01.k8s.org/192.168.0.44
    Start Time:   Fri, 01 Jan 2021 16:12:53 +0800
    Labels:       <none>
    Annotations:  kubernetes.io/limit-ranger: LimitRanger plugin set: cpu, memory request for container nginx; cpu, memory limit for container nginx
    Status:       Running
    IP:           10.244.1.108
    IPs:
      IP:  10.244.1.108
    Containers:
      nginx:
        Container ID:   docker://4d566a239fe3f80e823352ff2eb0f4bc9e5ca1c107c62d87a5035e2d70e7d8f2
        Image:          nginx:1.14-alpine
        Image ID:       docker-pullable://nginx@sha256:485b610fefec7ff6c463ced9623314a04ed67e3945b9c08d7e53a47f6d108dc7
        Port:           <none>
        Host Port:      <none>
        State:          Running
          Started:      Fri, 01 Jan 2021 16:12:54 +0800
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     1
          memory:  1000Mi
        Requests:
          cpu:        500m
          memory:     500Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-n6tg5 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-n6tg5:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-n6tg5
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  33s   default-scheduler  Successfully assigned myns/nginx-pod-demo to node01.k8s.org
      Normal  Pulled     32s   kubelet            Container image "nginx:1.14-alpine" already present on machine
      Normal  Created    32s   kubelet            Created container nginx
      Normal  Started    32s   kubelet            Started container nginx
    [root@master01 manifests]#

Note: the pod we created in myns declared no container resources, yet after creation its container carries requests and limits, with exactly the values given in the default and defaultRequest fields of the LimitRange.

Verification: create a pod with a CPU request of 200m and see whether it is admitted.

    [root@master01 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo2
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources:
          requests:
            cpu: 200m
    [root@master01 manifests]# kubectl apply -f pod-demo.yaml
    Error from server (Forbidden): error when creating "pod-demo.yaml": pods "nginx-pod-demo2" is forbidden: [minimum cpu usage per Container is 500m, but request is 200m, cpu max limit to request ratio per Container is 4, but provided ratio is 5.000000]
    [root@master01 manifests]#

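Note: the manifest sets the container's CPU request to 200m, and applying it is refused because the request violates the LimitRange: it is below the 500m minimum, and with the default 1-core limit filled in, the limit/request ratio is 5, above the allowed 4. In other words, a container's request may not be lower than the minimum in the LimitRange, or the pod is not admitted.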

Verification: create a pod with a CPU limit of 2500m and see whether it is admitted.

    [root@master01 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo2
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources:
          limits:
            cpu: 2500m
    [root@master01 manifests]# kubectl apply -f pod-demo.yaml
    Error from server (Forbidden): error when creating "pod-demo.yaml": pods "nginx-pod-demo2" is forbidden: maximum cpu usage per Container is 2, but limit is 2500m
    [root@master01 manifests]#

Note: when a container's limit is larger than the value of the max field in the LimitRange, the pod is not admitted.

Verification: give the container a CPU request or limit within the allowed range and see whether the pod is admitted.

    [root@master01 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo2
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources:
          requests:
            cpu: 700m
    [root@master01 manifests]# kubectl apply -f pod-demo.yaml
    pod/nginx-pod-demo2 created

    [root@master01 manifests]# kubectl describe pod nginx-pod-demo2 -n myns
    Name:         nginx-pod-demo2
    Namespace:    myns
    Priority:     0
    Node:         node03.k8s.org/192.168.0.46
    Start Time:   Fri, 01 Jan 2021 16:33:39 +0800
    Labels:       <none>
    Annotations:  kubernetes.io/limit-ranger: LimitRanger plugin set: memory request for container nginx; cpu, memory limit for container nginx
    Status:       Running
    IP:           10.244.3.119
    IPs:
      IP:  10.244.3.119
    Containers:
      nginx:
        Container ID:   docker://4a576eda79e0f2a377ca9d058ee6e64decf45223b720b93c3291eed9c2a920d1
        Image:          nginx:1.14-alpine
        Image ID:       docker-pullable://nginx@sha256:485b610fefec7ff6c463ced9623314a04ed67e3945b9c08d7e53a47f6d108dc7
        Port:           <none>
        Host Port:      <none>
        State:          Running
          Started:      Fri, 01 Jan 2021 16:33:40 +0800
        Ready:          True
        Restart Count:  0
        Limits:
          cpu:     1
          memory:  1000Mi
        Requests:
          cpu:        700m
          memory:     500Mi
        Environment:  <none>
        Mounts:
          /var/run/secrets/kubernetes.io/serviceaccount from default-token-n6tg5 (ro)
    Conditions:
      Type              Status
      Initialized       True
      Ready             True
      ContainersReady   True
      PodScheduled      True
    Volumes:
      default-token-n6tg5:
        Type:        Secret (a volume populated by a Secret)
        SecretName:  default-token-n6tg5
        Optional:    false
    QoS Class:       Burstable
    Node-Selectors:  <none>
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type    Reason     Age   From               Message
      ----    ------     ----  ----               -------
      Normal  Scheduled  23s   default-scheduler  Successfully assigned myns/nginx-pod-demo2 to node03.k8s.org
      Normal  Pulled     23s   kubelet            Container image "nginx:1.14-alpine" already present on machine
      Normal  Created    22s   kubelet            Created container nginx
      Normal  Started    22s   kubelet            Started container nginx
    [root@master01 manifests]#

Note: as the example shows, when only the request is given, the limit is filled in with the default value.

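The ResourceQuota admission controller

ResourceQuota is also a built-in admission controller and is enabled by default. Its main job is to restrict resource usage within a namespace: it prevents too many pods in one namespace from consuming too much of the cluster's resources. Put simply, it limits resource usage at the namespace level. LimitRanger constrains a single pod or the containers within it, whereas ResourceQuota constrains the namespace as a whole.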

Creating a ResourceQuota resource

    [root@master01 ~]# cat resourcequota-demo.yaml
    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: quota-demo
      namespace: myns
    spec:
      hard:
        pods: "5"
        requests.cpu: "5"
        requests.memory: 5Gi
        limits.cpu: "4"
        limits.memory: 10Gi
        count/deployments.apps: "5"
        count/deployments.extensions: "5"
        persistentvolumeclaims: "5"
    [root@master01 ~]#

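Note: a ResourceQuota has kind ResourceQuota in the core group, v1. spec.hard defines the resource restrictions for the namespace. pods caps the number of pods; requests.cpu caps the sum of the CPU requests of all pods; requests.memory caps the sum of memory requests; limits.cpu caps the sum of CPU limits; limits.memory caps the sum of memory limits; count/deployments.apps caps the number of deployments in the apps group, and count/deployments.extensions those in the extensions group; persistentvolumeclaims caps the number of PVCs. So in the myns namespace there may be at most 5 pods, CPU requests may total at most 5 cores and memory requests 5Gi, CPU limits may total at most 4 cores and memory limits 10Gi, there may be at most 5 deployments in each of the apps and extensions groups, and at most 5 PVCs. If creating a resource would break any one of these entries, the resource cannot be created in the namespace.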

Applying the manifest

    [root@master01 ~]# kubectl apply -f resourcequota-demo.yaml
    resourcequota/quota-demo created
    [root@master01 ~]# kubectl get resourcequota -n myns
    NAME         AGE   REQUEST   LIMIT
    quota-demo   33s   count/deployments.apps: 0/5, count/deployments.extensions: 0/5, persistentvolumeclaims: 0/5, pods: 2/5, requests.cpu: 1200m/5, requests.memory: 1000Mi/5Gi   limits.cpu: 2/4, limits.memory: 2000Mi/10Gi
    [root@master01 ~]# kubectl describe resourcequota quota-demo -n myns
    Name:                         quota-demo
    Namespace:                    myns
    Resource                      Used    Hard
    --------                      ----    ----
    count/deployments.apps        0       5
    count/deployments.extensions  0       5
    limits.cpu                    2       4
    limits.memory                 2000Mi  10Gi
    persistentvolumeclaims        0       5
    pods                          2       5
    requests.cpu                  1200m   5
    requests.memory               1000Mi  5Gi
    [root@master01 ~]#

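Note: once the manifest is applied, resource usage in the namespace can be read from the details of the resourcequota. The output above shows that the pods in myns currently account for 2 cores of CPU limits and 2000Mi of memory limits.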

Verification: create two pods in myns, each with a CPU limit of 2000m, and see whether they are admitted.

    [root@master01 manifests]# cat pod-demo.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo3
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources:
          limits:
            cpu: 2000m
    [root@master01 manifests]# kubectl apply -f pod-demo.yaml
    pod/nginx-pod-demo3 created
    [root@master01 manifests]# kubectl describe resourcequota quota-demo -n myns
    Name:                         quota-demo
    Namespace:                    myns
    Resource                      Used    Hard
    --------                      ----    ----
    count/deployments.apps        0       5
    count/deployments.extensions  0       5
    limits.cpu                    4       4
    limits.memory                 3000Mi  10Gi
    persistentvolumeclaims        0       5
    pods                          3       5
    requests.cpu                  3200m   5
    requests.memory               1500Mi  5Gi
    [root@master01 manifests]# cat pod-demo2.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo4
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
        resources:
          limits:
            cpu: 2000m
    [root@master01 manifests]# kubectl apply -f pod-demo2.yaml
    Error from server (Forbidden): error when creating "pod-demo2.yaml": pods "nginx-pod-demo4" is forbidden: exceeded quota: quota-demo, requested: limits.cpu=2,requests.cpu=2, used: limits.cpu=4,requests.cpu=3200m, limited: limits.cpu=4,requests.cpu=5
    [root@master01 manifests]#

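Note: the first pod was created successfully, but the second could not be created. After the first pod, the CPU limits of the pods in myns already added up to the limits.cpu value in the ResourceQuota. Creating another pod would push the total of CPU limits above limits.cpu, which violates the quota, so the second pod was rejected.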
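The check behind this rejection is simple bookkeeping: for each tracked resource, usage so far plus the new request must not exceed the hard cap. A minimal sketch, with a hypothetical `exceeded` helper and CPU values written as millicores (a simplification; it is not the quota controller's actual code):

```python
from typing import Dict, List


def exceeded(hard: Dict[str, int],
             used: Dict[str, int],
             requested: Dict[str, int]) -> List[str]:
    """Return the quota entries that the new object would push over the cap."""
    return sorted(
        name
        for name, amount in requested.items()
        if name in hard and used.get(name, 0) + amount > hard[name]
    )


# Caps from quota-demo; CPU in millicores, pods as a plain count.
HARD = {"limits.cpu": 4000, "requests.cpu": 5000, "pods": 5}
# One pod with a 2000m CPU limit (its request is shown as 2 in the error above).
NEW_POD = {"limits.cpu": 2000, "requests.cpu": 2000, "pods": 1}
```

With the usage shown before the first pod (`limits.cpu` 2000, `requests.cpu` 1200, 2 pods) the function returns an empty list. With the usage after it (4000, 3200, 3 pods) it returns both `limits.cpu` and `requests.cpu`, the same two entries listed in the error message.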

Example: using a ResourceQuota to limit storage resources in a namespace

    [root@master01 ~]# cat resourcequota-storage-demo.yaml
    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: quota-storage-demo
      namespace: default
    spec:
      hard:
        requests.storage: "5Gi"
        persistentvolumeclaims: "5"
        requests.ephemeral-storage: "1Gi"
        limits.ephemeral-storage: "2Gi"
    [root@master01 ~]#

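Note: requests.storage caps the total storage requested by all PVCs in the namespace; persistentvolumeclaims caps the number of PVCs; requests.ephemeral-storage caps the sum of local ephemeral storage requests, and limits.ephemeral-storage the sum of local ephemeral storage limits. This configuration means that in the default namespace PVCs may request at most 5Gi of storage in total, there may be at most 5 PVCs, and ephemeral storage requests and limits may total at most 1Gi and 2Gi respectively.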

Applying the manifest

    [root@master01 ~]# kubectl apply -f resourcequota-storage-demo.yaml
    resourcequota/quota-storage-demo created
    [root@master01 ~]# kubectl get resourcequota
    NAME                 AGE   REQUEST   LIMIT
    quota-storage-demo   7s    persistentvolumeclaims: 3/5, requests.ephemeral-storage: 0/1Gi, requests.storage: 3Gi/5Gi   limits.ephemeral-storage: 0/2Gi
    [root@master01 ~]# kubectl describe resourcequota quota-storage-demo
    Name:                       quota-storage-demo
    Namespace:                  default
    Resource                    Used  Hard
    --------                    ----  ----
    limits.ephemeral-storage    0     2Gi
    persistentvolumeclaims      3     5
    requests.ephemeral-storage  0     1Gi
    requests.storage            3Gi   5Gi
    [root@master01 ~]#

Verification: create 3 more PVCs in the default namespace and see whether they are admitted.

    [root@master01 ~]# cat pvc-v1-demo.yaml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-nfs-pv-v4
      namespace: default
    spec:
      accessModes:
      - ReadWriteMany
      volumeMode: Filesystem
      resources:
        requests:
          storage: 500Mi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-nfs-pv-v5
      namespace: default
    spec:
      accessModes:
      - ReadWriteMany
      volumeMode: Filesystem
      resources:
        requests:
          storage: 500Mi
    ---
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: pvc-nfs-pv-v6
      namespace: default
    spec:
      accessModes:
      - ReadWriteMany
      volumeMode: Filesystem
      resources:
        requests:
          storage: 500Mi
    [root@master01 ~]# kubectl apply -f pvc-v1-demo.yaml
    persistentvolumeclaim/pvc-nfs-pv-v4 created
    persistentvolumeclaim/pvc-nfs-pv-v5 created
    Error from server (Forbidden): error when creating "pvc-v1-demo.yaml": persistentvolumeclaims "pvc-nfs-pv-v6" is forbidden: exceeded quota: quota-storage-demo, requested: persistentvolumeclaims=1, used: persistentvolumeclaims=5, limited: persistentvolumeclaims=5
    [root@master01 ~]#

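Note: of the 3 PVCs created in the default namespace, the first two succeeded and the third was rejected. The namespace already held 3 PVCs, so after the first two the count reached the 5 allowed by the ResourceQuota, and the third was refused.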

Example: creating a ResourceQuota rule and restricting its scope

    [root@master01 ~]# cat resourcequota-scopes-demo.yaml
    apiVersion: v1
    kind: ResourceQuota
    metadata:
      name: quota-scopes-demo
      namespace: myns
    spec:
      hard:
        pods: "5"
      scopes: ["BestEffort"]
    [root@master01 ~]#

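Note: the scopes field restricts which objects a ResourceQuota applies to. It is a list; when omitted, the quota covers all pods. BestEffort matches pods whose QoS (quality of service) class is BestEffort; NotBestEffort matches pods whose QoS class is not BestEffort; Terminating matches pods that set spec.activeDeadlineSeconds, and NotTerminating matches pods that do not set it. The manifest above therefore applies only to BestEffort pods, and pods of other classes are not counted against it. In effect, at most 5 BestEffort pods can be created in the namespace.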
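How a pod ends up in or out of a BestEffort-scoped quota can be sketched as below. This is a deliberately simplified model of QoS classification: the helper names `qos_class` and `counted_by` are hypothetical, containers are plain dicts, quantities are compared as strings, and only the two BestEffort-related scopes are handled.

```python
from typing import Dict, List

Container = Dict[str, Dict[str, str]]


def qos_class(containers: List[Container]) -> str:
    """Classify a pod from its containers' requests and limits (simplified)."""
    if all(not c.get("requests") and not c.get("limits") for c in containers):
        return "BestEffort"
    for c in containers:
        limits = c.get("limits") or {}
        # Simplification: when requests are absent, treat them as equal to limits.
        requests = c.get("requests") or limits
        for resource in ("cpu", "memory"):
            if resource not in limits or requests.get(resource) != limits[resource]:
                return "Burstable"
    return "Guaranteed"


def counted_by(scopes: List[str], containers: List[Container]) -> bool:
    """True if a quota with these scopes would count the pod."""
    qos = qos_class(containers)
    for scope in scopes:
        if scope == "BestEffort" and qos != "BestEffort":
            return False
        if scope == "NotBestEffort" and qos == "BestEffort":
            return False
    return True
```

A container with 500m/500Mi requests and 1/1000Mi limits, like the ones shown earlier in myns, comes out as Burstable here, which agrees with the `QoS Class: Burstable` line in the `kubectl describe` output, so it would not be counted by a BestEffort-scoped quota.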

Applying the manifest

    [root@master01 ~]# kubectl apply -f resourcequota-scopes-demo.yaml
    resourcequota/quota-scopes-demo created
    [root@master01 ~]# kubectl get quota -n myns
    NAME                AGE   REQUEST     LIMIT
    quota-scopes-demo   12s   pods: 0/5
    [root@master01 ~]#

Note: a quota scoped to BestEffort can only restrict the pods resource; other resources are not supported under this scope.

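The PodSecurityPolicy admission controller

The PodSecurityPolicy admission controller sets security policies for pods, such as whether a pod may share the host's network namespace or run in privileged mode. PodSecurityPolicy was deprecated in Kubernetes v1.21 and removed in v1.25, so the examples below apply to older clusters such as the one used here.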

Example: defining a PSP admission rule

    [root@master01 psp]# cat psp-privileged.yaml
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: privileged
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: '*'
    spec:
      privileged: true
      allowPrivilegeEscalation: true
      allowedCapabilities:
      - '*'
      volumes:
      - '*'
      hostNetwork: true
      hostPorts:
      - min: 0
        max: 65535
      hostIPC: true
      hostPID: true
      runAsUser:
        rule: 'RunAsAny'
      seLinux:
        rule: 'RunAsAny'
      supplementalGroups:
        rule: 'RunAsAny'
      fsGroup:
        rule: 'RunAsAny'
    [root@master01 psp]#

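Note: PodSecurityPolicy is a standard k8s resource with kind PodSecurityPolicy and group/version policy/v1beta1. The spec field defines the security-related attributes allowed for pods. privileged controls whether pods may run in privileged mode; allowPrivilegeEscalation controls whether a container's process may gain more privileges than its parent process; allowedCapabilities lists the kernel capabilities that may be added, with '*' meaning all; volumes lists the volume types that may be used, with '*' meaning any supported type; hostNetwork controls whether pods may share the host's network namespace; hostPorts gives the range of host ports that may be used, with min and max as the lower and upper bounds; hostIPC and hostPID control whether pods may share the host's IPC and PID namespaces; runAsUser specifies which user the pod may run as, with RunAsAny allowing any user. The manifest above defines a privileged PSP. A policy like this should be applied to system-level pods; pods created by ordinary users should not have these permissions.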

Description of the PSP rule attributes

Example: defining an unprivileged PSP rule

    [root@master01 psp]# cat psp-restricted.yaml
    apiVersion: policy/v1beta1
    kind: PodSecurityPolicy
    metadata:
      name: restricted
      annotations:
        seccomp.security.alpha.kubernetes.io/allowedProfileNames: 'docker/default'
        seccomp.security.alpha.kubernetes.io/defaultProfileName: 'docker/default'
    spec:
      privileged: false
      allowPrivilegeEscalation: false
      requiredDropCapabilities:
      - ALL
      volumes:
      - 'configMap'
      - 'emptyDir'
      - 'projected'
      - 'secret'
      - 'downwardAPI'
      - 'persistentVolumeClaim'
      hostNetwork: false
      hostIPC: false
      hostPID: false
      runAsUser:
        rule: 'MustRunAsNonRoot'
      seLinux:
        rule: 'RunAsAny'
      supplementalGroups:
        rule: 'MustRunAs'
        ranges:
        - min: 1
          max: 65535
      fsGroup:
        rule: 'MustRunAs'
        ranges:
        - min: 1
          max: 65535
      readOnlyRootFilesystem: false
    [root@master01 psp]#

Note: with the PSP resources defined, we still need to bind them to different roles, so that different roles are granted the use of different PSPs.

Example: defining clusterroles that reference the different PSP resources

    [root@master01 psp]# cat clusterrole-with-psp.yaml
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: psp:restricted
    rules:
    - apiGroups: ['policy']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames:
      - restricted
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: psp:privileged
    rules:
    - apiGroups: ['policy']
      resources: ['podsecuritypolicies']
      verbs: ['use']
      resourceNames:
      - privileged
    [root@master01 psp]#

Note: the manifest above defines two clusterroles. psp:restricted grants use of the unprivileged PSP named restricted; psp:privileged grants use of the privileged PSP named privileged.

Example: creating clusterrolebindings for the relevant users and groups

    [root@master01 psp]# cat clusterrolebinding-with-psp.yaml
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: restricted-psp-user
    roleRef:
      kind: ClusterRole
      name: psp:restricted
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: Group
      apiGroup: rbac.authorization.k8s.io
      name: system:authenticated
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: privileged-psp-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: psp:privileged
    subjects:
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:masters
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:nodes
    - apiGroup: rbac.authorization.k8s.io
      kind: Group
      name: system:serviceaccounts:kube-system
    [root@master01 psp]#

Note: the manifest above creates two clusterrolebindings. The first binds every user that has authenticated to k8s (the system:authenticated group) to the psp:restricted role, so that authenticated users may use the unprivileged PSP. The second binds three groups: system:masters, system:nodes (the group kubelets belong to), and system:serviceaccounts:kube-system. These are system-level groups used by system pods and components, so they are bound to psp:privileged and may use the privileged PSP.
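The effect of these bindings on a pod that asks for hostNetwork can be modeled with a small lookup. This is a toy sketch, not the PSP admission code: the tables cover only a subset of the bound groups and only the hostNetwork attribute, and the names `GROUP_POLICIES`, `usable_policies`, and `admitted` are hypothetical.

```python
from typing import Dict, Iterable, Set

# Group -> PSP names the group may "use" (a subset of the bindings above).
GROUP_POLICIES: Dict[str, Set[str]] = {
    "system:authenticated": {"restricted"},
    "system:masters": {"privileged"},
    "system:serviceaccounts:kube-system": {"privileged"},
}

# Only the hostNetwork attribute of each policy is modeled here.
ALLOWS_HOST_NETWORK: Dict[str, bool] = {"restricted": False, "privileged": True}


def usable_policies(groups: Iterable[str]) -> Set[str]:
    """Collect every policy that any of the user's groups may use."""
    names: Set[str] = set()
    for group in groups:
        names |= GROUP_POLICIES.get(group, set())
    return names


def admitted(groups: Iterable[str], host_network: bool) -> bool:
    """A pod is admitted if at least one usable policy allows it."""
    return any(
        ALLOWS_HOST_NETWORK[name] or not host_network
        for name in usable_policies(groups)
    )
```

A user whose only relevant group is system:authenticated can use just the restricted policy, so a hostNetwork pod is refused, while a member of system:masters can fall back on the privileged policy. A caller with no usable policy at all is refused everything, which is why the policies and bindings must exist before the controller is enabled.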

Applying the manifests above

    [root@master01 psp]# kubectl apply -f psp-privileged.yaml
    podsecuritypolicy.policy/privileged created
    [root@master01 psp]# kubectl apply -f psp-restricted.yaml
    podsecuritypolicy.policy/restricted created
    [root@master01 psp]# kubectl apply -f clusterrole-with-psp.yaml
    clusterrole.rbac.authorization.k8s.io/psp:restricted created
    clusterrole.rbac.authorization.k8s.io/psp:privileged created
    [root@master01 psp]# kubectl apply -f clusterrolebinding-with-psp.yaml
    clusterrolebinding.rbac.authorization.k8s.io/restricted-psp-user created
    clusterrolebinding.rbac.authorization.k8s.io/privileged-psp-user created
    [root@master01 psp]#

Note: apply the resources in order: first the PSP resources, then the clusterroles, and finally the clusterrolebindings.

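Enabling the PSP admission controller

Note: edit /etc/kubernetes/manifests/kube-apiserver.yaml, find the --enable-admission-plugins option, and append PodSecurityPolicy to the end of its value, separated by a comma. Save and exit; the kubelet recreates the apiserver static pod when this manifest changes, and the PSP admission controller is then enabled.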
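If the edit is scripted rather than done by hand, the string manipulation on the flag line might look like the sketch below. `enable_plugin` is a hypothetical helper that works on a single line of text; reading and writing the manifest file, and any backup of it, are left out.

```python
def enable_plugin(line: str, plugin: str) -> str:
    """Append a plugin to an --enable-admission-plugins line if it is missing."""
    flag = "--enable-admission-plugins="
    head, found, value = line.partition(flag)
    if not found:
        return line  # not the flag line, leave it untouched
    plugins = [name for name in value.strip().split(",") if name]
    if plugin not in plugins:
        plugins.append(plugin)
    return head + flag + ",".join(plugins)
```

For example, `enable_plugin("    - --enable-admission-plugins=NodeRestriction", "PodSecurityPolicy")` yields the same line with `,PodSecurityPolicy` appended, and calling it a second time changes nothing.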

Verification: make the user tom an administrator of the myns namespace, then use tom's kubeconfig to create a pod in myns that sets hostNetwork: true to share the host's network namespace, and see whether the pod can be created.

Making tom an administrator of the myns namespace

    [root@master01 ~]# cat tom-rolebinding-myns-admin.yaml
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: tom-myns-admin
      namespace: myns
    roleRef:
      kind: ClusterRole
      name: admin
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: User
      apiGroup: rbac.authorization.k8s.io
      name: tom
    [root@master01 ~]# kubectl apply -f tom-rolebinding-myns-admin.yaml
    rolebinding.rbac.authorization.k8s.io/tom-myns-admin created
    [root@master01 ~]# kubectl get rolebinding -n myns
    NAME             ROLE                AGE
    tom-myns-admin   ClusterRole/admin   10s
    [root@master01 ~]#

Verifying that tom's certificate is not in the system:masters group

[root@master01 ~]# cat /tmp/myk8s.config |grep client-certificate-data|awk {'print $2'}|base64 -d|openssl x509 -text -noout

Certificate:

Data:

Version: 1 (0x0)

Serial Number:

f4:39:a9:5d:2f:01:09:2b

Signature Algorithm: sha256WithRSAEncryption

Issuer: CN=kubernetes

Validity

Not Before: Dec 29 16:29:59 2020 GMT

Not After : Dec 29 16:29:59 2021 GMT

Subject: CN=tom, O=myuser

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

00:b7:02:e8:0d:ca:d5:3f:36:42:20:5c:16:6c:dd:

2a:be:53:f3:b7:14:8e:ca:9f:f4:45:4a:61:97:df:

3c:e8:c2:cd:2f:d8:80:85:29:3d:87:b1:9b:5e:9b:

dd:60:0b:5f:60:46:8f:71:8b:49:1a:a6:48:94:f8:

15:0c:f2:98:3c:ab:3a:7c:28:c4:64:76:bf:03:90:

53:f7:2b:6d:18:b5:9b:53:d2:7b:e1:9e:56:bd:c6:

41:a7:99:0a:20:d9:d3:1b:f2:3d:f8:84:bb:8a:22:

c7:66:1f:8e:7a:ee:e7:06:27:90:06:ce:23:69:eb:

c7:42:69:13:d3:bd:2a:c2:5f:bd:1d:2c:0a:19:ca:

f4:d6:a2:d4:47:73:bb:4e:5a:01:75:37:ba:2f:2b:

78:5f:70:3b:ce:5b:46:25:fb:c8:3f:8a:7b:15:ea:

85:aa:b0:b9:28:85:1a:fd:4a:7e:f2:92:40:bd:00:

2a:6c:08:84:eb:7b:dc:5b:e0:13:71:d3:af:75:e3:

6a:23:e1:a5:78:a2:03:ba:bf:e6:1b:bb:37:cc:11:

aa:aa:d2:66:10:22:8f:31:a3:4d:f8:79:d2:05:d7:

c9:9a:8c:ce:59:7c:30:7e:f1:2d:9a:4a:53:94:cc:

83:47:91:ea:6d:4f:01:9c:c9:3d:c6:9d:85:e0:41:

5c:ff

Exponent: 65537 (0x10001)

Signature Algorithm: sha256WithRSAEncryption

41:47:71:e6:60:70:5b:b0:5f:9c:47:2d:05:07:fd:93:6d:1b:

16:c3:fd:c8:d4:2e:45:b3:fd:d0:4c:e7:19:b4:80:86:ae:8f:

01:5b:26:f7:01:00:3a:e0:0f:b7:ce:6e:0a:a2:e2:84:2c:86:

cd:d8:cb:42:3a:c6:bd:b0:50:72:2e:35:fb:02:5e:78:0c:ce:

fa:3d:28:bc:96:63:d0:83:30:93:6f:59:4d:94:27:8d:ea:5c:

1e:19:1b:35:29:87:cf:76:3b:60:4d:8d:f2:b7:37:9a:5a:b6:

7c:58:ae:dd:f0:7a:fd:de:b9:9f:77:bb:fb:9c:42:d8:50:bc:

2f:50:5c:9b:56:4b:90:89:14:c9:52:6d:64:59:dd:3f:53:b1:

e4:32:91:d5:98:fb:83:fa:78:23:45:0f:53:92:f0:1a:58:81:

03:f3:a3:b4:0a:83:d3:7c:ef:04:e8:ee:27:df:e8:4f:68:dc:

df:46:ef:6b:45:7b:c0:bb:55:fd:82:c6:d9:3b:66:26:14:4a:

fe:79:7d:a1:24:43:ba:20:19:6b:b3:d8:0f:2f:30:2b:d3:22:

e6:f9:a9:88:38:98:7b:d6:c4:41:17:62:8d:05:6e:1f:c3:e2:

44:dc:35:a2:7f:ed:70:2f:68:75:50:61:74:41:d2:86:dd:75:

18:21:a1:c9

[root@master01 ~]#

Note: the certificate shows O=myuser for tom, not system:masters.
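With client-certificate authentication, the CN of the subject becomes the user name and each O becomes a group. Pulling those out of a Subject line as printed by openssl can be sketched as follows; `parse_subject` is a hypothetical helper that assumes the simple comma-separated form shown in the output and does not handle escaped commas.

```python
from typing import List, Optional, Tuple


def parse_subject(subject: str) -> Tuple[Optional[str], List[str]]:
    """Split 'CN=..., O=...' into (user name, list of groups)."""
    user: Optional[str] = None
    groups: List[str] = []
    for part in subject.split(","):
        key, _, value = part.strip().partition("=")
        if key == "CN":
            user = value
        elif key == "O":
            groups.append(value)
    return user, groups
```

Applied to `CN=tom, O=myuser` this gives the user `tom` with the single group `myuser`, so nothing in the certificate places tom in system:masters.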

Creating a pod in the myns namespace with tom's kubeconfig

    [root@master01 manifests]# cat pod-demo3.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo3
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
      hostNetwork: true
    [root@master01 manifests]# kubectl apply -f pod-demo3.yaml --kubeconfig=/tmp/myk8s.config
    Error from server (Forbidden): error when creating "pod-demo3.yaml": pods "nginx-pod-demo3" is forbidden: PodSecurityPolicy: unable to admit pod: [spec.securityContext.hostNetwork: Invalid value: true: Host network is not allowed to be used]
    [root@master01 manifests]#

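Note: tom's attempt to create a pod in myns that shares the host's network namespace was rejected by the apiserver. We have just enabled the PSP admission controller, and under the policies and bindings above, a user outside the system:masters group, the node group, and the system:serviceaccounts:kube-system group may use only the restricted policy, which does not allow pods to share the host's namespaces.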

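Can a user in the system:masters group create a pod that shares the host's network namespace?

Verification: inspect the certificate used by kubectl and check whether its group is system:masters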

[root@master01 ~]# cat /etc/kubernetes/admin.conf |grep client-certificate-data|awk {'print $2'}|base64 -d |openssl x509 -text -noout

Certificate:

Data:

Version: 3 (0x2)

Serial Number: 886870692518705366 (0xc4ecc322d4838d6)

Signature Algorithm: sha256WithRSAEncryption

Issuer: CN=kubernetes

Validity

Not Before: Dec 8 06:38:54 2020 GMT

Not After : Dec 8 06:38:56 2021 GMT

Subject: O=system:masters, CN=kubernetes-admin

Subject Public Key Info:

Public Key Algorithm: rsaEncryption

Public-Key: (2048 bit)

Modulus:

00:c0:4f:cc:45:9b:4a:19:00:a4:af:68:13:f4:39:

b0:4c:a8:67:85:af:b3:f7:04:7e:de:16:4b:62:2c:

d4:e8:c8:b3:26:39:d2:9a:8e:00:71:ef:82:ff:d3:

ad:1a:89:f2:9b:2a:7b:84:c5:5c:85:5a:d0:c5:6e:

89:9d:96:e7:ec:db:92:50:f7:4f:a1:d8:14:2e:48:

33:de:05:48:f1:aa:36:d1:d3:9c:bf:6d:b9:6b:75:

ce:66:a5:72:52:6c:bb:6c:2b:96:98:da:e1:99:1b:

d4:51:3d:5d:d4:fa:76:d9:18:c7:d2:37:95:ad:3c:

e7:af:87:21:75:1b:96:bb:64:51:f5:ae:44:ba:43:

e1:d5:5d:39:57:a1:f0:04:e5:39:6c:af:8c:a6:7e:

eb:4f:98:5d:07:ce:da:89:91:08:34:db:67:0b:09:

0c:59:3b:16:b0:13:f7:13:b8:fb:6f:54:d1:c9:e5:

ce:27:a6:09:af:cc:9d:b5:1e:0a:9c:b4:d2:64:76:

cd:35:67:9e:b5:a6:ba:d8:44:e9:c9:e8:0d:fb:c7:

00:06:4a:ce:72:67:a7:0e:56:57:8c:75:2a:c7:0f:

bb:4a:d9:0c:ec:a1:27:3a:ce:92:13:e4:bf:d1:31:

c8:be:20:58:a0:d6:43:f7:21:8a:cb:e3:fe:5e:1d:

d9:2d

Exponent: 65537 (0x10001)

X509v3 extensions:

X509v3 Key Usage: critical

Digital Signature, Key Encipherment

X509v3 Extended Key Usage:

TLS Web Client Authentication

X509v3 Authority Key Identifier:

keyid:BE:53:44:7A:0F:3D:B0:99:37:F3:D3:52:BE:C1:75:E6:EF:81:DD:13

Signature Algorithm: sha256WithRSAEncryption

35:ea:55:af:57:62:46:26:e9:60:59:82:c2:52:55:45:23:f3:

f9:2a:2a:78:28:6c:26:6a:32:c9:df:75:17:ca:44:0f:7f:2a:

ae:a5:7b:e5:d0:99:3e:97:27:1d:13:a9:5f:d7:09:29:c8:d9:

68:a6:c1:c5:e3:28:14:6e:0f:c8:32:4b:06:8a:6b:fe:ba:ce:

e4:59:b6:70:d4:11:71:cf:e9:c2:dc:da:86:9c:12:82:82:58:

78:83:32:ac:ff:99:6e:1f:07:e0:9d:02:86:dc:e2:e4:30:a1:

36:f1:43:cb:a1:13:1c:27:87:19:89:15:38:25:0a:29:dd:66:

6b:ed:7e:8c:fe:95:8e:10:77:5f:70:47:98:a0:37:4f:9e:57:

6a:66:35:9c:dc:64:f5:1a:01:cd:45:6e:01:bc:15:6f:6f:cd:

f6:51:f4:8e:28:14:77:9e:50:42:42:e2:a8:42:76:b5:f9:c8:

87:bb:a5:3e:64:ce:1c:88:6d:31:99:53:c6:8f:88:f1:72:7c:

5a:d6:dc:fe:7e:fa:26:d2:e0:f3:b8:47:d5:8b:c7:b2:88:80:

16:53:38:31:96:19:9a:73:98:c8:c3:30:13:23:71:b7:1d:d4:

c9:00:c0:b0:99:bf:24:f3:cf:c6:76:27:d2:6e:3a:5f:fc:5c:

55:25:98:e2

[root@master01 ~]#

Note: the certificate shows O=system:masters, so when kubectl loads /etc/kubernetes/admin.conf, the apiserver recognizes the client as a member of the system:masters group.

Using kubectl with /etc/kubernetes/admin.conf to create a pod that shares the host's network namespace

    [root@master01 manifests]# cat pod-demo3.yaml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nginx-pod-demo3
      namespace: myns
    spec:
      containers:
      - image: nginx:1.14-alpine
        imagePullPolicy: IfNotPresent
        name: nginx
      hostNetwork: true
    [root@master01 manifests]# kubectl apply -f pod-demo3.yaml --kubeconfig=/etc/kubernetes/admin.conf
    pod/nginx-pod-demo3 created
    [root@master01 manifests]# kubectl get pod -n myns --kubeconfig=/etc/kubernetes/admin.conf
    NAME              READY   STATUS    RESTARTS   AGE
    nginx-pod-demo3   1/1     Running   0          20s
    [root@master01 manifests]#

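Note: with the certificate of a user in the system:masters group, the pod sharing the host's network namespace is created in myns without problems. The examples above show that the PSP rules are taking effect.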
