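High-Availability Services: Getting Started with Keepalived

Previously we covered the concepts behind the corosync+pacemaker high-availability cluster and how to use the related tools; for a recap, see https://www.cnblogs.com/qiuhom-1874/category/1838133.html. Today we look at the high-availability service keepalived.

Compared with a corosync+pacemaker high-availability cluster, keepalived is much more lightweight. It works by implementing VRRP (Virtual Router Redundancy Protocol). It was originally designed to provide high availability for LVS clusters, and it can also health-check the real servers behind LVS. Its main functions are: moving addresses between nodes over VRRP to achieve service failover; generating ipvs rules on the node that holds the VIP; health-checking each RS in the ipvs cluster; and, through a script-invocation interface, running scripts whose actions can in turn influence cluster behavior.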

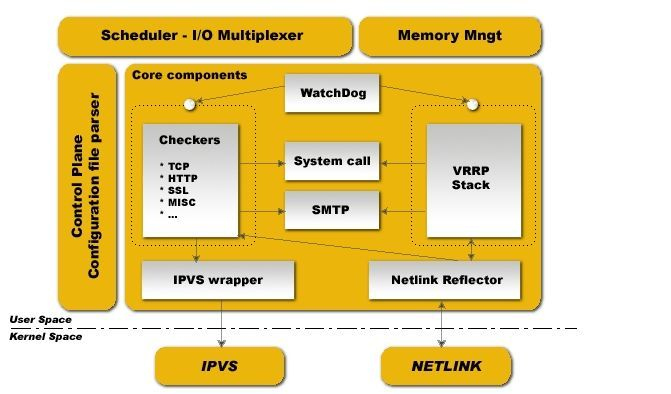

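keepalived architecture

Note: keepalived consists mainly of the vrrp stack, the checkers, the ipvs wrapper, and the control components (configuration file parser, I/O multiplexer, memory management). The vrrp stack provides high availability for the VIP; the checkers probe the backend services over different protocols. Both rely on system calls and SMTP: system calls to move the VIP and to check the VIP and the backend services, SMTP to send email notifications after a failover. The ipvs wrapper generates the ipvs rules. The core components, the vrrp stack and the checkers, are monitored continuously by a watchdog process; if either of them dies, the watchdog immediately starts a new one, which keeps keepalived's own components highly available.

Setting up keepalived

Environment

Prepare two keepalived servers. Their clocks must be synchronized, and iptables and SELinux should both be disabled. If needed, you can also configure name resolution between the nodes through the hosts file and SSH mutual trust between them; neither hostname resolution nor SSH trust is strictly required.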

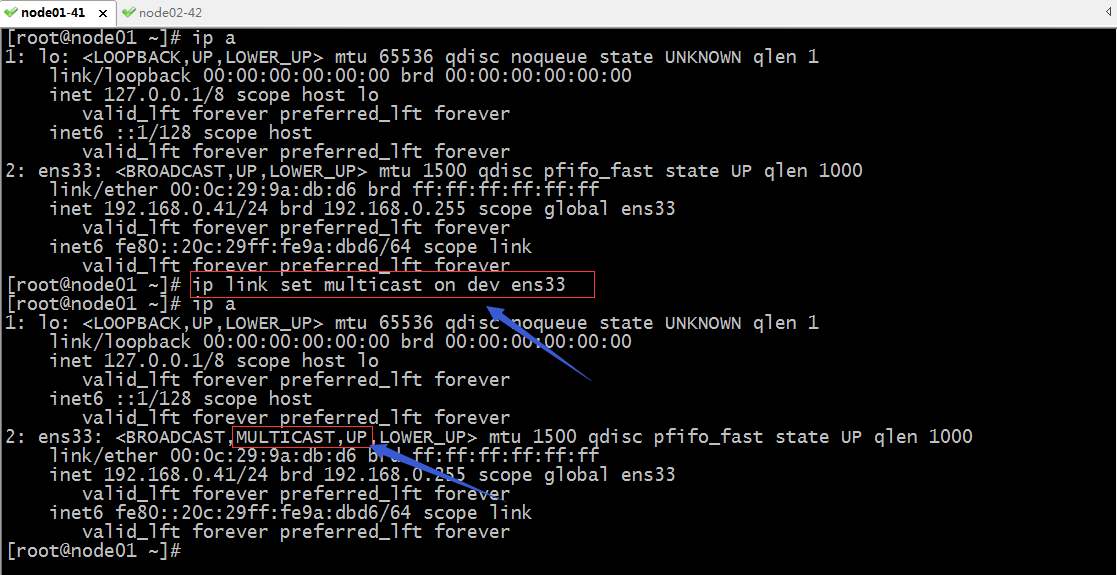

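Note: for SSH mutual trust, see my blog post https://www.cnblogs.com/qiuhom-1874/p/11783371.html. Besides the points above, also make sure the NIC supports multicast.

Note: if multicast is not enabled on the NIC, enable it with ip link set multicast on dev <NIC name>.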
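As a rough sketch of how the multicast check could be scripted, the helper below looks for the MULTICAST flag in the output of `ip link show`. The function name `has_multicast` is made up for illustration, and `ens33` is simply the interface name used in the examples later in this post; adjust it to your own NIC.

```shell
#!/bin/sh
# has_multicast (hypothetical helper): succeed if the interface flags in
# `ip link show` output, read from stdin, include MULTICAST.
# Flags are printed between angle brackets, e.g. <BROADCAST,MULTICAST,UP,LOWER_UP>.
has_multicast() {
    grep -Eq '[<,]MULTICAST[,>]'
}

# Usage on a real node (run as root; ens33 is an assumed interface name):
#   ip link show dev ens33 | has_multicast || ip link set multicast on dev ens33
```

Reading the flags from stdin keeps the helper free of side effects, so it can be tried on saved command output before it is used on a live node.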

Installing the keepalived package

    yum install keepalived -y

Note: install it on both nodes.

Listing the files installed by keepalived

    [root@node01 ~]# rpm -ql keepalived
    /etc/keepalived
    /etc/keepalived/keepalived.conf
    /etc/sysconfig/keepalived
    /usr/bin/genhash
    /usr/lib/systemd/system/keepalived.service
    /usr/libexec/keepalived
    /usr/sbin/keepalived
    /usr/share/doc/keepalived-1.3.5
    /usr/share/doc/keepalived-1.3.5/AUTHOR
    /usr/share/doc/keepalived-1.3.5/CONTRIBUTORS
    /usr/share/doc/keepalived-1.3.5/COPYING
    /usr/share/doc/keepalived-1.3.5/ChangeLog
    /usr/share/doc/keepalived-1.3.5/NOTE_vrrp_vmac.txt
    /usr/share/doc/keepalived-1.3.5/README
    /usr/share/doc/keepalived-1.3.5/TODO
    /usr/share/doc/keepalived-1.3.5/keepalived.conf.SYNOPSIS
    /usr/share/doc/keepalived-1.3.5/samples
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.HTTP_GET.port
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.IPv6
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.SMTP_CHECK
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.SSL_GET
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.fwmark
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.inhibit
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.misc_check
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.misc_check_arg
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.quorum
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.sample
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.status_code
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.track_interface
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.virtual_server_group
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.virtualhost
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.localcheck
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.lvs_syncd
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.routes
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.rules
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.scripts
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.static_ipaddress
    /usr/share/doc/keepalived-1.3.5/samples/keepalived.conf.vrrp.sync
    /usr/share/doc/keepalived-1.3.5/samples/sample.misccheck.smbcheck.sh
    /usr/share/man/man1/genhash.1.gz
    /usr/share/man/man5/keepalived.conf.5.gz
    /usr/share/man/man8/keepalived.8.gz
    /usr/share/snmp/mibs/KEEPALIVED-MIB.txt
    /usr/share/snmp/mibs/VRRP-MIB.txt
    /usr/share/snmp/mibs/VRRPv3-MIB.txt
    [root@node01 ~]#

Note: the main configuration file is /etc/keepalived/keepalived.conf; the main program is /usr/sbin/keepalived; the unit file is /usr/lib/systemd/system/keepalived.service; the environment file read by the unit is /etc/sysconfig/keepalived.

The default keepalived configuration

    [root@node01 ~]# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived

    global_defs {
        notification_email {
            acassen@firewall.loc
            failover@firewall.loc
            sysadmin@firewall.loc
        }
        notification_email_from Alexandre.Cassen@firewall.loc
        smtp_server 192.168.200.1
        smtp_connect_timeout 30
        router_id LVS_DEVEL
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.200.16
            192.168.200.17
            192.168.200.18
        }
    }

    virtual_server 192.168.200.100 443 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        real_server 192.168.201.100 443 {
            weight 1
            SSL_GET {
                url {
                    path /
                    digest ff20ad2481f97b1754ef3e12ecd3a9cc
                }
                url {
                    path /mrtg/
                    digest 9b3a0c85a887a256d6939da88aabd8cd
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

    virtual_server 10.10.10.2 1358 {
        delay_loop 6
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        sorry_server 192.168.200.200 1358

        real_server 192.168.200.2 1358 {
            weight 1
            HTTP_GET {
                url {
                    path /testurl/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl2/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl3/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.200.3 1358 {
            weight 1
            HTTP_GET {
                url {
                    path /testurl/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334c
                }
                url {
                    path /testurl2/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334c
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }

    virtual_server 10.10.10.3 1358 {
        delay_loop 3
        lb_algo rr
        lb_kind NAT
        persistence_timeout 50
        protocol TCP

        real_server 192.168.200.4 1358 {
            weight 1
            HTTP_GET {
                url {
                    path /testurl/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl2/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl3/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }

        real_server 192.168.200.5 1358 {
            weight 1
            HTTP_GET {
                url {
                    path /testurl/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl2/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                url {
                    path /testurl3/test.jsp
                    digest 640205b7b0fc66c1ea91c463fac6334d
                }
                connect_timeout 3
                nb_get_retry 3
                delay_before_retry 3
            }
        }
    }
    [root@node01 ~]#

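Note: the keepalived configuration file is made up of three sections: global configuration, vrrpd configuration, and LVS configuration. The global section defines global properties as well as static routes and addresses; the vrrp section defines VRRP instances and VRRP sync groups; the LVS section defines the IPVS cluster and the real servers behind LVS.

keepalived configuration reference

Common global directives

global_defs {...}: defines the global configuration section, which holds global properties and the email notification settings.

notification_email {...}: a sub-section of global_defs that lists the mailboxes notified when the cluster changes state.

notification_email_from: the sender address used for notification emails.

smtp_server: the address of the mail server.

smtp_connect_timeout: the connection timeout for the mail server.

router_id: the identifier of this cluster node; it should normally be unique, that is, different from the other nodes.

vrrp_skip_check_adv_addr: skip checking the addresses in a received VRRP advertisement when it comes from the same master as the previous advertisement.

vrrp_strict: enforce strict compliance with the VRRP protocol.

vrrp_garp_interval: the delay between gratuitous ARP messages sent on the same interface; the default 0 means no delay.

vrrp_gna_interval: the delay between unsolicited NA messages sent on the same interface; the default 0 means no delay.

vrrp_mcast_group4: the IPv4 multicast group address, 224.0.0.18 by default. A multicast address is a class D address, in the range 224.0.0.0-239.255.255.255.

vrrp_iptables: stops keepalived from generating iptables rules.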
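To make the class D range quoted for vrrp_mcast_group4 concrete, here is a minimal sketch that tests whether an address falls inside it. The helper name `is_multicast_v4` is hypothetical, and it inspects only the first octet, so it assumes its argument is already a well-formed dotted-quad address.

```shell
#!/bin/sh
# is_multicast_v4 (hypothetical helper): succeed if the first octet of the
# given IPv4 address lies in 224..239, i.e. the class D multicast range.
# It does not validate the remaining octets.
is_multicast_v4() {
    first=${1%%.*}                    # text before the first dot
    case $first in
        ''|*[!0-9]*) return 1 ;;      # empty or not a plain number
    esac
    [ "$first" -ge 224 ] && [ "$first" -le 239 ]
}

# Usage:
#   is_multicast_v4 224.0.12.132 && echo "usable as a multicast group"
```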

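Common vrrp instance directives

vrrp_instance: names a VRRP instance and opens its configuration block, which is enclosed in braces.

state: the role of this VRRP instance, normally MASTER or BACKUP. Among the instances on different nodes that share the same virtual router ID, only one should be MASTER, and it should have the highest priority; all others are BACKUP, with priorities lower than the MASTER's.

interface: the NIC used by the VRRP instance, that is, the interface on which the VIP is configured.

virtual_router_id: the virtual router ID; valid values are 1-255, as defined by the VRRP specification.

advert_int: the interval between heartbeat advertisements, 1 second by default.

priority: the priority of this instance.

authentication {...}: defines the authentication settings.

auth_type: the authentication type, PASS or AH. PASS is simple password authentication and AH is IPSEC authentication. With PASS, only the first 8 characters are used as the password.

auth_pass: the authentication password.

virtual_ipaddress {..}: the virtual IP address settings, enclosed in braces. A virtual IP is defined as <IPADDR>/<MASK> brd <IPADDR> dev <STRING> scope <SCOPE> label <LABEL>, where brd gives the broadcast address, dev the interface name, scope the address scope, and label the alias. Several virtual IPs can be configured, but an instance usually carries just one.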
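One practical consequence of the 8-character limit on PASS passwords is that two passwords sharing the same first 8 characters behave identically. The sketch below only mirrors that truncation for illustration; `effective_auth_pass` is a hypothetical helper, not part of keepalived, and it assumes plain ASCII passwords.

```shell
#!/bin/sh
# effective_auth_pass (hypothetical helper): print the part of a PASS password
# that is actually used, i.e. its first 8 characters.
effective_auth_pass() {
    printf '%s\n' "$1" | cut -c1-8
}

# Usage: compare two candidate passwords the way the 8-character limit would.
#   [ "$(effective_auth_pass 12345678abc)" = "$(effective_auth_pass 12345678xyz)" ] \
#       && echo "these two passwords are indistinguishable"
```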

Example: using keepalived on node01 and node02 to provide the VIP 192.168.0.33

Configuration on node01

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from node01_keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node01
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
        vrrp_mcast_group4 224.0.12.132
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 12345678
        }
        virtual_ipaddress {
            192.168.0.33/24 brd 192.168.0.255 dev ens33 label ens33:1
        }
    }

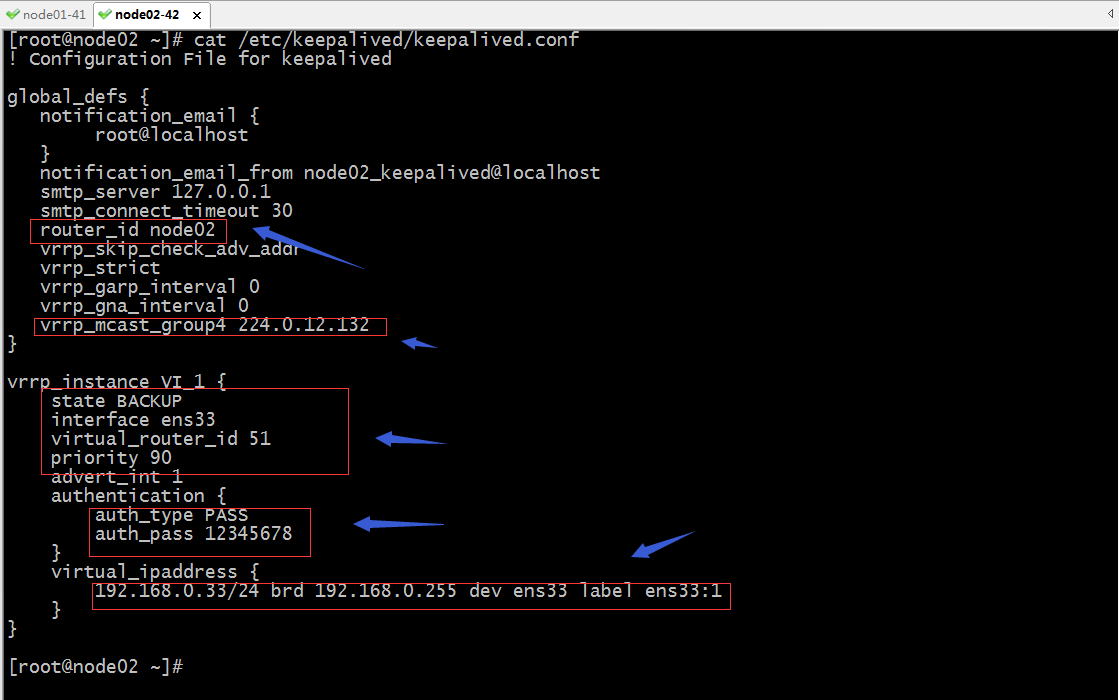

Configuration on node02

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from node02_keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node02
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
        vrrp_mcast_group4 224.0.12.132
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 12345678
        }
        virtual_ipaddress {
            192.168.0.33/24 brd 192.168.0.255 dev ens33 label ens33:1
        }
    }

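Starting keepalived on node01 and node02

Note: once keepalived is started on node01, the VIP is configured on the ens33 interface we specified.

Note: after keepalived is started on node02, the VIP is not taken away from node01, and the keepalived status on node02 clearly shows it running in the backup role. This means node02 can only take over the VIP once the master goes down.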
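The start-and-check step described above can be sketched roughly as follows. The helper `has_vip` is a made-up name; it only searches the one-line output of `ip -o -4 addr show` for the VIP. The `systemctl` call assumes a systemd-based system, which matches the unit file listed earlier.

```shell
#!/bin/sh
# has_vip VIP (hypothetical helper): succeed if VIP appears as an IPv4 address
# in `ip -o -4 addr show` output read from stdin.
has_vip() {
    grep -Fq "inet $1/"
}

# Usage on each node (run as root):
#   systemctl start keepalived
#   ip -o -4 addr show dev ens33 | has_vip 192.168.0.33 \
#       && echo "this node holds the VIP" \
#       || echo "this node does not hold the VIP"
```

With the configuration above, the check would be expected to succeed on node01 and fail on node02 while both nodes are healthy.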

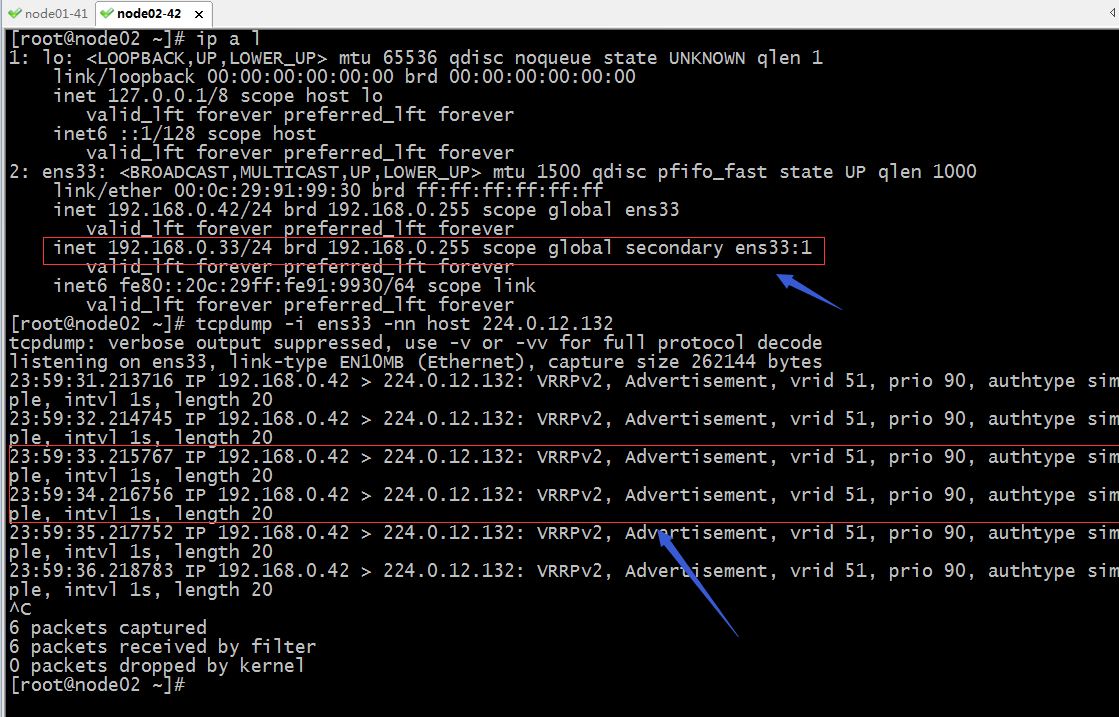

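Capture packets on node02: are the heartbeats really sent once per second, as configured, and to the multicast group we specified?

Note: node01 (the MASTER node) sends one advertisement per second to the configured multicast group. As long as the hosts in the multicast group keep receiving the MASTER's advertisements, they consider the MASTER alive; once the master stops advertising, the backup nodes start a new election for the VIP.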
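A capture like the one described here could be taken with tcpdump and summarized with a small filter. This is a sketch under assumptions: `adv_fields` is a hypothetical helper, and the line format it parses (`vrid N, prio N, ..., intvl Ns`) is what tcpdump usually prints for VRRPv2 advertisements, so check it against your own capture. The source address in the sample comment is invented, since the nodes' real addresses are not given in this post.

```shell
#!/bin/sh
# adv_fields (hypothetical helper): for each VRRP advertisement line on stdin,
# print "<vrid> <priority> <interval-in-seconds>". Other lines are ignored.
adv_fields() {
    sed -n 's/.*vrid \([0-9][0-9]*\), prio \([0-9][0-9]*\),.*intvl \([0-9][0-9]*\)s.*/\1 \2 \3/p'
}

# Assumed shape of one tcpdump line (192.168.0.41 is a made-up source address):
#   IP 192.168.0.41 > 224.0.12.132: VRRPv2, Advertisement, vrid 51, prio 100, authtype simple, intvl 1s, length 20
#
# Usage on node02 (run as root):
#   tcpdump -i ens33 -nn -l host 224.0.12.132 | adv_fields
```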

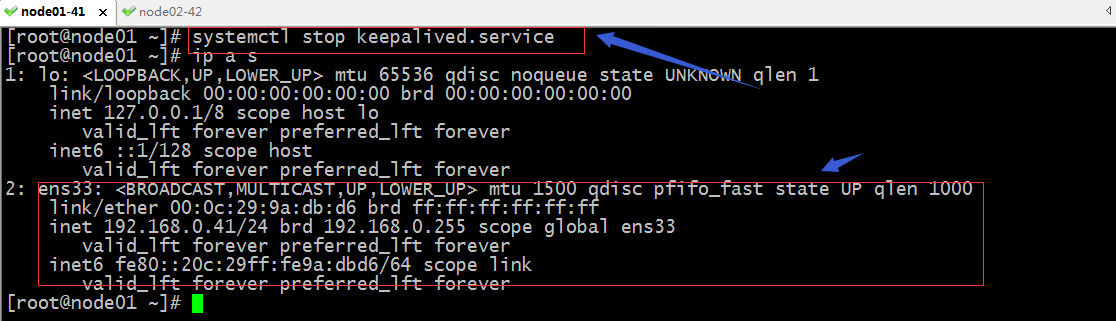

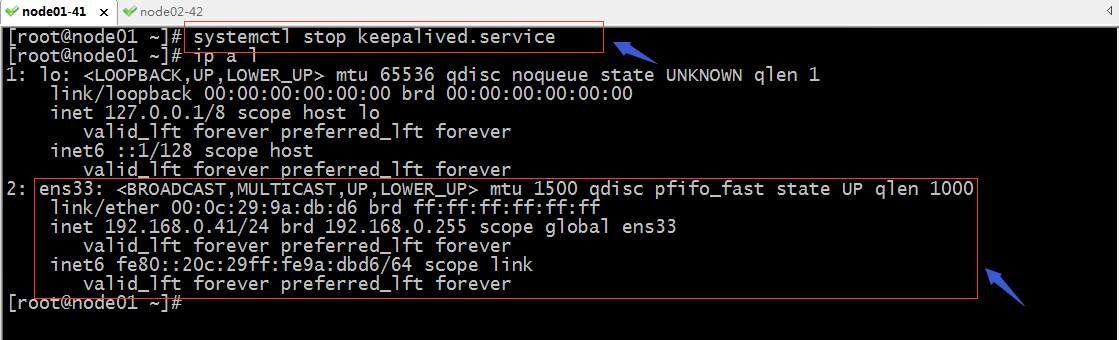

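Verify: stop keepalived on the master; does the VIP move to node02?

Note: after keepalived is stopped on node01, the VIP moves to node02, and node02 keeps advertising its vrid, priority, and related information to the multicast group.

Verify: start keepalived on node01 again; does node01 take the VIP back?

Note: we did not specify whether to run in preemptive mode, and the default is preemptive. This means that as soon as the multicast group carries an advertisement with a higher priority than that of the node currently holding the VIP, that node gives up the VIP so the higher-priority node can take it.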
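The failover and preemption behaviour seen in these two checks boils down to one rule: among the nodes currently advertising, the one with the highest priority ends up holding the VIP. The sketch below illustrates only that rule; `elect_master` is a hypothetical helper, and it ignores the tie-breaking and timing details of real VRRP.

```shell
#!/bin/sh
# elect_master (hypothetical helper): read "<node> <priority>" pairs, one per
# line, for the nodes that are currently alive, and print the node with the
# highest priority. On equal priorities the first line wins, which is a
# simplification and not how VRRP breaks ties.
elect_master() {
    awk 'NR == 1 || $2 + 0 > best { best = $2 + 0; name = $1 }
         END { if (NR) print name }'
}

# Usage, with the priorities from the example configuration:
#   printf 'node01 100\nnode02 90\n' | elect_master   # both nodes up
#   printf 'node02 90\n' | elect_master               # node01 stopped
```

Feeding in both nodes models the preemptive case: as soon as node01 advertises again with priority 100, it wins over node02's 90.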

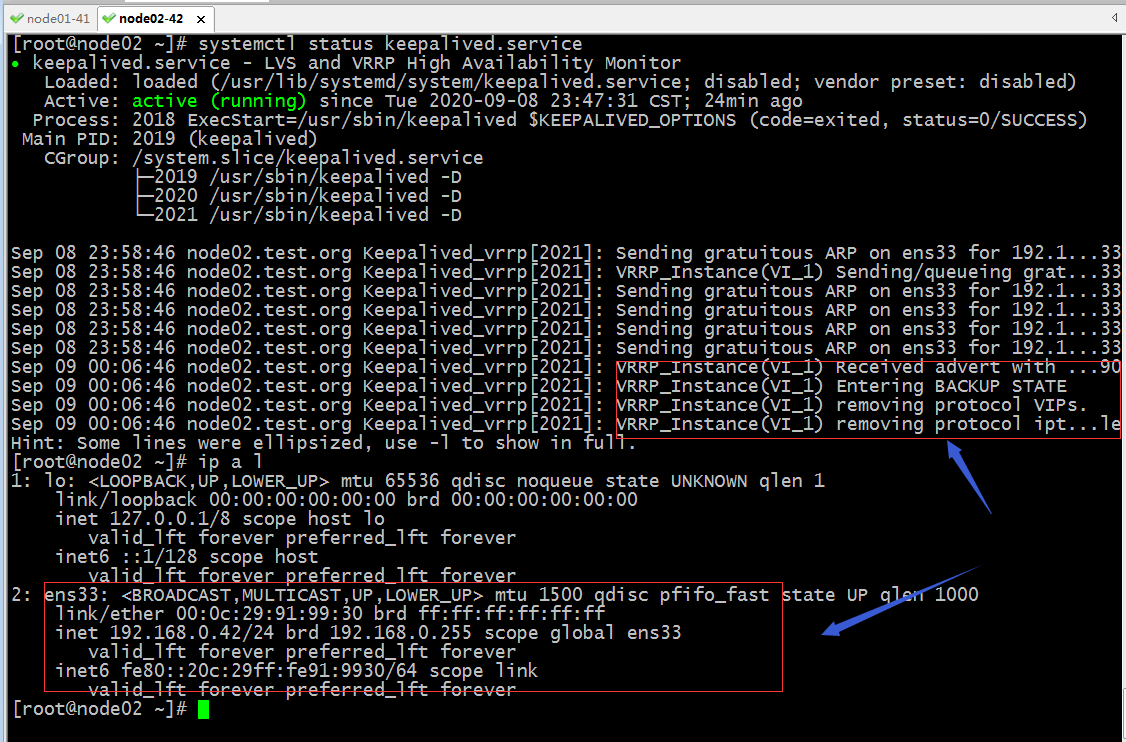

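Check the keepalived status and the IP addresses on node02

Note: the keepalived status on node02 shows that it received an advertisement with a higher priority, then removed the VIP and the iptables rules on its own and went back to the BACKUP role.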

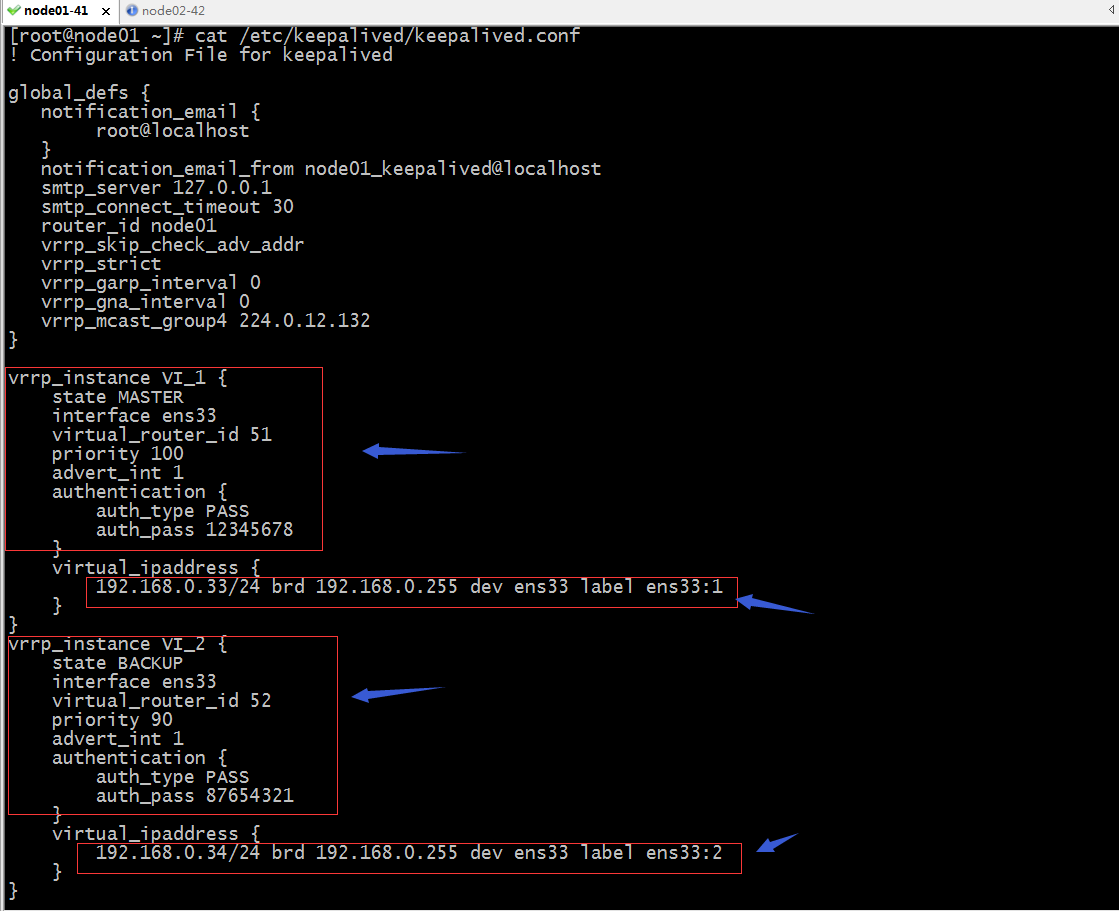

Example: configuring keepalived in a dual-master model

Configuration on node01

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from node01_keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node01
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
        vrrp_mcast_group4 224.0.12.132
    }

    vrrp_instance VI_1 {
        state MASTER
        interface ens33
        virtual_router_id 51
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 12345678
        }
        virtual_ipaddress {
            192.168.0.33/24 brd 192.168.0.255 dev ens33 label ens33:1
        }
    }

    vrrp_instance VI_2 {
        state BACKUP
        interface ens33
        virtual_router_id 52
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 87654321
        }
        virtual_ipaddress {
            192.168.0.34/24 brd 192.168.0.255 dev ens33 label ens33:2
        }
    }

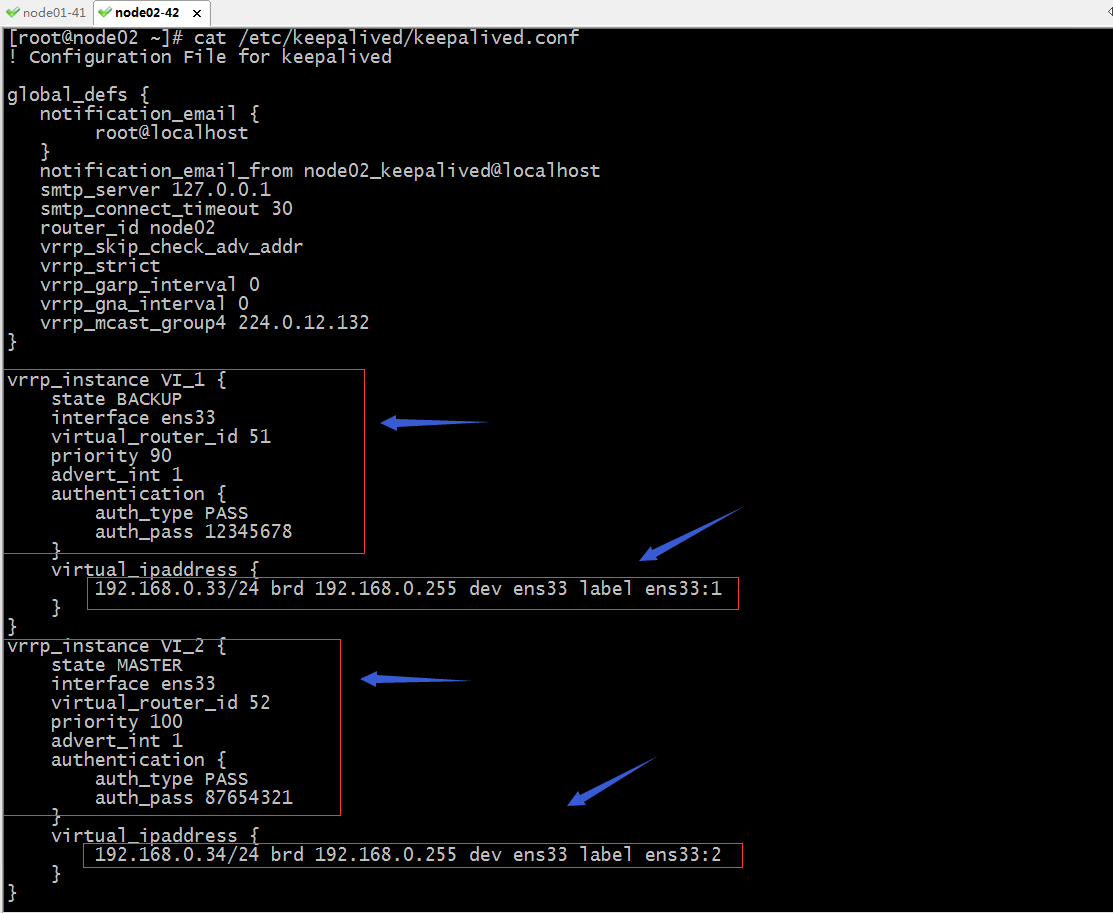

Configuration on node02

    ! Configuration File for keepalived

    global_defs {
        notification_email {
            root@localhost
        }
        notification_email_from node02_keepalived@localhost
        smtp_server 127.0.0.1
        smtp_connect_timeout 30
        router_id node02
        vrrp_skip_check_adv_addr
        vrrp_strict
        vrrp_garp_interval 0
        vrrp_gna_interval 0
        vrrp_mcast_group4 224.0.12.132
    }

    vrrp_instance VI_1 {
        state BACKUP
        interface ens33
        virtual_router_id 51
        priority 90
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 12345678
        }
        virtual_ipaddress {
            192.168.0.33/24 brd 192.168.0.255 dev ens33 label ens33:1
        }
    }

    vrrp_instance VI_2 {
        state MASTER
        interface ens33
        virtual_router_id 52
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 87654321
        }
        virtual_ipaddress {
            192.168.0.34/24 brd 192.168.0.255 dev ens33 label ens33:2
        }
    }

Note: a dual-master model is usually built from two VRRP instances. The core idea is to define both instances on both nodes and to make each node the MASTER of one instance and the BACKUP of the other. With this configuration in place, restart keepalived: if node01 and node02 are both online, each holds one of the two VIPs; if one server goes down, the VIP for which it was MASTER moves to the other node.

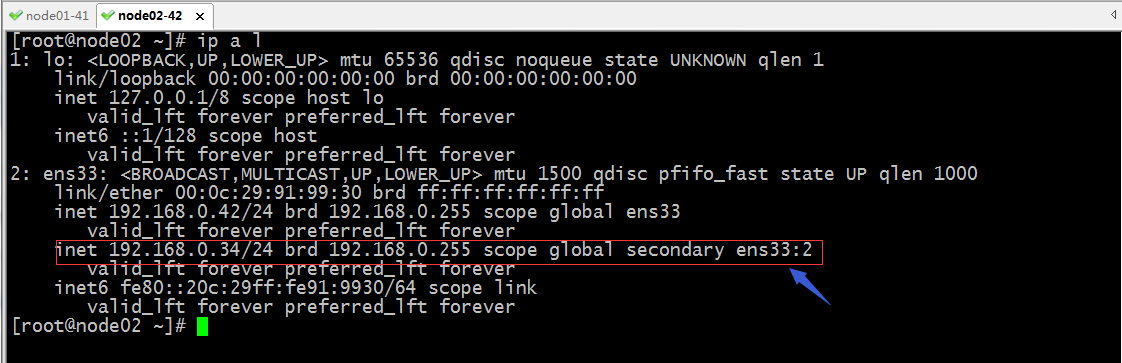

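Verify: restart keepalived on node01 and node02; does each node end up with one of the VIPs?

Note: after keepalived is restarted on both nodes, each node takes one VIP according to the initial roles we configured. We then only need to point the A records of the corresponding domain name (for a web service, say) at these two VIPs. Each VIP carries part of the requests, so both keepalived nodes are doing work.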

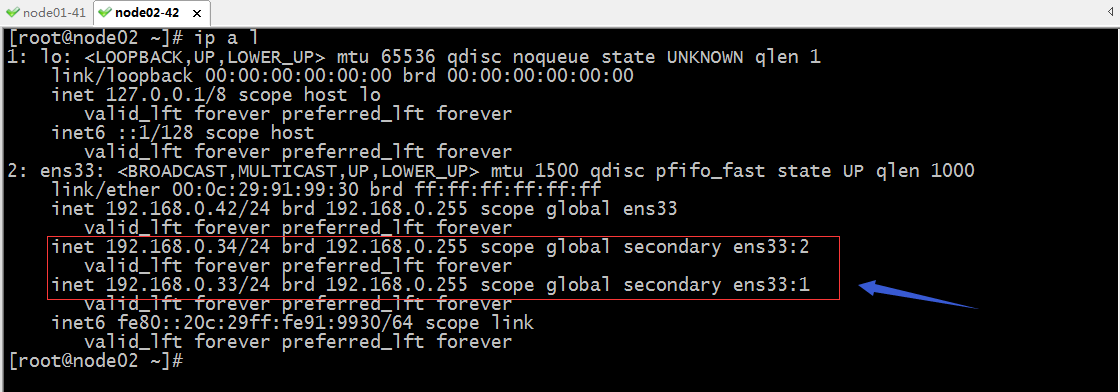

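Verify: after node01 goes down, does the address 192.168.0.33 move to node02?

Note: once node01 is down, node02 takes over the VIP that used to live on node01 and applies it locally, so the traffic that was going to 192.168.0.33 is now served by node02. By the same logic, if node02 goes down, its VIP moves to node01.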
