Machine Learning with sklearn (86): Algorithm Examples (43), Classification (22), Naive Bayes (5): Text Classification with Bayesian Classifiers

1 A Brief Introduction to Text Encoding Techniques

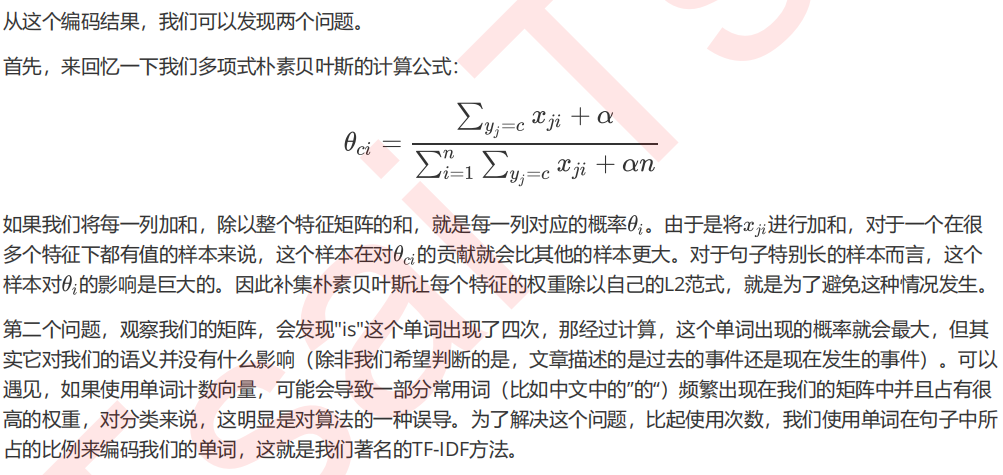

1.1 Word Count Vectors

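```python
sample = ["Machine learning is fascinating, it is wonderful"
          ,"Machine learning is a sensational technology"
          ,"Elsa is a popular character"]

from sklearn.feature_extraction.text import CountVectorizer
vec = CountVectorizer()
X = vec.fit_transform(sample)
X  # a SciPy sparse matrix

# Use get_feature_names_out() to get the name of each column
# (older scikit-learn releases called it get_feature_names(), which was removed in 1.2)
import pandas as pd
# Note: pandas cannot take a sparse matrix directly, so convert it with toarray() first
CVresult = pd.DataFrame(X.toarray(), columns=vec.get_feature_names_out())
CVresult
```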
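To see roughly what CountVectorizer does under the hood, here is a minimal pure-Python sketch of word-count encoding. It assumes CountVectorizer's default settings (lowercasing, and the token pattern `(?u)\b\w\w+\b`, which keeps only tokens of two or more word characters); `tokenize` and `count_vectorize` are hypothetical helper names, and every other option of the real class is ignored.

```python
import re
from collections import Counter

# Assumed to mirror CountVectorizer's default token_pattern
TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")

def tokenize(doc):
    """Lowercase the text and keep tokens of 2+ word characters."""
    return TOKEN_RE.findall(doc.lower())

def count_vectorize(docs):
    """Return (alphabetically sorted vocabulary, one count row per document)."""
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    index = {word: j for j, word in enumerate(vocab)}
    rows = []
    for doc in docs:
        row = [0] * len(vocab)
        for word, n in Counter(tokenize(doc)).items():
            row[index[word]] = n
        rows.append(row)
    return vocab, rows

sample = ["Machine learning is fascinating, it is wonderful",
          "Machine learning is a sensational technology",
          "Elsa is a popular character"]
vocab, rows = count_vectorize(sample)
print(vocab)
print(rows[0])  # → [0, 0, 1, 2, 1, 1, 1, 0, 0, 0, 1]
```

Note that the one-letter word "a" never becomes a column, because the default token pattern drops single-character tokens; "is" gets a count of 2 in the first sentence.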

1.2 TF-IDF

```python
from sklearn.feature_extraction.text import TfidfVectorizer as TFIDF
vec = TFIDF()
X = vec.fit_transform(sample)
X

# Again, use get_feature_names_out() to get the name of each column
TFIDFresult = pd.DataFrame(X.toarray(), columns=vec.get_feature_names_out())
TFIDFresult

# After TF-IDF encoding, have the weights of frequently occurring words been lowered?
# Compare each word's share of the total weight under the two encodings
CVresult.sum(axis=0) / CVresult.sum(axis=0).sum()
TFIDFresult.sum(axis=0) / TFIDFresult.sum(axis=0).sum()
```
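The question in the comment can be answered from the formula itself. Below is a minimal sketch, assuming TfidfVectorizer's defaults (`smooth_idf=True`, `sublinear_tf=False`, `norm="l2"`): each raw count is multiplied by idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) the number of documents containing t, and each row is then scaled to unit length. The helper name `tfidf` is hypothetical.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")  # assumed default token pattern

def tfidf(docs):
    """Return (vocabulary, idf per word, L2-normalized TF-IDF rows)."""
    tokenized = [TOKEN_RE.findall(doc.lower()) for doc in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    n = len(docs)
    # Document frequency: how many documents contain each word at least once
    df = Counter(tok for toks in tokenized for tok in set(toks))
    # Smoothed IDF, as with smooth_idf=True
    idf = {t: math.log((1 + n) / (1 + df[t])) + 1 for t in vocab}
    rows = []
    for toks in tokenized:
        counts = Counter(toks)
        row = [counts[t] * idf[t] for t in vocab]
        norm = math.sqrt(sum(v * v for v in row))  # L2 normalization
        rows.append([v / norm if norm else 0.0 for v in row])
    return vocab, idf, rows

sample = ["Machine learning is fascinating, it is wonderful",
          "Machine learning is a sensational technology",
          "Elsa is a popular character"]
vocab, idf, rows = tfidf(sample)
print(round(idf["is"], 4), round(idf["machine"], 4), round(idf["elsa"], 4))
# → 1.0 1.2877 1.6931
```

Because "is" occurs in all three sentences, its idf is exactly 1, the smallest possible value, while "elsa", which appears in only one sentence, gets ln 2 + 1 ≈ 1.69. This is how TF-IDF lowers the relative weight of words that show up everywhere.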

In the examples that follow, we use TF-IDF encoding throughout.

2 Exploring the Text Data

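```python
from sklearn.datasets import fetch_20newsgroups
# The first time this dataset is used, calling the loader starts a download
data = fetch_20newsgroups()

# Normally we would print data to see what it contains, but the object returned by
# fetch_20newsgroups is huge and mixes a lot of raw text into its structure,
# so it is hard to read

# The different news categories
data.target_names

# fetch_20newsgroups is a function, so it accepts parameters
# For simple datasets we usually call a loader without any arguments, but data holds
# too much to explore conveniently, so let's look at which parameters fetch_20newsgroups
# offers to help us; the most useful here are subset ("train", "test" or "all") and categories
```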

Now we can use these parameters to extract exactly the data we want.

```python
import numpy as np
import pandas as pd

categories = ["sci.space"               # science & technology - space
              ,"rec.sport.hockey"       # sports - hockey
              ,"talk.politics.guns"     # politics - guns
              ,"talk.politics.mideast"] # politics - Middle East

train = fetch_20newsgroups(subset="train", categories=categories)
test = fetch_20newsgroups(subset="test", categories=categories)

# The result is still a dict-like structure, so we can pull out its contents by key
train
train.target_names

# How many articles are there in total?
len(train.data)

# Look at an arbitrary article
train.data[0]

# Inspect the labels
np.unique(train.target)
len(train.target)

# Is there a class imbalance problem? Check the share of every label (0 through 3)
for i in np.unique(train.target):
    print(i, (train.target == i).sum() / len(train.target))
```
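A quick way to answer the imbalance question is to count every label rather than a hand-picked subset, and to compare the largest class with the smallest. A minimal sketch in plain Python; `class_proportions`, `imbalance_ratio` and `toy_labels` are hypothetical and stand in for `train.target`.

```python
from collections import Counter

def class_proportions(labels):
    """Share of samples for every label present, sorted by label."""
    counts = Counter(labels)
    total = len(labels)
    return {label: counts[label] / total for label in sorted(counts)}

def imbalance_ratio(labels):
    """Size of the largest class divided by the size of the smallest."""
    counts = Counter(labels).values()
    return max(counts) / min(counts)

toy_labels = [0, 0, 1, 1, 1, 2, 2, 3, 3, 3]  # hypothetical labels
print(class_proportions(toy_labels))  # → {0: 0.2, 1: 0.3, 2: 0.2, 3: 0.3}
print(imbalance_ratio(toy_labels))    # → 1.5
```

A ratio close to 1 means the classes are roughly balanced; where exactly "imbalanced" begins is a judgment call for the task at hand.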

3 Encoding the Text Data with TF-IDF

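```python
from sklearn.feature_extraction.text import TfidfVectorizer as TFIDF

Xtrain = train.data
Xtest = test.data
Ytrain = train.target
Ytest = test.target

# Learn the vocabulary and IDF weights from the training set only, then apply them to both sets
tfidf = TFIDF().fit(Xtrain)
Xtrain_ = tfidf.transform(Xtrain)
Xtest_ = tfidf.transform(Xtest)
Xtrain_

# Convert to a dense DataFrame just to take a look (this uses a lot of memory on a large corpus)
tosee = pd.DataFrame(Xtrain_.toarray(), columns=tfidf.get_feature_names_out())
tosee.head()
tosee.shape
```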
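Fitting TFIDF on Xtrain alone and then transforming both sets means the vocabulary and IDF weights come only from training documents: both matrices share the same columns, and nothing from the test set leaks into the encoding. A minimal sketch of that fit/transform split, using a hypothetical `TinyTfidf` class that assumes the same defaults as TfidfVectorizer; words seen only at test time have no column and are simply dropped.

```python
import math
import re
from collections import Counter

TOKEN_RE = re.compile(r"(?u)\b\w\w+\b")  # assumed default token pattern

class TinyTfidf:
    """Hypothetical minimal stand-in for TfidfVectorizer's fit/transform split."""

    def fit(self, docs):
        toks = [TOKEN_RE.findall(d.lower()) for d in docs]
        self.vocab_ = sorted({t for ts in toks for t in ts})
        n = len(docs)
        df = Counter(t for ts in toks for t in set(ts))
        self.idf_ = [math.log((1 + n) / (1 + df[t])) + 1 for t in self.vocab_]
        return self

    def transform(self, docs):
        rows = []
        for d in docs:
            counts = Counter(TOKEN_RE.findall(d.lower()))
            # Only words learned during fit have a column; unseen words are dropped
            row = [counts[t] * w for t, w in zip(self.vocab_, self.idf_)]
            norm = math.sqrt(sum(v * v for v in row))
            rows.append([v / norm if norm else 0.0 for v in row])
        return rows

train_docs = ["rockets reach space", "hockey game tonight"]
test_docs = ["space hockey", "completely unseen words"]
vec = TinyTfidf().fit(train_docs)
X_test = vec.transform(test_docs)
print(vec.vocab_)  # → ['game', 'hockey', 'reach', 'rockets', 'space', 'tonight']
print(X_test[1])   # → [0.0, 0.0, 0.0, 0.0, 0.0, 0.0]
```

The second test document contains no training word at all, so it becomes an all-zero row; with the real vectorizer the same thing happens, just inside a sparse matrix.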

4 Modeling with Each Bayes Classifier and Examining the Results

```python
from sklearn.naive_bayes import MultinomialNB, ComplementNB, BernoulliNB
from sklearn.metrics import brier_score_loss as BS

names = ["Multinomial", "Complement", "Bernoulli"]
# Note: Gaussian naive Bayes (GaussianNB) does not accept sparse matrices, so it is left out
models = [MultinomialNB(), ComplementNB(), BernoulliNB()]

for name, clf in zip(names, models):
    clf.fit(Xtrain_, Ytrain)
    y_pred = clf.predict(Xtest_)
    proba = clf.predict_proba(Xtest_)
    score = clf.score(Xtest_, Ytest)
    print(name)

    # Brier score under each of the 4 labels, computed one-vs-rest
    Bscore = []
    for i in range(len(np.unique(Ytrain))):
        # Binarize the targets for label i explicitly; depending on the
        # scikit-learn version, brier_score_loss may reject a multiclass y_true
        bs = BS((Ytest == i).astype(int), proba[:, i])
        Bscore.append(bs)
        print("\tBrier under {}:{:.3f}".format(train.target_names[i], bs))

    print("\tAverage Brier:{:.3f}".format(np.mean(Bscore)))
    print("\tAccuracy:{:.3f}".format(score))
    print("\n")
```
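The inner loop scores each class one-vs-rest: the predicted probability of class i is compared with a 0/1 indicator of whether the true label is i, and the squared gaps are averaged (lower is better, 0 is perfect). A plain-Python sketch of that computation; `brier_one_vs_rest` and the toy data are hypothetical, and the rows of `proba` are assumed to be ordered by label, as `predict_proba` columns are for labels 0 to 3.

```python
def brier_one_vs_rest(y_true, proba, label):
    """Mean of (P(class == label) - indicator)^2 over all samples."""
    total = 0.0
    for y, row in zip(y_true, proba):
        indicator = 1.0 if y == label else 0.0
        total += (row[label] - indicator) ** 2
    return total / len(y_true)

# Toy data: 3 samples, 3 classes; each row of proba sums to 1
y_true = [0, 1, 2]
proba = [[0.8, 0.1, 0.1],
         [0.2, 0.7, 0.1],
         [0.1, 0.2, 0.7]]
scores = [brier_one_vs_rest(y_true, proba, k) for k in range(3)]
average = sum(scores) / len(scores)
print([round(s, 4) for s in scores], round(average, 4))
```

Averaging the per-class scores, as the loop above does with `np.mean(Bscore)`, gives a single number for comparing models.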

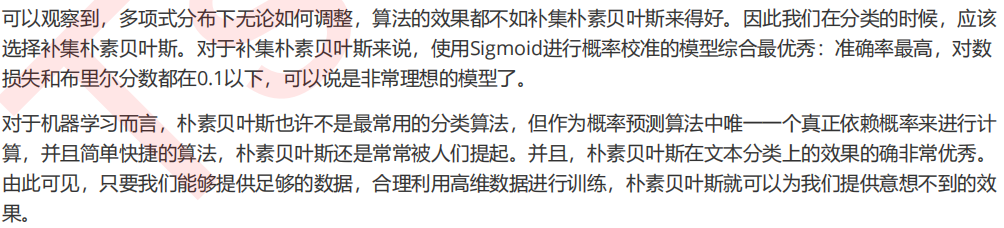

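Judging from the results, two of the Bayes models perform very well. Complement naive Bayes has a higher Brier score (that is, its probabilities are less well calibrated), but its accuracy is also higher. Let's try probability calibration and see whether it pushes the models further: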

```python
from sklearn.calibration import CalibratedClassifierCV

names = ["Multinomial"
         ,"Multinomial + Isotonic"
         ,"Multinomial + Sigmoid"
         ,"Complement"
         ,"Complement + Isotonic"
         ,"Complement + Sigmoid"
         ,"Bernoulli"
         ,"Bernoulli + Isotonic"
         ,"Bernoulli + Sigmoid"]

models = [MultinomialNB()
          ,CalibratedClassifierCV(MultinomialNB(), cv=2, method="isotonic")
          ,CalibratedClassifierCV(MultinomialNB(), cv=2, method="sigmoid")
          ,ComplementNB()
          ,CalibratedClassifierCV(ComplementNB(), cv=2, method="isotonic")
          ,CalibratedClassifierCV(ComplementNB(), cv=2, method="sigmoid")
          ,BernoulliNB()
          ,CalibratedClassifierCV(BernoulliNB(), cv=2, method="isotonic")
          ,CalibratedClassifierCV(BernoulliNB(), cv=2, method="sigmoid")]

for name, clf in zip(names, models):
    clf.fit(Xtrain_, Ytrain)
    y_pred = clf.predict(Xtest_)
    proba = clf.predict_proba(Xtest_)
    score = clf.score(Xtest_, Ytest)
    print(name)

    Bscore = []
    for i in range(len(np.unique(Ytrain))):
        # One-vs-rest Brier score with explicitly binarized targets
        bs = BS((Ytest == i).astype(int), proba[:, i])
        Bscore.append(bs)
        print("\tBrier under {}:{:.3f}".format(train.target_names[i], bs))

    print("\tAverage Brier:{:.3f}".format(np.mean(Bscore)))
    print("\tAccuracy:{:.3f}".format(score))
    print("\n")
```
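`method="isotonic"` learns a non-decreasing mapping from the classifier's scores to calibrated probabilities, while `method="sigmoid"` fits Platt's logistic curve; for multiclass data, CalibratedClassifierCV calibrates each class one-vs-rest and then renormalizes the probabilities. Isotonic regression is usually computed with the pool-adjacent-violators (PAV) algorithm. Below is a minimal plain-Python sketch of it for a single class, assuming distinct scores and equal sample weights; `pav` and `fit_isotonic` are hypothetical names, and unlike scikit-learn's IsotonicRegression, which interpolates linearly between fitted points, this predictor is a simple step function.

```python
from bisect import bisect_right

def pav(y):
    """Pool Adjacent Violators: least-squares non-decreasing fit to y (equal weights)."""
    blocks = []  # each block is [sum of values, number of values]
    for v in y:
        blocks.append([float(v), 1])
        # Merge backwards while the previous block's mean exceeds the current block's mean
        while len(blocks) > 1 and blocks[-2][0] / blocks[-2][1] > blocks[-1][0] / blocks[-1][1]:
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    fitted = []
    for s, c in blocks:
        fitted.extend([s / c] * c)
    return fitted

def fit_isotonic(scores, labels):
    """Hypothetical helper: monotone map from a raw score to P(label == 1)."""
    pairs = sorted(zip(scores, labels))  # assumes the scores are distinct
    xs = [s for s, _ in pairs]
    fitted = pav([y for _, y in pairs])

    def predict(score):
        # Step function: fitted value at the largest training score <= score;
        # scores below the training range get the first fitted value
        i = bisect_right(xs, score) - 1
        return fitted[max(i, 0)]

    return predict

# Toy raw scores for one class, and whether each sample truly belongs to it
scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.9]
labels = [0, 1, 0, 0, 1, 1]
predict = fit_isotonic(scores, labels)
print([round(predict(s), 3) for s in (0.05, 0.25, 0.35, 0.7, 0.95)])
# → [0.0, 0.333, 0.333, 1.0, 1.0]
```

The out-of-order label at score 0.2 is pooled with its neighbours into a block whose mean is 1/3, which keeps the mapping monotone. With `cv=2`, as in the code above, scikit-learn fits the base model on one half of the training data and calibrates on the other half, for each of the two splits, and then averages the resulting calibrated classifiers.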

This article is from cnblogs (博客园). Author: 秋华. When reposting, please credit the original link: https://www.cnblogs.com/qiu-hua/p/14967591.html