03Hadoop

1. MapReduce 介绍

1.1. MapReduce 设计构思和框架结构

3. WordCount

- 需求: 在一堆给定的文本文件中统计输出每一个单词出现的总次数

4. MapReduce 运行模式

yarn jar hadoop_hdfs_operate‐1.0‐SNAPSHOT.jar

cn.itcast.hdfs.demo1.JobMain

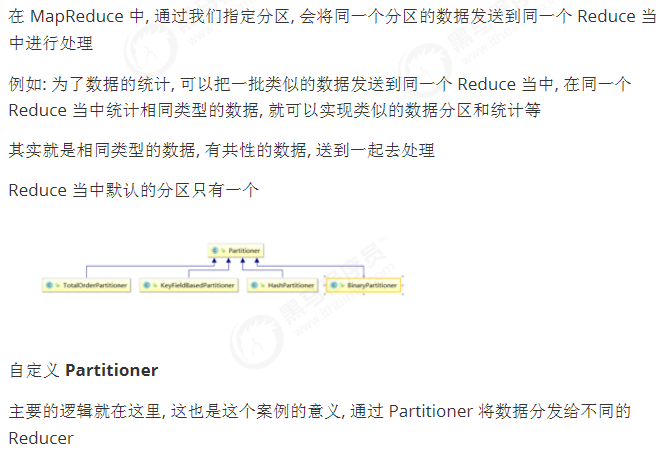

5. MapReduce 分区

Step 4. Main 入口

public class PartitionMain extends Configured implements Tool {

public static void main(String[] args) throws Exception{

int run = ToolRunner.run(new Configuration(), new

PartitionMain(), args);

System.exit(run);

}

@Override

public int run(String[] args) throws Exception {

Job job = Job.getInstance(super.getConf(),

PartitionMain.class.getSimpleName());

job.setJarByClass(PartitionMain.class);

job.setInputFormatClass(TextInputFormat.class);

job.setOutputFormatClass(TextOutputFormat.class);

TextInputFormat.addInputPath(job,new

Path("hdfs://192.168.52.250:8020/partitioner"));

TextOutputFormat.setOutputPath(job,new

Path("hdfs://192.168.52.250:8020/outpartition"));

job.setMapperClass(MyMapper.class);

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

job.setOutputKeyClass(Text.class);

job.setMapOutputValueClass(NullWritable.class);

job.setReducerClass(MyReducer.class);

/**

* 设置我们的分区类,以及我们的reducetask的个数,注意reduceTask的个数

一定要与我们的

* 分区数保持一致

*/

job.setPartitionerClass(MyPartitioner.class);

job.setNumReduceTasks(2);

boolean b = job.waitForCompletion(true);

return b?0:1;

}

}

【推荐】国内首个AI IDE,深度理解中文开发场景,立即下载体验Trae

【推荐】编程新体验,更懂你的AI,立即体验豆包MarsCode编程助手

【推荐】抖音旗下AI助手豆包,你的智能百科全书,全免费不限次数

【推荐】轻量又高性能的 SSH 工具 IShell:AI 加持,快人一步

· 记一次.NET内存居高不下排查解决与启示

· 探究高空视频全景AR技术的实现原理

· 理解Rust引用及其生命周期标识(上)

· 浏览器原生「磁吸」效果!Anchor Positioning 锚点定位神器解析

· 没有源码,如何修改代码逻辑?

· 全程不用写代码,我用AI程序员写了一个飞机大战

· DeepSeek 开源周回顾「GitHub 热点速览」

· 记一次.NET内存居高不下排查解决与启示

· MongoDB 8.0这个新功能碉堡了,比商业数据库还牛

· .NET10 - 预览版1新功能体验(一)