Redis Cluster

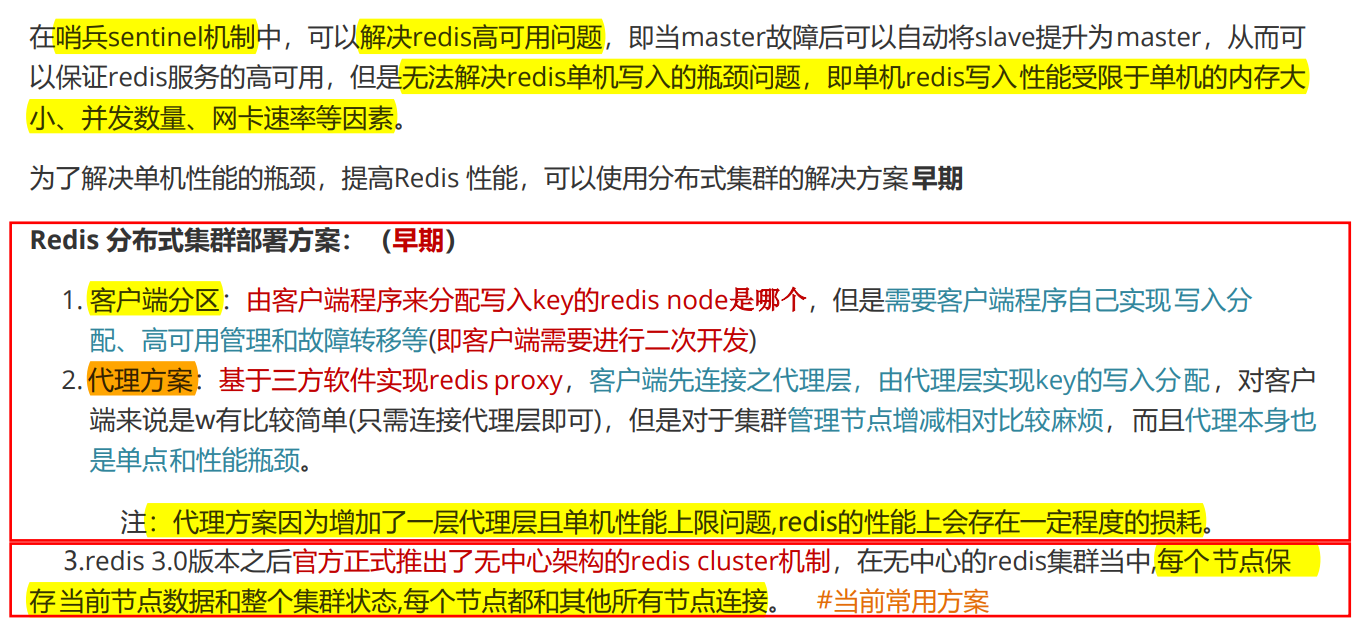

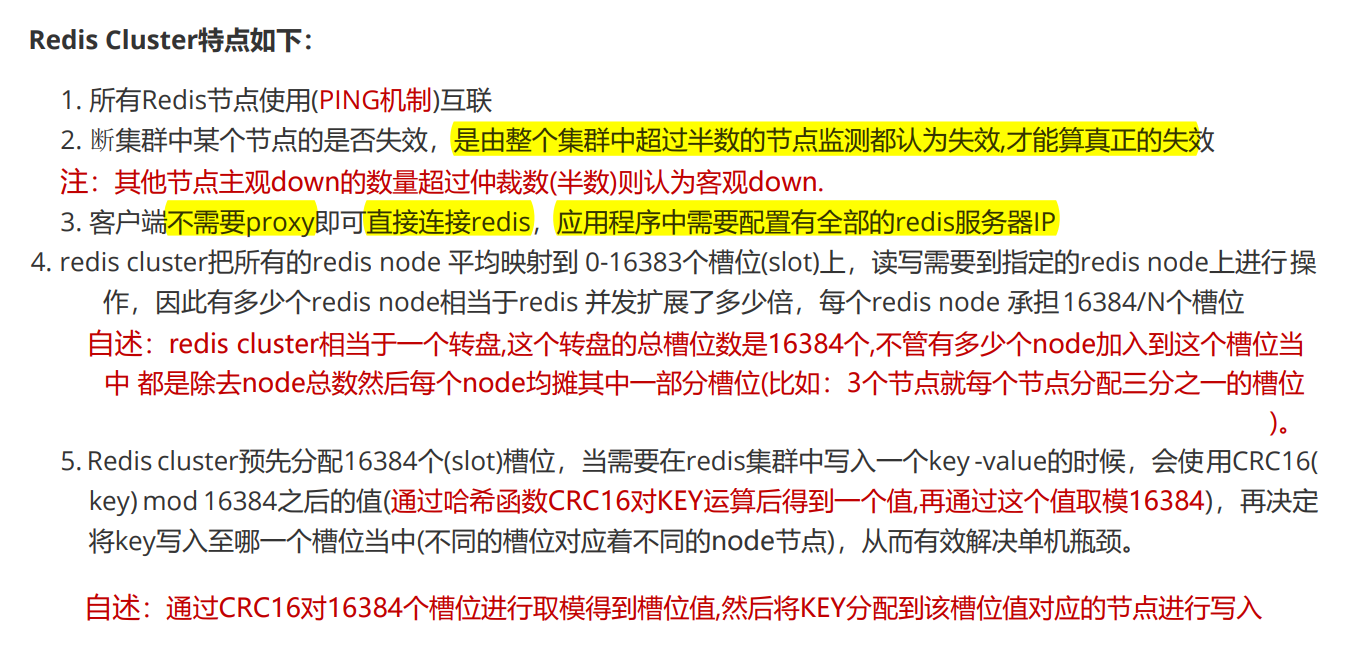

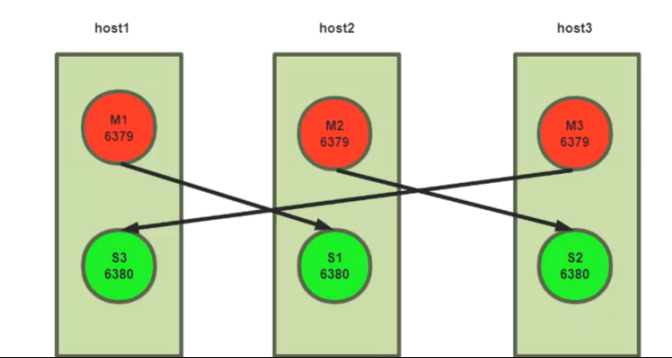

Introduction to Redis Cluster:

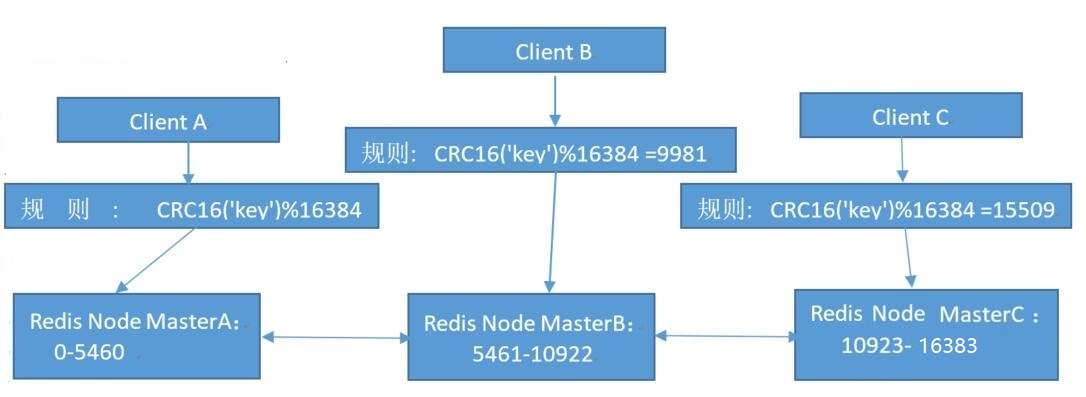

Redis Cluster basic architecture:

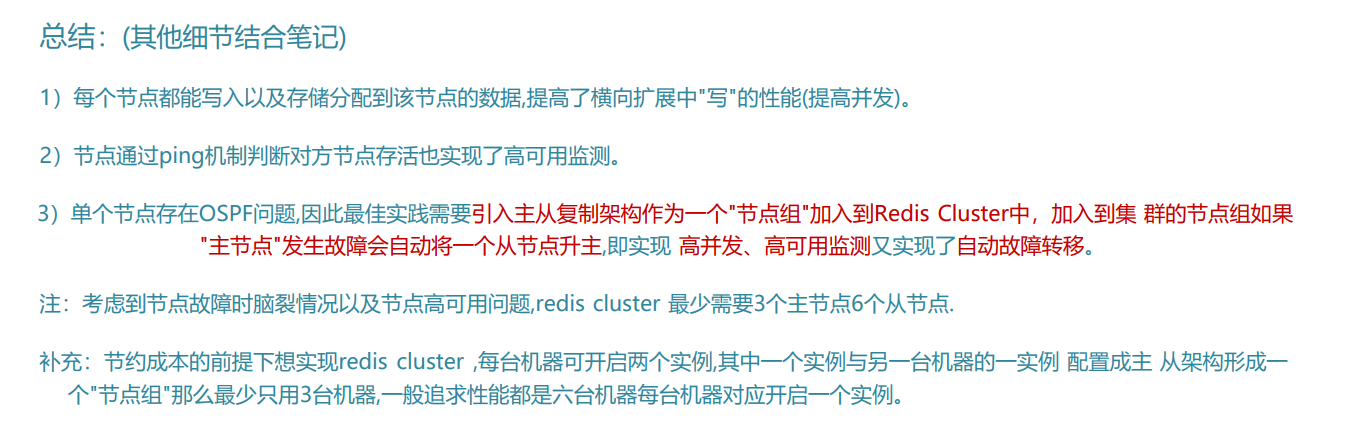

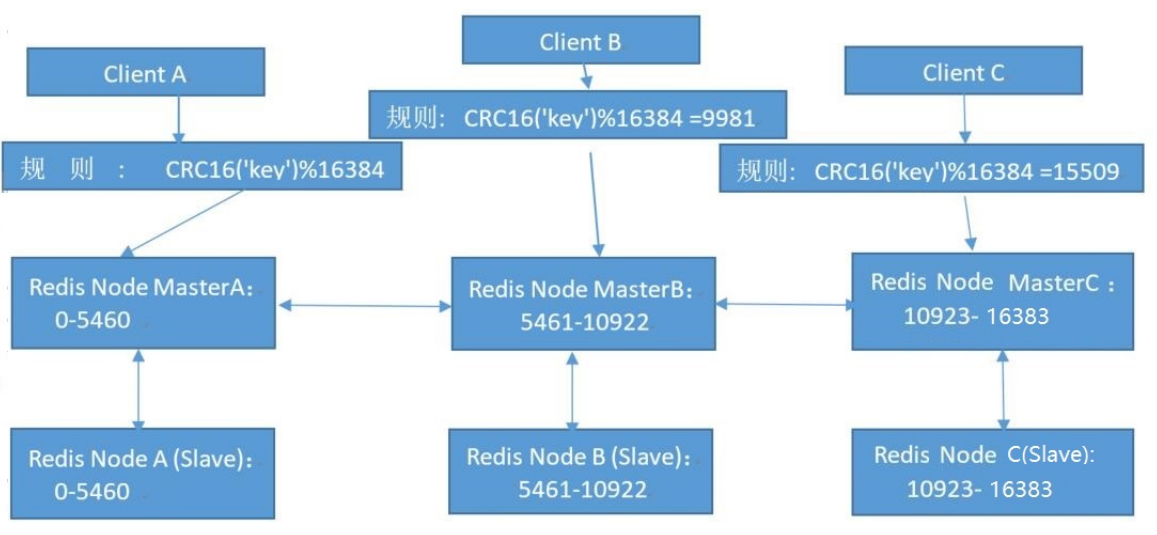

Redis Cluster master-slave architecture:

How Redis Cluster works:

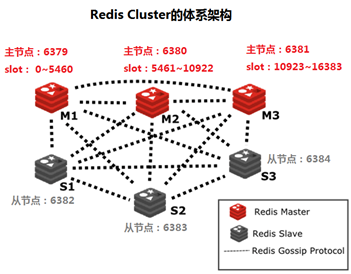

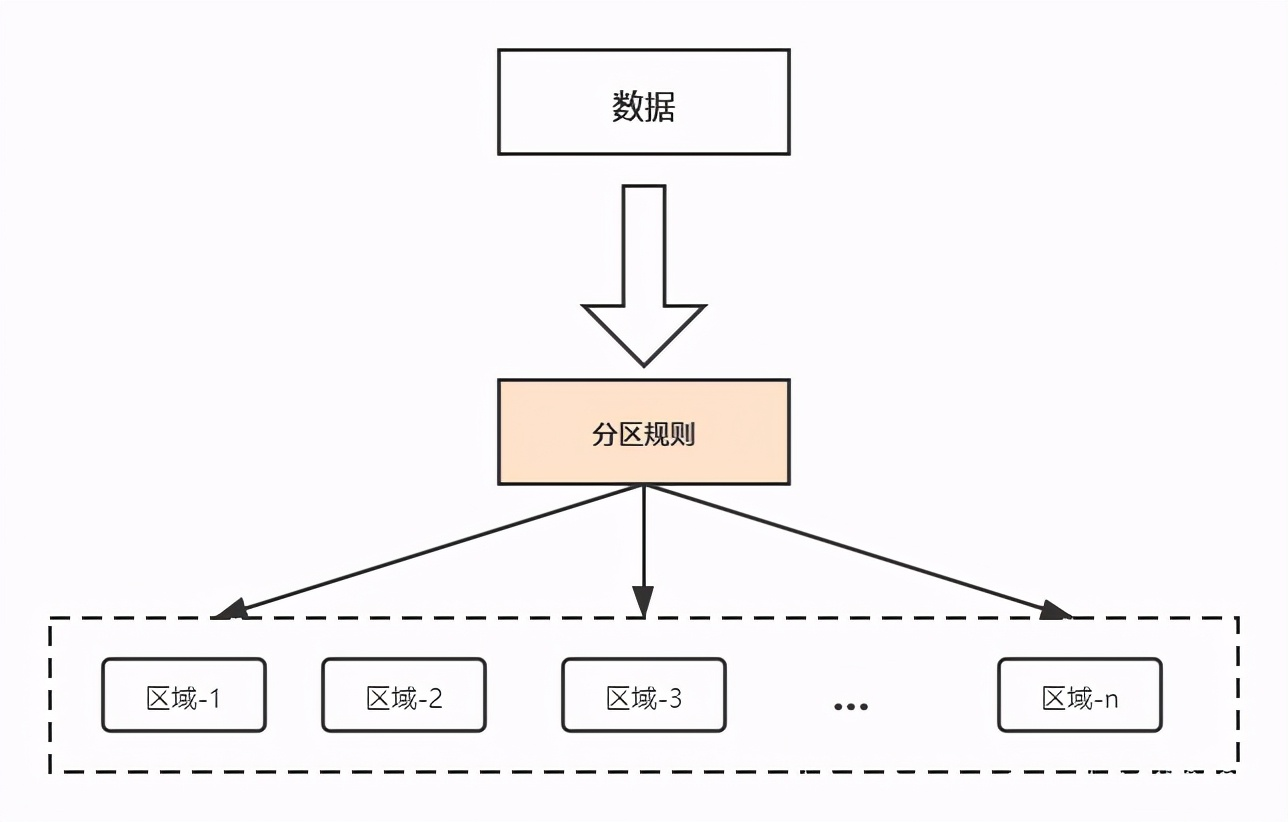

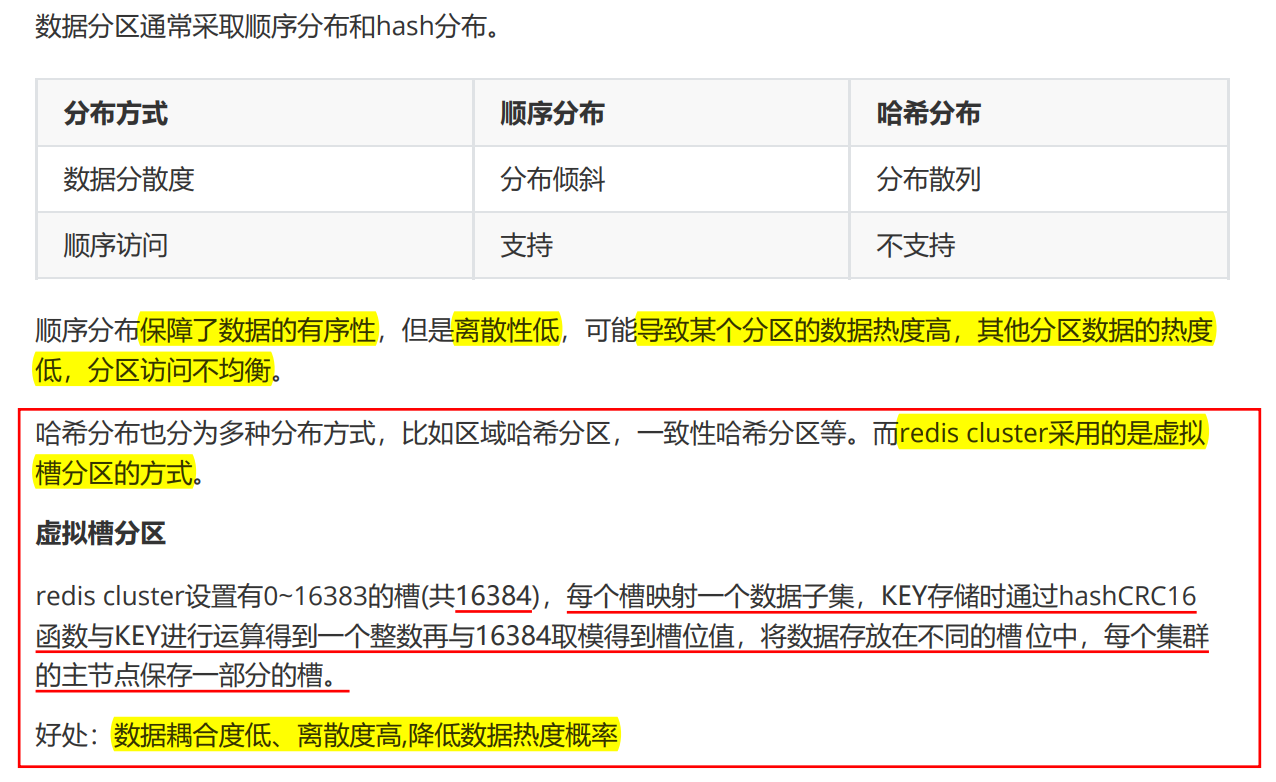

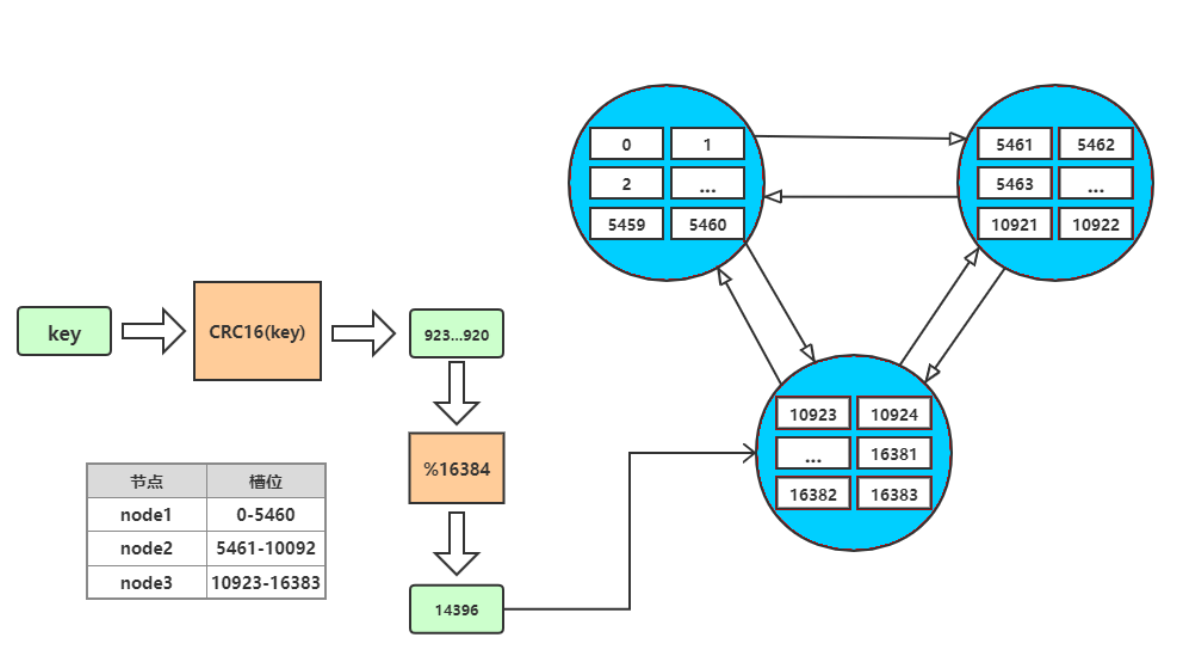

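1. Data partitioning:

How nodes exchange information:

1. Node A sends a MEET message to node B; once B replies, A and B can communicate with each other.

2. Node A sends a MEET message to node C; once C replies, A and C can communicate as well.

3. B then learns about C from what A tells it, so B and C establish a connection too.

4. This continues until all nodes are connected, so that every node knows which slots each of the others is responsible for.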
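The four steps above can be sketched as a small simulation. This is only an illustration of how membership knowledge spreads, not Redis's actual gossip implementation; the function name `spread_membership` and the node labels are hypothetical.

```python
def spread_membership(meets, rounds=10):
    """Simulate how node knowledge spreads after MEET handshakes.

    meets: list of (a, b) pairs, meaning node a sent MEET to node b.
    Returns a dict mapping each node to the set of nodes it knows about.
    """
    known = {}
    for a, b in meets:
        known.setdefault(a, set()).add(b)
        known.setdefault(b, set()).add(a)

    for _ in range(rounds):
        changed = False
        for node in list(known):
            for peer in list(known[node]):
                # Gossip: node tells peer about every node it knows.
                learned = (known[node] | {node}) - known[peer] - {peer}
                if learned:
                    known[peer] |= learned
                    for other in learned:
                        known[other].add(peer)  # the new link is mutual
                    changed = True
        if not changed:
            break
    return known


# A meets B, then A meets C; B and C discover each other through A.
view = spread_membership([("A", "B"), ("A", "C")])
print(sorted(view["B"]), sorted(view["C"]))
```

After the loop settles, every node's set contains all the other nodes, which corresponds to step 4.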

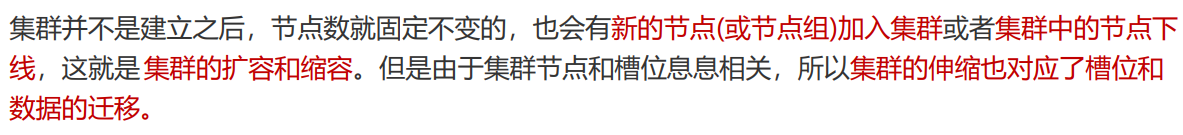

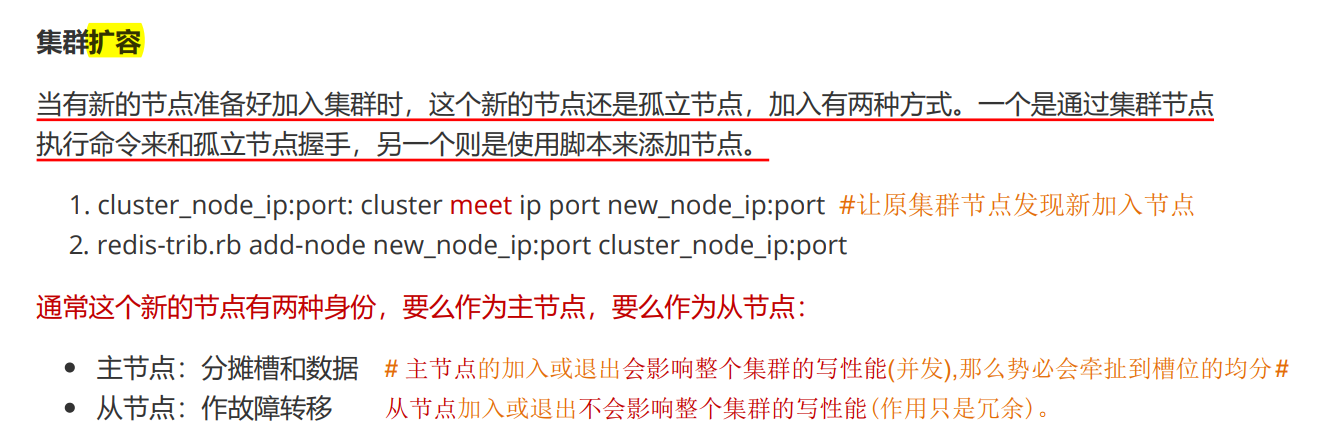

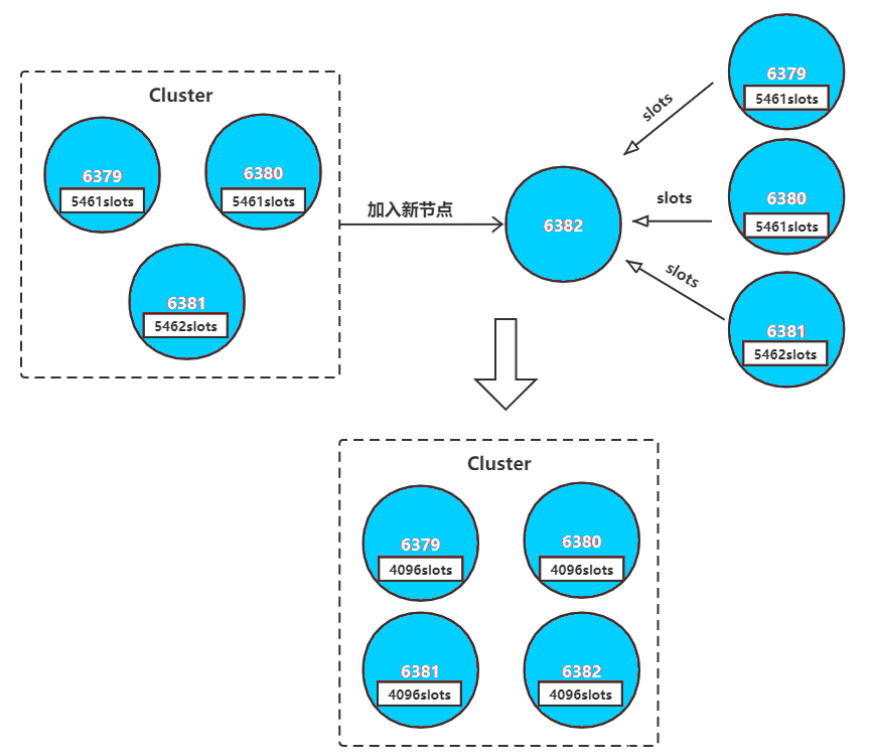

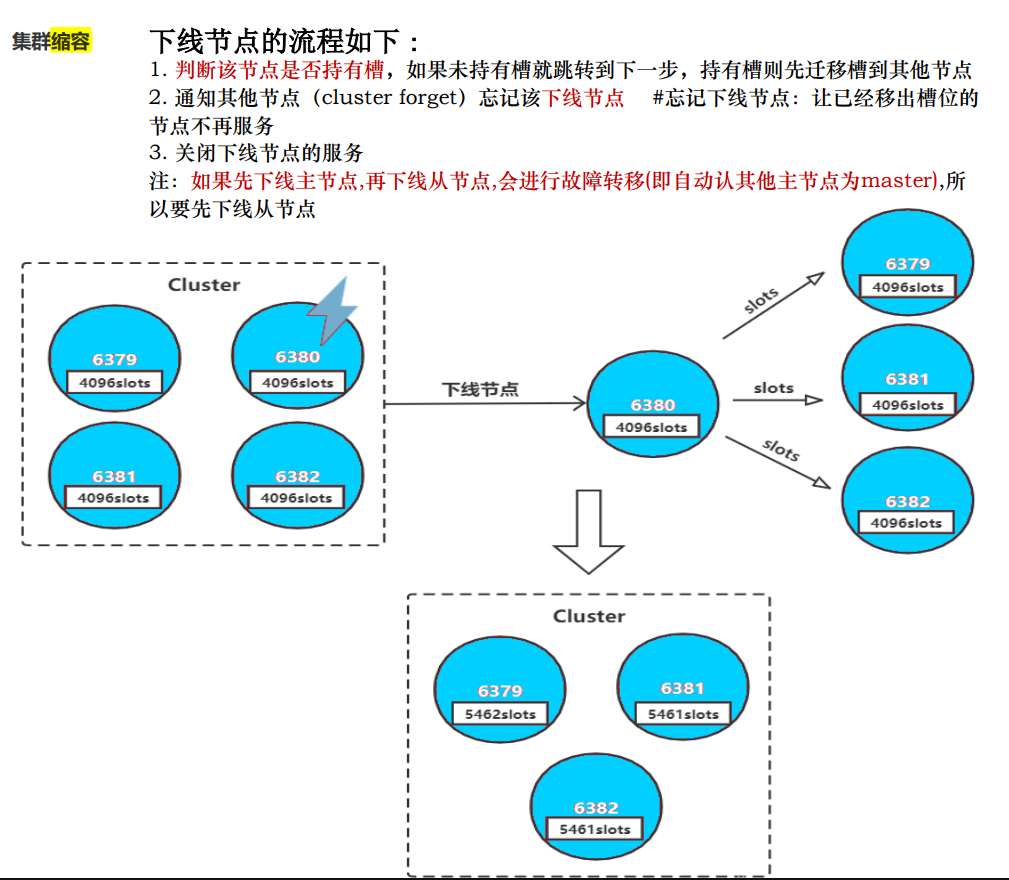

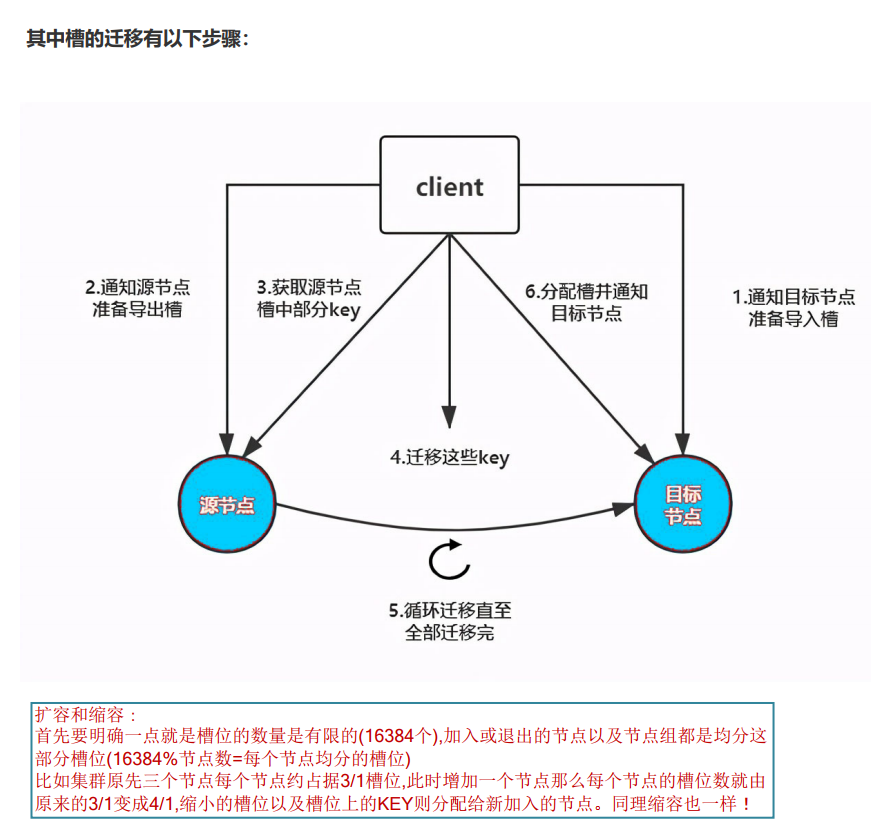

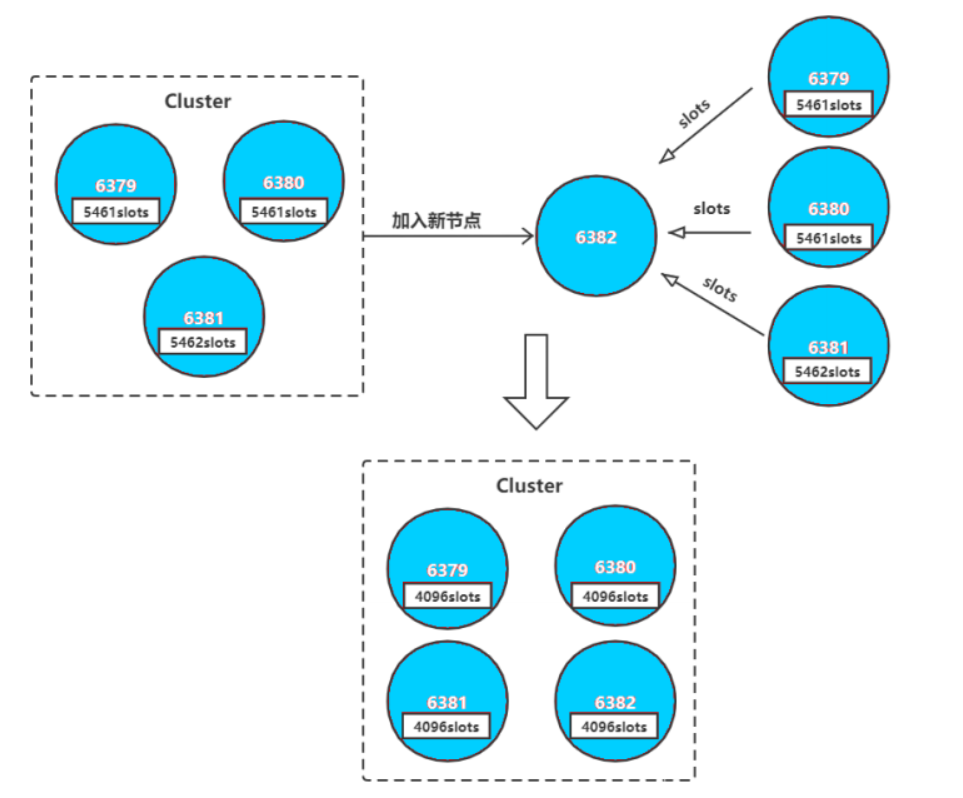

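2. Cluster scaling:

Summary of the scale-out and scale-in steps:

Scale out: add the node > assign slots > configure master-slave replication

Scale in: to remove a master, first migrate its slots to the other masters; to remove a slave, delete it directly with del-node (slaves are not assigned slots)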

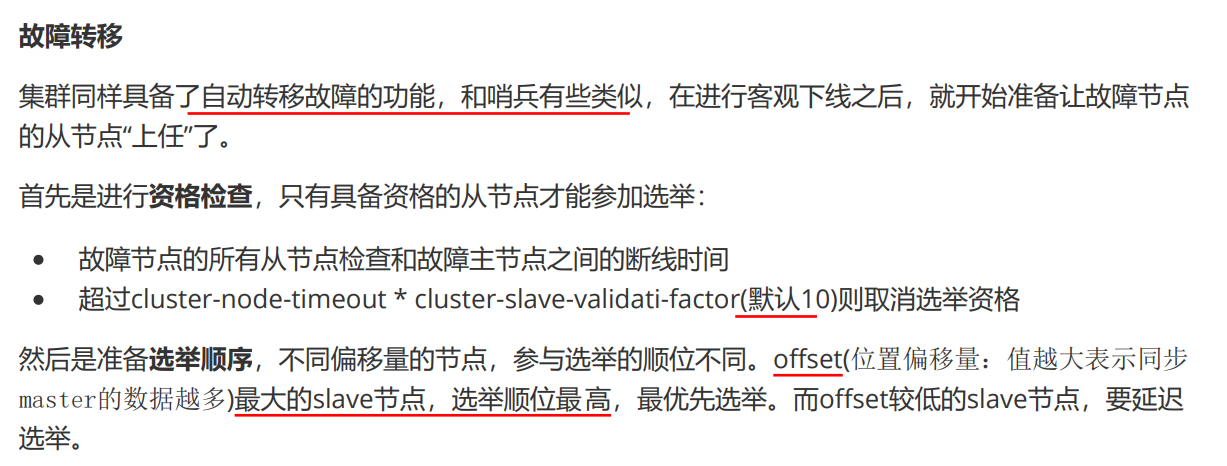

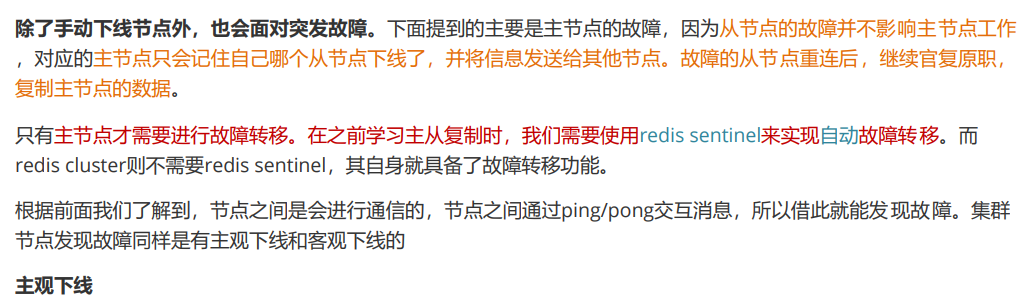

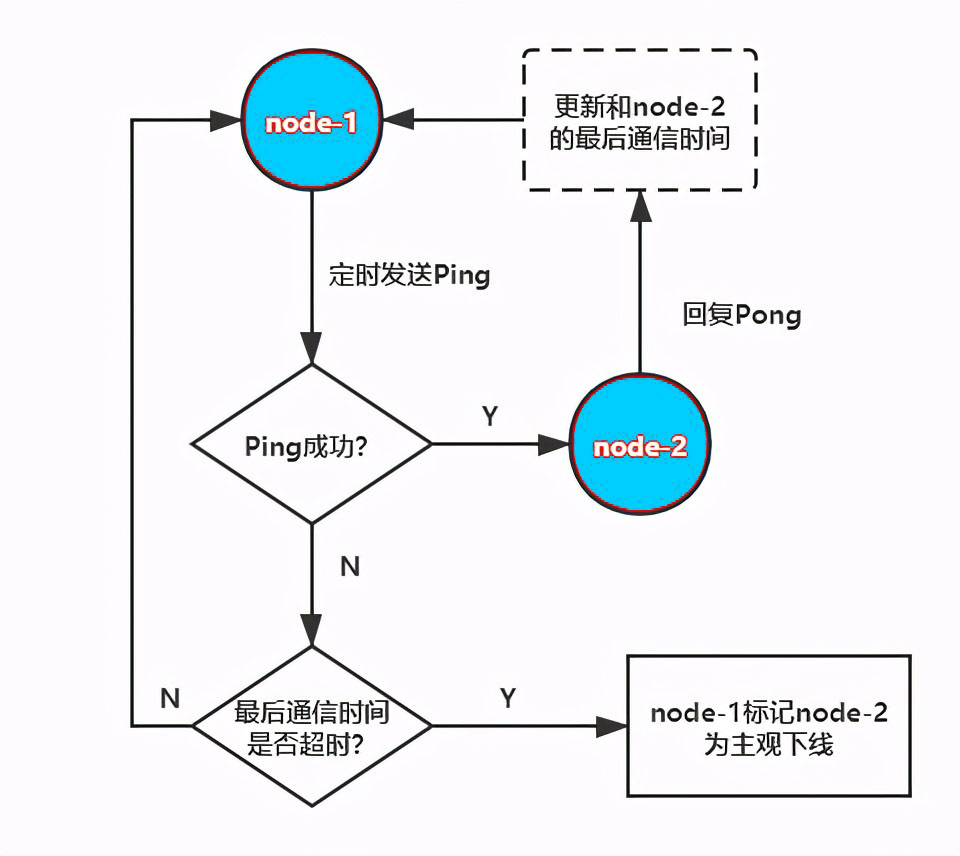

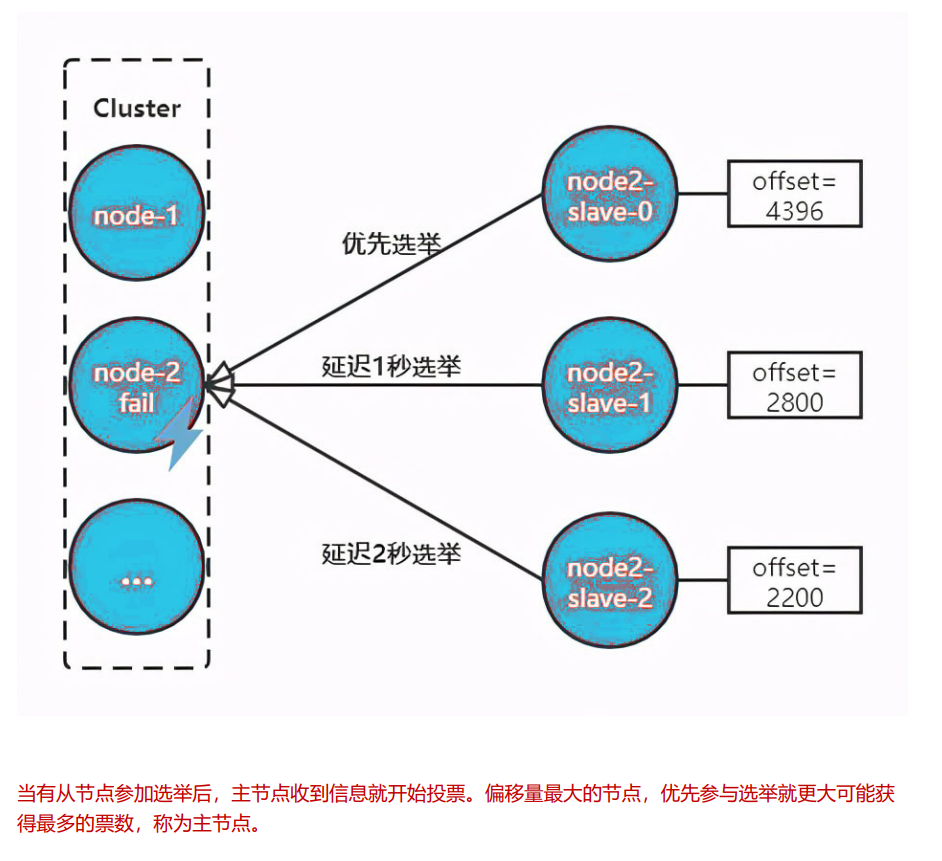

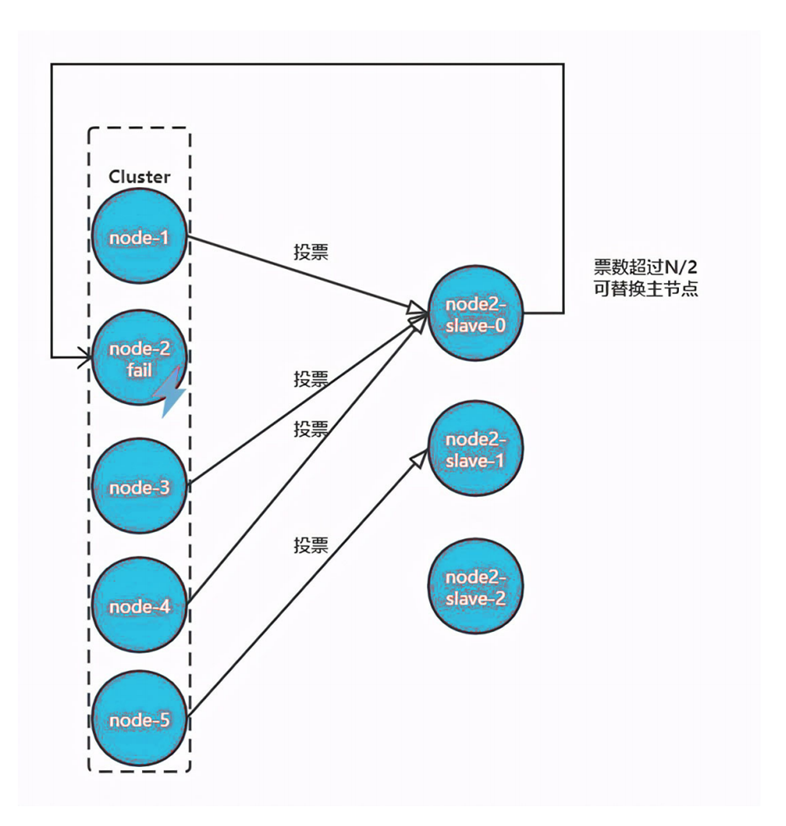

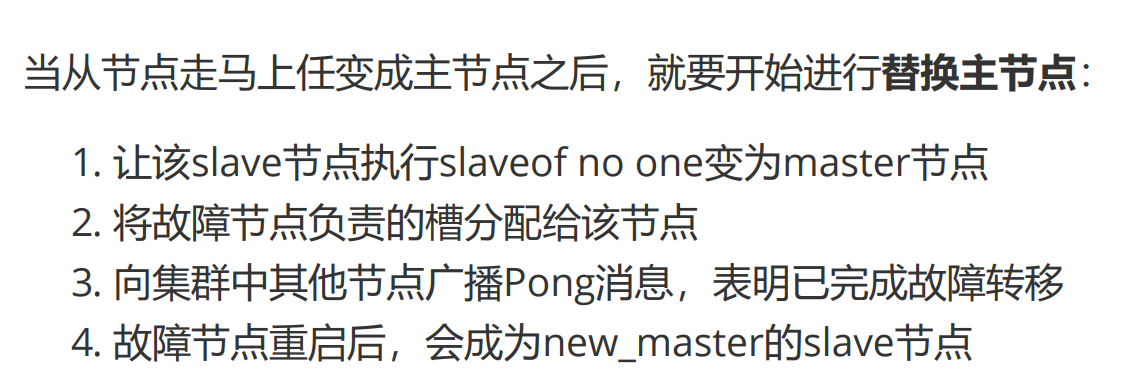

3. Failover:

Objective offline (FAIL)

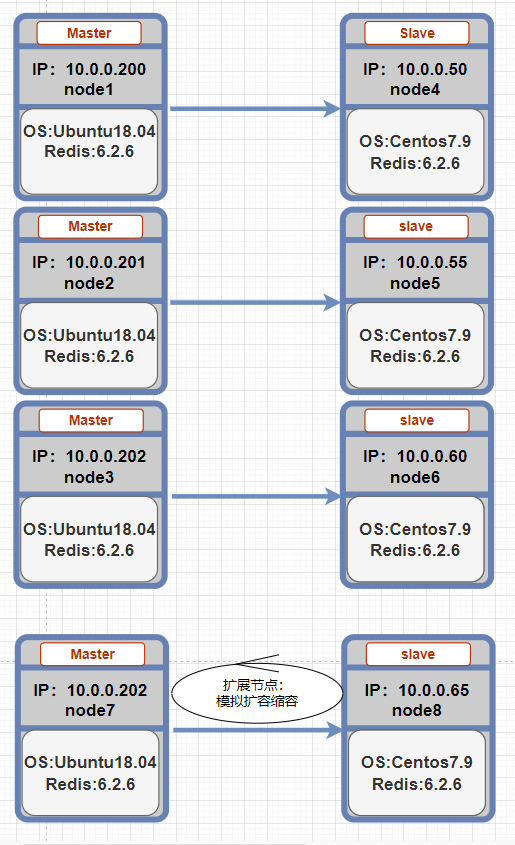
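As a rough sketch of the objective-offline decision: a node that one master merely suspects is down (PFAIL) is promoted to FAIL once a majority of masters report it. The function below is a simplification that ignores how long failure reports stay valid; the name `is_objectively_down` and the master labels are hypothetical.

```python
def is_objectively_down(pfail_reports, master_ids):
    """Return True when a majority of masters flag the target as PFAIL.

    pfail_reports: IDs of the nodes currently reporting the target as PFAIL.
    master_ids: IDs of all masters in the cluster (the voters).
    """
    voters = set(pfail_reports) & set(master_ids)
    return len(voters) > len(master_ids) // 2


masters = ["m1", "m2", "m3"]
print(is_objectively_down({"m2"}, masters))        # one report is not a majority
print(is_objectively_down({"m2", "m3"}, masters))  # two of three masters agree
```

With three masters, two reports are enough, which is why the remaining two masters can fail over the third.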

Redis Cluster deployment architecture:

1. Install Redis on all nodes and enable cluster mode

1) Redis installation script <works on both Ubuntu 18.04 and CentOS 7.9>

#!/bin/bash

#

#********************************************************************

#Author: caoqinglin

#QQ: 1251618589

#Date: 2021-10-22

#FileName: install_redis.sh

#Description: Ubuntu18.04

#Copyright (C): 2021 All rights reserved

#********************************************************************

#Random color for messages

COLOR="\E[1;$((RANDOM%7+31))m"

END="\E[0m"

#OS ID and CPU core count

OS=$(sed -rn 's@^ID="?(.*[^"])"?@\1@p' /etc/os-release)

CPUS=$(lscpu | grep "CPU(s)" | awk '{print $2}' | head -1)

REDIS_FILE="redis-6.2.6.tar.gz"

REDIS_PATH="/usr/local/src"

REDIS_APP_PATH="/apps/redis"

install_rely(){

if [ "${OS}" = "centos" ];then

yum clean all;yum makecache

yum -y install make gcc jemalloc-devel systemd-devel &> /dev/null || { echo -e "${COLOR}Failed to install dependencies${END}"; exit 1; }

elif [ "${OS}" = "ubuntu" ];then

apt update

apt -y install make gcc libjemalloc-dev libsystemd-dev &> /dev/null || { echo -e "${COLOR}Failed to install dependencies${END}"; exit 1; }

else

{ echo -e "${COLOR}This script supports only CentOS and Ubuntu${END}"; exit 1; }

fi

}

install_redis(){

if [ -d ${REDIS_PATH}/${REDIS_FILE%%.tar*} ];then

{ echo -e "${COLOR}Extraction aborted: ${REDIS_FILE%%.tar*} already exists under ${REDIS_PATH}!!!${END}"; exit 1; }

else

tar xfz ${REDIS_PATH}/${REDIS_FILE} -C ${REDIS_PATH}/ && echo -e "${COLOR}${REDIS_FILE} extracted to ${REDIS_PATH}${END}" || { echo -e "${COLOR}Extraction failed!!! Make sure ${REDIS_FILE} is in ${REDIS_PATH}${END}"; exit 1; }

cd ${REDIS_PATH}/${REDIS_FILE%%.tar*}

make -j $CPUS USE_SYSTEMD=yes PREFIX=$REDIS_APP_PATH install

[ $? -eq 0 ] && echo -e "${COLOR}Redis compiled successfully${END}" || { echo -e "${COLOR}Redis compilation failed, exiting!!!${END}"; exit 1; }

fi

}

redis_conf(){

mkdir -p $REDIS_APP_PATH/{etc,log,data,run} &>/dev/null

cp ${REDIS_PATH}/${REDIS_FILE%%.tar*}/redis.conf $REDIS_APP_PATH/etc/

cp ${REDIS_PATH}/${REDIS_FILE%%.tar*}/sentinel.conf $REDIS_APP_PATH/etc/

ln -s $REDIS_APP_PATH/bin/redis-* /usr/bin/

if id redis &>/dev/null ;then

echo -e "${COLOR}The redis account already exists${END}"

chown -R redis:redis /apps/redis/

else

useradd -r -s /sbin/nologin redis

echo -e "${COLOR}redis user created${END}"

chown -R redis:redis /apps/redis/

fi

cat > /lib/systemd/system/redis.service <<-EOF

[Unit]

Description=Redis persistent key-value database

After=network.target

[Service]

ExecStart=$REDIS_APP_PATH/bin/redis-server $REDIS_APP_PATH/etc/redis.conf --supervised systemd

ExecStop=/bin/kill -s QUIT \$MAINPID

Type=notify

User=redis

Group=redis

RuntimeDirectory=redis

RuntimeDirectoryMode=0755

LimitNOFILE=1000000

[Install]

WantedBy=multi-user.target

EOF

cat > /lib/systemd/system/redis-sentinel.service <<-EOF

[Unit]

Description=Redis Sentinel

After=network.target

[Service]

ExecStart=$REDIS_APP_PATH/bin/redis-sentinel $REDIS_APP_PATH/etc/sentinel.conf --supervised systemd

ExecStop=/bin/kill -s QUIT \$MAINPID

User=redis

Group=redis

RuntimeDirectory=redis

RuntimeDirectoryMode=0755

[Install]

WantedBy=multi-user.target

EOF

systemctl daemon-reload

systemctl enable --now redis &> /dev/null

if systemctl is-active redis &> /dev/null;then

echo -e "${COLOR}Redis installation complete${END}"

else

echo -e "${COLOR}Redis failed to start!!!${END}"

fi

}

main(){

install_rely

install_redis

redis_conf

}

main

2) Edit the Redis configuration file. <Entries marked in red are required for cluster mode>

grep -vE "^#|^$" /apps/redis/etc/redis.conf

bind 0.0.0.0

protected-mode yes

port 6379

tcp-backlog 511

timeout 0

tcp-keepalive 300

daemonize no

pidfile /apps/redis/run/redis_6379.pid

loglevel notice

logfile "/apps/redis/log/redis_6379.log"

databases 16

always-show-logo no

set-proc-title yes

proc-title-template "{title} {listen-addr} {server-mode}"

stop-writes-on-bgsave-error yes

rdbcompression yes

rdbchecksum yes

dbfilename 6379.rdb

rdb-del-sync-files no

dir "/apps/redis/data"

masterauth "123456"

replica-serve-stale-data yes

replica-read-only yes

repl-diskless-sync no

repl-diskless-sync-delay 40

repl-diskless-load disabled

repl-disable-tcp-nodelay yes

repl-backlog-size 1024mb

repl-backlog-ttl 3600

replica-priority 100

acllog-max-len 128

requirepass "123456"

rename-command FLUSHALL ""

maxclients 100000

lazyfree-lazy-eviction no

lazyfree-lazy-expire no

lazyfree-lazy-server-del no

replica-lazy-flush no

lazyfree-lazy-user-del no

lazyfree-lazy-user-flush no

oom-score-adj no

oom-score-adj-values 0 200 800

disable-thp yes

appendonly yes

appendfilename "6379.aof"

appendfsync everysec

no-appendfsync-on-rewrite yes

auto-aof-rewrite-percentage 100

auto-aof-rewrite-min-size 64mb

aof-load-truncated yes

aof-use-rdb-preamble yes

lua-time-limit 5000

cluster-enabled yes

cluster-config-file /apps/redis/etc/nodes-6379.conf

cluster-require-full-coverage no

slowlog-log-slower-than 10000

slowlog-max-len 128

latency-monitor-threshold 0

notify-keyspace-events ""

hash-max-ziplist-entries 512

hash-max-ziplist-value 64

list-max-ziplist-size -2

list-compress-depth 0

set-max-intset-entries 512

zset-max-ziplist-entries 128

zset-max-ziplist-value 64

hll-sparse-max-bytes 3000

stream-node-max-bytes 4096

stream-node-max-entries 100

activerehashing yes

client-output-buffer-limit normal 0 0 0

client-output-buffer-limit replica 256mb 64mb 60

client-output-buffer-limit pubsub 32mb 8mb 60

hz 10

dynamic-hz yes

aof-rewrite-incremental-fsync yes

rdb-save-incremental-fsync yes

jemalloc-bg-thread yes
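To double-check that a node's configuration really carries the cluster settings, the `cluster-*` directives can be pulled out of the file programmatically. The sketch below is a minimal parser under the assumption that each directive sits on one line as `name value`; the function name `cluster_directives` and the sample text are illustrative only.

```python
def cluster_directives(conf_text):
    """Return the cluster-* directives found in redis.conf text as a dict."""
    found = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, _, value = line.partition(" ")
        if name.startswith("cluster-"):
            found[name] = value.strip().strip('"')
    return found


sample = """
port 6379
cluster-enabled yes
cluster-config-file /apps/redis/etc/nodes-6379.conf
cluster-require-full-coverage no
"""
opts = cluster_directives(sample)
print(opts)
```

In practice the text would come from reading /apps/redis/etc/redis.conf on each node, and `opts["cluster-enabled"]` should be `yes` everywhere before the cluster is created.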

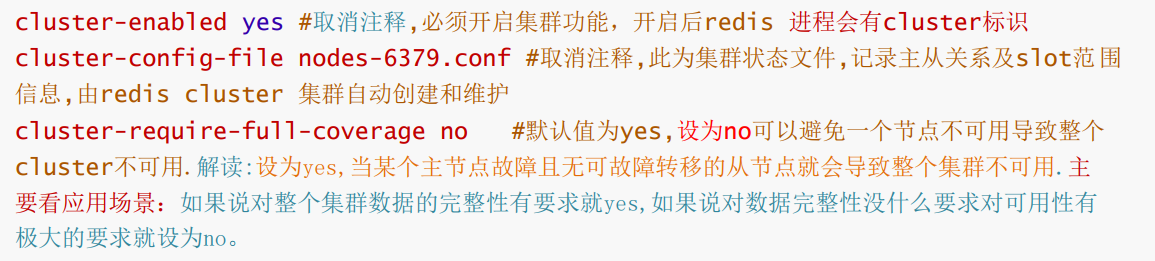

Cluster options explained:

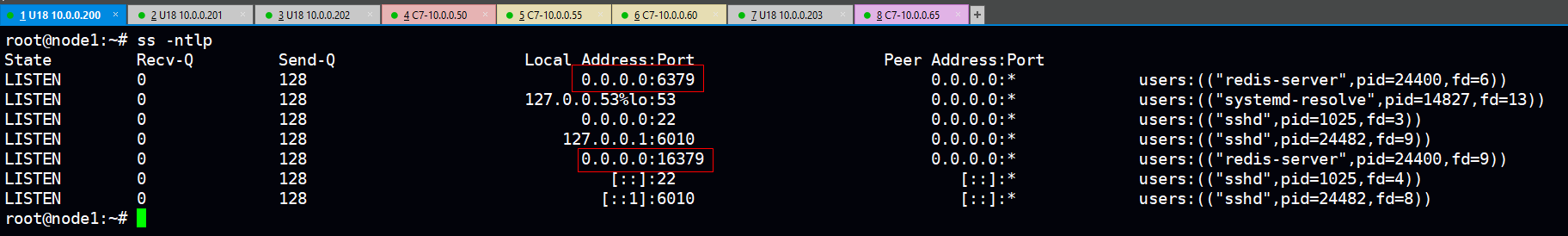

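3) Restart the Redis service on all nodes and check the cluster state.

1> On every node, the Redis service listens on port 6379 and the cluster bus on port 16379 (screenshots for each node omitted)

ss -ntlp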

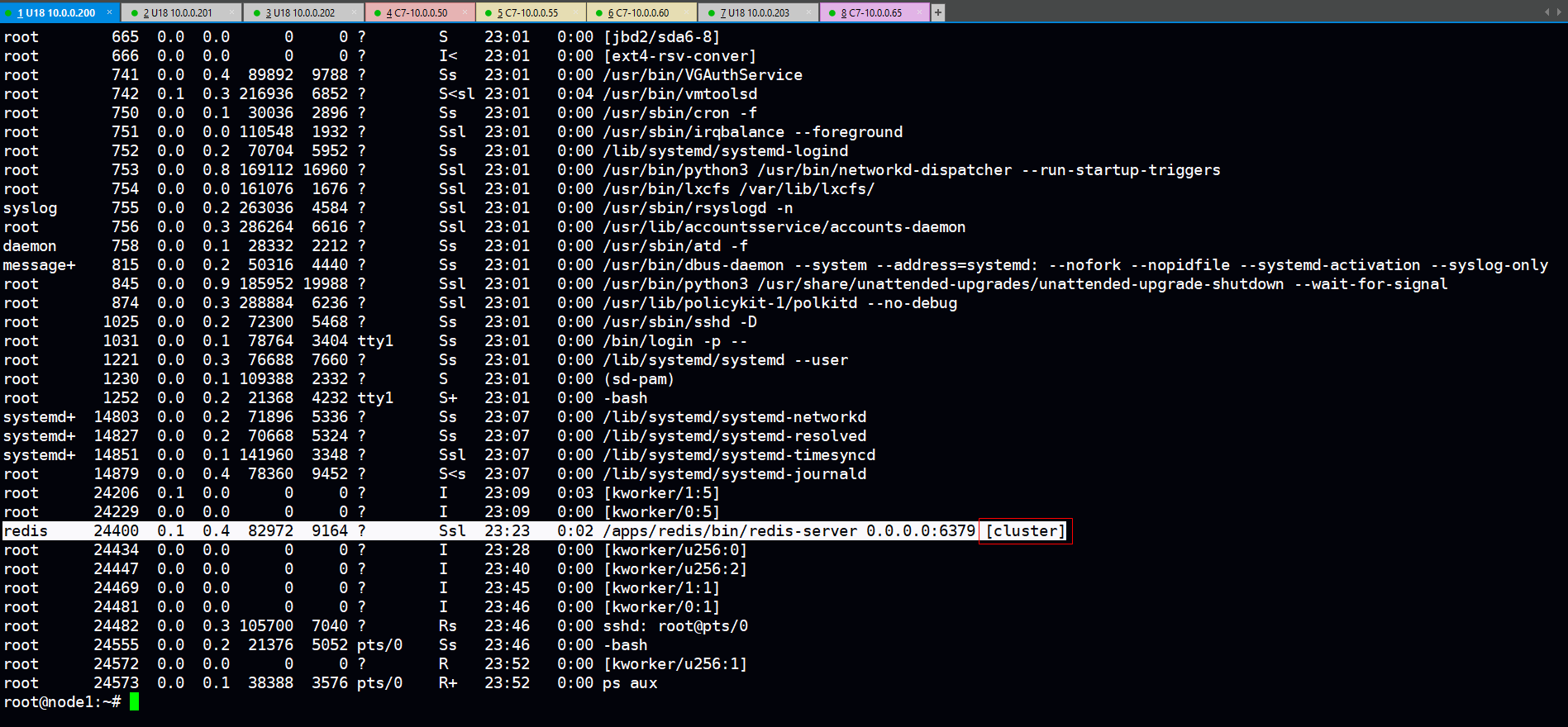

2>查看进程,可以看到redis进程上附带有[cluster]标签

ps aux

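2. Create the cluster: at least 3 master nodes are required, otherwise an error is reported!

1) Create the cluster with redis-cli. --cluster-replicas 1 assigns one slave to each master automatically. Note: list all masters first and the slaves after them; each master is paired with a slave automatically.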

redis-cli -a 123456 -p 6379 --no-auth-warning --cluster create 10.0.0.200:6379 10.0.0.201:6379 10.0.0.202:6379 10.0.0.50:6379 10.0.0.55:6379 10.0.0.60:6379 --cluster-replicas 1

>>> Performing hash slots allocation on 6 nodes...

Master[0] -> Slots 0 - 5460

Master[1] -> Slots 5461 - 10922

Master[2] -> Slots 10923 - 16383

Adding replica 10.0.0.55:6379 to 10.0.0.200:6379

Adding replica 10.0.0.60:6379 to 10.0.0.201:6379

Adding replica 10.0.0.50:6379 to 10.0.0.202:6379

M: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots:[0-5460] (5461 slots) master

M: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots:[5461-10922] (5462 slots) master

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

replicates eee564607734658f35c95c3561855ccb67f4c02b

S: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

replicates f402ab2424851da0673f85a2e77a437b66e1efbf

Can I set the above configuration? (type 'yes' to accept): yes #type yes to create the cluster

>>> Nodes configuration updated

>>> Assign a different config epoch to each node

>>> Sending CLUSTER MEET messages to join the cluster

Waiting for the cluster to join

..

>>> Performing Cluster Check (using node 10.0.0.200:6379)

M: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots:[0-5460] (5461 slots) master #5461 slots assigned

1 additional replica(s) #one slave assigned

S: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots: (0 slots) slave #slaves are not assigned slots

replicates eee564607734658f35c95c3561855ccb67f4c02b #node ID of master 10.0.0.200

S: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots: (0 slots) slave

replicates f402ab2424851da0673f85a2e77a437b66e1efbf #node ID of master 10.0.0.201

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230 #node ID of master 10.0.0.202

M: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration. #all nodes agree on the slot assignment

>>> Check for open slots... #check for open slots

>>> Check slots coverage... #check slot coverage

[OK] All 16384 slots covered. #all 16384 slots are assigned
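The slot ranges in the output above (0-5460, 5461-10922, 10923-16383) follow from dividing 16384 slots across the masters. The sketch below reproduces that split as I understand redis-cli's approach (a floating-point cursor with rounding); treat it as an approximation rather than the tool's exact code. The function name `initial_slot_ranges` is hypothetical.

```python
import math

TOTAL_SLOTS = 16384


def initial_slot_ranges(master_count):
    """Split the slot space into one contiguous range per master."""
    per_master = TOTAL_SLOTS / master_count
    ranges = []
    first = 0
    cursor = 0.0
    for i in range(master_count):
        # Round half up, like C's lround() for positive values.
        last = math.floor(cursor + per_master - 1 + 0.5)
        if last > TOTAL_SLOTS - 1 or i == master_count - 1:
            last = TOTAL_SLOTS - 1  # the last master takes the remainder
        last = max(last, first)
        ranges.append((first, last))
        first = last + 1
        cursor += per_master
    return ranges


ranges = initial_slot_ranges(3)
print(ranges)  # [(0, 5460), (5461, 10922), (10923, 16383)]
```

This also explains why the middle master ends up with 5462 slots while the other two get 5461.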

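Resulting master/slave pairs:

master: 10.0.0.200 --- slave: 10.0.0.55

master: 10.0.0.201 --- slave: 10.0.0.60

master: 10.0.0.202 --- slave: 10.0.0.50

Alternatively, the master/slave relationships can be viewed with the client tool using this command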

redis-cli -a 123456 -p 6379 --no-auth-warning -c cluster nodes

80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379@16379 slave eee564607734658f35c95c3561855ccb67f4c02b 0 1634920747030 1 connected

ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379@16379 slave f402ab2424851da0673f85a2e77a437b66e1efbf 0 1634920748035 2 connected

6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379@16379 master - 0 1634920747000 3 connected 10923-16383

efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379@16379 slave 6d0b3d42a216735fef0695014d404cf2f5a83230 0 1634920749041 3 connected

eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379@16379 myself,master - 0 1634920747000 1 connected 0-5460

f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379@16379 master - 0 1634920746026 2 connected 5461-10922
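The master/slave pairs can also be derived from the `cluster nodes` output by script. The sketch below assumes the field order seen above (node ID, address, flags, master ID, ...); the node IDs in the sample are shortened placeholders, not the real 40-character IDs, and the function name `replication_map` is hypothetical.

```python
def replication_map(cluster_nodes_text):
    """Map each master address to the list of its slaves' addresses."""
    addr_by_id = {}
    slaves = []  # (slave_addr, master_id)
    for line in cluster_nodes_text.strip().splitlines():
        fields = line.split()
        if not fields:
            continue
        node_id, addr, flags, master_id = fields[:4]
        addr = addr.split("@")[0]  # drop the cluster bus port
        addr_by_id[node_id] = addr
        if "slave" in flags.split(","):
            slaves.append((addr, master_id))
    result = {}
    for slave_addr, master_id in slaves:
        result.setdefault(addr_by_id[master_id], []).append(slave_addr)
    return result


sample = """
80ad 10.0.0.55:6379@16379 slave eee5 0 1634920747030 1 connected
ef9f 10.0.0.60:6379@16379 slave f402 0 1634920748035 2 connected
eee5 10.0.0.200:6379@16379 myself,master - 0 1634920747000 1 connected 0-5460
f402 10.0.0.201:6379@16379 master - 0 1634920746026 2 connected 5461-10922
"""
pairs = replication_map(sample)
print(pairs)
```

Feeding it the full command output should give the same three pairs listed earlier.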

2) Check the cluster state

1> Show the Redis Cluster state

redis-cli -a 123456 -p 6379 --no-auth-warning -c cluster info

cluster_state:ok

cluster_slots_assigned:16384 #number of assigned slots

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6 #number of nodes

cluster_size:3 #number of masters serving slots (master-slave groups)

cluster_current_epoch:6

cluster_my_epoch:1

cluster_stats_messages_ping_sent:2210

cluster_stats_messages_pong_sent:2276

cluster_stats_messages_sent:4486

cluster_stats_messages_ping_received:2271

cluster_stats_messages_pong_received:2210

cluster_stats_messages_meet_received:5

cluster_stats_messages_received:4486

2> Show the state of the master-slave groups in the cluster (any cluster node can be queried)

root@node1:~# redis-cli -a 123456 --no-auth-warning -c --cluster info 10.0.0.201:6379

10.0.0.201:6379 (f402ab24...) -> 0 keys | 5462 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 0 keys | 5461 slots | 1 slaves.

10.0.0.200:6379 (eee56460...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

root@node1:~#

3> Check the cluster nodes

root@node1:~# redis-cli -a 123456 --no-auth-warning -c --cluster check 10.0.0.201:6379 #any cluster node address works

10.0.0.201:6379 (f402ab24...) -> 0 keys | 5462 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 0 keys | 5461 slots | 1 slaves.

10.0.0.200:6379 (eee56460...) -> 0 keys | 5461 slots | 1 slaves.

[OK] 0 keys in 3 masters.

0.00 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.201:6379)

M: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots: (0 slots) slave

replicates f402ab2424851da0673f85a2e77a437b66e1efbf

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

M: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots: (0 slots) slave

replicates eee564607734658f35c95c3561855ccb67f4c02b

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

3. Write data to the cluster's master nodes

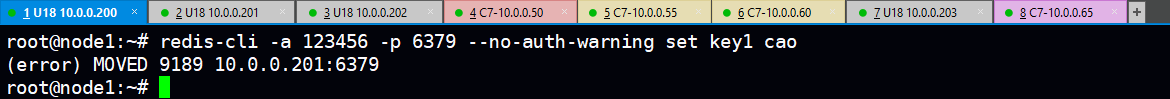

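1) Writing a key through a master with the client tool returns an error. The message says the key belongs to slot 9189 and must be written on the node that owns that slot: 10.0.0.201:6379

Cause: redis-cli does not follow cluster redirections by default, so when a key hashes to a slot owned by another node, the client cannot follow the redirect from the current node to the owning node.

Fix: pass the -c option to enable cluster redirection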

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning -c set key1 cao

OK

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning -c get key1

"cao"

2) Show the slot for the key (the same value as the one reported earlier without -c)

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning -c cluster keyslot key1

(integer) 9189
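The slot number comes from a CRC16 of the key taken modulo 16384. The following is a minimal sketch of that calculation, assuming the CRC16 variant is XMODEM (polynomial 0x1021, initial value 0) and including the hash-tag rule, under which only the text between the first `{` and the following `}` is hashed. The function names are illustrative.

```python
def crc16_xmodem(data: bytes) -> int:
    """Bitwise CRC16 with polynomial 0x1021 and initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x1021) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def key_slot(key: str) -> int:
    """Return the cluster slot (0-16383) for a key."""
    raw = key.encode()
    start = raw.find(b"{")
    if start != -1:
        end = raw.find(b"}", start + 1)
        if end != -1 and end != start + 1:
            raw = raw[start + 1:end]  # hash only the non-empty tag
    return crc16_xmodem(raw) % 16384


print(key_slot("key1"))  # the transcript above reports 9189 for this key
print(key_slot("{user1000}.following") == key_slot("{user1000}.followers"))
```

Keys that share a hash tag land in the same slot, which is what makes multi-key operations on them possible in a cluster.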

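3) Write keys in bulk to the cluster with a Python test script

Script logic: import the Redis cluster library, list the node IPs and ports plus the authentication password, then write 10,000 keys in a for loop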

apt -y install python3

apt install python3-pip

pip3 install redis-py-cluster

vim redis_cluster_test.py

#!/usr/bin/env python3

from rediscluster import RedisCluster

startup_nodes = [

    {"host":"10.0.0.200", "port":6379},

    {"host":"10.0.0.201", "port":6379},

    {"host":"10.0.0.202", "port":6379},

    {"host":"10.0.0.50", "port":6379},

    {"host":"10.0.0.55", "port":6379},

    {"host":"10.0.0.60", "port":6379}

]

redis_conn= RedisCluster(startup_nodes=startup_nodes,password='123456', decode_responses=True)

for i in range(0, 10000):

    redis_conn.set('key'+str(i),'value'+str(i))

    print('key'+str(i)+':',redis_conn.get('key'+str(i)))

<Run the script to write the keys>

chmod +x redis_cluster_test.py

root@node1:~# python3 redis_cluster_test.py

......

.........

key9996: value9996

key9997: value9997

key9998: value9998

key9999: value9999

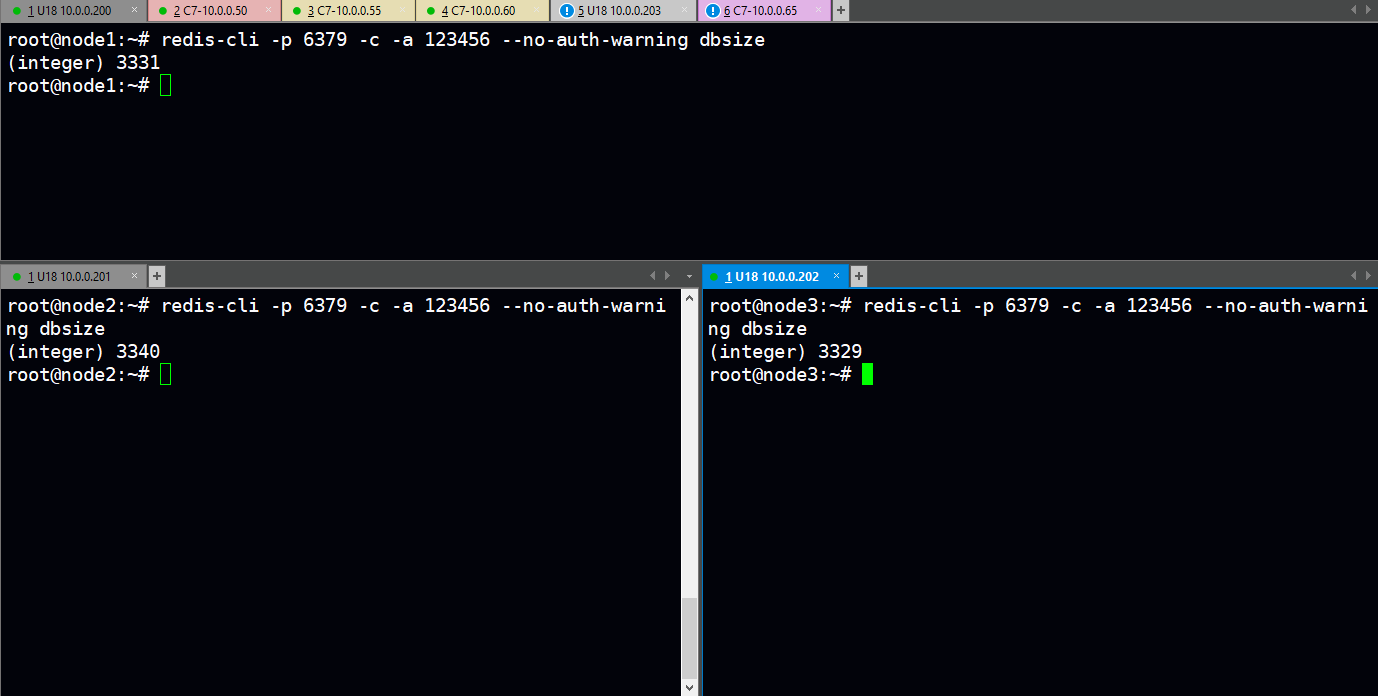

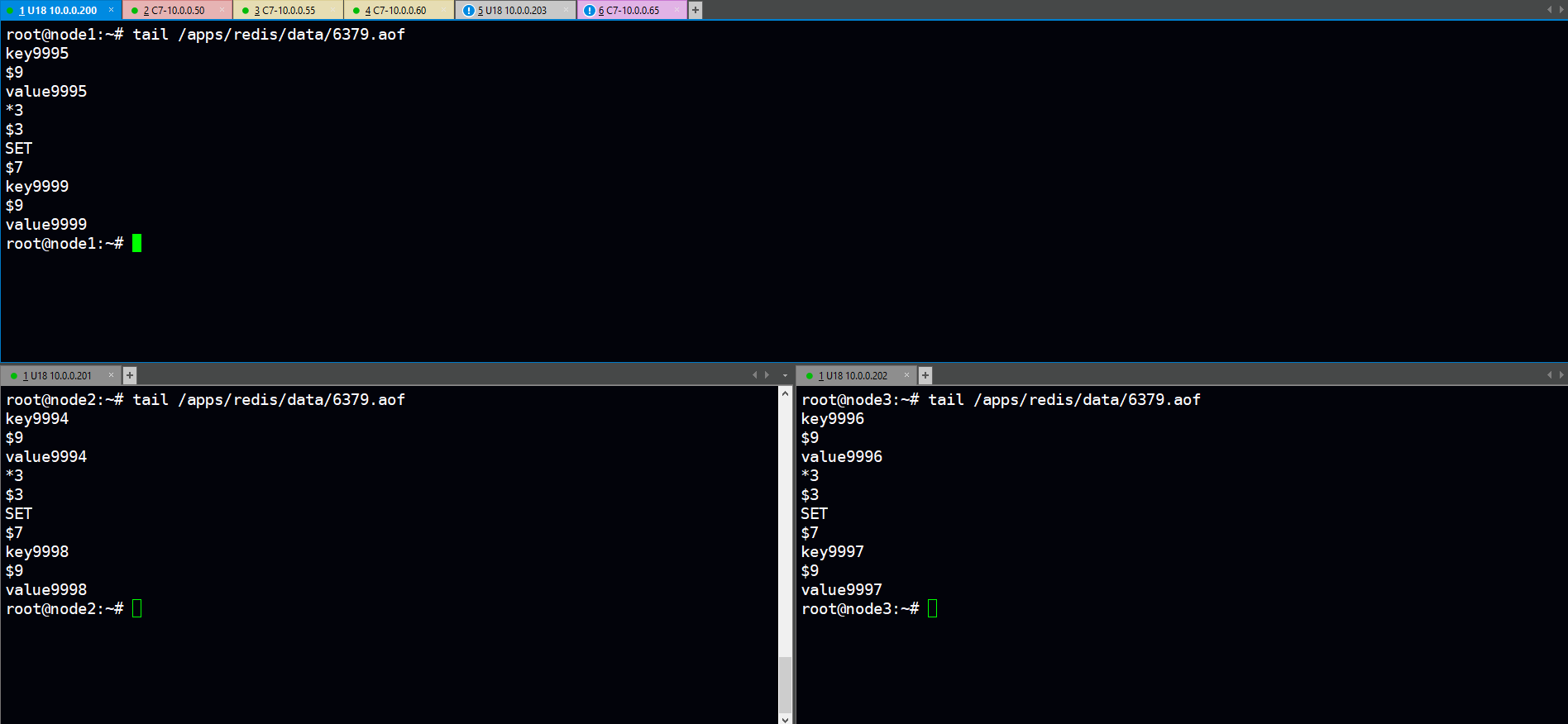

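4) Verify the data:

1> Use dbsize to count how many keys each of the three masters received

2> Inspect the AOF files: the writes for the 10,000 keys are recorded across the three masters, and the counts show that the data ends up split roughly evenly among them.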
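The expected split can be estimated offline, without touching the cluster, by hashing the same key names the test script wrote. This sketch assumes that `binascii.crc_hqx` with an initial value of 0 matches the CRC16 variant Redis Cluster uses, and it takes the slot ranges from the cluster created earlier; none of the keys contain hash tags.

```python
import binascii
from collections import Counter

# Slot ranges of the three original masters, from the cluster creation output.
MASTERS = {
    "10.0.0.200:6379": range(0, 5461),
    "10.0.0.201:6379": range(5461, 10923),
    "10.0.0.202:6379": range(10923, 16384),
}


def owner_of(key):
    """Return the address of the master whose slot range contains the key."""
    slot = binascii.crc_hqx(key.encode(), 0) % 16384
    for addr, slots in MASTERS.items():
        if slot in slots:
            return addr
    raise ValueError("slot %d is not covered" % slot)


counts = Counter(owner_of("key" + str(i)) for i in range(10000))
for addr, n in sorted(counts.items()):
    print(addr, n)
```

The per-master counts printed here can be compared with the dbsize results or with the key counts shown by `--cluster check`.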

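4. Simulate a failover: the corresponding slave is promoted to master

systemctl stop redis.service

tail -f /apps/redis/log/redis_6379.log #no log output

1) node1 (10.0.0.200) is now flagged as fail, and 10.0.0.55, previously its slave, has been promoted to master.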

root@node2:~# redis-cli -a 123456 -p 6379 --no-auth-warning cluster nodes

eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379@16379 master,fail - 1634967298739 1634967294630 1 disconnected

f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379@16379 myself,slave ef9f73ee3a6a17063023d1c48f722df6429714fd 0 1634968359000 7 connected

80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379@16379 master - 0 1634968361591 8 connected 0-5460

ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379@16379 master - 0 1634968359000 7 connected 5461-10922

6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379@16379 master - 0 1634968358000 3 connected 10923-16383

efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379@16379 slave 6d0b3d42a216735fef0695014d404cf2f5a83230 0 1634968360562 3 connected

2) On node5 (10.0.0.55), the role is now master.

[root@node5 ~]# redis-cli -a 123456 -p 6379 --no-auth-warning role

1) "master"

2) (integer) 42

3) (empty array)

3) Check all nodes: after the failover, the slots of master 10.0.0.200 have been taken over by its former slave 10.0.0.55

[root@node5 ~]# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.50:6379

Could not connect to Redis at 10.0.0.200:6379: Connection refused

10.0.0.202:6379 (6d0b3d42...) -> 3329 keys | 5461 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 3331 keys | 5461 slots | 0 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3340 keys | 5462 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.50:6379)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

4) Bring node1 (10.0.0.200) back

1> node1 automatically rejoins the cluster as a slave

root@node2:~# redis-cli -a 123456 -p 6379 --no-auth-warning cluster nodes

eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379@16379 slave 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 0 1634969583961 8 connected

f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379@16379 myself,slave ef9f73ee3a6a17063023d1c48f722df6429714fd 0 1634969577000 7 connected

80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379@16379 master - 0 1634969581910 8 connected 0-5460

ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379@16379 master - 0 1634969581000 7 connected 5461-10922

6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379@16379 master - 0 1634969582000 3 connected 10923-16383

efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379@16379 slave 6d0b3d42a216735fef0695014d404cf2f5a83230 0 1634969583000 3 connected

2> node1's role is now slave (command run on node1)

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning role

1) "slave"

2) "10.0.0.55"

3) (integer) 6379

4) "connected"

5) (integer) 420

3> Check again: node1 now appears in the output

[root@node5 ~]# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.50:6379

10.0.0.202:6379 (6d0b3d42...) -> 3329 keys | 5461 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 3331 keys | 5461 slots | 1 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3340 keys | 5462 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.50:6379)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

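Redis Cluster node maintenance:

Cluster maintenance: dynamic scale-out

Hands-on case:

Because the business is growing rapidly, the existing three-master, three-slave Redis Cluster may no longer keep up with the concurrent write load. The company has urgently purchased two servers, 10.0.0.203 and 10.0.0.65, which must be added to the cluster dynamically without disrupting the business or losing data.

1. Preparing to add the new nodes (see the architecture diagram)

Note: the new Redis nodes must run the same Redis version and use the same configuration as the existing nodes. Start both new nodes; they will become one master and one slave.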

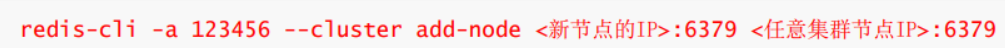

1) Add the master node (command format):

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster add-node 10.0.0.203:6379 10.0.0.200:6379

>>> Adding node 10.0.0.203:6379 to cluster 10.0.0.200:6379

>>> Performing Cluster Check (using node 10.0.0.200:6379)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.0.0.203:6379 to make it join the cluster.

[OK] New node added correctly.

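According to the output, node 10.0.0.203 has joined the cluster

2) Run check to show the cluster state after adding the node: the newly added node7 (10.0.0.203) is a master by default and has no slots assigned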

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.203:6379

10.0.0.203:6379 (738ce07c...) -> 0 keys | 0 slots | 0 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3340 keys | 5462 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 3329 keys | 5461 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 3331 keys | 5461 slots | 1 slaves.

[OK] 10000 keys in 4 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots: (0 slots) master

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

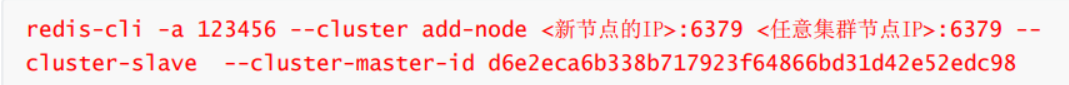

3) Add the slave node (command format):

[root@node8 ~]# redis-cli -a 123456 --cluster add-node 10.0.0.65:6379 10.0.0.203:6379 --cluster-slave --cluster-master-id 738ce07cbd707de400bf15e168e062389ab595e7

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Adding node 10.0.0.65:6379 to cluster 10.0.0.203:6379

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots: (0 slots) master

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

>>> Send CLUSTER MEET to node 10.0.0.65:6379 to make it join the cluster.

Waiting for the cluster to join

>>> Configure node as replica of 10.0.0.203:6379.

[OK] New node added correctly.

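According to the output, node 10.0.0.65 has joined the cluster as a slave of node 10.0.0.203

4) Run check again to get the latest cluster information: node8 (10.0.0.65) and node7 (10.0.0.203) are indeed slave and master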

[root@node8 ~]# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.65:6379

10.0.0.203:6379 (738ce07c...) -> 0 keys | 0 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 3331 keys | 5461 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 3329 keys | 5461 slots | 1 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3340 keys | 5462 slots | 1 slaves.

[OK] 10000 keys in 4 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.65:6379)

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots: (0 slots) master

1 additional replica(s)

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

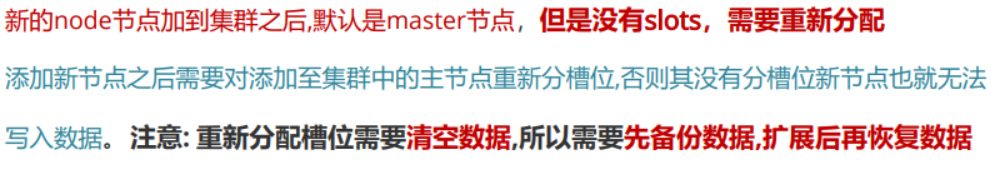

2. Assign slots to the new master 10.0.0.203

1) Assign them with the reshard command

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster reshard 10.0.0.203:6379

>>> Performing Cluster Check (using node 10.0.0.65:6379)

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots: (0 slots) master

1 additional replica(s)

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-5460] (5461 slots) master

1 additional replica(s)

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[10923-16383] (5461 slots) master

1 additional replica(s)

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[5461-10922] (5462 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 4096 #number of slots to assign = 16384 / number of masters (16384 / 4 = 4096)

What is the receiving node ID? 738ce07cbd707de400bf15e168e062389ab595e7 #receiving node for the slots (the ID of the new master that joined the cluster)

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: all #which masters give up slots to the new master; all takes them from every current master in the cluster

......

Do you want to proceed with the proposed reshard plan (yes/no)? yes #confirm the plan

......

Moving slot 12280 from 10.0.0.55:6379 to 10.0.0.203:6379: .

Moving slot 12281 from 10.0.0.55:6379 to 10.0.0.203:6379: .

Moving slot 12282 from 10.0.0.55:6379 to 10.0.0.203:6379:

Moving slot 12283 from 10.0.0.55:6379 to 10.0.0.203:6379: ..

Moving slot 12284 from 10.0.0.55:6379 to 10.0.0.203:6379:

Moving slot 12285 from 10.0.0.55:6379 to 10.0.0.203:6379: .

Moving slot 12286 from 10.0.0.55:6379 to 10.0.0.203:6379:

Moving slot 12287 from 10.0.0.55:6379 to 10.0.0.203:6379: ..

2) Run check again to see the cluster state: the new master 10.0.0.203 now owns 4096 slots (in non-contiguous ranges)

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.203:6379

10.0.0.203:6379 (738ce07c...) -> 2474 keys | 4096 slots | 1 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 2515 keys | 4096 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 2500 keys | 4096 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 2511 keys | 4096 slots | 1 slaves.

[OK] 10000 keys in 4 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
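The three ranges that 10.0.0.203 received (1365, 1366 and 1365 slots) can be reproduced with a proportional split across the source masters. The sketch below follows my understanding of how redis-cli builds its reshard plan when `all` is chosen: sources are sorted by slot count, the largest rounds its share up and the rest round down. Treat it as an approximation; the function name `reshard_plan` is hypothetical.

```python
import math


def reshard_plan(source_slots, num_to_move):
    """Return how many slots each source master gives up.

    source_slots: dict mapping master address to the slots it owns.
    """
    total = sum(source_slots.values())
    # Largest source first; it rounds up, the others round down.
    ordered = sorted(source_slots.items(), key=lambda kv: kv[1], reverse=True)
    plan = {}
    for i, (addr, owned) in enumerate(ordered):
        share = num_to_move * owned / total
        plan[addr] = math.ceil(share) if i == 0 else math.floor(share)
    return plan


plan = reshard_plan(
    {"10.0.0.55:6379": 5461, "10.0.0.60:6379": 5462, "10.0.0.202:6379": 5461},
    4096,
)
print(plan)
```

With these inputs 10.0.0.60 gives up 1366 slots and the other two give up 1365 each, which matches the ranges 5461-6826, 0-1364 and 10923-12287 in the check output.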

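Cluster maintenance: dynamic scale-in

Hands-on case:

The 10.0.0.203 server has been in service for more than three years, is past the vendor's warranty, and is raising disk alerts. After the operations architect submitted a plan and discussed it with the developers, it was decided to temporarily take master 10.0.0.203 and its slave 10.0.0.65 offline from the current 8-server Redis cluster. The concurrent write performance of the remaining three master servers is enough to support business needs for the next 1-2 years.

Notes:

1) Before a master can be removed from the cluster, all of its slots (and the keys in them) must have been migrated away; otherwise the removal fails with an error and is aborted.

2) When removing nodes: if the master is removed first, its slave automatically picks another master to replicate from

3) Each reshard has exactly one receiving node. Moving all of the departing node's slots to a single receiver would be unbalanced, so run one reshard per receiving node to spread the slots evenly

(Formula: slots on the departing node / number of remaining masters = slots per reshard)

Receiving node: the node that receives slots

Source node: the node that gives up slots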
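The formula above rarely divides evenly, so a few receivers need one extra slot. A minimal sketch of that arithmetic, with a hypothetical helper name `split_evenly`:

```python
def split_evenly(total_slots, receivers):
    """Divide total_slots among receivers, spreading the remainder one by one."""
    base, extra = divmod(total_slots, len(receivers))
    return {
        addr: base + (1 if i < extra else 0)
        for i, addr in enumerate(receivers)
    }


shares = split_evenly(
    4096, ["10.0.0.55:6379", "10.0.0.60:6379", "10.0.0.202:6379"]
)
print(shares)
```

For the 4096 slots on 10.0.0.203 this gives 1366, 1365 and 1365. Each value is the number to enter at the "How many slots do you want to move" prompt of one reshard run; the walkthrough uses 1356 for its first run, so its later runs have to move correspondingly more to empty the node.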

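1. Migrate the departing master's slots to the other masters. Per the formula, master 10.0.0.203 has to split its slots among the cluster's three remaining masters, so three reshard runs are needed (run check after each one to confirm; the output is omitted here)

1) First reshard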

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster reshard 10.0.0.203:6379

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots:[0-1364],[5461-6826],[10923-12287] (4096 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[1365-5460] (4096 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1356 #number of slots to move away

What is the receiving node ID? 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 #receiving node: the node that takes the slots

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 738ce07cbd707de400bf15e168e062389ab595e7 #source node: the node giving up slots (here the departing node 10.0.0.203)

Source node #2: done #any further source node? done means no (only master 10.0.0.203 is going offline)

................

...................

Moving slot 1349 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1350 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1351 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1352 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1353 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1354 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 1355 from 738ce07cbd707de400bf15e168e062389ab595e7

Do you want to proceed with the proposed reshard plan (yes/no)? yes #confirm the plan? yes

Moving slot 1352 from 10.0.0.203:6379 to 10.0.0.55:6379:

Moving slot 1353 from 10.0.0.203:6379 to 10.0.0.55:6379:

Moving slot 1354 from 10.0.0.203:6379 to 10.0.0.55:6379: .

Moving slot 1355 from 10.0.0.203:6379 to 10.0.0.55:6379: .
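The interactive prompts above can also be answered on the command line. The sketch below only builds and prints the command (a dry run) so it can be reviewed before use; `reshard_cmd` is a hypothetical helper name. The flags `--cluster-from`, `--cluster-to`, `--cluster-slots`, and `--cluster-yes` are redis-cli options; authentication is left out here and would still have to be supplied, for example with `-a` as in the transcript.

```shell
#!/bin/bash
# Build (but do not run) a non-interactive reshard command.
# Arguments: entry node, source node ID, receiving node ID, slot count.
# (reshard_cmd is a hypothetical helper used for illustration only.)
reshard_cmd() {
    local entry=$1 from=$2 to=$3 slots=$4
    printf 'redis-cli --cluster reshard %s --cluster-from %s --cluster-to %s --cluster-slots %s --cluster-yes\n' \
        "$entry" "$from" "$to" "$slots"
}

# First run from the walkthrough: 1356 slots from 10.0.0.203 to 10.0.0.55.
reshard_cmd 10.0.0.203:6379 \
    738ce07cbd707de400bf15e168e062389ab595e7 \
    80ad4c4328056af48c47b14b43ff58cbb5fd77b2 \
    1356
```

Printing the command first keeps the node IDs and slot count visible for a second look, which matters because a reshard moves live data.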

2) Second reshard

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster reshard 10.0.0.203:6379

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots:[1356-1364],[5461-6826],[10923-12287] (2740 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[12288-16383] (4096 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-1355],[1365-5460] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1356

What is the receiving node ID? 6d0b3d42a216735fef0695014d404cf2f5a83230

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 738ce07cbd707de400bf15e168e062389ab595e7

Source node #2: done

..............

................

Moving slot 6802 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 6803 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 6804 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 6805 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 6806 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 6807 from 738ce07cbd707de400bf15e168e062389ab595e7

Do you want to proceed with the proposed reshard plan (yes/no)? yes

3) Third reshard (on the last run, assign all of the departing node's remaining slots to the last cluster master)

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster reshard 10.0.0.203:6379

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots:[6808-6826],[10923-12287] (1384 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates 738ce07cbd707de400bf15e168e062389ab595e7

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6827-10922] (4096 slots) master

1 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[1356-1364],[5461-6807],[12288-16383] (5452 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-1355],[1365-5460] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

How many slots do you want to move (from 1 to 16384)? 1384

What is the receiving node ID? ef9f73ee3a6a17063023d1c48f722df6429714fd

Please enter all the source node IDs.

Type 'all' to use all the nodes as source nodes for the hash slots.

Type 'done' once you entered all the source nodes IDs.

Source node #1: 738ce07cbd707de400bf15e168e062389ab595e7

Source node #2: done

Moving slot 12282 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 12283 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 12284 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 12285 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 12286 from 738ce07cbd707de400bf15e168e062389ab595e7

Moving slot 12287 from 738ce07cbd707de400bf15e168e062389ab595e7

Do you want to proceed with the proposed reshard plan (yes/no)? yes

.............

...............

Moving slot 12283 from 10.0.0.203:6379 to 10.0.0.60:6379: .

Moving slot 12284 from 10.0.0.203:6379 to 10.0.0.60:6379:

Moving slot 12285 from 10.0.0.203:6379 to 10.0.0.60:6379: .

Moving slot 12286 from 10.0.0.203:6379 to 10.0.0.60:6379:

Moving slot 12287 from 10.0.0.203:6379 to 10.0.0.60:6379:

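4) Once all slots of the departing node 10.0.0.203 have been moved to the cluster masters, run check: every remaining master has received its share of the departing node's slots.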

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.203:6379

10.0.0.203:6379 (738ce07c...) -> 0 keys | 0 slots | 0 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3357 keys | 5480 slots | 2 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 3316 keys | 5452 slots | 1 slaves.

10.0.0.55:6379 (80ad4c43...) -> 3327 keys | 5452 slots | 1 slaves.

[OK] 10000 keys in 4 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.203:6379)

M: 738ce07cbd707de400bf15e168e062389ab595e7 10.0.0.203:6379

slots: (0 slots) master #the departing master has handed off every slot; 0 remain

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6808-12287] (5480 slots) master

2 additional replica(s)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[1356-1364],[5461-6807],[12288-16383] (5452 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-1355],[1365-5460] (5452 slots) master

1 additional replica(s)

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
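Before deleting the master it is worth confirming mechanically that it owns no slots. A minimal sketch, assuming the summary-line layout shown in the check output above (`<ip:port> (<id>...) -> N keys | N slots | N slaves.`); `slots_owned` is a hypothetical helper and the sample text is copied from that output.

```shell
#!/bin/bash
# Read `redis-cli --cluster check` output on stdin and print the slot count
# from the summary line of the given node (for example 10.0.0.203:6379).
# (slots_owned is a hypothetical helper; it relies on the summary format above.)
slots_owned() {
    awk -v node="$1" '$1 == node && $3 == "->" {
        for (i = 1; i <= NF; i++) if ($i == "slots") { print $(i - 1); exit }
    }'
}

# Sample summary lines taken from the check output in this section.
sample='10.0.0.203:6379 (738ce07c...) -> 0 keys | 0 slots | 0 slaves.
10.0.0.60:6379 (ef9f73ee...) -> 3357 keys | 5480 slots | 2 slaves.'

if [ "$(printf '%s\n' "$sample" | slots_owned 10.0.0.203:6379)" = "0" ]; then
    echo "10.0.0.203:6379 owns no slots; del-node can proceed"
fi
```

Against a live cluster the sample text would be replaced by piping the real check output into the function.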

2. Remove the servers from the cluster

1) Formally remove node 10.0.0.203 from the cluster

root@node7:~# redis-cli -a 123456 --cluster del-node 10.0.0.203:6379 738ce07cbd707de400bf15e168e062389ab595e7

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Removing node 738ce07cbd707de400bf15e168e062389ab595e7 from cluster 10.0.0.203:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

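2) Run check again: node 10.0.0.203 is no longer in the cluster. In line with the second of the notes above, its former replica 10.0.0.65 has picked a master in the cluster, 10.0.0.60, as its new master. (The check output from before the del-node already shows 10.0.0.65 replicating 10.0.0.60, so the switch happened once 10.0.0.203 held no slots.)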

root@node7:~# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.201:6379

10.0.0.55:6379 (80ad4c43...) -> 3327 keys | 5452 slots | 1 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3357 keys | 5480 slots | 2 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 3316 keys | 5452 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.201:6379)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

S: 9910577a5cbf9d59c583e20d834c458f18c2fbbe 10.0.0.65:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-1355],[1365-5460] (5452 slots) master

1 additional replica(s)

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6808-12287] (5480 slots) master

2 additional replica(s)

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[1356-1364],[5461-6807],[12288-16383] (5452 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.
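To see which replicas hang off a given master, the `cluster nodes` output can be filtered by the master ID column. A small sketch, assuming the standard field order of `cluster nodes` (ID, address, flags, master ID, ...); `replicas_of` is a hypothetical helper and the sample lines are copied from the `cluster nodes` output of this walkthrough.

```shell
#!/bin/bash
# Read `cluster nodes` output on stdin and print the ip:port of every
# replica whose master ID (4th field) matches the given node ID.
# (replicas_of is a hypothetical helper for illustration.)
replicas_of() {
    awk -v master="$1" '$3 ~ /slave/ && $4 == master {
        split($2, addr, "@")   # drop the @cluster-bus-port suffix
        print addr[1]
    }'
}

nodes='ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379@16379 master - 0 1634981704187 12 connected 6808-12287
f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379@16379 slave ef9f73ee3a6a17063023d1c48f722df6429714fd 0 1634981703000 12 connected
efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379@16379 slave 6d0b3d42a216735fef0695014d404cf2f5a83230 0 1634981705213 11 connected'

printf '%s\n' "$nodes" | replicas_of ef9f73ee3a6a17063023d1c48f722df6429714fd
```

Running this before a del-node makes it clear which replicas will need a new master afterwards.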

3) 10.0.0.65 is being removed as well. It is a replica with no slots assigned, so it can be deleted directly with del-node.

[root@node8 ~]# redis-cli -a 123456 --cluster del-node 10.0.0.65:6379 9910577a5cbf9d59c583e20d834c458f18c2fbbe

Warning: Using a password with '-a' or '-u' option on the command line interface may not be safe.

>>> Removing node 9910577a5cbf9d59c583e20d834c458f18c2fbbe from cluster 10.0.0.65:6379

>>> Sending CLUSTER FORGET messages to the cluster...

>>> Sending CLUSTER RESET SOFT to the deleted node.

4) After deleting the replica 10.0.0.65, run check once more: 10.0.0.65 is gone.

[root@node8 ~]# redis-cli -a 123456 --no-auth-warning --cluster check 10.0.0.200:6379

10.0.0.55:6379 (80ad4c43...) -> 3327 keys | 5452 slots | 1 slaves.

10.0.0.60:6379 (ef9f73ee...) -> 3357 keys | 5480 slots | 1 slaves.

10.0.0.202:6379 (6d0b3d42...) -> 3316 keys | 5452 slots | 1 slaves.

[OK] 10000 keys in 3 masters.

0.61 keys per slot on average.

>>> Performing Cluster Check (using node 10.0.0.200:6379)

S: eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379

slots: (0 slots) slave

replicates 80ad4c4328056af48c47b14b43ff58cbb5fd77b2

M: 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379

slots:[0-1355],[1365-5460] (5452 slots) master

1 additional replica(s)

M: ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379

slots:[6808-12287] (5480 slots) master

1 additional replica(s)

S: f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379

slots: (0 slots) slave

replicates ef9f73ee3a6a17063023d1c48f722df6429714fd

M: 6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379

slots:[1356-1364],[5461-6807],[12288-16383] (5452 slots) master

1 additional replica(s)

S: efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379

slots: (0 slots) slave

replicates 6d0b3d42a216735fef0695014d404cf2f5a83230

[OK] All nodes agree about slots configuration.

>>> Check for open slots...

>>> Check slots coverage...

[OK] All 16384 slots covered.

5) On any cluster node, view the cluster node information <it confirms that only three master-replica pairs remain>

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning cluster nodes

80ad4c4328056af48c47b14b43ff58cbb5fd77b2 10.0.0.55:6379@16379 master - 0 1634981706231 10 connected 0-1355 1365-5460

ef9f73ee3a6a17063023d1c48f722df6429714fd 10.0.0.60:6379@16379 master - 0 1634981704187 12 connected 6808-12287

f402ab2424851da0673f85a2e77a437b66e1efbf 10.0.0.201:6379@16379 slave ef9f73ee3a6a17063023d1c48f722df6429714fd 0 1634981703000 12 connected

eee564607734658f35c95c3561855ccb67f4c02b 10.0.0.200:6379@16379 myself,slave 80ad4c4328056af48c47b14b43ff58cbb5fd77b2 0 1634981704000 10 connected

6d0b3d42a216735fef0695014d404cf2f5a83230 10.0.0.202:6379@16379 master - 0 1634981705000 11 connected 1356-1364 5461-6807 12288-16383

efdeaf802d4a0bd6e60105377a1b0bba6e352d6e 10.0.0.50:6379@16379 slave 6d0b3d42a216735fef0695014d404cf2f5a83230 0 1634981705213 11 connected

root@node1:~# redis-cli -a 123456 -p 6379 --no-auth-warning cluster info

cluster_state:ok

cluster_slots_assigned:16384

cluster_slots_ok:16384

cluster_slots_pfail:0

cluster_slots_fail:0

cluster_known_nodes:6

cluster_size:3

cluster_current_epoch:12

cluster_my_epoch:10

cluster_stats_messages_ping_sent:12359

cluster_stats_messages_pong_sent:12112

cluster_stats_messages_update_sent:11

cluster_stats_messages_sent:24482

cluster_stats_messages_ping_received:12110

cluster_stats_messages_pong_received:20551

cluster_stats_messages_meet_received:2

cluster_stats_messages_update_received:16

cluster_stats_messages_received:3267
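The few `cluster info` fields that matter after a scale-in (`cluster_state`, `cluster_known_nodes`, `cluster_size`) can be pulled out with a short filter instead of being read by eye. A minimal sketch; `info_field` is a hypothetical helper, and stripping a trailing carriage return is a precaution on the assumption that the raw output may use CRLF line endings.

```shell
#!/bin/bash
# Read `cluster info` output on stdin and print the value of one field.
# (info_field is a hypothetical helper for illustration.)
info_field() {
    awk -F: -v key="$1" '$1 == key {
        sub(/\r$/, "", $2)   # tolerate CRLF line endings
        print $2
    }'
}

# Sample built from the values shown in the output above.
info=$(printf 'cluster_state:ok\r\ncluster_known_nodes:6\r\ncluster_size:3\r\n')

# After the scale-in we expect 6 known nodes and 3 masters serving slots.
if [ "$(printf '%s\n' "$info" | info_field cluster_known_nodes)" = "6" ] &&
   [ "$(printf '%s\n' "$info" | info_field cluster_size)" = "3" ]; then
    echo "cluster shape matches the expected 3 masters + 3 replicas"
fi
```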

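Cluster maintenance: importing existing Redis data into the cluster

Note: it is advisable to have the developers export and back up the data and then import it themselves, with operations responsible only for building the cluster (so that ops does not take the blame).

Hands-on example:

1. Generate data on the non-cluster node 10.0.0.203 (this is a simulated environment, so the data has to be generated first)

Note: 10.0.0.203 is the node removed in the scale-in above; disable cluster mode on it before generating the data

<Script logic: write 10,000 entries>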

#!/bin/bash

# Write NUM test keys to the local Redis instance.

NUM=10000

PASS=123456

for i in $(seq "$NUM"); do

redis-cli -h 127.0.0.1 -a "$PASS" -p 6379 --no-auth-warning set "testkey${i}" "testvalue${i}"

echo "testkey${i} testvalue${i} written"

done

echo "Wrote $NUM keys to Redis"

<Run the script to write the data>

bash redis_test.sh

........

............

testkey9998 testvalue9998 written

OK

testkey9999 testvalue9999 written

OK

testkey10000 testvalue10000 written

<Confirm the write and count the keys>

root@node7:~# redis-cli -p 6379 -a 123456 --no-auth-warning dbsize

(integer) 10000 #exactly 10,000 entries; the write succeeded
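Starting one redis-cli process per key is slow for larger data sets. As an alternative sketch, the same test keys can be emitted as raw Redis protocol (RESP) and fed to redis-cli's `--pipe` mode in a single connection. The helper names `resp_set` and `gen_test_data` are hypothetical, and the length calculation assumes plain ASCII keys and values (as with the testkey/testvalue pairs here), since `${#var}` counts characters rather than bytes.

```shell
#!/bin/bash
# Emit one SET command in Redis protocol (RESP) form.
# Assumes ASCII key and value, so character count equals byte count.
resp_set() {
    printf '*3\r\n$3\r\nSET\r\n$%d\r\n%s\r\n$%d\r\n%s\r\n' \
        "${#1}" "$1" "${#2}" "$2"
}

# Emit SET commands for testkey1..testkeyN, matching the script above.
gen_test_data() {
    local i
    for i in $(seq "$1"); do
        resp_set "testkey${i}" "testvalue${i}"
    done
}

# Example invocation against the local instance (not executed here):
#   gen_test_data 10000 | redis-cli -h 127.0.0.1 -p 6379 -a 123456 --no-auth-warning --pipe
```

The result should be the same 10,000 keys, so the dbsize check above applies unchanged.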

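2. Prepare the environment: the external non-cluster node = the node exporting the data = 10.0.0.203 (the grep below shows its password directives commented out)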

root@node7:~# grep -E '#requirepass |#masterauth ' /apps/redis/etc/redis.conf

#masterauth "123456"

#requirepass "123456"

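3. Import the data from the non-cluster node 10.0.0.203 into the cluster

Command format: because the cluster shards the data, any cluster node's address can be given as the cluster server. --cluster-copy leaves the keys in place on the source node; --cluster-replace is optional and overwrites keys that already exist in the cluster.

redis-cli --cluster import <cluster-node-IP:PORT> --cluster-from <external-Redis-node-IP:PORT> --cluster-copy --cluster-replace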

redis-cli -a 123456 --cluster import 10.0.0.200:6379 --cluster-from 10.0.0.203:6379 --cluster-copy --cluster-replace

.........

............

Migrating testkey6608 to 10.0.0.55:6379: OK

Migrating testkey1418 to 10.0.0.55:6379: OK

Migrating testkey5635 to 10.0.0.202:6379: OK

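4. After the import finishes, connect to the cluster and compare the key count with the count taken before the import. dbsize on a single node reports only that node's share, so add up the counts of all masters. (Output not shown.)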
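One way to get the cluster-wide total is to add up the per-master key counts from the summary lines of `redis-cli --cluster check`. A minimal sketch, assuming the summary format seen earlier in this post (`<ip:port> (<id>...) -> N keys | N slots | N slaves.`); `total_keys` is a hypothetical helper, and the sample lines are the ones from the earlier check output, not from a run after the import.

```shell
#!/bin/bash
# Read `redis-cli --cluster check` output on stdin and print the sum of
# the per-master key counts found in the summary lines.
# (total_keys is a hypothetical helper for illustration.)
total_keys() {
    awk '$3 == "->" && $5 == "keys" { sum += $4 } END { print sum + 0 }'
}

summary='10.0.0.55:6379 (80ad4c43...) -> 3327 keys | 5452 slots | 1 slaves.
10.0.0.60:6379 (ef9f73ee...) -> 3357 keys | 5480 slots | 1 slaves.
10.0.0.202:6379 (6d0b3d42...) -> 3316 keys | 5452 slots | 1 slaves.'

expected=10000   # dbsize reported by the source node before the import
actual=$(printf '%s\n' "$summary" | total_keys)

if [ "$actual" -eq "$expected" ]; then
    echo "key count matches: $actual"
else
    echo "key count mismatch: expected $expected, got $actual"
fi
```

If the cluster already held keys before the import, the expected total has to account for them, and for any keys overwritten by --cluster-replace.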

The end~

posted on 2021-10-22 17:56 1251618589