TF Serving Deployment

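- Switch to the root user.

- Install Docker: `yum -y install docker`

- Start the Docker service: `systemctl start docker`

- Check the daemon with `sudo docker ps`. If it prints the container listing as shown in the figure, Docker started successfully.

- Pull the image with `sudo docker pull tensorflow/serving:2.11.0`, as shown in the figure.

- List the local images: `sudo docker images`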

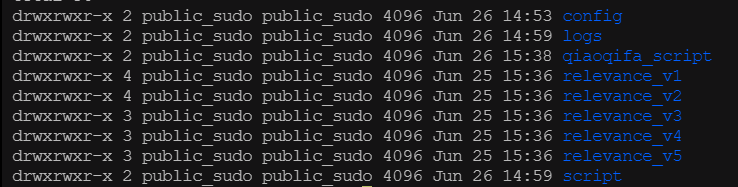
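The check in the last step can also be scripted. The following is a minimal sketch, not part of the original setup: the helper names `image_present` and `list_local_images` are hypothetical, and it assumes the `docker` CLI is on the `PATH` and that the caller is allowed to talk to the Docker daemon.

```python
import subprocess

IMAGE = "tensorflow/serving:2.11.0"


def image_present(listing: str, image: str = IMAGE) -> bool:
    """Return True if `image` appears as a full line in a repository:tag listing."""
    return image in {line.strip() for line in listing.splitlines()}


def list_local_images() -> str:
    """Ask the Docker CLI for local images, one repository:tag per line."""
    result = subprocess.run(
        ["docker", "images", "--format", "{{.Repository}}:{{.Tag}}"],
        capture_output=True,
        text=True,
        check=True,  # raise if the docker command itself fails
    )
    return result.stdout
```

Calling `image_present(list_local_images())` on the host should return `True` once the pull has finished.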

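1. Docker file paths:

Configuration files and model files. Each service keeps both under one host directory, for example `/home/public_sudo/data/relevance`: the model directories sit directly under it and the configuration files live in its `config` subdirectory.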
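TensorFlow Serving expects each model's base path to contain numeric version subdirectories, each holding a SavedModel. As a rough sketch for checking a model directory before starting a container, the hypothetical helper below lists the versions that look loadable. It assumes the binary `saved_model.pb` format and ignores everything else.

```python
from pathlib import Path
from typing import List


def servable_versions(base_path: Path) -> List[int]:
    """Return numeric version directories under base_path that contain saved_model.pb."""
    versions = []
    for child in base_path.iterdir():
        # A version directory is named with digits only, e.g. "1" or "20240101".
        if child.is_dir() and child.name.isdigit() and (child / "saved_model.pb").is_file():
            versions.append(int(child.name))
    return sorted(versions)
```

For example, `servable_versions(Path("/home/public_sudo/data/relevance/relevance_v1"))` returns an empty list when no version has been exported yet.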

2. Docker startup script `run.sh`

```bash
#!/bin/bash

# Each container maps two host ports to 8500 (gRPC) and 8501 (REST), is pinned
# to its own CPU range, and loads the models listed in /config/model.config.
sudo docker run -t --rm -p 8016:8500 -p 8017:8501 --name prerank_8016 \
    --cpuset-cpus="64-71" --memory="16g" \
    -v "/home/public_sudo/data/prerank:/models" \
    -v "/home/public_sudo/data/prerank/config:/config" \
    -e TF_CPP_MIN_VLOG_LEVEL=4 tensorflow/serving:2.11.0 \
    --per_process_gpu_memory_fraction=0.8 --enable_batching=true \
    --model_config_file_poll_wait_seconds=60 \
    --model_config_file=/config/model.config \
    > /home/public_sudo/data/prerank/logs/log8016.log &

sudo docker run -t --rm -p 8018:8500 -p 8019:8501 \
    --cpuset-cpus="72-79" --memory="16g" \
    -v "/home/public_sudo/data/prerank:/models" \
    -v "/home/public_sudo/data/prerank/config:/config" \
    --name prerank_8018 \
    -e TF_CPP_MIN_VLOG_LEVEL=4 tensorflow/serving:2.11.0 \
    --per_process_gpu_memory_fraction=0.8 --enable_batching=true \
    --model_config_file_poll_wait_seconds=60 \
    --model_config_file=/config/model.config \
    > /home/public_sudo/data/prerank/logs/log8018.log &
```

The relevance containers are started with the same options, using their own ports, CPU ranges, and data directory:

```bash
sudo docker run -t --rm -p 8020:8500 -p 8021:8501 --name relevance_8020 \
    --cpuset-cpus="80-87" --memory="16g" \
    -v "/home/public_sudo/data/relevance:/models" \
    -v "/home/public_sudo/data/relevance/config:/config" \
    -e TF_CPP_MIN_VLOG_LEVEL=4 tensorflow/serving:2.11.0 \
    --per_process_gpu_memory_fraction=0.8 --enable_batching=true \
    --model_config_file_poll_wait_seconds=60 \
    --model_config_file=/config/model.config \
    > /home/public_sudo/data/relevance/logs/8020.log &

sudo docker run -t --rm -p 8022:8500 -p 8023:8501 --name relevance_8022 \
    --cpuset-cpus="88-95" --memory="16g" \
    -v "/home/public_sudo/data/relevance:/models" \
    -v "/home/public_sudo/data/relevance/config:/config" \
    -e TF_CPP_MIN_VLOG_LEVEL=4 tensorflow/serving:2.11.0 \
    --per_process_gpu_memory_fraction=0.8 --enable_batching=true \
    --model_config_file_poll_wait_seconds=60 \
    --model_config_file=/config/model.config \
    > /home/public_sudo/data/relevance/logs/8022.log &
```
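The commands above differ only in container name, host ports, CPU range, and data directory. One way to keep them consistent is to generate them from a small table. The sketch below is illustrative only: `Instance`, `docker_run_args`, and `as_shell` are hypothetical names, and the fixed flags are copied from the commands above rather than tuned.

```python
import shlex
from dataclasses import dataclass
from typing import List


@dataclass
class Instance:
    name: str       # container name, e.g. "relevance_8020"
    grpc_port: int  # host port mapped to container port 8500
    rest_port: int  # host port mapped to container port 8501
    cpus: str       # value for --cpuset-cpus, e.g. "80-87"
    data_dir: str   # host directory holding the models and config/


def docker_run_args(inst: Instance, image: str = "tensorflow/serving:2.11.0") -> List[str]:
    """Build the argument list for one `docker run` invocation."""
    return [
        "docker", "run", "-t", "--rm",
        "-p", f"{inst.grpc_port}:8500",
        "-p", f"{inst.rest_port}:8501",
        "--name", inst.name,
        f"--cpuset-cpus={inst.cpus}",
        "--memory=16g",
        "-v", f"{inst.data_dir}:/models",
        "-v", f"{inst.data_dir}/config:/config",
        "-e", "TF_CPP_MIN_VLOG_LEVEL=4",
        image,
        # Everything after the image name is passed to the model server.
        "--per_process_gpu_memory_fraction=0.8",
        "--enable_batching=true",
        "--model_config_file_poll_wait_seconds=60",
        "--model_config_file=/config/model.config",
    ]


def as_shell(inst: Instance) -> str:
    """Render the command as a single shell-quoted line."""
    return shlex.join(docker_run_args(inst))
```

Printing `as_shell(Instance("relevance_8020", 8020, 8021, "80-87", "/home/public_sudo/data/relevance"))` gives a line that can be pasted into `run.sh`. Log redirection and backgrounding are left to the script.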

3. Docker configuration file format

```
model_config_list:{
    config:{
      name:'relevance_v5'
      base_path:'/models/relevance_v5'
      model_platform:'tensorflow'
    },
    config:{
      name:'relevance_v4'
      base_path:'/models/relevance_v4'
      model_platform:'tensorflow'
    },
    config:{
      name:'relevance_v3'
      base_path:'/models/relevance_v3'
      model_platform:'tensorflow'
    },
    config:{
      name:'relevance_v1'
      base_path:'/models/relevance_v1'
      model_platform:'tensorflow'
    },
    config:{
      name:'relevance_v2'
      base_path:'/models/relevance_v2'
      model_platform:'tensorflow'
    }
}
```
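Because every entry in the configuration above follows the same pattern, the file can be generated from a list of model names. This is a minimal sketch: `render_model_config` is a hypothetical helper, and it assumes every model lives directly under `/models` inside the container, as in the entries above.

```python
from typing import Iterable


def render_model_config(names: Iterable[str], models_root: str = "/models") -> str:
    """Render a model_config_list in protobuf text format, one config per model name."""
    entries = []
    for name in names:
        entries.append(
            "  config: {\n"
            f"    name: '{name}'\n"
            f"    base_path: '{models_root}/{name}'\n"
            "    model_platform: 'tensorflow'\n"
            "  }"
        )
    return "model_config_list: {\n" + "\n".join(entries) + "\n}\n"
```

Writing the result of `render_model_config(["relevance_v1", "relevance_v2"])` to the mounted `config/model.config` lets the running server pick up the change on its next poll, since the containers are started with `--model_config_file_poll_wait_seconds=60`.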

4. Testing the containers from Python

```python
import json

import requests

# Sample feature vector. v1, v5 and v6 take the full vector; v2-v4 take only the first 60 values.
features = [
    1, 1, 1, 1, 1, 1, 0, 1, 0.999, 0, 3, 1, 0.3333333333333333, 2, 0.6666666666666666,
    1, 0.3333333333333333, 0, 0.0, 0, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 0,
    0.8652462791972874, 0.020301, 0.626570119698537, 0.0, 1, 1, 1.0, 0, 0.0, 1, 1.0, 0, 0.0,
    1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 0, 1, 0.8342278053174881, 0.01272, 1.0, 0.0,
    0.08677808000000002, -0.5144478466666667, 0.09813555641, 0.4995743833333333,
    0.6875768999999999, 0.29120327, -0.28226265, -0.4784384366666667, -0.58632582,
    0.04208295999999998, -0.32798395333333336, -0.02741264333333332, 0.049279349666666666,
    -0.16968005666666663, -0.33240925466666665, 0.4360717, -0.2929261233333333,
    -0.2981464466666667, -0.07636033, -0.274444911, -0.3359526518666667, 0.50867007,
    0.39146848666666667, -0.7227929266666666, -0.191814214, 0.043227369999999994,
    -0.8780203333333333, 0.10589143999999999, 0.15558687, -0.4661960733333333,
    -0.37050828333333335, 0.04813903993333333, -0.142883988375, -0.734637185625,
    0.15876262870312502, 0.7311148107500001, 0.4145796666875001, -0.009326533625000001,
    -0.3222952091875, -0.5682045862499999, -0.8492591543749999, -0.057231256250000015,
    -0.2482288354999999, 0.24096290889374997, -0.030687868800000004, -0.496588725,
    -0.29752982512500004, 0.283379688875, -0.5157605628124999, -0.29660309737499996,
    0.23857122374999998, -0.2940595146875, -0.312397124, 0.4648682387500001,
    0.3255289983125, -0.599876252, 0.06666920775, 0.2970209875, -0.48590265374999997,
    -0.20199137175, 0.18776840000000006, -0.37830229231250007, -0.31018528725000005,
    0.02904392187500001,
]

# REST ports of the two relevance containers started by run.sh.
REST_PORTS = (8021, 8023)


def predict(model_name, feature_vector):
    """Send the same two-instance request to both containers and return their predictions."""
    instances = [{"relevance_input": feature_vector}, {"relevance_input": feature_vector}]
    data = json.dumps({"signature_name": "serving_default", "instances": instances})
    headers = {"content-type": "application/json"}
    results = []
    for port in REST_PORTS:
        url = f"http://localhost:{port}/v1/models/{model_name}:predict"
        response = requests.post(url, data=data, headers=headers)
        results.append(json.loads(response.text)["predictions"])
    return results


def v1():
    print("v1:", *predict("relevance_v1", features))


def v2():
    print("v2:", *predict("relevance_v2", features[:60]))


def v3():
    print("v3:", *predict("relevance_v3", features[:60]))


def v4():
    print("v4:", *predict("relevance_v4", features[:60]))


def v5():
    print("v5:", *predict("relevance_v5", features))


def v6():
    # relevance_v6 is not listed in the model.config shown earlier, so this is not called below.
    print("v6:", *predict("relevance_v6", features))


if __name__ == '__main__':
    v1()
    v2()
    v3()
    v4()
    v5()
```
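Before sending predict requests it can help to confirm that a model has finished loading. TensorFlow Serving's REST API reports this at `GET /v1/models/<name>`, returning a `model_version_status` list. The sketch below only builds the URL and parses a response body; `status_url` and `available_versions` are hypothetical helper names, and fetching the body (for instance with `requests.get`) is left to the caller.

```python
import json
from typing import List


def status_url(model_name: str, port: int, host: str = "localhost") -> str:
    """URL of the model status endpoint on a container's REST port."""
    return f"http://{host}:{port}/v1/models/{model_name}"


def available_versions(status_body: str) -> List[str]:
    """Return the versions whose state is AVAILABLE in a model status response body."""
    payload = json.loads(status_body)
    return [
        entry["version"]
        for entry in payload.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    ]
```

With the containers above running, `available_versions(requests.get(status_url("relevance_v1", 8021)).text)` would list the loaded versions of `relevance_v1`. An empty list suggests the model is still loading or failed to load.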
