TensorFlow: Main Parameters of keras.layers.Conv2D()

Parameters of keras.layers.Conv2D()

```python
def __init__(self, filters,
             kernel_size,
             strides=(1, 1),
             padding='valid',
             data_format=None,
             dilation_rate=(1, 1),
             activation=None,
             use_bias=True,
             kernel_initializer='glorot_uniform',
             bias_initializer='zeros',
             kernel_regularizer=None,
             bias_regularizer=None,
             activity_regularizer=None,
             kernel_constraint=None,
             bias_constraint=None,
             **kwargs):
```

Parameters:

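filters: the number of convolution kernels. It determines the third dimension (the channel count) of the output. For example, with an input of shape (600, 600, 3) and filters=64, a 1 x 1 kernel with stride 1 produces an output of shape (600, 600, 64).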

```python
>>> from keras.layers import Input, Conv2D
>>> input = Input(shape=(600, 600, 3))
>>> x = Conv2D(64, (1, 1), strides=(1, 1), name='conv1')(input)
>>> x
<tf.Tensor 'conv1_1/BiasAdd:0' shape=(?, 600, 600, 64) dtype=float32>
```
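As a rough illustration of what `filters` controls, the sketch below counts the trainable parameters of such a layer. The helper name `conv2d_param_count` is hypothetical (it is not part of Keras). It assumes a standard convolution in which every filter spans all input channels, plus one bias per filter when `use_bias=True`.

```python
def conv2d_param_count(kernel_h, kernel_w, in_channels, filters, use_bias=True):
    """Trainable parameters of a standard 2D convolution (hypothetical helper)."""
    # Each filter holds kernel_h * kernel_w weights for every input channel.
    weights = kernel_h * kernel_w * in_channels * filters
    # One bias value per filter, if biases are enabled.
    biases = filters if use_bias else 0
    return weights + biases


# The layer from the example above: 64 filters, 1 x 1 kernel, 3 input channels.
print(conv2d_param_count(1, 1, 3, 64))  # 1*1*3*64 + 64 = 256
```

Doubling `filters` doubles both the output channel count and the parameter count, while the spatial size of the output is unaffected.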

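kernel_size: the size of the convolution kernel; a single integer is also accepted. It affects the first two (spatial) dimensions of the output. For example, with a 2 x 2 kernel, stride 1 and the default padding='valid', (600, 600, 3) => (599, 599, 64).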

```python
>>> from keras.layers import Input, Conv2D
>>> input = Input(shape=(600, 600, 3))
>>> Conv2D(64, (2, 2), strides=(1, 1), name='conv1')(input)
<tf.Tensor 'conv1/BiasAdd:0' shape=(?, 599, 599, 64) dtype=float32>
```

Writing just 2 also works:

```python
>>> from keras.layers import Input, Conv2D
>>> input = Input(shape=(600, 600, 3))
>>> Conv2D(64, 2, strides=(1, 1), name='conv1')(input)
<tf.Tensor 'conv1_2/BiasAdd:0' shape=(?, 599, 599, 64) dtype=float32>
```
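The equivalence of `2` and `(2, 2)` can be sketched in plain Python. Both helper names below are hypothetical and only mimic the behaviour shown in the two examples above; they assume stride 1 and padding='valid', where each spatial dimension shrinks to n - k + 1.

```python
def normalize_kernel_size(kernel_size):
    """Expand an int to (k, k); pass a 2-tuple through (hypothetical helper)."""
    if isinstance(kernel_size, int):
        return (kernel_size, kernel_size)
    kernel_h, kernel_w = kernel_size
    return (kernel_h, kernel_w)


def valid_output_hw(input_hw, kernel_size):
    """Output (height, width) for stride 1 and padding='valid': n - k + 1."""
    kernel_h, kernel_w = normalize_kernel_size(kernel_size)
    return (input_hw[0] - kernel_h + 1, input_hw[1] - kernel_w + 1)


print(valid_output_hw((600, 600), 2))       # (599, 599)
print(valid_output_hw((600, 600), (2, 2)))  # (599, 599)
```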

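strides: the step size, which also affects the first two dimensions of the output. For example, strides=(2, 2) gives (600, 600, 3) => (300, 300, 64). Note that the two values in the tuple may differ: they control the step along the two spatial axes (height and width, in that order).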

```python
>>> from keras.layers import Input, Conv2D
>>> input = Input(shape=(600, 600, 3))
>>> Conv2D(64, 1, strides=(2, 2), name='conv1')(input)
<tf.Tensor 'conv1_4/BiasAdd:0' shape=(?, 300, 300, 64) dtype=float32>
```

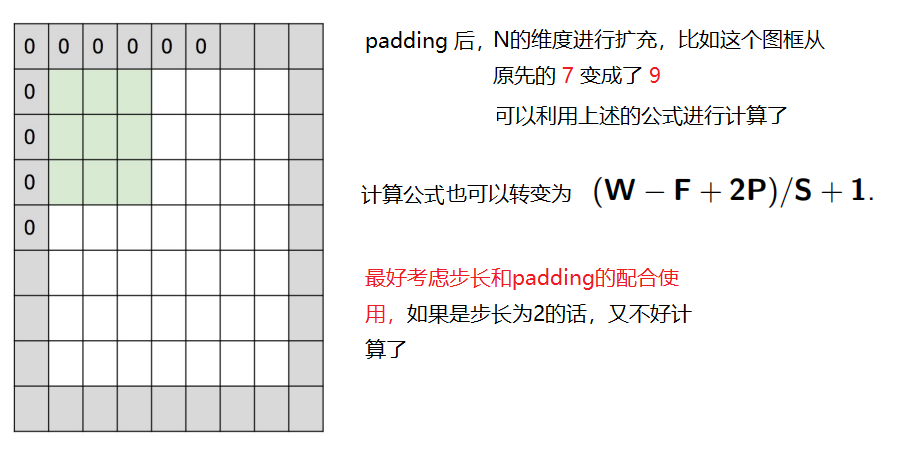
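To make the effect of unequal strides concrete, here is a minimal sketch that computes the output height and width per axis. The function `strided_output_hw` is a hypothetical helper, and it assumes padding='valid', where each axis follows floor((n - k) / s) + 1.

```python
def strided_output_hw(input_hw, kernel_hw, strides):
    """Output (height, width) for padding='valid' (hypothetical helper)."""
    # Each axis is handled independently: floor((n - k) / s) + 1.
    return tuple(
        (n - k) // s + 1
        for n, k, s in zip(input_hw, kernel_hw, strides)
    )


# Same setting as the example above: 1 x 1 kernel, strides=(2, 2).
print(strided_output_hw((600, 600), (1, 1), (2, 2)))  # (300, 300)

# Different strides per axis: step 2 along the height, step 3 along the width.
print(strided_output_hw((600, 600), (1, 1), (2, 3)))  # (300, 200)
```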

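padding: whether to pad the borders of the input. With "same", zeros are padded around the edges so that kernel_size no longer shrinks the output; with stride 1 the original spatial size is preserved. With "valid", no padding is applied and the kernel only visits positions that lie entirely inside the input, so the output shrinks. Several cases compared: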

```python
>>> Conv2D(64, 1, strides=(2, 2), padding="same", name='conv1')(input)
<tf.Tensor 'conv1_6/BiasAdd:0' shape=(?, 300, 300, 64) dtype=float32>
>>> Conv2D(64, 3, strides=(2, 2), padding="same", name='conv1')(input)
<tf.Tensor 'conv1_7/BiasAdd:0' shape=(?, 300, 300, 64) dtype=float32>
>>> Conv2D(64, 3, strides=(1, 1), padding="same", name='conv1')(input)
<tf.Tensor 'conv1_8/BiasAdd:0' shape=(?, 600, 600, 64) dtype=float32>
>>> Conv2D(64, 3, strides=(1, 1), padding="valid", name='conv1')(input)
<tf.Tensor 'conv1_9/BiasAdd:0' shape=(?, 598, 598, 64) dtype=float32>
```
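The four shapes above can be reproduced without TensorFlow. The sketch below uses a hypothetical helper, `conv_output_size`, and assumes the usual TensorFlow conventions: "same" yields ceil(n / stride) and "valid" yields floor((n - kernel) / stride) + 1.

```python
import math


def conv_output_size(n, kernel, stride, padding):
    """Spatial output size along one axis (hypothetical helper)."""
    if padding == "same":
        # Zero padding is chosen so that only the stride reduces the size.
        return math.ceil(n / stride)
    if padding == "valid":
        # No padding: the kernel must fit entirely inside the input.
        return (n - kernel) // stride + 1
    raise ValueError("padding must be 'same' or 'valid'")


# (kernel_size, stride, padding) for the four calls shown above.
cases = [(1, 2, "same"), (3, 2, "same"), (3, 1, "same"), (3, 1, "valid")]
for kernel, stride, padding in cases:
    print(kernel, stride, padding, conv_output_size(600, kernel, stride, padding))
# Sizes printed in order: 300, 300, 600, 598
```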

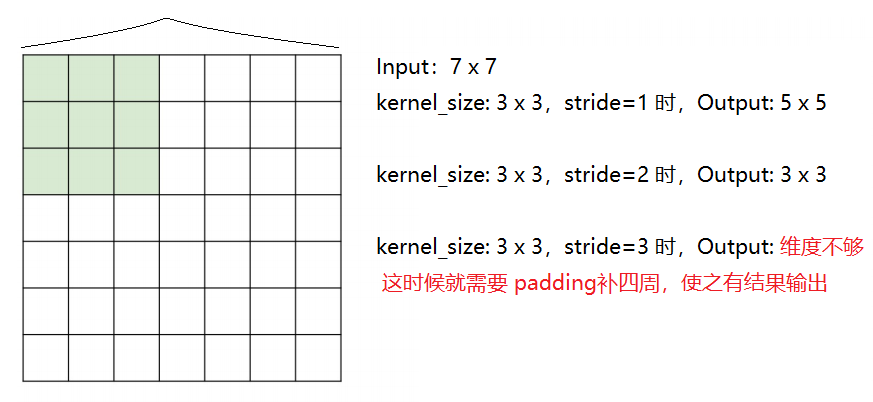

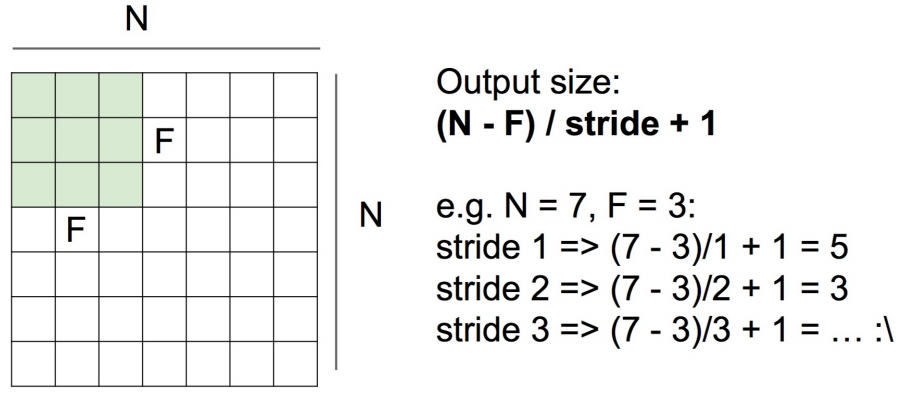

To illustrate how the output size is computed, assume a 7 x 7 input and a 3 x 3 kernel:

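Formula, where $W$ is the input size, $F$ the kernel size, $P$ the padding added on each side and $S$ the stride:

$$O = \left\lfloor \frac{W - F + 2P}{S} \right\rfloor + 1$$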

From this we can derive:

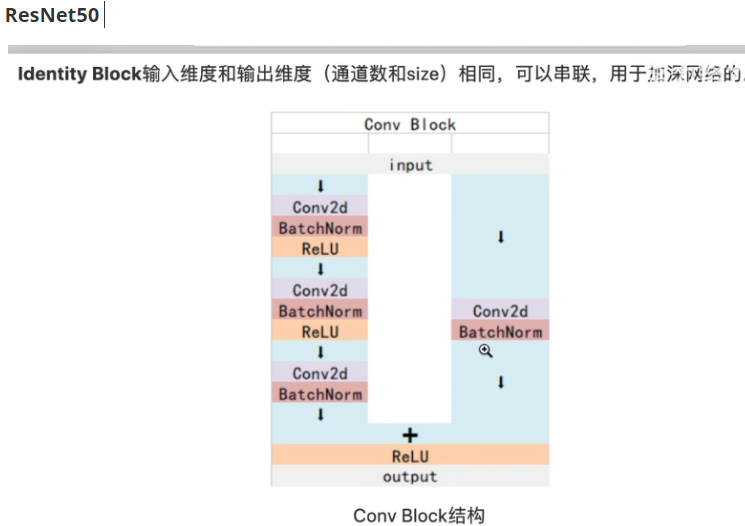
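The 7 x 7 case can also be checked by actually sliding a 3 x 3 kernel over the input. The following is a minimal pure-Python sketch, not the Keras implementation: `conv2d_valid` is a hypothetical single-channel helper without padding, and the all-ones input and kernel are made up for illustration.

```python
def conv2d_valid(image, kernel, stride=1):
    """Naive single-channel 2D convolution without padding (hypothetical helper)."""
    n_rows, n_cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    # Number of kernel positions that fit entirely inside the input.
    out_rows = (n_rows - k_rows) // stride + 1
    out_cols = (n_cols - k_cols) // stride + 1
    output = []
    for i in range(out_rows):
        row = []
        for j in range(out_cols):
            top, left = i * stride, j * stride
            # Multiply the window element-wise with the kernel and sum up.
            row.append(sum(
                image[top + di][left + dj] * kernel[di][dj]
                for di in range(k_rows)
                for dj in range(k_cols)
            ))
        output.append(row)
    return output


image = [[1] * 7 for _ in range(7)]   # 7 x 7 input of ones
kernel = [[1] * 3 for _ in range(3)]  # 3 x 3 kernel of ones

for stride in (1, 2):
    result = conv2d_valid(image, kernel, stride)
    print(stride, len(result), len(result[0]))
# stride 1 gives a 5 x 5 output, stride 2 gives a 3 x 3 output
```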

With these simple rules in hand, we can walk through the structure of ResNet50.

Architecture of the Conv Block:

```python
# Imports shared by conv_block, identity_block and ResNet50
from keras import layers
from keras.layers import (Activation, BatchNormalization, Conv2D,
                          MaxPooling2D, ZeroPadding2D)


def conv_block(input_tensor, kernel_size, filters, stage, block, strides=(2, 2)):
    # e.g. filters = [64, 64, 256] gives filters1=64, filters2=64, filters3=256
    filters1, filters2, filters3 = filters

    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    # Main branch: 1x1 (carries the stride) -> kxk -> 1x1
    x = Conv2D(filters1, (1, 1), strides=strides, name=conv_name_base + '2a')(input_tensor)
    x = BatchNormalization(name=bn_name_base + '2a')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters2, kernel_size, padding='same', name=conv_name_base + '2b')(x)
    x = BatchNormalization(name=bn_name_base + '2b')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters3, (1, 1), name=conv_name_base + '2c')(x)
    x = BatchNormalization(name=bn_name_base + '2c')(x)

    # Shortcut branch: a 1x1 convolution matches the size and channels of x
    shortcut = Conv2D(filters3, (1, 1), strides=strides, name=conv_name_base + '1')(input_tensor)
    shortcut = BatchNormalization(name=bn_name_base + '1')(shortcut)

    x = layers.add([x, shortcut])
    x = Activation('relu')(x)
    return x
```

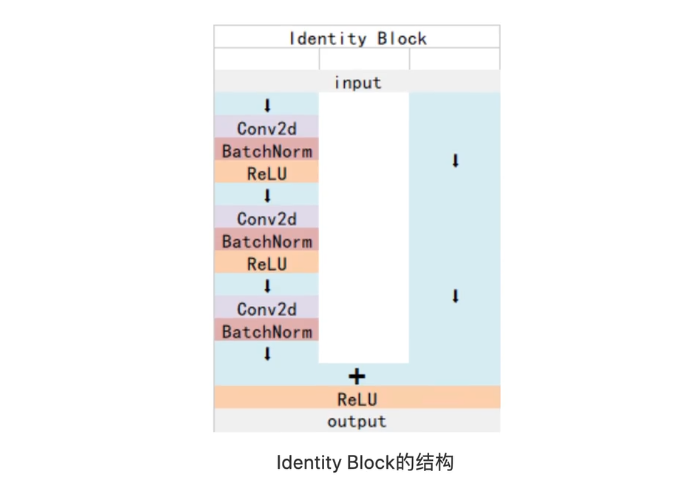

Architecture of the Identity Block:

```python
def identity_block(input_tensor, kernel_size, filters, stage, block):
    filters1, filters2, filters3 = filters

    conv_name_base = 'res' + str(stage) + block + '_branch'
    bn_name_base = 'bn' + str(stage) + block + '_branch'

    x = Conv2D(filters1, (1, 1), name=conv_name_base + '2a')(input_tensor)
    x = BatchNormalization(name=bn_name_base + '2a')(x)
    x = Activation('relu')(x)

    # Each convolution consumes the previous layer's output x, not input_tensor
    x = Conv2D(filters2, kernel_size, padding='same', name=conv_name_base + '2b')(x)
    x = BatchNormalization(name=bn_name_base + '2b')(x)
    x = Activation('relu')(x)

    x = Conv2D(filters3, (1, 1), name=conv_name_base + '2c')(x)
    x = BatchNormalization(name=bn_name_base + '2c')(x)

    # The shortcut is the unmodified input
    x = layers.add([x, input_tensor])
    x = Activation('relu')(x)
    return x
```
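The two blocks differ mainly in their shortcut. A shape-only sketch makes the consequence visible: the identity shortcut adds the input to the output directly, so the input must already have `filters3` channels, whereas the Conv Block can change both the spatial size and the channel count. Both helpers below are hypothetical and only track (height, width, channels). They assume the 1 x 1 strided convolutions use the default padding='valid'.

```python
def conv_block_shape(input_shape, filters, stride=2):
    """Output shape of a Conv Block (hypothetical shape-only helper)."""
    height, width, _ = input_shape
    # The 1x1 convolutions carry the stride: floor((n - 1) / s) + 1 per axis.
    out_h = (height - 1) // stride + 1
    out_w = (width - 1) // stride + 1
    return (out_h, out_w, filters[2])


def identity_block_shape(input_shape, filters):
    """Output shape of an Identity Block (hypothetical shape-only helper)."""
    if input_shape[2] != filters[2]:
        # Adding x to the raw input requires matching channel counts.
        raise ValueError("identity shortcut needs input channels == filters3")
    return input_shape


shape = conv_block_shape((150, 150, 64), [64, 64, 256], stride=1)
print(shape)                                             # (150, 150, 256)
print(identity_block_shape(shape, [64, 64, 256]))        # (150, 150, 256)
print(conv_block_shape(shape, [128, 128, 512], stride=2))  # (75, 75, 512)
```

This is why each stage opens with a Conv Block, which changes the channel count, and only then stacks Identity Blocks.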

Finally, the overall architecture:

```python
def ResNet50(inputs):
    # -----------------------------------#
    #   Assume the input image has shape 600,600,3
    # -----------------------------------#
    img_input = inputs

    # 600,600,3 -> 300,300,64
    x = ZeroPadding2D((3, 3))(img_input)
    x = Conv2D(64, (7, 7), strides=(2, 2), name='conv1')(x)
    x = BatchNormalization(name='bn_conv1')(x)
    x = Activation('relu')(x)

    # 300,300,64 -> 150,150,64
    x = MaxPooling2D((3, 3), strides=(2, 2), padding="same")(x)

    # 150,150,64 -> 150,150,256
    x = conv_block(x, 3, [64, 64, 256], stage=2, block='a', strides=(1, 1))
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='b')
    x = identity_block(x, 3, [64, 64, 256], stage=2, block='c')

    # 150,150,256 -> 75,75,512
    x = conv_block(x, 3, [128, 128, 512], stage=3, block='a', strides=(2, 2))
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='b')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='c')
    x = identity_block(x, 3, [128, 128, 512], stage=3, block='d')

    # 75,75,512 -> 38,38,1024
    x = conv_block(x, 3, [256, 256, 1024], stage=4, block='a', strides=(2, 2))
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='b')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='c')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='d')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='e')
    x = identity_block(x, 3, [256, 256, 1024], stage=4, block='f')

    # The result is a shared feature map of shape 38,38,1024
    return x
```
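The shape comments in the code above can be verified by applying the padding rules layer by layer. The sketch below tracks only the spatial size. All three function names are hypothetical, and it assumes that the strided convolutions use padding='valid' (the Keras default) and that the max pooling uses padding='same', as written in the code.

```python
import math


def valid_size(n, kernel, stride):
    """Output size for padding='valid': floor((n - k) / s) + 1."""
    return (n - kernel) // stride + 1


def same_size(n, stride):
    """Output size for padding='same': ceil(n / s)."""
    return math.ceil(n / stride)


def resnet50_spatial_trace(size=600):
    """Spatial size after each step of the ResNet50 function above."""
    trace = [("input", size)]
    size += 2 * 3                    # ZeroPadding2D((3, 3)) adds 3 on each side
    size = valid_size(size, 7, 2)    # conv1: 7x7 kernel, stride 2
    trace.append(("conv1", size))
    size = same_size(size, 2)        # 3x3 max pooling, stride 2, padding='same'
    trace.append(("max_pool", size))
    # Within a stage only the Conv Block's 1x1 strided convolution changes the size.
    for stage, stride in ((2, 1), (3, 2), (4, 2)):
        size = valid_size(size, 1, stride)
        trace.append(("stage" + str(stage), size))
    return trace


for name, size in resnet50_spatial_trace(600):
    print(name, size)
# Sizes in order: 600, 300, 150, 150, 75, 38
```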

For the theory, see "Resnet-50网络结构详解" (a detailed explanation of the ResNet-50 network structure): https://www.cnblogs.com/qianchaomoon/p/12315906.html
