Assignment source: https://edu.cnblogs.com/campus/gzcc/GZCC-16SE1/homework/3002

    import re
    from datetime import datetime
    import requests
    import bs4
    from bs4 import BeautifulSoup

0. Get the click count from a news URL and wrap the logic in a function

- newsUrl

- newsId(re.search())

- clickUrl(str.format())

- requests.get(clickUrl)

- re.search()/.split()

- str.lstrip(),str.rstrip()

- int

- Wrap it in a function

- Also wrap fetching the news publication time and its type conversion in a function

Click-count function:

    def click(newsurl):
        # Extract the numeric news ID from a URL of the form .../<id>.html
        number = re.search(r'/(\d+)\.html', newsurl).group(1)
        clickurl = 'http://oa.gzcc.cn/api.php?op=count&id={}&modelid=80'.format(number)
        clickRes = requests.get(clickurl)
        # The count is the first single-quoted value after the last '.html' in the response text
        clickTimes = int(clickRes.text.split('.html')[-1].split("'")[1])
        return clickTimes
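The parsing step can be tried without any network access. The following is a minimal sketch, assuming the counter response contains calls of the form `.html('<digits>')`, which is what the `split('.html')` / `split("'")` chain above implies. The helper name `parse_click_count` and the sample response string are hypothetical and made up for illustration.

```python
import re


def parse_click_count(response_text):
    """Return the integer inside the last .html('<digits>') call, or None if there is none."""
    matches = re.findall(r"\.html\('(\d+)'\)", response_text)
    return int(matches[-1]) if matches else None


# Hypothetical response body, invented for illustration only
sample = "$('#todaydowns').html('3');$('#hits').html('1024');"
print(parse_click_count(sample))
```

Returning `None` instead of raising makes it easier to skip a news item whose counter response has an unexpected shape.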

Publication-time function:

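    def newsdt(soupn):
        # The first whitespace-separated token holds the date after a colon; the second token is the time
        times = soupn.split()[0].split(':')[1]
        times = times + ' ' + soupn.split()[1]
        # Break "YYYY-MM-DD HH:MM:SS" into six numeric fields
        times = times.replace('-', ' ').replace(':', ' ').lower().split()
        dt = datetime(int(times[0]), int(times[1]), int(times[2]), int(times[3]), int(times[4]), int(times[5]))
        return dt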

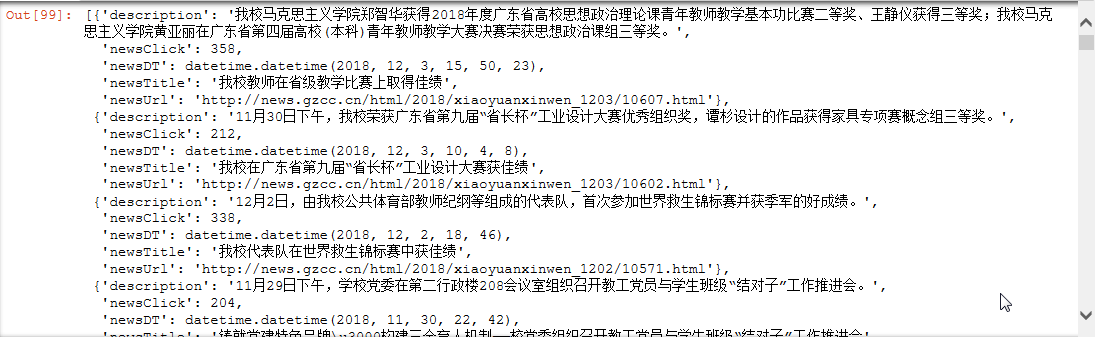
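The same conversion can also be written with `datetime.strptime`, which validates the format in one call. This is a sketch of an alternative, not the version used in this post; the function name `parse_show_info` and the sample string are hypothetical, and the text is assumed to start with a label, a colon, the date, a space, and the time.

```python
from datetime import datetime


def parse_show_info(show_info, label_sep=':'):
    """Parse '<label>:<YYYY-MM-DD> <HH:MM:SS> ...' into a datetime."""
    parts = show_info.split()
    # Drop the label in front of the date, keeping everything after the first separator
    date_part = parts[0].split(label_sep, 1)[1]
    return datetime.strptime(date_part + ' ' + parts[1], '%Y-%m-%d %H:%M:%S')


# Hypothetical show-info text, invented for illustration only
sample = 'Published:2019-04-01 11:57:00 Author:someone'
print(parse_show_info(sample))
```

If the page uses a different label separator, it can be passed through `label_sep`.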

1. Get the news details from a news URL: a dictionary, anews

    def anews(url, title):
        newsDetail = {}
        res = requests.get(url)
        res.encoding = 'utf-8'
        soup = BeautifulSoup(res.text, 'html.parser')
        newsDetail['newsTitle'] = soup.select('.show-title')[0].text
        showinfo = soup.select('.show-info')[0].text
        newsDetail['newsDT'] = newsdt(showinfo)
        newsDetail['newsClick'] = click(url)
        newsDetail['newsUrl'] = url
        # title is the description text taken from the list page
        newsDetail['description'] = title
        return newsDetail

2. Get the news URLs from a list-page URL: list append(dict), alist

    urls = []
    titles = []
    for i in range(8, 17):
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
        res = requests.get(url)
        res.encoding = 'utf-8'
        urls += re.findall('<a href="(.*)">\r\n', res.text)
        titles += re.findall('<div class="news-list-description">(.*)</div>', res.text)
    titles

3. Generate the URLs of all list pages and fetch all the news: list extend(list), allnews

*Each student crawls 10 list pages, starting from the page number given by the last digits of their student ID.

    newsList = []
    for i in range(0, len(urls)):
        newsList.append(anews(urls[i], titles[i]))
    newsList
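The "list extend(list)" pattern named in the step title can be sketched as follows. The helpers `list_page_urls` and `collect_all`, and the stub `fake_fetch_page`, are hypothetical names introduced here; a real `fetch_page` would download one list page and return a list of news dictionaries. Note that `range(start, start + count)` yields exactly `count` pages, whereas `range(8, 17)` covers pages 8 through 16, which is nine pages.

```python
def list_page_urls(start, count=10):
    """Build the URLs of `count` consecutive list pages, beginning at page `start`."""
    template = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'
    return [template.format(i) for i in range(start, start + count)]


def collect_all(page_urls, fetch_page):
    """Call fetch_page on every list page and merge the per-page lists into one."""
    allnews = []
    for page_url in page_urls:
        allnews.extend(fetch_page(page_url))
    return allnews


def fake_fetch_page(page_url):
    # Stand-in for a real downloader: returns one placeholder record per page
    return [{'newsUrl': page_url}]


pages = list_page_urls(8)
allnews = collect_all(pages, fake_fetch_page)
print(len(pages), len(allnews))
```

Passing the downloader in as a parameter keeps the merging logic testable without touching the network.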

4. Set a reasonable crawl interval

import time

import random

time.sleep(random.random()*3)

    import time
    import random

    urls = []
    titles = []
    for i in range(8, 17):
        url = 'http://news.gzcc.cn/html/xiaoyuanxinwen/{}.html'.format(i)
        res = requests.get(url)
        res.encoding = 'utf-8'
        urls += re.findall('<a href="(.*)">\r\n', res.text)
        titles += re.findall('<div class="news-list-description">(.*)</div>', res.text)
        time.sleep(random.random()*3)

    newsList = []
    for i in range(0, len(urls)):
        newsList.append(anews(urls[i], titles[i]))
        time.sleep(random.random()*3)

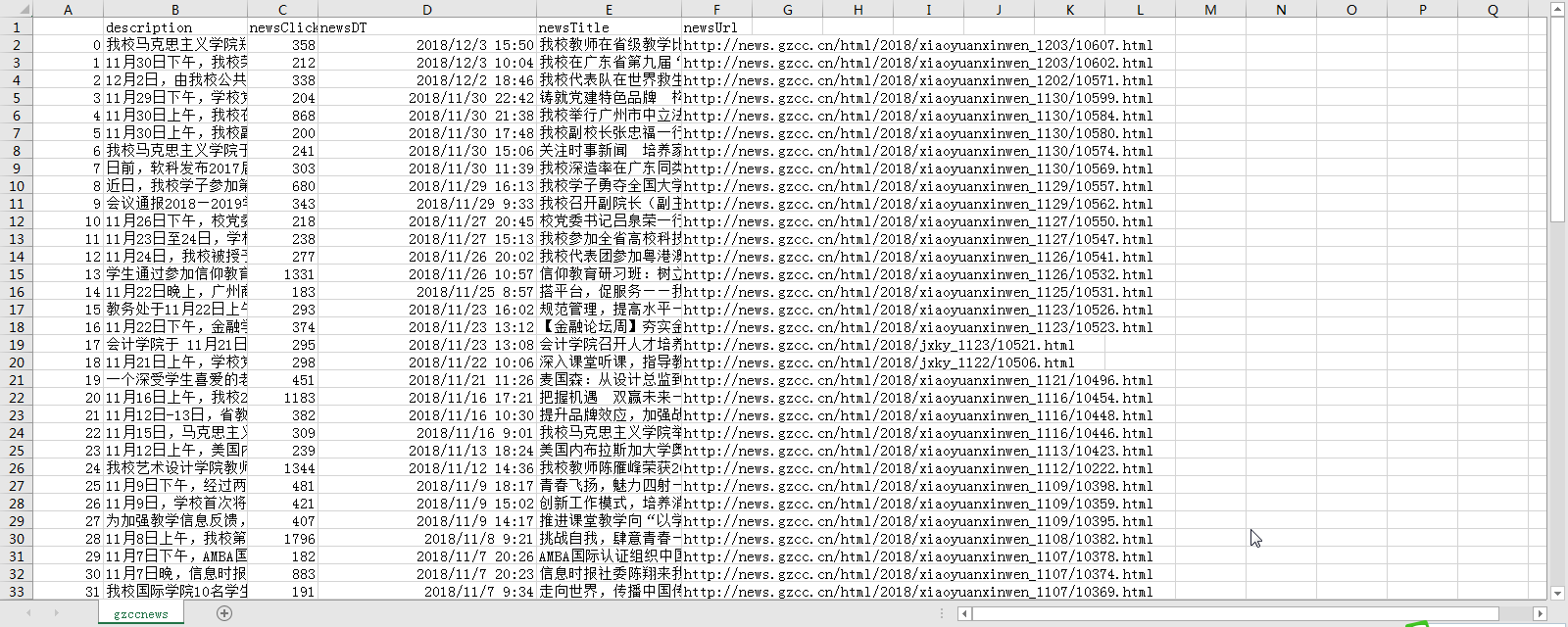
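The random pause can be pulled into a small helper so that both loops share it. This is a minimal sketch; the name `polite_sleep` and its `sleep` parameter are hypothetical and exist only so the delay can be replaced by a no-op when experimenting.

```python
import random
import time


def polite_sleep(max_seconds=3.0, sleep=time.sleep):
    """Pause for a random duration in [0, max_seconds) and return the delay used."""
    delay = random.random() * max_seconds
    sleep(delay)
    return delay


# Demonstration with a no-op sleeper so this example finishes immediately
delay = polite_sleep(3.0, sleep=lambda seconds: None)
print(0 <= delay < 3.0)
```

In the crawl loops, calling `polite_sleep()` with its defaults has the same effect as `time.sleep(random.random()*3)`.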

5. Do simple data processing with pandas and save the result

Save to a CSV or Excel file

newsdf.to_csv(r'F:\duym\爬虫\gzccnews.csv')

    import pandas as pd
    newsdf = pd.DataFrame(newsList)
    newsdf.to_csv(r'C:\Users\Administrator\Desktop\gzccnews.csv')
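As one possible example of "simple data processing", the DataFrame can be filtered and sorted by click count before saving. This sketch uses made-up records shaped like the dictionaries returned by `anews` (only three of the keys, with invented values) and writes to an in-memory buffer instead of a file path; the threshold of 100 clicks is arbitrary.

```python
import io

import pandas as pd

# Hypothetical records, invented for illustration only
newsList = [
    {'newsTitle': 'A', 'newsClick': 120, 'newsUrl': 'http://example.com/1.html'},
    {'newsTitle': 'B', 'newsClick': 45, 'newsUrl': 'http://example.com/2.html'},
    {'newsTitle': 'C', 'newsClick': 300, 'newsUrl': 'http://example.com/3.html'},
]

newsdf = pd.DataFrame(newsList)

# Keep items with more than 100 clicks, most-clicked first
popular = newsdf[newsdf['newsClick'] > 100].sort_values('newsClick', ascending=False)

# Write CSV text to a buffer; index=False omits the row-number column
buffer = io.StringIO()
popular.to_csv(buffer, index=False)
csv_text = buffer.getvalue()
print(csv_text)
```

Replacing `buffer` with a file path writes the same content to disk.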