Airflow: Connecting to MySQL

```python
from airflow import DAG
from airflow.operators.python import PythonOperator
# from airflow.providers.mysql.operators.mysql import MySqlOperator
from airflow.operators.mysql_operator import MySqlOperator  # deprecated import path
from airflow.operators.dummy import DummyOperator
from liveramp.common.metadata import default_args
from airflow.models import Variable
from liveramp.common.notification import send_to_slack, generate_message_blocks_according_to_upstream
import uuid


# Callbacks are defined here but not attached to the DAG or tasks.
def failure_callback(context):
    send_to_slack(generate_message_blocks_according_to_upstream(context))


def success_callback(context):
    send_to_slack(generate_message_blocks_according_to_upstream(context))


# uuid3 is deterministic: the same namespace and name always give the same UUID,
# so a unique id per run would need a new name every time. uuid4 is random instead.
# value = uuid.uuid3(uuid.NAMESPACE_DNS, "AirFlow")
value = uuid.uuid4()
suid = ''.join(str(value).split("-"))  # 32 hex characters, dashes removed

# Three INSERT statements concatenated into one string.
sql_ = (
    'insert job(job_id, tenant_id, tenant_env, tenant_display_name, tenant_name, tenant_settings) '
    'values("{}",111,"QA","TEST","Test_display_name","hdfghjdsagfdhsgf");'
).format(suid)
sql_ = sql_ + "insert job_status(job_id, job_reference_id) values ('{}',1);".format(suid)
sql_ = sql_ + "insert job_status_log(job_id, job_reference_id,memo) values ('{}',1,'init insert');".format(suid)
print(sql_)

dag = DAG(
    'collect_requests_dag_backup',
    default_args=default_args,
    tags=['mysql', 'MySqlOperator'],
    # start_date=datetime(2021, 1, 1),
    schedule_interval=None,
    catchup=False,
)

start = DummyOperator(task_id='start', dag=dag)
end = DummyOperator(task_id='end', dag=dag)

sql_insert = MySqlOperator(
    task_id='sql_insert',
    mysql_conn_id='mysql_conn_id',  # must match the Conn Id configured in the UI
    sql=sql_,
    dag=dag,
)

start >> sql_insert >> end
```
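The DAG above glues three INSERT statements into one string. `MySqlOperator`'s `sql` argument also accepts a list of statements, which keeps each one separate and easier to read. Below is a minimal sketch of building that list; the helper name `build_job_inserts` is hypothetical, and the table and column names are copied from the DAG. Inlining the id is only acceptable because `uuid4().hex` contains nothing but hex digits; for arbitrary values, the operator's `parameters` argument is the safer route.

```python
import uuid


def build_job_inserts(job_id: str) -> list[str]:
    """Hypothetical helper: return the DAG's three INSERT statements separately.

    job_id is interpolated directly, which is only safe because uuid4().hex
    yields 32 hex characters and nothing else.
    """
    return [
        "INSERT INTO job (job_id, tenant_id, tenant_env, tenant_display_name, "
        "tenant_name, tenant_settings) "
        f"VALUES ('{job_id}', 111, 'QA', 'TEST', 'Test_display_name', 'hdfghjdsagfdhsgf')",
        f"INSERT INTO job_status (job_id, job_reference_id) VALUES ('{job_id}', 1)",
        "INSERT INTO job_status_log (job_id, job_reference_id, memo) "
        f"VALUES ('{job_id}', 1, 'init insert')",
    ]


# uuid4().hex gives the same dash-free 32-character string as
# ''.join(str(uuid.uuid4()).split("-")) in the DAG.
job_id = uuid.uuid4().hex
statements = build_job_inserts(job_id)
for stmt in statements:
    print(stmt)
```

In the DAG, the list could then be passed as `sql=build_job_inserts(suid)`.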

This version uses a deprecated import path, `from airflow.operators.mysql_operator import MySqlOperator`, but in my testing it still works.

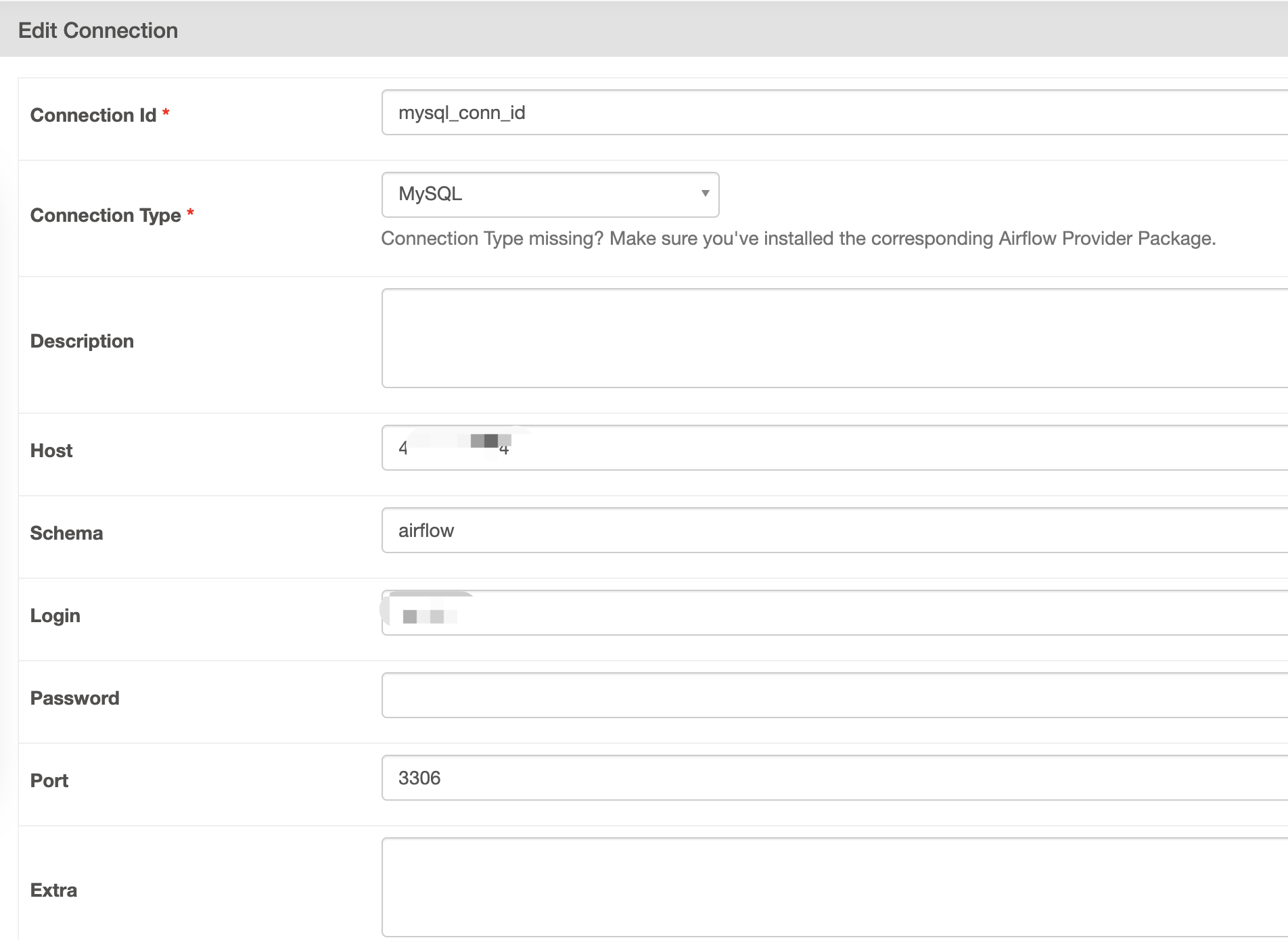

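The connection itself has to be configured in Airflow under Admin → Connections. Its Conn Id must match the `mysql_conn_id` passed to the operator (here, `mysql_conn_id`). After filling in the details, click the Test button.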
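Besides the UI, Airflow can also read a connection from an environment variable named `AIRFLOW_CONN_` followed by the Conn Id in upper case, whose value is a connection URI. A minimal sketch of building that variable for the `mysql_conn_id` used here; the helper `mysql_conn_env` and the login, password, host, port and schema are placeholders, not values from the original setup. Special characters in the credentials must be URL-encoded.

```python
from urllib.parse import quote


def mysql_conn_env(conn_id: str, login: str, password: str,
                   host: str, port: int, schema: str) -> tuple[str, str]:
    """Hypothetical helper: return (env var name, URI) for a MySQL connection."""
    # Airflow looks connections up as AIRFLOW_CONN_ + conn_id.upper().
    name = "AIRFLOW_CONN_" + conn_id.upper()
    # quote(..., safe="") escapes '@', ':' and '/' so they are not
    # mistaken for URI delimiters.
    uri = (f"mysql://{quote(login, safe='')}:{quote(password, safe='')}"
           f"@{host}:{port}/{quote(schema, safe='')}")
    return name, uri


# Placeholder credentials for illustration only.
name, uri = mysql_conn_env("mysql_conn_id", "airflow", "p@ss:word",
                           "mysql.example.internal", 3306, "jobs")
print(f"export {name}='{uri}'")
```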

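To move away from the deprecated import

`from airflow.operators.mysql_operator import MySqlOperator`

use the import from the MySQL provider package (`apache-airflow-providers-mysql`) instead:

`from airflow.providers.mysql.operators.mysql import MySqlOperator`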
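If the same DAG file has to load on installations both with and without the provider package, one option is to try the provider path first and fall back to the deprecated one. A minimal sketch with a hypothetical `import_first` helper built on `importlib`; the Airflow usage is shown as a comment, and the runnable demo resolves a standard-library name so it works without Airflow installed.

```python
import importlib


def import_first(candidates):
    """Hypothetical helper: return the first importable attribute from (module, name) pairs."""
    for module_name, attr in candidates:
        try:
            module = importlib.import_module(module_name)
        except ImportError:  # also covers ModuleNotFoundError
            continue
        if hasattr(module, attr):
            return getattr(module, attr)
    raise ImportError(f"none of {candidates!r} could be imported")


# In the DAG file (requires Airflow):
# MySqlOperator = import_first([
#     ("airflow.providers.mysql.operators.mysql", "MySqlOperator"),
#     ("airflow.operators.mysql_operator", "MySqlOperator"),
# ])

# Runnable demo without Airflow: the first module does not exist.
dumps = import_first([("no_such_module_xyz", "dumps"), ("json", "dumps")])
print(dumps({"ok": True}))
```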

```python
from airflow import DAG
from airflow.providers.mysql.operators.mysql import MySqlOperator  # provider import path
from airflow.operators.dummy import DummyOperator
from liveramp.common.metadata import default_args
from liveramp.common.notification import send_to_slack, generate_message_blocks_according_to_upstream
import uuid


# Callbacks are defined here but not attached to the DAG or tasks.
def failure_callback(context):
    send_to_slack(generate_message_blocks_according_to_upstream(context))


def success_callback(context):
    send_to_slack(generate_message_blocks_according_to_upstream(context))


value = uuid.uuid4()
suid = ''.join(str(value).split("-"))  # 32 hex characters, dashes removed

# Three INSERT statements concatenated into one string.
sql_ = (
    'insert job(job_id, tenant_id, tenant_env, tenant_display_name, tenant_name, tenant_settings) '
    'values("{}",111,"QA","TEST","Test_display_name","hdfghjdsagfdhsgf");'
).format(suid)
sql_ = sql_ + "insert job_status(job_id, job_reference_id) values ('{}',1);".format(suid)
sql_ = sql_ + "insert job_status_log(job_id, job_reference_id,memo) values ('{}',1,'init insert');".format(suid)
print(sql_)

dag = DAG(
    'collect_requests_dag',
    default_args=default_args,
    tags=['mysql', 'MySqlOperator'],
    # start_date=datetime(2021, 1, 1),
    schedule_interval=None,
    catchup=False,
)

start = DummyOperator(task_id='start', dag=dag)
end = DummyOperator(task_id='end', dag=dag)

sql_insert = MySqlOperator(
    task_id='sql_insert',
    mysql_conn_id='mysql_conn_id',  # must match the Conn Id configured in the UI
    sql=sql_,
    autocommit=True,
    dag=dag,
)

start >> sql_insert >> end
```
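Before pointing the DAG at a real server, the three inserts can be dry-run locally. A minimal sketch that uses Python's built-in `sqlite3` as a stand-in for MySQL: the table definitions are guessed from the column lists in the INSERT statements and are assumptions, the helper `insert_job` is hypothetical, and SQLite needs the explicit `INSERT INTO` form. It passes values as placeholders instead of concatenating strings, and the connection context manager commits all three rows together or rolls them all back.

```python
import sqlite3
import uuid

# Assumed schemas: only the columns named in the DAG's INSERT statements.
SCHEMA = """
CREATE TABLE job (
    job_id TEXT PRIMARY KEY, tenant_id INTEGER, tenant_env TEXT,
    tenant_display_name TEXT, tenant_name TEXT, tenant_settings TEXT);
CREATE TABLE job_status (job_id TEXT, job_reference_id INTEGER);
CREATE TABLE job_status_log (job_id TEXT, job_reference_id INTEGER, memo TEXT);
"""


def insert_job(conn: sqlite3.Connection, job_id: str) -> None:
    """Insert one job and its status rows in a single transaction."""
    with conn:  # commits on success, rolls back if any insert raises
        conn.execute(
            "INSERT INTO job (job_id, tenant_id, tenant_env, tenant_display_name,"
            " tenant_name, tenant_settings) VALUES (?, ?, ?, ?, ?, ?)",
            (job_id, 111, "QA", "TEST", "Test_display_name", "hdfghjdsagfdhsgf"),
        )
        conn.execute(
            "INSERT INTO job_status (job_id, job_reference_id) VALUES (?, ?)",
            (job_id, 1),
        )
        conn.execute(
            "INSERT INTO job_status_log (job_id, job_reference_id, memo)"
            " VALUES (?, ?, ?)",
            (job_id, 1, "init insert"),
        )


conn = sqlite3.connect(":memory:")
conn.executescript(SCHEMA)
job_id = uuid.uuid4().hex
insert_job(conn, job_id)
print(conn.execute("SELECT memo FROM job_status_log WHERE job_id = ?",
                   (job_id,)).fetchone())
```

MySQL drivers such as mysqlclient use `%s` placeholders rather than SQLite's `?`.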

------------------------- A little Progress a day makes you a big success... ----------------------------