Machine Learning Models

I. Supervised Learning

1. Regression Models

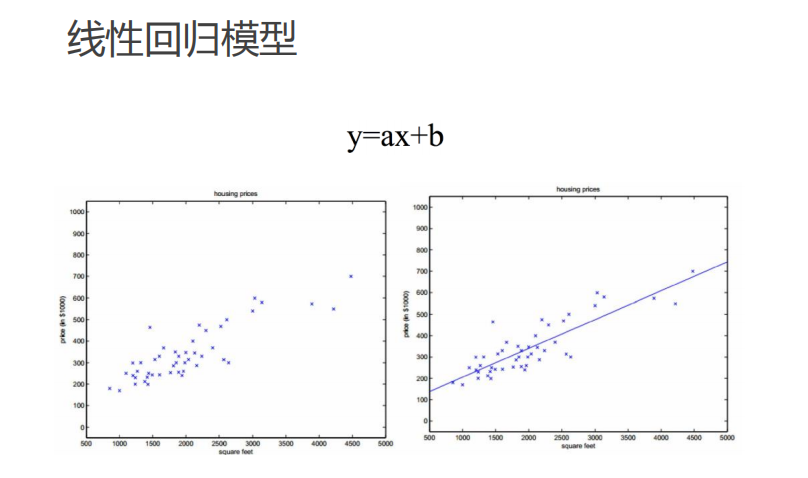

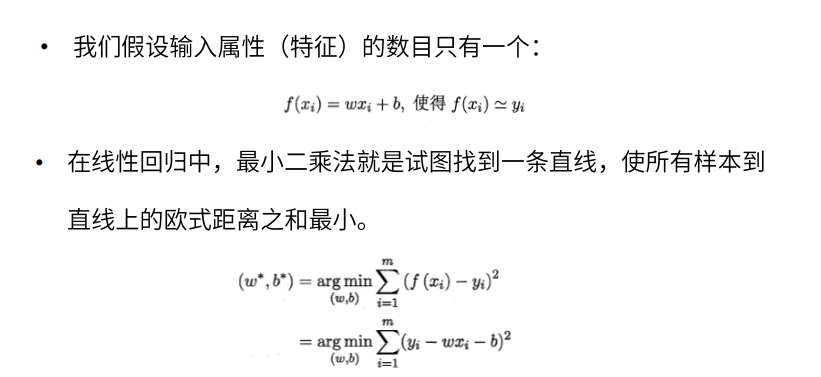

1.1 Linear Regression Model

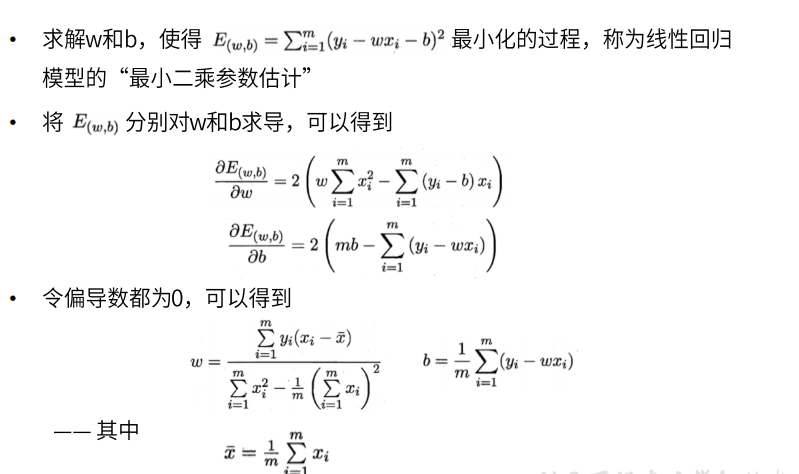

Solving

Least squares method

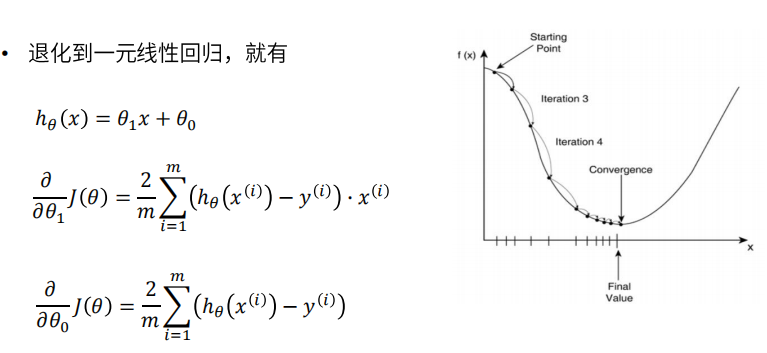
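Here is a minimal sketch of least squares. It assumes a single feature and the model y = wx + b, although the figure above may cover the general multivariate case. The closed-form solution sets w to the ratio of the covariance of x and y to the variance of x, and then picks b so the line passes through the sample means. The function name `least_squares_fit` is hypothetical.

```python
def least_squares_fit(xs, ys):
    """Closed-form least-squares fit of y = w * x + b for a single feature."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope: covariance of x and y divided by the variance of x (the 1/n factors cancel)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    w = sxy / sxx
    # Intercept: the fitted line passes through the point of means
    b = y_mean - w * x_mean
    return w, b


if __name__ == "__main__":
    # Toy data lying exactly on y = 2x + 1
    print(least_squares_fit([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0]))  # → (2.0, 1.0)
```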

Gradient descent
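The sketch below applies batch gradient descent to the same one-feature model. It assumes the loss J(w, b) = (1/2m) Σ (wx_i + b - y_i)^2. The learning rate `lr` and the epoch count are illustrative choices, not values from these notes, and `gradient_descent_linear` is a hypothetical name. Each step moves w and b against the averaged gradient.

```python
def gradient_descent_linear(xs, ys, lr=0.05, epochs=5000):
    """Fit y = w * x + b by batch gradient descent on J = (1/2m) * sum((w*x + b - y)^2)."""
    m = len(xs)
    w, b = 0.0, 0.0
    for _ in range(epochs):
        # Residual of the current model on every sample
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of J with respect to w and b
        grad_w = sum(e * x for e, x in zip(errors, xs)) / m
        grad_b = sum(errors) / m
        # Step against the gradient
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    w, b = gradient_descent_linear([1.0, 2.0, 3.0, 4.0], [3.0, 5.0, 7.0, 9.0])
    print(round(w, 4), round(b, 4))  # → 2.0 1.0
```

On data that lies exactly on y = 2x + 1, the iterates approach the same (w, b) that the closed-form least-squares solution gives. If `lr` is too large, the updates diverge instead.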

2. Classification Models

2.1 K-Nearest Neighbors (KNN)

Example

Distance computation in KNN
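KNN commonly measures distance with the L_p (Minkowski) family. Assuming that is what is meant here, the sketch below handles Manhattan (p = 1), Euclidean (p = 2) and Chebyshev (p = ∞) distance in one hypothetical helper, `lp_distance`.

```python
import math


def lp_distance(x, y, p=2):
    """Minkowski (L_p) distance; p=1 is Manhattan, p=2 is Euclidean, p=math.inf is Chebyshev."""
    diffs = [abs(a - b) for a, b in zip(x, y)]
    if p == math.inf:
        # The limit p -> infinity keeps only the largest coordinate difference
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


if __name__ == "__main__":
    a, b = (1, 1), (4, 5)
    print(lp_distance(a, b, 1))         # → 7.0
    print(lp_distance(a, b, 2))         # → 5.0
    print(lp_distance(a, b, math.inf))  # → 4
```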

KNN algorithm
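Here is a minimal sketch of KNN classification. It assumes Euclidean distance and an unweighted majority vote among the k nearest training points. `knn_predict` and the toy points in the usage lines are hypothetical, and `math.dist` requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_predict(train_X, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training points (Euclidean distance)."""
    # Distance from x to every training sample, paired with that sample's label
    dists = [(math.dist(x, xi), yi) for xi, yi in zip(train_X, train_y)]
    # Keep the k closest samples
    nearest = sorted(dists, key=lambda pair: pair[0])[:k]
    # Majority vote; ties go to the label first encountered among the nearest
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    X = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 7), (6, 7)]
    y = ["A", "A", "A", "B", "B", "B"]
    print(knn_predict(X, y, (1.5, 1.5), k=3))  # → A
```

KNN has no training step. Each prediction compares the query with every stored sample, so k is the main parameter to tune. An odd k avoids vote ties in two-class problems.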

2.2 Logistic Regression

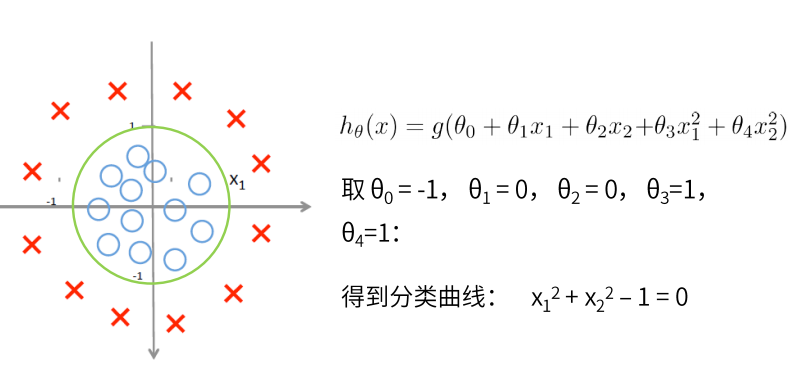

Logistic regression for classification problems

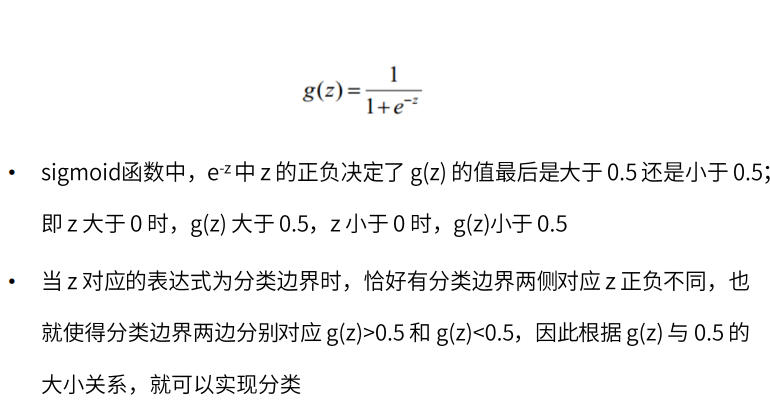

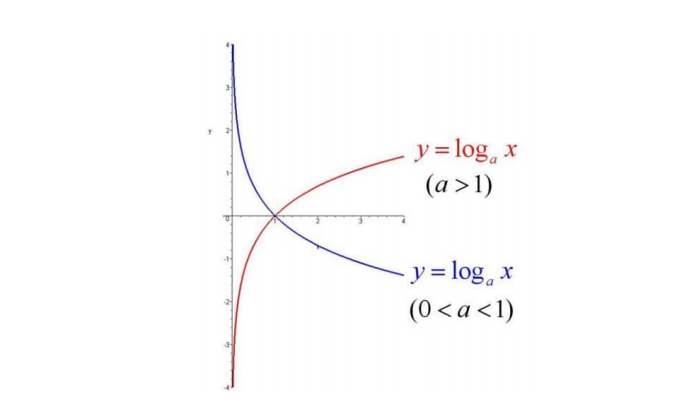

Sigmoid function (squashing function)

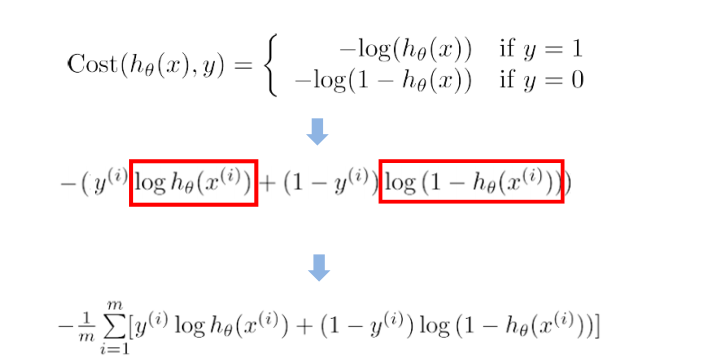

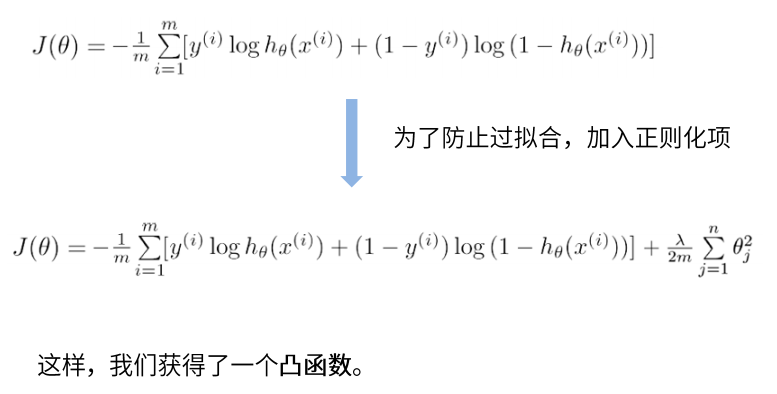
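The sigmoid σ(z) = 1 / (1 + e^(-z)) maps any real number into (0, 1), which is why it is called a squashing function. The Python sketch below assumes a scalar input. The sign branch is an implementation choice that keeps `math.exp` from overflowing for large negative z; the notes do not specify it.

```python
import math


def sigmoid(z):
    """Logistic sigmoid 1 / (1 + e^(-z)); squashes any real z into (0, 1)."""
    # Branch on the sign so math.exp never receives a large positive argument
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


if __name__ == "__main__":
    print(sigmoid(0.0))      # → 0.5
    print(sigmoid(-1000.0))  # → 0.0
```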

Logistic regression loss function

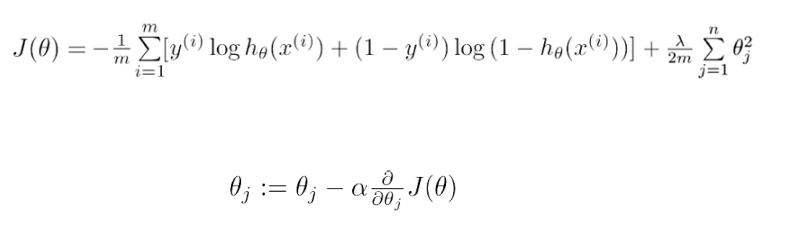
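This sketch assumes the loss in the figure is the usual negative log-likelihood of labels y ∈ {0, 1} (binary cross-entropy), averaged over the samples. `logistic_loss` is a hypothetical name. Clamping p is a numerical safeguard, not part of the definition.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_loss(w, b, X, y):
    """Average negative log-likelihood (binary cross-entropy) for labels y in {0, 1}."""
    total = 0.0
    for xi, yi in zip(X, y):
        # Predicted probability P(y = 1 | x) = sigmoid(w . x + b)
        p = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b)
        # Clamp p away from 0 and 1 so log() stays finite
        p = min(max(p, 1e-12), 1 - 1e-12)
        total += -(yi * math.log(p) + (1 - yi) * math.log(1 - p))
    return total / len(X)


if __name__ == "__main__":
    # An untrained model (w = 0, b = 0) predicts 0.5 everywhere, so the loss is ln 2
    print(logistic_loss([0.0], 0.0, [[1.0], [2.0]], [1, 0]))  # ≈ 0.6931
```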

Solving by gradient descent

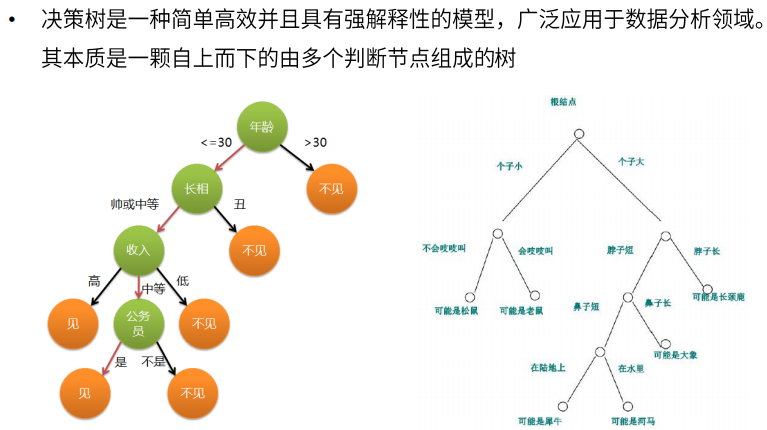
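Here is a minimal sketch of training logistic regression by batch gradient descent, assuming the averaged negative log-likelihood as the loss. For that loss, the gradient with respect to w is the average of (σ(w·x + b) - y)·x. This has the same form as in linear regression, except that the prediction passes through the sigmoid. The learning rate, epoch count and function names are illustrative assumptions.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_logistic(X, y, lr=0.1, epochs=2000):
    """Batch gradient descent on the average negative log-likelihood."""
    m, n = len(X), len(X[0])
    w, b = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for xi, yi in zip(X, y):
            # Per-sample gradient is (p - y) * x for w and (p - y) for b
            err = sigmoid(sum(wj * xj for wj, xj in zip(w, xi)) + b) - yi
            for j in range(n):
                grad_w[j] += err * xi[j]
            grad_b += err
        # Average over samples and step against the gradient
        w = [wj - lr * gj / m for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b / m
    return w, b


def predict(w, b, x):
    # Predict class 1 when P(y = 1 | x) >= 0.5
    return 1 if sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b) >= 0.5 else 0


if __name__ == "__main__":
    X = [[-2.0], [-1.0], [-0.5], [0.5], [1.0], [2.0]]
    y = [0, 0, 0, 1, 1, 1]
    w, b = train_logistic(X, y)
    print([predict(w, b, x) for x in X])  # → [0, 0, 0, 1, 1, 1]
```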

2.3 Decision Trees

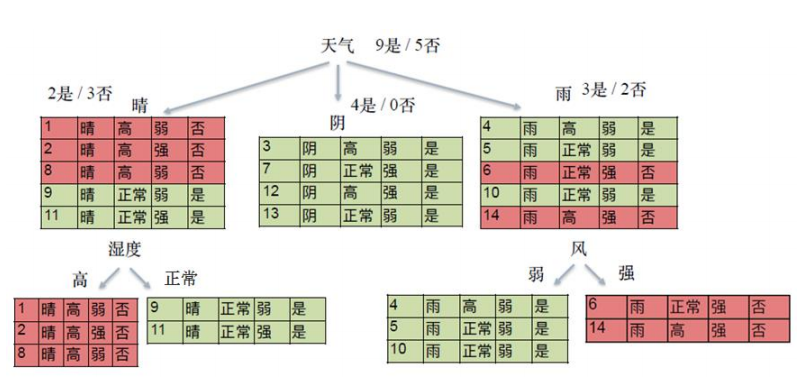

Example

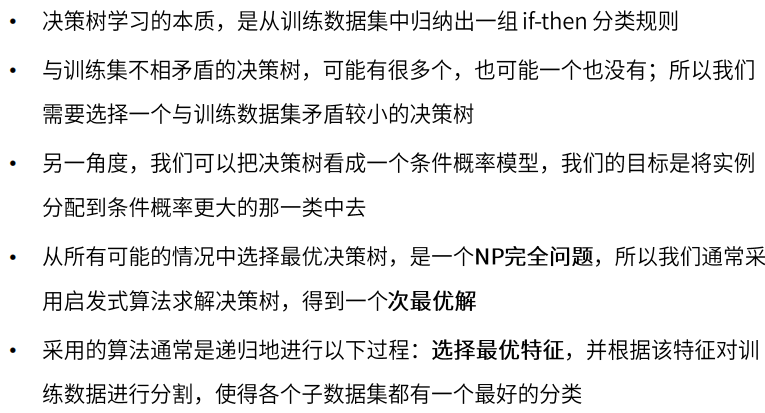

Decision trees and if-then rules

Objective of decision trees

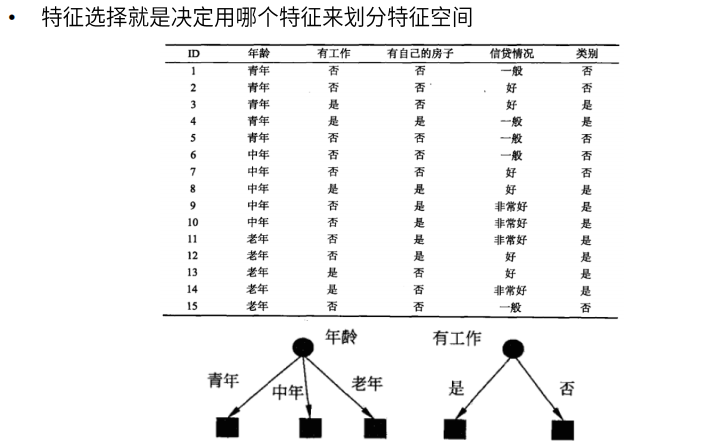

Feature selection

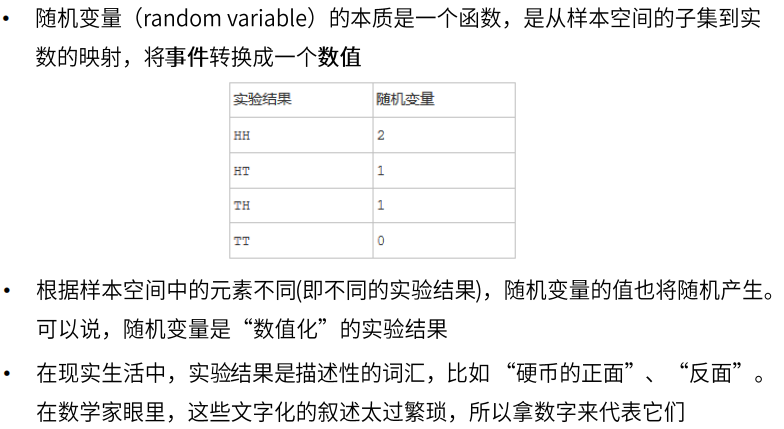

Random variables

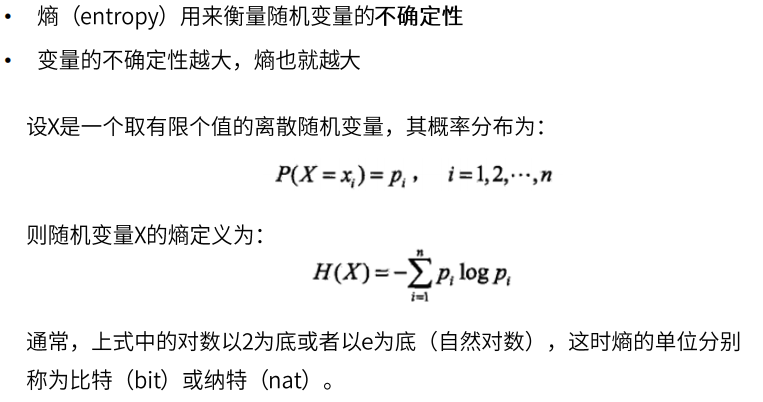

Entropy

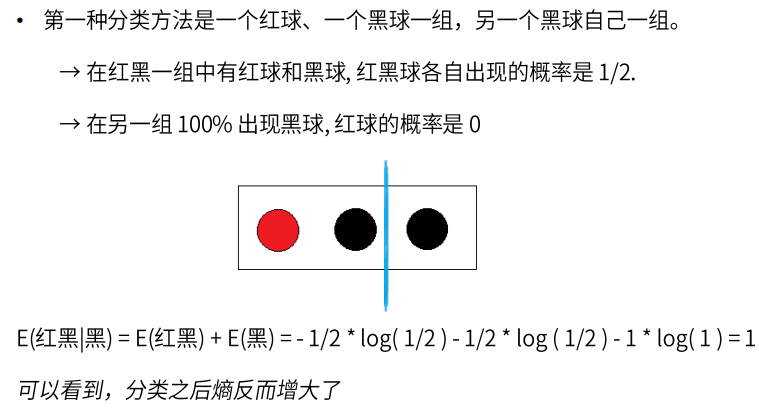
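The empirical entropy of a label set is H(D) = -Σ_k p_k log2 p_k, where p_k is the fraction of samples in class k. The sketch below assumes base-2 logarithms, so entropy is measured in bits. Some texts use the natural log instead, which only rescales the value. `entropy` is a hypothetical helper name.

```python
import math
from collections import Counter


def entropy(labels):
    """Empirical entropy H(D) = -sum_k p_k * log2(p_k) over the class frequencies in labels."""
    n = len(labels)
    # Classes absent from labels have p_k = 0 and contribute nothing (0 * log 0 := 0)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


if __name__ == "__main__":
    print(entropy(["a", "b"]))              # → 1.0 (two equally likely classes)
    print(entropy(["yes"] * 9 + ["no"] * 6))  # ≈ 0.971
```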

Example

Objective of decision trees

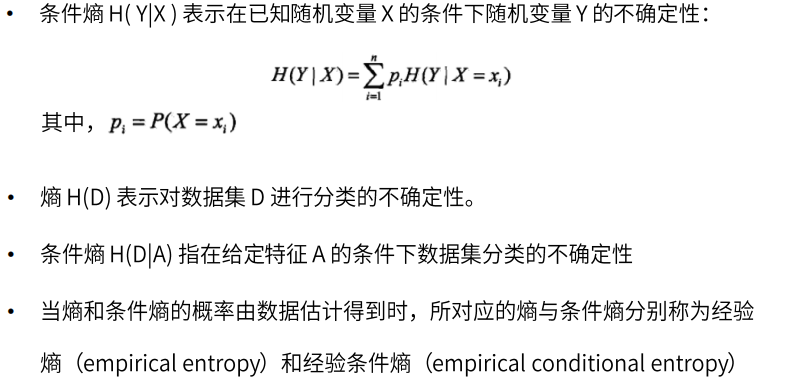

Conditional entropy
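Conditional entropy H(Y|X) = Σ_i P(X = x_i) H(Y | X = x_i) is the expected entropy of the labels once the value of X is known. The sketch below estimates it from paired samples, assuming discrete feature values and base-2 logs. The helper names are hypothetical.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    # H = -sum_k p_k * log2(p_k) over the class frequencies in labels
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def conditional_entropy(feature_values, labels):
    """H(Y|X) = sum_i P(X = x_i) * H(Y | X = x_i), estimated from paired samples."""
    # Group the labels by the value of the feature
    groups = defaultdict(list)
    for x, y in zip(feature_values, labels):
        groups[x].append(y)
    n = len(labels)
    # Weight each group's label entropy by the fraction of samples in that group
    return sum(len(g) / n * entropy(g) for g in groups.values())


if __name__ == "__main__":
    print(conditional_entropy(["a", "a", "b", "b"], [0, 0, 1, 1]))  # → 0.0 (X determines Y)
    print(conditional_entropy(["a", "b", "a", "b"], [0, 0, 1, 1]))  # → 1.0 (X says nothing about Y)
```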

Information gain
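Information gain g(D, A) = H(D) - H(D|A) measures how much splitting on feature A reduces uncertainty about the class. The self-contained sketch below makes the same assumptions as before: discrete features, base-2 logs and hypothetical names.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    # H = -sum_k p_k * log2(p_k) over the class frequencies in labels
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def information_gain(feature_values, labels):
    """g(D, A) = H(D) - H(D | A): the drop in label entropy after splitting on feature A."""
    # Partition the labels by feature value
    groups = defaultdict(list)
    for a, y in zip(feature_values, labels):
        groups[a].append(y)
    n = len(labels)
    # H(D | A): size-weighted entropy of the partitions
    cond = sum(len(g) / n * entropy(g) for g in groups.values())
    return entropy(labels) - cond


if __name__ == "__main__":
    print(information_gain(["a", "a", "b", "b"], [0, 0, 1, 1]))  # → 1.0
    print(information_gain(["a", "b", "a", "b"], [0, 0, 1, 1]))  # → 0.0
```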

Decision tree generation algorithm
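The notes do not say which generation algorithm is meant, so the sketch below assumes ID3. ID3 splits on the feature with the largest information gain and recurses on each of its values. It stops when a node is pure, when no features remain, or when the best gain falls below a threshold `eps`. Samples are dicts that map hypothetical feature names to discrete values, and the tree is returned as nested dicts.

```python
import math
from collections import Counter, defaultdict


def entropy(labels):
    # H = -sum_k p_k * log2(p_k) over the class frequencies in labels
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())


def info_gain(rows, labels, feature):
    # g(D, A) = H(D) - sum over values v of |D_v| / |D| * H(D_v)
    groups = defaultdict(list)
    for row, y in zip(rows, labels):
        groups[row[feature]].append(y)
    n = len(labels)
    return entropy(labels) - sum(len(g) / n * entropy(g) for g in groups.values())


def build_tree(rows, labels, features, eps=1e-9):
    """ID3-style generation: returns a class label (leaf) or {feature: {value: subtree}}."""
    majority = Counter(labels).most_common(1)[0][0]
    # Leaf if all samples share one class or no features remain
    if len(set(labels)) == 1 or not features:
        return majority
    # Choose the feature with the largest information gain
    best = max(features, key=lambda f: info_gain(rows, labels, f))
    if info_gain(rows, labels, best) < eps:
        return majority
    tree = {best: {}}
    remaining = [f for f in features if f != best]
    # Split on each observed value of the chosen feature and recurse on that subset
    for value in {row[best] for row in rows}:
        idx = [i for i, row in enumerate(rows) if row[best] == value]
        tree[best][value] = build_tree(
            [rows[i] for i in idx], [labels[i] for i in idx], remaining, eps
        )
    return tree


if __name__ == "__main__":
    rows = [{"f1": "x", "f2": "p"}, {"f1": "x", "f2": "q"},
            {"f1": "y", "f2": "p"}, {"f1": "y", "f2": "q"}]
    print(build_tree(rows, [1, 1, 0, 0], ["f1", "f2"]))  # f1 alone decides the label
```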

II. Unsupervised Learning

1. Clustering: k-means
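Here is a minimal sketch of k-means using Lloyd's iterations, assuming Euclidean distance and random initialization from the data points. Each iteration assigns every point to its nearest center and then moves each center to the mean of its points. The loop stops once the assignments no longer change. `kmeans`, `iters` and `seed` are hypothetical names.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's k-means: alternate nearest-center assignment and center re-averaging."""
    rng = random.Random(seed)
    # Initialize centers with k input points chosen at random
    centers = [tuple(p) for p in rng.sample(points, k)]
    assign = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest center (Euclidean distance)
        new_assign = [min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points]
        if new_assign == assign:
            break  # assignments stopped changing, so the centers are stable too
        assign = new_assign
        # Update step: move each center to the mean of its assigned points
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old center if a cluster went empty
                centers[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return centers, assign


if __name__ == "__main__":
    pts = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
    centers, labels = kmeans(pts, 2)
    print(sorted(centers), labels)
```

The result depends on the initial centers, and Lloyd's iterations only guarantee a local optimum. In practice the algorithm is often run with several seeds, keeping the run with the lowest within-cluster sum of squared distances.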