impala支持的数据库里的double(float)类型,通过迁移inceptor后,类型的值都变成了null

impala支持的数据库里的double(float)类型,通过迁移inceptor后,double类型的值都变成了null。

通过查阅日志发现默认将double转换成Decimal(38,10)然而他们的数据小数点后面不止10位。

然而那边的平台不支持decimal这个数据类型(数据目前存放在另一个平台),用户不用那个平台自己建表设成decimal就可以正常显示

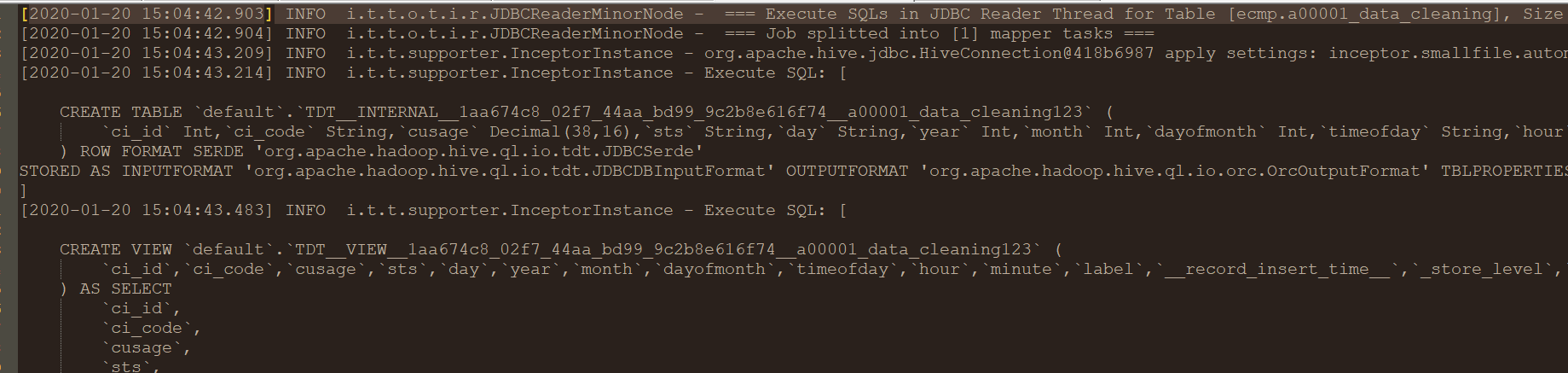

[2020-01-20 09:30:06.804] INFO i.t.t.o.t.i.r.JDBCReaderMinorNode - === Execute SQLs in JDBC Reader Thread for Table [ecmp.a00001_data_cleaning], Size [0 MB] : ===

[2020-01-20 09:30:06.806] INFO i.t.t.o.t.i.r.JDBCReaderMinorNode - === Job splitted into [1] mapper tasks ===

[2020-01-20 09:30:07.213] INFO i.t.t.supporter.InceptorInstance - org.apache.hive.jdbc.HiveConnection@7e1b82f4 apply settings: inceptor.smallfile.automerge=true

[2020-01-20 09:30:07.218] INFO i.t.t.supporter.InceptorInstance - Execute SQL: [

CREATE TABLE `default`.`TDT__INTERNAL__6f970de4_74b9_4bb1_ac3b_94e368315cb8__a00001_data_cleaning` (

`ci_id` Int,`ci_code` String,`cusage` Decimal(38,10),`sts` String,`day` String,`year` Int,`month` Int,`dayofmonth` Int,`timeofday` String,`hour` Int,`minute` Int,`label` Int,`__record_insert_time__` String,`_store_level` TinyInt,`time_label` Int

) ROW FORMAT SERDE 'org.apache.hadoop.hive.ql.io.tdt.JDBCSerde'

STORED AS INPUTFORMAT 'org.apache.hadoop.hive.ql.io.tdt.JDBCDBInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat' TBLPROPERTIES('mapreduce.jdbc.driver.class'='shade.org.apache.hive.jdbc.HiveDriver', 'mapreduce.jdbc.url'='jdbc:cdh2://10.70.248.203:21050/ecmp;auth=noSasl','mapreduce.jdbc.input.query'='SELECT ci_id,ci_code,cusage,sts,day,year,month,dayofmonth,timeofday,hour,minute,label,__record_insert_time__,_store_level,time_label FROM ecmp.a00001_data_cleaning limit 1000','mapreduce.jdbc.input.count.query'='select count(*) from (SELECT ci_id,ci_code,cusage,sts,day,year,month,dayofmonth,timeofday,hour,minute,label,__record_insert_time__,_store_level,time_label FROM ecmp.a00001_data_cleaning limit 1000) tdt_sq_alias','mapreduce.jdbc.splits.number'='1','mapreduce.jdbc.username'='admin@ziadmin01', 'mapreduce.jdbc.password'='ziadmin01','mapreduce.jdbc.driver.file'='/tmp/transporter1/6f543c31-a80f-4e14-8fac-fa71a32b8270/1579483802901.jar')

]

[2020-01-20 09:30:07.438] INFO i.t.t.supporter.InceptorInstance - Execute SQL: [

CREATE VIEW `default`.`TDT__VIEW__6f970de4_74b9_4bb1_ac3b_94e368315cb8__a00001_data_cleaning` (

`ci_id`,`ci_code`,`cusage`,`sts`,`day`,`year`,`month`,`dayofmonth`,`timeofday`,`hour`,`minute`,`label`,`__record_insert_time__`,`_store_level`,`time_label`

) AS SELECT

`ci_id`,

`ci_code`,

`cusage`,

`sts`,

`day`,

`year`,

`month`,

`dayofmonth`,

`timeofday`,

`hour`,

`minute`,

`label`,

`__record_insert_time__`,

`_store_level`,

`time_label`

FROM

`default`.`TDT__INTERNAL__6f970de4_74b9_4bb1_ac3b_94e368315cb8__a00001_data_cleaning`

]

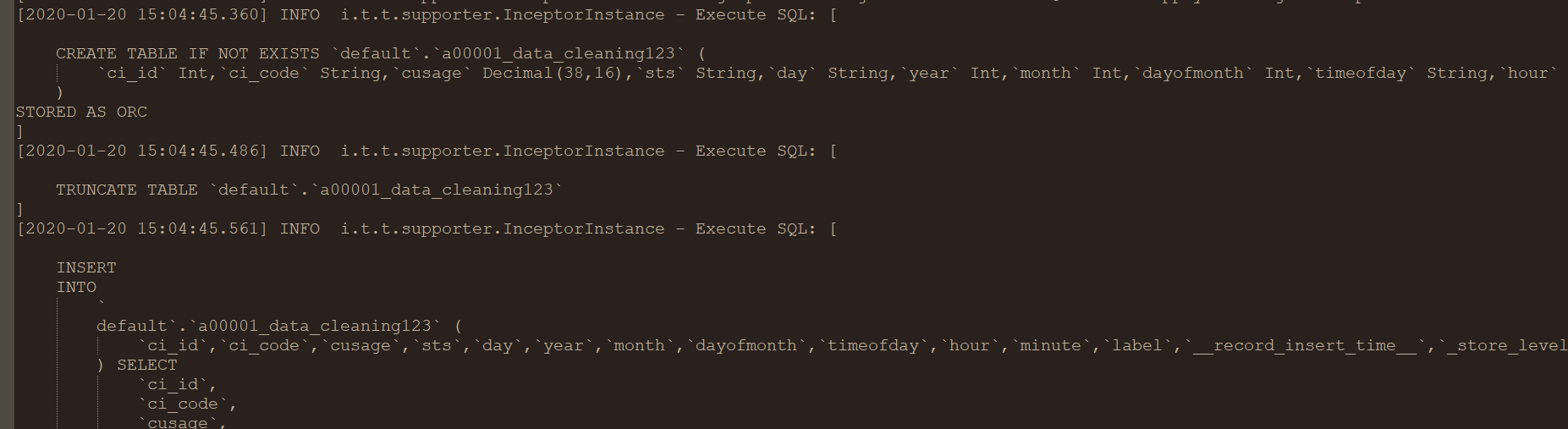

起初怀疑是transporter底层设置的问题,问了相关人士得到:

studio-1.0版本的tdt只完整测试过mysql、oracle、db2、sql server、postgresql、hana、达梦,其它数据库都没测试过

,不能保证其它数据库支持或者没有问题

然后又测试修改精度,读写两边精度都是16,但还是null

最后通过修改读取的字段的精度实现了,无需修改底层

SELECT ci_id,ci_code,CAST(cusage AS DECIMAL(38,16)) AS cusage,sts,day,year,month,dayofmonth,timeofday,hour,minute,label,__record_insert_time__,_store_level,time_label FROM ecmp.a00001_data_cleaning limit 1000