MHA 数据库高可用+ GTID 同步测试部署

目录

一、部署MHA 环境准备

1.1 虚拟机信息:

192.168.1.57 node57 数据库主节点

192.168.1.58 node58 数据库从节点1

192.168.1.59 node59 数据库从节点2

192.168.1.59 node59 MHA服务

192.168.1.59 vip vip

1.2 安装包信息:良心博主已经下载好rpm离线版

manager

mha4mysql-manager-0.58-0.el7.centos.noarch.rpm

node

mha4mysql-node-0.58-0.el7.centos.noarch.rpm

mysql 监控

perl-DBD-MySQL-4.023-6.el7.x86_64.rpm

epel-release-latest-7.noarch.rpm mha4mysql-manager-0.58-0.el7.centos.noarch.rpm mha4mysql-node-0.58-0.el7.centos.noarch.rpm mysql57-community-release-el7-10.noarch.rpm perl-5.16.3-294.el7_6.x86_64.rpm perl-Class-Load-0.20-3.el7.noarch.rpm perl-Compress-Raw-Bzip2-2.061-3.el7.x86_64.rpm perl-Compress-Raw-Zlib-2.061-4.el7.x86_64.rpm perl-Config-Tiny-2.14-7.el7.noarch.rpm perl-Data-Dumper-2.145-3.el7.x86_64.rpm perl-Data-OptList-0.107-9.el7.noarch.rpm perl-DBD-MySQL-4.023-6.el7.x86_64.rpm perl-DBI-1.627-4.el7.x86_64.rpm perl-devel-5.16.3-294.el7_6.x86_64.rpm perl-IO-Compress-2.061-2.el7.noarch.rpm perl-IO-Socket-IP-0.21-5.el7.noarch.rpm perl-IO-Socket-SSL-1.94-7.el7.noarch.rpm perl-List-MoreUtils-0.33-9.el7.x86_64.rpm perl-MailTools-2.12-2.el7.noarch.rpm perl-Module-Implementation-0.06-6.el7.noarch.rpm perl-Module-Runtime-0.013-4.el7.noarch.rpm perl-Mozilla-CA-20130114-5.el7.noarch.rpm perl-Net-Daemon-0.48-5.el7.noarch.rpm perl-Net-LibIDN-0.12-15.el7.x86_64.rpm perl-Net-SMTP-SSL-1.01-13.el7.noarch.rpm perl-Net-SSLeay-1.55-6.el7.x86_64.rpm perl-Package-DeprecationManager-0.13-7.el7.noarch.rpm perl-Package-Stash-0.34-2.el7.noarch.rpm perl-Package-Stash-XS-0.26-3.el7.x86_64.rpm perl-Params-Util-1.07-6.el7.x86_64.rpm perl-Params-Validate-1.08-4.el7.x86_64.rpm perl-PlRPC-0.2020-14.el7.noarch.rpm perl-Sub-Install-0.926-6.el7.noarch.rpm perl-Sys-Syslog-0.33-3.el7.x86_64.rpm perl-TimeDate-2.30-2.el7.noarch.rpm perl-Try-Tiny-0.12-2.el7.noarch.rpm

二、MHA 基本信息操作

MHA 通俗就是监控数据库状态,实现高可用,即使宕机一台,不影响程序使用,原理:MHA 有检测VIP状态,检测不到时,通过自带的脚本在slave 端选数据库主节点,并启动VIP(VIP漂移)。

2.1 添加IP映射

cat >> /etc/hosts << EOF

############ MHA IP hosts ############ 192.168.1.57 node57

192.168.1.58 node58

192.168.1.59 node59

192.168.1.59 vip

EOF

2.2 安装依赖包

cd /home/backupfile/mha

yum install wget -y

yum install -y perl-DBD-MySQL

perl-Config-Tiny

perl-Log-Dispatch

perl-Parallel-ForkManager

yum install perl-Time-HiRes perl-Parallel-ForkManager -y

wget http://mysql-master-ha.googlecode.com/files/mha4mysql-manager-0.55-0.el6.noarch.rpm

wget http://mysql-master-ha.googlecode.com/files/mha4mysql-node-0.54-0.el6.noarch.rpm

rpm -ivh mha4mysql-node-0.54-0.el6.noarch.rpm

rpm -ivh mha4mysql-manager-0.55-0.el6.noarch.rpm

三、部署mysql 主从参考:mysql搭建以及主从复制

https://www.cnblogs.com/pythonx/p/12054819.html

四、MHA 搭建

安装MHA服务,如果资源允许的话,在两台从节点安装,我这里为减轻mysql主服务压力,吧MHA安装在两台从节点,或者你mysql是一主一从,在主从都可以安装MHA服务。

以下操作在 192.168.1.59 操作

4.1 创建MHA工作目录

mkdir /etc/masterha

4.2 创建MHA日志目录

mkdir /etc/masterha/log

4.3 编辑MHA配置文件

slave : 复制用户

manager : 管理Mysql用户(当时是写root,不知道为什么健康检查一直 是 is NOT OK ,改为其他普通用户就可以了)

master_ip_online_change_script IP漂移故障脚本检查,网上有的添加,我这里直接注释

[root@node59]

vi /etc/masterha/app1.cnf [server default]

#MHA配置文件 remote_workdir=/etc/masterha/app1

#MHA日志 manager_log=/etc/masterha/log/manager.log

#MHA工作目录 manager_workdir=/etc/masterha

#mysql日志目录,根据自己安装目录定义 master_binlog_dir=/home/ap/mysql/data #切换调用的脚本 master_ip_failover_script=/etc/masterha/master_ip_failover report_script=/etc/masterha/send_report #网上说有添加故障脚本,我这里测试没有通过,反而注释就可以

#master_ip_online_change_script=/etc/masterha/master_ip_online_change #MySQL的用户和密码 user=manager password=123456 port=31061 #系统ssh用户 ssh_user=root ssh_port=22 #复制用户 ping_interval=2 repl_user=slave repl_password=123456 port=3306 ssh_port=22

[server1] candidate_master=1 check_repl_delay=0 hostname=192.168.1.57 port=3306 ssh_port=22 [server2] candidate_master=1 check_repl_delay=0 hostname=192.168.1.58 port=3306 ssh_port=22 [server3] candidate_master=1 no_master=1 check_repl_delay=0 hostname=192.168.1.59 ssh_port=22 port=3306

#//设置为候选master,如果设置该参数以后,发生主从切换以后将会将此从库提升为主库,即使这个主库不是集群中事件最新的slave

#//默认情况下如果一个slave落后master 100M的relay logs的话,MHA将不会选择该slave作为一个新的master

#因为对于这个slave的恢复需要花费很长时间,通过设置check_repl_delay=0,

#MHA触发切换在选择一个新的master的时候将会忽略复制延时,这个参数对于设置了candidate_master=1的主机非常有用,

#因为这个候选主在切换的过程中一定是新的master

4.4 添加 vip启动脚本

这里我使用的是ifconfig 命令添加,也可以使用ip 命令

/sbin/ip addr add $vip dev ens192

/sbin/ip addr del $vip dev ens192

[root@node59]

vi init_vip.sh

#!/bin/bash

vip="192.168.1.249/24"

key="2"

/sbin/ifconfig ens192:$key $vip

4.5 添加 vip 删除脚本

[root@node59] vi drop_vip.sh

#!/bin/bash

vip="192.168.1.249/24"

key="2"

/sbin/ifconfig ens192:$key down

4.6 mysqlbinlog设置软连接

我mysql安装/home/ap/mysql

ln -s /home/ap/mysql/bin/mysql /usr/bin/mysql ln -s /home/ap/mysql/bin/mysqlbinlog /usr/bin/mysqlbinlog

五、各个主机配置免密登录,配置互信

以下操作每台都执行

5.1 生成秘钥,如果有秘钥可以忽略此步骤 ssh-keygen 5.2 拷贝公钥 ssh-copy-id node57 ssh-copy-id node58 ssh-copy-id node59 5.3 测试是否可以免密登录,第一次需要输入yes ssh node57 ssh node58 ssh node59

六、MHA服务检查

6.1、主机信任检查

显示All SSH connection tests passed successfully. 为正常。

masterha_check_ssh --conf=/etc/masterha/app1.cnf

Wed Jan 8 18:00:57 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Wed Jan 8 18:00:57 2020 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Wed Jan 8 18:00:57 2020 - [info] Updating application default configuration from /etc/masterha/pm/load_cnf..

Can't exec "/etc/masterha/pm/load_cnf": No such file or directory at /usr/share/perl5/vendor_perl/MHA/Config.pm line 365.

Wed Jan 8 18:00:57 2020 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Wed Jan 8 18:00:57 2020 - [info] Starting SSH connection tests..

Wed Jan 8 18:00:58 2020 - [debug]

Wed Jan 8 18:00:57 2020 - [debug] Connecting via SSH from root@192.168.1.57(192.168.1.57:22) to root@192.168.1.58(192.168.1.58:22)..

Wed Jan 8 18:00:57 2020 - [debug] ok.

Wed Jan 8 18:00:58 2020 - [debug]

Wed Jan 8 18:00:58 2020 - [debug] Connecting via SSH from root@192.168.1.57(192.168.1.57:22) to root@192.168.1.59(192.168.1.59:22)..

Wed Jan 8 18:00:58 2020 - [debug] ok.

Wed Jan 8 18:00:58 2020 - [debug] Connecting via SSH from root@192.168.1.58(192.168.1.58:22) to root@192.168.1.57(192.168.1.57:22)..

Wed Jan 8 18:00:58 2020 - [debug] ok.

Wed Jan 8 18:00:57 2020 - [debug] Connecting via SSH from root@192.168.1.58(192.168.1.58:22) to root@192.168.1.58(192.168.1.59:22)..

Wed Jan 8 18:00:57 2020 - [debug] ok.

Wed Jan 8 18:00:58 2020 - [debug]

Wed Jan 8 18:00:58 2020 - [debug] Connecting via SSH from root@192.168.1.59(192.168.1.59:22) to root@192.168.1.57(192.168.1.57:22)..

Wed Jan 8 18:00:58 2020 - [debug] ok.

Wed Jan 8 18:00:58 2020 - [debug] Connecting via SSH from root@192.168.1.59(192.168.1.59:22) to root@192.168.1.147(192.168.1.58:22)..

Wed Jan 8 18:00:58 2020 - [debug] ok.

Wed Jan 8 18:00:58 2020 - [info] All SSH connection tests passed successfully.

6.2、mysql健康检查

最后显示 MySQL Replication Health is OK. 为正常。

masterha_check_repl --conf=/etc/masterha/app1.cnf

Sat Jan 11 17:03:41 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping.

Sat Jan 11 17:03:41 2020 - [info] Reading application default configuration from /etc/masterha/app1.cnf..

Sat Jan 11 17:03:41 2020 - [info] Reading server configuration from /etc/masterha/app1.cnf..

Sat Jan 11 17:03:41 2020 - [info] MHA::MasterMonitor version 0.58.

Sat Jan 11 17:03:42 2020 - [info] GTID failover mode = 1

Sat Jan 11 17:03:42 2020 - [info] Dead Servers:

Sat Jan 11 17:03:42 2020 - [info] Alive Servers:

Sat Jan 11 17:03:42 2020 - [info] 192.168.1.57(192.168.1.57:3306)

Sat Jan 11 17:03:42 2020 - [info] 192.168.1.58(192.168.1.58:3306)

Sat Jan 11 17:03:42 2020 - [info] 192.168.1.59(192.168.1.59:3306)

Sat Jan 11 17:03:42 2020 - [info] Alive Slaves:

Sat Jan 11 17:03:42 2020 - [info] 192.168.1.58(192.168.1.58:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled

Sat Jan 11 17:03:42 2020 - [info] GTID ON

Sat Jan 11 17:03:42 2020 - [info] Replicating from 192.168.1.57(192.168.1.57:3306)

Sat Jan 11 17:03:42 2020 - [info] Primary candidate for the new Master (candidate_master is set)

Sat Jan 11 17:03:42 2020 - [info] Current Alive Master: 192.168.1.57(192.168.1.57:3306)

Sat Jan 11 17:03:42 2020 - [info] Checking slave configurations..

Sat Jan 11 17:03:42 2020 - [info] Checking replication filtering settings..

Sat Jan 11 17:03:42 2020 - [info] binlog_do_db= , binlog_ignore_db=

Sat Jan 11 17:03:42 2020 - [info] Replication filtering check ok.

Sat Jan 11 17:03:42 2020 - [info] GTID (with auto-pos) is supported. Skipping all SSH and Node package checking.

Sat Jan 11 17:03:42 2020 - [info] Checking SSH publickey authentication settings on the current master..

Sat Jan 11 17:03:42 2020 - [info] HealthCheck: SSH to 192.168.1.57 is reachable.

Sat Jan 11 17:03:42 2020 - [info]

192.168.1.57(192.168.1.57:3306) (current master)

+--192.168.1.58(192.168.1.58:3306)

Sat Jan 11 17:03:42 2020 - [info] Checking replication health on 192.168.1.58..

Sat Jan 11 17:03:42 2020 - [info] ok.

Sat Jan 11 17:03:42 2020 - [info] Checking master_ip_failover_script status:

Sat Jan 11 17:03:42 2020 - [info] /etc/masterha/master_ip_failover --command=status --ssh_user=root --orig_master_host=192.168.1.57 --orig_master_ip=192.168.1.57 --orig_master_port=3306

IN SCRIPT TEST====/sbin/ifconfig ens192:2 down==/sbin/ifconfig ens192:2 192.168.1.249/24===

Checking the Status of the script.. OK

ssh: Could not resolve hostname cluster1: Name or service not known

Sat Jan 11 17:03:43 2020 - [info] OK.

Sat Jan 11 17:03:43 2020 - [warning] shutdown_script is not defined.

Sat Jan 11 17:03:43 2020 - [info] Got exit code 0 (Not master dead).

MySQL Replication Health is OK.

6.3 确认mysql event_scheduler 是否关闭

如果没有关闭三台数据库都执行以下命令

mysql>show variables like 'event_scheduler'

mysql>set global event_scheduler=off;

6.4、从启动 mha

nohup /usr/bin/masterha_manager --conf=/etc/masterha/app1.cnf --ignore_last_failover < /dev/null > /etc/masterha/log/manager.log 2 >&1 &

6.5、检查状态

masterha_check_status --conf=/etc/masterha/app1.cnf

app1 (pid:20454) is running(0:PING_OK), master:192.168.1.57

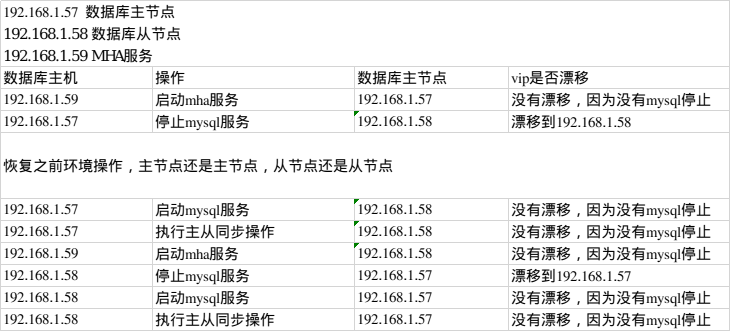

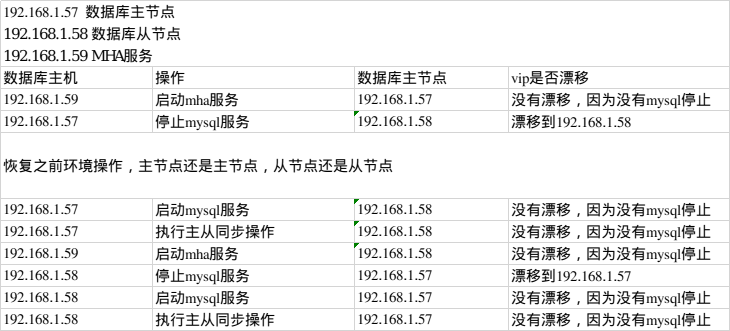

七、检查vip 漂移

为了模拟MHA服务是否真正管用,这里测试MHAVIP漂移是否真正漂移,第一测试需要手动在数据库主节点(57) 操作,创建vip,执行sh init_vip.sh

测试步骤,停止主数据库节点(57),查询vip 是否漂移到从数据库节点上(58)。

使用vip 登录数据库, select @@server_id; 查询是否和从数据库的server_id一致。

然后在启动主数据库节点(57),这里从数据库节点为主(58),刚启动旧主数据库节点(57),在(57)执行以下命令,主从同步。

stop slave; change master to master_host='192.168.1.58',master_port=3306, master_user='slave', master_password='123456', MASTER_AUTO_POSITION=1; reset slave; start slave; show slave status\G;

7.1、192.168.1.57 操作

检查是否有vip ,如果没有执行 sh init_vip.sh

[root@node-57 masterha]#ifconfig

ens192:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.249 netmask 255.255.255.0 broadcast 192.168.1.255

ether 00:0c:29:5b:ff:bb txqueuelen 1000 (Ethernet)

停止主mysql 在 192.168.1.57 操作

[root@node-57 masterha]#/etc/init.d/mysql stop

7.2 在192.168.1.58 操作

[root@node58 masterha]#ifconfig

ens192:2: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 192.168.1.249 netmask 255.255.255.0 broadcast 192.168.1.255

ether 00:0c:29:5b:ff:bb txqueuelen 1000 (Ethernet)

[root@node58 masterha]# mysql -uroot -h192.168.1.249 -P31061 -p123456 -e "select @@server_id" mysql: [Warning] Using a password on the command line interface can be insecure. +-------------+ | @@server_id | +-------------+ | 10 | +-------------+

7.3 这里也可以使用手动恢复之前环境。

192.168.1.57 主数据库节点

192.168.1.58 主数据库从节点,手动添加节点和删除节点,我这里没有使用,大家可以尝试一下。

#####################################################################################################################################################

masterha_conf_host --command=delete --conf=/etc/masterha/app1.cnf --hostname=192.168.1.147 -block=1

masterha_conf_host --command=add --conf=/etc/masterha/app1.cnf --hostname=192.168.1.147 -block=1 --params="candidate_master=1;check_repl_delay=0;port=31061"

#####################################################################################################################################################

将slava 变为主, 将 主 变为slava ,恢复之前操作,为了方便下面操作。

两次 YES

[root@node59 masterha]# masterha_master_switch --conf=/etc/masterha/app1.cnf --master_state=alive --orig_master_is_new_slave --running_updates_limit=1000 Sat Jan 11 17:40:54 2020 - [info] MHA::MasterRotate version 0.58. Sat Jan 11 17:40:54 2020 - [info] Starting online master switch.. Sat Jan 11 17:40:54 2020 - [info] Sat Jan 11 17:40:54 2020 - [info] * Phase 1: Configuration Check Phase.. Sat Jan 11 17:40:54 2020 - [info] Sat Jan 11 17:40:54 2020 - [warning] Global configuration file /etc/masterha_default.cnf not found. Skipping. Sat Jan 11 17:40:54 2020 - [info] Reading application default configuration from /etc/masterha/app1.cnf.. Sat Jan 11 17:40:54 2020 - [info] Reading server configuration from /etc/masterha/app1.cnf.. Sat Jan 11 17:40:55 2020 - [info] GTID failover mode = 1 Sat Jan 11 17:40:55 2020 - [info] Current Alive Master: 192.168.1.57(192.168.1.57:3306) Sat Jan 11 17:40:55 2020 - [info] Alive Slaves: Sat Jan 11 17:40:55 2020 - [info] 192.168.1.58(192.168.1.58:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled Sat Jan 11 17:40:55 2020 - [info] GTID ON Sat Jan 11 17:40:55 2020 - [info] Replicating from 192.168.1.57(192.168.1.57:3306) Sat Jan 11 17:40:55 2020 - [info] Primary candidate for the new Master (candidate_master is set) It is better to execute FLUSH NO_WRITE_TO_BINLOG TABLES on the master before switching. Is it ok to execute on 192.168.1.57(192.168.1.57:3306)? (YES/no): YES Sat Jan 11 17:40:58 2020 - [info] Executing FLUSH NO_WRITE_TO_BINLOG TABLES. This may take long time.. Sat Jan 11 17:40:58 2020 - [info] ok. Sat Jan 11 17:40:58 2020 - [info] Checking MHA is not monitoring or doing failover.. Sat Jan 11 17:40:58 2020 - [info] Checking replication health on 192.168.1.58.. Sat Jan 11 17:40:58 2020 - [info] ok. Sat Jan 11 17:40:58 2020 - [info] Searching new master from slaves.. Sat Jan 11 17:40:58 2020 - [info] Candidate masters from the configuration file: Sat Jan 11 17:40:58 2020 - [info] 192.168.1.57(192.168.1.57:3306) Version=5.7.26-log log-bin:enabled Sat Jan 11 17:40:58 2020 - [info] GTID ON Sat Jan 11 17:40:58 2020 - [info] 192.168.1.58(192.168.1.58:3306) Version=5.7.26-log (oldest major version between slaves) log-bin:enabled Sat Jan 11 17:40:58 2020 - [info] GTID ON Sat Jan 11 17:40:58 2020 - [info] Replicating from 192.168.1.57(192.168.1.57:3306) Sat Jan 11 17:40:58 2020 - [info] Primary candidate for the new Master (candidate_master is set) Sat Jan 11 17:40:58 2020 - [info] Non-candidate masters: Sat Jan 11 17:40:58 2020 - [info] Searching from candidate_master slaves which have received the latest relay log events.. Sat Jan 11 17:40:58 2020 - [info] From: 192.168.1.57(192.168.1.57:3306) (current master) +--192.168.1.58(192.168.1.58:3306) To: 192.168.1.58(192.168.1.58:3306) (new master) +--192.168.1.57(192.168.1.57:3306) Starting master switch from 192.168.1.57(192.168.1.57:3306) to 192.168.1.58(192.168.1.58:3306)? (yes/NO): yes Sat Jan 11 17:41:00 2020 - [info] Checking whether 192.168.1.58(192.168.1.58:3306) is ok for the new master.. Sat Jan 11 17:41:00 2020 - [info] ok. Sat Jan 11 17:41:00 2020 - [info] 192.168.1.57(192.168.1.57:3306): SHOW SLAVE STATUS returned empty result. To check replication filtering rules, temporarily executing CHANGE MASTER to a dummy host. Sat Jan 11 17:41:00 2020 - [info] 192.168.1.57(192.168.1.57:3306): Resetting slave pointing to the dummy host. Sat Jan 11 17:41:00 2020 - [info] ** Phase 1: Configuration Check Phase completed. Sat Jan 11 17:41:00 2020 - [info] Sat Jan 11 17:41:00 2020 - [info] * Phase 2: Rejecting updates Phase.. Sat Jan 11 17:41:00 2020 - [info] master_ip_online_change_script is not defined. If you do not disable writes on the current master manually, applications keep writing on the current master. Is it ok to proceed? (yes/NO): yes Sat Jan 11 17:41:04 2020 - [info] Locking all tables on the orig master to reject updates from everybody (including root): Sat Jan 11 17:41:04 2020 - [info] Executing FLUSH TABLES WITH READ LOCK.. Sat Jan 11 17:41:04 2020 - [info] ok. Sat Jan 11 17:41:04 2020 - [info] Orig master binlog:pos is master-bin.000014:8073944. Sat Jan 11 17:41:04 2020 - [info] Waiting to execute all relay logs on 192.168.1.58(192.168.1.58:3306).. Sat Jan 11 17:41:04 2020 - [info] master_pos_wait(master-bin.000014:8073944) completed on 192.168.1.58(192.168.1.58:3306). Executed 0 events. Sat Jan 11 17:41:04 2020 - [info] done. Sat Jan 11 17:41:04 2020 - [info] Getting new master's binlog name and position.. Sat Jan 11 17:41:04 2020 - [info] slave-bin.000009:7714282 Sat Jan 11 17:41:04 2020 - [info] All other slaves should start replication from here. Statement should be: CHANGE MASTER TO MASTER_HOST='192.168.1.58', MASTER_PORT=3306, MASTER_AUTO_POSITION=1, MASTER_USER='slave', MASTER_PASSWORD='xxx'; Sat Jan 11 17:41:04 2020 - [info] Setting read_only=0 on 192.168.1.58(192.168.1.58:3306).. Sat Jan 11 17:41:04 2020 - [info] ok. Sat Jan 11 17:41:04 2020 - [info] Sat Jan 11 17:41:04 2020 - [info] * Switching slaves in parallel.. Sat Jan 11 17:41:04 2020 - [info] Sat Jan 11 17:41:04 2020 - [info] Unlocking all tables on the orig master: Sat Jan 11 17:41:04 2020 - [info] Executing UNLOCK TABLES.. Sat Jan 11 17:41:04 2020 - [info] ok. Sat Jan 11 17:41:04 2020 - [info] Starting orig master as a new slave.. Sat Jan 11 17:41:04 2020 - [info] Resetting slave 192.168.1.57(192.168.1.57:3306) and starting replication from the new master 192.168.1.58(192.168.1.58:3306).. Sat Jan 11 17:41:04 2020 - [info] Executed CHANGE MASTER. Sat Jan 11 17:41:04 2020 - [info] Slave started. Sat Jan 11 17:41:04 2020 - [info] All new slave servers switched successfully. Sat Jan 11 17:41:04 2020 - [info] Sat Jan 11 17:41:04 2020 - [info] * Phase 5: New master cleanup phase.. Sat Jan 11 17:41:04 2020 - [info] Sat Jan 11 17:41:04 2020 - [info] 192.168.1.58: Resetting slave info succeeded. Sat Jan 11 17:41:04 2020 - [info] Switching master to 192.168.1.58(192.168.1.58:3306) completed successfully.

7.4 恢复之前环境还有一种办法

192.168.1.57 主 ----->停止mysql服务

192.168.1.58 变为了主节点,vip 也漂移到58上。

启动 192.168.1.57 mysql 服务,执行主从同步 192.168.1.57 是从节点。192.168.1.58 是主节点。

stop slave; change master to master_host='192.168.1.58',master_port=3306, master_user='slave', master_password='123456', MASTER_AUTO_POSITION=1; reset slave; start slave; show slave status\G;

停止 现在的主数据库节点192.168.1.58, 然而 192.168.1.57 从节点会变为数据库的主节点,vip 会漂移到57 服务上

在启动192.168.1.58mysql 服务,执行主从同步,环境还原。

MHA常见问题:

https://www.cnblogs.com/pythonx/protected/p/12177588.html

问题:

slava 没有权限change

change master to master_auto_position=0;192.168.1.57

浙公网安备 33010602011771号

浙公网安备 33010602011771号