torch.nn.AdaptiveMaxPool2d

Examples from the official documentation:

import torch

import torch.nn as nn

target output size of 5x7

m = nn.AdaptiveMaxPool2d((5, 7))

input = torch.randn(1, 64, 8, 9)

output = m(input)

output.size()

torch.Size([1, 64, 5, 7])

target output size of 7x7 (square)

m = nn.AdaptiveMaxPool2d(7)

input = torch.randn(1, 64, 10, 9)

output = m(input)

output.size()

torch.Size([1, 64, 7, 7])

target output size of 10x7

m = nn.AdaptiveMaxPool2d((None, 7))

input = torch.randn(1, 64, 10, 9)

output = m(input)

output.size()

torch.Size([1, 64, 10, 7])

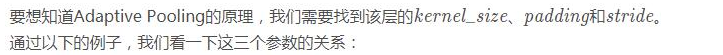

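What makes adaptive pooling special is that the size of the output tensor is always the given output_size. For example, if the input tensor has size (1, 64, 8, 9) and the output size is set to (5, 7), the adaptive pooling layer produces a tensor of size (1, 64, 5, 7).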
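To make this concrete, here is a minimal sketch that feeds inputs with different spatial sizes through the same adaptive layer. The input shapes and the names `pool`, `shapes`, and `out_shapes` are illustrative choices, not taken from the official examples.

```python
import torch
import torch.nn as nn

# One adaptive layer with a fixed target size of (5, 7).
pool = nn.AdaptiveMaxPool2d((5, 7))

# Inputs with different batch, channel, and spatial sizes (illustrative values).
shapes = [(1, 64, 8, 9), (1, 64, 20, 30), (2, 3, 5, 7)]

# Only the last two dimensions are pooled; batch and channel dimensions pass through.
out_shapes = [tuple(pool(torch.randn(s)).shape) for s in shapes]

for s, o in zip(shapes, out_shapes):
    print(s, "->", o)
```

Whatever the input height and width, the last two dimensions of the output are always (5, 7).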

import math

inputsize = 9

outputsize = 4

input = torch.randn(1, 1, inputsize)

input

tensor([[[ 1.5695, -0.4357, 1.5179, 0.9639, -0.4226, 0.5312, -0.5689, 0.4945, 0.1421]]])

m1 = nn.AdaptiveMaxPool1d(outputsize)

m2 = nn.MaxPool1d(kernel_size=math.ceil(inputsize / outputsize), stride=math.floor(inputsize / outputsize), padding=0)

output1 = m1(input)

output2 = m2(input)

output1

tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4])

output2

tensor([[[1.5695, 1.5179, 0.5312, 0.4945]]]) torch.Size([1, 1, 4])

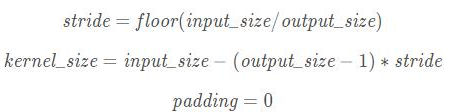
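The comparison above can also be checked window by window. The sketch below assumes the commonly described rule for adaptive pooling, where output position i covers input indices from floor(i * in / out) up to, but not including, ceil((i + 1) * in / out). The helpers `adaptive_windows` and `fixed_windows` are hypothetical names written for this illustration, not part of PyTorch.

```python
import torch
import torch.nn as nn


def adaptive_windows(in_size, out_size):
    """[start, end) index range of each window under the assumed adaptive rule."""
    return [
        ((i * in_size) // out_size,                      # floor(i * in / out)
         ((i + 1) * in_size + out_size - 1) // out_size)  # ceil((i + 1) * in / out)
        for i in range(out_size)
    ]


def fixed_windows(in_size, out_size):
    """[start, end) ranges of MaxPool1d with kernel=ceil(in/out), stride=floor(in/out)."""
    kernel = (in_size + out_size - 1) // out_size
    stride = in_size // out_size
    count = (in_size - kernel) // stride + 1
    return [(i * stride, i * stride + kernel) for i in range(count)]


x = torch.randn(1, 1, 9)
windows = adaptive_windows(9, 4)

# Take the maximum over each window by hand and compare with the library layer.
manual = torch.stack([x[..., s:e].max(dim=-1).values for s, e in windows], dim=-1)
reference = nn.AdaptiveMaxPool1d(4)(x)

print(windows)
print(fixed_windows(9, 4))
print(torch.equal(manual, reference))
```

For 9 → 4 both helpers give the same four windows, which is why `m1` and `m2` agree in the experiment. Under the same assumed rule, other size pairs such as 10 → 4 yield different windows, so the fixed kernel/stride recipe may not reproduce adaptive pooling in every case.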

Experiments show that: