YOLOv1 Code Reading

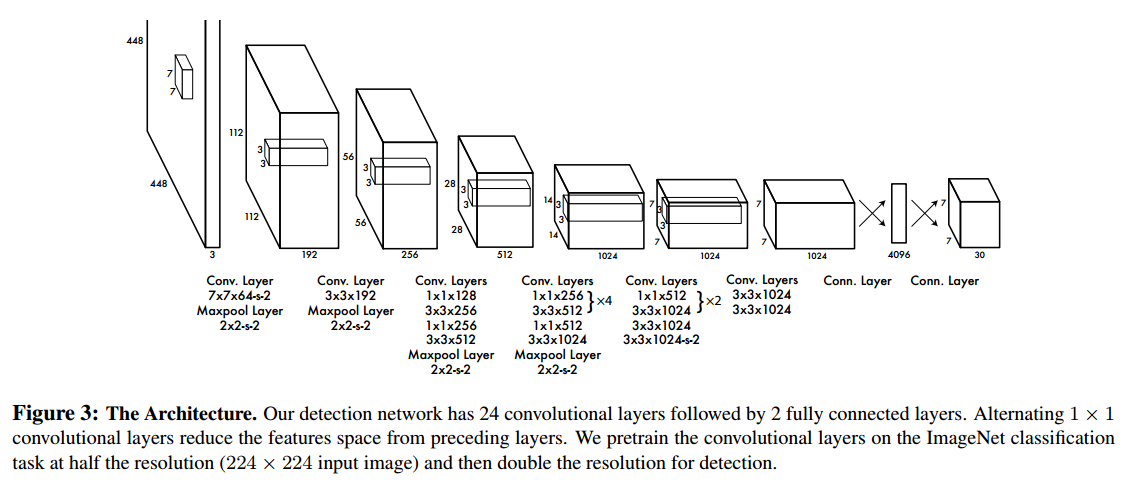

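The YOLOv1 backbone is inspired by GoogLeNet: it has 24 convolutional layers followed by 2 fully connected layers. The detailed structure is shown in the figure.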

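The detection network takes a 448x448 input, and the final feature map is 7x7. At every location of the feature map the network predicts the following items: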

1. A C-dimensional vector: the conditional probability of each of the C classes, given that the location contains an object.

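2. A B-dimensional vector. The network predicts B bounding boxes per location, and each box has a "score" that represents its IOU with the ground-truth box; it can also be read as the probability that the box contains an object. Multiplying this score by each class score from item 1 gives the probability that the box contains an object of that class.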

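3. A B*4-dimensional vector, four numbers per box. The first two are the bbox center, expressed on the 7x7 grid as an offset in [0, 1] relative to the current feature-map location; adding the offset to the location's coordinates gives the actual center, which therefore always lies inside the grid cell whose top-left corner is the current location. The last two are the bbox width and height, normalized by the image width and height (with l.sqrt=1, as in the layer code further down, the network outputs their square roots).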
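The center decoding described in item 3 can be sketched in a few lines of C. This is a minimal illustration, not code from darknet: the `center` struct and the `decode_center` function are hypothetical names, and `side` stands for the grid size (7 here).

```c
/* Hypothetical type for illustration only. */
typedef struct { float x, y; } center;

/* Map a per-cell offset in [0, 1] to a center normalized to the whole image.
 * row/col identify the grid cell, side is the grid size (7 for YOLOv1). */
center decode_center(float off_x, float off_y, int row, int col, int side)
{
    center c;
    c.x = (off_x + col) / side;   /* column index plus offset, rescaled to [0, 1] */
    c.y = (off_y + row) / side;   /* row index plus offset, rescaled to [0, 1] */
    return c;
}
```

For example, an offset of (0.5, 0.8) in the cell at row 2, column 3 of a 7x7 grid decodes to a center of roughly (0.5, 0.4) in normalized image coordinates.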

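In summary, the elements of the output tensor are laid out as 7*7*C --> 7*7*B --> 7*7*4*B (all class probabilities first, then all box scores, then all box coordinates), whereas the label tensor is laid out as (1 --> C --> 4)*7*7, that is, for each cell an objectness flag, C class entries, and 4 box coordinates.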
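To make the output layout concrete, the sketch below reproduces the index arithmetic for one image. The helper names are hypothetical; the formulas mirror the `class_index`, `p_index` and `box_index` expressions in the layer code later in this post. The values S=7, C=20, B=2 used in the comments are assumed example settings.

```c
/* Hypothetical helpers. i is the cell index in [0, side*side), j the box index in [0, n). */

/* Start of the class probabilities of cell i. */
int class_offset(int i, int classes)
{
    return i * classes;
}

/* Position of the score of box j in cell i (after all class probabilities). */
int conf_offset(int i, int j, int side, int classes, int n)
{
    return side * side * classes + i * n + j;
}

/* Start of the 4 coordinates of box j in cell i (after classes and scores). */
int box_offset(int i, int j, int side, int classes, int n, int coords)
{
    return side * side * (classes + n) + (i * n + j) * coords;
}

/* Total number of outputs per image. */
int output_size(int side, int classes, int n, int coords)
{
    return side * side * (classes + n + n * coords);
}

/* With side=7, classes=20, n=2, coords=4 (assumed values):
 *   class probabilities occupy [0, 980)
 *   box scores          occupy [980, 1078)
 *   box coordinates     occupy [1078, 1470)
 */
```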

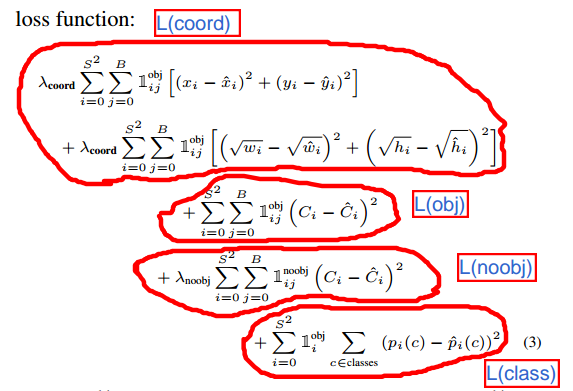

The YOLOv1 loss consists of 4 parts, as shown in the figure.
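For reference, the sum-squared loss from the YOLOv1 paper can be written as below, grouped here into four parts: coordinates, confidence of boxes responsible for an object, confidence of boxes without an object, and classification. The grouping of the two coordinate sums into one bracket is a presentation choice made here; the paper writes them as separate sums.

$$
\begin{aligned}
L ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 + \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left( C_i - \hat{C}_i \right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left( p_i(c) - \hat{p}_i(c) \right)^2
\end{aligned}
$$

Here $\lambda_{\text{coord}} = 5$ and $\lambda_{\text{noobj}} = 0.5$, which correspond to l.coord_scale and l.noobject_scale in the layer code; l.object_scale and l.class_scale are both 1. With l.rescore=1 the confidence target for the responsible box is the IOU between the predicted box and the ground truth.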

The code of the last layer of YOLOv1 is as follows:

```c
/*
l.coord_scale=5
l.object_scale=1
l.noobject_scale=0.5
l.class_scale=1

l.delta[box_index+0] = l.coord_scale*(net.truth[tbox_index + 0] - l.output[box_index + 0]);
l.delta[box_index+1] = l.coord_scale*(net.truth[tbox_index + 1] - l.output[box_index + 1]);
l.delta[box_index+2] = l.coord_scale*(sqrt(net.truth[tbox_index + 2]) - l.output[box_index + 2]);
l.delta[box_index+3] = l.coord_scale*(sqrt(net.truth[tbox_index + 3]) - l.output[box_index + 3]);

l.delta[p_index] = l.object_scale * (iou - l.output[p_index]);
l.delta[p_index] = l.noobject_scale*(0 - l.output[p_index]);

l.delta[class_index+j] = l.class_scale * (net.truth[truth_index+1+j] - l.output[class_index+j]);
*/
void forward_detection_layer(const detection_layer l, network net)
{
    int locations = l.side*l.side;
    int i,j;
    memcpy(l.output, net.input, l.outputs*l.batch*sizeof(float));
    //if(l.reorg) reorg(l.output, l.w*l.h, size*l.n, l.batch, 1);
    int b;
    // l.softmax=0
    if (l.softmax){
        for(b = 0; b < l.batch; ++b){
            int index = b*l.inputs;
            for (i = 0; i < locations; ++i) {
                int offset = i*l.classes;
                softmax(l.output + index + offset, l.classes, 1, 1, l.output + index + offset);
            }
        }
    }
    if(net.train){
        float avg_iou = 0;
        float avg_cat = 0;
        float avg_allcat = 0;
        float avg_obj = 0;
        float avg_anyobj = 0;
        int count = 0;
        *(l.cost) = 0;
        int size = l.inputs * l.batch;
        memset(l.delta, 0, size * sizeof(float));
        for (b = 0; b < l.batch; ++b){
            int index = b*l.inputs;
            for (i = 0; i < locations; ++i) {
                int truth_index = (b*locations + i)*(1+l.coords+l.classes);
                int is_obj = net.truth[truth_index];
                for (j = 0; j < l.n; ++j) {
                    int p_index = index + locations*l.classes + i*l.n + j;
                    // l.noobject_scale=0.5
                    l.delta[p_index] = l.noobject_scale*(0 - l.output[p_index]);
                    *(l.cost) += l.noobject_scale*pow(l.output[p_index], 2);
                    avg_anyobj += l.output[p_index];
                }

                if (!is_obj){
                    continue;
                }

                // l.class_scale=1
                int class_index = index + i*l.classes;
                for(j = 0; j < l.classes; ++j) {
                    l.delta[class_index+j] = l.class_scale * (net.truth[truth_index+1+j] - l.output[class_index+j]);
                    *(l.cost) += l.class_scale * pow(net.truth[truth_index+1+j] - l.output[class_index+j], 2);
                    if(net.truth[truth_index + 1 + j]) avg_cat += l.output[class_index+j];
                    avg_allcat += l.output[class_index+j];
                }

                int best_index = -1;
                float best_iou = 0;
                float best_rmse = 20;

                int row = i / l.side;
                int col = i % l.side;
                box truth = float_to_box(net.truth + truth_index + 1 + l.classes, 1);
                truth.x = (truth.x + col) / l.side;
                truth.y = (truth.y + row) / l.side;

                for(j = 0; j < l.n; ++j){
                    int box_index = index + locations*(l.classes + l.n) + (i*l.n + j) * l.coords;
                    box out = float_to_box(l.output + box_index, 1);
                    out.x = (out.x + col) / l.side;
                    out.y = (out.y + row) / l.side;

                    // l.sqrt=1
                    if (l.sqrt){
                        out.w = out.w*out.w;
                        out.h = out.h*out.h;
                    }

                    float iou = box_iou(out, truth);
                    float rmse = box_rmse(out, truth);
                    if(best_iou > 0 || iou > 0){
                        if(iou > best_iou){
                            best_iou = iou;
                            best_index = j;
                        }
                    }else{
                        if(rmse < best_rmse){
                            best_rmse = rmse;
                            best_index = j;
                        }
                    }
                }

                // l.forced=0
                if(l.forced){
                    if(truth.w*truth.h < .1){
                        best_index = 1;
                    }else{
                        best_index = 0;
                    }
                }
                // l.random=0
                if(l.random && *(net.seen) < 64000){
                    best_index = rand()%l.n;
                }

                int box_index = index + locations*(l.classes + l.n) + (i*l.n + best_index) * l.coords;
                int tbox_index = truth_index + 1 + l.classes;

                box out = float_to_box(l.output + box_index, 1);
                out.x = (out.x + col) / l.side;
                out.y = (out.y + row) / l.side;
                if (l.sqrt) {
                    out.w = out.w*out.w;
                    out.h = out.h*out.h;
                }
                float iou = box_iou(out, truth);

                // l.noobject_scale=0.5, l.object_scale=1
                int p_index = index + locations*l.classes + i*l.n + best_index;
                *(l.cost) -= l.noobject_scale * pow(l.output[p_index], 2);
                *(l.cost) += l.object_scale * pow(1-l.output[p_index], 2);
                avg_obj += l.output[p_index];
                l.delta[p_index] = l.object_scale * (1.-l.output[p_index]);

                // l.rescore=1
                if(l.rescore){
                    l.delta[p_index] = l.object_scale * (iou - l.output[p_index]);
                }

                // l.coord_scale=5
                l.delta[box_index+0] = l.coord_scale*(net.truth[tbox_index + 0] - l.output[box_index + 0]);
                l.delta[box_index+1] = l.coord_scale*(net.truth[tbox_index + 1] - l.output[box_index + 1]);
                l.delta[box_index+2] = l.coord_scale*(net.truth[tbox_index + 2] - l.output[box_index + 2]);
                l.delta[box_index+3] = l.coord_scale*(net.truth[tbox_index + 3] - l.output[box_index + 3]);
                if(l.sqrt){
                    l.delta[box_index+2] = l.coord_scale*(sqrt(net.truth[tbox_index + 2]) - l.output[box_index + 2]);
                    l.delta[box_index+3] = l.coord_scale*(sqrt(net.truth[tbox_index + 3]) - l.output[box_index + 3]);
                }

                *(l.cost) += pow(1-iou, 2);
                avg_iou += iou;
                ++count;
            }
        }
        *(l.cost) = pow(mag_array(l.delta, l.outputs * l.batch), 2);

        printf("Detection Avg IOU: %f, Pos Cat: %f, All Cat: %f, Pos Obj: %f, Any Obj: %f, count: %d\n", avg_iou/count, avg_cat/count, avg_allcat/(count*l.classes), avg_obj/count, avg_anyobj/(l.batch*locations*l.n), count);
        //if(l.reorg) reorg(l.delta, l.w*l.h, size*l.n, l.batch, 0);
    }
}

void backward_detection_layer(const detection_layer l, network net)
{
    axpy_cpu(l.batch*l.inputs, 1, l.delta, 1, net.delta, 1);
}
```
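The layer code picks the "responsible" box in each cell by comparing `box_iou(out, truth)` across the B predictions. The body of `box_iou` is not shown in this post, so the sketch below is only an assumption about what such a function computes for boxes given as normalized center plus width and height. `box_t`, `overlap_1d` and `iou_sketch` are hypothetical names.

```c
/* Hypothetical box type: center (x, y) and size (w, h), all normalized to [0, 1]. */
typedef struct { float x, y, w, h; } box_t;

/* Length of the overlap of two 1-D intervals given by center and width.
 * The result is negative when the intervals do not touch. */
static float overlap_1d(float c1, float w1, float c2, float w2)
{
    float l1 = c1 - w1 / 2, l2 = c2 - w2 / 2;
    float r1 = c1 + w1 / 2, r2 = c2 + w2 / 2;
    float left  = l1 > l2 ? l1 : l2;   /* larger of the two left edges */
    float right = r1 < r2 ? r1 : r2;   /* smaller of the two right edges */
    return right - left;
}

/* Intersection over union of two center-format boxes. */
float iou_sketch(box_t a, box_t b)
{
    float w = overlap_1d(a.x, a.w, b.x, b.w);
    float h = overlap_1d(a.y, a.h, b.y, b.h);
    if (w <= 0 || h <= 0) return 0;    /* no overlap */
    float inter = w * h;
    float uni = a.w * a.h + b.w * b.h - inter;
    return inter / uni;
}
```

Two identical boxes give an IOU of 1, and two boxes that do not overlap give 0. The latter case is why the layer code falls back to the smallest RMSE when no prediction overlaps the ground truth.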

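At prediction time, the bboxes are recovered from the final output with the code below. (Note that during YOLOv1 training the annotated bboxes are normalized by the width and height of the original image, so the bbox coordinates output at prediction time are normalized as well.)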

```c
void get_detection_detections(layer l, int w, int h, float thresh, detection *dets)
{
    int i,j,n;
    float *predictions = l.output;
    //int per_cell = 5*num+classes;
    for (i = 0; i < l.side*l.side; ++i){
        int row = i / l.side;
        int col = i % l.side;
        for(n = 0; n < l.n; ++n){
            int index = i*l.n + n;
            int p_index = l.side*l.side*l.classes + i*l.n + n;
            float scale = predictions[p_index];
            int box_index = l.side*l.side*(l.classes + l.n) + (i*l.n + n)*4;
            box b;
            // b.x = (predictions[box_index + 0] + col) / l.side * w;
            // b.y = (predictions[box_index + 1] + row) / l.side * h;
            // b.w = pow(predictions[box_index + 2], (l.sqrt?2:1)) * w;
            // b.h = pow(predictions[box_index + 3], (l.sqrt?2:1)) * h;
            b.x = (predictions[box_index + 0] + col) / l.side;
            b.y = (predictions[box_index + 1] + row) / l.side;
            b.w = pow(predictions[box_index + 2], (l.sqrt?2:1));
            b.h = pow(predictions[box_index + 3], (l.sqrt?2:1));
            dets[index].bbox = b;
            dets[index].objectness = scale;
            for(j = 0; j < l.classes; ++j){
                int class_index = i*l.classes;
                float prob = scale*predictions[class_index+j];
                dets[index].prob[j] = (prob > thresh) ? prob : 0;
            }
        }
    }
}
```
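Because the returned boxes are normalized, a caller has to scale them by the image size before drawing them. The sketch below shows one way this could be done; `norm_box`, `pixel_box`, `clamp_int` and `to_pixels` are hypothetical names, and clamping to the image border is a choice made for this example.

```c
/* Hypothetical types for illustration only. */
typedef struct { float x, y, w, h; } norm_box;               /* center format, values in [0, 1] */
typedef struct { int left, top, right, bottom; } pixel_box;  /* corner format, in pixels */

static int clamp_int(int v, int lo, int hi)
{
    if (v < lo) return lo;
    if (v > hi) return hi;
    return v;
}

/* Convert a normalized center-format box to pixel corners, clamped to the image. */
pixel_box to_pixels(norm_box b, int img_w, int img_h)
{
    pixel_box p;
    p.left   = clamp_int((int)((b.x - b.w / 2) * img_w), 0, img_w - 1);
    p.right  = clamp_int((int)((b.x + b.w / 2) * img_w), 0, img_w - 1);
    p.top    = clamp_int((int)((b.y - b.h / 2) * img_h), 0, img_h - 1);
    p.bottom = clamp_int((int)((b.y + b.h / 2) * img_h), 0, img_h - 1);
    return p;
}
```

For a box centered at (0.5, 0.5) with width and height 0.5 on a 448x448 image, this yields the corners (112, 112) and (336, 336).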

The key code that generates net.truth is as follows:

```c
void fill_truth_region(char *path, float *truth, int classes, int num_boxes, int flip, float dx, float dy, float sx, float sy)
{
    char labelpath[4096];
    find_replace(path, "images", "labels", labelpath);
    find_replace(labelpath, "JPEGImages", "labels", labelpath);

    find_replace(labelpath, ".jpg", ".txt", labelpath);
    find_replace(labelpath, ".png", ".txt", labelpath);
    find_replace(labelpath, ".JPG", ".txt", labelpath);
    find_replace(labelpath, ".JPEG", ".txt", labelpath);
    int count = 0;
    box_label *boxes = read_boxes(labelpath, &count);
    randomize_boxes(boxes, count);
    correct_boxes(boxes, count, dx, dy, sx, sy, flip);
    float x,y,w,h;
    int id;
    int i;

    for (i = 0; i < count; ++i) {
        x = boxes[i].x;
        y = boxes[i].y;
        w = boxes[i].w;
        h = boxes[i].h;
        id = boxes[i].id;

        if (w < .005 || h < .005) continue;

        // num_boxes is S in article, yolov1 divides the input image into SxS grid
        int col = (int)(x*num_boxes);
        int row = (int)(y*num_boxes);

        x = x*num_boxes - col;
        y = y*num_boxes - row;

        int index = (col+row*num_boxes)*(5+classes);
        if (truth[index]) continue;
        truth[index++] = 1;

        if (id < classes) truth[index+id] = 1;
        index += classes;

        truth[index++] = x;
        truth[index++] = y;
        truth[index++] = w;
        truth[index++] = h;
    }
    free(boxes);
}
```
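The cell assignment inside the loop above can be isolated into a small worked example. The names `cell_target`, `encode_center` and `truth_offset` are hypothetical; the arithmetic follows the `col`, `row`, `x`, `y` and `index` lines of `fill_truth_region`, with `side` playing the role of `num_boxes`.

```c
/* Hypothetical type: the grid cell a box falls into and its offset inside that cell. */
typedef struct { int row, col; float x, y; } cell_target;

/* x and y are the box center normalized to [0, 1) over the whole image. */
cell_target encode_center(float x, float y, int side)
{
    cell_target t;
    t.col = (int)(x * side);      /* grid column that contains the center */
    t.row = (int)(y * side);      /* grid row that contains the center */
    t.x = x * side - t.col;       /* horizontal offset inside the cell, in [0, 1) */
    t.y = y * side - t.row;       /* vertical offset inside the cell, in [0, 1) */
    return t;
}

/* Start of the (1 + classes + 4) label entries of the given cell. */
int truth_offset(int row, int col, int side, int classes)
{
    return (col + row * side) * (5 + classes);
}
```

With a 7x7 grid, a center at (0.5, 0.4) lands in column 3, row 2 with an offset of about (0.5, 0.8); with 20 classes (an assumed value), that cell's label entries start at index 425. Because of the `if (truth[index]) continue;` check in the original code, only the first box assigned to a cell is kept, and a center exactly at 1.0 would map to column or row S, which the original code does not guard against.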