Prometheus service discovery with Consul and file_sd

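Installing Consul

Consul website: https://www.consul.io/

Consul is a distributed key/value store that runs as a cluster and is commonly used for service registration and discovery.

Download from the official site

Download link 1: https://www.consul.io/downloads

Download link 2

Environment:

Three servers: 192.168.100.9 (consul1), 192.168.100.10 (consul2), 192.168.100.11 (consul3)

Install the Consul binary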

root@consul1:/usr/local# unzip consul_1.13.1_linux_amd64.zip

root@consul1:/usr/local# mv consul /usr/local/bin/

root@consul1:/usr/local# scp /usr/local/bin/consul 192.168.100.10:/usr/local/bin/

root@consul1:/usr/local# scp /usr/local/bin/consul 192.168.100.11:/usr/local/bin/

Create the data and log directories on all three nodes:

mkdir /data/consul/data -p

mkdir /data/consul/logs -p

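Consul command-line options:

consul agent -server #run the Consul agent in server mode

-bootstrap #bootstrap mode, used for the first deployment

-bind #address to listen on for cluster communication

-client #address to listen on for client access (HTTP API, DNS)

-data-dir #directory where Consul stores its data

-ui #enable the built-in web UI

-node #name of this node; must be unique within the cluster

-datacenter=dc1 #datacenter (cluster) name; defaults to dc1

-join #join an existing Consul cluster

-bootstrap-expect #number of server nodes expected in the datacenter. When set, Consul waits until that many servers are available before bootstrapping the cluster. Cannot be combined with -bootstrap (this is the recommended approach)

-config-dir #directory of configuration files; every file ending in .json or .hcl is loaded

-log-rotate-bytes=102400000 #rotate the log file once it reaches this size in bytes (about 100 MB)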
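To show how these options fit together, here is a minimal Python sketch that assembles the `consul agent` command line for each of the three nodes in this environment. The helper name `build_agent_args` is hypothetical; the flags, paths, and addresses are the ones used in this article.

```python
from typing import List, Optional


def build_agent_args(bind_ip: str, join_ip: Optional[str] = None,
                     expect: int = 3) -> List[str]:
    """Assemble a `consul agent` argument list (hypothetical helper)."""
    args = [
        "/usr/local/bin/consul", "agent", "-server",
        f"-bootstrap-expect={expect}",   # wait for this many servers before bootstrapping
        f"-bind={bind_ip}",              # cluster communication address
        f"-client={bind_ip}",            # client (HTTP API, DNS) address
        "-data-dir=/data/consul/data",
        "-ui",
        f"-node={bind_ip}",              # node name, unique within the cluster
        "-datacenter=dc1",
    ]
    if join_ip is not None:
        args.append(f"-join={join_ip}")  # join an existing cluster member
    args += ["-log-rotate-bytes=102400000", "-log-file=/data/consul/logs/"]
    return args


if __name__ == "__main__":
    first = "192.168.100.9"
    for ip in ("192.168.100.9", "192.168.100.10", "192.168.100.11"):
        # Only the second and third nodes join the first one.
        print(" ".join(build_agent_args(ip, None if ip == first else first)))
```

Each printed line corresponds to the ExecStart value of one node's systemd unit.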

First node (consul1) configuration

root@consul1:/data/consul/logs# vim /etc/systemd/system/consul.service

[Unit]

Description=consul server

After=network.target

[Service]

Type=simple

User=root

ExecStart=/usr/local/bin/consul agent -server -bootstrap-expect=3 -bind=192.168.100.9 -client=192.168.100.9 -data-dir=/data/consul/data -ui -node=192.168.100.9 -datacenter=dc1 -log-rotate-bytes=102400000 -log-file=/data/consul/logs/

ExecReload=/bin/kill -HUP $MAINPID

KillSignal=SIGTERM

[Install]

WantedBy=multi-user.target

Configuration of the other nodes

consul2 configuration

root@consul2:~# vim /etc/systemd/system/consul.service

[Unit]

Description=consul server

After=network.target

[Service]

Type=simple

User=root

ExecStart=/usr/local/bin/consul agent -server -bootstrap-expect=3 -bind=192.168.100.10 -client=192.168.100.10 -data-dir=/data/consul/data -ui -node=192.168.100.10 -datacenter=dc1 -join=192.168.100.9 -log-rotate-bytes=102400000 -log-file=/data/consul/logs/

ExecReload=/bin/kill -HUP $MAINPID

KillSignal=SIGTERM

[Install]

WantedBy=multi-user.target

consul3 configuration

root@consul3:~# cat /etc/systemd/system/consul.service

[Unit]

Description=consul server

After=network.target

[Service]

Type=simple

User=root

ExecStart=/usr/local/bin/consul agent -server -bootstrap-expect=3 -bind=192.168.100.11 -client=192.168.100.11 -data-dir=/data/consul/data -ui -node=192.168.100.11 -datacenter=dc1 -join=192.168.100.9 -log-rotate-bytes=102400000 -log-file=/data/consul/logs/

ExecReload=/bin/kill -HUP $MAINPID

KillSignal=SIGTERM

[Install]

WantedBy=multi-user.target

systemctl daemon-reload && systemctl start consul && systemctl enable consul

Open the Consul web UI in a browser at http://<node IP>:8500, for example http://192.168.100.9:8500
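Besides the web UI, cluster formation can also be checked from a script. The sketch below assumes Consul's `/v1/status/peers` endpoint, which returns the Raft peers as a JSON list of "ip:port" strings; the helper names are hypothetical, and port 8300 in the usage comment is the default server RPC port, not something configured in this article.

```python
import json
import urllib.request
from typing import List


def peers_url(server: str) -> str:
    """URL of the Raft peer list on one Consul server, e.g. '192.168.100.9:8500'."""
    return f"http://{server}/v1/status/peers"


def has_all_peers(peers: List[str], expected_ips: List[str]) -> bool:
    """True when every expected server IP appears in the peer list."""
    seen = {p.rsplit(":", 1)[0] for p in peers}  # strip the port
    return set(expected_ips) <= seen


def fetch_peers(server: str, timeout: float = 5.0) -> List[str]:
    """Query a running Consul server (needs network access to the cluster)."""
    with urllib.request.urlopen(peers_url(server), timeout=timeout) as resp:
        return json.load(resp)


# Usage against the cluster from this article:
#   peers = fetch_peers("192.168.100.9:8500")
#   has_all_peers(peers, ["192.168.100.9", "192.168.100.10", "192.168.100.11"])
```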

Test writing data

1. Service discovery for node-exporter

Write data through the Consul API to register the node-exporter addresses of the three k8s nodes that Prometheus will scrape

curl -X PUT -d '{"id": "node-exporter1","name": "node-exporter1","address":"192.168.100.3","port":9100,"tags": ["node-exporter"],"checks": [{"http":"http://192.168.100.3:9100/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register

curl -X PUT -d '{"id": "node-exporter2","name": "node-exporter2","address":"192.168.100.4","port":9100,"tags": ["node-exporter"],"checks": [{"http":"http://192.168.100.4:9100/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register

curl -X PUT -d '{"id": "node-exporter3","name": "node-exporter3","address":"192.168.100.5","port":9100,"tags": ["node-exporter"],"checks": [{"http":"http://192.168.100.5:9100/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register

2. Service discovery for cadvisor

Write data through the Consul API to register the cadvisor addresses of the three k8s nodes that Prometheus will scrape

curl -X PUT -d '{"id": "cadvisor1","name": "cadvisor1","address":"192.168.100.3","port":8080,"tags": ["cadvisor"],"checks": [{"http":"http://192.168.100.3:8080/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register

curl -X PUT -d '{"id": "cadvisor2","name": "cadvisor2","address":"192.168.100.4","port":8080,"tags": ["cadvisor"],"checks": [{"http":"http://192.168.100.4:8080/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register

curl -X PUT -d '{"id": "cadvisor3","name": "cadvisor3","address":"192.168.100.5","port":8080,"tags": ["cadvisor"],"checks": [{"http":"http://192.168.100.5:8080/","interval": "5s"}]}' http://192.168.100.9:8500/v1/agent/service/register
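The six registrations above share one payload shape, so they can also be generated in a loop. The following Python sketch builds the same JSON bodies and the same PUT requests as the curl commands; the helper names are hypothetical, and nothing is sent until `urllib.request.urlopen` is called on the request.

```python
import json
import urllib.request


def service_payload(service_id: str, address: str, port: int, tag: str) -> dict:
    """Registration body with an HTTP health check every 5s, as in the curl calls."""
    return {
        "id": service_id,
        "name": service_id,
        "address": address,
        "port": port,
        "tags": [tag],
        "checks": [{"http": f"http://{address}:{port}/", "interval": "5s"}],
    }


def register_request(consul: str, payload: dict) -> urllib.request.Request:
    """Build (but do not send) the PUT request to the agent register endpoint."""
    return urllib.request.Request(
        f"http://{consul}/v1/agent/service/register",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )


def all_payloads() -> list:
    """node-exporter (9100) and cadvisor (8080) on the three k8s nodes."""
    hosts = ["192.168.100.3", "192.168.100.4", "192.168.100.5"]
    payloads = []
    for name, port in (("node-exporter", 9100), ("cadvisor", 8080)):
        for i, host in enumerate(hosts, start=1):
            payloads.append(service_payload(f"{name}{i}", host, port, name))
    return payloads


# To actually register everything against the first Consul node:
#   for p in all_payloads():
#       urllib.request.urlopen(register_request("192.168.100.9:8500", p))
```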

Service details

Deregister a service

Send a PUT request to the URI /v1/agent/service/deregister/{service ID}

curl -XPUT http://192.168.100.9:8500/v1/agent/service/deregister/node-exporter3
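The same deregistration can be expressed in Python. This is a small sketch with a hypothetical helper name; it only builds the request, and the service ID is URL-quoted in case it contains characters that are not safe in a path. Since this is an agent endpoint, the request presumably has to go to the agent where the service was registered (192.168.100.9 in this article).

```python
import urllib.parse
import urllib.request


def deregister_request(consul: str, service_id: str) -> urllib.request.Request:
    """Build (but do not send) the PUT request that removes one service."""
    path = "/v1/agent/service/deregister/" + urllib.parse.quote(service_id, safe="")
    return urllib.request.Request(f"http://{consul}{path}", method="PUT")


# To send it:
#   urllib.request.urlopen(deregister_request("192.168.100.9:8500", "node-exporter3"))
```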

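Configure Prometheus to discover services from Consul

Prometheus documentation: https://prometheus.io/docs/prometheus/latest/configuration/configuration/#consul_sd_config

Main configuration fields

static_configs: #statically configured targets

consul_sd_configs: #service discovery based on Consul

relabel_configs: #relabeling rules

services: [] #an empty list matches every service in Consul

Fields used in the consul_sd_configs job

metrics_path: /metrics #metrics URI of the node-exporter in k8s

scheme: http #scheme of the node-exporter in k8s, not the scheme of Consul

refresh_interval: 15s #refresh from Consul every 15s; the default is 30s

honor_labels: true

#honor_labels controls how Prometheus handles conflicts between labels already present in the scraped data and labels that Prometheus would attach on the server side (the "job" and "instance" labels, manually configured target labels, and labels generated by service discovery). If honor_labels is set to "true", conflicts are resolved by keeping the label values from the scraped data and ignoring the conflicting Prometheus server-side labels. If the scraped data carries the label but its value is empty, the Prometheus-side value is used; if the scraped data does not carry the label at all but Prometheus has it configured, the Prometheus-side value is used as well. If honor_labels is set to "false", conflicts are resolved by renaming the conflicting labels in the scraped data to "exported_<original-label>" (for example "exported_instance", "exported_job") and then attaching the server-side labels.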
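The rule described above can be modelled in a few lines of Python. This is a simplified sketch of the behaviour as described in this article, not Prometheus's actual implementation; the function name and the sample label values are made up for illustration.

```python
from typing import Dict


def resolve_labels(scraped: Dict[str, str], server: Dict[str, str],
                   honor_labels: bool) -> Dict[str, str]:
    """Merge scraped labels with server-side labels (simplified model)."""
    result = dict(scraped)
    for name, value in server.items():
        if honor_labels:
            # Keep the scraped value; fall back to the server-side value
            # only when the scraped label is missing or empty.
            if not result.get(name):
                result[name] = value
        else:
            # Rename a conflicting scraped label, then attach the server-side one.
            if result.get(name):
                result["exported_" + name] = result[name]
            result[name] = value
    return result


if __name__ == "__main__":
    scraped = {"job": "pushed", "instance": "app-1", "env": ""}
    server = {"job": "consul", "instance": "192.168.100.3:9100", "env": "prod"}
    print(resolve_labels(scraped, server, honor_labels=True))
    print(resolve_labels(scraped, server, honor_labels=False))
```

With honor_labels enabled the scraped `job` and `instance` survive, while the empty `env` is filled from the server side; with it disabled the scraped values move to `exported_job` and `exported_instance`.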

1. Configuration YAML for prometheus-server deployed in k8s

root@master1:~/yaml# cat prometheus-consul-cfg.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  labels:
    app: prometheus
  name: prometheus-config
  namespace: monitoring
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      scrape_timeout: 10s
      evaluation_interval: 1m
    scrape_configs:
    - job_name: consul
      honor_labels: true #keep the label values from the scraped data and ignore conflicting Prometheus server-side labels
      metrics_path: /metrics #metrics URI of the node-exporter in k8s
      scheme: http #scheme of the node-exporter in k8s, not the scheme of Consul
      consul_sd_configs:
      - server: 192.168.100.9:8500
        services: []
        refresh_interval: 15s #refresh from Consul every 15s; the default is 30s
      - server: 192.168.100.10:8500
        services: []
        refresh_interval: 15s
      - server: 192.168.100.11:8500
        services: []
        refresh_interval: 15s
      relabel_configs:
      - source_labels: ['__meta_consul_tags']
        target_label: 'product' #copy the value of __meta_consul_tags into a new label product
      - source_labels: ['__meta_consul_dc']
        target_label: 'idc' #copy the value of __meta_consul_dc into a new label idc
      - source_labels: ['__meta_consul_service']
        regex: "consul" #do not scrape the consul service itself
        action: drop
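To make the effect of the three relabel rules concrete, the following Python sketch applies them to the discovery labels of one target. It models only the `replace` and `drop` actions with a fully anchored regex and the default `;` separator, so it is a simplification rather than Prometheus's relabeling engine. The function name is hypothetical, and the sample value `,node-exporter,` assumes that Consul tags are joined and surrounded by the default `,` tag separator.

```python
import re
from typing import Dict, List, Optional

RULES: List[dict] = [
    {"source_labels": ["__meta_consul_tags"], "target_label": "product"},
    {"source_labels": ["__meta_consul_dc"], "target_label": "idc"},
    {"source_labels": ["__meta_consul_service"], "regex": "consul", "action": "drop"},
]


def relabel(labels: Dict[str, str], rules: List[dict]) -> Optional[Dict[str, str]]:
    """Apply replace/drop rules; return None when the target is dropped."""
    out = dict(labels)
    for rule in rules:
        # Values of the source labels are joined with ';'.
        value = ";".join(out.get(n, "") for n in rule["source_labels"])
        # The regex must match the whole value; the default is (.*).
        match = re.fullmatch(rule.get("regex", "(.*)"), value)
        action = rule.get("action", "replace")
        if action == "drop" and match:
            return None
        if action == "replace" and match:
            # Default replacement is the first capture group.
            out[rule["target_label"]] = match.expand(rule.get("replacement", r"\1"))
    return out


if __name__ == "__main__":
    target = {
        "__meta_consul_tags": ",node-exporter,",
        "__meta_consul_dc": "dc1",
        "__meta_consul_service": "node-exporter1",
    }
    print(relabel(target, RULES))
    print(relabel({"__meta_consul_service": "consul"}, RULES))
```

The first target gains `product` and `idc` labels; the second one, whose service name is exactly `consul`, is dropped.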

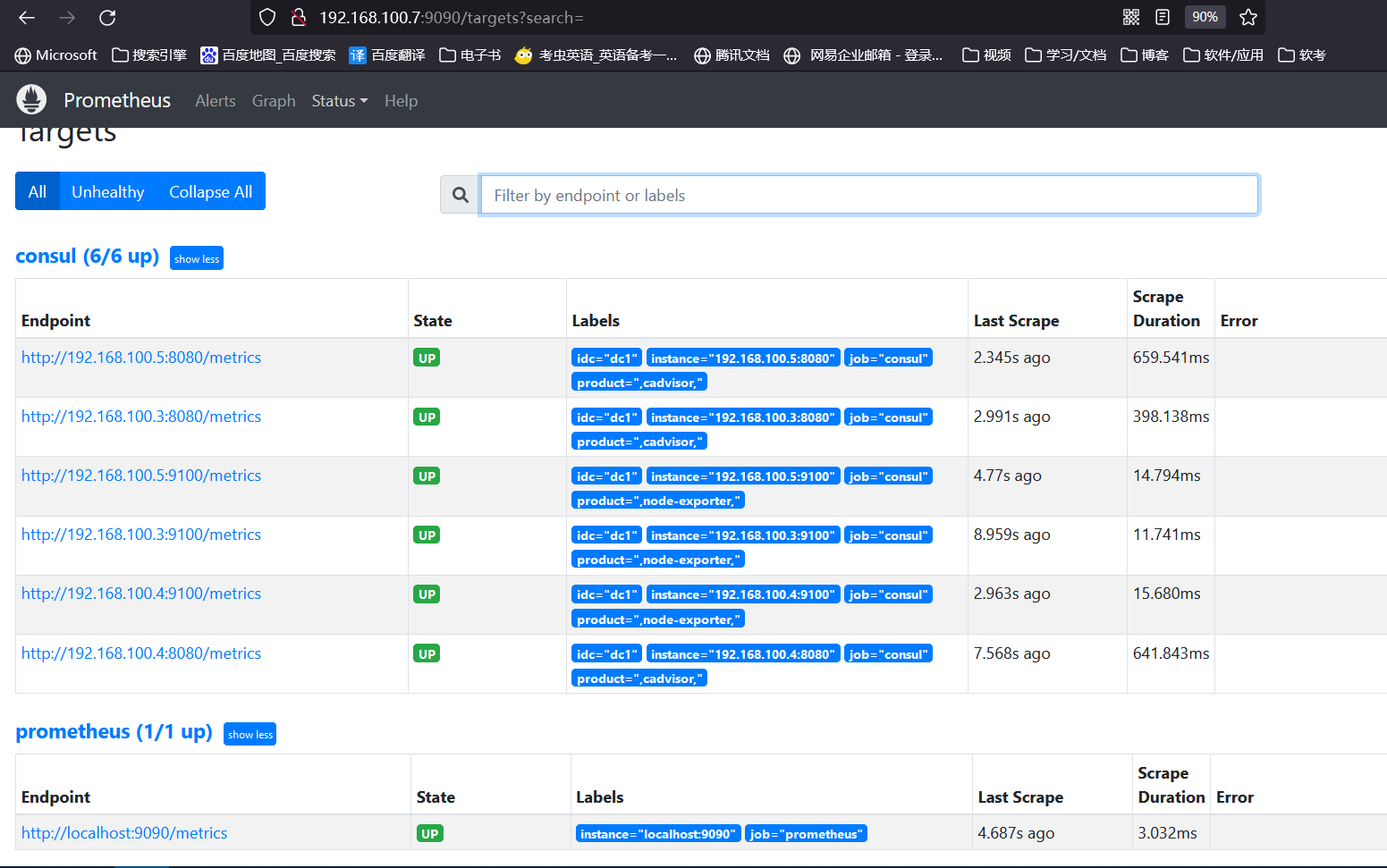

Verify prometheus-server in a browser

2. Configuration for prometheus-server running directly on a host

root@prometheus:/etc/profile.d# cd /usr/local/prometheus

root@prometheus:/usr/local/prometheus# vim prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]

  # Consul service discovery, used to find the targets to scrape
  - job_name: consul
    honor_labels: true
    metrics_path: /metrics
    scheme: http
    consul_sd_configs:
      - server: 192.168.100.9:8500
        services: []
        refresh_interval: 15s
      - server: 192.168.100.10:8500
        services: []
        refresh_interval: 15s
      - server: 192.168.100.11:8500
        services: []
        refresh_interval: 15s
    relabel_configs:
      - source_labels: ['__meta_consul_tags']
        target_label: 'product'
      - source_labels: ['__meta_consul_dc']
        target_label: 'idc'
      - source_labels: ['__meta_consul_service']
        regex: "consul"
        action: drop

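Verify in a browser:

file_sd_configs

prometheus-server can discover scrape targets dynamically from the specified files. When a file is modified, prometheus-server does not need to be restarted; it picks up the changed targets from the file automatically.

https://prometheus.io/docs/prometheus/latest/configuration/configuration/#file_sd_config

Edit the file_sd_configs files:

Create a directory under the Prometheus directory to hold the file_sd_configs files

root@prometheus:/usr/local/prometheus# mkdir file_sd

Create the file_sd_configs files and add the targets for Prometheus to discover (node_exporter)

root@prometheus:/usr/local/prometheus# vim file_sd/target.json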

[

{

"targets": [ "192.168.100.3:9100", "192.168.100.4:9100", "192.168.100.5:9100" ]

}

]
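Because Prometheus re-reads these files while it is running, it can help to generate them with a script rather than by hand. The sketch below writes a file in the same list-of-groups format and replaces the old file in one step, so Prometheus should never see a half-written file. The function name and the optional `role` label in the usage comment are hypothetical and do not appear in this article's files.

```python
import json
import os
import tempfile
from typing import Dict, List, Optional


def write_file_sd(path: str, targets: List[str],
                  labels: Optional[Dict[str, str]] = None) -> None:
    """Write one target group to a file_sd JSON file via temp file + rename."""
    group: Dict[str, object] = {"targets": targets}
    if labels:
        group["labels"] = labels
    directory = os.path.dirname(os.path.abspath(path))
    # Create the temp file in the same directory so the rename stays on one filesystem.
    fd, tmp = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump([group], f, indent=2)
    os.chmod(tmp, 0o644)   # mkstemp creates the file as 0600; make it readable
    os.replace(tmp, path)  # atomic replacement of the old file


# Usage for the node-exporter targets from this article:
#   write_file_sd("/usr/local/prometheus/file_sd/target.json",
#                 ["192.168.100.3:9100", "192.168.100.4:9100", "192.168.100.5:9100"],
#                 labels={"role": "node"})
```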

root@prometheus:/usr/local/prometheus# vim file_sd/cadvisor.json

[

{

"targets": [ "192.168.100.3:8080", "192.168.100.4:8080", "192.168.100.5:8080" ]

}

]

Modify the Prometheus configuration file

root@prometheus:/usr/local/prometheus# cat prometheus.yml

# my global config
global:
  scrape_interval: 15s # Set the scrape interval to every 15 seconds. Default is every 1 minute.
  evaluation_interval: 15s # Evaluate rules every 15 seconds. The default is every 1 minute.
  # scrape_timeout is set to the global default (10s).

# Alertmanager configuration
alerting:
  alertmanagers:
    - static_configs:
        - targets:
          # - alertmanager:9093

# Load rules once and periodically evaluate them according to the global 'evaluation_interval'.
rule_files:
  # - "first_rules.yml"
  # - "second_rules.yml"

# A scrape configuration containing exactly one endpoint to scrape:
# Here it's Prometheus itself.
scrape_configs:
  # The job name is added as a label `job=<job_name>` to any timeseries scraped from this config.
  - job_name: "prometheus"

    # metrics_path defaults to '/metrics'
    # scheme defaults to 'http'.

    static_configs:
      - targets: ["localhost:9090"]

  - job_name: "file_sd_my_server"
    file_sd_configs:
      - files:
          - /usr/local/prometheus/file_sd/target.json #service discovery for node-exporter
          - /usr/local/prometheus/file_sd/cadvisor.json #service discovery for cadvisor
        refresh_interval: 10s #re-read the files every 10s; the default is 5m

Verify in a browser by visiting prometheus-server
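Besides the web UI, the discovered targets can be checked through the Prometheus HTTP API. The sketch below assumes the `/api/v1/targets` endpoint and its `data.activeTargets` list, where each entry carries `labels` and `health`; the helper names are hypothetical, and `localhost:9090` is the address used in the configuration above.

```python
import json
import urllib.request
from collections import Counter
from typing import Dict, Tuple


def summarize_targets(payload: dict) -> Dict[Tuple[str, str], int]:
    """Count active targets per (job, health) pair."""
    counts: Counter = Counter()
    for target in payload["data"]["activeTargets"]:
        counts[(target["labels"].get("job", ""), target["health"])] += 1
    return dict(counts)


def fetch_targets(prometheus: str, timeout: float = 5.0) -> dict:
    """Query a running prometheus-server (needs network access to it)."""
    url = f"http://{prometheus}/api/v1/targets"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


# Usage on the Prometheus host:
#   print(summarize_targets(fetch_targets("localhost:9090")))
```

If file-based discovery works, the `file_sd_my_server` job should show six targets: three node-exporter and three cadvisor endpoints.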

This article is from cnblogs (博客园), author: PunchLinux. When reposting, please cite the original link: https://www.cnblogs.com/punchlinux/p/16856615.html
